By Zhong Tianyun, R&D engineer of the containerized application ecosystem team of Alibaba Cloud

Serverless computing is an extension of cloud computing and therefore inherits its key feature: on-demand elasticity. A serverless architecture frees developers from managing resource deployment, which allows developers to make full use of resources and enterprises to benefit from on-demand elasticity. An increasing number of cloud vendors are adopting serverless architectures.

Serverless architectures support flexible configurations that help you maximize resource utilization in a simple, minimally intrusive, and configurable manner, and they decouple resource capacity planning from workload resource configuration. This article introduces the following controllers: ElasticWorkload, WorkloadSpread, UnitedDeployment, and ResourcePolicy. Through them, we share how our technology and thinking have evolved in addressing the scaling of workloads deployed on serverless architectures.

As serverless computing technologies mature, more and more enterprises use elastic resources instead of static resources to host applications that have temporary, fluctuating, or bursty traffic. Examples of elastic resources are Alibaba Cloud Container Compute Service (ACS) and other cloud services that provide serverless pods. Examples of static resources are Elastic Compute Service (ECS) resource pools and self-managed data centers. Elastic resources can be used on demand, which improves resource utilization and lowers overall costs. The following list describes typical elastic scenarios:

You can use the controllers described in this article to meet the preceding requirements. This article describes the advantages and use cases of each controller so that you can select suitable controllers for your workloads based on your business scenarios.

• ElasticWorkload: a controller provided by Container Service for Kubernetes (ACK) that creates multiple clones of a Kubernetes workload and applies a different scheduling policy to each clone. This way, the same workload can be distributed across different domains as it scales. This controller has been discontinued and is available only in ACK clusters that run Kubernetes 1.18 or earlier.

• WorkloadSpread: a controller provided by OpenKruise that can intercept matching pod creation requests by using webhooks and inject custom pod configurations by using patches. This controller is suitable for multi-domain partitioning. You can use the metadata and spec parameters to customize pod configurations in each domain.

• UnitedDeployment: a controller provided by OpenKruise that supports multi-domain partitioning and custom pod configurations. Compared with the WorkloadSpread controller, this controller allows you to customize more pod configurations and provides improved resource capacity planning capabilities. This controller is suitable for multi-domain partitioning. You can configure separate workloads for different domains.

• ResourcePolicy: a controller provided by ACK that is suitable for scenarios where you need to configure fine-grained scheduling policies for multiple elastic domains but do not require custom pod configurations.

ElasticWorkload is an elastic workload controller that ACK provided in earlier versions. Although this controller has been discontinued, its design for configurable multi-domain partitioning remains an important technical reference for later solutions.

An ElasticWorkload references a source workload and defines elastic units. It continuously watches the status of the source workload. Based on the configuration of each elastic unit, it generates multiple workload clones of the same type but with different scheduling policies. By adjusting the number of pod replicas in each clone, ElasticWorkloads provide flexible multi-domain partitioning for basic workloads such as Deployments. This improves resource utilization and business elasticity. ElasticWorkloads are compatible with Horizontal Pod Autoscaler (HPA).

You can use ElasticWorkloads to resolve issues related to elastic scheduling policies in multi-domain scenarios. Unlike traditional workload controllers that use pods as the minimum control unit, ElasticWorkloads use elastic units as the minimum control unit. Each elastic unit selects a set of nodes by label and specifies the maximum and minimum number of pod replicas that can be deployed on the nodes. An ElasticWorkload creates a separate elastic workload for each elastic unit. Each elastic workload shares the same type and specification as the source workload. In addition, an ElasticWorkload specifies a scheduling policy for each elastic workload to ensure that the pods provisioned by each elastic workload are scheduled to nodes that meet the conditions specified by the policy. The following code block is an example of an ElasticWorkload:

```yaml
apiVersion: autoscaling.alibabacloud.com/v1beta1
kind: ElasticWorkload
metadata:
  name: elasticworkload-sample
spec:
  sourceTarget:
    name: nginx-deployment-basic
    kind: Deployment
    apiVersion: apps/v1
  replicas: 6
  elasticUnit:
  - name: ecs # If the number of pods provisioned for the source workload does not exceed five, all pods are scheduled to ECS instances.
    labels:
      alibabacloud.com/acs: "false"
    max: 5
  - name: acs # If the number of pods provisioned for the source workload exceeds five, the excess pods are scheduled to virtual nodes in ACS.
    labels:
      alibabacloud.com/acs: "true"
```

An ElasticWorkload takes over replica management from the source workload, so you must specify the number of pod replicas in the ElasticWorkload. To scale the application, modify the replicas parameter of the ElasticWorkload. Do not modify the replicas parameter of the source workload (such as a Deployment). ElasticWorkloads are suitable for the following scenarios:

ElasticWorkloads scale in across elastic units in the reverse order of scale-out. When you scale out an ElasticWorkload, new pod replicas are placed in the elastic units in the order in which the units are listed in the ElasticWorkload configuration. When the number of pod replicas in an elastic unit reaches its upper limit, additional pods are placed in the next elastic unit. When you scale in an ElasticWorkload, pod replicas are removed from the elastic units in reverse order, starting from the last unit. When the number of pods in an elastic unit reaches zero, the system starts to remove pods from the preceding elastic unit.
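The following Python sketch illustrates the fill-in-order rule above. It is a minimal model, not the ElasticWorkload controller's code. It assumes that each elastic unit has only an optional upper limit (`max_replicas`, where `None` means unlimited), and it ignores the per-unit minimum. `ElasticUnit` and `distribute` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ElasticUnit:
    """Hypothetical model of an elastic unit: a name and an optional upper limit."""
    name: str
    max_replicas: Optional[int] = None  # None means the unit is unlimited


def distribute(replicas: int, units: List[ElasticUnit]) -> Dict[str, int]:
    """Fill units in list order; move to the next unit only when one is full."""
    result: Dict[str, int] = {}
    remaining = replicas
    for unit in units:
        if unit.max_replicas is None:
            take = remaining
        else:
            take = min(remaining, unit.max_replicas)
        result[unit.name] = take
        remaining -= take
    if remaining > 0:
        raise ValueError(f"{remaining} replicas exceed the capacity of all units")
    return result


# Mirrors the example above: at most 5 pods on ECS, the rest on ACS.
units = [ElasticUnit("ecs", max_replicas=5), ElasticUnit("acs")]
print(distribute(6, units))  # {'ecs': 5, 'acs': 1}
print(distribute(3, units))  # {'ecs': 3, 'acs': 0}
```

The two calls show the scale-in direction. Going from 6 to 3 replicas first removes the pod in `acs` and then two pods in `ecs`, which is the reverse of the scale-out order.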

The scaling policy of ElasticWorkloads meets basic elasticity requirements and keeps a stable number of pods to handle daily traffic. During peak hours, ElasticWorkloads launch additional elastic instances to handle traffic spikes. After peak hours end, they delete these instances. This keeps resource utilization efficient while ensuring business stability during peak hours.

WorkloadSpread is a controller provided by OpenKruise that can distribute pods of the source workload to different types of nodes based on specific rules. This achieves multi-domain deployment and elastic deployment.

WorkloadSpreads support Jobs, CloneSets, Deployments, ReplicaSets, and StatefulSets. Like ElasticWorkloads, WorkloadSpreads attach to basic workloads. They improve the workloads' adaptability to multiple environments without modifying the configuration of the source workload, which helps meet a variety of deployment requirements. The following code block is an example of a WorkloadSpread:

```yaml
apiVersion: apps.kruise.io/v1alpha1
kind: WorkloadSpread
metadata:
  name: workloadspread-demo
spec:
  targetRef: # WorkloadSpreads support both Kubernetes workloads and Kruise workloads.
    apiVersion: apps/v1 # Use apps.kruise.io/v1alpha1 for a CloneSet.
    kind: Deployment # Or CloneSet.
    name: workload-xxx
  subsets:
  - name: subset-a
    # If the number of pod replicas provisioned by the workload does not exceed three, all pods are scheduled to this subset.
    maxReplicas: 3
    # Node affinity settings.
    requiredNodeSelectorTerm:
      matchExpressions:
      - key: topology.kubernetes.io/zone
        operator: In
        values:
        - zone-a
    patch:
      # Inject an additional custom label into pods that are scheduled to this subset.
      metadata:
        labels:
          xxx-specific-label: xxx
  - name: subset-b
    # This elastic subset is deployed on ACS. The number of pod replicas in this subset is not limited.
    requiredNodeSelectorTerm:
      matchExpressions:
      - key: topology.kubernetes.io/zone
        operator: In
        values:
        - acs-cn-hangzhou
  scheduleStrategy:
    # The scheduling policy: Adaptive or Fixed. With Adaptive, pods that fail to be scheduled in a subset are rescheduled to other subsets.
    type: Adaptive
    adaptive:
      rescheduleCriticalSeconds: 30
```

WorkloadSpreads partition elastic domains by using subsets, which are similar to the elastic units of ElasticWorkloads. Like ElasticWorkloads, WorkloadSpreads scale in across subsets in the reverse order of scale-out.

Compared with ElasticWorkloads, WorkloadSpreads provide additional capabilities developed by the OpenKruise community.

In addition to selecting nodes by label, WorkloadSpreads allow you to customize node selection and toleration rules for the pods in each subset. Use the following fields:

• requiredNodeSelectorTerm: the node attributes that are required for pod scheduling.
• preferredNodeSelectorTerms: the node attributes that are preferred for pod scheduling.
• tolerations: the node taints that the pods tolerate.

With these capabilities, WorkloadSpreads provide fine-grained control over pod scheduling and distribution to meet a variety of complex deployment requirements.

The scheduleStrategy field specifies the scheduling policy for all subsets of a WorkloadSpread. Two policies are available: Fixed and Adaptive. The Fixed policy strictly distributes pods across the predefined subsets, even if some pods then fail to be scheduled. It is suitable for scenarios that require strict pod distribution. The Adaptive policy is more flexible. When a subset cannot accommodate a pod, the system automatically schedules the pending pod to another available subset, which improves the stability and reliability of your business.
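The following minimal Python sketch shows how the two policies differ when a new pod is assigned to a subset. It is a simplified model under stated assumptions, not OpenKruise's implementation. `Subset` and `pick_subset` are hypothetical. The `unschedulable` flag is also hypothetical and stands in for "pods in this subset stayed Pending longer than rescheduleCriticalSeconds".

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Subset:
    """Hypothetical, simplified view of a WorkloadSpread subset."""
    name: str
    max_replicas: Optional[int] = None  # None means unlimited
    current: int = 0                    # pods already placed in this subset
    unschedulable: bool = False         # assumed flag: pods here stayed Pending too long


def has_room(subset: Subset) -> bool:
    return subset.max_replicas is None or subset.current < subset.max_replicas


def pick_subset(subsets: List[Subset], strategy: str) -> Optional[str]:
    """Return the subset for a new pod, checking subsets in list order.

    Fixed: the first subset with room, even if its pods cannot be scheduled.
    Adaptive: the same, but subsets flagged as unschedulable are skipped.
    """
    if strategy not in ("Fixed", "Adaptive"):
        raise ValueError(f"unknown strategy: {strategy}")
    for subset in subsets:
        if not has_room(subset):
            continue
        if strategy == "Adaptive" and subset.unschedulable:
            continue
        return subset.name
    return None


subsets = [
    Subset("subset-a", max_replicas=3, current=1, unschedulable=True),
    Subset("subset-b"),  # unlimited elastic subset
]
print(pick_subset(subsets, "Fixed"))     # subset-a (the pod may stay Pending)
print(pick_subset(subsets, "Adaptive"))  # subset-b
```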

With powerful and efficient scheduling policies, WorkloadSpreads ensure flexible and balanced distribution of pods across different elastic domains in complex business scenarios.

When you configure a subset in a WorkloadSpread, you can use the patch field to customize the pods that are scheduled to this subset. You can customize almost all patchable parameters, including but not limited to the image, resource limits, environment variables, volume mounts, startup commands, probes, and labels. Fine-grained pod customization separates two kinds of configuration. The basic pod configuration lives in the source workload template. The environment-specific configuration lives in the patch field of each subset. This allows your workloads to adapt flexibly to domains with different environmental conditions. The following code blocks provide some examples of custom pod configurations:

```yaml
...
# patch pod with a topology label:
patch:
  metadata:
    labels:
      topology.application.deploy/zone: "zone-a"
...
```

The preceding code block shows how to add a label to all pods in a subset or modify a label on all pods in a subset.

```yaml
...
# patch pod container resources:
patch:
  spec:
    containers:
    - name: main
      resources:
        limits:
          cpu: "2"
          memory: 800Mi
...
```

The preceding code block shows how to modify the resource configurations of all pods in a subset.

```yaml
...
# patch pod container env with a zone name:
patch:
  spec:
    containers:
    - name: main
      env:
      - name: K8S_AZ_NAME
        value: zone-a
...
```

The preceding code block shows how to modify the environment variables of all pods in a subset.
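Assume the patch is applied with Kubernetes strategic-merge-patch semantics. In that case, list entries such as containers and environment variables are matched by name rather than replaced wholesale. The following heavily simplified, hypothetical Python sketch illustrates the effect. It treats every list of named objects as merge-by-name and does not support patch directives such as `$patch: delete`. It is not a substitute for the Kubernetes implementation.

```python
import copy
from typing import Any


def _is_named_list(value: Any) -> bool:
    # True for lists such as containers or env, whose items carry a "name" key.
    return isinstance(value, list) and all(
        isinstance(item, dict) and "name" in item for item in value
    )


def merge_patch(base: Any, patch: Any) -> Any:
    """Return a new object with `patch` merged into `base` (simplified).

    - dicts are merged key by key, recursively;
    - lists of named objects are merged item by item, matched by "name",
      and unmatched patch items are appended;
    - any other patch value replaces the base value.
    """
    if isinstance(base, dict) and isinstance(patch, dict):
        merged = copy.deepcopy(base)
        for key, value in patch.items():
            if key in merged:
                merged[key] = merge_patch(merged[key], value)
            else:
                merged[key] = copy.deepcopy(value)
        return merged
    if _is_named_list(base) and _is_named_list(patch):
        merged = copy.deepcopy(base)
        position = {item["name"]: i for i, item in enumerate(merged)}
        for item in patch:
            if item["name"] in position:
                i = position[item["name"]]
                merged[i] = merge_patch(merged[i], item)
            else:
                merged.append(copy.deepcopy(item))
        return merged
    return copy.deepcopy(patch)


# A pod and a subset patch similar to the env example above (hypothetical values).
pod = {
    "metadata": {"labels": {"app": "demo"}},
    "spec": {"containers": [
        {"name": "main", "image": "demo:v1",
         "env": [{"name": "LOG_LEVEL", "value": "info"}]},
        {"name": "sidecar", "image": "proxy:v1"},
    ]},
}
patch = {"spec": {"containers": [
    {"name": "main", "env": [{"name": "K8S_AZ_NAME", "value": "zone-a"}]},
]}}

result = merge_patch(pod, patch)
print(result["spec"]["containers"][0]["env"])
# [{'name': 'LOG_LEVEL', 'value': 'info'}, {'name': 'K8S_AZ_NAME', 'value': 'zone-a'}]
```

The original image, the existing variable, and the sidecar container survive. Only the named entry in the patch is added, which is why a subset patch can stay small.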

WorkloadSpreads use a pod webhook to modify the configurations of pods created by workloads, not the workload configurations themselves. To scale a workload, you therefore modify the replicas parameter of the source workload. Compared with ElasticWorkloads, WorkloadSpreads are less intrusive because they modify pods through a separate controller and webhook, which also gives them a higher degree of cohesion.

When a pod that matches a WorkloadSpread is created, the webhook intercepts the pod creation request and reads the corresponding WorkloadSpread configuration. The webhook then checks the subsets in the order in which they are listed and selects the first suitable subset. It modifies the pod based on the scheduling settings and custom configurations of that subset. In addition, the controller maintains the controller.kubernetes.io/pod-deletion-cost annotation on all relevant pods so that scale-in activities are performed in the correct order.
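The following Python sketch shows how a deletion-cost annotation can steer the scale-in order. The numeric scheme is a hypothetical choice for illustration: 100 points per position, with the highest value for the first subset. These are not necessarily the values that the WorkloadSpread controller writes. The sketch models only the deletion-cost comparison. The Kubernetes ReplicaSet controller first prefers to delete unscheduled, pending, or not-ready pods. Only then does it compare deletion costs, deleting pods with lower costs first.

```python
from typing import Dict, List

# Annotation honored by the ReplicaSet controller (the PodDeletionCost feature
# is enabled by default since Kubernetes 1.22); lower values are deleted first.
DELETION_COST = "controller.kubernetes.io/pod-deletion-cost"


def assign_deletion_costs(pods: List[Dict], subset_order: List[str]) -> None:
    """Annotate each pod so that pods in later subsets get a lower cost.

    Hypothetical scheme: the first subset gets the highest value. Values are
    strings because Kubernetes annotation values are strings.
    """
    rank = {name: len(subset_order) - i for i, name in enumerate(subset_order)}
    for pod in pods:
        cost = rank[pod["subset"]] * 100
        pod.setdefault("annotations", {})[DELETION_COST] = str(cost)


def pick_victims(pods: List[Dict], count: int) -> List[str]:
    """Return the names of the `count` pods with the lowest deletion cost.

    Only the deletion-cost comparison is modeled; ties keep list order.
    """
    ordered = sorted(pods, key=lambda p: int(p["annotations"][DELETION_COST]))
    return [p["name"] for p in ordered[:count]]


pods = [
    {"name": "pod-1", "subset": "subset-a"},
    {"name": "pod-2", "subset": "subset-a"},
    {"name": "pod-3", "subset": "subset-b"},
]
assign_deletion_costs(pods, ["subset-a", "subset-b"])
print([p["annotations"][DELETION_COST] for p in pods])  # ['200', '200', '100']
print(pick_victims(pods, 1))  # ['pod-3']
```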

WorkloadSpreads involve multiple decoupled components whose operations are only loosely coordinated.

A WorkloadSpread uses a pod webhook to intercept all matching pod creation requests in the cluster. If the webhook pod (the kruise-manager pod) malfunctions or fails, new pods may fail to be created in the cluster. In addition, the webhook may become a performance bottleneck when you perform large-scale scaling activities in a short period of time.

WorkloadSpreads use webhooks to customize pods, which is less intrusive to workloads, but this approach has limits. For example, if the source workload is a CloneSet, the partition field can specify a canary release ratio only for the whole CloneSet, not for each subset. Workload-level features such as this cannot be applied to individual subsets.

In this case, the customer needs to stress test an online system before a shopping festival promotion. For this purpose, the customer develops a load-agent program to generate requests, and creates a CloneSet to control the number of pod replicas for the agent so as to control the amount of traffic for stress tests. After analyzing the business scenario, the customer decides to provision 3,000 pod replicas for the agent and allocate a bandwidth of 200 Mbit/s to every 300 pod replicas. Therefore, the customer purchases 10 Internet Shared Bandwidth instances, each providing a bandwidth of 200 Mbit/s. The customer plans to dynamically allocate the bandwidth to the pod replicas provisioned for the agent.
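The sizing in this scenario can be checked with a few lines of Python. The constants come from the scenario. `package_index` is a hypothetical helper that assumes pods fill the bandwidth instances in scale-out order, 300 pods per instance. `per_pod` is the average share per pod, and it applies only if every pod in an instance transmits at the same time.

```python
import math
from typing import Optional

TOTAL_REPLICAS = 3000        # pod replicas planned for the load agent
REPLICAS_PER_PACKAGE = 300   # pods that share one bandwidth instance
BANDWIDTH_PER_PACKAGE = 200  # Mbit/s per Internet Shared Bandwidth instance

packages = math.ceil(TOTAL_REPLICAS / REPLICAS_PER_PACKAGE)
total_bandwidth = packages * BANDWIDTH_PER_PACKAGE       # Mbit/s
per_pod = BANDWIDTH_PER_PACKAGE / REPLICAS_PER_PACKAGE  # Mbit/s if all pods send at once


def package_index(ordinal: int) -> Optional[int]:
    """Return the 1-based bandwidth instance for the n-th pod in scale-out order,
    or None once every instance already serves REPLICAS_PER_PACKAGE pods."""
    index = (ordinal - 1) // REPLICAS_PER_PACKAGE + 1
    return index if index <= packages else None


print(packages, total_bandwidth, round(per_pod, 2))  # 10 2000 0.67
print([package_index(n) for n in (1, 300, 301, 3000, 3001)])  # [1, 1, 2, 10, None]
```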

The stress testing system releases and dynamically scales the CloneSet that sends requests, so the CloneSet is not suitable for modification. Therefore, the customer creates a WorkloadSpread for the CloneSet to allocate the bandwidth plans. The WorkloadSpread points to the preceding CloneSet in the stress testing cluster and contains 11 subsets. Each of the first 10 subsets allows a maximum of 300 pod replicas. Each also uses a patch to add a pod annotation that associates a bandwidth plan with the pods in the subset. The last subset has no replica limit and no associated bandwidth plan. It hosts any pod replicas beyond the first 3,000.

```yaml
apiVersion: apps.kruise.io/v1alpha1
kind: WorkloadSpread
metadata:
  name: bandwidth-spread
  namespace: loadtest
spec:
  targetRef:
    apiVersion: apps.kruise.io/v1alpha1
    kind: CloneSet
    name: load-agent-XXXXX
  subsets:
  - name: bandwidthPackage-1
    maxReplicas: 300
    patch:
      metadata:
        annotations:
          k8s.aliyun.com/eip-common-bandwidth-package-id: <id1>
  - ...
  - name: bandwidthPackage-10
    maxReplicas: 300
    patch:
      metadata:
        annotations:
          k8s.aliyun.com/eip-common-bandwidth-package-id: <id10>
  - name: no-eip
```

In this case, the customer runs a service on a private cloud. As the business grows, additional compute resources are required, but the data centers cannot be expanded for the time being. Therefore, the customer deploys Virtual Kubelet to use the computing power provided by ACS. The customer's applications use acceleration services such as Fluid. Some Fluid components are pre-deployed as DaemonSets on nodes in the private cloud. These basic services are not deployed in the cloud, so an additional sidecar container must be injected into the pods that run in the cloud to provide acceleration capabilities.

The customer has two requirements. First, the eight pod replicas of the source workload (a Deployment) must remain unchanged. Second, during scale-out activities, modifications may apply only to the pods deployed in the cloud. These requirements can be met by combining the SidecarSet capability of OpenKruise with a WorkloadSpread. The customer first deploys a SidecarSet in the cluster. The SidecarSet injects a sidecar container that runs an acceleration component into pods that have the needs-acceleration-sidecar=true label. Then, the customer creates a WorkloadSpread that adds this label to the pods created in ACS. The following code shows an example of the WorkloadSpread:

```yaml
apiVersion: apps.kruise.io/v1alpha1
kind: WorkloadSpread
metadata:
  name: data-processor-spread
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: data-processor
  subsets:
  - name: local
    maxReplicas: 8
    patch:
      metadata:
        labels:
          needs-acceleration-sidecar: "false"
  - name: aliyun-acs
    patch:
      metadata:
        labels:
          needs-acceleration-sidecar: "true"
```

A UnitedDeployment is a lightweight advanced workload controller provided by the OpenKruise community that supports multi-domain management. ElasticWorkloads and WorkloadSpreads augment an existing base workload. UnitedDeployments instead provide a new model for managing elastic applications across multiple domains. A UnitedDeployment defines an application in a template and creates multiple subordinate workloads in different domains. Its most notable feature is that it is an all-in-one elastic workload. It integrates application lifecycle management operations in a single resource, such as application configuration, domain configuration, capacity management, scaling, and upgrades. The following code block provides an example of a UnitedDeployment:

```yaml
apiVersion: apps.kruise.io/v1alpha1
kind: UnitedDeployment
metadata:
  name: sample-ud
spec:
  replicas: 6
  selector:
    matchLabels:
      app: sample
  template:
    # The UnitedDeployment uses this template to create a separate CloneSet for each domain.
    cloneSetTemplate:
      metadata:
        labels:
          app: sample
      spec:
        # CloneSet Spec
        ...
  topology:
    subsets:
    - name: ecs
      # Pod replicas in this domain are scheduled to managed node pools that have the ecs label.
      nodeSelectorTerm:
        matchExpressions:
        - key: node
          operator: In
          values:
          - ecs
      # At most two pod replicas can be deployed in this domain.
      maxReplicas: 2
    - name: acs-serverless
      # This domain is deployed on ACS, and the number of pod replicas in this domain is unlimited.
      nodeSelectorTerm:
        matchExpressions:
        - key: node
          operator: In
          values:
          - acs-virtual-kubelet
```

UnitedDeployments provide one-stop application management that allows you to define, partition, scale, and upgrade applications by using a single resource.

The UnitedDeployment controller manages a subordinate workload of a corresponding type for each domain based on the workload template. The subordinate workloads do not require additional attention from users. In the preceding figure, the user only needs to manage the blue applications and domains, and the UnitedDeployment controller automatically manages each subordinate workload based on the global configurations, including creation, modification, and deletion. In addition, the controller also monitors the status of pods created by subordinate workloads and makes adjustments when necessary. Since all relevant resources are directly managed by the controller, the timing of all resource operations is controllable. Therefore, the UnitedDeployment controller can always obtain correct information about the relevant resources, and perform operations at the right time, which prevents inconsistency issues.

A UnitedDeployment allocates its configured number of pod replicas among its subordinate workloads and performs the scaling operations itself. Scaling a UnitedDeployment has the same effect as scaling workloads directly, so users do not need to learn anything new.

UnitedDeployments can update and upgrade applications based on the ability to manage subordinate workloads. When a configuration change occurs in the workload template, the change will be synchronized to the corresponding subordinate workload, and the workload controller will execute the specific upgrade logic. This means that features such as in-place upgrades of CloneSets can take effect in each domain. In addition, if the subordinate workloads within a domain support canary releases, UnitedDeployments can also unify their partition field values to achieve fine-grained canary releases across domains.

UnitedDeployments have two built-in capacity allocation algorithms, allowing you to use fine-grained domain capacity configurations to cope with various scenarios of elastic applications.

The elastic allocation algorithm implements the classic elastic capacity allocation method, similar to that of ElasticWorkloads and WorkloadSpreads. You set upper and lower capacity limits for each domain. Pods are then scaled out across the domains in the order in which the domains are listed and scaled in across the domains in reverse order. This method has been described above and is not repeated here.

The specified allocation algorithm is a new way of allocating capacity. It directly specifies the capacity of some domains as a fixed value or a percentage and reserves at least one elastic domain for the remaining pod replicas. This algorithm covers scenarios that the traditional elastic allocation algorithm cannot handle. For example, a fixed capacity suits components such as control nodes and ingress gateways. A fixed percentage suits distributing core pod replicas to nodes in different regions while reserving some elastic resources for bursty traffic.
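The following minimal Python sketch makes specified allocation concrete. Its rounding and remainder rules are assumptions, not necessarily what OpenKruise does:

• Percentages are rounded down.
• Specified domains are served in list order and capped by what is left.
• Any remainder goes to the last domain without a specified value, or to the last domain if every domain is specified.

`specified_allocate` is a hypothetical name.

```python
from typing import Dict, List, Optional, Tuple, Union

# A domain's capacity: a fixed count (2), a percentage ("25%"), or None for elastic.
Capacity = Union[int, str, None]


def specified_allocate(total: int, domains: List[Tuple[str, Capacity]]) -> Dict[str, int]:
    """Split `total` replicas by fixed counts and percentages (hypothetical rules)."""
    alloc: Dict[str, int] = {}
    remaining = total
    elastic: Optional[str] = None
    for name, capacity in domains:
        if capacity is None:
            alloc[name] = 0  # elastic domain: receives the remainder later
            elastic = name
            continue
        if isinstance(capacity, str) and capacity.endswith("%"):
            wanted = total * int(capacity[:-1]) // 100  # percentages round down
        else:
            wanted = int(capacity)
        alloc[name] = min(wanted, remaining)  # never exceed what is left
        remaining -= alloc[name]
    # Any remainder goes to the last elastic domain, or else to the last domain.
    target = elastic if elastic is not None else domains[-1][0]
    alloc[target] += remaining
    return alloc


print(specified_allocate(4, [("intel", "50%"), ("amd64", "25%"), ("yitian-arm", "25%")]))
# {'intel': 2, 'amd64': 1, 'yitian-arm': 1}
print(specified_allocate(10, [("gateway", 2), ("core", "50%"), ("elastic", None)]))
# {'gateway': 2, 'core': 5, 'elastic': 3}
```

Keeping an elastic domain at the end gives rounding leftovers and bursty growth a predictable home, so the fixed and percentage domains stay at their planned sizes.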

In addition to capacity allocation, UnitedDeployments also allow you to configure any Spec field (including the container image) of a pod for each domain, which improves the flexibility of domain configurations. Theoretically, it is even possible to use one UnitedDeployment to manage and deploy a complete set of microservices applications or large language model (LLM) pipelines.

UnitedDeployments provide strong adaptive elasticity. They automatically complete operations such as scaling and rescheduling with little user attention, which reduces O&M costs.

UnitedDeployments support the Kubernetes Horizontal Pod Autoscaler (HPA), which automatically performs scaling operations based on preconfigured conditions. Scaling operations triggered by HPA strictly follow the configuration of each domain. With HPA, when the resources of a domain are about to be exhausted, applications can automatically scale out to other domains, which improves resource utilization.
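The interaction between HPA and domain limits can be sketched in Python. `hpa_desired` implements the core HPA formula, desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). It applies the default 0.1 tolerance and clamps the result to the min/max range. It omits stabilization windows, readiness handling, and the scaling policies of `autoscaling/v2`. `split` is a hypothetical fill-in-order model of how a UnitedDeployment could spread the result over domains. The ECS limit of 4 is invented for the example.

```python
import math
from typing import Dict, List, Optional, Tuple


def hpa_desired(current: int, current_util: float, target_util: float,
                min_replicas: int, max_replicas: int, tolerance: float = 0.1) -> int:
    """Core HPA formula: ceil(current * current_util / target_util), clamped."""
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to the target: no scaling
    else:
        desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


def split(total: int, domains: List[Tuple[str, Optional[int]]]) -> Dict[str, int]:
    """Hypothetical fill-in-order split; a limit of None means unlimited."""
    result: Dict[str, int] = {}
    remaining = total
    for name, limit in domains:
        result[name] = remaining if limit is None else min(remaining, limit)
        remaining -= result[name]
    return result


# 4 pods at 90% average CPU with a 60% target: HPA asks for 6 pods.
desired = hpa_desired(current=4, current_util=90, target_util=60,
                      min_replicas=1, max_replicas=100)
print(desired)  # 6
print(split(desired, [("ecs", 4), ("acs-serverless", None)]))
# {'ecs': 4, 'acs-serverless': 2}
```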

UnitedDeployments also provide adaptive pod rescheduling. Sometimes pods in a domain fail to be scheduled and remain in the Pending state for a long period of time. When the controller detects this, it reschedules them to other domains to maintain the required number of available pods. Rescheduling strictly follows the capacity allocation rules configured for each domain. During scale-out activities, the controller avoids allocating pods to an unschedulable domain even if the domain has remaining capacity. You can configure two settings: the timeout period for scheduling failures, and how long a domain stays marked as unschedulable before it is tried again. This gives you finer control over adaptive scheduling.
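The two timers that govern adaptive rescheduling can be modeled as follows. This Python sketch is a hypothetical simplification, not the UnitedDeployment controller. A domain is marked unschedulable once one of its pods has been Pending for `reschedule_critical_seconds`. The domain is then excluded for `unschedulable_last_seconds`, after which it can be tried again. The parameter names mirror the `rescheduleCriticalSeconds` and `unschedulableLastSeconds` fields used in the UnitedDeployment examples in this article.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AdaptiveTracker:
    """Hypothetical tracker for adaptive rescheduling (times in seconds)."""
    reschedule_critical_seconds: float
    unschedulable_last_seconds: float
    # Domain name -> time at which it was marked unschedulable.
    marked_at: Dict[str, float] = field(default_factory=dict, init=False)

    def observe_pending(self, domain: str, pending_since: float, now: float) -> None:
        # A pod that has been Pending for too long marks its domain as unschedulable.
        if now - pending_since >= self.reschedule_critical_seconds:
            self.marked_at[domain] = now

    def schedulable(self, domain: str, now: float) -> bool:
        marked = self.marked_at.get(domain)
        if marked is None:
            return True
        if now - marked >= self.unschedulable_last_seconds:
            del self.marked_at[domain]  # the exclusion period is over
            return True
        return False

    def candidates(self, domains: List[str], now: float) -> List[str]:
        # Domains that new or rescheduled pods may use, in list order.
        return [d for d in domains if self.schedulable(d, now)]


tracker = AdaptiveTracker(reschedule_critical_seconds=10, unschedulable_last_seconds=3600)
domains = ["ecs", "acs-serverless"]
tracker.observe_pending("ecs", pending_since=0, now=5)   # Pending for 5 s: tolerated
print(tracker.candidates(domains, now=5))     # ['ecs', 'acs-serverless']
tracker.observe_pending("ecs", pending_since=0, now=12)  # Pending for 12 s: too long
print(tracker.candidates(domains, now=12))    # ['acs-serverless']
print(tracker.candidates(domains, now=3612))  # ['ecs', 'acs-serverless']
```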

The adaptability of UnitedDeployments allows users to partition domains without planning the capacity of each domain. The controller automatically allocates pod replicas across domains without user intervention.

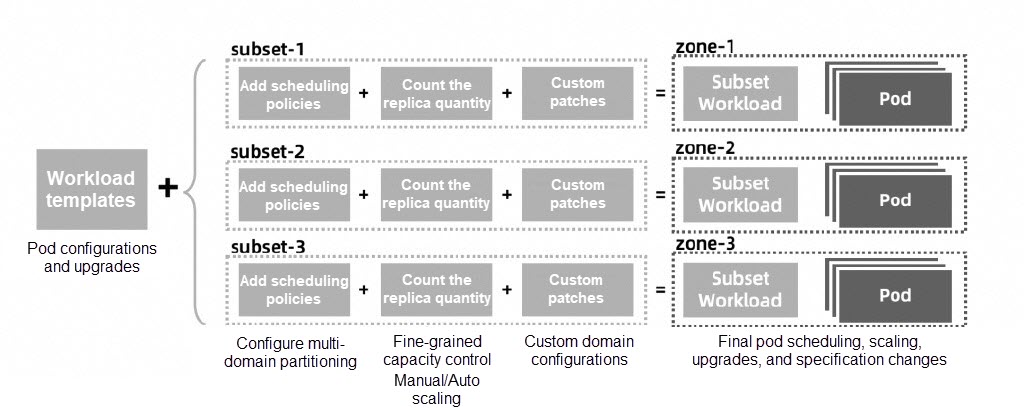

A UnitedDeployment involves three levels of workloads. The UnitedDeployment itself is the first-level workload and contains the workload template, the domain configurations, and the number of pod replicas. The controller creates and manages a subordinate workload for each domain (subset). These are the second-level workloads.

These subordinate workloads are specific instances generated after the workload template is applied to the corresponding domain configurations (such as the scheduling policy, calculated pod replica count, and custom pod configurations) in the UnitedDeployment. The controllers of these subordinate workloads, such as Deployments, CloneSets, and StatefulSets, will manage pods, which are the third-level workloads.

With these three levels, UnitedDeployments do not manage pods directly but can reuse the capabilities of the subordinate workloads. As UnitedDeployments support more subordinate workload types in the future, they will provide more capabilities for more complex elastic scenarios.

Many advantages of UnitedDeployments derive from their one-stop management capabilities as a standalone workload. However, this design also has disadvantages, such as high business intrusion. For existing business, you must adapt upper-layer platforms (such as the O&M system and release system) to migrate from existing workloads, such as Deployments and CloneSets, to UnitedDeployments.

In this case, the customer is about to launch a new business that is expected to have clear peak and off-peak hours. Peak traffic may be tens of times higher than off-peak traffic. To cope with this, the customer purchases a group of ECS instances to create an ACK cluster that handles daily traffic. During peak hours, the customer quickly scales out new pod replicas in ACS to handle traffic spikes. In addition, the customer's applications have particular requirements and need some additional configuration to run in a serverless environment.

The customer requires that ECS instances be used preferentially and that HPA provide elasticity when ECS instances are insufficient. In addition, the customer needs to inject different environment variables for different environments. A UnitedDeployment can meet these complex requirements. The following code block is an example of a UnitedDeployment:

```yaml
apiVersion: apps.kruise.io/v1alpha1
kind: UnitedDeployment
metadata:
  name: elastic-app
spec:
  # The workload template is not displayed.
  ...
  topology:
    # Enable the Adaptive scheduling policy to schedule pods to ECS node pools and ACS instances adaptively.
    scheduleStrategy:
      type: Adaptive
      adaptive:
        # The system attempts to schedule pods to ACS Serverless instances 10 seconds after it fails to schedule pods to ECS nodes.
        rescheduleCriticalSeconds: 10
        # The system no longer schedules pods to ECS nodes within 1 hour after the preceding scheduling failure.
        unschedulableLastSeconds: 3600
    subsets:
    # Pods are preferably scheduled to ECS instances. The number of pod replicas that can be scheduled to ECS instances is unlimited. Pods are scheduled to ACS instances only when they fail to be scheduled to ECS instances.
    # During scale-in activities, pods on ACS instances are deleted first. When all pods on ACS instances are deleted, the system starts to delete pods in ECS node pools.
    - name: ecs
      nodeSelectorTerm:
        matchExpressions:
        - key: type
          operator: NotIn
          values:
          - acs-virtual-kubelet
    - name: acs-serverless
      nodeSelectorTerm:
        matchExpressions:
        - key: type
          operator: In
          values:
          - acs-virtual-kubelet
      # Use a patch to set an environment variable in pods that are scheduled to elastic compute resources so that these pods run in serverless mode.
      patch:
        spec:
          containers:
          - name: main
            env:
            - name: APP_RUNTIME_MODE
              value: SERVERLESS
---
# Enable HPA for elasticity.
# autoscaling/v2 replaces autoscaling/v2beta1, which was removed in Kubernetes 1.25.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: elastic-app-hpa
spec:
  minReplicas: 1
  maxReplicas: 100
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        # Target average CPU utilization, as a percentage of the CPU request.
        type: Utilization
        averageUtilization: 2
  scaleTargetRef:
    apiVersion: apps.kruise.io/v1alpha1
    kind: UnitedDeployment
    name: elastic-app
```

In this case, the customer plans to deploy a new online service on several purchased ECS instances with different CPU architectures: Intel, AMD, and ARM. The customer requires pods on different platforms to deliver similar performance and handle the same QPS. Stress test results show that, to handle the same traffic volume, pods on the AMD platform need more CPU cores and pods on the ARM platform need more memory. Of the purchased ECS instances, about 50% are Intel-based, and AMD-based and ARM-based instances each account for about 25%.

The customer demands that the pods provisioned by the workload of the new service be proportionally allocated to different compute resources and different resource configurations are applied to pods based on the platforms to which the pods are deployed. This ensures stable service performance. To meet the preceding requirements, you can create a UnitedDeployment and use it to customize pod configurations and specify a capacity allocation algorithm. The following code block is an example of a UnitedDeployment:

```yaml
apiVersion: apps.kruise.io/v1alpha1
kind: UnitedDeployment
metadata:
  name: my-app
spec:
  replicas: 4
  selector:
    matchLabels:
      app: my-app
  template:
    deploymentTemplate:
      ... # The workload template is not displayed.
  topology:
    # Schedule 50% of pod replicas to Intel-based ECS instances, 25% to AMD-based ECS instances, and 25% to Yitian 710 ARM-based ECS instances.
    subsets:
    - name: intel
      replicas: 50%
      nodeSelectorTerm:
        ... # Select an Intel-based node pool by label.
      patch:
        spec:
          containers:
          - name: main
            resources:
              limits:
                cpu: 2000m
                memory: 4000Mi
    - name: amd64
      replicas: 25%
      nodeSelectorTerm:
        ... # Select an AMD-based node pool by label.
      # Allocate more CPU cores to pods that are scheduled to AMD-based ECS instances.
      patch:
        spec:
          containers:
          - name: main
            resources:
              limits:
                cpu: 3000m
                memory: 4000Mi
    - name: yitian-arm
      replicas: 25%
      nodeSelectorTerm:
        ... # Select an ARM-based node pool by label.
      # Allocate more memory to pods that are scheduled to ARM-based ECS instances.
      patch:
        spec:
          containers:
          - name: main
            resources:
              limits:
                cpu: 2000m
                memory: 6000Mi
```

You can use ResourcePolicies provided by the ACK scheduler to specify scheduling policies. A ResourcePolicy allows you to specify the order in which pods are scheduled to different types of node resources. During scale-in, pods are removed in the reverse order. The following code block is an example of a ResourcePolicy:

apiVersion: scheduling.alibabacloud.com/v1alpha1
kind: ResourcePolicy
metadata:
  name: test
  namespace: default
spec:
  # Also count pods that existed before this ResourcePolicy was created.
  ignorePreviousPod: false
  # Ignore pods in the Terminating state.
  ignoreTerminatingPod: true
  # Count pod replicas separately for each value of the specified label keys.
  matchLabelKeys:
  - pod-template-hash
  # Preempt resources only when pods fail to be scheduled to the last unit.
  preemptPolicy: AfterAllUnits
  # Conditions used to select the pods that this policy manages.
  selector:
    key1: value1
  strategy: prefer
  # The following two units correspond to an ECS node pool and elastic container instances, respectively.
  units:
  - nodeSelector:
      unit: first
    resource: ecs
  - resource: eci
  # When pods fail to be scheduled to a unit, the scheduler attempts to schedule them to the next unit.
  whenTryNextUnits:
    policy: TimeoutOrExceedMax
    timeout: 1m

In elastic scenarios, both WorkloadSpreads and UnitedDeployments require an external component (OpenKruise) to be installed. This component provides a rich variety of features, but it is complex and is not specialized for pod scheduling. ResourcePolicies are an ACK-native capability: they let you customize pod scheduling policies without installing additional components in your clusters.

ResourcePolicies allow you to select pods by label and use the units parameter to specify custom scheduling units. During scale-out activities, pods are scheduled to the units in the order in which the units are listed. During scale-in activities, pods are deleted from the units in the reverse order. Currently, ResourcePolicies support three resource types: elastic container instances (eci), ECS instances (ecs), and elastic resources such as ACS instances (elastic).

A ResourcePolicy manages all pods in the same namespace whose labels match its selector. These pods are distributed across multiple predefined units in priority order. You can specify a wide range of custom configurations for units. For example, you can use the max parameter to specify the maximum number of pod replicas that can be scheduled to a unit, and use the nodeSelector parameter to specify the labels used to select ECS nodes.
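The ordering rules above can be simulated in a few lines. In this minimal sketch, assume that every unit has enough resources and that only the max parameter limits placement. Scale-out fills units in the order they are listed, and scale-in drains them in reverse order. `Unit`, `scale_out`, and `scale_in` are hypothetical names, and the sketch ignores whenTryNextUnits and preemption.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Unit:
    name: str
    max: Optional[int] = None  # None: no capacity limit for this unit
    pods: int = 0


def scale_out(units: List[Unit], count: int) -> None:
    """Add `count` pods, filling units in the order in which they are listed."""
    for _ in range(count):
        for unit in units:
            if unit.max is None or unit.pods < unit.max:
                unit.pods += 1
                break
        else:
            raise RuntimeError("every unit is at its max")


def scale_in(units: List[Unit], count: int) -> None:
    """Remove `count` pods, draining units in the reverse order."""
    for _ in range(count):
        for unit in reversed(units):
            if unit.pods > 0:
                unit.pods -= 1
                break
        else:
            raise RuntimeError("no pods left to remove")


units = [Unit("ecs", max=4), Unit("elastic")]
scale_out(units, 10)
print([(u.name, u.pods) for u in units])
scale_in(units, 5)
print([(u.name, u.pods) for u in units])
```

After scaling out to 10 pods, the ecs unit holds 4 and the elastic unit holds 6. Scaling in by 5 then removes pods only from the elastic unit, which leaves 4 and 1.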

If multiple units are specified in a ResourcePolicy, you can use the preemptPolicy parameter to control when the scheduler attempts to preempt resources after pods fail to be scheduled. With the BeforeNextUnit policy, the scheduler attempts preemption each time pods fail to be scheduled to a unit, before it tries the next unit. With the AfterAllUnits policy, the scheduler attempts preemption only after pods fail to be scheduled to the last unit. WorkloadSpreads and UnitedDeployments reschedule pods only after a timeout. By contrast, this unit-level preemption control gives finer-grained and more timely adjustment of pod scheduling.
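To make the difference between the two preemptPolicy values concrete, the hypothetical helper below lists the actions tried for a pod that keeps failing to schedule. This is a rough model of the behavior described above and not the ACK scheduler's implementation. In particular, the sketch does not claim where AfterAllUnits preempts. It only records that preemption happens once, after the last unit.

```python
from typing import List


def attempt_order(units: List[str], preempt_policy: str) -> List[str]:
    """Return the actions tried, in order, for a pod that fails every attempt.

    Hypothetical model of preemptPolicy, not the ACK scheduler's code.
    """
    if preempt_policy == "BeforeNextUnit":
        steps: List[str] = []
        for unit in units:
            # Try the unit, then try preemption there before moving on.
            steps += [f"schedule:{unit}", f"preempt:{unit}"]
        return steps
    if preempt_policy == "AfterAllUnits":
        # Try every unit first; preempt only after the last unit fails.
        return [f"schedule:{unit}" for unit in units] + ["preempt"]
    raise ValueError(f"unknown preemptPolicy: {preempt_policy}")


print(attempt_order(["ecs", "eci"], "BeforeNextUnit"))
print(attempt_order(["ecs", "eci"], "AfterAllUnits"))
```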

By default, a WorkloadSpread or ElasticWorkload ignores pods that already exist when it is created and manages only newly created pods, and users cannot change this behavior. ResourcePolicies provide finer-grained control in this regard.

After you use the max parameter to configure a capacity limit for each unit, you can set ignorePreviousPod to true so that pods scheduled before the ResourcePolicy was created are not counted toward the limit. You can also set ignoreTerminatingPod to true so that pod replicas in the Terminating state are not counted.
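The two switches act as filters on which pods count toward a unit's max. The minimal sketch below assumes that each pod carries a scheduling timestamp and a terminating flag. `Pod` and `counted_pods` are hypothetical names for illustration, not ACK APIs.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class Pod:
    name: str
    scheduled_at: datetime
    terminating: bool = False


def counted_pods(pods: List[Pod], policy_created: datetime,
                 ignore_previous_pod: bool, ignore_terminating_pod: bool) -> List[Pod]:
    """Return the pods that count toward a unit's max (hypothetical model)."""
    result = []
    for pod in pods:
        # ignorePreviousPod: skip pods scheduled before the policy existed.
        if ignore_previous_pod and pod.scheduled_at < policy_created:
            continue
        # ignoreTerminatingPod: skip pods that are shutting down.
        if ignore_terminating_pod and pod.terminating:
            continue
        result.append(pod)
    return result


policy_time = datetime(2024, 1, 1, 12, 0)
pods = [
    Pod("old", datetime(2024, 1, 1, 0, 0)),
    Pod("new", datetime(2024, 1, 2)),
    Pod("leaving", datetime(2024, 1, 2), terminating=True),
]
print([p.name for p in counted_pods(pods, policy_time, True, True)])
```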

You can also specify a group of label keys in the matchLabelKeys parameter of a ResourcePolicy. This way, the scheduler groups the selected pods by the values of the specified label keys, and the capacity limit specified by the max parameter applies to each group separately.
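A typical use of matchLabelKeys is `pod-template-hash`, which separates the pods of the old and new ReplicaSets during a rolling update. The sketch below counts pods per group and checks a new pod against a unit's max. It is hypothetical: `group_counts` and `fits_in_unit` are illustrative names, and treating a missing label key as `None` is an assumption about edge-case behavior.

```python
from collections import Counter
from typing import Dict, List

Labels = Dict[str, str]


def group_counts(pods: List[Labels], match_label_keys: List[str]) -> Counter:
    """Count pods per group; a group is the tuple of values of match_label_keys."""
    # Assumption: a pod missing a key is grouped under None for that key.
    return Counter(tuple(labels.get(k) for k in match_label_keys) for labels in pods)


def fits_in_unit(existing: List[Labels], new_pod: Labels,
                 match_label_keys: List[str], unit_max: int) -> bool:
    """Check the unit's max against the new pod's own group only."""
    key = tuple(new_pod.get(k) for k in match_label_keys)
    return group_counts(existing, match_label_keys)[key] < unit_max


# Four pods from the old ReplicaSet already fill an ecs unit with max 4.
ecs_pods = [{"app": "web", "pod-template-hash": "aaa"} for _ in range(4)]
print(fits_in_unit(ecs_pods, {"app": "web", "pod-template-hash": "bbb"},
                   ["pod-template-hash"], 4))
```

Because the limit is applied per group, a pod from the new ReplicaSet (hash `bbb`) can still be placed in the unit, even though the old ReplicaSet's pods have reached max.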

In most cases, a ResourcePolicy allocates pods to units in the order in which the units are listed. If the scheduler finds that the current unit has insufficient resources before a pod is scheduled, it attempts to schedule the pod to the next unit. This prevents pods from remaining pending because of scheduling failures. You can use the whenTryNextUnits parameter to configure this behavior in a fine-grained manner.

Currently, ResourcePolicies support four adaptive unit scheduling policies: ExceedMax, LackResourceAndNoTerminating, TimeoutOrExceedMax, and LackResourceOrExceedMax.

• ExceedMax: When the max parameter for a unit is not specified or the pod count within the unit meets or exceeds the max parameter value, pods may utilize resources from the following unit.

• TimeoutOrExceedMax: This policy applies when one of two conditions holds: the max parameter is specified for a unit and the unit hosts fewer pods than the max value, or the max parameter is not specified and the unit is of the elastic type. In either case, if the unit has insufficient resources, pods wait in the current unit and are scheduled to the next unit only after the specified timeout period elapses.

• LackResourceOrExceedMax: If the number of pods in a unit reaches or exceeds the max parameter value, or if resources in the unit are insufficient, pods may utilize resources from the following unit. This is the default policy and is suitable for most scenarios.

• LackResourceAndNoTerminating: If the number of pods in a unit reaches or exceeds the max parameter value, or the unit has insufficient resources and no pod in the unit is in the Terminating state, pods may use resources from the next unit. This policy is suitable for rolling updates because it prevents new pods from being dispatched to the next units while pods in the current unit are still terminating.
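The four whenTryNextUnits policies can be read as predicates that decide whether a pod may move on to the next unit. The sketch below encodes the conditions as listed above. `UnitState` and `may_use_next_unit` are hypothetical names. The default 60-second timeout mirrors the `timeout: 1m` in the earlier example. For TimeoutOrExceedMax, the case in which max is unset and the unit is not elastic is not described in the text, so the sketch assumes that the pod may move on.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UnitState:
    pods: int
    max: Optional[int] = None      # None: max is not specified
    elastic: bool = False          # unit of the elastic type
    lacks_resources: bool = False
    has_terminating: bool = False  # some pod in the unit is Terminating
    waited_s: float = 0.0          # how long the pod has waited on this unit


def may_use_next_unit(policy: str, u: UnitState, timeout_s: float = 60.0) -> bool:
    """Return True if a pending pod may use resources from the next unit."""
    at_max = u.max is not None and u.pods >= u.max
    if policy == "ExceedMax":
        return u.max is None or at_max
    if policy == "LackResourceOrExceedMax":
        return at_max or u.lacks_resources
    if policy == "LackResourceAndNoTerminating":
        return at_max or (u.lacks_resources and not u.has_terminating)
    if policy == "TimeoutOrExceedMax":
        below_max = u.max is not None and u.pods < u.max
        if below_max or (u.max is None and u.elastic):
            # Wait on this unit; move on only once the timeout has elapsed.
            return u.lacks_resources and u.waited_s >= timeout_s
        # Assumption: otherwise (max reached, or unset on a non-elastic unit) move on.
        return True
    raise ValueError(f"unknown policy: {policy}")


print(may_use_next_unit("TimeoutOrExceedMax",
                        UnitState(pods=2, max=4, lacks_resources=True, waited_s=30)))
```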

WorkloadSpreads and UnitedDeployments reschedule pods only after the pods become pending. By contrast, the adaptive unit scheduling of ResourcePolicies is performed by the ACK scheduler itself, so it can move pods on to the next unit before they become pending.

ResourcePolicies are provided by the ACK scheduler and are therefore integrated with the capabilities of ACK. For example, ResourcePolicies support elasticity based on ACK node pools, which are not Kubernetes-native resources. To achieve a similar elastic effect with other components, you must select nodes from each node pool separately by using label selectors. ResourcePolicies provide a more intuitive and convenient way to use ACK-native capabilities.

ResourcePolicies are provided by the ACK scheduler and therefore are not available in other Kubernetes clusters. In addition, ResourcePolicies are specialized for pod scheduling and do not allow you to customize pod configurations for different scheduling domains, which is supported by WorkloadSpreads and UnitedDeployments.

In this case, the customer's service traffic is relatively stable. To handle daily traffic, you only need to deploy four pods in an ACK cluster and allocate 4 vCores and 8 GiB of memory to each pod. However, occasionally (about once or twice a year), related trending events cause large volumes of bursty traffic. The service may then be overwhelmed or even fail, which increases O&M costs. To resolve this issue, the customer wants to use ACS to make the service elastic. When traffic spikes occur during peak hours, additional elastic compute resources are launched to handle them. When peak hours end, these additional resources are released.

Traffic spikes are infrequent, so installing OpenKruise just to handle them is not cost-effective. In addition, some features of OpenKruise are not needed to handle traffic spikes. ResourcePolicies are therefore better suited to the customer's requirements. The four existing pod replicas do not need to be modified. You only need to launch ACS pods during peak hours and delete them when peak hours end. The following code block is an example of a ResourcePolicy:

apiVersion: scheduling.alibabacloud.com/v1alpha1
kind: ResourcePolicy
metadata:
  name: news-rp
  namespace: default
spec:
  selector:
    app: newx-xxx
  strategy: prefer
  units:
  - resource: ecs
    max: 4 # When more than four pods exist, the fifth and subsequent pod replicas are scheduled to elastic resources (ACS). During scale-in activities, pods on ACS are deleted first.
    nodeSelector:
      paidtype: subscription
  - resource: elastic

The preceding example schedules the pod replicas created during scale-out activities to ACS instances without requiring any changes to the business workload. In addition, no extra components need to be installed and maintained, which avoids additional resource and O&M overhead.

Elastic compute power can greatly reduce business costs and effectively increase the upper limit of service performance. To make full use of elastic compute power, you need to select appropriate elastic technologies based on your business characteristics. The following table compares the capabilities of the four components described in this article.

| Component | Cluster requirements | Partitioning implementation | Integration complexity (lower means less intrusive) | Partitioning granularity | Degree of customization | Scheduling flexibility | Open source |
|---|---|---|---|---|---|---|---|
| ElasticWorkload (Deprecated) | ACK clusters that run Kubernetes 1.18 or earlier | Replicate the source workload | Medium | Coarse-grained | N/A | Low | No |
| WorkloadSpread | Kubernetes 1.18 or later | Modify pod configurations by using webhooks | Low | Medium-grained | Medium | Medium | Yes |
| UnitedDeployment | Kubernetes 1.18 or later | Use a template to create multiple workloads | High | Fine-grained | High | Medium | Yes |
| ResourcePolicy | ACK Pro clusters that run Kubernetes 1.20 or later | Use the scheduler directly to implement elasticity | Low | Fine-grained | N/A | High | No |
