By Lu Chen (Luchen)

Demand for LLM inference services shows pronounced tidal traffic: it surges during specific periods and stays relatively low at other times. This unbalanced pattern makes GPU computing resources hard to allocate and manage. When LLM inference services are deployed in on-premises data centers (IDCs) in particular, computing resources are often insufficient during peak hours and sit idle during off-peak hours. The idle capacity is wasted, while the peak-hour shortage impairs service availability and response speed, which in turn degrades user experience and business continuity.

To address this issue, we provide a hybrid cloud LLM elastic inference solution based on ACK Edge. ACK Edge manages on-premises and cloud computing resources in a unified manner. On-premises GPU resources are used first for inference tasks. During peak periods, when on-premises resources are insufficient, ACK Edge quickly allocates GPU resources on the cloud to handle the additional traffic. Because resource usage follows actual demand, enterprises avoid paying for unneeded capacity during off-peak hours and still obtain enough computing resources during peak hours. This lowers the operating costs of LLM inference services, keeps the service stable, and avoids idle, wasted resources.

The overall solution is built on ACK Edge, which provides integrated cloud-edge management to manage on-premises and cloud computing resources in a unified manner and to allocate computing tasks dynamically. In addition, we run KServe in the cluster and configure auto scaling policies with it to quickly deploy elastic LLM inference services.

The solution uses the custom priority-based resource scheduling (ResourcePolicy) capability of ACK Edge to assign priorities to resource pools, so that the resource pools in the data center are always used first for inference tasks and handle the load during off-peak hours. During peak hours, KServe relies on the monitoring capabilities of ACK Edge to track GPU usage and business workloads in real time and scales out the inference service replicas as demand grows. At this point, the on-premises GPU resource pool may not be able to absorb the burst of computing demand. With a pre-configured elastic node pool, the system automatically provisions GPU resources on the cloud to take on the peak traffic and keep the service continuous and stable.

ACK Edge is a cloud-native application platform that provides standard Kubernetes clusters managed on the cloud and supports connecting edge computing resources, quick service access, and centralized management and O&M, enabling cloud-edge collaboration. On the cloud side, ACK Edge lets you directly use the rich ecosystem capabilities of Alibaba Cloud, including networking, storage, elasticity, security, monitoring, and logging. For the complex environments of edge scenarios, ACK Edge provides edge node autonomy, cross-domain container networking, multi-region workload management, and service management to address common edge pain points.

KServe is an open source, cloud-native model serving platform designed to simplify deploying and running machine learning models on Kubernetes. It supports multiple machine learning frameworks and provides auto scaling. With its declarative APIs, you deploy a model by writing a simple YAML configuration file, which makes model services easy to configure and manage.

KServe provides a set of CustomResourceDefinitions (CRDs) for managing and serving machine learning models. It offers easy-to-use high-level interfaces and standardized data plane protocols for a wide range of models, such as TensorFlow, XGBoost, scikit-learn, PyTorch, and Hugging Face Transformers/LLMs. KServe also encapsulates the complexity of auto scaling, networking, health checking, and server configuration, and builds features such as GPU auto scaling, Scale to Zero, and Canary Rollouts on top of them. These features simplify deploying and maintaining AI models.

Node auto scaling automatically adjusts cluster resources to meet the scheduling requirements of application pods. The process is managed by the cluster-autoscaler component, which periodically checks the cluster state and scales nodes as needed. When a pod cannot be scheduled because of insufficient resources, cluster-autoscaler detects it and determines whether adding nodes would allow the pod to run. It simulates the scheduling process, identifies a node pool that meets the pod's requirements, and automatically adds nodes to that pool. This mechanism manages resources efficiently and keeps applications running stably.
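To make the scale-up decision concrete, here is a heavily simplified Python sketch. It is not cluster-autoscaler's actual algorithm, which runs the full scheduler simulation and picks among candidate pools with a configurable expander. Here, as assumptions, pods are described only by their GPU request, candidate pools are tried in list order, and pods are packed onto nodes added in the same round before a new node is requested. All names (`NodePool`, `Pod`, `scale_up`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class NodePool:
    """A scalable node pool, described only by its node template's GPU count (simplification)."""
    name: str
    gpus_per_node: int  # GPUs on every node created from this pool's template
    max_nodes: int      # upper bound on the pool size
    nodes: int = 0      # current number of nodes in the pool


@dataclass
class Pod:
    name: str
    gpus: int  # GPUs requested by the pod


def scale_up(pending, pools):
    """Simulate one scale-up round for pods the scheduler could not place.

    Returns (nodes added per pool, names of pods that still cannot be placed).
    """
    added = {}
    new_nodes = []  # [pool name, free GPUs] for each node added in this round
    still_pending = []
    for pod in pending:
        # First try to pack the pod onto a node already added in this round.
        node = next((n for n in new_nodes if n[1] >= pod.gpus), None)
        if node is None:
            # Otherwise pick the first pool whose node template fits the pod and can still grow.
            pool = next((p for p in pools
                         if p.gpus_per_node >= pod.gpus and p.nodes < p.max_nodes), None)
            if pool is None:
                still_pending.append(pod.name)  # no pool can host it: the pod stays Pending
                continue
            pool.nodes += 1
            added[pool.name] = added.get(pool.name, 0) + 1
            node = [pool.name, pool.gpus_per_node]
            new_nodes.append(node)
        node[1] -= pod.gpus  # simulate binding the pod to that node
    return added, still_pending


if __name__ == "__main__":
    # Hypothetical elastic pool with one GPU per node and two pending single-GPU pods.
    elastic = [NodePool("elastic-gpu", gpus_per_node=1, max_nodes=5)]
    print(scale_up([Pod("replica-2", 1), Pod("replica-3", 1)], elastic))  # ({'elastic-gpu': 2}, [])
```

With one-GPU nodes, each pending single-GPU pod needs its own node; with a two-GPU node template, the same two pods would fit on a single new node.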

Custom priority-based resource scheduling is an advanced elastic scheduling policy provided by the ACK Edge scheduler to meet fine-grained management requirements for different types of resources. With it, you define resource policies that precisely control the order in which pods are scheduled onto different types of node resources when an application is released or scaled out.

Specifically, you can pre-define the scheduling order of pods based on business requirements and resource characteristics. For example, high-performance compute nodes can be given priority for application instances with heavy computing demands, while nodes with rich storage resources can be given priority for instances that need to store large amounts of data. In addition, during scale-in, a custom priority-based scheduling policy removes pods in the reverse of the order used at release or scale-out. For example, if high-performance compute nodes come first in the order and storage-rich nodes second, pods on the storage-rich nodes are released first and pods on the high-performance compute nodes are released last.
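The ordering and reverse scale-in rules can be illustrated with a small Python model. This is a sketch under stated assumptions, not the ACK scheduler: each hypothetical `Unit` stands for one group of nodes in the priority order, its capacity is counted in pods rather than CPU or GPU resources, and within a unit the most recently placed pod is removed first.

```python
from dataclasses import dataclass, field


@dataclass
class Unit:
    """One group of nodes in the priority order (hypothetical simplification)."""
    name: str
    capacity: int                             # how many pods the unit can host
    pods: list = field(default_factory=list)  # pods placed in this unit, oldest first


def schedule(pod, units):
    """Place the pod in the first unit, in priority order, that still has room."""
    for unit in units:
        if len(unit.pods) < unit.capacity:
            unit.pods.append(pod)
            return unit.name
    return None  # no unit has room: the pod stays Pending


def scale_in(units):
    """Remove one pod, starting from the lowest-priority unit (reverse order)."""
    for unit in reversed(units):
        if unit.pods:
            return unit.pods.pop()  # within a unit, drop the most recently placed pod
    return None


if __name__ == "__main__":
    # One on-premises GPU slot first, then two cloud elastic slots.
    units = [Unit("on-prem", capacity=1), Unit("elastic", capacity=2)]
    print([schedule(p, units) for p in ("a", "b", "c")])  # ['on-prem', 'elastic', 'elastic']
    print(scale_in(units), scale_in(units), scale_in(units))  # c b a
```

The on-premises unit fills first and empties last, which is the behavior this solution relies on to keep steady-state traffic in the data center.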

When the environment is ready, the resources in the cluster can be divided into three categories based on node pools:

• Cloud control resource pool: runs control components such as ACK Edge, KServe, and cluster-autoscaler.

• On-premises resource pool: contains the user's on-premises resources and hosts LLM inference services.

• Cloud elastic resource pool: scales with the resource demand of the cluster and hosts LLM inference services during peak hours.

You can use OSS or NAS to prepare model data. For more information, see Step 1 in Deploy a vLLM model as an inference service.

You can create a ResourcePolicy object to define priority-based resource scheduling rules. For example, the following rule applies to pods whose labels match the selector app: isvc.qwen-predictor. It specifies that these pods are scheduled to the on-premises resource pool first and then to the cloud elastic resource pool. For more information about how to use ResourcePolicy, see Configure priority-based resource scheduling in the Alibaba Cloud Container Service for Kubernetes (ACK) documentation.

```yaml
apiVersion: scheduling.alibabacloud.com/v1alpha1
kind: ResourcePolicy
metadata:
  name: qwen-chat
  namespace: default
spec:
  selector:
    app: isvc.qwen-predictor # You must specify the label of the pods to which you want to apply the ResourcePolicy.
  strategy: prefer
  units:
  - resource: ecs
    nodeSelector:
      alibabacloud.com/nodepool-id: npxxxxxx # On-premises resource pool
  - resource: elastic
    nodeSelector:
      alibabacloud.com/nodepool-id: npxxxxxy # Elastic resource pool
```

With the Arena client, a single command starts an elastic LLM inference service deployed with KServe. The command names the inference service qwen-chat, uses the vLLM inference framework, and scales the application on GPU utilization (DCGM_CUSTOM_PROCESS_SM_UTIL). For other supported metrics, see the documentation. When GPU utilization exceeds 50%, replicas are scaled out, with a minimum of 1 replica and a maximum of 3. Each pod requests one GPU, 4 CPU cores, and 12 GiB of memory. The model is stored in the llm-model PVC prepared in advance.

```shell
arena serve kserve \
    --name=qwen-chat \
    --image=kube-ai-registry.cn-shanghai.cr.aliyuncs.com/kube-ai/vllm:0.4.1 \
    --scale-metric=DCGM_CUSTOM_PROCESS_SM_UTIL \
    --scale-target=50 \
    --min-replicas=1 \
    --max-replicas=3 \
    --gpus=1 \
    --cpu=4 \
    --memory=12Gi \
    --data="llm-model:/mnt/models/Qwen" \
    "python3 -m vllm.entrypoints.openai.api_server --port 8080 --trust-remote-code --served-model-name qwen --model /mnt/models/Qwen --gpu-memory-utilization 0.95 --quantization gptq --max-model-len=6144"
```

Once deployed, the LLM inference service scales automatically. To verify that the deployment succeeded, send a request directly to the service. The request address can be found in the details of the Ingress resource that KServe creates automatically.

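If you prefer to call the service from code rather than with curl, the same chat completion request can be built with the Python standard library. This is a minimal sketch: the gateway address and host are the placeholders from the curl command that follows, the helper names are hypothetical, and `ask` assumes the OpenAI-compatible response format returned by vLLM's `api_server`.

```python
import json
import urllib.request

# Placeholders from the example: replace with the address and host from your Ingress.
GATEWAY = "http://xx.xx.xx.xx:80"
SERVICE_HOST = "qwen-chat-default.example.com"


def build_chat_request(prompt, max_tokens=512):
    """Build (but do not send) a POST to the OpenAI-compatible /v1/chat/completions route."""
    body = {
        "model": "qwen",  # matches --served-model-name in the arena command
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0.7,
        "top_p": 0.9,
        "seed": 10,
        "stop": ["<|endoftext|>", "<|im_end|>", "<|im_start|>"],
    }
    return urllib.request.Request(
        f"{GATEWAY}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            # The Host header lets the Ingress route the request to the qwen-chat service.
            "Host": SERVICE_HOST,
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt, timeout=60):
    """Send the request and return the content of the first choice."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

After replacing the placeholders, `print(ask("Hello"))` sends one request and prints the model's reply.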
```shell
curl -H "Host: qwen-chat-default.example.com" \
    -H "Content-Type: application/json" \
    http://xx.xx.xx.xx:80/v1/chat/completions \
    -X POST \
    -d '{"model": "qwen", "messages": [{"role": "user", "content": "Hello"}], "max_tokens": 512, "temperature": 0.7, "top_p": 0.9, "seed": 10, "stop":["<|endoftext|>", "<|im_end|>", "<|im_start|>"]}'
```

Next, we use the stress testing tool hey to send a large number of requests to the service for two minutes with five concurrent workers (-z 2m -c 5).

hey -z 2m -c 5 \

-m POST -host qwen-chat-default.example.com \

-H "Content-Type: application/json" \

-d '{"model": "qwen", "messages": [{"role": "user", "content": "Test"}], "max_tokens": 10, "temperature": 0.7, "top_p": 0.9, "seed": 10}' \

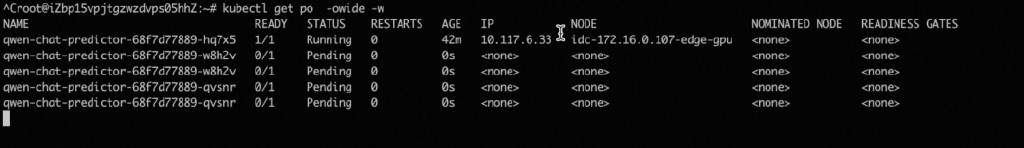

http://xx.xx.xx.xx:80/v1/chat/completionsFirst, these requests are sent to the existing pods. The GPU usage exceeds the threshold due to excessive requests. In this case, the application HPA rule we defined earlier is triggered to start scaling out the replicas. You can see that three replicas appear in the following figure.
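The scale-out decision follows the standard Kubernetes HPA formula, desiredReplicas = ceil(currentReplicas × currentMetricValue / targetValue), clamped to the configured minimum and maximum. The Python sketch below applies it to per-pod GPU utilization with the target of 50 and the bounds of 1 and 3 from the arena command. It is a simplification: it keeps the HPA's default 10% tolerance but omits the scale-down stabilization window, missing-metric handling, and scaling-rate policies, and the utilization readings in the usage are hypothetical.

```python
import math


def desired_replicas(pod_utilization, target, min_replicas, max_replicas, tolerance=0.1):
    """Apply the Kubernetes HPA formula to per-pod metric values.

    desired = ceil(current_replicas * current_average / target), left unchanged when the
    ratio is within the tolerance, then clamped to [min_replicas, max_replicas].
    """
    if not pod_utilization:
        raise ValueError("need at least one pod's metric value")
    current = len(pod_utilization)
    average = sum(pod_utilization) / current
    ratio = average / target
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to the target: no change
    else:
        desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical SM utilization readings (%), target 50, bounds 1..3 as in the arena command.
    print(desired_replicas([95], 50, 1, 3))      # 1 pod at 95% -> ceil(1.9) = 2
    print(desired_replicas([90, 80], 50, 1, 3))  # average 85% -> ceil(3.4) = 4, capped at 3
```

Because the formula multiplies the current replica count by the utilization ratio, a single overloaded pod can at most double the replicas in one step; reaching the maximum of three may take more than one HPA evaluation, and the max-replicas bound caps any overshoot.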

Because the on-premises data center in our test environment has only one GPU, the two newly created pods cannot find available resources and remain in the Pending state. These pending pods trigger a scale-out of the elastic node pool, and cluster-autoscaler automatically adds two GPU nodes on the cloud to host them.

The tidal traffic of LLM inference services makes computing resources difficult to allocate. In this article, we presented a hybrid cloud LLM elastic inference solution based on ACK Edge. By managing on-premises and cloud resources in a unified manner, the solution uses on-premises GPU resources first during off-peak hours and automatically scales out to cloud resources during peak hours. Adjusting resources dynamically in this way reduces operating costs while keeping the service stable and resource utilization high.
