Adam Optimizer in TensorFlow

Last Updated: 25 May, 2025

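Adam (Adaptive Moment Estimation) is an optimizer that combines ideas from two other optimizers: Momentum, which keeps a running average of past gradients, and RMSprop, which scales each update by a running average of squared gradients. Adam is widely used in deep learning because it is efficient and adapts the effective learning rate for each parameter.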
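To make the combination concrete, here is a minimal pure-Python sketch of the Adam update for a single scalar parameter. It is an illustration of the standard update rule, not TensorFlow's implementation; the function name `adam_minimize`, the toy objective and the learning rate of 0.1 are assumptions chosen for the example.

```python
import math

def adam_minimize(grad_fn, x0, steps=200, learning_rate=0.1,
                  beta_1=0.9, beta_2=0.999, epsilon=1e-7):
    """Minimize a scalar function with Adam, given its gradient grad_fn."""
    x = x0
    m = 0.0  # first moment: running mean of gradients (the Momentum part)
    v = 0.0  # second moment: running mean of squared gradients (the RMSprop part)
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta_1 * m + (1 - beta_1) * g
        v = beta_2 * v + (1 - beta_2) * g * g
        # Bias correction: m and v start at 0, so early estimates are too small.
        m_hat = m / (1 - beta_1 ** t)
        v_hat = v / (1 - beta_2 ** t)
        x -= learning_rate * m_hat / (math.sqrt(v_hat) + epsilon)
    return x

# Toy problem (an assumption for illustration): f(x) = (x - 3)^2,
# whose gradient is 2 * (x - 3) and whose minimum is at x = 3.
x_min = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
print(x_min)
```

The result should land close to 3. The parameter names mirror the arguments of the Keras Adam class so the two can be compared side by side.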

To use Adam in TensorFlow we can pass the string value 'adam' to the optimizer argument of the model.compile() function. Here's a simple example of how to do this:

model.compile(optimizer="adam")

This creates an Adam optimizer with default values for parameters such as the learning rate and the beta coefficients. Alternatively, we can use the Adam class provided in tf.keras.optimizers and set these values ourselves. Below is the syntax for using the Adam class directly:

Adam(learning_rate, beta_1, beta_2, epsilon, amsgrad, name)

Here is a description of the parameters in the Adam optimizer:

- learning_rate: The learning rate to use in the algorithm (default value: 0.001).

- beta_1: The exponential decay rate for the 1st moment estimates (default value: 0.9).

- beta_2: The exponential decay rate for the 2nd moment estimates (default value: 0.999).

- epsilon: A small constant for numerical stability (default value: 1e-7).

- amsgrad: A boolean flag to specify whether to apply the AMSGrad variant (default value: False).

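- name: Optional name for the operations created when applying gradients (default value: 'adam' in recent Keras versions, matching the configuration printed later in this article; older tf.keras releases used 'Adam').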
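As a short sketch of how these parameters are passed, the snippet below builds an Adam instance with the documented defaults spelled out and only the learning rate changed. The value 0.01 is an arbitrary choice for illustration, not a recommendation.

```python
import tensorflow as tf

# Spell out the documented defaults, changing only the learning rate.
# The value 0.01 is an arbitrary choice for illustration.
optimizer = tf.keras.optimizers.Adam(
    learning_rate=0.01,
    beta_1=0.9,
    beta_2=0.999,
    epsilon=1e-7,
    amsgrad=False,
)

# get_config() returns the optimizer's settings as a plain dictionary.
config = optimizer.get_config()
print(config['learning_rate'], config['beta_1'], config['beta_2'])
```

An object created this way can be passed to model.compile() in place of the string 'adam'.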

Using Adam Optimizer in TensorFlow

Let's now look at an example where we will create a simple neural network model using TensorFlow and compile it using the Adam optimizer.

1. Creating the Model

We will create a simple neural network with 2 Dense layers for demonstration.

Python

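import tensorflow as tf

def createModel(input_shape):
    # Input layer; input_shape excludes the batch dimension
    X_input = tf.keras.layers.Input(input_shape)
    # Hidden Dense layer with 10 units and ReLU activation
    X = tf.keras.layers.Dense(10, activation='relu')(X_input)
    # Output Dense layer with 2 units and softmax activation
    X_output = tf.keras.layers.Dense(2, activation='softmax')(X)
    model = tf.keras.Model(inputs=X_input, outputs=X_output)
    return model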

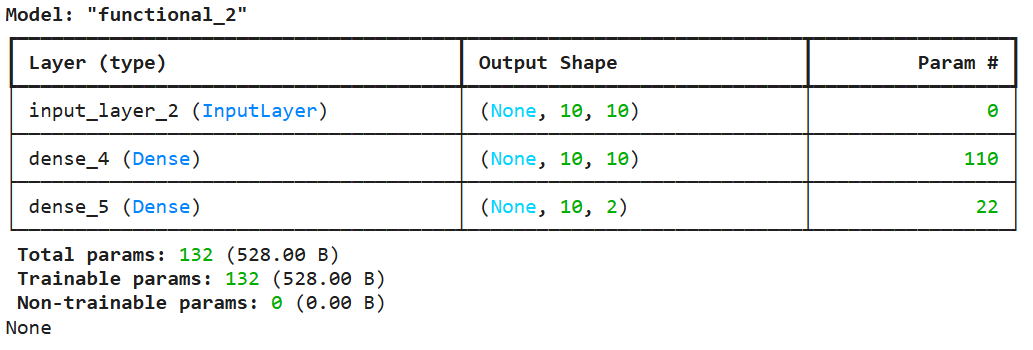

Now, we will create an instance of this model and print the summary to inspect the architecture.

Python

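model = createModel((10, 10))
# summary() prints the architecture itself and returns None,
# so it does not need to be wrapped in print()
model.summary()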

Output:

[Image: model summary output]

2. Checking Initial Weights

Before training the model, let's print out the initial weights for the layers.

Python

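print('Initial Layer Weights')
# Start at index 1: layer 0 is the Input layer, which has no weights
for i in range(1, len(model.layers)):
    print(f'Weight for Layer {i}:')
    # get_weights() returns [kernel, bias]; index 0 is the kernel
    print(model.layers[i].get_weights()[0])
    print()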

Output:

[Image: initial layer weights output]

3. Generating Dummy Data

For this example we will generate random data to train the model.

Python

tf.random.set_seed(5)
X = tf.random.normal((2, 10, 10))
Y = tf.random.normal((2, 10, 2))
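The random normal targets above match the model's output shape, which is enough to demonstrate the optimizer. Categorical cross-entropy, however, normally expects each target row to be a probability distribution over the classes, such as a one-hot vector. The following is a minimal sketch of such targets, assuming two classes; the names `class_ids` and `Y_onehot` are hypothetical and not part of the original example.

```python
import tensorflow as tf

tf.random.set_seed(5)

# Same input shape as above: 2 samples, each a 10x10 array.
X = tf.random.normal((2, 10, 10))

# Draw a random class index (0 or 1) for each of the 10 positions per sample,
# then one-hot encode so every target row is a valid distribution over 2 classes.
class_ids = tf.random.uniform((2, 10), minval=0, maxval=2, dtype=tf.int32)
Y_onehot = tf.one_hot(class_ids, depth=2)

print(Y_onehot.shape)  # (2, 10, 2)
```

Every row of `Y_onehot` sums to 1, so the loss value can be read as an ordinary cross-entropy.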

4. Compiling Model

Now we compile the model using the Adam optimizer, the categorical cross-entropy loss function and accuracy as the evaluation metric.

Python

model.compile(optimizer='adam',
              loss='categorical_crossentropy',
              metrics=['accuracy'])
print(model.optimizer.get_config())

Output:

{'name': 'adam', 'learning_rate': 0.0010000000474974513, 'weight_decay': None, 'clipnorm': None, 'global_clipnorm': None, 'clipvalue': None, 'use_ema': False, 'ema_momentum': 0.99, 'ema_overwrite_frequency': None, 'loss_scale_factor': None, 'gradient_accumulation_steps': None, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False}

The output shows the default configuration of the Adam Optimizer. Now, let's train the model using the dummy data we generated and check the weights of the model after training.

Python

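# Train for one epoch (the default) on the dummy data
model.fit(X, Y)

print('Final Layer Weights')
# Skip layer 0 (the Input layer), which has no weights
for i in range(1, len(model.layers)):
    print(f'Weight for Layer {i}:')
    print(model.layers[i].get_weights()[0])
    print()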

Output:

[Image: training log and final layer weights output]

The training log reports the loss and accuracy on the training data, and the printed weights show how the model's parameters changed as a result of training.
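The walkthrough used the string 'adam', which selects the default settings. As a hedged end-to-end sketch, the snippet below passes an Adam instance with a custom learning rate instead and checks numerically that one epoch of training changes the last layer's kernel. The learning rate of 0.01, the one-hot targets and the variable names are assumptions made for illustration.

```python
import numpy as np
import tensorflow as tf

tf.random.set_seed(5)

# Small model mirroring the one above: Dense(10, relu) -> Dense(2, softmax).
inputs = tf.keras.layers.Input((10, 10))
hidden = tf.keras.layers.Dense(10, activation='relu')(inputs)
outputs = tf.keras.layers.Dense(2, activation='softmax')(hidden)
model = tf.keras.Model(inputs=inputs, outputs=outputs)

# Pass an Adam instance instead of the string 'adam' to control its parameters.
# The learning rate 0.01 is an arbitrary value chosen for illustration.
model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=0.01),
              loss='categorical_crossentropy',
              metrics=['accuracy'])

# Dummy inputs with one-hot targets (an assumption; any valid targets work).
X = tf.random.normal((2, 10, 10))
Y = tf.one_hot(tf.random.uniform((2, 10), maxval=2, dtype=tf.int32), depth=2)

# Snapshot the kernel of the last Dense layer, train, then compare.
before = model.layers[-1].get_weights()[0].copy()
model.fit(X, Y, epochs=1, verbose=0)
after = model.layers[-1].get_weights()[0]

weights_changed = not np.allclose(before, after)
print('Last-layer kernel changed:', weights_changed)
```

Comparing snapshots with np.allclose is a compact alternative to reading printed weight matrices by eye.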
