Understanding TF-IDF (Term Frequency-Inverse Document Frequency)

Last Updated: 07 Feb, 2025

TF-IDF (Term Frequency-Inverse Document Frequency) is a statistical measure used in natural language processing and information retrieval to evaluate the importance of a word in a document relative to a collection of documents (corpus).

Unlike simple word frequency, TF-IDF balances common and rare words to highlight the most meaningful terms.

How TF-IDF Works

TF-IDF combines two components: Term Frequency (TF) and Inverse Document Frequency (IDF).

Term Frequency (TF): Measures how often a word appears in a document. A term that appears frequently in a document is likely relevant to that document's content. Formula:

$$TF(t, d) = \frac{\text{number of times term } t \text{ appears in document } d}{\text{total number of terms in document } d}$$

Limitations of TF Alone:

- TF does not account for the global importance of a term across the entire corpus.

- Common words like "the" or "and" may have high TF scores but are not meaningful in distinguishing documents.
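As a minimal sketch, the TF formula translates directly into Python. It assumes the document has already been lowercased and split into a list of tokens; the function name `term_frequency` is illustrative and does not come from any library.

```python
from collections import Counter


def term_frequency(term, tokens):
    """Return (occurrences of term) / (total tokens); 0.0 for an empty document."""
    if not tokens:
        return 0.0
    return Counter(tokens)[term] / len(tokens)


# Document 1 from the example later in this article, lowercased and tokenized
doc1 = ["the", "cat", "sat", "on", "the", "mat"]
print(term_frequency("cat", doc1))  # 1/6
print(term_frequency("the", doc1))  # 2/6: "the" scores higher than "cat"
```

The second call shows the limitation listed above: a function word such as "the" gets a higher TF than the content word "cat".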

Inverse Document Frequency (IDF): Reduces the weight of words that are common across many documents and increases the weight of rare words. A term that appears in fewer documents is more likely to be meaningful and specific. Formula:

$$IDF(t, D) = \log \frac{N}{\text{df}(t)}$$

where $N$ is the total number of documents in the corpus $D$ and $\text{df}(t)$ is the number of documents that contain the term $t$.

- The logarithm dampens the effect of very large ratios $N / \text{df}(t)$, so the IDF score grows slowly as a term becomes rarer instead of growing in direct proportion.

- It also helps balance the impact of terms that appear in extremely few or extremely many documents. A term that appears in every document gets $\log 1 = 0$.
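The IDF formula can be sketched the same way. This sketch assumes a base-10 logarithm (matching the worked example below) and represents the corpus as a list of token lists; `inverse_document_frequency` is a hypothetical name, and returning 0.0 for a term that appears in no document is an assumption, since the plain formula divides by zero there (libraries such as scikit-learn smooth the formula instead).

```python
import math


def inverse_document_frequency(term, corpus):
    """Return log10(N / df): N = number of documents, df = documents containing term."""
    n_docs = len(corpus)
    doc_freq = sum(1 for tokens in corpus if term in tokens)
    if doc_freq == 0:
        # Assumption for this sketch: unseen terms get 0.0 instead of a division error
        return 0.0
    return math.log10(n_docs / doc_freq)


# The three example documents, lowercased and tokenized (no lemmatization)
corpus = [
    ["the", "cat", "sat", "on", "the", "mat"],
    ["the", "dog", "played", "in", "the", "park"],
    ["cats", "and", "dogs", "are", "great", "pets"],
]
print(inverse_document_frequency("the", corpus))  # log10(3/2): in 2 of 3 documents
print(inverse_document_frequency("mat", corpus))  # log10(3/1): in 1 of 3 documents
```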

Limitations of IDF Alone:

- IDF does not consider how often a term appears within a specific document.

- A term might be rare across the corpus (high IDF) but irrelevant in a specific document (low TF).

Converting Text into Vectors with TF-IDF: Example

To better grasp how TF-IDF works, let’s walk through a detailed example. Imagine we have a corpus (a collection of documents) with three documents:

- Document 1: "The cat sat on the mat."

- Document 2: "The dog played in the park."

- Document 3: "Cats and dogs are great pets."

Our goal is to calculate the TF-IDF score for specific terms in these documents. Let’s focus on the word "cat" and see how TF-IDF evaluates its importance.

Step 1: Calculate Term Frequency (TF)

For Document 1:

- The word "cat" appears 1 time.

- The total number of terms in Document 1 is 6 ("the", "cat", "sat", "on", "the", "mat").

- So, TF(cat,Document 1) = 1/6

For Document 2:

- The word "cat" does not appear.

- So, TF(cat,Document 2)=0.

For Document 3:

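- The word "cat" appears 1 time, as "cats". This assumes the text has been lemmatized (or stemmed) so that "cats" is counted as "cat"; without that preprocessing step, the count would be 0.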

- The total number of terms in Document 3 is 6 ("cats", "and", "dogs", "are", "great", "pets").

- So, TF(cat,Document 3)=1/6

- In Document 1 and Document 3, the word "cat" has the same TF score. This means it appears with the same relative frequency in both documents.

- In Document 2, the TF score is 0 because the word "cat" does not appear.
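The Step 1 counts can be reproduced with a short script. The `LEMMAS` dictionary is a hypothetical stand-in for a real lemmatizer and only covers the plural forms in this corpus; it is what lets "cats" count as "cat". `Fraction` is used only to keep the ratios exact.

```python
import re
from fractions import Fraction

documents = [
    "The cat sat on the mat.",
    "The dog played in the park.",
    "Cats and dogs are great pets.",
]

# Hypothetical stand-in for a real lemmatizer: maps plurals to their singular form
LEMMAS = {"cats": "cat", "dogs": "dog", "pets": "pet"}


def tokenize(text):
    """Lowercase, keep alphabetic runs, and map each word to its lemma."""
    words = re.findall(r"[a-z]+", text.lower())
    return [LEMMAS.get(w, w) for w in words]


tokenized = [tokenize(doc) for doc in documents]

for i, tokens in enumerate(tokenized, start=1):
    tf_cat = Fraction(tokens.count("cat"), len(tokens))
    print(f"TF(cat, Document {i}) = {tf_cat}")
```

Running it prints 1/6, 0 and 1/6 for Documents 1 to 3, matching the values above.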

Step 2: Calculate Inverse Document Frequency (IDF)

- Total number of documents in the corpus (D): 3

- Number of documents containing the term "cat": 2 (Document 1 and Document 3).

So, $IDF(\text{cat}, D) = \log_{10} \frac{3}{2} \approx 0.176$ (using a base-10 logarithm).

The IDF score for "cat" is relatively low. This indicates that the word "cat" is not very rare in the corpus: it appears in 2 out of 3 documents. If a term appeared in only 1 document, its IDF score would be higher ($\log_{10} \frac{3}{1} \approx 0.477$), indicating greater uniqueness.
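Step 2 in code, assuming the same preprocessing as Step 1 (the lowercased, lemmatized token lists are written out directly so the snippet runs on its own) and a base-10 logarithm:

```python
import math

# Lowercased, lemmatized tokens of Documents 1-3 (assumed preprocessing)
corpus = [
    ["the", "cat", "sat", "on", "the", "mat"],
    ["the", "dog", "played", "in", "the", "park"],
    ["cat", "and", "dog", "are", "great", "pet"],
]

n_docs = len(corpus)                               # D = 3
df_cat = sum(1 for doc in corpus if "cat" in doc)  # Documents 1 and 3
idf_cat = math.log10(n_docs / df_cat)

df_mat = sum(1 for doc in corpus if "mat" in doc)  # Document 1 only
idf_mat = math.log10(n_docs / df_mat)

print(f"D = {n_docs}, df(cat) = {df_cat}, IDF(cat) = {idf_cat:.3f}")
print(f"df(mat) = {df_mat}, IDF(mat) = {idf_mat:.3f}")
```

This prints IDF(cat) = 0.176 and IDF(mat) = 0.477: the term found in only one document gets the larger weight.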

Step 3: Calculate TF-IDF

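The TF-IDF score multiplies the two components:

$$TF\text{-}IDF(t, d, D) = TF(t, d) \times IDF(t, D)$$

For "cat", $TF\text{-}IDF = \frac{1}{6} \times 0.176 \approx 0.029$ in Document 1 and Document 3, and $0 \times 0.176 = 0$ in Document 2. The score reflects both the frequency of the term in the document (TF) and its rarity across the corpus (IDF).

A higher TF-IDF score means the term is more important in that specific document.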
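Putting the two steps together gives the Step 3 scores. As before, this sketch assumes lemmatized token lists and a base-10 logarithm; the helper names `tf` and `idf` are illustrative.

```python
import math

# Lowercased, lemmatized tokens of Documents 1-3 (assumed preprocessing)
corpus = [
    ["the", "cat", "sat", "on", "the", "mat"],
    ["the", "dog", "played", "in", "the", "park"],
    ["cat", "and", "dog", "are", "great", "pet"],
]


def tf(term, doc):
    """Share of the document's tokens that are equal to term."""
    return doc.count(term) / len(doc)


def idf(term, docs):
    """Base-10 log of (number of documents / documents containing term)."""
    df = sum(1 for d in docs if term in d)
    return math.log10(len(docs) / df)


idf_cat = idf("cat", corpus)
scores = [tf("cat", doc) * idf_cat for doc in corpus]
for i, score in enumerate(scores, start=1):
    print(f"TF-IDF(cat, Document {i}) = {score:.3f}")
```

It prints 0.029, 0.000 and 0.029 for Documents 1 to 3.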

Why is TF-IDF Useful in This Example?

1. Identifying Important Terms: TF-IDF helps us understand that "cat" is somewhat important in Document 1 and Document 3 but irrelevant in Document 2.

If we were building a search engine, this score would help rank Document 1 and Document 3 higher for a query like "cat".

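2. Filtering Common Words: In a realistic corpus, words like "the" or "and" appear in almost every document, so they have high TF scores but IDF scores close to 0. Their TF-IDF scores are therefore close to 0, indicating they are not meaningful. In this tiny three-document corpus the effect is weaker: "the" appears in 2 of the 3 documents, so its IDF equals that of "cat".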

3. Highlighting Unique Terms: "mat" appears only in Document 1, so it has a higher IDF score ($\log_{10} 3 \approx 0.477$). This gives it a TF-IDF score of about $\frac{1}{6} \times 0.477 \approx 0.080$ in that document, higher than the 0.029 of "cat".
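Scoring every word of Document 1 with the same formulas shows these points in action. The sketch assumes the lemmatized corpus and base-10 logarithm used in the worked example; `tf_idf` is an illustrative helper name.

```python
import math

# Lowercased, lemmatized tokens of Documents 1-3 (assumed preprocessing)
corpus = [
    ["the", "cat", "sat", "on", "the", "mat"],
    ["the", "dog", "played", "in", "the", "park"],
    ["cat", "and", "dog", "are", "great", "pet"],
]


def tf_idf(term, doc, docs):
    """TF (share of tokens) times base-10 IDF."""
    tf = doc.count(term) / len(doc)
    df = sum(1 for d in docs if term in d)
    return tf * math.log10(len(docs) / df)


doc1 = corpus[0]
scores = {term: tf_idf(term, doc1, corpus) for term in set(doc1)}

# Highest score first; ties broken alphabetically
for term, score in sorted(scores.items(), key=lambda kv: (-kv[1], kv[0])):
    print(f"{term:>4}: {score:.3f}")
```

"mat", "on" and "sat" (each found only in Document 1) tie for the top score of about 0.080. "the" scores about 0.059, above "cat" at about 0.029, because it occurs twice in Document 1 and has the same document frequency as "cat" in this small corpus. In a corpus where "the" appears in every document, its IDF, and therefore its TF-IDF, would be 0.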

Implementing TF-IDF in Sklearn with Python

In Python, tf-idf values can be computed with the TfidfVectorizer class from scikit-learn's sklearn.feature_extraction.text module.

Syntax:

sklearn.feature_extraction.text.TfidfVectorizer(input)

Parameters:

- input: Specifies how the raw documents passed to fit() or fit_transform() are interpreted: 'filename', 'file', or 'content' (the default, meaning the strings themselves are the documents).

Attributes:

- vocabulary_: A dictionary mapping each term to its feature (column) index.

- idf_: The inverse document frequency vector learned from the corpus, with one IDF weight per feature.

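Methods:

- fit_transform(): Learns the vocabulary and IDF weights from the corpus and returns a sparse document-term matrix of tf-idf values, with one row per document and one column per term.

- get_feature_names_out(): Returns the feature names (terms), ordered by feature index. It replaces the older get_feature_names() method, which was removed in scikit-learn 1.2.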

Step-by-step Approach:

```python
# import required module
from sklearn.feature_extraction.text import TfidfVectorizer
```

- Collect the strings of documents d0, d1 and d2 into a single list that serves as the corpus.

```python
# assign documents
d0 = 'Geeks for geeks'
d1 = 'Geeks'
d2 = 'r2j'

# merge documents into a single corpus
string = [d0, d1, d2]
```

- Get tf-idf values from fit_transform() method.

```python
# create object
tfidf = TfidfVectorizer()

# get tf-idf values
result = tfidf.fit_transform(string)
```

- Display idf values of the words present in the corpus.

```python
# get idf values
print('\nidf values:')
# get_feature_names_out() replaces get_feature_names(), removed in scikit-learn 1.2
for ele1, ele2 in zip(tfidf.get_feature_names_out(), tfidf.idf_):
    print(ele1, ':', ele2)
```

Output:

- Display tf-idf values along with indexing.

Python

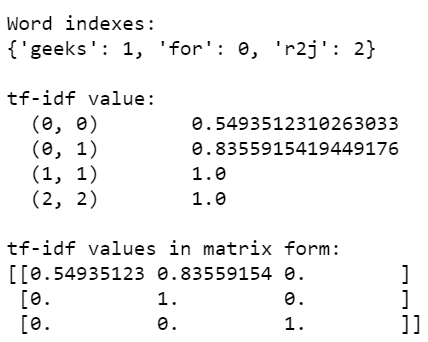

# get indexing

print('\nWord indexes:')

print(tfidf.vocabulary_)

# display tf-idf values

print('\ntf-idf value:')

print(result)

# in matrix form

print('\ntf-idf values in matrix form:')

print(result.toarray())

The result variable is a sparse matrix that stores the tf-idf value of each word in each document where it occurs. Its contents can be summarized in the following table:

| Document | Word | Document Index | Word Index | tf-idf value |
|---|---|---|---|---|
| d0 | for | 0 | 0 | 0.5494 |
| d0 | geeks | 0 | 1 | 0.8356 |
| d1 | geeks | 1 | 1 | 1.0000 |
| d2 | r2j | 2 | 2 | 1.0000 |
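These values differ from the hand calculation earlier because TfidfVectorizer uses a different weighting by default. Per the scikit-learn documentation, the default settings lowercase the text, keep tokens of two or more word characters, multiply raw counts by a smoothed IDF, idf(t) = ln((1 + n) / (1 + df(t))) + 1, and then scale each document's row to unit L2 norm. The pure-Python sketch below re-implements only those defaults (it does not call scikit-learn) to reproduce the table.

```python
import math
import re
from collections import Counter

docs = ["Geeks for geeks", "Geeks", "r2j"]

# Default preprocessing: lowercase, tokens of two or more word characters
tokenized = [re.findall(r"(?u)\b\w\w+\b", d.lower()) for d in docs]
vocab = sorted({t for doc in tokenized for t in doc})  # ['for', 'geeks', 'r2j']

# Smoothed idf: ln((1 + n) / (1 + df)) + 1
n = len(docs)
idf = {t: math.log((1 + n) / (1 + sum(t in doc for doc in tokenized))) + 1 for t in vocab}

table = {}
for i, doc in enumerate(tokenized):
    counts = Counter(doc)
    raw = {t: counts[t] * idf[t] for t in counts}       # raw count x idf
    norm = math.sqrt(sum(v * v for v in raw.values()))  # L2 norm of the row
    for t in sorted(raw):
        table[(f"d{i}", t)] = raw[t] / norm

for (doc_name, term), value in table.items():
    print(f"{doc_name}  {term:<5}  {value:.4f}")
```

It prints 0.5494 and 0.8356 for d0 and 1.0000 for d1 and d2, which agree with the table above up to rounding. A document with a single distinct word always scores 1.0, because L2 normalization divides that word's weight by itself.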

Below are some examples which depict how to compute tf-idf values of words from a corpus:

Example 1: Below is the complete program based on the above approach:

```python
# import required module
from sklearn.feature_extraction.text import TfidfVectorizer

# assign documents
d0 = 'Geeks for geeks'
d1 = 'Geeks'
d2 = 'r2j'

# merge documents into a single corpus
string = [d0, d1, d2]

# create object
tfidf = TfidfVectorizer()

# get tf-idf values
result = tfidf.fit_transform(string)

# get idf values
# (get_feature_names_out() replaces get_feature_names(), removed in scikit-learn 1.2)
print('\nidf values:')
for ele1, ele2 in zip(tfidf.get_feature_names_out(), tfidf.idf_):
    print(ele1, ':', ele2)

# get indexing
print('\nWord indexes:')
print(tfidf.vocabulary_)

# display tf-idf values
print('\ntf-idf value:')
print(result)

# in matrix form
print('\ntf-idf values in matrix form:')
print(result.toarray())
```


Example 2: Here, tf-idf values are computed from a corpus in which every document consists of a single, unique word.

```python
# import required module
from sklearn.feature_extraction.text import TfidfVectorizer

# assign documents
d0 = 'geek1'
d1 = 'geek2'
d2 = 'geek3'
d3 = 'geek4'

# merge documents into a single corpus
string = [d0, d1, d2, d3]

# create object
tfidf = TfidfVectorizer()

# get tf-idf values
result = tfidf.fit_transform(string)

# get indexing
print('\nWord indexes:')
print(tfidf.vocabulary_)

# display tf-idf values
print('\ntf-idf values:')
print(result)
```


Example 3: In this program, tf-idf values are computed from a corpus of identical documents.

```python
# import required module
from sklearn.feature_extraction.text import TfidfVectorizer

# assign documents
d0 = 'Geeks for geeks!'
d1 = 'Geeks for geeks!'

# merge documents into a single corpus
string = [d0, d1]

# create object
tfidf = TfidfVectorizer()

# get tf-idf values
result = tfidf.fit_transform(string)

# get indexing
print('\nWord indexes:')
print(tfidf.vocabulary_)

# display tf-idf values
print('\ntf-idf values:')
print(result)
```


Example 4: Below is a program that calculates the tf-idf value of a single word, "geeks", repeated multiple times across multiple documents.

```python
# import required module
from sklearn.feature_extraction.text import TfidfVectorizer

# assign corpus: five identical documents
string = ['Geeks geeks'] * 5

# create object
tfidf = TfidfVectorizer()

# get tf-idf values
result = tfidf.fit_transform(string)

# get indexing
print('\nWord indexes:')
print(tfidf.vocabulary_)

# display tf-idf values
print('\ntf-idf values:')
print(result)
```

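The values printed by Examples 2 to 4 follow from TfidfVectorizer's default weighting, which (per the scikit-learn documentation) lowercases text, keeps tokens of two or more word characters, multiplies raw counts by idf(t) = ln((1 + n) / (1 + df(t))) + 1 and scales each row to unit L2 norm. The function below is a hypothetical pure-Python re-implementation of those defaults, not scikit-learn itself; it returns the IDF of each word and the rounded tf-idf values of each document.

```python
import math
import re
from collections import Counter


def default_tfidf(docs):
    """Sketch of TfidfVectorizer's default weighting (not the library itself)."""
    tokenized = [re.findall(r"(?u)\b\w\w+\b", d.lower()) for d in docs]
    n = len(docs)
    vocab = sorted({t for doc in tokenized for t in doc})
    idf = {t: math.log((1 + n) / (1 + sum(t in doc for doc in tokenized))) + 1
           for t in vocab}
    rows = []
    for doc in tokenized:
        counts = Counter(doc)
        raw = {t: counts[t] * idf[t] for t in counts}
        # L2 norm of the row; "or 1.0" guards against documents with no tokens
        norm = math.sqrt(sum(v * v for v in raw.values())) or 1.0
        rows.append({t: round(v / norm, 4) for t, v in raw.items()})
    return idf, rows


print(default_tfidf(["geek1", "geek2", "geek3", "geek4"]))  # Example 2
print(default_tfidf(["Geeks for geeks!", "Geeks for geeks!"]))  # Example 3
print(default_tfidf(["Geeks geeks"] * 5))  # Example 4
```

In Example 2, each word occurs in one of four documents, so every IDF is ln(5/2) + 1 ≈ 1.916 and each document's only word scores 1.0. In Example 3, both words occur in both documents, so both IDFs equal 1 and each document scores "for" ≈ 0.4472 and "geeks" ≈ 0.8944 (counts 1 and 2, divided by √5). In Example 4, "geeks" occurs in all five documents, so its IDF is 1 and its tf-idf is 1.0 everywhere.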
