Parallel Programming in Python: Speeding up your analysis


Slides for the workshop on Parallel Programming in Python that I gave on November 10, 2015, at PyData NYC.


Don't oversaturate your resources

Domino Data Lab, November 10, 2015

    itemIDs = [1, 2, … , n]
    parallel-for-each(i = itemIDs) {
        item = fetchData(i)
        result = computeSomething(item)
        saveResult(result)
    }

![Don't oversaturate your resources](https://image.slidesharecdn.com/parallelprogrammingpydata-151107234945-lva1-app6891/85/Parallel-Programming-in-Python-Speeding-up-your-analysis-8-320.jpg)
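One way to read this slide: every parallel task runs its own fetchData, so launching one task per item can flood a shared database, network link or disk with simultaneous requests. The following is a minimal Python sketch of the slide's loop under that reading, not the workshop's own code. The names `fetch_data`, `compute_something`, `save_result`, `process_one` and `run` are hypothetical, and the fetch is simulated with `time.sleep`. It uses `concurrent.futures.ThreadPoolExecutor`, whose `max_workers` argument caps how many fetches are in flight at once, and it records the peak so the cap can be checked.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-ins for the slide's fetchData, computeSomething and saveResult.
_lock = threading.Lock()
_active_fetches = 0   # fetches in flight right now
peak_fetches = 0      # highest number of simultaneous fetches observed
saved = {}            # stands in for an external results store


def fetch_data(item_id):
    """Pretend to fetch one item from a shared source such as a database."""
    global _active_fetches, peak_fetches
    with _lock:
        _active_fetches += 1
        peak_fetches = max(peak_fetches, _active_fetches)
    time.sleep(0.01)  # simulate I/O latency
    with _lock:
        _active_fetches -= 1
    return {"id": item_id, "value": item_id * 10}


def compute_something(item):
    return item["value"] + 1


def save_result(item_id, result):
    with _lock:
        saved[item_id] = result


def process_one(item_id):
    # One parallel task: fetch, compute and save a single item, as on the slide.
    item = fetch_data(item_id)
    save_result(item_id, compute_something(item))


def run(item_ids, max_workers=4):
    # max_workers caps how many tasks run at once, so the shared data source
    # sees at most max_workers concurrent fetches instead of one per item.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        list(pool.map(process_one, item_ids))  # list() re-raises any task exception


run(range(1, 21), max_workers=4)
```

Threads fit this shape because the tasks mostly wait on I/O, and because threads share memory, the updates to `saved` are visible to the caller. CPU-heavy work would call for processes instead, which do not share memory this way.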

Parallelize tasks to match your resources

    items = fetchData([1, 2, … , n])
    results = parallel-for-each(item = items) {
        computeSomething(item)
    }
    saveResult(results)

![Parallelize tasks to match your resources](https://image.slidesharecdn.com/parallelprogrammingpydata-151107234945-lva1-app6891/85/Parallel-Programming-in-Python-Speeding-up-your-analysis-9-320.jpg)
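A minimal sketch of this pattern with the standard-library `multiprocessing` module, assuming a POSIX system where the `fork` start method is available (this lets the example run as a plain script). The data is fetched and saved once in the parent, and only the CPU-bound step runs in a pool sized to `os.cpu_count()`. The helper functions and the sum-of-squares workload are hypothetical placeholders for the slide's fetchData, computeSomething and saveResult.

```python
import multiprocessing as mp
import os


def fetch_data(item_ids):
    """Hypothetical bulk fetch: one request for all items instead of one per item."""
    return [{"id": i, "value": i * 10} for i in item_ids]


def compute_something(item):
    """Hypothetical CPU-bound work on one item; runs inside a worker process."""
    return sum(k * k for k in range(item["value"]))


def save_results(results, store):
    """Hypothetical bulk save: one write for all results."""
    store.extend(results)


def run(item_ids, processes=None):
    items = fetch_data(item_ids)  # I/O once, in the parent process
    # Match the pool to the hardware: one worker per CPU core by default.
    processes = processes or os.cpu_count() or 1
    ctx = mp.get_context("fork")  # assumption: a POSIX system with fork available
    with ctx.Pool(processes=processes) as pool:
        results = pool.map(compute_something, items)  # order matches items
    store = []
    save_results(results, store)  # I/O once, in the parent process
    return store
```

For example, `run([1, 2, 3], processes=2)` returns `[285, 2470, 8555]`. On Windows, where `fork` is unavailable, the usual approach is to create the pool with `mp.Pool` and call it from under an `if __name__ == "__main__":` guard.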

Avoid modifying global state

    itemIDs = [0, 0, 0, 0]
    parallel-for-each(i = 0:3) {
        itemIDs[i] = i
    }

1. itemIDs = [0, 0, 0, 0]: the array is initialized in process 1.
2. [0, 0, 0, 0] [0, 0, 0, 0] [0, 0, 0, 0] [0, 0, 0, 0]: the array is copied to each sub-process.
3. [0, 0, 0, 0] [0, 0, 0, 3] [0, 0, 2, 0] [0, 1, 0, 0]: each sub-process modifies only its own copy.
4. [0, 0, 0, 0]: when all parallel tasks finish, the array in the original process remains unchanged.

![Avoid modifying global state](https://image.slidesharecdn.com/parallelprogrammingpydata-151107234945-lva1-app6891/85/Parallel-Programming-in-Python-Speeding-up-your-analysis-10-320.jpg)
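The behavior on this slide can be reproduced with the standard-library `multiprocessing` module. This sketch assumes a POSIX system with the `fork` start method: four child processes each set one element of the global `item_ids`, send their copy back through a `Queue`, and the parent's list is inspected afterwards. The last step shows the usual alternative, returning values from `Pool.map` and assembling the result in the parent. The function names are illustrative, not from the workshop.

```python
import multiprocessing as mp

item_ids = [0, 0, 0, 0]  # initialized in the parent ("process 1")


def modify_copy(i, queue):
    # Runs in a child process, which writes to its own copy of item_ids.
    item_ids[i] = i
    queue.put(list(item_ids))  # report the child's copy back to the parent


def compute(i):
    # Side-effect-free alternative: return the value instead of mutating globals.
    return i


def demo():
    ctx = mp.get_context("fork")  # assumption: POSIX fork start method
    queue = ctx.Queue()
    procs = [ctx.Process(target=modify_copy, args=(i, queue)) for i in range(4)]
    for p in procs:
        p.start()
    child_copies = sorted(queue.get() for _ in procs)  # drain the queue before join()
    for p in procs:
        p.join()
    # The parent's list is untouched: each child only changed its own copy.
    parent_after = list(item_ids)
    # Collect return values instead; Pool.map keeps them in input order.
    with ctx.Pool(processes=4) as pool:
        results = pool.map(compute, range(4))
    return child_copies, parent_after, results


child_copies, parent_after, results = demo()
print(parent_after)  # [0, 0, 0, 0]
print(results)       # [0, 1, 2, 3]
```

Sorted, the four child copies come back as [0, 0, 0, 0], [0, 0, 0, 3], [0, 0, 2, 0] and [0, 1, 0, 0], matching the slide's diagram, while the parent's list never changes. Building the final list from returned values is the same idea as the `results = parallel-for-each(...)` form on the previous slide.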


Tom Peters, Software Engineer, Ufora at MLconf ATL 2016

Tom Peters, Software Engineer, Ufora at MLconf ATL 2016MLconf Say What You Mean: Scaling Machine Learning Algorithms Directly from Source Code: Scaling machine learning applications is hard. Even with powerful systems like Spark, Tensor Flow, and Theano, the code you write has more to do with getting these systems to work at all than it does with your algorithm itself. But it doesn’t have to be this way!

In this talk, I’ll discuss an alternate approach we’ve taken with Pyfora, an open-source platform for scalable machine learning and data science in Python. I’ll show how it produces efficient, large scale machine learning implementations directly from the source code of single-threaded Python programs. Instead of programming to a complex API, you can simply say what you mean and move on. I’ll show some classes of problem where this approach truly shines, discuss some practical realities of developing the system, and I’ll talk about some future directions for the project.

Tensorflow internal

Tensorflow internalHyunghun Cho TensorFlow is a dataflow-like model that runs on a wide variety of hardware platforms. It uses tensors and a directed graph to describe computations. Operations are abstract computations implemented by kernels that run on different devices like CPUs and GPUs. The core C++ implementation defines the framework and kernel functions, while the Python implementation focuses on operations, training, and providing APIs. Additional libraries like Keras, TensorFlow Slim, Skflow, PrettyTensor, and TFLearn build on TensorFlow to provide higher-level abstractions.

Ad

Recently uploaded (20)

#StandardsGoals for 2025: Standards & certification roundup - Tech Forum 2025

#StandardsGoals for 2025: Standards & certification roundup - Tech Forum 2025BookNet Canada Book industry standards are evolving rapidly. In the first part of this session, we’ll share an overview of key developments from 2024 and the early months of 2025. Then, BookNet’s resident standards expert, Tom Richardson, and CEO, Lauren Stewart, have a forward-looking conversation about what’s next.

Link to recording, transcript, and accompanying resource: https://bnctechforum.ca/sessions/standardsgoals-for-2025-standards-certification-roundup/

Presented by BookNet Canada on May 6, 2025 with support from the Department of Canadian Heritage.

UiPath Agentic Automation: Community Developer Opportunities

UiPath Agentic Automation: Community Developer OpportunitiesDianaGray10 Please join our UiPath Agentic: Community Developer session where we will review some of the opportunities that will be available this year for developers wanting to learn more about Agentic Automation.

tecnologias de las primeras civilizaciones.pdf

tecnologias de las primeras civilizaciones.pdffjgm517 descaripcion detallada del avance de las tecnologias en mesopotamia, egipto, roma y grecia.

Special Meetup Edition - TDX Bengaluru Meetup #52.pptx

Special Meetup Edition - TDX Bengaluru Meetup #52.pptxshyamraj55 We’re bringing the TDX energy to our community with 2 power-packed sessions:

🛠️ Workshop: MuleSoft for Agentforce

Explore the new version of our hands-on workshop featuring the latest Topic Center and API Catalog updates.

📄 Talk: Power Up Document Processing

Dive into smart automation with MuleSoft IDP, NLP, and Einstein AI for intelligent document workflows.

HCL Nomad Web – Best Practices und Verwaltung von Multiuser-Umgebungen

HCL Nomad Web – Best Practices und Verwaltung von Multiuser-Umgebungenpanagenda Webinar Recording: https://www.panagenda.com/webinars/hcl-nomad-web-best-practices-und-verwaltung-von-multiuser-umgebungen/

HCL Nomad Web wird als die nächste Generation des HCL Notes-Clients gefeiert und bietet zahlreiche Vorteile, wie die Beseitigung des Bedarfs an Paketierung, Verteilung und Installation. Nomad Web-Client-Updates werden “automatisch” im Hintergrund installiert, was den administrativen Aufwand im Vergleich zu traditionellen HCL Notes-Clients erheblich reduziert. Allerdings stellt die Fehlerbehebung in Nomad Web im Vergleich zum Notes-Client einzigartige Herausforderungen dar.

Begleiten Sie Christoph und Marc, während sie demonstrieren, wie der Fehlerbehebungsprozess in HCL Nomad Web vereinfacht werden kann, um eine reibungslose und effiziente Benutzererfahrung zu gewährleisten.

In diesem Webinar werden wir effektive Strategien zur Diagnose und Lösung häufiger Probleme in HCL Nomad Web untersuchen, einschließlich

- Zugriff auf die Konsole

- Auffinden und Interpretieren von Protokolldateien

- Zugriff auf den Datenordner im Cache des Browsers (unter Verwendung von OPFS)

- Verständnis der Unterschiede zwischen Einzel- und Mehrbenutzerszenarien

- Nutzung der Client Clocking-Funktion

Designing Low-Latency Systems with Rust and ScyllaDB: An Architectural Deep Dive

Designing Low-Latency Systems with Rust and ScyllaDB: An Architectural Deep DiveScyllaDB Want to learn practical tips for designing systems that can scale efficiently without compromising speed?

Join us for a workshop where we’ll address these challenges head-on and explore how to architect low-latency systems using Rust. During this free interactive workshop oriented for developers, engineers, and architects, we’ll cover how Rust’s unique language features and the Tokio async runtime enable high-performance application development.

As you explore key principles of designing low-latency systems with Rust, you will learn how to:

- Create and compile a real-world app with Rust

- Connect the application to ScyllaDB (NoSQL data store)

- Negotiate tradeoffs related to data modeling and querying

- Manage and monitor the database for consistently low latencies

Big Data Analytics Quick Research Guide by Arthur Morgan

By Arthur Morgan. This is a Quick Research Guide (QRG).

QRGs include the following:

- A brief, high-level overview of the QRG topic.

- A milestone timeline for the QRG topic.

- Links to various free online resource materials to provide a deeper dive into the QRG topic.

- Conclusion and a recommendation for at least two books available in the SJPL system on the QRG topic.

QRGs planned for the series:

- Artificial Intelligence QRG

- Quantum Computing QRG

- Big Data Analytics QRG

- Spacecraft Guidance, Navigation & Control QRG (coming 2026)

- UK Home Computing & The Birth of ARM QRG (coming 2027)

Any questions or comments?

- Please contact Arthur Morgan at [email protected].

100% human made.

HCL Nomad Web – Best Practices and Managing Multiuser Environments

By panagenda. Webinar recording: https://www.panagenda.com/webinars/hcl-nomad-web-best-practices-and-managing-multiuser-environments/

HCL Nomad Web is heralded as the next generation of the HCL Notes client, offering numerous advantages such as eliminating the need for packaging, distribution, and installation. Nomad Web client upgrades will be installed “automatically” in the background. This significantly reduces the administrative footprint compared to traditional HCL Notes clients. However, troubleshooting issues in Nomad Web presents unique challenges compared to the Notes client.

Join Christoph and Marc as they demonstrate how to simplify the troubleshooting process in HCL Nomad Web, ensuring a smoother and more efficient user experience.

In this webinar, we will explore effective strategies for diagnosing and resolving common problems in HCL Nomad Web, including:

- Accessing the console

- Locating and interpreting log files

- Accessing the data folder within the browser’s cache (using OPFS)

- Understanding the differences between single- and multi-user scenarios

- Utilizing Client Clocking

Increasing Retail Store Efficiency How can Planograms Save Time and Money.pptx

By Anoop Ashok. In today's fast-paced retail environment, efficiency is key. Every minute counts, and every penny matters. One tool that can significantly boost your store's efficiency is a well-executed planogram. These visual merchandising blueprints not only enhance store layouts but also save time and money in the process.

Heap, Types of Heap, Insertion and Deletion

By Jaydeep Kale. This PDF explains what a heap is, its types, insertion and deletion in a heap, and heap sort.

Are Cloud PBX Providers in India Reliable for Small Businesses (1).pdf

By Telecoms Supermarket. Discover how reliable cloud PBX providers in India are for small businesses. Explore benefits, top vendors, and integration with modern tools.

TrsLabs - Leverage the Power of UPI Payments

By Trs Labs. Revolutionize your fintech growth with UPI payments.

"Riding the UPI strategy" refers to leveraging the Unified Payments Interface (UPI) to drive digital payments in India and beyond. This involves understanding UPI's features, benefits, and potential, and developing strategies to maximize its usage and impact. Essentially, it's about strategically utilizing UPI to promote digital payments, financial inclusion, and economic growth.

Into The Box Conference Keynote Day 1 (ITB2025)

By Ortus Solutions, Corp. This is the keynote of the Into the Box conference, highlighting the release of the BoxLang JVM language, its key enhancements, and its vision for the future.

Transcript: #StandardsGoals for 2025: Standards & certification roundup - Tec...

By BookNet Canada. Book industry standards are evolving rapidly. In the first part of this session, we’ll share an overview of key developments from 2024 and the early months of 2025. Then, BookNet’s resident standards expert, Tom Richardson, and CEO, Lauren Stewart, have a forward-looking conversation about what’s next.

Link to recording, presentation slides, and accompanying resource: https://bnctechforum.ca/sessions/standardsgoals-for-2025-standards-certification-roundup/

Presented by BookNet Canada on May 6, 2025 with support from the Department of Canadian Heritage.

Hybridize Functions: A Tool for Automatically Refactoring Imperative Deep Lea...

By Raffi Khatchadourian. Efficiency is essential to support responsiveness w.r.t. ever-growing datasets, especially for Deep Learning (DL) systems. DL frameworks have traditionally embraced deferred execution-style DL code, supporting symbolic, graph-based Deep Neural Network (DNN) computation. While scalable, such development is error-prone, non-intuitive, and difficult to debug. Consequently, more natural, imperative DL frameworks encouraging eager execution have emerged, but at the expense of run-time performance. Though hybrid approaches aim for the “best of both worlds,” using them effectively requires subtle considerations to make code amenable to safe, accurate, and efficient graph execution, avoiding performance bottlenecks and semantically inequivalent results. We discuss the engineering aspects of a refactoring tool that automatically determines when it is safe and potentially advantageous to migrate imperative DL code to graph execution and vice versa.

Connect and Protect: Networks and Network Security

By VICTOR MAESTRE RAMIREZ.

Technology Trends in 2025: AI and Big Data Analytics

By InData Labs. At InData Labs, we have been keeping an ear to the ground, looking out for AI-enabled digital transformation trends coming our way in 2025. Our report will provide a look into the technology landscape of the future, including:

- Artificial Intelligence Market Overview

- Strategies for AI Adoption in 2025

- Anticipated drivers of AI adoption and transformative technologies

- Benefits of AI and Big data for your business

- Tips on how to prepare your business for innovation

- AI and data privacy: Strategies for securing data privacy in AI models, etc.

Download your free copy now and implement the key findings to improve your business.


Parallel Programming in Python: Speeding up your analysis

- 1. Domino Data Lab November 10, 2015. Faster data science without a cluster: Parallel programming in Python. Manojit Nandi, [email protected], @mnandi92

- 2. Who am I?
  - Data Scientist at STEALTHBits Technologies
  - Data Science Evangelist at Domino Data Lab
  - BS in Decision Science

- 3. Agenda and goals
  - Motivation
  - Conceptual intro to parallelism: general principles and pitfalls
  - Machine learning applications
  - Demos
  - Goal: leave you with principles, and practical, concrete tools, that will help you run your code much faster

- 4. Motivation
  - Lots of “medium data” problems that can fit in memory on one machine
  - Lots of naturally parallel problems
  - Easy access to large machines
  - Clusters are hard
  - Not everything fits map-reduce
  - “CPUs with multiple cores have become the standard in the recent development of modern computer architectures and we can not only find them in supercomputer facilities but also in our desktop machines at home, and our laptops; even Apple's iPhone 5S got a 1.3 GHz Dual-core processor in 2013.” (Sebastian Raschka)

- 5. Parallel programming 101
  - Think about independent tasks (hint: “for” loops are a good place to start!)
  - Tasks should be CPU-bound
  - Warnings and pitfalls:
    - Not a substitute for good code
    - Overhead
    - Shared resource contention
    - Thrashing
  - Source: Blaise Barney, Lawrence Livermore National Laboratory

- 6. Can parallelize at different “levels”
  - Math ops: run against underlying libraries that parallelize low-level operations, e.g., OpenBLAS, ATLAS
  - Algorithms: write your code (or use a package) to parallelize functions or steps within your analysis
  - Experiments: run different analyses at once
  - This talk focuses on algorithms, with some brief comments on experiments

- 7. Parallelize tasks to match your resources
  - Computing something (CPU)
  - Reading from disk/database
  - Writing to disk/database
  - Network I/O (e.g., web scraping)
  - Saturating a resource will create a bottleneck

- 8. Don't oversaturate your resources: `itemIDs = [1, 2, … , n]; parallel-for-each(i = itemIDs) { item = fetchData(i); result = computeSomething(item); saveResult(result) }`. Here every parallel task fetches and saves on its own, so all of them compete for the disk/database at the same time.

- 9. Parallelize tasks to match your resources: `items = fetchData([1, 2, … , n]); results = parallel-for-each(item = items) { computeSomething(item) }; saveResult(results)`. Data is fetched once and saved once, and only the CPU-bound computation runs in parallel.

- 10. Avoid modifying global state: `itemIDs = [0, 0, 0, 0]; parallel-for-each(i = 1:4) { itemIDs[i] = i }`
  - The array `A = [0,0,0,0]` is initialized in process 1.
  - The array is copied to each sub-process; every copy starts as `[0,0,0,0]`.
  - Each sub-process modifies only its own copy: `[0,1,0,0]`, `[0,0,2,0]`, `[0,0,0,3]`.
  - When all parallel tasks finish, the array in the original process remains unchanged: `[0,0,0,0]`.

- 11. Demo

- 12. Many ML tasks can be parallelized (ordered from intuitive to parallelize to harder to parallelize):
  - Cross-validation
  - Grid search selection
  - Random forest
  - Kernel density estimation
  - K-means clustering
  - Probabilistic graphical models
  - Online learning
  - Neural networks (backpropagation)

- 13. Cross-validation

- 14. Grid search (figure: a grid over C = 1, 10, 100, 1000 and kernel = Linear, RBF)

- 15. Random forest

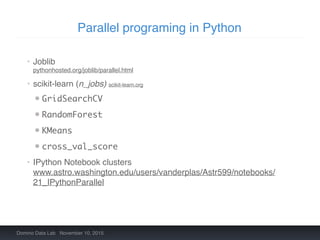

- 16. Parallel programming in Python
  - Joblib: pythonhosted.org/joblib/parallel.html
  - scikit-learn (`n_jobs`): scikit-learn.org
    - GridSearchCV
    - RandomForest
    - KMeans
    - cross_val_score
  - IPython Notebook clusters: www.astro.washington.edu/users/vanderplas/Astr599/notebooks/21_IPythonParallel

- 17. Demo

- 18. Parallel programming using the GPU
  - GPUs are essential to deep learning because they can yield a 10x speed-up when training neural networks.
  - Use the PyCUDA library to write Python code that executes on the GPU.

- 19. Demo

- 20. Can compose layers of parallelism (figure: separate experiments, such as RF, NN, and a grid-searched SVC, each run on their own machine, and each machine spreads its work across cores c1, c2, …, cn)

- 21. Demo

- 22. FYI: Parallel programming in R
  - General purpose:
    - parallel
    - foreach: cran.r-project.org/web/packages/foreach
  - More specialized:
    - randomForest: cran.r-project.org/web/packages/randomForest
    - caret: topepo.github.io/caret
    - plyr: cran.r-project.org/web/packages/plyr

- 23. Check us out! dominodatalab.com, blog.dominodatalab.com, @dominodatalab
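Slide 5 suggests starting from a “for” loop whose iterations are independent and CPU-bound. The following is a minimal sketch of that idea using the standard library's `concurrent.futures`; it is not code from the talk. `count_primes` is a hypothetical CPU-bound task. The pool pins the `"fork"` start method, which is an assumption: it lets the snippet run from a notebook, but it restricts the code to Unix-like systems. On Windows, use the default start method and keep the `if __name__ == "__main__":` guard.

```python
import multiprocessing
from concurrent.futures import ProcessPoolExecutor


def count_primes(limit):
    """Hypothetical CPU-bound task: count primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def serial(limits):
    # The original "for" loop: no iteration depends on another.
    return [count_primes(limit) for limit in limits]


def parallel(limits, workers=4):
    # Same loop body, spread across worker processes.
    # "fork" is assumed so this also runs in notebooks; it is Unix-only.
    ctx = multiprocessing.get_context("fork")
    with ProcessPoolExecutor(max_workers=workers, mp_context=ctx) as pool:
        # map() yields results in input order, just like the serial loop.
        return list(pool.map(count_primes, limits))


if __name__ == "__main__":
    limits = [5_000, 10_000, 15_000, 20_000]
    results = parallel(limits)
    assert results == serial(limits)
    print(results)
```

The sketch uses processes rather than threads because CPython's GIL lets only one thread run Python bytecode at a time, so threads would not speed up this loop. For very small inputs, the process start-up and pickling overhead (the “Overhead” pitfall on slide 5) can outweigh the gain.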
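Slide 7 separates CPU work from disk, database, and network I/O. For the I/O case, one common Python approach is a thread pool whose size is capped to what the remote resource can absorb. This is an assumption here, not something the slides prescribe. `fake_download` is a hypothetical stand-in that sleeps to simulate network latency, and the URLs are placeholders.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(url):
    """Hypothetical I/O-bound task: sleep to mimic waiting on the network."""
    time.sleep(0.2)
    return f"contents of {url}"


def fetch_all(urls, max_workers=4):
    # Threads suit I/O-bound work: a thread that is waiting (here, sleeping)
    # releases the GIL so other threads can proceed. max_workers caps how
    # many requests are in flight at once, so the server is not swamped.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fake_download, urls))


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(8)]  # placeholder URLs
    start = time.perf_counter()
    pages = fetch_all(urls, max_workers=4)
    elapsed = time.perf_counter() - start
    # 8 tasks of 0.2 s on 4 threads: about 0.4 s, versus about 1.6 s serially.
    print(len(pages), f"{elapsed:.2f}s")
```

Raising `max_workers` shortens wall-clock time only until the real bottleneck (bandwidth, server rate limits, database connections) saturates, which is the point slide 7 makes.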
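Slides 8 and 9 contrast two shapes of the same job. The sketch below makes the slide 9 shape runnable: one batched read, parallel computation only, and one batched write. `fetch_data`, `compute_something`, and `save_results` are hypothetical Python versions of the slide's pseudocode helpers. They are backed by an in-memory dict instead of a real database. The `"fork"` start method is an assumption that limits the sketch to Unix-like systems.

```python
import multiprocessing
from concurrent.futures import ProcessPoolExecutor

# Hypothetical in-memory "database" standing in for a real data store.
DATABASE = {i: list(range(i * 1000)) for i in range(1, 9)}
SAVED = {}


def fetch_data(item_ids):
    # One batched read in the parent, instead of one read per parallel task.
    return [DATABASE[i] for i in item_ids]


def compute_something(item):
    # The CPU-bound step: the only part that runs in parallel.
    return sum(x * x for x in item)


def save_results(item_ids, results):
    # One batched write from the parent once every worker has finished.
    SAVED.update(zip(item_ids, results))


def run(item_ids, workers=4):
    items = fetch_data(item_ids)
    # "fork" is assumed so this also runs in notebooks; it is Unix-only.
    ctx = multiprocessing.get_context("fork")
    with ProcessPoolExecutor(max_workers=workers, mp_context=ctx) as pool:
        results = list(pool.map(compute_something, items))
    save_results(item_ids, results)
    return results


if __name__ == "__main__":
    print(run(sorted(DATABASE)))
```

Only the parent process touches the data store. The workers receive plain data and return plain results, so the number of parallel workers no longer multiplies the load on the disk or database.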
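The pitfall on slide 10 is easy to reproduce with the standard library. In this minimal sketch, each worker process writes into its own copy of the global `item_ids`, so the parent's list stays all zeros. The fix is to return values and assemble them in the parent. The names are illustrative, and the `"fork"` start method is an assumption that limits the sketch to Unix-like systems.

```python
import multiprocessing
from concurrent.futures import ProcessPoolExecutor

item_ids = [0, 0, 0, 0]  # global state that lives in the parent process


def set_in_place(i):
    # Anti-pattern: mutates the worker's private copy of the global list.
    item_ids[i] = i


def compute(i):
    # Better: return the value and let the parent assemble the result.
    return i


def demo():
    ctx = multiprocessing.get_context("fork")  # Unix-only start method
    with ProcessPoolExecutor(max_workers=4, mp_context=ctx) as pool:
        list(pool.map(set_in_place, range(4)))
        unchanged = list(item_ids)  # the parent's copy: still all zeros
        rebuilt = list(pool.map(compute, range(4)))
    return unchanged, rebuilt


if __name__ == "__main__":
    print(demo())  # ([0, 0, 0, 0], [0, 1, 2, 3])
```

Threads would share `item_ids` and behave differently. The copy-per-process behavior shown on the slide is specific to process-based parallelism.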
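Cross-validation (slide 13) parallelizes cleanly because each fold is trained and scored without reference to the others. The stdlib-only sketch below is not scikit-learn's implementation. `k_fold_indices` and `score_fold` are hypothetical helpers, and the “model” is a deliberately trivial mean predictor scored by mean squared error. The `"fork"` start method is an assumption that limits the sketch to Unix-like systems.

```python
import multiprocessing
from concurrent.futures import ProcessPoolExecutor
from statistics import mean


def k_fold_indices(n, k):
    """Split range(n) into k contiguous folds; return (train, test) index lists."""
    sizes = [n // k + (1 if f < n % k else 0) for f in range(k)]
    folds, start = [], 0
    for size in sizes:
        stop = start + size
        test = list(range(start, stop))
        train = [i for i in range(n) if i < start or i >= stop]
        folds.append((train, test))
        start = stop
    return folds


def score_fold(task):
    """Fit the hypothetical mean predictor on train; return test-set MSE."""
    ys, train, test = task
    prediction = mean(ys[i] for i in train)
    return mean((ys[i] - prediction) ** 2 for i in test)


def cross_validate(ys, k=5, workers=4):
    # Each task carries its own copy of ys, which is fine for small data.
    tasks = [(ys, train, test) for train, test in k_fold_indices(len(ys), k)]
    ctx = multiprocessing.get_context("fork")  # Unix-only start method
    with ProcessPoolExecutor(max_workers=workers, mp_context=ctx) as pool:
        # Folds are independent, so they can be scored concurrently.
        return list(pool.map(score_fold, tasks))


if __name__ == "__main__":
    print(cross_validate([0.0, 0.0, 6.0, 6.0], k=2))  # [36.0, 36.0]
```

Swapping in a real model only changes `score_fold`. The fold-level parallel structure stays the same, and it is what scikit-learn's `cross_val_score` (listed on slide 16) exposes through `n_jobs`.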
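The grid on slide 14 (C = 1, 10, 100, 1000 by kernel = Linear, RBF) gives eight independent fits, so every cell can run at the same time. In the sketch below, `evaluate` is a hypothetical placeholder for “train an SVC with these settings and return its validation score”. Its formula is invented purely so the example runs, and the `"fork"` start method is an assumption that limits it to Unix-like systems.

```python
import itertools
import math
import multiprocessing
from concurrent.futures import ProcessPoolExecutor

# The 4 x 2 grid from the slide.
GRID = {"C": [1, 10, 100, 1000], "kernel": ["linear", "rbf"]}


def evaluate(params):
    """Hypothetical placeholder for fitting a model and scoring it.

    The formula is made up purely so the example runs.
    """
    c, kernel = params
    return -abs(math.log10(c) - 2) + (0.5 if kernel == "rbf" else 0.0)


def grid_search(grid, workers=4):
    combos = list(itertools.product(grid["C"], grid["kernel"]))
    ctx = multiprocessing.get_context("fork")  # Unix-only start method
    with ProcessPoolExecutor(max_workers=workers, mp_context=ctx) as pool:
        # Every grid cell is independent, so all 8 fits can run side by side.
        scores = list(pool.map(evaluate, combos))
    best_score, best_params = max(zip(scores, combos))
    return best_params, best_score


if __name__ == "__main__":
    print(grid_search(GRID))
```

scikit-learn's `GridSearchCV`, listed on slide 16, runs this kind of search in parallel through its `n_jobs` parameter.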
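The random forest on slide 15 parallelizes well because every tree is grown on its own bootstrap sample, with no coordination between trees. The sketch below shows only that structure. It uses bagged decision stumps on 1-D data as a deliberately simplified stand-in for a real random forest (no feature subsampling, depth-1 trees). All names are hypothetical, and the `"fork"` start method is an assumption that limits the sketch to Unix-like systems.

```python
import multiprocessing
import random
from collections import Counter
from concurrent.futures import ProcessPoolExecutor


def fit_stump(task):
    """Fit one decision stump (a depth-1 tree) to a bootstrap sample of 1-D data.

    Returns (threshold, left_label, right_label).
    """
    xs, ys, seed = task
    rng = random.Random(seed)  # one seed per tree: reproducible yet independent
    picks = [rng.randrange(len(xs)) for _ in xs]  # sample with replacement
    sample = sorted((xs[i], ys[i]) for i in picks)
    best = None
    for threshold, _ in sample:
        left = [y for x, y in sample if x <= threshold]
        right = [y for x, y in sample if x > threshold] or left
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0]
        errors = sum(
            y != (left_label if x <= threshold else right_label) for x, y in sample
        )
        if best is None or errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    return best[1:]


def predict(stumps, x):
    # Majority vote across the independently trained stumps.
    votes = Counter(left if x <= t else right for t, left, right in stumps)
    return votes.most_common(1)[0][0]


def fit_forest(xs, ys, n_trees=16, workers=4):
    tasks = [(xs, ys, seed) for seed in range(n_trees)]
    ctx = multiprocessing.get_context("fork")  # Unix-only start method
    with ProcessPoolExecutor(max_workers=workers, mp_context=ctx) as pool:
        # Trees do not depend on each other, so they are fit in parallel.
        return list(pool.map(fit_stump, tasks))


if __name__ == "__main__":
    xs = list(range(20))
    ys = [0] * 10 + [1] * 10
    forest = fit_forest(xs, ys)
    print(predict(forest, 2), predict(forest, 17))
```

Because each tree gets its own seed, the fitted forest is identical regardless of how many workers run it. Slide 16 notes that scikit-learn exposes the same tree-level parallelism through `n_jobs`.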