Scalable Recommendation Algorithms with LSH

- 1. Scalable Recommendation Algorithms for Massive Data Maruf Aytekin PhD Candidate Computer Engineering Department Bahcesehir University

- 2. Outline • Introduction • Collaborative Filtering (CF) and Scalability Problem • Locality Sensitive Hashing (LSH) for Recommendation • Improvement for LSH methods • Preliminary Results • Work Plan

- 3. Recommender Systems •Recommender systems •Applied to various domains: •Book/movie/news recommendations •Contextual advertising •Search engine personalization •Matchmaking •Two type of problems: • Preference elicitation (prediction) • Set-based recommendations (top-N)

- 4. Recommender Systems • Content-based filtering • Collaborative filtering (CF) • Model-based • Neighborhood-based

- 5. Neighborhood-based Methods The idea: Similar users behave in a similar way. • User-based: rely on the opinion of like-minded users to predict a rating. • Item-based: look at rating given to similar items. Require computation of similarity weights to select trusted neighbors whose ratings are used in the prediction.

- 6. Neighborhood-based Methods Problem • Compare all users/items to find trusted neighbors (k-nearest-neighbors) • Not scale well with data size (# of users/items) Computational Complexity Space Model Build Query User-based O(m2) O(m2n) O(m) Item-based O(n2) O(n2m) O(n) m : number of users n : number of items

- 7. Various Methods Model-based recommendation techniques • Dimensionality reduction (SVD, PCA, Random projections) • Classification (like, dislike) • Neural network classifier • Clustering (ANN) • Bayesian inference techniques Distributed computation • Map-reduce • Distributed CF algorithms

- 8. Locality Sensitive Hashing (LSH) • ANN search method • Provides a way to eliminate searching all of the data to find the nearest neighbors • Finds the nearest neighbors fast in basic neighbourhood based methods.

- 9. Locality Sensitive Hashing (LSH) General approach: • “Hash” items several times, in such a way that similar items are more likely to be hashed to the same bucket than dissimilar items are. • Pairs hashed to the same bucket candidate pairs. • Check only the candidate pairs for similarity.

- 10. Locality-Sensitive Functions The function h will “hash” items, and the decision will be based on whether or not the result is equal. • h(x) = h(y) make x and y a candidate pair. • h(x) ≠ h(y) do not make x and y a candidate pair. g = h1 AND h2 AND h3 … or g = h1 OR h2 OR h3 … A collection of functions of this form will be called a family of functions.

- 11. LSH for Cosine Charikar defines family of functions for Cosine as follows: Let u and v be rating vectors and r is a random generated vector whose components are +1 and −1. The family of hash functions (H) generated: , where shows the probability of u and v being declared as a candidate pair.

- 12. LSH for Cosine Example: r1 = [-1, 1, 1,-1,-1] r2 = [ 1, 1, 1,-1,-1] r3 = [-1,-1, 1,-1, 1] r4 = [-1, 1,-1, 1,-1] h1(u1) = u1.r1 = -6 => 0 h2(u1) = u1.r2 = 4 => 1 h3(u1) = u1.r3 = -12 => 0 h4(u1) = u1.r4 = 2 => 1 u1 = [5, 4, 0, 4, 1] u2 = [2, 1, 1, 1, 4] u3 = [4, 3, 0, 5, 2] g(u1) = 0 1 0 1 g(u2) = 0 0 1 0 g(u3) = 0 1 0 1 AND g(u1) = 0101 max 24 = 16 buckets

- 13. LSH Model Build U1 U2 U3 Um . . . . . h1 h3 U7 U11 U10 . . U13 U39 . . Um U1 U3 U5 . . U2 U9 U6 . . bucket 1 key: 0101 bucket 2 key: 1110 bucket 3 key: 1101 bucket 4 key: 1001 h2 h4 [0,1] [0,1] AND-Construction [0,1] [0,1] K = 4, number of hash functions . . . .

- 14. Hash Tables (Bands) U2 U6 U1 U3 . . . candidate set for U5 C(U5) L = 2 K = 4 hash table 1 hash table 2

- 15. LSH Methods • Clustering Based: • UB-KNN-LSH: User-based CF prediction with LSH • IB-KNN-LSH: Item-based CF with LSH • Frequency Based: • UB-LSH1: User-based prediction with LSH • IB-LSH1: Item-based prediction with LSH

- 17. UB-KNN-LSH IB-KNN-LSH • find candidate set, C, for target user, u, with LSH. • find k-nearest-neighbors to u from C that have rated on i. • use k-nearest-neighbors to generate a prediction for u on i. • find candidate set, C, for target item, i, with LSH. • find k-nearest-neighbors to i from C which user u rated on. • use k-nearest-neighbors to generate a prediction for u on item i. LSH MethodsPrediction

- 18. UB-LSH1 IB-LSH1 • find candidate users list, Cl, for u who rated on i with LSH. • calculate frequency of each user in Cl who rated on i. • sort candidate users based on frequency and get top k users • use frequency as weight to predict rating for u on i with user-based prediction. • find candidate items list, Cl, for i with LSH. • calculate frequency of items in Cl which is rated by u. • sort candidate items based on frequency and get top k items. • use frequency as weight to predict rating for u on i with item based prediction. LSH MethodsPrediction

- 19. ImprovementPrediction UB-LSH2 IB-LSH2 • find candidate users list, Cl, for u who rated on i with LSH. • select k users from Cl randomly. • predict rating for u on i with user-based prediction as the average ratings of k users. • find candidate items list, Cl, for i with LSH. • select k items rated by u from Cl randomly. • predict rating for u on i with item-based prediction as the average ratings of k items. - Eliminate frequency calculation and sorting. - Frequent users or items in Cl have higher chance to be selected randomly.

- 20. Complexity Prediction Space Model Build Prediction User-based O(m) O(m2) O(mn) Item-based O(n) O(n2) O(mn) UB-KNN-LSH O(mL) O(mLKt) O(L+|C|n+k) IB-KNN-LSH O(nL) O(nLKt) O(L+|C|m+k) UB-LSH1 O(mL) O(mLKt) O(L+|Cl|+|Cl|lg(|Cl|)+k) IB-LSH1 O(nL) O(nLKt) O(L+|Cl|+|Cl|lg(|Cl|)+k) UB-LSH2 O(mL) O(mLKt) O(L+2k) IB-LSH2 O(nL) O(nLKt) O(L+2k) m : number of users n : number of items L: number of hash tables K : number of hash functions t : time to evaluate a hash function C: Candidate user (or item) set ( |C| ≤ Lm / 2K or |C| ≤ Ln / 2K ) Cl : Candidate user (or item) list ( | Cl | ≤ Lm / 2K or | Cl | ≤ Ln / 2K )

- 21. | Cl | ≤ Lm / 2K L = 5 m =16,042 Candidate List (Cl) Prediction 0 10000 20000 30000 40000 50000 1 2 3 4 5 6 7 8 9 10 NumberofUsers Number of Hash Functions Cl m | Cl | ≤ Ln / 2K L = 5 n =17,454 0 10000 20000 30000 40000 50000 1 2 3 4 5 6 7 8 9 10 NumberofItems Number of Hash Functions Cl n

- 23. ResultsPrediction 0.8 1 1.2 1.4 1.6 1.8 4 5 6 7 8 9 10 11 12 13 MAE Number of Hash Functions UB-KNN IB-KNN UB-KNN-LSH IB-KNN-LSH UB-LSH1 UB-LSH2 IB-LSH1 IB-LSH2 0.7 0.8 0.9 1 1.1 1.2 1.3 4 5 6 7 8 9 10 11 12 13 MAE Number of Hash Functions UB-KNN IB-KNN UB-KNN-LSH IB-KNN-LSH UB-LSH1 UB-LSH2 IB-LSH1 IB-LSH2 Movie Lens 1M Amazon Movies

- 24. 0 2 4 6 8 10 12 14 4 5 6 7 8 9 10 11 12 13 RunTime(ms) Number of Hash Functions UB-KNN-LSH IB-KNN-LSH UB-LSH1 UB-LSH2 IB-LSH1 IB-LSH2 0 0.2 0.4 0.6 0.8 1 1.2 1.4 4 5 6 7 8 9 10 11 12 13 RunTime(ms) Number of Hash Functions UB-KNN-LSH IB-KNN-LSH UB-LSH1 UB-LSH2 IB-LSH1 IB-LSH2 Movie Lens 1M Amazon Movies ResultsPrediction

- 25. 0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 4 5 6 7 8 9 10 11 12 13 RunTime(ms.) Number of Hash Functions UB-LSH1 UB-LSH2 IB-LSH1 IB-LSH2 0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 4 5 6 7 8 9 10 11 12 13 RunTime(ms.) Number of Hash Functions UB-LSH1 UB-LSH2 IB-LSH1 IB-LSH2 Movie Lens 1M Amazon Movies ResultsPrediction

- 26. 0 0.2 0.4 0.6 0.8 1 4 5 6 7 8 9 10 11 12 13 PredictionCoverage Number of Hash Functions UB-KNN-LSH IB-KNN-LSH UB-LSH1 UB-LSH2 IB-LSH1 IB-LSH2 0 0.2 0.4 0.6 0.8 1 4 5 6 7 8 9 10 11 12 13 PredictionCoverage Number of Hash Functions UB-KNN-LSH IB-KNN-LSH UB-LSH1 UB-LSH2 IB-LSH1 IB-LSH2 Movie Lens 1M Amazon Movies ResultsPrediction

- 27. 0.4 0.5 0.6 0.7 0.8 0.9 1 0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 Coverage-higherisbetter Runtime -lower is better Performance-Coverage tradeoff -upper and left is better UB-LSH1 UB-LSH2 IB-LSH1 IB-LSH2 0 0.2 0.4 0.6 0.8 1 0 0.2 0.4 0.6 0.8 1 Coverage-higherisbetter Runtime -lower is better Performance-Coverage tradeoff -upper and left is better UB-LSH1 UB-LSH2 IB-LSH1 IB-LSH2 Movie Lens 1M Amazon Movies ResultsPrediction

- 28. ResultsPrediction 0 0.2 0.4 0.6 0.8 1 0.8 0.85 0.9 0.95 1 1.05 1.1 RunningTime(ms.)-lowerisbetter MAE -lower is better MAE-Performance tradeoff -lower and left is better UB-LSH1 UB-LSH2 IB-LSH1 IB-LSH2 0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.75 0.8 0.85 0.9 0.95 1 RunningTime(ms.)-lowerisbetter MAE -lower is better MAE-Performance tradeoff -lower and left is better UB-LSH1 UB-LSH2 IB-LSH1 IB-LSH2 Movie Lens 1M Amazon Movies

- 30. UB-LSH1 IB-LSH1 • find candidate set, C, for user u with LSH. • for each user, v, in C; retrieve items that rated by v and add to a running candidate list, Cl. • calculate frequency of items in Cl. • sort Cl based on frequency. • recommend the most frequent N items to u. • for each item, i, u rated; retrieve candidate set, C, for i with LSH and add C to a running candidate list, Cl. • calculate frequency of items in Cl. • sort Cl based on frequency. • recommend the most frequent N items to u. LSH MethodsTop-N Recommendation

- 31. Improvement Top-N Recommendation UB-LSH2 IB-LSH2 • find candidate set, C, for user u with LSH. • for each user, v, in C; retrieve items that rated by v and add to a running candidate list, Cl. • select N items from Cl randomly and recommend to u. • for each item, i, u rated; retrieve candidate set, C, for i with LSH and add to a running candidate list, Cl. • select N items from Cl randomly and recommend to u. Eliminates frequency calculation and sorting.

- 32. Complexity Top-N Recommendation Space Model Build Top-N Recommendation User-based O(m) O(m2) O(mn) Item-based O(n) O(n2) O(mn) UB-LSH1 O(mL) O(mLKt) O(L+|C|+|Cl|+|Cl|lg(|Cl|) IB-LSH1 O(nL) O(nLKt) O(pL+|Cl|+|Cl|lg(|Cl|)) UB-LSH2 O(mL) O(mLKt) O(L+|C|+N) IB-LSH2 O(nL) O(nLKt) O(pL+N) m : number of users n : number of items p : number of ratings of a user L : number of hash tables K : number of hash functions t : time to evaluate a hash function C : Candidate user (or item) set ( |C| ≤ Lm / 2K or |C| ≤ Ln / 2K) Cl : Candidate item list ( |Cl| ≤ p|C| for UB-LSH1 and IB-LSH1 s.t. |Cl| ≤ Lpn / 2K )

- 33. |Cl| ≤ Lpn / 2K ) L = 5 n =1000 p = 100 (avg. number of ratings for a user) Candidate List (Cl) Top-N Recommendation 0 5000 10000 15000 20000 25000 30000 35000 4 5 6 7 8 9 10 11 12 13 NumberofItems Number of Hash Functions min Cl max Cl n

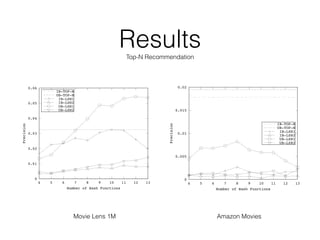

- 34. ResultsTop-N Recommendation 0 0.01 0.02 0.03 0.04 0.05 0.06 4 5 6 7 8 9 10 11 12 13 Precision Number of Hash Functions IB-TOP-N UB-TOP-N IB-LSH1 IB-LSH2 UB-LSH1 UB-LSH2 0 0.005 0.01 0.015 0.02 4 5 6 7 8 9 10 11 12 13 Precision Number of Hash Functions IB-TOP-N UB-TOP-N IB-LSH1 IB-LSH2 UB-LSH1 UB-LSH2 Movie Lens 1M Amazon Movies

- 35. 0 20 40 60 80 100 4 5 6 7 8 9 10 11 12 13 AvgRecc.Time(ms.) Number of Hash Functions IB-LSH1 IB-LSH2 UB-LSH1 UB-LSH2 ResultsTop-N Recommendation 0 10 20 30 40 50 60 70 4 5 6 7 8 9 10 11 12 13 AvgRecc.Time(ms.) Number of Hash Functions IB-LSH1 IB-LSH2 UB-LSH1 UB-LSH2 Movie Lens 1M Amazon Movies

- 36. 0 500 1000 1500 2000 2500 3000 3500 4 5 6 7 8 9 10 11 12 13 AggregateDiversity Number of Hash Functions IB-TOP-N UB-TOP-N IB-LSH1 IB-LSH2 UB-LSH1 UB-LSH2 ResultsTop-N Recommendation 0 1000 2000 3000 4000 5000 4 5 6 7 8 9 10 11 12 13 AggregateDiversity Number of Hash Functions IB-TOP-N UB-TOP-N IB-LSH1 IB-LSH2 UB-LSH1 UB-LSH2 Movie Lens 1M Amazon Movies

- 37. 0 0.2 0.4 0.6 0.8 1 4 5 6 7 8 9 10 11 12 13 Diversity Number of Hash Functions IB-TOP-N UB-TOP-N IB-LSH1 IB-LSH2 UB-LSH1 UB-LSH2 ResultsTop-N Recommendation 0 0.2 0.4 0.6 0.8 1 4 5 6 7 8 9 10 11 12 13 Diversity Number of Hash Functions IB-TOP-N UB-TOP-N IB-LSH1 IB-LSH2 UB-LSH1 UB-LSH2 Movie Lens 1M Amazon Movies

- 38. 0 2 4 6 8 10 12 4 5 6 7 8 9 10 11 12 13 Novelty Number of Hash Functions IB-TOP-N UB-TOP-N IB-LSH1 IB-LSH2 UB-LSH1 UB-LSH2 ResultsTop-N Recommendation Movie Lens 1M Amazon Movies 5 5.5 6 6.5 7 7.5 8 8.5 9 9.5 4 5 6 7 8 9 10 11 12 13 Novelty Number of Hash Functions IB-TOP-N UB-TOP-N IB-LSH1 IB-LSH2 UB-LSH1 UB-LSH2

- 39. ResultsTop-N Recommendation Our improvement is simple but efficient; • Improves: • Performance • Diversity • Coverage • Novelty • but costs accuracy.

- 40. • LSH as a real-time stream recommendation algorithm • Dimensionality reduction methods (e.g., Matrix Factorization) • Other ANN Methods: • Tree based • Clustering based Work Plan

- 41. Q & A

![LSH for Cosine

Example:

r1 = [-1, 1, 1,-1,-1]

r2 = [ 1, 1, 1,-1,-1]

r3 = [-1,-1, 1,-1, 1]

r4 = [-1, 1,-1, 1,-1]

h1(u1) = u1.r1 = -6 => 0

h2(u1) = u1.r2 = 4 => 1

h3(u1) = u1.r3 = -12 => 0

h4(u1) = u1.r4 = 2 => 1

u1 = [5, 4, 0, 4, 1]

u2 = [2, 1, 1, 1, 4]

u3 = [4, 3, 0, 5, 2]

g(u1) = 0 1 0 1

g(u2) = 0 0 1 0

g(u3) = 0 1 0 1

AND

g(u1) = 0101

max 24 = 16 buckets](https://image.slidesharecdn.com/scalablereccalgorithms-160726185805/85/Scalable-Recommendation-Algorithms-with-LSH-12-320.jpg)

![LSH Model Build

U1

U2

U3

Um

.

.

.

.

.

h1

h3

U7

U11

U10

.

.

U13

U39

.

.

Um

U1

U3

U5

.

.

U2

U9

U6

.

.

bucket 1

key: 0101

bucket 2

key: 1110

bucket 3

key: 1101

bucket 4

key: 1001

h2

h4

[0,1]

[0,1]

AND-Construction

[0,1]

[0,1]

K = 4, number of hash functions . . . .](https://image.slidesharecdn.com/scalablereccalgorithms-160726185805/85/Scalable-Recommendation-Algorithms-with-LSH-13-320.jpg)

![[Paper review] BERT](https://cdn.slidesharecdn.com/ss_thumbnails/paperreviewbert-190507052754-thumbnail.jpg?width=560&fit=bounds)

![[AFEL] Neighborhood Troubles: On the Value of User Pre-Filtering To Speed Up ...](https://cdn.slidesharecdn.com/ss_thumbnails/cikm18eyrepres-181022081818-thumbnail.jpg?width=560&fit=bounds)

![[WI 2014]Context Recommendation Using Multi-label Classification](https://cdn.slidesharecdn.com/ss_thumbnails/slidewic2014context-140805210546-phpapp01-thumbnail.jpg?width=560&fit=bounds)

![MODULE 03 - CLOUD COMPUTING- [BIS 613D] 2022 scheme.pptx](https://cdn.slidesharecdn.com/ss_thumbnails/module03-cloudcomputing-250506150212-e107fd7e-thumbnail.jpg?width=560&fit=bounds)