QuadIron: An open source library for number theoretic transform-based erasure codes

QuadIron is an open source library which implements erasure codes in a way that can scale better than any other existing system.
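
To make the idea in the title concrete, here is a minimal sketch of an erasure code built on a number theoretic transform (NTT). It is not QuadIron's API or implementation. The modulus 257, the code parameters N = 8 and K = 5, and every function name below are assumptions chosen for illustration. The sketch treats K data symbols as polynomial coefficients, evaluates the polynomial at N roots of unity with a radix-2 NTT, and recovers the data from any K surviving symbols by Lagrange interpolation (simple, but quadratic in K).

```python
# Illustrative sketch only: names and parameters are hypothetical, not QuadIron's API.
P = 257  # prime modulus (assumed); P - 1 = 256 is divisible by N
N = 8    # number of code symbols (a power of two for the radix-2 NTT)
K = 5    # number of data symbols; any K of the N code symbols suffice


def find_root_of_unity(n, p):
    """Return a primitive n-th root of unity modulo prime p (n a power of two)."""
    for g in range(2, p):
        w = pow(g, (p - 1) // n, p)
        # w**n == 1 always holds; the order is exactly n iff w**(n/2) != 1.
        if pow(w, n // 2, p) != 1:
            return w
    raise ValueError("no primitive root of unity found")


def ntt(a, w, p):
    """Recursive radix-2 NTT: evaluate polynomial a at w**0 .. w**(n-1) mod p."""
    n = len(a)
    if n == 1:
        return list(a)
    w2 = w * w % p
    even = ntt(a[0::2], w2, p)
    odd = ntt(a[1::2], w2, p)
    out = [0] * n
    t = 1
    for i in range(n // 2):
        out[i] = (even[i] + t * odd[i]) % p
        out[i + n // 2] = (even[i] - t * odd[i]) % p
        t = t * w % p
    return out


def encode(data, n, w, p):
    """Zero-pad the data to length n and transform it into n code symbols."""
    return ntt(list(data) + [0] * (n - len(data)), w, p)


def decode(fragments, k, w, p):
    """Recover k data symbols from k (index, value) pairs by Lagrange interpolation."""
    pts = [(pow(w, i, p), v) for i, v in fragments[:k]]
    coeffs = [0] * k
    for j, (xj, yj) in enumerate(pts):
        num = [1]   # coefficients of prod_{m != j} (x - xm), lowest degree first
        denom = 1   # prod_{m != j} (xj - xm)
        for m, (xm, _) in enumerate(pts):
            if m == j:
                continue
            new = [0] * (len(num) + 1)
            for d, c in enumerate(num):
                new[d] = (new[d] - c * xm) % p
                new[d + 1] = (new[d + 1] + c) % p
            num = new
            denom = denom * (xj - xm) % p
        scale = yj * pow(denom, -1, p) % p  # modular inverse, Python 3.8+
        for d in range(k):
            coeffs[d] = (coeffs[d] + scale * num[d]) % p
    return coeffs


W = find_root_of_unity(N, P)

if __name__ == "__main__":
    data = [10, 20, 30, 40, 50]
    code = encode(data, N, W, P)
    # Pretend symbols 0, 2 and 5 were lost; five survivors remain.
    survivors = [(i, code[i]) for i in (1, 3, 4, 6, 7)]
    print(decode(survivors, K, W, P) == data)
```

This toy code is non-systematic (the code symbols do not contain the data verbatim), and a code symbol modulo 257 can take the value 256, which does not fit in one byte. A production library has to deal with both points.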

What's hot (20)

A Library for Emerging High-Performance Computing Clusters

A Library for Emerging High-Performance Computing ClustersIntel® Software This document discusses the challenges of developing communication libraries for exascale systems using hybrid MPI+X programming models. It describes how current MPI+PGAS approaches use separate runtimes, which can lead to issues like deadlock. The document advocates for a unified runtime that can support multiple programming models simultaneously to avoid such issues and enable better performance. It also outlines MVAPICH2's work on designs like multi-endpoint that integrate MPI and OpenMP to efficiently support emerging highly threaded systems.

FPGA Implementation of Mixed Radix CORDIC FFT

FPGA Implementation of Mixed Radix CORDIC FFTIJSRD In this Paper, the architecture and FPGA implementation of a Coordinate Rotation Digital Computer (CORDIC) pipeline Fast Fourier Transform (FFT) processor is presented. Fast Fourier Transforms (FFT) is highly efficient algorithm which uses Divide and Conquer approach for speedy calculation of Discrete Fourier transform (DFT) to obtain the frequency spectrum. CORDIC algorithm which is hardware efficient and avoids the use of conventional multiplication and accumulation (MAC) units but evaluates the trigonometric functions by the rotation of a complex vector by means of only add and shift operations. We have developed Fixed point FFT processors using VHDL language for implementation on Field Programmable Gate Array. A Mixed Radix 8 point DIF FFT/IFFT architecture with CORDIC Twiddle factor generation unit with use of pipeline implementation FFT processor has been developed using Xilinx XC3S500E Spartan-3E FPGA and simulated with maximum frequency of 157.359 MHz for 16 bit length 8 point FFT. Results show that the processor uses less number of LUTs and achieves Maximum Frequency.

A High Performance Heterogeneous FPGA-based Accelerator with PyCoRAM (Runner ...

A High Performance Heterogeneous FPGA-based Accelerator with PyCoRAM (Runner ...Shinya Takamaeda-Y A High Performance Heterogeneous FPGA-based Accelerator with PyCoRAM (Received Runner Up Award at Digilent Design Contest 2014 Japan Region)

My review on low density parity check codes

My review on low density parity check codespulugurtha venkatesh This document discusses low-density parity-check (LDPC) codes. It begins with an overview of LDPC codes, noting they were originally invented in the 1960s but gained renewed interest after turbo codes. It then covers LDPC code performance and construction, including generator and parity check matrices. Various representations of LDPC codes are examined, such as matrix and graphical representations using Tanner graphs. Applications of LDPC codes include wireless, wired, and optical communications. In conclusions, turbo codes achieved theoretical limits with a small gap and led to new codes like LDPC codes, which provide high-speed and high-throughput performance close to the Shannon limit.

Design and Implementation of Area Efficiency AES Algoritham with FPGA and ASIC,

Design and Implementation of Area Efficiency AES Algoritham with FPGA and ASIC,paperpublications3 Abstract: A public domain encryption standard is subject to continuous, vigilant, expert cryptanalysis. AES is a symmetric encryption algorithm processing data in block of 128 bits. Under the influence of a key, a 128-bit block is encrypted by transforming it in a unique way into a new block of the same size. To implement AES Rijndael algorithm on FPGA using Verilog and synthesis using Xilinx, Plain text of 128 bit data is considered for encryption using Rijndael algorithm utilizing key. This encryption method is versatile used for military applications. The same key is used for decryption to recover the original 128 bit plain text. For high speed applications, the Non LUT based implementation of AES S-box and inverse S-box is preferred. Development of physical design of AES-128 bit is done using cadence SoC encounter. Performance evaluation of the physical design with respect to area, power, and time has been done. The core consumes 10.11 mW of power for the core area of 330100.742 μm2.

Keywords: Encryption, Decryption Rijndael algorithm, FPGA implementation, Physical Design.

Parallella: Embedded HPC For Everybody

Parallella: Embedded HPC For Everybodyjerlbeck This talk is about architecture and programming of the Epiphany processor on the Parallella board, discussing step-by-step how to improve and optimize software kernels on such distributed DSP systems. It was held at the "Softwarekonferenz für Parallel Programming, Concurrency

und Multicore-Systeme" in Karlsruhe/Germany 2014.

2 1

2 1Arthur Sanchez This document describes a bit-serial message-passing low-density parity-check (LDPC) decoder. It discusses the history and use of channel coding and different coding techniques. It then explains the structure and min-sum decoding algorithm for LDPC codes. The document proposes a bit-serial message passing technique for the decoder architecture to reduce routing congestion compared to fully parallel architectures. It provides implementation details of an application-specific integrated circuit (ASIC) and field-programmable gate array (FPGA) version of the decoder.

Lzw

LzwDaniel A Lempel-Ziv-Welch (LZW) is a universal lossless data compression algorithm that replaces strings of characters with single codes, achieving smaller file sizes and faster transmission. LZW is commonly used to compress files like TIFF, GIF, PDF, and in file compression formats like Unix Compress and gzip. It works by building a table of strings and assigning a code whenever it encounters a new string, allowing for efficient encoding of repeated patterns in data.

64bit SMP OS for TILE-Gx many core processor

64bit SMP OS for TILE-Gx many core processorToru Nishimura concise introduction of TILE-Gx many-core processor design and features, how SMP operating system was ported for the processor, and the proposed applications for the up to 72 core computer systems.

FNR : Arbitrary length small domain block cipher proposal

FNR : Arbitrary length small domain block cipher proposalSashank Dara We propose a practical flexible (or arbitrary) length small domain block cipher, FNR encryption scheme. FNR denotes Flexible Naor and Reingold. It can cipher small domain data formats like IPv4, Port numbers, MAC Addresses, Credit card numbers, any random short strings while preserving their input length. In addition to the classic Feistel networks, Naor and Reingold propose usage of Pair-wise independent permutation (PwIP) functions based on Galois Field GF(2 n). Instead we propose usage of random N ×N Invertible matrices in GF(2)

HKG18-411 - Introduction to OpenAMP which is an open source solution for hete...

HKG18-411 - Introduction to OpenAMP which is an open source solution for hete...Linaro Session ID: HKG18-411

Session Name: HKG18-411 - Introduction to OpenAMP which is an open source solution for heterogeneous system orchestration and communication

Speaker: Wendy Liang

Track: IoT, Embedded

★ Session Summary ★

Introduction to OpenAMP which is an open source solution for heterogeneous system orchestration and communication

---------------------------------------------------

★ Resources ★

Event Page: http://connect.linaro.org/resource/hkg18/hkg18-411/

Presentation: http://connect.linaro.org.s3.amazonaws.com/hkg18/presentations/hkg18-411.pdf

Video: http://connect.linaro.org.s3.amazonaws.com/hkg18/videos/hkg18-411.mp4

---------------------------------------------------

★ Event Details ★

Linaro Connect Hong Kong 2018 (HKG18)

19-23 March 2018

Regal Airport Hotel Hong Kong

---------------------------------------------------

Keyword: IoT, Embedded

'http://www.linaro.org'

'http://connect.linaro.org'

---------------------------------------------------

Follow us on Social Media

https://www.facebook.com/LinaroOrg

https://www.youtube.com/user/linaroorg?sub_confirmation=1

https://www.linkedin.com/company/1026961








-Artificial Intelligence Market Overview

-Strategies for AI Adoption in 2025

-Anticipated drivers of AI adoption and transformative technologies

-Benefits of AI and Big data for your business

-Tips on how to prepare your business for innovation

-AI and data privacy: Strategies for securing data privacy in AI models, etc.

Download your free copy nowand implement the key findings to improve your business.

Andrew Marnell: Transforming Business Strategy Through Data-Driven Insights

Andrew Marnell: Transforming Business Strategy Through Data-Driven InsightsAndrew Marnell With expertise in data architecture, performance tracking, and revenue forecasting, Andrew Marnell plays a vital role in aligning business strategies with data insights. Andrew Marnell’s ability to lead cross-functional teams ensures businesses achieve sustainable growth and operational excellence.

TrustArc Webinar: Consumer Expectations vs Corporate Realities on Data Broker...

TrustArc Webinar: Consumer Expectations vs Corporate Realities on Data Broker...TrustArc Most consumers believe they’re making informed decisions about their personal data—adjusting privacy settings, blocking trackers, and opting out where they can. However, our new research reveals that while awareness is high, taking meaningful action is still lacking. On the corporate side, many organizations report strong policies for managing third-party data and consumer consent yet fall short when it comes to consistency, accountability and transparency.

This session will explore the research findings from TrustArc’s Privacy Pulse Survey, examining consumer attitudes toward personal data collection and practical suggestions for corporate practices around purchasing third-party data.

Attendees will learn:

- Consumer awareness around data brokers and what consumers are doing to limit data collection

- How businesses assess third-party vendors and their consent management operations

- Where business preparedness needs improvement

- What these trends mean for the future of privacy governance and public trust

This discussion is essential for privacy, risk, and compliance professionals who want to ground their strategies in current data and prepare for what’s next in the privacy landscape.

QuadIron An open source library for number theoretic transform-based erasure codes

- 1. Zenko Live: QuadIron. A Library for Number Theoretic Transform Erasure Codes

- 2. Agenda: What’s Coming • Introduction to Zenko • The problem space of data resiliency • Giorgio Regni, CTO • QuadIron demo • Vianney Rancurel, R&D • Questions and Answers

- 3. QuadIron

- 4. Why? Planetary scale decentralized storage: • Distributing data over hundreds of drives, servers and locations with minimum overhead • Guaranteeing that each parity is useful and can help reconstruct the original data • Keeping the data secure even though fragments are present in many different places

- 5. Pro Tip: When you get stuck, change vector space

- 6. What happens when you need more parities?

- 7. Demo • Video file of ~90MB • Using a 90+160 coding • Split into 90 fragments of 1MB: x.00 … x.89 • Generate 160 parities (in fact 250 coded fragments, because the code is non-systematic; overhead of 250/90 ≈ 2.78): x.c00 … x.c249 • Delete the data fragments • Delete 100 parities (tolerate 100 drive failures!) • Repair • Play the video

- 8. QuadIron

- 9. Lib QuadIron is online • Forum: https://forum.zenko.io • Website: www.zenko.io/blog • Code: https://github.com/scality/quadiron • Blog post: https://www.zenko.io/blog/free-library-erasure-codes/

- 11. Backup

- 12. Properties of Erasure Codes: Definition A C(n,k) erasure code is defined by n=k+m ❒ k being the number of data fragments. ❒ m being the number of desired erasure fragments. Example: C(9, 6)

- 13. Properties of Erasure Codes ❒ Optimality: e.g. an MDS (Maximum Distance Separable) erasure code guarantees that any k fragments can be used to decode a file ❒ Systematicity: Systematic codes generate n-k erasure fragments and therefore keep the k data fragments unchanged. Non-systematic codes generate n erasure fragments ❒ Speed: Erasure codes are characterized by their encode/decode speed. Speed may vary according to the rate (k and m parameters), and may be more or less predictable depending on the code ❒ Rate sensitivity: Erasure codes can also be compared by their sensitivity to the rate r=k/n, which may or may not impact the encoding and decoding speed ❒ Rate adaptivity: Changing k and m without having to regenerate all the erasure fragments ❒ Confidentiality: determined by whether an attacker can partially decode the data with fewer than k fragments. Non-systematic codes are confidential (different from threshold schemes) ❒ Repair bandwidth: the number of fragments required to repair a fragment.

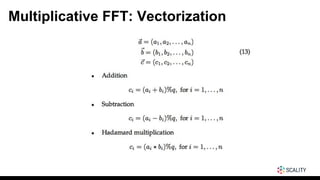
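The parameters defined on slides 12 and 13 can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of QuadIron: the `CodeParams` struct and its member names are hypothetical, and the "any k fragments suffice" rule only holds under the MDS assumption stated on the slide.

```cpp
#include <cassert>

// Parameters of a C(n, k) erasure code with n = k + m (hypothetical helper).
struct CodeParams {
    int k; // number of data fragments
    int m; // number of additional erasure (parity) fragments

    int n() const { return k + m; }
    // Rate r = k / n, as defined on the slide.
    double rate() const { return static_cast<double>(k) / n(); }
    // Storage overhead n / k.
    double overhead() const { return static_cast<double>(n()) / k; }
    // For an MDS code, any k surviving fragments are enough to decode.
    bool decodable_mds(int available) const {
        assert(available >= 0 && available <= n());
        return available >= k;
    }
};
```

For the C(9, 6) example, `CodeParams{6, 3}` gives an overhead of 1.5 and tolerates the loss of any 3 fragments. For the demo, `CodeParams{90, 160}` gives n = 250, and the 150 fragments left after deleting 100 are still more than the 90 needed.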

- 14. (Main) Types of Erasure Codes ❒ Traditional RS Codes (e.g. Vandermonde or Cauchy matrices) ❒ LDPC Codes ❒ Locally-Repairable-Codes (LRC) ❒ FFT Based RS Codes ❒ Multiplicative FFTs (prime fields) ❒ Additive FFTs (binary extension fields)

- 15. Types of Codes: Traditional RS Codes

- 16. Types of Codes: Traditional RS Codes

- 17. Types of Codes: Traditional RS Codes The good: ❒ Simple ❒ Support systematic and adaptive rates. The bad: ❒ Matrix multiplication: O(k x n)
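To make the O(k × n) cost concrete, here is a small sketch of a matrix-style (Vandermonde) RS code. It is an illustration under stated assumptions, not QuadIron or ISA-L code: the field GF(257), the evaluation points x_i = i + 1, the non-systematic layout and the function names `encode` and `decode` are all choices made for this example. Each of the n fragments costs k multiply-adds, and any k fragments give back the data by solving a k × k linear system.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

constexpr uint32_t P = 257; // small prime field, chosen only for illustration

uint32_t pow_mod(uint32_t b, uint32_t e) {
    uint32_t r = 1;
    b %= P;
    while (e > 0) {
        if (e & 1) r = r * b % P;
        b = b * b % P;
        e >>= 1;
    }
    return r;
}

uint32_t inv_mod(uint32_t a) { return pow_mod(a, P - 2); } // Fermat's little theorem

// Encode: fragment i is the data polynomial evaluated at x_i = i + 1, i.e. one
// Vandermonde row times the data vector. Total cost: O(k * n).
std::vector<uint32_t> encode(const std::vector<uint32_t>& data, int n) {
    assert(n >= 1 && n < static_cast<int>(P));
    std::vector<uint32_t> out(static_cast<std::size_t>(n));
    for (int i = 0; i < n; ++i) {
        const uint32_t x = static_cast<uint32_t>(i + 1);
        uint32_t acc = 0;
        for (std::size_t j = data.size(); j-- > 0;) // Horner's rule
            acc = (acc * x + data[j]) % P;
        out[static_cast<std::size_t>(i)] = acc;
    }
    return out;
}

// Decode from any k fragments, given as (index, value) pairs, by solving the
// k x k Vandermonde system with Gauss-Jordan elimination modulo P.
std::vector<uint32_t> decode(const std::vector<std::pair<int, uint32_t>>& frags) {
    const std::size_t k = frags.size();
    std::vector<std::vector<uint32_t>> a(k, std::vector<uint32_t>(k + 1));
    for (std::size_t r = 0; r < k; ++r) {
        const uint32_t x = static_cast<uint32_t>(frags[r].first + 1);
        for (std::size_t c = 0; c < k; ++c)
            a[r][c] = pow_mod(x, static_cast<uint32_t>(c));
        a[r][k] = frags[r].second % P;
    }
    for (std::size_t col = 0; col < k; ++col) {
        std::size_t piv = col;
        while (piv < k && a[piv][col] == 0) ++piv;
        assert(piv < k); // distinct evaluation points keep the system invertible
        std::swap(a[piv], a[col]);
        const uint32_t inv = inv_mod(a[col][col]);
        for (std::size_t c = col; c <= k; ++c) a[col][c] = a[col][c] * inv % P;
        for (std::size_t r = 0; r < k; ++r) {
            if (r == col || a[r][col] == 0) continue;
            const uint32_t f = a[r][col];
            for (std::size_t c = col; c <= k; ++c)
                a[r][c] = (a[r][c] + P - f * a[col][c] % P) % P;
        }
    }
    std::vector<uint32_t> data(k);
    for (std::size_t r = 0; r < k; ++r) data[r] = a[r][k];
    return data;
}
```

With 6 data symbols and `encode(data, 9)`, this behaves like the C(9, 6) example: any 6 of the 9 fragments passed to `decode` return the original symbols. The inner loop over k for each of the n outputs is the cost that the FFT-based codes later in the deck aim to reduce.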

- 18. Types of Codes: LDPC Codes ❏ H is a parity-check matrix for a C(8,4) code ❏ wc is the number of 1s in a column ❏ wr is the number of 1s in a row ❏ To be called low density: wc << n and wr << m ❏ Regular if wc is constant and wr = wc·(n/m) ❏ The matrix can be generated pseudo-randomly ❏ The presence of short cycles (f1, f2) is bad Source: Bernhard M.J. Leiner

- 19. Types of Codes: LDPC Codes Low-Density Parity-Check (LDPC) codes are also an important class of erasure codes and are constructed over sparse parity-check matrices. The good: ❒ Theoretically, an LDPC code that is optimal for all the interesting properties of a given use case exists. The bad: ❒ LDPC codes are not MDS: it is always possible to find a loss pattern that cannot be decoded (e.g. when only k fragments out of n are available). The overhead is k*f or k+f with a small f, but it is not deterministic. ❒ You can always find or design an LDPC code optimized for a few properties (i.e. tailored to a specific use case), but it will be sub-optimal for the other properties ❒ Designing a good LDPC code is something of a black art that requires a lot of fine tuning and experimentation.
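The column weight wc, the row weight wr and the regularity condition wr = wc·(n/m) from the LDPC slides can be checked mechanically. The sketch below uses an illustrative 4 × 8 parity-check matrix; it is an assumption for this example and not necessarily the matrix shown on the slide, and the function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

constexpr std::size_t M = 4; // number of parity checks (rows)
constexpr std::size_t N = 8; // code length (columns)
using Matrix = std::array<std::array<int, N>, M>;

// Illustrative parity-check matrix for a C(8,4) code (assumed for this sketch).
constexpr Matrix H = {{
    {0, 1, 0, 1, 1, 0, 0, 1},
    {1, 1, 1, 0, 0, 1, 0, 0},
    {0, 0, 1, 0, 0, 1, 1, 1},
    {1, 0, 0, 1, 1, 0, 1, 0},
}};

// wc: number of 1s in column c.
int column_weight(const Matrix& h, std::size_t c) {
    assert(c < N);
    int w = 0;
    for (std::size_t r = 0; r < M; ++r) w += h[r][c];
    return w;
}

// wr: number of 1s in row r.
int row_weight(const Matrix& h, std::size_t r) {
    assert(r < M);
    int w = 0;
    for (std::size_t c = 0; c < N; ++c) w += h[r][c];
    return w;
}

// Regular: constant column weight wc, constant row weight wr, wr = wc * (n / m).
bool is_regular(const Matrix& h) {
    const int wc = column_weight(h, 0);
    const int wr = row_weight(h, 0);
    for (std::size_t c = 0; c < N; ++c)
        if (column_weight(h, c) != wc) return false;
    for (std::size_t r = 0; r < M; ++r)
        if (row_weight(h, r) != wr) return false;
    return wr * static_cast<int>(M) == wc * static_cast<int>(N);
}

// A word is a codeword iff every parity check sums to 0 modulo 2.
bool is_codeword(const Matrix& h, const std::array<int, N>& word) {
    for (std::size_t r = 0; r < M; ++r) {
        int sum = 0;
        for (std::size_t c = 0; c < N; ++c) sum ^= h[r][c] & word[c];
        if (sum != 0) return false;
    }
    return true;
}
```

For this matrix wc = 2 and wr = 4, so it is regular. At this toy size the weights are not much smaller than n and m; a practical LDPC matrix would be far larger and sparser.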

- 20. Types of Codes: LRC Codes ❏ P1, P2, P3 and P4 are constructed over a standard RS ❏ S1 + S2 + S3 = 0 ❏ No need to store S3 Source: XORing Elephants: Novel Erasure Codes for Big Data

- 21. Types of Codes: LRC Codes Locally-Repairable-Codes (LRC) tackle the repair bandwidth issue of RS codes. They combine multiple layers of RS codes: the local codes and the global codes. The good: ❒ Better repair bandwidth than RS codes, which need to read k fragments to repair a single one. The bad: ❒ These codes are not MDS and they require a higher storage overhead than MDS codes.
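The repair bandwidth gain of a local code can be illustrated with the simplest possible local parity, a plain XOR over a small group of fragments. This is a deliberately simplified sketch and an assumption of this example: it is not the construction from the cited paper, which combines local parities with global RS parities, and the names `local_parity` and `repair_local` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Fragment = std::vector<uint8_t>;

// Byte-wise XOR of a set of equally sized fragments.
Fragment xor_all(const std::vector<Fragment>& frags) {
    assert(!frags.empty());
    Fragment out(frags[0].size(), 0);
    for (const Fragment& f : frags) {
        assert(f.size() == out.size());
        for (std::size_t i = 0; i < out.size(); ++i) out[i] ^= f[i];
    }
    return out;
}

// Local parity of one group: the XOR of its fragments.
Fragment local_parity(const std::vector<Fragment>& group) {
    return xor_all(group);
}

// Rebuild the single missing fragment of a group from the surviving group
// members and the local parity. Only the group is read, not k fragments.
Fragment repair_local(const std::vector<Fragment>& survivors, const Fragment& parity) {
    std::vector<Fragment> inputs = survivors;
    inputs.push_back(parity);
    return xor_all(inputs);
}
```

With 10 data fragments split into two groups of 5, a single lost fragment is rebuilt from 5 reads (4 survivors plus the local parity) instead of the 10 reads a plain RS repair would need, at the price of storing the extra local parities.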

- 22. Types of Codes: Multiplicative FFT

- 23. Types of Codes: Multiplicative FFT

- 24. Types of Codes: Additive FFT

- 25. Types of Codes: FFT Based RS Codes RS codes based on the Fast Fourier Transform (FFT) have a good set of desirable properties. The good: ❒ Relatively simple ❒ O(N log N) (because the FFT is used to speed up the matrix multiplication) ❒ MDS ❒ Fast for large n The bad: ❒ Repair bandwidth: if a fragment is missing, k fragments are needed to recover the data fragments. For systematic codes, we need to download k fragments in any case.
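The O(N log N) claim rests on replacing the Vandermonde matrix product with a fast transform. As a rough illustration of a multiplicative FFT over a prime field, here is a generic radix-2 number theoretic transform modulo the Fermat prime 65537. It is a textbook sketch, not QuadIron's implementation: the choice of field, the primitive root 3 and the function names are assumptions of this example, and inputs are expected to be below 65537.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

constexpr uint64_t P = 65537; // Fermat prime 2^16 + 1
constexpr uint64_t G = 3;     // a primitive root modulo 65537

uint64_t pow_mod(uint64_t b, uint64_t e) {
    uint64_t r = 1;
    b %= P;
    while (e > 0) {
        if (e & 1) r = r * b % P;
        b = b * b % P;
        e >>= 1;
    }
    return r;
}

// In-place iterative radix-2 NTT in O(N log N).
// a.size() must be a power of two no larger than 2^16 (it must divide P - 1).
void ntt(std::vector<uint64_t>& a, bool inverse) {
    const std::size_t n = a.size();
    assert(n >= 1 && n <= 65536 && (n & (n - 1)) == 0);
    // Bit-reversal permutation.
    for (std::size_t i = 1, j = 0; i < n; ++i) {
        std::size_t bit = n >> 1;
        for (; j & bit; bit >>= 1) j ^= bit;
        j ^= bit;
        if (i < j) std::swap(a[i], a[j]);
    }
    // Butterfly passes with a primitive len-th root of unity.
    for (std::size_t len = 2; len <= n; len <<= 1) {
        uint64_t w_len = pow_mod(G, (P - 1) / len);
        if (inverse) w_len = pow_mod(w_len, P - 2);
        for (std::size_t i = 0; i < n; i += len) {
            uint64_t w = 1;
            for (std::size_t j = 0; j < len / 2; ++j) {
                const uint64_t u = a[i + j];
                const uint64_t v = a[i + j + len / 2] * w % P;
                a[i + j] = (u + v) % P;
                a[i + j + len / 2] = (u + P - v) % P;
                w = w * w_len % P;
            }
        }
    }
    if (inverse) {
        const uint64_t n_inv = pow_mod(n % P, P - 2);
        for (uint64_t& x : a) x = x * n_inv % P;
    }
}

// Reference O(N^2) transform: an explicit Vandermonde matrix-vector product.
std::vector<uint64_t> naive_ntt(const std::vector<uint64_t>& a) {
    const std::size_t n = a.size();
    const uint64_t w = pow_mod(G, (P - 1) / n);
    std::vector<uint64_t> out(n, 0);
    for (std::size_t k = 0; k < n; ++k)
        for (std::size_t j = 0; j < n; ++j)
            out[k] = (out[k] + a[j] * pow_mod(w, j * k)) % P;
    return out;
}
```

`ntt(v, false)` produces the same values as `naive_ntt(v)`, and `ntt(v, true)` undoes it. In an RS code the evaluation points are chosen as powers of the root of unity, so that encoding is this transform rather than a dense matrix product.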

- 27. Multiplicative FFT: Horizontal Vectorization

- 28. Multiplicative FFT: Vertical Vectorization

- 29. Multiplicative FFT: Vertical Vectorization

- 30. Multiplicative FFT: Vertical Vectorization

- 31. Multiplicative FFT: Vertical Vectorization

- 32. Speed Comparison ❏ ISA-L: Intel Intelligent Storage Acceleration Library. Matrix-based RS, hardware accelerated: http://01.org/intel-storage-acceleration-library-open-source-version ❏ Wirehair: Fast and Portable Fountain Codes in C. Hybrid LDPC. https://github.com/catid/wirehair ❏ Leopard: MDS Reed-Solomon Erasure Correction Codes for Large Data in C. Additive FFT based. https://github.com/catid/leopard Thanks, Catid!

- 33. Types of Codes: Speed Comparison

- 34. Types of Codes: Speed Comparison

- 35. Types of Codes: Speed Comparison

- 36. Types of Codes: Speed Comparison

- 37. Types of Codes: Speed Comparison

- 38. Types of Codes: Speed Comparison

- 39. Types of Codes: Speed Comparison

- 40. Types of Codes: Speed Comparison

- 42. Application: Decentralized Storage Requirements for an erasure code for a decentralized storage archive: ❒ Simple (e.g. may compile on WASM) ❒ Fast, e.g. for > 24 fragments ❒ MDS: A rock solid contract ❒ Work with all rates, and all combinations of n and k ❒ Systematic for smaller fragments ❒ Non-systematic for larger fragments -> Confidentiality ensured if fragments not stored on same servers (not a threshold scheme though, must be combined with encryption) ❒ Repair-Bandwidth not critical

- 44. Application: Decentralized Storage ❒ Multiple locations, multiple servers per location ❒ Each server is a “QuadIron Provider” ❒ E.g. 10 locations around the globe with 5 servers per location: C(50,35) => can lose 3 locations or 15 servers for an overhead of about 1.4 ❒ A server is just a bunch of disks, e.g. 45 drives ❒ Can have local parities on servers to avoid repairing too often over the network, e.g. C(45,40), an overhead of 1.125 ❒ Total overhead: 1.4 × 1.125 ≈ 1.57 ❒ E.g. with 10TB drives, 22PB raw => 14PB useful ❒ Use blockchain transactions to store the location of blocks ❒ E.g. using Parity, proof-of-work (non-trusted environment) or proof-of-authority (trusted environment => millions of tx/s) ❒ Index the ledgers by block IDs ❒ Use the indexes to locate the blocks ❒ Consolidate indexes
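The capacity figures on this slide follow from multiplying the overhead of the global code across servers by the overhead of the local code across drives. A minimal sketch of that arithmetic, with a hypothetical `Deployment` struct and helper names that are not part of QuadIron or Zenko:

```cpp
#include <cassert>

// Storage overhead n / k of a C(n, k) code.
double overhead(int n, int k) {
    assert(k > 0 && n >= k);
    return static_cast<double>(n) / k;
}

// Hypothetical description of the deployment from the slide.
struct Deployment {
    int locations;
    int servers_per_location;
    int drives_per_server;
    double drive_tb; // capacity of one drive in TB

    int servers() const { return locations * servers_per_location; }
    // Raw capacity in PB (1 PB = 1000 TB).
    double raw_pb() const { return servers() * drives_per_server * drive_tb / 1000.0; }
};

// Usable capacity when a global code over the servers, C(servers, global_k),
// is layered on a local code over each server's drives, C(drives, local_k).
double usable_pb(const Deployment& d, int global_k, int local_k) {
    const double total = overhead(d.servers(), global_k) *
                         overhead(d.drives_per_server, local_k);
    return d.raw_pb() / total;
}
```

With `Deployment{10, 5, 45, 10.0}` the raw capacity is 22.5 PB, and `usable_pb(d, 35, 40)` returns 14 PB, in line with the slide. Without rounding, 50/35 is about 1.43 and the combined overhead is about 1.61; the slide's 1.57 comes from rounding the global overhead down to 1.4.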

- 45. Decentralized Storage: Zenko QuadIron ❏ Multi-cloud data controller ❏ 1 API endpoint, S3 compatible ❏ Native cloud storage ❏ Metadata search across clouds ❏ 100% open source ❏ github.com/scality/zenko ❏ zenko.io ❏ forum.zenko.io ❏ Give us feedback! ❏ Try the sandbox on Orbit! (diagram labels: S3 API; Wasabi, Digital Ocean, etc.)

- 47. Using the Library The C++ library is available at: https://github.com/scality/quadiron License: BSD 3-clause Compiling:

      $ mkdir build
      $ cd build
      $ cmake -G 'Unix Makefiles' ..
      $ make

- 48. Using the Library: Code

      #include <quadiron.h>

      const int word_size = 8;
      const int n_data = 16;
      const int n_parities = 64;
      const size_t pkt_size = 1024;

      // Reed-Solomon code over a Fermat number transform, non-systematic
      quadiron::fec::RsFnt<uint64_t>* fec = new quadiron::fec::RsFnt<uint64_t>(
          quadiron::fec::FecType::NON_SYSTEMATIC,
          word_size, n_data, n_parities, pkt_size);

      // encode (the nullptr entries are placeholders for opened streams)
      std::vector<std::istream*> d_files(fec->n_data, nullptr);
      std::vector<std::ostream*> c_files(fec->n_outputs, nullptr);
      std::vector<quadiron::Properties> c_props(fec->n_outputs);
      fec->encode_packet(d_files, c_files, c_props);

      // decode
      std::vector<std::ostream*> r_files(fec->n_data, nullptr);
      fec->decode_bufs(d_files, c_files, c_props, r_files);