
Supporting Change in Product Lines within the Context of Use Case-driven Development and Testing

- 1. Supporting Change in Product Lines within the Context of Use Case-driven Development and Testing
  - Lionel Briand, SnT Centre for Security, Reliability and Trust, University of Luxembourg, Luxembourg
  - Software Verification & Validation (SVV)

- 2. Context
  - International Electronics & Engineering (IEE) and the Software Verification and Validation Laboratory (SVV)
  - Products: BodySense™, Seat Belt Reminder (SBR), Smart Trunk Opener (STO)

- 3. Research Team
  - Researchers: Ines Hajri (SnT, PhD thesis work), Arda Goknil (SnT), Thierry Stephany (IEE, industry partner)

- 4. Context
  - Automotive domain
  - Product lines
  - Use case-driven development and testing
  - Common RE practices: use case diagrams, use case specifications, domain models

- 5. Common “PLM” Practice
  - Requirements analysts create the STO requirements for each new customer (C1, C2, C3) by copying and modifying the requirements of an earlier customer.
  - Test engineers derive each customer's STO test suite from that customer's requirements. Test suites also evolve from one customer to the next through selecting, prioritizing, and modifying test cases.

- 6. PLM Practice
  - Despite many years of academic research, most companies follow ad hoc PL practices
  - There is a lack of systematic and convenient ways to handle variability in requirements across customers
  - Configuration of product specific (PS) requirements is manual, expensive, and error-prone
  - Regression testing in product families is manual and expensive

- 7. Root Causes?
  - Representations of requirements and variability matter
  - Assumptions about requirements modeling
  - Overhead: variability modeling (e.g., feature modeling), traceability, etc.
  - Lack of adequate, practical tooling that provides benefits
  - Solution: make realistic assumptions and minimize overhead

- 8. Research Questions
  - RQ1: How can variability be modeled in use case and domain models without additional traceability to feature models?
  - RQ2: How can requirements analysts be supported in:
    - making requirements configuration decisions?
    - generating product specific use case and domain models?
    - performing change impact analysis in the use case models of a product family?
  - RQ3: How can system test cases for new products be classified and prioritized effectively?

- 9. Proposed Methodology
  - Model variability in use case and domain models: PL use case diagram, PL use case specifications, PL domain model
  - Interactive configuration of PS use case and domain models
  - Automated configuration of PS use case and domain models: PS use case diagram, PS use case specifications, PS domain model
  - Change impact analysis for configuration decision changes: impact analysis report
  - Incremental reconfiguration of PS models
  - Automated test case classification and prioritization: prioritized test suite for a new product
  - PL: Product Line; PS: Product Specific

- 10. Product Line Use Case Modeling Method (PUM)
  - [Figure: the methodology overview from slide 9, focusing on modeling variability in use case and domain models]

- 11. Related Work in Product Line Use Case-driven Development
  - Relating feature models and use cases [Griss et al., 1998; Eriksson et al., 2009; Buhne et al., 2006]
  - Modeling variability either in use case diagrams or in use case specifications [Azevedo et al., 2012; John and Muthig, 2004; Halmans and Pohl, 2003]
  - These approaches introduce additional modeling and traceability effort in practice

- 12. Objective
  - Model variability in use case models in a practical way by:
    - relying only on artifacts commonly used in use case-driven development
    - enabling automated guidance for product configuration and testing

- 13. Modeling Method: PUM
  1. Model variability in use case diagrams: reuse existing work [Halmans and Pohl, SoSyM’03]
  2. Model variability in use case specifications: introduce new extensions for use case specifications [Yue et al., TOSEM’13]
  3. Model variability in domain models: reuse existing work [Ziadi and Jezequel, SPLC’06]
  - PUM is a modeling method that covers all three artifacts

- 14. Overview of PUM
  1. Elicit product line models: PL use case diagram, PL use case specifications, PL domain model
  2. Check consistency among artifacts, producing a list of inconsistencies
  - Tool support uses UML profiles and natural language processing

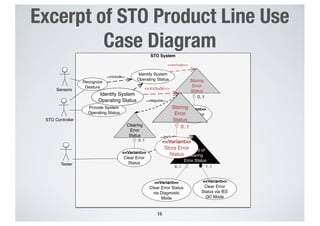

- 15. Excerpt of STO Product Line Use Case Diagram
  - [Figure: actors Sensors, Tester, and STO Controller; use cases Recognize Gesture, Identify System Operating Status, and Provide System Operating Status; variation points Storing Error Status (variant use case Store Error Status, 0..1), Clearing Error Status (variant use case Clear Error Status, 0..1), and Method of Clearing Error Status (1..1; variant use cases Clear Error Status via Diagnostic Mode and Clear Error Status via IEE QC Mode); <<include>> and <<require>> dependencies. Callouts label a variation point and a variant use case.]

- 16. Excerpt of STO Product Line Use Case Diagram
  - [Figure: highlights that the use case Identify System Operating Status includes the variation point Storing Error Status, whose variant use case Store Error Status has multiplicity 0..1]

- 17. Excerpt of STO Product Line Use Case Diagram
  - [Figure: the complete excerpt shown on slide 15]

- 18. Excerpt of STO Product Line Use Case Diagram
  - [Figure: the Tester is associated with the variation point Clearing Error Status (variant use case Clear Error Status, 0..1). Clear Error Status includes the variation point Method of Clearing Error Status (1..1), whose variants are Clear Error Status via Diagnostic Mode and Clear Error Status via IEE QC Mode]

- 19. Excerpt of STO Product Line Use Case Diagram
  - [Figure: highlights the <<require>> dependency between the variation points Storing Error Status (Store Error Status, 0..1) and Clearing Error Status (Clear Error Status, 0..1)]
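
The diagram excerpt on slides 15 to 19 can be read as data. It has variation points that group variant use cases under a multiplicity, one variation point nested inside a variant use case, and a <<require>> dependency. The Python sketch below encodes that reading and checks a candidate product selection against it. Several parts are assumptions: `VariationPoint`, `INCLUDED_BY`, and `violations` are hypothetical names; the multiplicities are as we read them from the extracted slide text; and the <<require>> direction (Storing Error Status requires Clearing Error Status) is taken from the A/B labels on slide 46.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VariationPoint:
    """A variation point grouping variant use cases under a min..max multiplicity."""
    name: str
    variants: tuple[str, ...]
    min_card: int
    max_card: int

    def count(self, selected: set[str]) -> int:
        # Number of this variation point's variants chosen for the product.
        return len(selected & set(self.variants))


# Hypothetical encoding of the STO excerpt (slides 15 to 19).
STORING = VariationPoint("Storing Error Status", ("Store Error Status",), 0, 1)
CLEARING = VariationPoint("Clearing Error Status", ("Clear Error Status",), 0, 1)
METHOD = VariationPoint(
    "Method of Clearing Error Status",
    ("Clear Error Status via Diagnostic Mode", "Clear Error Status via IEE QC Mode"),
    1, 1,
)
VARIATION_POINTS = (STORING, CLEARING, METHOD)

# A variation point included by a variant use case only exists if that variant is selected.
INCLUDED_BY = {METHOD.name: "Clear Error Status"}

# <<require>> between variation points; the direction A -> B is an assumption.
REQUIRES = [(STORING, CLEARING)]


def violations(selected: set[str]) -> list[str]:
    """Return human-readable reasons why `selected` is not a valid product."""
    problems = []
    for vp in VARIATION_POINTS:
        owner = INCLUDED_BY.get(vp.name)
        if owner is not None and owner not in selected:
            continue  # skip: the variation point is not part of this product
        if not vp.min_card <= vp.count(selected) <= vp.max_card:
            problems.append(f"multiplicity {vp.min_card}..{vp.max_card} of {vp.name} violated")
    for a, b in REQUIRES:
        if a.count(selected) > 0 and b.count(selected) == 0:
            problems.append(f"{a.name} requires {b.name}")
    return problems
```

For example, selecting only Store Error Status yields the single reason "Storing Error Status requires Clearing Error Status". Selecting Clear Error Status without choosing a clearing method violates the 1..1 multiplicity of the nested variation point.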

- 20. Modeling Method: PUM
  - [Figure: the PUM overview from slide 14, with step 2 labeled "Check conformance among artifacts"]

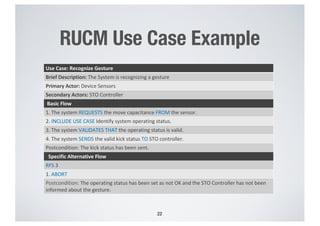

- 21. Restricted Use Case Modeling: RUCM
  - RUCM is a use case modeling approach based on:
    - a use case template
    - a set of well-defined restriction rules and keywords
  - [Yue et al., TOSEM’13]

- 22. RUCM Use Case Example
  - Use Case: Recognize Gesture
  - Brief Description: The system recognizes a gesture.
  - Primary Actor: Device Sensors
  - Secondary Actors: STO Controller
  - Basic Flow: 1. The system REQUESTS the move capacitance FROM the sensor. 2. INCLUDE USE CASE Identify system operating status. 3. The system VALIDATES THAT the operating status is valid. 4. The system SENDS the valid kick status TO STO controller. Postcondition: The kick status has been sent.
  - Specific Alternative Flow (RFS 3): 1. ABORT. Postcondition: The operating status has been set as not OK and the STO Controller has not been informed about the gesture.
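
RUCM's restricted keywords make steps like those above machine-readable. As a minimal sketch, the Python snippet below uses regular expressions to recognize the handful of keywords that appear in the Recognize Gesture example. The pattern list and the `classify_step` function are our own illustration. They cover only these keywords, not the full RUCM rule set [Yue et al., TOSEM’13].

```python
import re

# Only the keywords used in the Recognize Gesture example; RUCM defines more.
RUCM_PATTERNS = [
    ("INCLUDE USE CASE", re.compile(r"INCLUDE USE CASE\s+(?P<use_case>.+?)\.?")),
    ("VALIDATES THAT", re.compile(r"(?P<actor>.+?)\s+VALIDATES THAT\s+(?P<condition>.+?)\.?")),
    ("REQUESTS ... FROM",
     re.compile(r"(?P<actor>.+?)\s+REQUESTS\s+(?P<data>.+?)\s+FROM\s+(?P<source>.+?)\.?")),
    ("SENDS ... TO",
     re.compile(r"(?P<actor>.+?)\s+SENDS\s+(?P<data>.+?)\s+TO\s+(?P<target>.+?)\.?")),
    ("ABORT", re.compile(r"ABORT\.?")),
]


def classify_step(step: str) -> tuple[str, dict[str, str]]:
    """Return the RUCM keyword a step uses and the phrases bound around it."""
    for keyword, pattern in RUCM_PATTERNS:
        match = pattern.fullmatch(step.strip())
        if match:
            return keyword, match.groupdict()
    return "NO KEYWORD", {}
```

Calling `classify_step("The system REQUESTS the move capacitance FROM the sensor.")` returns the keyword `"REQUESTS ... FROM"` together with the actor, the data, and the source phrases. Steps that use none of the listed keywords are reported as `"NO KEYWORD"`.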

- 23. RUCM Extension (1)
  - Keyword: INCLUDE VARIATION POINT: ...
  - Variation points can be included in the basic or alternative flows of use cases
  - Example, Use Case: Identify system operating status
    - Basic Flow: 1. The system VALIDATES THAT the ROM is valid. 2. The system VALIDATES THAT the RAM is valid. 3. The system VALIDATES THAT the sensors are valid. 4. The system VALIDATES THAT there is no error detected.
    - Specific Alternative Flow (RFS 4): 1. INCLUDE VARIATION POINT: Storing error status. 2. ABORT.

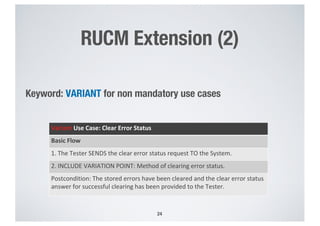

- 24. RUCM Extension (2)
  - Keyword: VARIANT, for non-mandatory use cases
  - Example, Variant Use Case: Clear Error Status
    - Basic Flow: 1. The Tester SENDS the clear error status request TO the System. 2. INCLUDE VARIATION POINT: Method of clearing error status.
    - Postcondition: The stored errors have been cleared and the clear error status answer for successful clearing has been provided to the Tester.

- 25. RUCM Extension (3)
  - Keyword: OPTIONAL, for non-mandatory steps and non-mandatory alternative flows
  - Example, Variant Use Case: Provide System User Data via Standard Mode
    - Basic Flow: 1. OPTIONAL STEP: The system VALIDATES THAT the “switch off communication” feature is disabled. 2. OPTIONAL STEP: The system SENDS calibration data TO the Tester. 3. OPTIONAL STEP: The system SENDS trace data TO the Tester. 4. OPTIONAL STEP: The system SENDS error data TO the Tester. 5. OPTIONAL STEP: The system SENDS sensor data TO the Tester.
    - OPTIONAL Specific Alternative Flow (RFS 1): 1. ABORT. Postcondition: The switch off communication feature has been enabled.

- 26. RUCM Extension (4)
  - Keyword: V, for a variant order of steps
  - Example, Variant Use Case: Provide System User Data via Standard Mode
    - Basic Flow: 1. OPTIONAL STEP: The system VALIDATES THAT the “switch off communication” feature is disabled. V1. OPTIONAL STEP: The system SENDS calibration data TO the Tester. V2. OPTIONAL STEP: The system SENDS trace data TO the Tester. V3. OPTIONAL STEP: The system SENDS error data TO the Tester. V4. OPTIONAL STEP: The system SENDS sensor data TO the Tester.
    - OPTIONAL Specific Alternative Flow (RFS 1): 1. ABORT. Postcondition: The switch off communication feature has been enabled.
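
The four extensions are lexical, so a tool can recover the variability information of each step from its label and prefix. The Python sketch below does this for the keywords on slides 23 to 26. `PLStep`, `parse_step`, and `is_variant_use_case` are hypothetical names, not part of any published tool. Alternative-flow headers such as `OPTIONAL Specific Alternative Flow` are not handled.

```python
import re
from dataclasses import dataclass
from typing import Optional

OPTIONAL_PREFIX = "OPTIONAL STEP:"
VP_PREFIX = "INCLUDE VARIATION POINT:"
STEP_RE = re.compile(r"(?P<label>V?\d+)\.\s+(?P<body>.+)")


@dataclass
class PLStep:
    label: str                      # "1", "V2", ... as written in the specification
    text: str                       # step text without the OPTIONAL STEP: prefix
    optional: bool                  # OPTIONAL STEP: may be dropped for a product
    variant_order: bool             # V-label: position may vary per product
    variation_point: Optional[str]  # target of INCLUDE VARIATION POINT:, if any


def is_variant_use_case(header: str) -> bool:
    # VARIANT marks a non-mandatory use case in its specification header.
    return header.strip().startswith("Variant Use Case:")


def parse_step(line: str) -> PLStep:
    match = STEP_RE.fullmatch(line.strip())
    if match is None:
        raise ValueError(f"not a numbered step: {line!r}")
    label, body = match["label"], match["body"]
    optional = body.startswith(OPTIONAL_PREFIX)
    if optional:
        body = body[len(OPTIONAL_PREFIX):].strip()
    vp = None
    if body.startswith(VP_PREFIX):
        vp = body[len(VP_PREFIX):].strip().rstrip(".")
    return PLStep(label, body, optional, label.startswith("V"), vp)
```

For example, `parse_step("V2. OPTIONAL STEP: The system SENDS trace data TO the Tester.")` yields a step that is both optional and variant-ordered. The step "2. INCLUDE VARIATION POINT: Method of clearing error status." yields a mandatory step whose `variation_point` is "Method of clearing error status".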

- 27. Modeling Method: PUM
  - [Figure: the PUM overview from slide 14]

- 28. Excerpt of STO Product Line Domain Model
  - <<Variation>> Request (code: integer, name: Boolean, response: ResponseType)
  - <<Variant>> ClearErrorStatusRequest
  - <<Variation>> ProvideSystemUserDataRequest, with StandardModeProvideDataReq, QCModeProvideDataReq, and <<Variant>> DiagnosticModeProvideDataReq
  - <<Optional>> VoltageDiagnostic (guardACVoltage: integer, guardCurrent: integer)
  - SmartTrunkOpener (operatingStatus: Boolean, overuseCounter: integer)
  - Kick (isValid: Boolean, moveAmplitude: integer, moveDuration: integer, moveVelocity: integer)
  - [Ziadi and Jezequel, SPLC’06]
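
One plausible way to derive a product-specific domain model from these stereotypes is to remove the <<Variant>> and <<Optional>> classes that a product does not select. The Python sketch below applies that idea to the classes of slide 28. The generalization links (`parent`) are hypothetical, because the extracted slide does not preserve the associations. `DomainClass` and `derive_ps_model` are illustrative names, not the tool's API.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class DomainClass:
    name: str
    stereotype: Optional[str] = None  # "Variation", "Variant", "Optional", or None
    attributes: tuple[str, ...] = ()
    parent: Optional[str] = None      # hypothetical generalization link


PL_DOMAIN_MODEL = [
    DomainClass("Request", "Variation", ("code: integer", "name: Boolean", "response: ResponseType")),
    DomainClass("ClearErrorStatusRequest", "Variant", parent="Request"),
    DomainClass("ProvideSystemUserDataRequest", "Variation", parent="Request"),
    DomainClass("StandardModeProvideDataReq", parent="ProvideSystemUserDataRequest"),
    DomainClass("QCModeProvideDataReq", parent="ProvideSystemUserDataRequest"),
    DomainClass("DiagnosticModeProvideDataReq", "Variant", parent="ProvideSystemUserDataRequest"),
    DomainClass("VoltageDiagnostic", "Optional", ("guardACVoltage: integer", "guardCurrent: integer")),
    DomainClass("SmartTrunkOpener", None, ("operatingStatus: Boolean", "overuseCounter: integer")),
    DomainClass("Kick", None, ("isValid: Boolean", "moveAmplitude: integer",
                               "moveDuration: integer", "moveVelocity: integer")),
]


def derive_ps_model(pl_model: list[DomainClass], selected: set[str]) -> list[DomainClass]:
    """Keep mandatory classes and the selected variant/optional ones."""
    kept = {c.name: c for c in pl_model
            if c.stereotype not in ("Variant", "Optional") or c.name in selected}
    # A class whose parent was removed cannot stay; repeat until nothing changes.
    changed = True
    while changed:
        orphans = [n for n, c in kept.items() if c.parent is not None and c.parent not in kept]
        for n in orphans:
            del kept[n]
        changed = bool(orphans)
    # Variability stereotypes carry no meaning in a product-specific model.
    return [replace(c, stereotype=None) for c in kept.values()]
```

For example, selecting only ClearErrorStatusRequest keeps seven classes and drops DiagnosticModeProvideDataReq and VoltageDiagnostic.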

- 29. Modeling Method: PUM
  - [Figure: the PUM overview from slide 14]

- 30. Natural Language Processing
  - NLP annotates the elements of the Clear Error Status specification from slide 24:
    - Variant Use Case: "Variant Use Case: Clear Error Status"
    - Input Step: "1. The Tester SENDS the clear error status request TO the System."
    - Variation Point: "2. INCLUDE VARIATION POINT: Method of clearing error status."
    - Post Condition: "The stored errors have been cleared and the clear error status answer for successful clearing has been provided to the Tester."

- 31. Consistency Checks
  - The tool for our modeling method checks:
    - conformance of the use case specifications with the extended RUCM template
    - consistency between the use case diagram and the use case specifications
    - consistency between the use case specifications and the domain model
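
The diagram-versus-specification check compares the two artifacts by name. The Python sketch below illustrates it under simplifying assumptions of our own. First, each artifact has already been reduced (for instance by the NLP step on slide 30) to a use case name, a variant flag, and the set of variation points it includes. Second, names are compared case-insensitively, because the specifications on slides 23 and 24 write "Storing error status" where the diagram writes "Storing Error Status". `UseCaseInfo` and `check_consistency` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class UseCaseInfo:
    name: str
    is_variant: bool  # <<Variant>> in the diagram, or a "Variant Use Case:" header
    included_vps: set[str] = field(default_factory=set)


def _norm(name: str) -> str:
    # Compare names case-insensitively and ignore extra whitespace.
    return " ".join(name.casefold().split())


def check_consistency(diagram: list[UseCaseInfo], specs: list[UseCaseInfo]) -> list[str]:
    """Report mismatches between the use case diagram and the specifications."""
    in_diagram = {_norm(u.name): u for u in diagram}
    in_specs = {_norm(s.name): s for s in specs}
    issues = []
    for key, spec in in_specs.items():
        uc = in_diagram.get(key)
        if uc is None:
            issues.append(f"{spec.name}: specified but missing from the diagram")
            continue
        if uc.is_variant != spec.is_variant:
            issues.append(f"{spec.name}: <<Variant>> stereotype and VARIANT keyword disagree")
        diagram_vps = {_norm(v) for v in uc.included_vps}
        spec_vps = {_norm(v) for v in spec.included_vps}
        for vp in sorted(spec_vps - diagram_vps):
            issues.append(f"{spec.name}: includes variation point '{vp}' not shown in the diagram")
        for vp in sorted(diagram_vps - spec_vps):
            issues.append(f"{spec.name}: diagram includes '{vp}' but the specification does not")
    for key in sorted(in_diagram.keys() - in_specs.keys()):
        issues.append(f"{in_diagram[key].name}: in the diagram but has no specification")
    return issues
```

An empty list means no mismatch was found for the properties this sketch models. The real checks also cover template conformance and the domain model, which are not modeled here.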

- 32. Use Case Models Consistency Example
  - [Figure: a use case diagram and the corresponding use case specifications]

- 33. Evaluation
  - We evaluate our modeling method in terms of adoption effort, expressiveness, and tool support through a questionnaire study and a case study
  - We presented 1) our modeling method, 2) detailed examples from STO, and 3) a tool demo
  - Participants were also encouraged to provide open, written comments

- 34. Model Sizes

  | Use cases | # of use cases | # of variation points | # of basic flows | # of alternative flows | # of steps | # of condition steps |
  |---|---|---|---|---|---|---|
  | Essential | 15 | 5 | 15 | 70 | 269 | 75 |
  | Variant | 14 | 3 | 14 | 132 | 479 | 140 |
  | Total | 29 | 8 | 29 | 202 | 748 | 215 |

  | Domain model | # of classes |
  |---|---|
  | Essential | 42 |
  | Variant | 12 |
  | Total | 54 |

- 35. Participants
  - Interviews with seven participants who:
    - hold various roles at IEE: software development and process manager, software engineer, and system engineer
    - have substantial industry experience, ranging from seven to thirty years
    - have previous experience with use case-driven development and modeling

- 36. Example Questions from the Questionnaire
  - "Do you think that our modeling method provides useful assistance for capturing and analyzing variability?"
  - "Would you see added value in adopting our modeling method?"
  - Answer scale: Very probably, Probably, Probably not, Surely not [charts of response counts]

- 37. Questionnaire Results: Positive Aspects
  - The extensions:
    - are simple enough to facilitate communication between analysts and customers
    - provide enough expressiveness to conveniently capture variability
  - The effort required to learn PUM is reasonable
  - The tool provides useful assistance in minimizing inconsistencies in artifacts

- 38. Automated Configuration
  - [Figure: the methodology overview from slide 9, focusing on the configuration of PS use case and domain models]

- 39. Related Work
  - Relating feature models to use case artifacts [Eriksson et al., 2009; Czarnecki et al., 2005; Alferez et al., 2009]
  - Using a new artifact, the decision model [John and Muthig, 2004; Faulk, 2001]

- 40. Limitations of Existing Configurators
  - Most configurators rely on feature models and require variability to be expressed in a generic notation or language
  - Generic configurators require considerable effort and tool-specific internal knowledge to be customized for use case models
  - Most use case configurators do not provide any automated decision-making support, for example, automated detection and explanation of contradicting decisions

- 41. Overview of Configuration Approach
  - Inputs: PL use case diagram, PL use case specifications, PL domain model
  1. Elicitation of configuration decisions with consistency checking. If the decisions are not consistent and complete, the result is a list of contradicting decisions.
  2. Generation of product specific use case and domain models (PS use case diagram, PS use case specifications, PS domain model), once the decisions are consistent and complete

- 42. Elicitation of Decisions with Consistency Checking
  - [Figure: a loop over the list of variation points (VP1, VP2, VP3): filtering VPs, collecting a decision for a VP, and checking decision consistency. If the decisions are consistent, elicitation continues. If not, a list of contradicting decisions is produced.]

- 43. Filtering and Ordering Example
  - [Figure: the Clearing Error Status excerpt: the Tester; the variant use case Clear Error Status (0..1); and the variation point Method of Clearing Error Status (1..1) that it includes, with variants Clear Error Status via Diagnostic Mode and Clear Error Status via IEE QC Mode]

- 44. Checking Decisions Consistency
  - [Figure: the decision elicitation loop from slide 42, highlighting the step Checking Decision Consistency]

- 45. Checking Decisions Consistency Example (1)
  - [Figure: the STO product line use case diagram excerpt from slide 15]

- 46. Checking Decisions Consistency Example (2)
  - [Figure: variation point A, Storing Error Status (variant use case Store Error Status, 0..1), and variation point B, Clearing Error Status (variant use case Clear Error Status, 0..1), related by <<require>>. Decisions D1 and D2 are made for A and B respectively.]

- 47. Checking Decisions Consistency Example (3)
  - Variability relations and multiplicities are mapped to a set of propositional logic formulas
  - In our example, "A requires B" becomes "A implies B"
  - We infer the root cause of a given conflict by tracing it back to the initial elements from which the propositional formulas were derived
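
The mapping can be illustrated on the slide 46 example. In the Python sketch below, each variant use case is a Boolean variable. A variation point counts as used when at least one of its variants is selected, so "A requires B" becomes "some variant of A implies some variant of B", and each decision becomes a unit constraint. For illustration we assume that A is Storing Error Status, B is Clearing Error Status, D1 selects Store Error Status, and D2 leaves Clear Error Status unselected. Two parts are our own stand-ins, suited to a small example: satisfiability is checked by brute-force enumeration, and the root cause is approximated by a deletion-based minimal unsatisfiable subset. The slides do not describe the tool's actual solver, and all names are hypothetical.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable

Assignment = dict[str, bool]


@dataclass
class Constraint:
    origin: str                          # diagram element or decision the formula came from
    holds: Callable[[Assignment], bool]  # the propositional formula


def multiplicity(origin: str, variants: list[str], lo: int, hi: int) -> Constraint:
    return Constraint(origin, lambda a: lo <= sum(a[v] for v in variants) <= hi)


def requires(origin: str, a_variants: list[str], b_variants: list[str]) -> Constraint:
    # A implies B, where a variation point holds if any of its variants is selected.
    return Constraint(origin, lambda a: not any(a[v] for v in a_variants)
                      or any(a[v] for v in b_variants))


def decision(origin: str, variant: str, selected: bool) -> Constraint:
    return Constraint(origin, lambda a: a[variant] == selected)


def satisfiable(variables: list[str], constraints: list[Constraint]) -> bool:
    for values in product([False, True], repeat=len(variables)):
        assignment = dict(zip(variables, values))
        if all(c.holds(assignment) for c in constraints):
            return True
    return False


def root_cause(variables: list[str], constraints: list[Constraint]) -> list[str]:
    """Shrink an unsatisfiable constraint set to a minimal conflicting subset."""
    if satisfiable(variables, constraints):
        raise ValueError("the decisions are consistent")
    core = list(constraints)
    for c in list(core):
        rest = [d for d in core if d is not c]
        if not satisfiable(variables, rest):
            core = rest  # c is not needed to explain the conflict
    return [c.origin for c in core]


VARIABLES = ["Store Error Status", "Clear Error Status"]
CONSTRAINTS = [
    multiplicity("Storing Error Status 0..1", ["Store Error Status"], 0, 1),
    multiplicity("Clearing Error Status 0..1", ["Clear Error Status"], 0, 1),
    requires("Storing Error Status requires Clearing Error Status",
             ["Store Error Status"], ["Clear Error Status"]),
    decision("D1: select Store Error Status", "Store Error Status", True),
    decision("D2: unselect Clear Error Status", "Clear Error Status", False),
]
```

Here `root_cause(VARIABLES, CONSTRAINTS)` keeps exactly three origins: the require relation, D1, and D2. Together these explain the contradiction, while both multiplicities are irrelevant to it. Brute force is exponential in the number of variant use cases, so a realistic tool would use a SAT or constraint solver instead.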

- 48. Generation of Product Specific Use Case and Domain Models
  - [Figure: the configuration approach overview from slide 41, focusing on step 2]

- 49. Generation of PS Use Case Diagram and Specifications
  - PL model: an Actor is associated with use case UC, which includes variation point X (1..n) with variant use cases UC1 … UCn. UC's basic flow contains the step INCLUDE VARIATION POINT X and an OPTIONAL STEP.
  - Decision: select UC1 and UCn; select no optional step.
  - PS model: UC includes UC1 and UCn directly. In the basic flow, INCLUDE VARIATION POINT X is replaced by a step that VALIDATES THAT the precondition of UC1 holds, followed by INCLUDE UC1. A specific alternative flow, referring to that validation step, includes UCn. The unselected optional step is removed.
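
A simplified version of this transformation for a single flow is sketched below in Python. It drops unselected OPTIONAL STEPs, strips the keyword from the kept ones, replaces INCLUDE VARIATION POINT with one INCLUDE USE CASE step per selected variant, and renumbers the result. Unlike the transformation on the slide, it does not add the VALIDATES THAT precondition step and the specific alternative flow that are generated when several variants are selected. It also ignores V-ordered steps. `generate_ps_flow` is a hypothetical name.

```python
OPTIONAL_PREFIX = "OPTIONAL STEP:"
VP_PREFIX = "INCLUDE VARIATION POINT:"


def generate_ps_flow(
    pl_flow: list[tuple[str, str]],
    vp_decisions: dict[str, list[str]],
    kept_optional: set[str],
) -> list[str]:
    """Turn a PL flow of (label, text) pairs into a numbered PS flow.

    vp_decisions maps a variation point name to the variant use cases selected for it;
    kept_optional holds the labels of the optional steps selected for the product.
    """
    ps_steps = []
    for label, text in pl_flow:
        if text.startswith(OPTIONAL_PREFIX):
            if label not in kept_optional:
                continue  # optional step not selected for this product
            text = text[len(OPTIONAL_PREFIX):].strip()
        if text.startswith(VP_PREFIX):
            vp = text[len(VP_PREFIX):].strip().rstrip(".")
            ps_steps.extend(f"INCLUDE USE CASE {uc}." for uc in vp_decisions.get(vp, []))
            continue
        ps_steps.append(text)
    return [f"{i}. {step}" for i, step in enumerate(ps_steps, start=1)]
```

For the Clear Error Status specification of slide 24, selecting Clear Error Status via Diagnostic Mode turns step 2 into "INCLUDE USE CASE Clear Error Status via Diagnostic Mode.".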

- 50. Evaluation
  - Is our configuration approach practical and beneficial for configuring PS models in industrial settings?

- 51. Example Questions from the Questionnaire
  - "Do you think that the configurator provides useful assistance for identifying and resolving inconsistent decisions in PL use case diagrams?"
  - "Would you see added value in adopting our configurator?"
  - Answer scale: Very probably, Probably, Probably not, Surely not [charts of response counts]

- 52. Questionnaire Results
  - The configurator:
    - provides useful assistance for configuring PS use case models compared to IEE's current practice
    - facilitates communication between analysts and stakeholders during configuration
  - The effort required to learn and apply our configurator is reasonable

- 53. Change Impact Analysis for Configuration Decision Changes
  - [Figure: the methodology overview from slide 9, focusing on change impact analysis for configuration decision changes]

- 54. Related Work
  - Impact analysis approaches for product lines using feature models [Thüm et al., 2009; Seidl et al., 2012; Dintzner et al., 2014]
  - Reasoning approaches for product lines [Benavides et al., 2010; Durán et al., 2017; White et al., 2008, 2010]

- 55. Main Limitation of Existing Work
  - Existing approaches identify only the impacted decisions and do not explain the cause of the impact of decision changes

- 56. Motivation
  - Identify the cause of the impact of changing decisions for PL use case diagrams:
    - violation of dependency relations (i.e., requires and conflicts)
    - unsatisfiability of cardinality constraints of variation points
    - restrictions on subsequent decisions
  - Improve the decision-making process by informing analysts about the causes of change impacts on configuration decisions

- 57. Overview of Change Impact Analysis Approach
  1. Propose a change for a decision
  2. Identify the change impact on other decisions, producing the list of impacted decisions; the analyst is asked "Do you want to apply the proposed change?"
  3. If yes, apply the proposed change, producing the added, removed, or updated decisions
  - The analyst is then asked "Do you want to propose a change for any other decision?"; if yes, the process starts again

- 58. Overview of Change Impact Analysis Approach
  - [Figure: the process from slide 57, highlighting step 2, Identify the Change Impact on Other Decisions]

- 59. Identification of Change Impact on Other Decisions
  - Step 1: check contradictions with prior decisions
  - Step 2: infer restrictions on subsequent decisions → this delimits the future selection of variant use cases in still-undecided variation points
  - Step 3: check whether the inferred restrictions introduce new contradictions

- 60. Inferring Decision Restrictions for Subsequent Decisions
  - Proposed decision change for VP1: select UC1 and unselect UC2
  - Inferred (future) restrictions, by (recursive) traversal of dependencies:
    - UC3 must be selected
    - UC5 must not be selected
    - UC7 must not be selected
  - → Contradiction: the cardinality of VP3 cannot be satisfied
  - [Figure: variation points VP1 (UC1, UC2), VP2 (1..1; UC3, UC4), and VP3 (2..3; UC5, UC6, UC7), connected by <<require>> and <<conflict>> dependencies]
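
The recursive traversal can be sketched in Python as a worklist over the dependency graph. The extracted slide does not preserve which use cases the arrows connect. The edges below (UC1 requires UC3, UC3 conflicts with UC5, UC7 requires UC2) and the 0..1 multiplicity for VP1 are therefore hypothetical, chosen so that they reproduce the restrictions listed on the slide. VP2 (1..1) and VP3 (2..3) use the slide's multiplicities. All function names are illustrative.

```python
from collections import deque

# Hypothetical reconstruction of the slide 60 example.
VARIATION_POINTS = {
    "VP1": (("UC1", "UC2"), 0, 1),
    "VP2": (("UC3", "UC4"), 1, 1),
    "VP3": (("UC5", "UC6", "UC7"), 2, 3),
}
REQUIRES = [("UC1", "UC3"), ("UC7", "UC2")]
CONFLICTS = [("UC3", "UC5")]


def infer_restrictions(selected: set[str], unselected: set[str]) -> tuple[set[str], set[str]]:
    """Propagate a decision change through requires/conflict relations."""
    must, must_not = set(selected), set(unselected)
    worklist = deque([(uc, True) for uc in selected] + [(uc, False) for uc in unselected])
    while worklist:
        uc, is_selected = worklist.popleft()
        if is_selected:
            implied = [(b, True) for a, b in REQUIRES if a == uc]
            implied += [(b, False) for a, b in CONFLICTS if a == uc]
            implied += [(a, False) for a, b in CONFLICTS if b == uc]
        else:
            # Whatever requires an unselected use case cannot be selected either.
            implied = [(a, False) for a, b in REQUIRES if b == uc]
        for other, value in implied:
            target = must if value else must_not
            if other not in target:
                target.add(other)
                worklist.append((other, value))
    return must, must_not


def unsatisfiable_cardinalities(must: set[str], must_not: set[str]) -> list[str]:
    """Variation points whose multiplicity can no longer be met."""
    broken = []
    for vp, (variants, lo, hi) in VARIATION_POINTS.items():
        forced = sum(v in must for v in variants)
        still_possible = sum(v not in must_not for v in variants)
        if forced > hi or still_possible < lo:
            broken.append(vp)
    return broken
```

With the change from the slide, `infer_restrictions({"UC1"}, {"UC2"})` forces UC3 and forbids UC5 and UC7. This leaves only UC6 available in VP3, so `unsatisfiable_cardinalities` reports `["VP3"]`. A non-empty intersection of `must` and `must_not` would signal a direct contradiction. This sketch does not check for that case.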

- 61. Generation of Impact Report
  - [Figure: the slide 60 diagram, color-coded to show the impacted decisions, i.e., the subsequent decisions for VP2 and VP3]
  - The color legend (violet, brown, red, orange, green) distinguishes five categories:
    - cardinality constraints that can no longer be satisfied (violet)
    - variant use cases that are selected in the decision change
    - variant use cases that are unselected in the decision change
    - variant use cases that must be selected due to a requires relation and the decision change
    - variant use cases that must be unselected due to a conflict relation and the decision change

- 62. Research Question
  - Does our approach support engineers in identifying the impact of decision changes?

- 63. Example Questions from the Questionnaire
  - "Do you think that the steps in our change impact analysis method are easy to follow, given appropriate training?"
  - "Do you think that the effort required to learn how to apply the change impact analysis method is reasonable?"
  - Answer scale: Strongly agree, Agree, Disagree, Strongly disagree [charts of response counts]

- 64. Questionnaire Results
  - Our approach is sufficient to determine and explain the impact of decision changes for PL use case diagrams
  - Participants strongly agreed about the value of adopting our change impact analysis approach
  - Very positive feedback about the approach and about the impact analysis reports provided by the tool

- 65. Incremental Reconfiguration of PS Models
  - [Figure: the methodology overview from slide 9, focusing on the incremental reconfiguration of PS models]

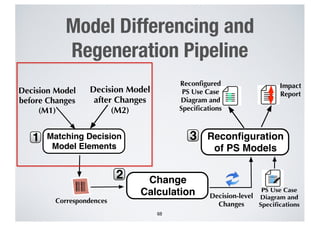

- 66. Motivation
  - Analysts manually assign traces from the generated PS models to other external documents
  - Each time a configuration decision changes, analysts must manually reassign all the traces
  - We aim to reduce the effort of manually assigning traceability links between PS models and external documents
  - Approach: incrementally regenerate PS use case models by focusing only on impacted decisions

- 67. Model Differencing and Regeneration Pipeline • (1) Matching Decision Model Elements: takes the decision model before changes (M1) and the decision model after changes (M2) and produces correspondences • (2) Change Calculation: derives decision-level changes from the correspondences • (3) Reconfiguration of PS Models: takes the PS use case diagram and specifications and produces the reconfigured PS use case diagram and specifications, together with an impact report


- 69. Decision Model Example (object diagram excerpt):
  - :DecisionModel
  - :EssentialUseCase, name = “Provide System User Data”
  - :MandatoryVariationPoint, name = “Method of Providing Data”
  - three :VariantUseCase objects, each with isSelected = True: “Provide System User Data via Standard Mode”, “Provide System User Data via Diagnostic Mode”, and “Provide System User Data via IEE QC Mode”
  - :BasicFlow, number = 1
  - :VariantOrder, name = “V”
  - three :OptionalStep objects: (orderNumber = 4, variantOrderNumber = 1, isSelected = True), (orderNumber = 1, variantOrderNumber = 2, isSelected = True), and (orderNumber = 0, variantOrderNumber = 3, isSelected = False)
  - association ends: usecases, variationpoint, variants

- 70. Matching Decision Model Elements • The structural differencing of M1 and M2 is done by searching for correspondences between M1 and M2 • A correspondence between two elements E1 and E2 denotes that E1 and E2 represent decisions for the same variation in M1 and M2

- 71. Matching Decision Model Elements Example: elements are matched using the triplet (use case, flow, step).
  - Excerpt of decision model M1 (before the change): B11:VariantUseCase (name = “Provide System User Data via Standard Mode”, isSelected = True); B12:BasicFlow (number = 1); B14:OptionalStep (orderNumber = 0, variantOrderNumber = 2, isSelected = False)
  - Excerpt of decision model M2 (after the change): C11:VariantUseCase (name = “Provide System User Data via Standard Mode”, isSelected = True); C12:BasicFlow (number = 1); C14:OptionalStep (orderNumber = 1, variantOrderNumber = 2, isSelected = True); C9:VariantUseCase (name = “Provide System User Data via Diagnostic Mode”, isSelected = True)
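Slide 71 labels the matching key as the triplet (use case, flow, step). The Python sketch below illustrates triplet-based matching for optional-step decisions under stated assumptions. The StepDecision record and the match function are hypothetical. We also assume that the step component of the triplet is variantOrderNumber, because that value is the same for B14 and C14 (2) while orderNumber changes.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Hypothetical, simplified record for an optional-step decision.
@dataclass(frozen=True)
class StepDecision:
    element_id: str            # e.g. "B14" in M1, "C14" in M2
    use_case: str              # name of the enclosing use case
    flow: int                  # flow number (1 = basic flow)
    variant_order_number: int  # ASSUMPTION: identifies the step in the PL flow
    order_number: int          # position in the product flow (0 when unselected in the examples)
    is_selected: bool

Key = Tuple[str, int, int]

def triplet(d: StepDecision) -> Key:
    """Matching key: (use case, flow, step)."""
    return (d.use_case, d.flow, d.variant_order_number)

def match(m1: List[StepDecision], m2: List[StepDecision]) -> Dict[str, Optional[str]]:
    """Map each M1 element id to the id of its corresponding M2 element, or None."""
    index = {triplet(d): d.element_id for d in m2}
    return {d.element_id: index.get(triplet(d)) for d in m1}

UC = "Provide System User Data via Standard Mode"
m1 = [StepDecision("B14", UC, 1, 2, 0, False)]
m2 = [StepDecision("C14", UC, 1, 2, 1, True)]
print(match(m1, m2))  # {'B14': 'C14'}
```

Some M2 elements, such as C9, have no M1 element mapped to them. Such elements have no correspondence, and a later change calculation can treat them as additions.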



- 74. Change Calculation • Identifies decision-level changes from the corresponding model elements • Identifies deleted, added, and updated decisions for the use case diagram and specifications

- 75. Change Calculation Example, comparing excerpts of decision model M1 (before the change) and M2 (after the change):
  - B11 / C11:VariantUseCase “Provide System User Data via Standard Mode”: isSelected = True in both
  - B12 / C12:BasicFlow: number = 1 in both
  - B14 / C14:OptionalStep: orderNumber = 0 and isSelected = False in M1; orderNumber = 1 and isSelected = True in M2; variantOrderNumber = 2 in both
  - C9:VariantUseCase “Provide System User Data via Diagnostic Mode” (isSelected = True) appears only in the M2 excerpt
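Once correspondences are known, decision-level changes follow from set differences and attribute comparison. The Python sketch below rests on our own assumptions. Each decision is keyed by a hypothetical matching key: a use case name, or a (use case, flow, step) triplet for optional steps. Keys found only in M2 are added, keys found only in M1 are deleted, and matched keys whose attribute values differ are updated. Treating C9 as added is our reading of the slide-75 excerpt.

```python
from collections.abc import Hashable
from typing import Dict, List, Tuple

Attrs = Dict[str, object]
Diff = Dict[str, Tuple[object, object]]

def calculate_changes(m1: Dict[Hashable, Attrs], m2: Dict[Hashable, Attrs]
                      ) -> Tuple[List[Hashable], List[Hashable], List[Tuple[Hashable, Diff]]]:
    """Return (added, deleted, updated) decisions between M1 and M2."""
    added = sorted((k for k in m2 if k not in m1), key=str)
    deleted = sorted((k for k in m1 if k not in m2), key=str)
    updated: List[Tuple[Hashable, Diff]] = []
    for k in sorted(m1.keys() & m2.keys(), key=str):
        # Keep only the attributes whose values differ between M1 and M2.
        diff = {attr: (m1[k].get(attr), m2[k].get(attr))
                for attr in sorted(m1[k].keys() | m2[k].keys())
                if m1[k].get(attr) != m2[k].get(attr)}
        if diff:
            updated.append((k, diff))
    return added, deleted, updated

STD = "Provide System User Data via Standard Mode"
DIAG = "Provide System User Data via Diagnostic Mode"
# Hypothetical keys; attribute values copied from the slide-75 excerpt.
m1 = {
    ("use case", STD): {"isSelected": True},                       # B11
    ("step", STD, 1, 2): {"orderNumber": 0, "isSelected": False},  # B14
}
m2 = {
    ("use case", STD): {"isSelected": True},                       # C11
    ("step", STD, 1, 2): {"orderNumber": 1, "isSelected": True},   # C14
    ("use case", DIAG): {"isSelected": True},                      # C9
}
added, deleted, updated = calculate_changes(m1, m2)
print(added)  # [('use case', 'Provide System User Data via Diagnostic Mode')]
print(updated)
```

The B11/C11 pair produces no change because its attributes are identical. B14/C14 is reported as updated on both orderNumber and isSelected.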

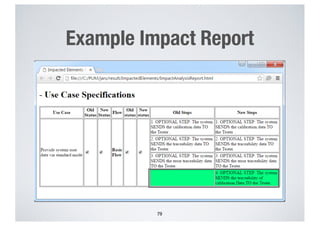

- 76. Reconfiguration of Product Specific Use Case Models (step 3 of the model differencing and regeneration pipeline)

- 77. Reconfiguration of Product Specific Use Case Models • Regenerates the product specific use case diagram and specifications only for the parts impacted by at least one added, deleted, or updated decision • Generates a report on the impacted and regenerated parts of the product specific models

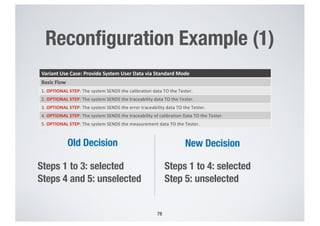

- 78. Reconfiguration Example (1): Variant Use Case: Provide System User Data via Standard Mode. Basic Flow:
  1. OPTIONAL STEP: The system SENDS the calibration data TO the Tester.
  2. OPTIONAL STEP: The system SENDS the traceability data TO the Tester.
  3. OPTIONAL STEP: The system SENDS the error traceability data TO the Tester.
  4. OPTIONAL STEP: The system SENDS the traceability of calibration Data TO the Tester.
  5. OPTIONAL STEP: The system SENDS the measurement data TO the Tester.

  Old decision: steps 1 to 3 selected, steps 4 and 5 unselected. New decision: steps 1 to 4 selected, step 5 unselected.

- 80. Reconfiguration of PS Models Example • The traceability link is preserved • Only one step is inserted into the regenerated model
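Slides 77 to 80 describe regenerating only the impacted parts so that existing trace links survive. The toy Python sketch below illustrates this on the slide-78 example; it is not the tool's implementation. It assumes a PS flow can be represented by the selected PL step numbers, each carrying a set of trace links. The trace identifiers DOC-1 to DOC-3 and the reconfigure function are hypothetical. Steps selected under both decisions keep their traces, and newly selected steps start without traces.

```python
from typing import Dict, List, Set, Tuple

def reconfigure(old_steps: Dict[int, Set[str]], new_selection: List[int]
                ) -> Tuple[Dict[int, Set[str]], List[int], List[int]]:
    """Regenerate a PS flow for a new step selection, reusing old trace links.

    old_steps maps each previously selected PL step number to its trace links.
    Returns the regenerated flow, the inserted steps, and the removed steps.
    """
    new_flow: Dict[int, Set[str]] = {}
    inserted: List[int] = []
    for step in sorted(new_selection):
        if step in old_steps:
            new_flow[step] = set(old_steps[step])  # unchanged step: traces preserved
        else:
            new_flow[step] = set()                 # new step: traces assigned manually
            inserted.append(step)
    removed = sorted(s for s in old_steps if s not in new_selection)
    return new_flow, inserted, removed

# Slide 78: the old decision selects steps 1-3, the new decision selects steps 1-4.
old = {1: {"DOC-1"}, 2: {"DOC-2"}, 3: {"DOC-3"}}  # hypothetical trace ids
flow, inserted, removed = reconfigure(old, [1, 2, 3, 4])
print(inserted, removed)  # [4] []
```

Here only step 4 is inserted, and the traces on steps 1 to 3 are carried over. This mirrors the outcome shown on slide 80.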

- 81. Evaluation: Industrial Case Study • We configured product specific models for four customers • We chose the most recent product to be used for reconfiguration • The selected product includes: - 36 traces in the PS use case diagram - 278 traces in the PS use case specifications • We considered 8 diverse change scenarios

- 82. Research Questions • RQ1: To what extent can our approach preserve trace links? • RQ2: Does our approach significantly reduce manual effort?

- 83. Improving Trace Reuse • Chart: percentage of preserved traces for the PS use case diagram and for the PS use case specifications, per decision change scenario (S1 to S8) • On average, 96% of the use case diagram and specification traces were preserved

- 84. Reducing Manual Effort • Chart: number of traces in the use case specifications that must be manually assigned without our approach vs. number of traces manually added when using our approach, per decision change scenario (S1 to S8) • On average, 4% of the use case specification traces need to be manually assigned when using our approach

- 85. Test Case Classification and Prioritization • Approach overview figure (as on slide 65): Model Variability in Use Case and Domain Models; Interactive Configuration of PS Use Case and Domain Models; Automated Configuration of PS Use Case and Domain Models; Change Impact Analysis for Configuration Decision Changes; Incremental Reconfiguration of PS Models; Automated Test Case Classification and Prioritization, which yields the Prioritized Test Suite for the new Product

- 86. Current Practice • The requirements analyst evolves the STO requirements for customer C1 into those for C2, and those for C2 into those for C3 (copy and modify) • The test engineer derives each customer's STO test suite from that customer's requirements, evolving it from the previous customer's test suite (select, prioritize and modify)

- 87. Related Work • Regression testing techniques using system design artifacts or feature models [Wang et al., 2016; Runeson and Engstrom, 2012; Muccini et al., 2006] • Test case selection approaches based on test case generation for the product family [Lity et al., 2012, 2016; Lochau et al., 2014] • Search-based approaches for multi-objective test case prioritization in product lines [Parejo et al., 2016; Wang et al., 2014; Pradhan et al., 2011] • Test case prioritization techniques using user knowledge through machine learning algorithms [Lachmann et al., 2016; Tonella et al., 2006]

- 88. Limitations of Existing Work • Some existing approaches require: - that all test cases of the product line be derived upfront, even if some of them may never be executed - detailed system design artifacts (e.g., finite state machines and UML sequence diagrams) rather than requirements in natural language

- 89. Objective: Support the definition and the prioritization of the test suite for a new product by maximizing the reuse of test suites of existing products in the product line

- 90. Main Challenge: Avoid relying on behavioral system models and early generation of test cases for the product family when testing new products in product lines

- 91. Overview of Test Case Classification and Prioritization
  1. Classify System Test Cases for the New Product. Inputs: PS models and decision model for the new product; test cases, PS models and their traces, and decision models for previous product(s). Outputs: partial test suite for the new product; guidance to update test cases.
  2. Create New Test Cases Using Guidance. Output: test suite for the new product.
  3. Prioritize System Test Cases for the New Product. Inputs: test execution history, variability information, size of use case scenarios, and classification of test cases. Output: prioritized test suite for the new product.

- 92. Approach Overview: 1. Classify System Test Cases for the New Product; 2. Create New Test Cases Using Guidance; 3. Prioritize System Test Cases for the New Product

- 93. Test Case Classification Approach • For each previous product: (1) Matching Decision Model Elements compares the decision models of the previous product and of the new product and yields correspondences; (2) Change Calculation yields decision changes; (3) Impact Report Generation yields an impact report; (4) Test Case Classification uses the impact report together with the test cases, trace links, and PS use cases of the previous products to produce classified test cases for the new product • If there is another previous product [Yes], the process repeats; otherwise [No], it ends

- 94. Test Case Classification Approach • Steps 1 (Matching Decision Model Elements) and 2 (Change Calculation) are also used for the incremental reconfiguration of PS use case models


- 96. Test Case Classification • To test a new product, we classify the system test cases of previous product(s) as reusable, retestable, or obsolete • The classification is based on: - Decision changes - Trace links between the system test cases and the PS use case specifications

- 97. Use Case Scenario Model (1): steps of the Recognize Gesture use case, labeled A to H:
  - A: 1. The system requests the move capacitance from the sensors.
  - B: 2. INCLUDE USE CASE Identify System Operating Status.
  - C: 3. The system VALIDATES THAT the operating status is valid (false -> F: 1. ABORT).
  - D: 4. The system VALIDATES THAT the movement is a valid kick (false -> G: 1. The system increments the OveruseCounter by the increment step; then H: 2. ABORT).
  - E: 5. The system SENDS the valid kick status TO the STO Controller.

  This use case scenario model covers 3 scenarios: 1. A->B->C->D->E 2. A->B->C->D->G->H 3. A->B->C->F
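The three scenarios on slide 97 are the paths from the first step to a terminal step of the scenario model. The minimal Python sketch below enumerates them by depth-first search. It assumes a hypothetical adjacency-list encoding in which a conditional step lists its true successor before its false successor, and it assumes the model has no loops.

```python
from typing import Dict, List

# Hypothetical encoding of the slide-97 model: each step maps to its successors.
MODEL: Dict[str, List[str]] = {
    "A": ["B"],
    "B": ["C"],
    "C": ["D", "F"],   # operating status valid? true -> D, false -> F (ABORT)
    "D": ["E", "G"],   # valid kick? true -> E, false -> G
    "G": ["H"],        # increment OveruseCounter, then H (ABORT)
    "E": [], "F": [], "H": [],
}

def scenarios(model: Dict[str, List[str]], start: str) -> List[List[str]]:
    """Enumerate all paths from start to a terminal step (assumes no cycles)."""
    successors = model[start]
    if not successors:
        return [[start]]
    return [[start] + rest for nxt in successors for rest in scenarios(model, nxt)]

for s in scenarios(MODEL, "A"):
    print("->".join(s))
# A->B->C->D->E
# A->B->C->D->G->H
# A->B->C->F
```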

- 98. Use Case Scenario Model (2) • Test cases and the scenarios they cover: TC1: A->B->C->D->E (Basic Flow); TC2: A->B->C->D->G->H (Basic Flow + SAF2); TC3: A->B->C->F (Basic Flow + SAF1) • Excerpt of the TC1 description: “To check: Recognize gesture - Basic Flow - Valid kick detected - Success” (4.1.1.1.1). Objective: - To check if the operating status is OK. - To check if the ECU can recognize a valid kick gesture. Method: - Trigger a valid kick gesture. - Check if the operating status is OK and the overuse protection status is not active. - Check if the valid kick gesture can be recognized. The description also includes a traceability table.

- 99. Test Cases Classification for New Product (1) • How should TC1 be classified for a new product? • No decision change: TC1 -> reusable, since it exercises an execution sequence of use case steps that has remained valid in the new product

- 100. Test Cases Classification for New Product (2) • How should TC1 be classified for a new product? • The decision change does not impact the tested scenario (it affects a step outside TC1's scenario): TC1 -> reusable

- 101. Test Cases Classification for New Product (3) • How should TC1 be classified for a new product? • The decision change impacts the tested scenario, so the type of the impacted step matters • An input step is impacted: TC1 -> obsolete, since it exercises an execution sequence of use case steps that is invalid in the new product

- 102. Test Cases Classification for New Product (4) • How should TC1 be classified for a new product? • The decision change impacts the tested scenario, so the type of the impacted step matters • An internal step is impacted: TC1 -> retestable, since it exercises an execution sequence of use case steps that has remained valid in the new product except for internal steps
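The rules on slides 99 to 102 can be summarized as a small decision function. In this Python sketch, the Verdict enum, the classify function, and the string step types are hypothetical. We also assume that an impacted input step makes a test case obsolete even when internal steps are impacted too; the slides do not state this precedence. The impacted step labels used in the checks are illustrative only.

```python
from enum import Enum
from typing import Dict, List

class Verdict(Enum):
    REUSABLE = "reusable"
    RETESTABLE = "retestable"
    OBSOLETE = "obsolete"

def classify(tested_scenario: List[str], impacted: Dict[str, str]) -> Verdict:
    """Classify a previous product's test case for the new product.

    tested_scenario: steps exercised by the test case (via its trace links).
    impacted: steps affected by decision changes, mapped to "input" or "internal".
    """
    hit = {impacted[step] for step in tested_scenario if step in impacted}
    unknown = hit - {"input", "internal"}
    if unknown:
        raise ValueError(f"unsupported step types: {sorted(unknown)}")
    if not hit:
        return Verdict.REUSABLE    # no change on the tested scenario
    if "input" in hit:
        return Verdict.OBSOLETE    # ASSUMPTION: input-step changes take precedence
    return Verdict.RETESTABLE      # only internal steps changed

TC1 = ["A", "B", "C", "D", "E"]
print(classify(TC1, {}).value)                 # reusable
print(classify(TC1, {"H": "input"}).value)     # reusable (change outside the scenario)
print(classify(TC1, {"B": "input"}).value)     # obsolete
print(classify(TC1, {"B": "internal"}).value)  # retestable
```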

- 103. Create New Test Cases Using Guidance (step 2 of the approach overview)

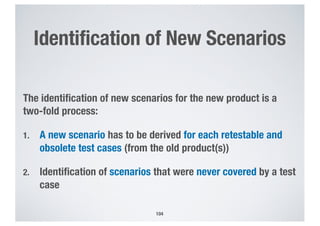

- 104. Identification of New Scenarios • Identifying new scenarios for the new product is a two-step process: 1. Derive a new scenario for each retestable and obsolete test case from the old product(s) 2. Identify scenarios that were never covered by a test case

- 105. Identification of New Scenarios (1): the scenario model of the new product contains the new steps X and Z.

| Id | Classification | Covered scenario | New scenario to cover |
|---|---|---|---|
| TC1 | Reusable | A->B->C->D->E | - |
| TC2 | Retestable | A->B->C->D->G->H | A->B->C->D->H |
| TC3 | Obsolete | A->B->C->F | A->B->C->X->F |

- 106. Identification of New Scenarios (2) 1. We keep track of already covered steps (shown in blue). 2. For each conditional step, we also store the (true and false) paths that were already visited: at C, the true (TC1) and false (TC3) paths are already covered; at D, the true (TC1) and false (TC2) paths are already covered; at X, only the true path is covered, by the new scenario extracted from TC3. 3. We traverse the use case scenario model and use the stored information to extract uncovered scenarios. • Here, A->B->C->X->Z is uncovered
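Steps 1 to 3 on slide 106 can be approximated as follows. The sketch records every branch (step to next step) already traversed by the covered scenarios, then reports the complete paths that use at least one unvisited branch. The encoding of the new product's model is our reading of slides 105 and 106: C's false branch leads to X, X's true branch to F, and X's false branch to Z. The functions are hypothetical. Enumerating all paths is exponential in the worst case, so this sketch only suits small, loop-free models.

```python
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str]

# Hypothetical encoding of the new product's scenario model (slides 105-106);
# conditional steps list their true successor first, then their false successor.
NEW_MODEL: Dict[str, List[str]] = {
    "A": ["B"], "B": ["C"],
    "C": ["D", "X"],   # true -> D, false -> X (new step)
    "D": ["E", "H"],   # true -> E, false -> H (G was removed)
    "X": ["F", "Z"],   # true -> F (ABORT), false -> Z (new step)
    "E": [], "F": [], "H": [], "Z": [],
}

# Scenarios already covered: TC1 (reusable) plus the new scenarios for TC2 and TC3.
COVERED = [list("ABCDE"), list("ABCDH"), list("ABCXF")]

def edges(path: List[str]) -> Set[Edge]:
    """Branches (step -> next step) traversed by a scenario."""
    return set(zip(path, path[1:]))

def uncovered_scenarios(model: Dict[str, List[str]], start: str,
                        covered: List[List[str]]) -> List[List[str]]:
    """Return the complete scenarios that use at least one unvisited branch."""
    visited: Set[Edge] = set()
    for path in covered:
        visited |= edges(path)          # steps 1-2: record covered steps and branches
    result: List[List[str]] = []

    def walk(path: List[str]) -> None:  # step 3: traverse the scenario model
        successors = model[path[-1]]
        if not successors:
            if not edges(path) <= visited:
                result.append(path)
            return
        for nxt in successors:
            walk(path + [nxt])

    walk([start])
    return result

print(uncovered_scenarios(NEW_MODEL, "A", COVERED))  # [['A', 'B', 'C', 'X', 'Z']]
```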

- 107. Guidance Example • Scenario model steps: A: 1. The system requests the move capacitance from the sensors; B: 2. INCLUDE USE CASE Identify System Operating Status; C: 3. The system VALIDATES THAT the operating status is valid; D: 4. The system VALIDATES THAT the movement is a valid kick; G: 1. The system increments the OveruseCounter by the increment step; H: 2. ABORT • Generated guidance: “Please update the existing test case “TC2” to account for the fact that the step in red was deleted from the scenario of the use case specifications of the previous product.”

- 108. Prioritize System Test Cases for the New Product (step 3 of the approach overview)

- 109. Test Case Prioritization • Sort test cases so as to maximize the likelihood of executing failing test cases first • Sorting is based on a set of factors that correlate with the presence of faults: - Size of the scenario - Degree of variability in the use case scenario - Number of products and versions in which the test case failed - Classification of the test case, i.e., reusable or retestable

- 110. Test Case Prioritization Approach • Inputs: size of use case scenarios and variability information; classification of test cases; test execution history; selected and modified test cases • Steps: 1. Identifying Significant Factors (output: list of significant factors) 2. Prioritizing Test Cases Based on Significant Factors (output: prioritized test suite) • Both steps rely on logistic regression

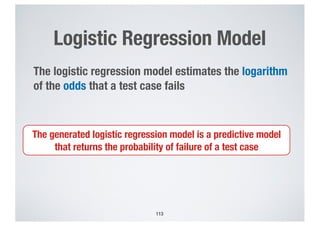

- 111. Logistic Regression • Predictive analysis aimed at determining the relationship between one binary dependent variable and one or more independent variables - Dependent binary variable: the failure of a given test case - Independent variables: the chosen predictors, e.g., the number of products in which the test case failed

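- 112. Logistic Regression Model: $\ln\left(\frac{P}{1-P}\right) = B_0 + B_1 S + B_2 V + B_3 FP + B_4 FV + B_5 R$, where S is the size of the use case scenario, V the degree of variability, FP the number of failing products, FV the number of failing versions, and R whether the test case is retestable; $B_0$ is the intercept and $B_1, \dots, B_5$ are the estimated coefficients. The logistic regression model estimates the logarithm of the odds that a test case fails.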

- 113. Logistic Regression Model • The logistic regression model estimates the logarithm of the odds that a test case fails • The generated logistic regression model is a predictive model that returns the probability of failure of a test case
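The model on slide 112 predicts log-odds, so the failure probability mentioned on slide 113 follows by inverting the logit. Writing $z$ for the linear predictor (same symbols as slide 112):

```latex
z = B_0 + B_1 S + B_2 V + B_3 FP + B_4 FV + B_5 R, \qquad \ln\!\left(\frac{P}{1-P}\right) = z
\;\Longrightarrow\; \frac{P}{1-P} = e^{z}
\;\Longrightarrow\; P = e^{z}(1-P)
\;\Longrightarrow\; P = \frac{e^{z}}{1+e^{z}} = \frac{1}{1+e^{-z}}
```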


- 115. Identifying Significant Factors • We rely on the p-values computed by the Wald test on the logistic regression model trained with all the factors • We keep the factors whose p-value is smaller than the threshold (0.05)
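For a single coefficient with estimate $B$ and standard error $SE$, the Wald statistic is $z = B / SE$. Its two-sided p-value is $2(1 - \Phi(|z|))$, which equals $\mathrm{erfc}(|z|/\sqrt{2})$; the squared form $z^2$ against a chi-square distribution with one degree of freedom gives the same p-value. The Python sketch below applies the 0.05 threshold from slide 115. The coefficient estimates and standard errors are hypothetical and are not the values fitted in the study. Fitting the model itself is left to a statistics library.

```python
import math
from typing import Dict, List, Tuple

def wald_p_value(estimate: float, std_error: float) -> float:
    """Two-sided p-value of the Wald test for H0: coefficient == 0."""
    z = estimate / std_error
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))

def significant_factors(coefs: Dict[str, Tuple[float, float]],
                        alpha: float = 0.05) -> List[str]:
    """Keep the factors whose Wald p-value is below alpha."""
    return [name for name, (b, se) in coefs.items() if wald_p_value(b, se) < alpha]

# Hypothetical (estimate, standard error) pairs, NOT the study's fitted values.
coefs = {"S": (0.02, 0.05), "V": (0.40, 0.90), "FP": (1.10, 0.30),
         "FV": (0.25, 0.08), "R": (0.60, 0.70)}
print(significant_factors(coefs))  # ['FP', 'FV']
```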


- 117. Prioritizing Test Cases 1. We derive a logistic regression model that includes only significant factors 2. Probabilities of failures are calculated from the regression model 3. Test cases are sorted in descending order of probability
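The three steps on slide 117 can be sketched in Python as follows. The coefficients, intercept, and test cases are hypothetical values chosen for illustration; they are not the fitted model behind slide 118. The model is assumed to contain only the factors retained as significant.

```python
import math
from typing import Dict, List

def failure_probability(coefs: Dict[str, float], intercept: float,
                        factors: Dict[str, float]) -> float:
    """P(fail) = 1 / (1 + exp(-(B0 + sum of Bi * xi))) over the significant factors."""
    z = intercept + sum(b * factors[name] for name, b in coefs.items())
    return 1.0 / (1.0 + math.exp(-z))

def prioritize(test_cases: Dict[str, Dict[str, float]], coefs: Dict[str, float],
               intercept: float) -> List[str]:
    """Sort test case ids by descending failure probability."""
    return sorted(test_cases,
                  key=lambda tc: failure_probability(coefs, intercept, test_cases[tc]),
                  reverse=True)

# Hypothetical model (significant factors FP and FV only) and test cases.
coefs = {"FP": 1.2, "FV": 0.3}
intercept = -2.0
suite = {"TC-a": {"FP": 0, "FV": 0}, "TC-b": {"FP": 2, "FV": 5}, "TC-c": {"FP": 1, "FV": 1}}
print(prioritize(suite, coefs, intercept))  # ['TC-b', 'TC-c', 'TC-a']
```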

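- 118. Example of Prioritized Test Suite

| TC id | Failed | Retestable | Size | Degree of variability | Failing products | Failing versions | Probability |
|---|---|---|---|---|---|---|---|
| TCS2320 | 1 | 0 | 24 | 0 | 2 | 19 | 0.999 |
| TCS239 | 1 | 0 | 25 | 0 | 2 | 17 | 0.999 |
| TCS16 | 1 | 0 | 24 | 0 | 2 | 17 | 0.999 |
| TCS304 | 1 | 0 | 32 | 0 | 2 | 15 | 0.998 |
| TCS115 | 1 | 0 | 8 | 0 | 2 | 14 | 0.997 |
| TCS2581 | 1 | 0 | 6 | 0 | 2 | 13 | 0.994 |
| TCS345 | 1 | 0 | 25 | 0 | 2 | 12 | 0.879 |
| TCS384 | 1 | 0 | 25 | 0 | 2 | 9 | 0.859 |
| TCS559 | 1 | 0 | 8 | 1 | 1 | 8 | 0.711 |
| TCS322 | 1 | 0 | 6 | 0 | 1 | 7 | 0.638 |
| TCS591 | 1 | 0 | 5 | 1 | 1 | 6 | 0.57 |
| TCS1516 | 0 | 0 | 8 | 0 | 0 | 0 | 0.458 |
| TCS512 | 0 | 0 | 7 | 0 | 0 | 0 | 0.444 |
| TCS1953 | 0 | 0 | 6 | 0 | 0 | 0 | 0.444 |
| TCS3660 | 0 | 0 | 6 | 0 | 0 | 0 | 0.432 |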

- 119. Empirical Evaluation • RQ1: Does the proposed approach provide correct test case classification results? • RQ2: Does the proposed approach accurately identify new scenarios that are relevant for testing a new product? • RQ3: Does the proposed approach successfully prioritize test cases? • RQ4: Can the proposed approach significantly reduce testing costs compared to current industrial practice?

- 120. RQ1: Correct Classification Results

| Classified test suites | Product to be tested | Reusable | Retestable | Obsolete | Precision | Recall |
|---|---|---|---|---|---|---|
| P1 | P2 | 94 | 2 | 14 | 1.0 | 1.0 |
| P1, P2 | P3 | 107 | 0 | 2 | 1.0 | 1.0 |
| P1, P2, P3 | P4 | 102 | 0 | 12 | 1.0 | 1.0 |
| P1, P2, P3, P4 | P5 | 93 | 15 | 1 | 1.0 | 1.0 |

Our approach achieves perfect precision and recall.

- 121. RQ3: Effectiveness of Test Case Prioritization

| Classified test suites | Product to be tested | % test cases executed to identify all failures | % test cases executed to identify 80% of failures | % failures detected with 50% of test cases |
|---|---|---|---|---|
| P1 | P2 | 72.09 | 38.37 | 97.43 |
| P1, P2 | P3 | 41.66 | 22.91 | 100 |
| P1, P2, P3 | P4 | 51.80 | 22.89 | 95 |
| P1, P2, P3, P4 | P5 | 26.54 | 18.58 | 100 |

Our approach identifies more than 80% of the failures by executing fewer than 50% of the test cases.

- 122. RQ3: Effectiveness of Test Case Prioritization • We compared the results of our approach with the ideal situation in which all failing test cases are executed first • For both cases: - We computed the Area Under Curve (AUC) for the cumulative percentage of failures triggered by executed test cases - We computed the AUC ratio (our approach relative to the ideal situation)
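The slides do not specify how the AUC is computed. The Python sketch below makes two assumptions. First, it uses a trapezoidal area under the curve of the cumulative fraction of failures plotted against the fraction of executed test cases. Second, it defines the AUC ratio as the observed AUC divided by the ideal AUC, where the ideal ordering runs all failing test cases first. This definition is consistent with the ratios of at most 1 reported on slide 123. The function names and the example ordering are hypothetical.

```python
from typing import List

def cumulative_failure_auc(failed: List[bool]) -> float:
    """Normalized area under the cumulative-failure curve (trapezoidal rule).

    failed[i] is True when the i-th executed test case fails. The curve goes
    from (0, 0) through (i / n, failures found so far / total failures).
    """
    n, total = len(failed), sum(failed)
    if total == 0:
        raise ValueError("at least one failing test case is required")
    area, found, prev_y = 0.0, 0, 0.0
    for outcome in failed:
        found += outcome
        y = found / total
        area += (prev_y + y) / 2 / n   # trapezoid of width 1 / n
        prev_y = y
    return area

def auc_ratio(observed: List[bool]) -> float:
    """AUC of the observed order divided by the AUC of the ideal order."""
    ideal = sorted(observed, reverse=True)   # all failing (True) test cases first
    return cumulative_failure_auc(observed) / cumulative_failure_auc(ideal)

# Hypothetical execution order: the 1st and 3rd executed test cases fail.
print(round(auc_ratio([True, False, True, False]), 3))  # 0.833
```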

- 123. Cumulative percentage of failures (Y) against executed test cases (X), ideal vs. observed ordering, in four plots with AUC ratios of 0.98, 0.99, 0.97, and 0.95

- 124. References
  - [Hajri et al., 2019] “Automating Test Case Classification and Prioritization for Use Case-Driven Testing in Product Lines”, arXiv:1905.11699, 2019.
  - [Hajri et al., 2018] “Change impact analysis for evolving configuration decisions in product line use case models”, JSS, 2018.
  - [Hajri et al., 2018] “Configuring use case models in product families”, SoSyM, 2018.

- 125. References
  - [Hajri et al., 2017] “Incremental reconfiguration of product specific use case models for evolving configuration decisions”, REFSQ, 2017.
  - [Hajri et al., 2016] “PUMConf: A tool to configure product specific use case and domain models in a product line”, FSE, 2016.
  - [Hajri et al., 2015] “Applying product line use case modeling in an industrial automotive embedded system: Lessons learned and a refined approach”, MoDELS, 2015.



![Related Work

• Regression testing techniques using system design artifacts or feature

models [Wang et al., 2016; Runeson and Engstrom, 2012; Muccini et al.,

2006]

• Test case selection approaches based on test cases generation for the

product family [Lity et al., 2012, 2016; Lochau et al., 2014]

• Search-based approaches for multi-objective test case prioritization in

product lines [Parejo et al., 2016; Wang et al., 2014; Pradhan et al., 2011]

• Test case prioritization techniques using user knowledge through machine

learning algorithms [Lachmann et al., 2016; Tonella et al., 2006]

87](https://image.slidesharecdn.com/sst19-190529103010/85/Supporting-Change-in-Product-Lines-within-the-Context-of-Use-Case-driven-Development-and-Testing-87-320.jpg)

![Test Case Classification Approach

93

Matching

Decision Model

Elements

Change

Calculation

Impact Report

Generation

Test Case

Classification

Decision

Models

of Previous

Products Decision Models

of New Product

•• •• •• •• •• •• •• ••

Correspondence

Decision

Change

Test Cases, Trace

Links, and PS Use

Cases of the Previous

Products

Classified

Test Cases for the

New Product

[Yes]

[No]

Is there any other

previous product?

Impact

Report

1

2

34](https://image.slidesharecdn.com/sst19-190529103010/85/Supporting-Change-in-Product-Lines-within-the-Context-of-Use-Case-driven-Development-and-Testing-93-320.jpg)

![Test Case Classification Approach

94

Matching

Decision Model

Elements

Change

Calculation

Impact Report

Generation

Test Case

Classification

Decision

Models

of Previous

Products Decision Models

of New Product

•• •• •• •• •• •• •• ••

Correspondence

Decision

Change

Test Cases, Trace

Links, and PS Use

Cases of the Previous

Products

Classified

Test Cases for the

New Product

[Yes]

[No]

Is there any other

previous product?

Impact

Report

1

2

34

These two steps are

also used for incremental

reconfiguration of PS use

case models](https://image.slidesharecdn.com/sst19-190529103010/85/Supporting-Change-in-Product-Lines-within-the-Context-of-Use-Case-driven-Development-and-Testing-94-320.jpg)

![References

• [Hajri et al., 2019], “Automating Test Case Classification and Prioritization for Use Case-Driven Testing in Product Lines”, arXiv:1905.11699, 2019.

• [Hajri et al., 2018], “Change impact analysis for evolving configuration decisions in product line use case models”, Journal of Systems and Software (JSS), 2018.

• [Hajri et al., 2018], “Configuring use case models in product families”, Software and Systems Modeling (SoSyM), 2018.

124](https://image.slidesharecdn.com/sst19-190529103010/85/Supporting-Change-in-Product-Lines-within-the-Context-of-Use-Case-driven-Development-and-Testing-124-320.jpg)

![References

• [Hajri et al., 2017], “Incremental reconfiguration of product specific use case models for evolving configuration decisions”, REFSQ 2017.

• [Hajri et al., 2016], “PUMConf: A tool to configure product specific use case and domain models in a product line”, FSE 2016.

• [Hajri et al., 2015], “Applying product line use case modeling in an industrial automotive embedded system: Lessons learned and a refined approach”, MoDELS 2015.

125](https://image.slidesharecdn.com/sst19-190529103010/85/Supporting-Change-in-Product-Lines-within-the-Context-of-Use-Case-driven-Development-and-Testing-125-320.jpg)
