Program evaluation

- 1. Program Evaluation Joy Anne R. Puazo Marie Buena S. Bunsoy

- 2. Evaluation Evaluation ◦ The systematic gathering of information for purposes of making decisions - Richards et al. (1985, p. 98)

- 3. Evaluation “Systematic educational evaluation consists of a formal assessment of the worth of educational phenomena.” - Popham (1975, p.8)

- 4. Evaluation “Evaluation is the determination of the worth of a thing. It includes obtaining information for use in judging the worth of the program, product, procedure, or object, or the potential utility of alternative approaches designed to attain specified objectives.” - Worthen and Sanders (1973, p.19)

- 5. Evaluation “Evaluation is the systematic collection and analysis of all relevant information necessary to promote the improvement of the curriculum and assess its effectiveness within the context of the particular institutions involved.” - Brown

- 6. Evaluation Testing ◦ Procedures that are based on tests, whether they be criterion-referenced or norm-referenced in nature. Measurement ◦ Includes testing as well as other types of measurement that result in quantitative data, such as attendance records, questionnaires, and teacher-student ratings

- 7. Evaluation Evaluation ◦ More qualitative in nature: case studies, classroom observations, meetings, diaries, and conversations

- 8. Approaches to Program Evaluation Product-oriented approaches ◦Focus: goals and instructional objectives ◦Tyler, Hammond, and Metfessel and Michael

- 9. Product-oriented approaches The programs should be built on explicitly defined goals, specified in terms of the society, the students, the subject matter, as well as on measurable behavioral objectives. Purpose: To determine whether the objectives have been achieved, and whether the goals have been met. - Tyler

- 10. Product-oriented approaches Five steps to be followed in performing a curriculum evaluation: Hammond (Worthen and Sanders 1973, p. 168) 1. Identifying precisely what is to be evaluated 2. Defining descriptive variables 3. Stating objectives in behavioral terms 4. Assessing the behavior described in the objectives 5. Analyzing the results and determining the effectiveness of the program

- 11. Product-oriented approaches 8 Major Evaluation Processes: Metfessel and Michael (1967) 1. Direct and indirect involvement of the total school community 2. Formation of a cohesive model of broad goals and specific objectives 3. Transformation of specific objectives into communicable form

- 12. Product-oriented approaches 4. Instrumentation necessary for furnishing measures allowing inferences about program effectiveness 5. Periodic observation of behaviors 6. Analysis of data given by status and change measures 7. Interpretation of the data relative to specific objectives and broad goals 8. Recommendations culminating in further implementations, modifications, and in revisions of broad goals and specific objectives

- 13. Approaches to Program Evaluation Static-Characteristic Approaches ◦ Conducted by outside experts who inspect the program by examining various records Accreditation ◦ Process whereby an association of institutions sets up criteria and evaluation procedures for the purpose of deciding whether individual institutions should be certified as members in good standing of that association

- 14. Static-Characteristic Approaches “A major reason for the diminishing interest in accreditation conceptions of evaluation is the recognition of their almost total reliance on intrinsic rather than extrinsic factors. Although there is some intuitive support for the proposition that these process factors are associated with the final outcomes of an instructional sequence, the scarcity of the empirical evidence to confirm the relationship has created growing dissatisfaction with the accreditation approach among educators.” - Popham (1975, p. 25)

- 15. Approaches to Program Evaluation Process-Oriented Approaches (Scriven and Stake) Scriven’s Model/Goal-free evaluation ◦ Limits are not set on studying the expected effects of the program vis-à-vis the goals

- 16. Process-Oriented Approaches Countenance model (Stake; 1967) 1. Begin with a rationale 2. Fix on descriptive operations 3. End with judgmental operations at 3 levels: Antecedents Transactions Outcomes

- 17. Approaches to Program Evaluation Decision-Facilitation Approaches ◦ Evaluators attempt to avoid making judgments ◦ They gather information that will help the administrators and faculty in the program make their own judgments and evaluations ◦ Examples: CIPP, CSE, Discrepancy model

- 18. Decision-Facilitation Approaches CIPP (Context, Input, Process, Product) 4 key elements in performing program evaluation: Stufflebeam (1974) 1. Evaluation is performed in the service of decision making, hence it should provide information that is useful to decision makers. 2. Evaluation is a cyclic, continuing process and therefore must be implemented through a systematic program. 3. The evaluation process includes 3 main steps of delineating, obtaining, and providing. (methodology) 4. The delineating and providing steps in the evaluation process are interface activities requiring collaboration.

- 19. Decision-Facilitation Approaches CSE (Center for the Study of Evaluation) 5 different categories of decisions (Alkin; 1969) 1. System assessment 2. Program planning 3. Program implementation 4. Program improvement 5. Program certification

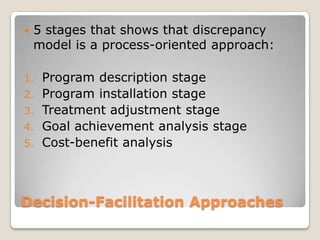

- 20. Decision-Facilitation Approaches Discrepancy Model (Provus; 1971) “Program evaluation is the process of (1) defining program standards; (2) determining whether a discrepancy exists between some aspect of program performance and the standards governing that aspect of the program; and (3) using discrepancy information either to change performance or to change program standards.”

- 21. Decision-Facilitation Approaches 5 stages that show that the discrepancy model is also a process-oriented approach: 1. Program description stage 2. Program installation stage 3. Treatment adjustment stage 4. Goal achievement analysis stage 5. Cost-benefit analysis stage
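The first two steps of the discrepancy model (defining standards, then checking performance against them) can be illustrated with a minimal Python sketch. Everything in it is assumed for illustration: the aspect names, the percentage values, and the function `find_discrepancies` are hypothetical and do not come from Provus. The sketch also assumes that only shortfalls count as discrepancies. The third step, deciding whether to change performance or change the standards, is left to the program's decision makers.

```python
def find_discrepancies(standards, performance):
    """Return {aspect: performance - standard} for aspects below their standard."""
    return {
        aspect: performance[aspect] - target
        for aspect, target in standards.items()
        if performance[aspect] < target
    }


# Step 1: program standards (hypothetical percentages, invented for illustration).
standards = {"attendance_rate": 90, "pass_rate": 80, "objectives_met": 75}

# Observed program performance for the same aspects (also hypothetical).
performance = {"attendance_rate": 93, "pass_rate": 72, "objectives_met": 75}

# Step 2: determine whether a discrepancy exists for each aspect.
gaps = find_discrepancies(standards, performance)
for aspect, gap in gaps.items():
    print(f"{aspect}: {gap:+d} points relative to the standard")
```

Reporting the size of each gap, rather than a simple pass or fail, gives staff the information they need to judge whether the performance or the standard is the thing to adjust.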

- 22. Three Dimensions that Shape Point of View on Evaluation: 1. Formative vs. Summative 2. Process vs. Product 3. Quantitative vs. Qualitative

- 23. Three Dimensions Purpose of Information Formative evaluation ◦ Takes place during the ongoing curriculum development process ◦ To collect and analyze information that will help in improving the curriculum Summative evaluation ◦ Takes place at the end of a program ◦ To determine the degree to which the program is successful, efficient, and effective.

- 24. Weakness of Summative Evaluation Most language programs are continuing institutions that do not conveniently come to an end so that such an evaluation can be performed

- 25. Benefits of Summative Evaluation ◦ Identifies the successes and failures of the program ◦ Provides an opportunity to stand back and consider what has been achieved in the longer view Combination of formative and summative evaluation: ◦ Can put the program and its staff in a strong position for responding to any crises that might be brought on by evaluation from outside the program

- 29. Three Dimensions Types of Information Process evaluation ◦ Focuses on the workings of a program Product evaluation ◦ Focuses on whether the goals of the program are being achieved

- 30. Three Dimensions Types of Data and Analyses Quantitative data ◦ Countable bits of information, usually gathered using measures that produce results in the form of numbers Qualitative data ◦ Consist of more holistic information based on observations

- 32. Instruments and Procedures Quantitative Qualitative Existing information Records Analysis X X Systems X X Literature Review X Letter Writing X

- 33. Instruments and Procedures Quantitative Qualitative Tests Proficiency X Placement X Diagnostic X Achievement X

- 34. Instruments and Procedures Quantitative Qualitative Observations Case Studies X Diary Studies X Behavior Observation X Interactional Analyses X Inventories X

- 36. Instruments and Procedures Quantitative Qualitative Meetings Delphi Technique X Advisory X Interest group X Review X

- 37. Instruments and Procedures Quantitative Qualitative Questionnaires Biodata Survey X Opinion Survey X X Self-ratings X Judgmental ratings X Q-sort X

- 38. Gathering Evaluation Data Quantitative Evaluation Studies Qualitative Evaluation Studies Using Both Quantitative and Qualitative Methods

- 39. Gathering Evaluation Data Quantitative data: bits of information that are countable and are gathered using measures that produce results in the form of numbers.

- 40. Gathering Evaluation Data The importance of using quantitative data is not so much in the collection of those data, but rather in the analysis of the data, which should be carried out in such a way that patterns emerge.

- 41. Gathering Evaluation Data A classic example of what many people think an evaluation study ought to be is a quantitative, statistics-based experimental study designed to investigate the effectiveness of a given program.

- 42. Gathering Evaluation Data Key Terms: Experimental Group ◦ The one that receives the treatment Control Group ◦ The one that receives no treatment

- 43. Gathering Evaluation Data Treatment: something that the experimenter does to the experimental group, rather than an experience through which they go (as in a learning experience).

- 44. Gathering Evaluation Data The purpose of giving treatment to the experimental group and nothing to the control group is to determine whether the treatment has been effective.
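The comparison between an experimental group and a control group can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the scores are invented, the function name `welch_t` is hypothetical, and Welch's t statistic is one common choice for comparing two group means, not a method prescribed by the slides. A full study would also need a significance test and attention to how the groups were formed.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(experimental, control):
    """Return Welch's t statistic for the difference between two group means."""
    n_e, n_c = len(experimental), len(control)
    # Standard error of the difference, using each group's own sample variance.
    se = sqrt(variance(experimental) / n_e + variance(control) / n_c)
    return (mean(experimental) - mean(control)) / se


# Hypothetical end-of-course test scores, invented for illustration only.
experimental_scores = [78, 85, 90, 72, 88, 81]  # received the treatment
control_scores = [70, 75, 80, 68, 74, 71]       # received no treatment

t = welch_t(experimental_scores, control_scores)
print(f"mean difference: {mean(experimental_scores) - mean(control_scores):.2f}")
print(f"Welch's t: {t:.2f}")
```

A larger positive value of t indicates that the experimental group's mean is higher relative to the variability in the scores.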

- 45. Gathering Evaluation Data Qualitative data: consist of information that is more holistic than quantitative data.

- 46. Gathering Evaluation Data Purpose: to collect data and analyze them in such a way that patterns emerge, so that sense can be made of the results and the quality of the program can be evaluated.

- 47. Gathering Evaluation Data Using Both Quantitative and Qualitative Methods Both types of data can yield valuable information in any evaluation, and therefore ignoring either type of information would be pointless and self-defeating. Sound evaluation practices will be based on all available perspectives so that many types of information can be gathered to strengthen the evaluation process and ensure that the resulting decisions will be as informed, accurate, and useful as possible.

- 48. Program Components as Data Sources The purpose of gathering all this information is, of course, to determine the effectiveness of the program, so as to improve each of the components and the ways that they work together.

- 49. Program Components as Data Sources The overall purpose of evaluation is to determine the general effectiveness of the program, usually for purposes of improving it or defending its utility to outside administrators or agencies. Naturally, the curriculum components under discussion are needs analysis, objectives, testing, materials, teaching, and the evaluation itself.

- 50. Program Components as Data Sources A quantitative study demonstrating that students who received a language learning treatment significantly outperformed a control group who did not receive the treatment is really only showing that the treatment in question is better than nothing. - Lynch (1986)

- 51. Questions and Primary Data Sources Needs Analysis ◦ Question: Which of the needs that were originally identified turned out to be accurate (now that the program has more experience with students and their relationship to the program) in terms of what has been learned in testing, developing materials, teaching, and evaluation? ◦ Primary data sources: All original needs analysis documents Objectives ◦ Question: Which of the original objectives reflect real student needs in view of the changing perceptions of those needs and all of the other information gathered in testing, materials development, teaching, and evaluation? ◦ Primary data sources: Criterion-referenced tests (diagnostic)

- 52. Questions and Primary Data Sources Testing ◦ Question: To what degree are the students achieving the objectives of the courses? Were the norm-referenced and criterion-referenced tests valid? ◦ Primary data sources: Criterion-referenced tests (achievement), test evaluation procedures Materials ◦ Question: How effective are the materials (whether adopted, developed, or adapted) at meeting the needs of the students as expressed in the objectives? ◦ Primary data sources: Materials evaluation procedures Teaching ◦ Question: To what degree is instruction effective? ◦ Primary data sources: Classroom observations and student evaluations

- 53. Program Components as Data Sources More detailed questions: What were the original perceptions of the students’ needs? How accurate was this initial thinking (now that we have more experience with the students and their relationship to the program)? Which of the original needs, especially as reflected in the goals and objectives, are useful and which are not useful? What newly perceived needs must be addressed? How do these relate to those perceptions that were found to be accurate? How must the goals and objectives be adjusted accordingly?

- 54. Program Components as Data Sources Efficient? Evaluators could set up a study to investigate the degree to which the amount of time can be compressed to make the learning process more efficient.

- 55. Questions and Primary Data Sources Needs Analysis ◦ Question: Which of the original student needs turned out to be the most efficiently learned? Which were superfluous? ◦ Primary data sources: Original needs analysis documents and criterion-referenced tests (both diagnostic and achievement) Objectives ◦ Question: Which objectives turned out to be needed by the students, and which did they already know? ◦ Primary data sources: Criterion-referenced tests (diagnostic)

- 56. Questions and Primary Data Sources Testing ◦ Question: Were the norm-referenced and criterion-referenced tests efficient and reliable? ◦ Primary data sources: Test evaluation procedures Materials ◦ Question: How can material resources be reorganized for more efficient use by teachers and students? ◦ Primary data sources: Materials blueprint and scope-and-sequence charts Teaching ◦ Question: What types of support are provided to help teachers and students? ◦ Primary data sources: Orientation documentation and administrative support structure

- 57. Program Components as Data Sources Attitudes The third general area of concern in language program evaluation will usually center on the attitudes of the teachers, students, and administrators regarding the various components of the curriculum as they were implemented in the program.

- 58. Questions and Primary Data Sources Needs Analysis ◦ Question: What are the students’, teachers’, and administrators’ attitudes or feelings about the situational and language needs of the students? Before the program? After? ◦ Primary data sources: Needs analysis questionnaires and any resulting documents Objectives ◦ Question: What are the students’, teachers’, and administrators’ attitudes or feelings about the usefulness of the objectives as originally formulated? Before the program? After? ◦ Primary data sources: Evaluation interviews and questionnaires

- 59. Questions and Primary Data Sources Testing ◦ Question: What are the students’, teachers’, and administrators’ attitudes or feelings about the usefulness of the tests as originally developed? Before? After? ◦ Primary data sources: Evaluation interviews, meetings, and questionnaires Materials ◦ Question: What are the students’, teachers’, and administrators’ attitudes or feelings about the usefulness of the materials as originally adopted, developed, and/or adapted? Before? After? ◦ Primary data sources: Evaluation interviews, meetings, and questionnaires Teaching ◦ Question: What are the students’, teachers’, and administrators’ attitudes or feelings about the usefulness of the teaching as originally delivered? Before? After? ◦ Primary data sources: Evaluation interviews, meetings, and questionnaires