Tc basics


An introduction to basic tc concepts and commonly used parameters. Shows how the parameters are used internally by tbf.


qdisc handle

• The qdisc handle identifies a qdisc

- Syntax: {none|major[:]}
- none is TC_H_UNSPEC
- major is a 16-bit hexadecimal number (without the ‘0x’ prefix)
- The trailing : is optional

• Internally, qdisc_handle = major<<16

![qdisc handle](https://image.slidesharecdn.com/tcbasics-121014205427-phpapp02/85/Tc-basics-17-320.jpg)
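The handle grammar above can be sketched in a few lines of Python. This is a minimal illustration that follows the slide's grammar literally, not the tc source: the function name `parse_qdisc_handle` is hypothetical, and the sketch is deliberately stricter than a C `strtoul`-based parser might be (it rejects a `0x` prefix outright). `TC_H_UNSPEC` is 0 in `<linux/pkt_sched.h>`.

```python
import string

TC_H_UNSPEC = 0  # value of TC_H_UNSPEC in <linux/pkt_sched.h>


def parse_qdisc_handle(text: str) -> int:
    """Parse {none|major[:]} into a 32-bit qdisc handle (hypothetical helper)."""
    if text == "none":
        return TC_H_UNSPEC
    # The trailing ':' is optional, so drop it if present.
    major_text = text[:-1] if text.endswith(":") else text
    # Assumption of this sketch: accept bare hex digits only (no '0x', no sign).
    if not major_text or any(c not in string.hexdigits for c in major_text):
        raise ValueError(f"invalid qdisc handle: {text!r}")
    major = int(major_text, 16)
    if major > 0xFFFF:
        raise ValueError(f"major does not fit in 16 bits: {text!r}")
    # The major number occupies the upper 16 bits; the lower 16 bits stay zero.
    return major << 16


if __name__ == "__main__":
    for handle in ("none", "1:", "10", "ffff:"):
        print(handle, hex(parse_qdisc_handle(handle)))
```

Note that `10` is read as hexadecimal, so it yields major 0x10 (decimal 16), not decimal 10.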

classid

• The classid identifies a class

- Syntax: {none|root|[major]:minor}
- none is TC_H_UNSPEC, root is TC_H_ROOT
- major and minor are both 16-bit hexadecimal numbers (without the ‘0x’ prefix); major is optional

• Internally, classid = (major<<16)|minor

![classid](https://image.slidesharecdn.com/tcbasics-121014205427-phpapp02/85/Tc-basics-18-320.jpg)
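As a rough illustration of the classid layout, the sketch below packs and unpacks the two 16-bit halves. The helper names (`parse_classid`, `split_classid`, `_hex16`) are hypothetical, and the parser follows the slide's grammar strictly, so a minor number is required here; the real tc parser may be more lenient. `TC_H_UNSPEC` is 0 and `TC_H_ROOT` is 0xFFFFFFFF in `<linux/pkt_sched.h>`.

```python
import string

TC_H_UNSPEC = 0           # value from <linux/pkt_sched.h>
TC_H_ROOT = 0xFFFFFFFF    # value from <linux/pkt_sched.h>


def _hex16(text: str) -> int:
    """Parse a bare 16-bit hex number (hypothetical helper, no '0x' prefix)."""
    if not text or any(c not in string.hexdigits for c in text):
        raise ValueError(f"not a hex number: {text!r}")
    value = int(text, 16)
    if value > 0xFFFF:
        raise ValueError(f"does not fit in 16 bits: {text!r}")
    return value


def parse_classid(text: str) -> int:
    """Parse {none|root|[major]:minor} into a 32-bit classid."""
    if text == "none":
        return TC_H_UNSPEC
    if text == "root":
        return TC_H_ROOT
    major_text, colon, minor_text = text.partition(":")
    if not colon:
        raise ValueError(f"classid needs a ':' separator: {text!r}")
    major = _hex16(major_text) if major_text else 0  # major is optional
    minor = _hex16(minor_text)                       # assumption: minor is required
    return (major << 16) | minor


def split_classid(classid: int) -> tuple[int, int]:
    """Return (major, minor) from a packed 32-bit classid."""
    return classid >> 16, classid & 0xFFFF


if __name__ == "__main__":
    for cid in ("1:10", ":5", "root", "none"):
        print(cid, hex(parse_classid(cid)))
```

Comparing this with the qdisc handle, a qdisc handle `1:` and a classid `1:0` would pack to the same 32-bit value, which is why a class is addressed relative to the qdisc that owns its major number.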

tbf

• limit/latency

• buffer/burst/maxburst: capacity of the token bucket, given as size[/cell]

• mpu: minimum packet unit

• rate/peakrate

• Max(Rate/HZ, MTU)

![tbf](https://image.slidesharecdn.com/tcbasics-121014205427-phpapp02/85/Tc-basics-32-320.jpg)
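The slide does not say what Max(Rate/HZ, MTU) bounds. One plausible reading, assumed here, is that it gives a lower limit for the bucket size (buffer/burst): the bucket should hold at least the tokens that accumulate in one timer tick, and at least one full-size packet. The function name and the choice of bytes per second as the rate unit are assumptions of this sketch.

```python
def min_bucket_bytes(rate_bytes_per_sec: int, hz: int, mtu: int) -> int:
    """Smallest sensible bucket size under the assumed reading of the slide.

    rate_bytes_per_sec: configured rate, in bytes per second (assumed unit)
    hz:                 kernel timer frequency, in ticks per second
    mtu:                largest packet size, in bytes
    """
    # Tokens that arrive during one timer tick, rounded up to a whole byte.
    per_tick = -(-rate_bytes_per_sec // hz)
    # The bucket must also be able to hold one MTU-sized packet.
    return max(per_tick, mtu)


if __name__ == "__main__":
    # 1 Mbit/s = 125,000 bytes/s; at HZ=100 that is 1,250 bytes per tick,
    # so the MTU term dominates.
    print(min_bucket_bytes(125_000, 100, 1500))
    # 100 Mbit/s = 12,500,000 bytes/s; at HZ=100 the per-tick term dominates.
    print(min_bucket_bytes(12_500_000, 100, 1500))
```

At low rates the MTU term wins, while at high rates or low HZ the per-tick term does, which is consistent with the usual advice to raise the burst size as the rate goes up.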


Introduction to eBPF and XDP

Introduction to eBPF and XDPlcplcp1 This document provides an introduction to eBPF and XDP. It discusses the history of BPF and how it evolved into eBPF. Key aspects of eBPF covered include the instruction set, JIT compilation, verifier, helper functions, and maps. XDP is introduced as a way to program the data plane using eBPF programs attached early in the receive path. Example use cases and performance benchmarks for XDP are also mentioned.

eBPF Trace from Kernel to Userspace

eBPF Trace from Kernel to UserspaceSUSE Labs Taipei This document discusses eBPF (extended Berkeley Packet Filter), which allows tracing from the Linux kernel to userspace using BPF programs. It provides an overview of eBPF including extended registers, verification, maps, and probes. Examples are given of using eBPF for tracing functions like kfree_skb() and the C library function malloc. The Berkeley Compiler Collection (BCC) makes it easy to write eBPF programs in C and Python.

DPDK & Layer 4 Packet Processing

DPDK & Layer 4 Packet ProcessingMichelle Holley Learn about Transport Layer Development Kit and let us accelerate beyond the Layer 2/Layer 3.

Author: Muthurajan (M Jay) Jayakumar

Fun with Network Interfaces

Fun with Network InterfacesKernel TLV Agenda:

In this session, Shmulik Ladkani discusses the kernel's net_device abstraction, its interfaces, and how net-devices interact with the network stack. The talk covers many of the software network devices that exist in the Linux kernel, the functionalities they provide and some interesting use cases.

Speaker:

Shmulik Ladkani is a Tech Lead at Ravello Systems.

Shmulik started his career at Jungo (acquired by NDS/Cisco) implementing residential gateway software, focusing on embedded Linux, Linux kernel, networking and hardware/software integration.

51966 coffees and billions of forwarded packets later, with millions of homes running his software, Shmulik left his position as Jungo’s lead architect and joined Ravello Systems (acquired by Oracle) as tech lead, developing a virtual data center as a cloud service. He's now focused around virtualization systems, network virtualization and SDN.

BPF - in-kernel virtual machine

BPF - in-kernel virtual machineAlexei Starovoitov BPF of Berkeley Packet Filter mechanism was first introduced in linux in 1997 in version 2.1.75. It has seen a number of extensions of the years. Recently in versions 3.15 - 3.19 it received a major overhaul which drastically expanded it's applicability. This talk will cover how the instruction set looks today and why. It's architecture, capabilities, interface, just-in-time compilers. We will also talk about how it's being used in different areas of the kernel like tracing and networking and future plans.

Tutorial: Using GoBGP as an IXP connecting router

Tutorial: Using GoBGP as an IXP connecting routerShu Sugimoto - Show you how GoBGP can be used as a software router in conjunction with quagga

- (Tutorial) Walk through the setup of IXP connecting router using GoBGP

Linux kernel tracing

Linux kernel tracingViller Hsiao This document discusses tracing in the Linux kernel. It describes various tracing mechanisms like ftrace, tracepoints, kprobes, perf, and eBPF. Ftrace allows tracing functions via compiler instrumentation or dynamically. Tracepoints define custom trace events that can be inserted at specific points. Kprobes and related probes like jprobes allow tracing kernel functions. Perf provides performance monitoring capabilities. eBPF enables custom tracing programs to be run efficiently in the kernel via just-in-time compilation. Tracing tools like perf, systemtap, and LTTng provide user interfaces.

Tc basics

- 1. Dive into TC – TC basics Jeromy Fu

- 2. Agenda • What’s TC • How it works • Basic concepts • Command and parameters

- 3. What’s TC • TC is short for Traffic Control - Rate control - Bandwidth management - Active Queue Management (AQM) - Network emulation: packet loss, packet reordering, packet duplication, packet delay - QoS (diffserv + rsvp) - Many more …

- 4. What’s tc • User-level utilities: iproute2 (iproute2/tc) • Kernel side: tc kernel code (linux/net/sched)

- 5. How it works

- 6. How it works

- 7. Basic concepts- Qdisc • Qdisc (Queueing discipline) - Decides which packets to send first, which to delay, and which to drop - classful/classless Qdisc: Qdisc with/without configurable internal subdivision • Naming convention: - Kernel: sch_*.c (sch_netem.c, sch_tbf.c) - iproute2: q_*.c (q_netem.c, q_tbf.c)

- 8. Qdisc list • Class Based Queueing (CBQ) • Hierarchical Token Bucket (HTB) • Hierarchical Fair Service Curve (HFSC) • ATM Virtual Circuits (ATM) • Multi Band Priority Queueing (PRIO) • Hardware Multiqueue-aware Multi Band Queuing (MULTIQ) • Random Early Detection (RED) • Stochastic Fairness Queueing (SFQ) • True Link Equalizer (TEQL) • Token Bucket Filter (TBF) • Generic Random Early Detection (GRED) • Differentiated Services marker (DSMARK) • Network emulator (NETEM) • Deficit Round Robin scheduler (DRR) • Ingress Qdisc

- 9. Basic concepts- Classification • Classification(Filter) - Used to distinguish among different classes of packets and process each class in a specific way. • Naming convention: - Kernel: cls_*.c (cls_u32.c, cls_rsvp.c ) - iproute2: f_*.c (f_u32.c, f_rsvp.c)

- 10. Classification list • Elementary classification (BASIC) • Traffic-Control Index (TCINDEX) • Routing decision (ROUTE) • Netfilter mark (FW) • Universal 32bit comparisons w/ hashing (U32) • IPv4 Resource Reservation Protocol (RSVP) • IPv6 Resource Reservation Protocol (RSVP6) • Flow classifier • Control Group Classifier • Extended Matches • Metadata • Incoming device classification

- 11. Basic concepts- Action • Action: actions get attached to classifiers and are invoked after a successful classification. They are used to overwrite the classification result, instantly drop or redirect packets, etc. Works on ingress only. • Naming convention: - Kernel: act_*.c (act_police.c, act_skbedit.c) - iproute2: m_*.c (m_police.c, m_pedit.c)

- 12. Action list • Traffic Policing • Generic actions • Probability support • Redirecting and Mirroring • IPtables targets • Stateless NAT • Packet Editing • SKB Editing

- 13. Basic concepts- Class • Classes either contain other Classes, or a Qdisc is attached • Qdiscs and Classes are intimately tied together

- 14. TC Commands • OPTIONS: options that apply to all subcommands • OBJECTS: the object the tc command operates on • COMMAND: the subcommand for each object

- 15. TC Commands

- 16. TC Qdisc • Operations on qdisc: add | del | replace | change | show • Handle: the qdisc handle, used to identify the qdisc • root | ingress | parent CLASSID: specifies the parent node

- 17. qdisc handle • The qdisc handle is used to identify a Qdisc - {none|major[:]} - none is TC_H_UNSPEC - major is a 16-bit hex number (without the ‘0x’ prefix) - the trailing ‘:’ is optional • Internally, qdisc_handle = major<<16
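The handle encoding on slide 17 can be sketched in a few lines of Python. This is an illustration only, not the iproute2 parser: the function name `parse_qdisc_handle` is hypothetical, and the value 0 for `TC_H_UNSPEC` is taken from the kernel headers rather than from the slides.

```python
TC_H_UNSPEC = 0  # assumption: matches TC_H_UNSPEC in the kernel headers


def parse_qdisc_handle(text: str) -> int:
    """Turn 'none', 'major' or 'major:' into a 32-bit qdisc handle (hypothetical helper)."""
    if text == "none":
        return TC_H_UNSPEC
    # The trailing ':' is optional, so strip it if present.
    major_text = text[:-1] if text.endswith(":") else text
    major = int(major_text, 16)  # major is written in hex without '0x'
    if not 0 <= major <= 0xFFFF:
        raise ValueError(f"major out of 16-bit range: {text!r}")
    # The major number occupies the upper 16 bits of the handle.
    return major << 16


print(hex(parse_qdisc_handle("1:")))   # 0x10000
print(hex(parse_qdisc_handle("10")))   # 0x100000
```

Note that `10` is read as hex 0x10, so the handle `10:` is major 16 in decimal.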

- 18. classid • The classid is used to identify a Class - {none|root|[major]:minor} - none is TC_H_UNSPEC, root is TC_H_ROOT - major and minor are both 16-bit hex numbers (without the ‘0x’ prefix); major is optional • Internally, classid = (major<<16)|minor
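A similar sketch for the classid encoding on slide 18. The helper names `parse_classid` and `split_classid` are hypothetical, the constant values are assumed from the kernel headers, and the sketch follows the slide's grammar strictly (minor is required), which may be stricter than the real tc parser.

```python
TC_H_UNSPEC = 0           # assumption: value from the kernel headers
TC_H_ROOT = 0xFFFFFFFF    # assumption: value from the kernel headers


def parse_classid(text: str) -> int:
    """Turn 'none', 'root' or '[major]:minor' into a 32-bit classid (hypothetical helper)."""
    if text == "none":
        return TC_H_UNSPEC
    if text == "root":
        return TC_H_ROOT
    major_text, sep, minor_text = text.partition(":")
    if not sep:
        raise ValueError(f"classid needs a ':' separator: {text!r}")
    major = int(major_text, 16) if major_text else 0  # major is optional
    minor = int(minor_text, 16)                       # minor is required here
    if not (0 <= major <= 0xFFFF and 0 <= minor <= 0xFFFF):
        raise ValueError(f"major/minor out of 16-bit range: {text!r}")
    return (major << 16) | minor


def split_classid(classid: int) -> tuple[int, int]:
    """Recover (major, minor) from a 32-bit classid."""
    return classid >> 16, classid & 0xFFFF


print(hex(parse_classid("1:10")))   # 0x10010
print(split_classid(0x10010))       # (1, 16)
```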

- 19. stab and rtab • stab is the size table, rtab is the rate table. • They’re used to speed up the calculation of the transmit time of packets. • The packet size is aligned to a predefined size in the stab slot. • Then the rtab is used to give the precalculated time of the aligned packet size.

- 20. Linklayer • Link layer affects packet size, if linklayer is set, both mpu and overhead must also be set

- 21. Linklayer

- 22. stab internal • szopts: stab-related specifications; some are given on the command line, some are calculated • data: the size table.

- 23. stab internal - user space • Distribute the MTU size (*2) evenly across the size table, which has tsize slots

- 24. stab internal - kernel

- 25. rtab internal - user space • The rtab size is fixed at 256

- 26. rtab internal - kernel
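The rate-table idea from slides 19 and 25 can be sketched as follows: user space precomputes a transmit time for each of the 256 size slots, and the lookup side only shifts the packet length to find its slot. This is a simplified illustration, not the iproute2 or kernel code. Times are in microseconds rather than kernel ticks, link-layer overhead is ignored, oversized packets are simply clamped to the last slot, and the `packet_len - 1` alignment is an assumption. All function names are hypothetical.

```python
RTAB_SLOTS = 256  # the slides state the rtab always has 256 entries


def choose_cell_log(mtu: int) -> int:
    """Smallest shift such that an MTU-sized packet still maps into 256 slots."""
    cell_log = 0
    while (mtu >> cell_log) > RTAB_SLOTS - 1:
        cell_log += 1
    return cell_log


def build_rtab(rate_bytes_per_sec: int, mtu: int, mpu: int = 0):
    """Precompute the transmit time (microseconds) for each aligned packet size."""
    cell_log = choose_cell_log(mtu)
    table = []
    for slot in range(RTAB_SLOTS):
        size = max((slot + 1) << cell_log, mpu)  # aligned size, never below mpu
        table.append(size * 1_000_000 / rate_bytes_per_sec)
    return cell_log, table


def lookup_xmit_time(table, cell_log: int, packet_len: int) -> float:
    """Lookup side: one shift and one array access per packet."""
    slot = min(max(packet_len - 1, 0) >> cell_log, RTAB_SLOTS - 1)
    return table[slot]


cell_log, table = build_rtab(rate_bytes_per_sec=125_000, mtu=1500)  # 1 Mbit/s
print(cell_log)                                  # 3, i.e. 8-byte cells
print(lookup_xmit_time(table, cell_log, 1000))   # 8000.0
print(lookup_xmit_time(table, cell_log, 1001))   # 8064.0 (rounded up to 1008 bytes)
```

The trade-off is precision for speed: every packet is charged for the next multiple of the cell size, so the per-packet work avoids a division.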

- 30. TC Class • tc class applies only to classful qdiscs, such as htb and cbq

- 32. tbf • limit/latency • buffer/burst/maxburst, capacity of the token bucket: size[/cell] • mpu: minimum packet unit • rate/peakrate • Max(Rate/HZ,MTU)

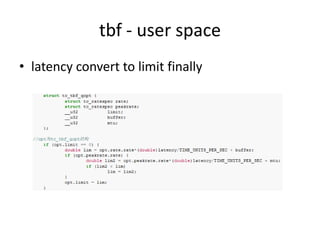

- 33. tbf - user space • latency is ultimately converted to limit
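A rough sketch of how a latency value can be turned into a byte limit: the queue may hold whatever drains in `latency` at the configured rate, plus one full bucket. This mirrors my understanding of the calculation in iproute2's q_tbf.c but is not copied from it. The function name and the choice of microseconds and bytes per second as units are assumptions for illustration.

```python
def tbf_limit_from_latency(rate: int, burst: int, latency_us: int,
                           peakrate: int = 0, mtu: int = 0) -> int:
    """Queue limit in bytes for a given latency (hypothetical helper).

    rate and peakrate are in bytes per second; burst and mtu are in bytes.
    """
    # Bytes that drain during 'latency' at the sustained rate, plus the bucket.
    limit = rate * latency_us // 1_000_000 + burst
    if peakrate:
        # With a peak rate, the second (mtu-sized) bucket may be the tighter bound.
        peak_limit = peakrate * latency_us // 1_000_000 + mtu
        limit = min(limit, peak_limit)
    return limit


# 1 Mbit/s (125000 B/s), 10000-byte burst, 50 ms latency
print(tbf_limit_from_latency(125_000, 10_000, 50_000))                                # 16250
print(tbf_limit_from_latency(125_000, 10_000, 50_000, peakrate=250_000, mtu=1500))    # 14000
```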

- 34. tbf - user space • rtab initialization

- 35. tbf - kernel

- 36. tbf - kernel

- 37. Tbf dequeue
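The transcript keeps only the title of the dequeue slide, so the following is a generic single-rate token bucket written to illustrate the rate and burst parameters from slide 32. It is not a transcription of sch_tbf.c: tokens are counted in bytes, time is passed in explicitly as microseconds, peakrate is ignored, and the class and method names are hypothetical.

```python
class TokenBucket:
    """Minimal single-rate token bucket (illustrative, not the kernel algorithm)."""

    def __init__(self, rate: int, burst: int):
        self.rate = rate      # refill rate in bytes per second
        self.burst = burst    # bucket capacity in bytes
        self.tokens = burst   # start with a full bucket
        self.last_us = 0      # time of the last refill, in microseconds

    def try_dequeue(self, packet_len: int, now_us: int) -> bool:
        """Return True if a packet of packet_len bytes may be sent at now_us."""
        # Refill for the elapsed time, never exceeding the bucket capacity.
        elapsed_us = now_us - self.last_us
        self.tokens = min(self.burst,
                          self.tokens + self.rate * elapsed_us // 1_000_000)
        self.last_us = now_us
        if self.tokens >= packet_len:
            self.tokens -= packet_len  # spend tokens and release the packet
            return True
        return False                   # not enough tokens: packet must wait


bucket = TokenBucket(rate=1000, burst=1500)
print(bucket.try_dequeue(1500, now_us=0))          # True: the bucket starts full
print(bucket.try_dequeue(1500, now_us=0))          # False: the bucket is empty
print(bucket.try_dequeue(1500, now_us=1_000_000))  # False: only 1000 tokens so far
print(bucket.try_dequeue(1500, now_us=1_500_000))  # True: refilled to 1500
```

A real shaper would schedule a timer for the moment enough tokens are available instead of polling.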