
Docker - A high level introduction to dockers and containers

- 1. Dr Ganesh Neelakanta Iyer July 2015

- 2. Disclaimer: I do not have any working experience with Docker or containers. These slides are based on my reading over the last two weeks. Consider this a mutual sharing session. It may give you a basic understanding of Docker and containers.

- 3. © 2015 Progress Software Corporation. All rights reserved. Cargo Transport Pre-1960
  - Multiplicity of goods
  - Multiplicity of methods for transporting/storing
  - Do I worry about how goods interact (e.g. coffee beans next to spices)?
  - Can I transport quickly and smoothly (e.g. from boat to train to truck)?

- 4. Also an NxN Matrix [figure: a grid of goods against transport methods, with a "?" in every cell]

- 5. Solution: Intermodal Shipping Container
  - Multiplicity of goods
  - Multiplicity of methods for transporting/storing
  - Do I worry about how goods interact (e.g. coffee beans next to spices)?
  - Can I transport quickly and smoothly (e.g. from boat to train to truck)?
  - A standard container that is loaded with virtually any goods, and stays sealed until it reaches final delivery…
  - …in between, can be loaded and unloaded, stacked, transported efficiently over long distances, and transferred from one mode of transport to another

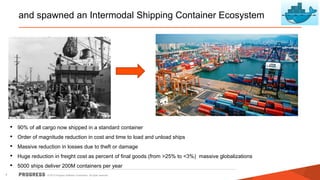

- 6. This eliminated the NXN problem…

- 7. …and spawned an Intermodal Shipping Container Ecosystem
  - 90% of all cargo now shipped in a standard container
  - Order of magnitude reduction in cost and time to load and unload ships
  - Massive reduction in losses due to theft or damage
  - Huge reduction in freight cost as percent of final goods (from >25% to <3%), leading to massive globalization
  - 5000 ships deliver 200M containers per year

- 8. What is Docker?
  - Docker is an open-source project that automates the deployment of applications inside software containers, by providing an additional layer of abstraction and automation of operating-system-level virtualization on Linux. [Source: en.wikipedia.org]
  - Docker allows you to package an application with all of its dependencies into a standardized unit for software development. [www.docker.com]
  - Image: http://altinvesthq.com/news/wp-content/uploads/2015/06/container-ship.jpg

- 9. The Challenge
  - Multiplicity of stacks:
    - Static website: nginx 1.5 + modsecurity + openssl + bootstrap 2
    - User DB: postgresql + pgv8 + v8
    - Analytics DB: hadoop + hive + thrift + OpenJDK
    - Web frontend: Ruby + Rails + sass + Unicorn
    - Queue: Redis + redis-sentinel
    - Background workers: Python 3.0 + celery + pyredis + libcurl + ffmpeg + libopencv + nodejs + phantomjs
    - API endpoint: Python 2.7 + Flask + pyredis + celery + psycopg + postgresql-client
  - Multiplicity of hardware environments: Development VM, QA server, Public Cloud, Disaster recovery, Contributor’s laptop, Production Servers, Production Cluster, Customer Data Center
  - Do services and apps interact appropriately?
  - Can I migrate smoothly and quickly?

- 10. Results in N X N compatibility nightmare
  - Stacks: Static website, Web frontend, Background workers, User DB, Analytics DB, Queue
  - Environments: Development VM, QA Server, Single Prod Server, Onsite Cluster, Public Cloud, Contributor’s laptop, Customer Servers
  - [figure: a matrix of stacks against environments, with a "?" in every cell]

- 11. Docker is a shipping container system for code
  - Multiplicity of stacks: Static website, Web frontend, User DB, Queue, Analytics DB
  - Multiplicity of hardware environments: Development VM, QA server, Public Cloud, Contributor’s laptop, Production Cluster, Customer Data Center
  - Do services and apps interact appropriately?
  - Can I migrate smoothly and quickly?
  - An engine that enables any payload to be encapsulated as a lightweight, portable, self-sufficient container…
  - …that can be manipulated using standard operations and run consistently on virtually any hardware platform

- 12. Or…put more simply
  - Multiplicity of stacks: Static website, Web frontend, User DB, Queue, Analytics DB
  - Multiplicity of hardware environments: Development VM, QA server, Public Cloud, Contributor’s laptop, Production Cluster, Customer Data Center
  - Do services and apps interact appropriately?
  - Can I migrate smoothly and quickly?
  - Developer: Build Once, Run Anywhere (Finally)
  - Operator: Configure Once, Run Anything

- 13. Docker solves the NXN problem
  - Stacks: Static website, Web frontend, Background workers, User DB, Analytics DB, Queue
  - Environments: Development VM, QA Server, Single Prod Server, Onsite Cluster, Public Cloud, Contributor’s laptop, Customer Servers
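
One rough way to see why the matrix collapses: without a standard unit, each stack has to be checked against each environment, so the effort grows with the product of the two lists. With a container as the common interface, each stack is packaged once and each environment is prepared once. The sketch below only counts the two cases, using the labels from the matrix slides. The "one unit of work per pairing" model is an illustrative assumption, not a figure from the slides.

```python
# Illustrative count only: the labels come from the matrix slides,
# the "one unit of work per pairing" model is an assumption.
stacks = [
    "Static website", "Web frontend", "Background workers",
    "User DB", "Analytics DB", "Queue",
]
environments = [
    "Development VM", "QA Server", "Single Prod Server", "Onsite Cluster",
    "Public Cloud", "Contributor's laptop", "Customer Servers",
]


def pairings_without_containers(stacks, environments):
    """Every stack has to be validated against every environment."""
    return len(stacks) * len(environments)


def work_with_containers(stacks, environments):
    """Each stack is packaged once; each environment is set up once."""
    return len(stacks) + len(environments)


print(pairings_without_containers(stacks, environments))  # 42
print(work_with_containers(stacks, environments))         # 13
```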

- 14. Docker containers
  - Wrap up a piece of software in a complete file system that contains everything it needs to run:
    - Code, runtime, system tools, system libraries
    - Anything you can install on a server
  - This guarantees that it will always run the same, regardless of the environment it is running in

- 15. Why containers matter

  | | Physical Containers | Docker |
  |---|---|---|
  | Content Agnostic | The same container can hold almost any type of cargo | Can encapsulate any payload and its dependencies |
  | Hardware Agnostic | Standard shape and interface allow same container to move from ship to train to semi-truck to warehouse to crane without being modified or opened | Using operating system primitives (e.g. LXC) can run consistently on virtually any hardware (VMs, bare metal, OpenStack, public IaaS, etc.) without modification |
  | Content Isolation and Interaction | No worry about anvils crushing bananas. Containers can be stacked and shipped together | Resource, network, and content isolation. Avoids dependency hell |
  | Automation | Standard interfaces make it easy to automate loading, unloading, moving, etc. | Standard operations to run, start, stop, commit, search, etc. Perfect for devops: CI, CD, autoscaling, hybrid clouds |
  | Highly efficient | No opening or modification, quick to move between waypoints | Lightweight, virtually no perf or start-up penalty, quick to move and manipulate |
  | Separation of duties | Shipper worries about inside of box, carrier worries about outside of box | Developer worries about code. Ops worries about infrastructure. |

- 16. Docker containers
  - Lightweight
    - Containers running on one machine all share the same OS kernel
    - They start instantly and make more efficient use of RAM
    - Images are constructed from layered file systems
    - They can share common files, making disk usage and image downloads much more efficient
  - Open
    - Based on open standards
    - Allowing containers to run on all major Linux distributions and Microsoft operating systems with support for every infrastructure
  - Secure
    - Containers isolate applications from each other and the underlying infrastructure while providing an added layer of protection for the application

- 17. Docker / Containers vs. Virtual Machine (https://www.docker.com/whatisdocker/)
  - Containers have similar resource isolation and allocation benefits as VMs, but a different architectural approach allows them to be much more portable and efficient

- 18. Docker / Containers vs. Virtual Machine (https://www.docker.com/whatisdocker/)
  - Virtual machines: each virtual machine includes the application, the necessary binaries and libraries, and an entire guest operating system, all of which may be tens of GBs in size
  - Containers: each container includes the application and all of its dependencies, but shares the kernel with other containers
  - Containers run as isolated processes in userspace on the host operating system
  - Docker containers run on any computer, on any infrastructure and in any cloud

- 19. Why are Docker containers lightweight?
  - VMs: every app, every copy of an app, and every slight modification of the app requires a new virtual server
    - Original app: App A + Bins/Libs + Guest OS
    - Copy of app: App A + Bins/Libs + Guest OS
    - Modified app: App A’ + Bins/Libs + Guest OS
  - Containers
    - Original App (App A + Bins/Libs): no OS to take up space, resources, or require restart
    - Copy of App: no OS. Can share bins/libs
    - Modified App: union file system allows us to only save the diffs between container A and container A’
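
The "only save the diffs" idea can be illustrated with a toy model in which each layer is a dictionary of file paths and lookups fall through from the top layer to the shared base. This is a sketch of the concept only: the file names and contents are made up, and it is not how Docker's storage drivers are actually implemented.

```python
from collections import ChainMap

# Toy model of layered images: each layer maps path -> content.
# File names and contents are hypothetical.
base_layer = {
    "/bin/sh": "shell v1",
    "/lib/libc.so": "libc v1",
    "/app/server.py": "app A",
}

# Container A' changes one file; only that file lives in the new layer.
diff_layer = {"/app/server.py": "app A'"}

# The first mapping plays the role of the container's own writable layer.
# Lookups hit the top layer first, then fall through to the layers below.
container_a = ChainMap({}, base_layer)
container_a_prime = ChainMap({}, diff_layer, base_layer)

print(container_a["/app/server.py"])        # app A
print(container_a_prime["/app/server.py"])  # app A'
print(container_a_prime["/lib/libc.so"])    # libc v1 (shared with A)

# Writes land in the container's own top layer, never in the shared base.
container_a["/tmp/scratch"] = "temporary data"
print("/tmp/scratch" in base_layer)         # False
```

In this model, A’ costs one stored file rather than a second full copy of the three base files, which is the saving the slide attributes to the union file system.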

- 20. What are the basics of the Docker system?
  - Host 1 OS (Linux): the Docker Engine takes the Dockerfile for A from the source code repository and builds Container A (Build), then pushes it to the registry (Push)
  - Docker Container Image Registry: holds the images that hosts can Search and Pull
  - Host 2 OS 2 (Linux): the Docker Engine pulls images and runs Container A, Container B, Container C (Run)

- 21. Changes and Updates
  - Base Container Image: App A + Bins/Libs
  - Container Mod A’ and Container Mod A’’: stored as diffs (App Δ, Bins Δ) on top of the base image
  - A Docker Engine pushes the modified image to the Docker Container Image Registry (Push)
  - Host running A wants to upgrade to A’’. Requests update. Gets only diffs (Update)
  - Host is now running A’’

- 22. How does this help you build better software?
  - Accelerate Developer Onboarding
    - Stop wasting hours trying to set up developer environments
    - Spin up new instances and make copies of production code to run locally
    - With Docker, you can easily take copies of your live environment and run on any new endpoint running Docker
  - Empower Developer Creativity
    - The isolation capabilities of Docker containers free developers from the worries of using “approved” language stacks and tooling
    - Developers can use the best language and tools for their application service without worrying about causing conflict issues
  - Eliminate Environment Inconsistencies
    - By packaging up the application with its configs and dependencies together and shipping as a container, the application will always work as designed locally, on another machine, in test or production
    - No more worries about having to install the same configs into a different environment

- 23. Easily Share and Collaborate on Applications
  - Distribute and share content
    - Store, distribute and manage your Docker images in your Docker Hub with your team
    - Image updates, changes and history are automatically shared across your organization
  - Simply share your application with others
    - Ship your containers to others without worrying about different environment dependencies creating issues with your application
    - Other teams can easily link to or test against your app without having to learn or worry about how it works
  - Docker creates a common framework for developers and sysadmins to work together on distributed applications

- 24. Get Started with Docker (http://docs.docker.com/windows/started/)
  - Install Docker
  - Run a software image in a container
  - Browse for an image on Docker Hub
  - Create your own image and run it in a container
  - Create a Docker Hub account and an image repository
  - Create an image of your own
  - Push your image to Docker Hub for others to use

- 25. Docker Container Lifecycle: The Life of a Container
  - Conception: BUILD an image from a Dockerfile
  - Birth: RUN (create + start) a container
  - Reproduction: COMMIT (persist) a container to a new image; RUN a new container from an image
  - Sleep: KILL a running container
  - Wake: START a stopped container
  - Death: RM (delete) a stopped container
  - Extinction: RMI a container image (delete image)
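
The container-level part of this lifecycle can be read as a small state machine. The sketch below encodes only the transitions named on the slide (run, kill, start, rm). The state names are labels chosen here for illustration, and the image-level steps (build, commit, rmi) are left out.

```python
# Toy state machine for the lifecycle on the slide. The command names match
# the slide; the state names ("image", "running", ...) are labels chosen here.
TRANSITIONS = {
    ("image", "run"): "running",      # Birth: create + start a container
    ("running", "kill"): "stopped",   # Sleep
    ("stopped", "start"): "running",  # Wake
    ("stopped", "rm"): "deleted",     # Death
}


def step(state, command):
    """Return the next state, or raise if the command is not valid here."""
    try:
        return TRANSITIONS[(state, command)]
    except KeyError:
        raise ValueError(f"cannot '{command}' a container in state '{state}'") from None


def replay(commands, state="image"):
    """Apply a sequence of commands, starting from an image."""
    for command in commands:
        state = step(state, command)
    return state


print(replay(["run", "kill", "start", "kill", "rm"]))  # deleted
```

Following the slide, `rm` is only allowed from the stopped state, so `replay(["run", "rm"])` raises a `ValueError` in this model.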

- 26. What a command looks like

- 27. Docker Compose
  - Compose is a tool for defining and running multi-container applications with Docker
  - With Compose, you define a multi-container application in a single file, then spin your application up in a single command which does everything that needs to be done to get it running
  - Compose is great for development environments, staging servers, and CI. We don’t recommend that you use it in production yet
  - Define your app’s environment with a Dockerfile so it can be reproduced anywhere
  - Define the services that make up your app in docker-compose.yml so they can be run together in an isolated environment
  - Lastly, run docker-compose up and Compose will start and run your entire app
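
The slide does not show a docker-compose.yml, so here is a minimal sketch of what one might contain, generated from a Python dictionary. The two services (`web`, `redis`), the image name, and the port are hypothetical placeholders. The keys `build`, `image`, `ports`, and `links` belong to the original Compose file format that was current in 2015, where services sit at the top level of the file.

```python
# Hypothetical two-service app: service names, image, and port are
# placeholders. Keys follow the original (2015) Compose file format.
services = {
    "web": {"build": ".", "ports": ["5000:5000"], "links": ["redis"]},
    "redis": {"image": "redis"},
}


def render_compose(services):
    """Render a dict of services as docker-compose.yml text."""
    lines = []
    for name, options in services.items():
        lines.append(f"{name}:")
        for key, value in options.items():
            if isinstance(value, list):
                # Lists become quoted YAML sequence items.
                lines.append(f"  {key}:")
                lines.extend(f'    - "{item}"' for item in value)
            else:
                lines.append(f"  {key}: {value}")
    return "\n".join(lines) + "\n"


print(render_compose(services))
```

Saving the printed text as docker-compose.yml next to a Dockerfile and running `docker-compose up` corresponds to the last step on the slide. Later Compose file versions nest the same entries under a top-level `services:` key.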

- 28. Video: https://www.youtube.com/watch?v=GVVtR_hrdKI

- 29. Health and fitness Mobile App

- 30. A familiar story
  - You’ve been tasked with creating the REST API for a mobile app for tracking health and fitness
  - You code the first endpoint using the development environment on your laptop
  - After running all the unit tests and seeing that they passed, you check your code into the Git repository and let the QA engineer know that the build is ready for testing
  - The QA engineer dutifully deploys the most recent build to the test environment, and within the first few minutes of testing discovers that your recently developed REST endpoint is broken
  - After spending a few hours troubleshooting alongside the QA engineer, you discover that the test environment is using an outdated version of a third-party library, and this is what is causing your REST endpoints to break
  - Slight differences between development, test, stage, and production environments can wreak mayhem on an application
  - Source: https://medium.com/aws-activate-startup-blog/a-better-dev-test-experience-docker-and-aws-291da5ab1238

- 31. Solution
  - Traditional approaches for dealing with this problem, such as change management processes, are too cumbersome for today’s rapid build and deploy cycles
  - What is needed instead is a way to transfer an environment seamlessly from development to test, eliminating the need for manual and error-prone resource provisioning and configuration
  - Solution: Containers (Docker)
  - You can create Docker images from a running container, similar to the way we create an AMI from an EC2 instance
  - For example, one could launch a container, install a bunch of software packages using a package manager like APT or yum, and then commit those changes to a new Docker image
  - Source: https://medium.com/aws-activate-startup-blog/a-better-dev-test-experience-docker-and-aws-291da5ab1238

- 32. Back to story
  - First, define a Docker image for launching a container for running the REST endpoint
  - We can use this image to test our code on a laptop, and the QA engineer can use it to test the code in EC2
  - The REST endpoints are going to be developed using Ruby and the Sinatra framework, so these will need to be installed in the image
  - The back end will use Amazon DynamoDB
  - To ensure that the application can be run from both inside and outside AWS, the Docker image will include the DynamoDB local database
  - Source: https://medium.com/aws-activate-startup-blog/a-better-dev-test-experience-docker-and-aws-291da5ab1238

- 33. Selenium Grid and Docker

- 34. Selenium Grid and Docker
  - Selenium Grid is a great way to speed up your tests by running them in parallel on multiple machines
  - However, rolling your own grid also means maintaining it
  - Setting up the right browser / OS combinations across many virtual machines (or, even worse, physical machines) and making sure each is running the Selenium Server correctly is a huge pain
  - Not to mention troubleshooting when something goes wrong on an individual node
  - God help you when it comes time to perform any updates
  - Thinking about this problem, it’s clear we need a central point from where we can configure and update our hub / nodes
  - It would also be great if we had a way to quickly recover in case an individual node crashes or otherwise ends up in a bad state
  - Selenium Grid is a distributed system of nodes for running tests. Instead of running your grid across multiple machines or VMs, you can run them all on a single large machine using Docker

- 35. Selenium Grid and Docker
  - Docker Compose lets us take our Docker images and spin them up as a pre-configured Selenium Grid cluster
  - Selenium Grid handles the routing of your tests to the proper place, Docker lets you configure your browser / OS combinations in a programmatic way, and Compose is the central point from which you can spin everything up on the fly
  - A huge benefit of using Docker is its capacity to scale
    - Running Selenium Grid on separate machines or even a set of VMs requires a lot of unnecessary computing overhead
    - Docker containers run as userspace processes on a shared OS, so they share some system resources but are still isolated and require far fewer resources to run than a VM
    - This means you can cram more nodes into a single instance

- 36. How to Run Your Selenium Grid with Docker
  - Create Docker images for your Selenium Grid hub and node(s)
  - Add Java to the hub to run the Selenium server jar
  - Add Java, plus Firefox and Xvfb, to the node
  - Create a docker-compose.yml file to define how the images will interact with each other
  - Start docker-compose and scale out to as many nodes as you need, or as many as your machine can handle
  - Sources: http://www.conductor.com/nightlight/running-selenium-grid-using-docker-compose/ and https://sandro-keil.de/blog/2015/03/23/selenium-grid-in-minutes-with-docker/

- 37. How PLOS uses Docker for Testing

- 38. PLOS and Docker
  - PLOS is a library of open access journals and other scientific literature under an open content license
  - As PLOS grew in terms of users, articles and developers, they decided to split their original applications into web services
  - They created test environments that mimic production using Docker containers
    - Docker makes it quick and easy to narrow the gap between production and development

- 39. PLOS uses Docker for an amazing test experience
  - Black Box Testing
    - To run the API tests, they point them at port 8080, so they are testing the Dockerized API instead of a service running directly on the host
    - A few small scripts orchestrate bringing those containers up and down as needed between various tests
  - Scalability Testing
    - Use Compose to bring up any number of instances of the services in your stack
    - docker-compose scale web=10 database=3
    - PLOS have seen hundreds of database containers run on a single laptop with no problem
  - Configuration Testing
    - Easy to add very invasive automated tests for testing configurations
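
In a test script, the scale step is often driven from code rather than typed by hand. The sketch below builds the argument list for the `docker-compose scale` command quoted on the slide. The helper name `scale_command` is made up for this example, and actually running the command assumes Docker Compose is installed and a compose file defines the named services.

```python
import shlex


def scale_command(counts):
    """Build the argument list for `docker-compose scale` from service counts."""
    for service, n in counts.items():
        if not isinstance(n, int) or n < 0:
            raise ValueError(f"invalid instance count for {service}: {n!r}")
    return ["docker-compose", "scale"] + [f"{s}={n}" for s, n in counts.items()]


args = scale_command({"web": 10, "database": 3})
print(shlex.join(args))  # docker-compose scale web=10 database=3

# To actually run it (requires Docker Compose and a compose file):
#   import subprocess
#   subprocess.run(args, check=True)
```

Newer Compose releases express the same idea as `docker-compose up --scale web=10`, so the exact command may need adjusting for the version in use.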

- 40. More for QA

- 41. Security Testing using Docker
  - ZAP is an intercepting proxy
  - Download a Docker image from https://code.google.com/p/zaproxy/wiki/Docker
  - Run ZAP inside a container, acting as a proxy for testing
  - You can configure your browser to connect through the ZAP proxy on 127.0.0.1:8080 and start to play with HTTP and HTTPS connections
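
The same proxy setting can be applied to scripted requests instead of a browser. The sketch below, using only the Python standard library, builds an opener that routes traffic through the address given on the slide. It assumes ZAP is already listening on 127.0.0.1:8080, and the target URL in the comment is a placeholder.

```python
import urllib.request

# Address taken from the slide; assumes ZAP is already listening there.
ZAP_PROXY = "http://127.0.0.1:8080"


def build_zap_opener(proxy=ZAP_PROXY):
    """Return a urllib opener that sends HTTP and HTTPS traffic via the proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)


opener = build_zap_opener()

# With ZAP running, a request such as this one would pass through the proxy
# (placeholder URL):
#   opener.open("http://example.com/", timeout=10)
```

Intercepting HTTPS this way usually also requires the client to trust the proxy's certificate, which this sketch does not set up.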

- 42. Distributed Performance Testing using JMeter and Docker
  - Instead of running JMeter on VMs, create Docker images
  - Docker also makes it possible to iterate on, correct and improve an existing image
  - Some of the key advantages of using Docker here:
    - Creating and modifying a Docker image is fast and easy
    - Since the JMX and data files are maintained on the host, a change in these files simply means restarting the container
    - Creating an additional JMeter server instance is just a matter of another docker run invocation
    - Finally, startup time will be almost imperceptible

- 43. References
  - Testing made awesome with Docker at PLOS: http://blogs.plos.org/tech/testing-made-awesome-with-docker/
  - Functional Testing with Docker: http://www.rounds.com/blog/functional-testing-docker/
  - Distributed JMeter testing using Docker: http://srivaths.blogspot.in/2014/08/distrubuted-jmeter-testing-using-docker.html
  - Security Testing using Docker: http://www.ojscurity.com/2015/02/docker-zed-attack-proxy-with-tor.html
  - Video on testing using Docker: https://www.youtube.com/watch?v=B-nv8EO1Mwk

Editor's Notes

- #18:
  - Virtual Machines: Each virtualized application includes not only the application, which may be only 10s of MB, and the necessary binaries and libraries, but also an entire guest operating system, which may weigh 10s of GB.
  - Docker: The Docker Engine container comprises just the application and its dependencies. It runs as an isolated process in userspace on the host operating system, sharing the kernel with other containers. Thus, it enjoys the resource isolation and allocation benefits of VMs but is much more portable and efficient.

