Twitter with hadoop for oow


"Analyzing Twitter Data with Hadoop - Live Demo", presented at Oracle OpenWorld 2014. The repository for the slides is at https://github.com/cloudera/cdh-twitter-example


![What is JSON?
{
  "retweeted_status": {
    "contributors": null,
    "text": "#Crowdsourcing – drivers already generate traffic data for your smartphone to suggest alternative routes when a road is clogged. #bigdata",
    "retweeted": false,
    "entities": {
      "hashtags": [
        {
          "text": "Crowdsourcing",
          "indices": [0, 14]
        },
        {
          "text": "bigdata",
          "indices": [129, 137]
        }
      ],
      "user_mentions": []
    }
  }
}
Slide 16. ©2012 Cloudera, Inc.](https://image.slidesharecdn.com/twitterwithhadoopforoow-141005111104-conversion-gate01/85/Twitter-with-hadoop-for-oow-16-320.jpg)
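The nested layout on this slide is easier to follow once it is read programmatically. The sketch below is not from the deck; it is a minimal Python illustration that parses the same tweet with the standard `json` module and pulls out the hashtags. The function name `extract_hashtags` and the fallback to the top-level object when `retweeted_status` is absent are assumptions made for the example.

```python
import json

# The tweet from the slide, with the wrapped "text" value joined into one string.
raw_tweet = """
{
  "retweeted_status": {
    "contributors": null,
    "text": "#Crowdsourcing – drivers already generate traffic data for your smartphone to suggest alternative routes when a road is clogged. #bigdata",
    "retweeted": false,
    "entities": {
      "hashtags": [
        {"text": "Crowdsourcing", "indices": [0, 14]},
        {"text": "bigdata", "indices": [129, 137]}
      ],
      "user_mentions": []
    }
  }
}
"""


def extract_hashtags(tweet_json: str) -> list[str]:
    """Return the lower-cased hashtag texts of a tweet (hypothetical helper)."""
    tweet = json.loads(tweet_json)
    # Assumption: a retweet nests the original tweet under "retweeted_status";
    # otherwise the entities are looked up on the top-level object.
    status = tweet.get("retweeted_status", tweet)
    hashtags = status.get("entities", {}).get("hashtags", [])
    return [tag["text"].lower() for tag in hashtags]


hashtags = extract_hashtags(raw_tweet)
print(hashtags)  # ['crowdsourcing', 'bigdata']

# Each "indices" pair is a [start, end) character range into "text".
status = json.loads(raw_tweet)["retweeted_status"]
spans = [status["text"][s:e] for s, e in
         (tag["indices"] for tag in status["entities"]["hashtags"])]
print(spans)  # ['#Crowdsourcing', '#bigdata']
```

JSON `null` and `false` become Python `None` and `False`, and the arrays become lists, so the hashtag list can be walked like any other nested structure.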



Twitter with hadoop for oow

- 1. Analyzing Twitter Data with Hadoop. Gwen Shapira, Software Engineer, @Gwenshap. ©2012 Cloudera, Inc.

- 2. IOUG SIG Meetings at OpenWorld. All meetings located in Moscone South, Room 208. Monday, September 29: Exadata SIG, 2:00 p.m. - 3:00 p.m.; BIWA SIG, 5:00 p.m. - 6:00 p.m. Tuesday, September 30: Internet of Things SIG, 11:00 a.m. - 12:00 p.m.; Storage SIG, 4:00 p.m. - 5:00 p.m.; SPARC/Solaris SIG, 5:00 p.m. - 6:00 p.m. Wednesday, October 1: Oracle Enterprise Manager SIG, 8:00 a.m. - 9:00 a.m.; Big Data SIG, 10:30 a.m. - 11:30 a.m.; Oracle 12c SIG, 2:00 p.m. - 3:00 p.m.; Oracle Spatial and Graph SIG, 4:00 p.m. (*OTN lounge)

- 3. The IOUG Forum Advantage. COLLABORATE 15, IOUG Forum: April 12-16, 2015, Mandalay Bay Resort and Casino, Las Vegas, NV • Save more than $1,000 on education offerings like pre-conference workshops • Access the brand-new, specialized IOUG Strategic Leadership Program • Priority access to the hands-on labs with Oracle ACE support • Advance access to supplemental session material and presentations • Special IOUG activities with no "ante in" needed: evening networking opportunities and more. www.collaborate.ioug.org. Follow us on Twitter at @IOUG or via the conference hashtag #C15LV!

- 4. I have 15 years of experience in moving data around ©2014 Cloudera, Inc. All rights reserved.

- 5. In my spare time… • Oracle ACE Director • Member of Oak Table • Blogger • Presenter: Hotsos, IOUG, OOW, OSCON • NoCOUG board • Contributor to Apache Oozie, Sqoop, Kafka • Author: Hadoop Application Architectures

- 6. Analyzing Twitter Data with Hadoop: BUILDING A HADOOP APPLICATION

- 7. 7

- 8. High Level Architecture (diagram): Data Source, Flume, HDFS, Hive + Oozie, Impala / Oracle

- 9. Analyzing Twitter Data with Hadoop: AN EXAMPLE USE CASE

- 10. Analyzing Twitter • Social media popular with marketing teams • Twitter is an effective tool for promotion • Which Twitter user gets the most retweets? • Who is influential in our industry? • Which topics are trending? • “You mentioned Oracle, please take this survey”

- 11. Analyzing Twitter Data with Hadoop: HOW DO WE ANSWER THESE QUESTIONS?

- 12. Techniques • Bring Data with Flume • Complex data • Deeply nested • Variable schema • Clean, Standardize, Partition, etc. • SQL • Filtering • Aggregation • Sorting

- 13. Analyzing Twitter Data with Hadoop: FLUME

- 14. Flume Agent design

- 15. In our case… • Twitter source • Pulls JSON format files from Twitter • Memory Channel • HDFS Sink: directory per hour

- 16. What is JSON?

      {
        "retweeted_status": {
          "contributors": null,
          "text": "#Crowdsourcing – drivers already generate traffic data for your smartphone to suggest alternative routes when a road is clogged. #bigdata",
          "retweeted": false,
          "entities": {
            "hashtags": [
              { "text": "Crowdsourcing", "indices": [0, 14] },
              { "text": "bigdata", "indices": [129, 137] }
            ],
            "user_mentions": []
          }
        }
      }
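As a rough illustration of why this nested structure matters for the "Which topics are trending?" question, here is a minimal Python sketch that walks the same path (`retweeted_status` → `entities` → `hashtags`) and counts hashtags. The function names `extract_hashtags` and `count_hashtags` are made up for this example and are not part of the demo repository; the sample record is the tweet from the slide.

```python
import json
from collections import Counter

# The tweet from the slide, as one JSON document.
SAMPLE_TWEET = """
{
  "retweeted_status": {
    "contributors": null,
    "text": "#Crowdsourcing – drivers already generate traffic data for your smartphone to suggest alternative routes when a road is clogged. #bigdata",
    "retweeted": false,
    "entities": {
      "hashtags": [
        {"text": "Crowdsourcing", "indices": [0, 14]},
        {"text": "bigdata", "indices": [129, 137]}
      ],
      "user_mentions": []
    }
  }
}
"""


def extract_hashtags(tweet):
    """Return lower-cased hashtag texts from a parsed tweet (hypothetical helper)."""
    # Any level of the nesting may be absent, so fall back to empty containers.
    status = tweet.get("retweeted_status") or {}
    entities = status.get("entities") or {}
    return [tag["text"].lower() for tag in entities.get("hashtags", [])]


def count_hashtags(json_documents):
    """Count hashtags across an iterable of JSON strings, one tweet each."""
    counts = Counter()
    for document in json_documents:
        counts.update(extract_hashtags(json.loads(document)))
    return counts


if __name__ == "__main__":
    print(count_hashtags([SAMPLE_TWEET]).most_common())
```

The defensive `.get(...) or {}` lookups reflect the "variable schema" point from the Techniques slide: not every tweet carries every nested field.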

- 17. But Wait! There’s More! • Many sources: directory, files, log4j, net, JMS • Interceptors: process data in flight • Selectors: choose which sink • Many channels: Memory, file • Many sinks: HDFS, HBase, Solr

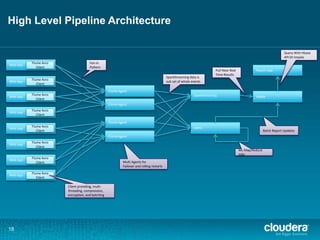

- 18. High Level Pipeline Architecture (diagram). Web Apps, each with a Flume Avro Client (the client provides multi-threading, compression, encryption, and batching), fan in to several Flume Agents (fan-in pattern; multiple agents for failover and rolling restarts). The agents feed Spark Streaming, HBase, and HDFS; the Spark Streaming data is a subset of the whole events. ML and Map/Reduce jobs produce batch report updates. The Report App pulls near-real-time results, querying with the HBase API or Impala.

- 19. Configuration

      TwitterAgent.sources = Twitter
      TwitterAgent.channels = MemChannel
      TwitterAgent.sinks = HDFS
      TwitterAgent.sources.Twitter.type = com.cloudera.flume.source.TwitterSource
      TwitterAgent.sources.Twitter.channels = MemChannel
      TwitterAgent.sources.Twitter.consumerKey =
      TwitterAgent.sources.Twitter.consumerSecret =
      TwitterAgent.sources.Twitter.accessToken =
      TwitterAgent.sources.Twitter.accessTokenSecret =
      TwitterAgent.sources.Twitter.keywords = hadoop, big data, flume, sqoop, oracle, oow
      TwitterAgent.sinks.HDFS.channel = MemChannel
      TwitterAgent.sinks.HDFS.type = hdfs
      TwitterAgent.sinks.HDFS.hdfs.path = hdfs://quickstart:8020/user/flume/tweets/%Y/%m/%d/%H/
      TwitterAgent.sinks.HDFS.serializer = text
      TwitterAgent.channels.MemChannel.type = memory
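The `%Y/%m/%d/%H` escapes in `hdfs.path` are what produce the "directory per hour" layout mentioned earlier. As far as I know, the Flume HDFS sink fills them in from each event's timestamp. The Python sketch below only mimics that substitution with `strftime`, which happens to use the same four escape letters; `bucket_for` and `group_by_bucket` are illustrative names, not Flume APIs.

```python
from collections import defaultdict
from datetime import datetime, timezone

# Path portion of hdfs.path from the configuration slide.
PATH_TEMPLATE = "/user/flume/tweets/%Y/%m/%d/%H/"


def bucket_for(event_time, template=PATH_TEMPLATE):
    """Return the hourly directory an event with this timestamp would land in."""
    return event_time.strftime(template)


def group_by_bucket(event_times):
    """Group event timestamps by their hourly directory (illustration only)."""
    buckets = defaultdict(list)
    for event_time in event_times:
        buckets[bucket_for(event_time)].append(event_time)
    return dict(buckets)


if __name__ == "__main__":
    events = [
        datetime(2014, 9, 29, 14, 5, tzinfo=timezone.utc),
        datetime(2014, 9, 29, 14, 55, tzinfo=timezone.utc),
        datetime(2014, 9, 29, 15, 1, tzinfo=timezone.utc),
    ]
    for path, items in sorted(group_by_bucket(events).items()):
        print(path, len(items))
```

One directory per hour keeps each Hive partition small enough to add incrementally, at the cost of producing many small files, which a later slide returns to.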

- 20. Analyzing Twitter Data with Hadoop: FLUME DEMO

- 21. Analyzing Twitter Data with Hadoop: HIVE

- 22. What is Hive? • Created at Facebook • HiveQL • SQL like interface • Hive interpreter converts HiveQL to MapReduce code • Returns results to the client

- 23. Hive Details • Metastore contains table definitions • Stored in a relational database • Basically a data dictionary • SerDes parse data and convert it to a table/column structure • SerDes exist for: CSV, XML, JSON, Avro, Parquet, ORC files • Or write your own (we created one for CopyBook)
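To make the SerDe idea concrete, the sketch below shows the deserialization half in plain Python: one JSON line goes in, one row of column values comes out, with missing fields becoming `None` (the analogue of SQL `NULL`). This is only an illustration of the concept, not Hive's SerDe interface; `deserialize` and the dotted-path column list are assumptions made for the example.

```python
import json


def deserialize(json_line, column_paths):
    """Turn one JSON record into a row tuple, one value per dotted column path."""
    record = json.loads(json_line)
    row = []
    for path in column_paths:
        value = record
        for key in path.split("."):
            # Stop descending as soon as a level is missing or not an object.
            if isinstance(value, dict) and key in value:
                value = value[key]
            else:
                value = None
                break
        row.append(value)
    return tuple(row)


if __name__ == "__main__":
    columns = ["user.screen_name", "text", "retweeted_status.retweet_count"]
    line = '{"user": {"screen_name": "gwenshap"}, "text": "hello hadoop"}'
    print(deserialize(line, columns))
```

Because the parsing happens at read time, the files on HDFS stay as raw JSON and the table definition can change without rewriting them.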

- 24. Complex Data

      SELECT t.retweet_screen_name,
             sum(retweets) AS total_retweets,
             count(*) AS tweet_count
      FROM (SELECT retweeted_status.user.screen_name AS retweet_screen_name,
                   retweeted_status.text,
                   max(retweeted_status.retweet_count) AS retweets
            FROM tweets
            GROUP BY retweeted_status.user.screen_name,
                     retweeted_status.text) t
      GROUP BY t.retweet_screen_name
      ORDER BY total_retweets DESC
      LIMIT 10;

- 25. Analyzing Twitter Data with Hadoop: HIVE DEMO

- 26. Analyzing Twitter Data with Hadoop: IT’S A TRAP

- 27. Not a Database

    | | RDBMS | Hive | Impala |
    | --- | --- | --- | --- |
    | Language | Generally >= SQL-92 | Subset of SQL-92 plus Hive-specific extensions | Subset of SQL-92 |
    | Update capabilities | INSERT, UPDATE, DELETE | Bulk INSERT, UPDATE, DELETE | Insert, truncate |
    | Transactions | Yes | Yes | No |
    | Latency | Sub-second | Minutes | Sub-second |
    | Indexes | Yes | Yes | No |
    | Data size | Few terabytes | Petabytes | Lots of terabytes |

- 28. Analyzing Twitter Data with Hadoop: DATA FORMATS

- 29. I don’t like our data • Lots of small files • JSON – requires parsing • Can’t compress • Sensitive to changes

- 30. I’d rather use Avro • Few large files containing records • Schema in file • Schema evolution • Can compress • Well supported in Hadoop • Clients in other languages
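
The "schema evolution" bullet can be illustrated with a simplified sketch in plain Python. It does not use the Avro library; it only mimics two of Avro's schema-resolution rules: a field that the reader's schema no longer lists is ignored, and a field that the reader's schema adds is filled from its default. The names `read_with_schema`, `REQUIRED`, and the fields `id`, `text`, `source`, and `lang` are all hypothetical.

```python
REQUIRED = object()  # marker for reader fields that declare no default


def read_with_schema(record: dict, reader_schema: dict) -> dict:
    """Project a record written with an older schema onto the reader's schema.

    reader_schema maps field name -> default value, or REQUIRED if none.
    """
    resolved = {}
    for field, default in reader_schema.items():
        if field in record:
            resolved[field] = record[field]   # field present in the written data
        elif default is not REQUIRED:
            resolved[field] = default         # new field: fall back to its default
        else:
            raise ValueError(f"missing field with no default: {field}")
    # Fields in the record that the reader schema does not list are dropped.
    return resolved


if __name__ == "__main__":
    old_record = {"id": 1, "text": "hi", "source": "web"}      # written with v1
    schema_v2 = {"id": REQUIRED, "text": REQUIRED, "lang": "und"}  # reader's v2
    print(read_with_schema(old_record, schema_v2))
```

This is what makes a format with an embedded schema less "sensitive to changes" than raw JSON: old files stay readable after the table definition gains or loses a column.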

- 31. Let’s convert • Create table AVRO_TWEETS • INSERT INTO avro_tweets SELECT …. FROM tweets

- 32. Analyzing Twitter Data with Hadoop: IMPALA ASIDE

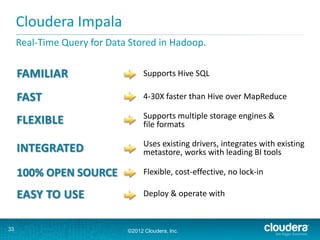

- 33. Cloudera Impala: Real-Time Query for Data Stored in Hadoop • Supports Hive SQL • 4-30X faster than Hive over MapReduce • Supports multiple storage engines & file formats • Uses existing drivers, integrates with existing metastore, works with leading BI tools • Flexible, cost-effective, no lock-in • Deploy & operate with Cloudera Enterprise RTQ

- 34. Benefits of Cloudera Impala: Real-Time Query for Data Stored in Hadoop • Real-time queries run directly on source data • No ETL delays • No jumping between data silos • No double storage with EDW/RDBMS • Unlock analysis on more data • No need to create and maintain complex ETL between systems • No need to preplan schemas • All data available for interactive queries • No loss of fidelity from fixed data schemas • Single metadata store from origination through analysis • No need to hunt through multiple data silos

- 35. Cloudera Impala Details (architecture diagram). Components: SQL App, ODBC, Hive Metastore, YARN, HDFS NN, State Store; Query Planner, Query Coordinator, Query Exec Engine, HDFS DN, and HBase (×3 each). Annotations: fully MPP distributed; local direct reads; common Hive SQL and interface; unified metadata and scheduler; low-latency scheduler and cache (low-impact failures).

- 36. LOAD DATA TO ORACLE

- 37. Oracle Connectors for Hadoop • Oracle Loader for Hadoop • Oracle SQL Connector for Hadoop • Big Data SQL

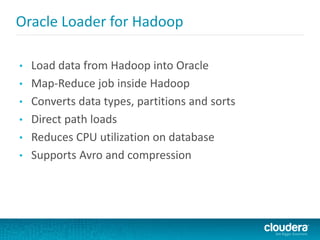

- 38. Oracle Loader for Hadoop • Load data from Hadoop into Oracle • Map-Reduce job inside Hadoop • Converts data types, partitions and sorts • Direct path loads • Reduces CPU utilization on database • Supports Avro and compression

- 39. Oracle SQL Connector for Hadoop • Run a Java app • Creates an external table • Runs MapReduce when the external table is queried • Can use Hive Metastore for schema • Optimized for parallel queries • Supports Avro and compression

- 40. Big Data SQL • Also uses an external table • Can also use Hive metastore for schema • But … NO MapReduce • Instead, an agent performs smart scans • Bloom filters • Storage indexes • Filters • Supports any Hadoop data format
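
Bloom filters appear in the list above as one of the smart-scan techniques. For readers unfamiliar with them, the following is a generic, minimal Bloom filter in Python. It is a textbook sketch, not Oracle's implementation; the class name, the bit-array size, and the use of SHA-256 as the hash are all assumptions made for illustration.

```python
import hashlib


class BloomFilter:
    """Probabilistic set: no false negatives, occasional false positives."""

    def __init__(self, num_bits: int = 1024, num_hashes: int = 3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits)  # one byte per bit, for readability

    def _positions(self, item: str):
        # Derive num_hashes bit positions by salting the item with a seed.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos] = 1

    def might_contain(self, item: str) -> bool:
        # False means "definitely absent"; True means "possibly present".
        return all(self.bits[pos] for pos in self._positions(item))


if __name__ == "__main__":
    join_keys = BloomFilter()
    for key in ("hadoop", "flume"):
        join_keys.add(key)
    print(join_keys.might_contain("hadoop"))
```

The appeal for a scan agent is that a compact filter built from one side of a join can be checked against each row near the data, so rows that definitely cannot match are discarded before they are shipped to the database.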

- 41. Analyzing Twitter Data with Hadoop: PUTTING IT ALL TOGETHER

- 42. Hive Level Architecture (diagram). Components: Data Source, Flume, HDFS, Hive + Oozie, Impala / Oracle.

- 43. What next? • Download Hadoop! • CDH available at www.cloudera.com • Cloudera provides pre-loaded VMs • https://ccp.cloudera.com/display/SUPPORT/Cloudera+Manager+Free+Edition+Demo+VM • Clone the source repo • https://github.com/cloudera/cdh-twitter-example
