Streaming Big Data with Spark, Kafka, Cassandra, Akka & Scala (from webinar)

- 1. Streaming Big Data with Spark, Kafka, Cassandra, Akka & Scala. Helena Edelson (@helenaedelson). ©2014 DataStax Confidential. Do not distribute without consent.

- 2. © 2014 DataStax, All Rights Reserved. (1) Delivering Meaning In Near-Real Time At High Velocity (2) Overview Of Spark Streaming, Kafka and Akka (3) Cassandra and the Spark Cassandra Connector (4) Integration In Big Data Applications

- 3. Who Is This Person? • Spark Cassandra Connector Committer • Akka Contributor (2 features in Cluster) • Scala & Big Data Conference Speaker • @helenaedelson • https://github.com/helena • Senior Software Engineer, Analytics @ DataStax • Previously Senior Cloud Engineer at VMware & others

- 4. Use Case: Hadoop + Scalding

      /** Reads SequenceFile data from S3 buckets, computes then persists to Cassandra. */
      class TopSearches(args: Args) extends TopKDailyJob[MyDataType](args) with Cassandra {

        PailSource.source[Search](rootpath, structure, directories).read
          .mapTo('pailItem -> 'engines) { e: Search ⇒ results(e) }
          .filter('engines) { e: String ⇒ e.nonEmpty }
          .groupBy('engines) { _.size('count).sortBy('engines) }
          .groupBy('engines) { _.sortedReverseTake[(String, Long)](('engines, 'count) -> 'tcount, k) }
          .flatMapTo('tcount -> ('key, 'engine, 'topCount)) { t: List[(String, Long)] ⇒
            t map { case (k, v) ⇒ (jobKey, k, v) }}
          .write(CassandraSource(connection, "top_searches", Scheme('key, ('engine, 'topCount))))
      }

- 6. Delivering Meaning In Near-Real Time At High Velocity At Massive Scale

- 8. Strategies • Partition For Scale • Replicate For Resiliency • Share Nothing • Asynchronous Message Passing • Parallelism • Isolation • Location Transparency

- 9. What We Need • Fault Tolerant • Failure Detection • Fast - low latency, distributed, data locality • Masterless, Decentralized Cluster Membership • Span Racks and DataCenters • Hashes The Node Ring • Partition-Aware • Elasticity • Asynchronous - message-passing system • Parallelism • Network Topology Aware

- 10. Lambda Architecture with Spark, Kafka, Cassandra and Akka (Scala!) • Lambda Architecture is a data-processing architecture designed to handle massive quantities of data by taking advantage of both batch and stream processing methods. • Spark - one of the few, if not the only, data processing frameworks that allow you to have both batch and stream processing of terabytes of data in the same application. • Storm does not.

- 11. KillrWeather Architecture

- 12. Why Akka Rocks • location transparency • fault tolerant • async message passing • non-deterministic • share nothing • actor atomicity (w/in actor)

- 13. Apache Kafka (from Joe Stein)

- 14. Apache Spark and Spark Streaming: The Drive-By Version

- 15. What Is Apache Spark • Fast, distributed, scalable and fault tolerant cluster compute system • Enables low latency with complex analytics • Developed in 2009 at UC Berkeley AMPLab, open sourced in 2010, and became a top-level Apache project in February 2014

- 16. Most Active OSS In Big Data

- 17. Apache Spark - Easy to Use & Fast • 10x faster on disk, 100x faster in memory than Hadoop MR • Write, test and maintain 2 - 5x less code • Fault Tolerant Distributed Datasets • Batch, iterative and streaming analysis • In Memory Storage and Disk • Integrates with Most File and Storage Options

- 18. Easy API • Abstracts complex algorithms to high level functions • Collections API over large datasets • Uses a functional programming model - clean • Scala, Java and Python APIs • Use from a REPL • Easy integration with SQL, streaming, ML, Graphing, R…

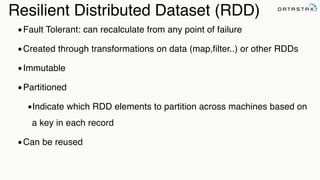

- 19. Resilient Distributed Dataset (RDD) • Fault Tolerant: can recalculate from any point of failure • Created through transformations on data (map, filter..) or other RDDs • Immutable • Partitioned • Indicate which RDD elements to partition across machines based on a key in each record • Can be reused

- 20. Spark Data Model (diagram: A1 to A8 → map → B1 to B8 → filter → B1, B2, B5, B7, B8 → reduce → C) • Resilient Distributed Dataset, a collection that is: immutable, iterable, serializable, distributed, parallel, lazy
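The map → filter → reduce flow on this slide can be tried without a cluster. The following is a minimal sketch using a plain Scala collection view as a local stand-in for an RDD; the object name `RddModelSketch` and the particular functions passed to `map` and `filter` are illustrative assumptions, not taken from the talk.

```scala
object RddModelSketch {
  /** Applies map, filter, and a reduce step lazily over a local collection. */
  def pipeline(input: Seq[Int]): Int =
    input.view            // lazy: nothing is computed yet
      .map(_ * 2)         // transformation (A -> B)
      .filter(_ % 3 == 0) // transformation (keeps a subset of B)
      .foldLeft(0)(_ + _) // forces evaluation and yields one value (C)

  def main(args: Array[String]): Unit =
    println(pipeline(1 to 8)) // eight input elements, like A1..A8
}
```

As with RDD transformations, the `map` and `filter` steps only describe work; evaluation happens when the final fold asks for a result.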

- 22. Collection To RDD

      scala> val data = Array(1, 2, 3, 4, 5)
      data: Array[Int] = Array(1, 2, 3, 4, 5)

      scala> val distData = sc.parallelize(data)
      distData: spark.RDD[Int] = spark.ParallelCollection@10d13e3e

- 23. Spark Basic Word Count

      import org.apache.spark.{SparkConf, SparkContext}

      val conf = new SparkConf()
        .setMaster("local[*]")
        .setAppName("Simple Word Count")
      val sc = new SparkContext(conf)

      // `words` is the input path, defined elsewhere
      sc.textFile(words)
        .flatMap(_.split("\\s+"))            // split each line on whitespace
        .map(word => (word.toLowerCase, 1))
        .reduceByKey(_ + _)
        .collect foreach println
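For readers without a Spark installation, the same word-count shape can be sketched on an ordinary Scala collection. This is only a local analogue: `groupBy` stands in for the shuffle that `reduceByKey` performs, and the object and method names are hypothetical.

```scala
object LocalWordCount {
  /** Counts lower-cased words, mirroring flatMap -> map -> reduceByKey. */
  def wordCount(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split("\\s+"))            // split each line on whitespace
      .filter(_.nonEmpty)                  // drop empty tokens caused by leading spaces
      .map(word => (word.toLowerCase, 1))  // pair each word with a count of 1
      .groupBy(_._1)                       // local stand-in for the shuffle by key
      .map { case (word, pairs) => word -> pairs.map(_._2).sum }

  def main(args: Array[String]): Unit =
    wordCount(Seq("Spark and Kafka", "spark and Cassandra")).foreach(println)
}
```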

- 24. Apache Spark - Easy to Use API

      import scala.concurrent.Future
      import scala.concurrent.ExecutionContext.Implicits.global

      val k = 20

      /** Returns the top (k) highest temps for any location in the `year`. */
      def topK(aggregate: Seq[Double]): Seq[Double] =
        sc.parallelize(aggregate).top(k).toSeq

      /** Returns the top (k) highest temps … in a Future.
        * top(k) is a blocking action that returns a local Array, so the call runs inside a Future. */
      def topKAsync(aggregate: Seq[Double]): Future[Seq[Double]] =
        Future(sc.parallelize(aggregate).top(k).toSeq)

- 25. Not Just MapReduce

- 26. Spark Components

- 27. Why Scala? • Functional • On the JVM • Capture functions and ship them across the network • Static typing - easier to control performance • Leverage REPL (Spark REPL) • Source: http://apache-spark-user-list.1001560.n3.nabble.com/Why-Scala-tp6536p6538.html

- 28. When Batch Is Not Enough • Scheduled batch only gets you so far • I want results continuously in the event stream • I want to run computations in my event-driven asynchronous apps

- 29. Spark Streaming: zillions of bytes, gigabytes per second

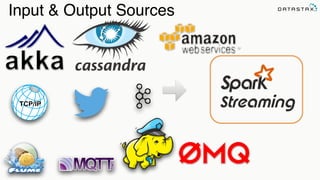

- 30. Input & Output Sources

- 32. Common Use Cases • applications • sensors • web • mobile phones • intrusion detection • malfunction detection • site analytics • network metrics analysis • fraud detection • dynamic process optimisation • recommendations • location based ads • log processing • supply chain planning • sentiment analysis • …

- 33. DStream (Discretized Stream) • Continuous stream of micro batches, one RDD per interval: RDD (time 0 to time 1), RDD (time 1 to time 2), RDD (time 2 to time 3) • A transformation on a DStream = transformations on its RDDs • Complex processing models with minimal effort • Streaming computations on small time intervals
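The micro-batch idea can be illustrated with plain collections. The sketch below is not Spark code: `Event`, `discretize` and `mapStream` are hypothetical names, timestamps are assumed to be non-negative milliseconds, and each batch is just a `Seq` standing in for the RDD of one interval.

```scala
object MicroBatchSketch {
  final case class Event(timestampMs: Long, value: String)

  /** Groups events into consecutive batches of `batchMs` milliseconds, ordered by time. */
  def discretize(events: Seq[Event], batchMs: Long): Seq[(Long, Seq[String])] =
    events
      .groupBy(e => e.timestampMs / batchMs) // batch index = interval the event falls in
      .toSeq
      .sortBy(_._1)
      .map { case (batch, es) => (batch, es.sortBy(_.timestampMs).map(_.value)) }

  /** A transformation on the stream is the same transformation applied to every batch. */
  def mapStream(batches: Seq[(Long, Seq[String])])(f: String => String): Seq[(Long, Seq[String])] =
    batches.map { case (batch, values) => (batch, values.map(f)) }
}
```

Calling `mapStream(discretize(events, 500))(_.toUpperCase)` applies one function to every batch, which is the sense in which a DStream transformation is a transformation on its RDDs.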

- 34. InputDStreams and Receivers • DStreams - the stream of raw data received from streaming sources: Basic Source - in the StreamingContext API; Advanced Source - in external modules and separate Spark artifacts • Receivers: Reliable Receivers - for data sources supporting acks (like Kafka); Unreliable Receivers - for data sources not supporting acks

- 35. Spark Streaming Modules

  | GroupId | ArtifactId | Latest Version |
  | --- | --- | --- |
  | org.apache.spark | spark-streaming-kinesis-asl_2.10 | 1.1.0 |
  | org.apache.spark | spark-streaming-mqtt_2.10 | 1.1.0 |
  | org.apache.spark | spark-streaming-zeromq_2.10 | 1.1.0 |
  | org.apache.spark | spark-streaming-flume_2.10 | 1.1.0 |
  | org.apache.spark | spark-streaming-flume-sink_2.10 | 1.1.0 |
  | org.apache.spark | spark-streaming-kafka_2.10 | 1.1.0 |
  | org.apache.spark | spark-streaming-twitter_2.10 | 1.1.0 |

- 36. Spark Streaming Setup

      import org.apache.spark.SparkConf
      import org.apache.spark.streaming.{Milliseconds, StreamingContext}

      // SparkMaster, AppName, SparkCleanerTtl and checkpointDir come from the application's settings.
      val conf = new SparkConf().setMaster(SparkMaster).setAppName(AppName)
        .set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
        .set("spark.kryo.registrator", "com.datastax.killrweather.KillrKryoRegistrator")
        .set("spark.cleaner.ttl", SparkCleanerTtl)

      val ssc = new StreamingContext(conf, Milliseconds(500))

      // Do work in the stream

      ssc.checkpoint(checkpointDir)

      ssc.start()
      ssc.awaitTermination()

- 37. Checkpointing • Saves enough information to fault-tolerant storage to allow the RDDs to be recovered: Metadata - the information defining the streaming computation; Data (RDDs) • Usage: with updateStateByKey, reduceByKeyAndWindow - stateful transformations; to recover from failures in Spark Streaming apps • Can affect performance, depending on: the data and/or batch sizes; the speed of the file system that is being used for checkpointing
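Why stateful transformations depend on checkpoints can be shown with a small conceptual sketch. It does not use Spark's checkpoint mechanism; `StatefulSketch`, `updateState` and `recover` are hypothetical names, and the "checkpoint" here is simply a saved copy of the running per-key totals.

```scala
object StatefulSketch {
  type State = Map[String, Int]

  /** Folds one batch of (key, value) pairs into the running per-key totals. */
  def updateState(state: State, batch: Seq[(String, Int)]): State =
    batch.foldLeft(state) { case (acc, (k, v)) => acc.updated(k, acc.getOrElse(k, 0) + v) }

  /** Replays the batches received after a checkpoint on top of the checkpointed state. */
  def recover(checkpoint: State, batchesSinceCheckpoint: Seq[Seq[(String, Int)]]): State =
    batchesSinceCheckpoint.foldLeft(checkpoint)(updateState)
}
```

Without a saved state, recovery would have to replay every batch since the start of the stream; with one, only the batches after the checkpoint are needed, which is the trade-off against the storage cost listed on the slide.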

- 38. Basic Streaming: FileInputDStream

      // Creates new DStreams
      ssc.textFileStream("s3n://raw_data_bucket/")
        .flatMap(_.split("\\s+"))   // split each line on whitespace
        .map(_.toLowerCase)
        .countByValue()             // yields (word, count) pairs per batch
        .saveAsObjectFiles("s3n://analytics_bucket/")

- 39. ReceiverInputDStreams

      val stream = KafkaUtils.createStream[String, String, StringDecoder, StringDecoder](
          ssc, kafkaParams, Map(KafkaTopicRaw -> 10), StorageLevel.DISK_ONLY_2)
        .map { case (_, line) => line.split(",") }
        .map(RawWeatherData(_))

      /** Saves the raw data to Cassandra - raw table. */
      stream.saveToCassandra(keyspace, rawDataTable)

      stream.map { data =>
        (data.wsid, data.year, data.month, data.day, data.oneHourPrecip)
      }.saveToCassandra(keyspace, dailyPrecipitationTable)

      ssc.start()
      ssc.awaitTermination()

- 40. Streaming Window Operations

      // where pairs are (word, count)
      pairsStream
        .map { case (k, v) => (k, v.value) }   // map, not flatMap: one pair in, one pair out
        .reduceByKeyAndWindow((a: Int, b: Int) => a + b, Seconds(30), Seconds(10))
        .saveToCassandra(keyspace, table)

- 41. Streaming Window Operations • window(Duration, Duration) • countByWindow(Duration, Duration) • reduceByWindow(Duration, Duration) • countByValueAndWindow(Duration, Duration) • groupByKeyAndWindow(Duration, Duration) • reduceByKeyAndWindow((V, V) => V, Duration, Duration)
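What a windowed reduce computes can be sketched over a sequence of per-batch count maps. This is a local illustration rather than the Spark API: window length and slide are expressed in numbers of batches instead of `Duration`s, the names are hypothetical, and `sliding` may emit a shorter trailing window, which Spark's scheduling does not mirror exactly.

```scala
object WindowSketch {
  type Counts = Map[String, Int]

  /** Merges two per-batch count maps by adding the counts for each key. */
  def merge(a: Counts, b: Counts): Counts =
    b.foldLeft(a) { case (acc, (k, v)) => acc.updated(k, acc.getOrElse(k, 0) + v) }

  /** Reduces by key over every window of `windowLen` batches, advancing `slide` batches at a time. */
  def reduceByKeyAndWindow(batches: Seq[Counts], windowLen: Int, slide: Int): Seq[Counts] =
    batches
      .sliding(windowLen, slide)
      .map(_.foldLeft(Map.empty[String, Int])(merge))
      .toList
}
```

With a 10-second batch interval, `Seconds(30), Seconds(10)` corresponds to `windowLen = 3, slide = 1` in this sketch: each result covers the last three batches and a new result is produced for every batch.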

- 42. Ten Things About Cassandra: You always wanted to know but were afraid to ask

- 43. Apache Cassandra • Elasticity - scale to as many nodes as you need, when you need • Always On - No single point of failure, Continuous availability! • Masterless peer to peer architecture • Designed for Replication • Flexible Data Storage • Read and write to any node syncs across the cluster • Operational simplicity - with all nodes in a cluster being the same, there is no complex configuration to manage

- 44. Easy to use • CQL - familiar syntax • Friendly to programmers • Paxos for locking

      CREATE TABLE users (
        username varchar,
        firstname varchar,
        lastname varchar,
        email list<varchar>,
        password varchar,
        created_date timestamp,
        PRIMARY KEY (username)
      );

      INSERT INTO users (username, firstname, lastname, email, password, created_date)
      VALUES ('hedelson', 'Helena', 'Edelson',
        ['helena.edelson@datastax.com'], 'ba27e03fd95e507daf2937c937d499ab', '2014-11-15 13:50:00');

      INSERT INTO users (username, firstname, lastname, email, password, created_date)
      VALUES ('pmcfadin', 'Patrick', 'McFadin',
        ['patrick@datastax.com'],
        'ba27e03fd95e507daf2937c937d499ab',
        '2011-06-20 13:50:00')
      IF NOT EXISTS;

- 45. Spark Cassandra Integration

- 46. Deployment Architecture (diagram: Node 1 to Node 4 each run Cassandra alongside a Spark Worker (JVM) with two Executors; also shown are the Spark Master (JVM) and the App Driver)

- 47. Spark Cassandra Connector (diagram labels: User Application, Spark-Cassandra Connector, C* Java Driver, Spark Executor, Cassandra (C*) nodes)

- 48. Spark Cassandra Connector: https://github.com/datastax/spark-cassandra-connector • Loads data from Cassandra to Spark • Writes data from Spark to Cassandra • Handles type conversions • Offers an object mapper • Implemented in Scala • Scala and Java APIs • Open Source

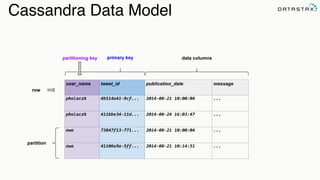

- 49. Cassandra Data Model

  | user_name | tweet_id | publication_date | message |
  | --- | --- | --- | --- |
  | pkolaczk | 4b514a41-9cf... | 2014-08-21 10:00:04 | ... |
  | pkolaczk | 411bbe34-11d... | 2014-08-24 16:03:47 | ... |
  | ewa | 73847f13-771... | 2014-08-21 10:00:04 | ... |
  | ewa | 41106e9a-5ff... | 2014-08-21 10:14:51 | ... |

  Diagram labels: row, partitioning key, partition, primary key, data columns.

- 50. Use Cases • Store raw data per weather station • Store time series in order: most recent to oldest • Compute and store aggregate data in the stream • Set TTLs on historic data • Get data by weather station • Get data for a single date and time • Get data for a range of dates and times • Compute, store and quickly retrieve daily, monthly and annual aggregations of data • Design the data model to support queries (speed, scale, distribution) vs contort queries to fit the data model

- 51. Data Locality • Spark asks an RDD for a list of its partitions (splits) • Each split consists of one or more token-ranges • For every partition: Spark asks the RDD for a list of preferred nodes to process on, then creates a task and sends it to one of the nodes for execution • Every Spark task uses a CQL-like query to fetch data for the given token range:

      SELECT "key", "value" FROM "test"."kv"
      WHERE token("key") > 595597420921139321
        AND token("key") <= 595597431194200132
      ALLOW FILTERING
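To make the token-range idea concrete, here is a small sketch that cuts a token ring into contiguous ranges and renders a query of the shape shown on the slide for each one. It is not the connector's splitting logic: it assumes tokens span the signed 64-bit range (as with a Murmur3-style partitioner), splits the ring evenly rather than by node ownership, and all names are hypothetical.

```scala
object TokenRangeSketch {
  // Assumption: tokens cover the full signed 64-bit range.
  private val MinToken = BigInt(Long.MinValue)
  private val MaxToken = BigInt(Long.MaxValue)

  /** Splits the token ring into `splits` contiguous (start, end] ranges. */
  def tokenRanges(splits: Int): Seq[(BigInt, BigInt)] = {
    require(splits > 0, "splits must be positive")
    val width = (MaxToken - MinToken) / splits
    (0 until splits).map { i =>
      val start = MinToken + width * i
      val end   = if (i == splits - 1) MaxToken else start + width // last range absorbs the remainder
      (start, end)
    }
  }

  /** Builds the per-range query in the shape shown on the slide. */
  def rangeQuery(keyspace: String, table: String, range: (BigInt, BigInt)): String =
    s"""SELECT "key", "value" FROM "$keyspace"."$table" """ +
      s"""WHERE token("key") > ${range._1} AND token("key") <= ${range._2} ALLOW FILTERING"""
}
```

Each generated query touches only one slice of the ring, so a task that runs on a node owning that slice can read its data locally.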

- 52. Connector Code and Docs: https://github.com/datastax/spark-cassandra-connector • Add It To Your Project:

      "com.datastax.spark" %% "spark-cassandra-connector" % "1.1.0"

- 53. Using Spark With Cassandra

- 54. Reading Data: From C* To Spark

      val table = sc
        .cassandraTable[CassandraRow]("db", "tweets")  // row representation, keyspace, table
        .select("user_name", "message")                // server side column selection
        .where("user_name = ?", "ewa")                 // server side row selection

- 55. Spark Streaming, Kafka & Cassandra

      // Initialization
      val conf = new SparkConf(true)
        .setMaster("local[*]")
        .setAppName(getClass.getSimpleName)
        .set("spark.executor.memory", "1g")
        .set("spark.cores.max", "1")
        .set("spark.cassandra.connection.host", "127.0.0.1")
      val ssc = new StreamingContext(conf, Seconds(30))

      // Transformations and Action
      KafkaUtils.createStream[String, String, StringDecoder, StringDecoder](
          ssc, kafka.kafkaParams, Map(topic -> 1), StorageLevel.MEMORY_ONLY)
        .map(_._2)
        .countByValue()
        .saveToCassandra("mykeyspace", "wordcount")

      ssc.start()
      ssc.awaitTermination()

- 56. Spark Streaming, Twitter & Cassandra

      val stream = TwitterUtils.createStream(
        ssc, auth, filters, StorageLevel.MEMORY_ONLY_SER_2)
      // Unfiltered alternative:
      // val stream = TwitterUtils.createStream(ssc, auth, Nil, StorageLevel.MEMORY_ONLY_SER_2)

      /** Note that Cassandra is doing the sorting for you here. */
      stream.flatMap(_.getText.toLowerCase.split("""\s+"""))
        .filter(topics.contains(_))
        .countByValueAndWindow(Seconds(5), Seconds(5))
        .transform((rdd, time) => rdd.map { case (term, count) => (term, count, now(time)) })
        .saveToCassandra(keyspace, table)

- 57. Paging Reads with .cassandraTable • Configurable Page Size • Controls how many CQL rows to fetch at a time, when fetching a single partition • Connector returns an Iterator for rows to Spark • Spark iterates over this, lazily

- 58. ResultSet Paging and Pre-Fetching (diagram: on Node 1 and on Node 2, the client and the local Cassandra node exchange: request a page → data → process data → request a page → data → request a page)
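The lazy, page-at-a-time iteration described on the two paging slides can be sketched with a plain Scala `Iterator`. This is an illustration of the pattern only, not the connector's or the Java driver's implementation: `pagedIterator` and its `fetchPage(offset, pageSize)` callback are hypothetical, offset-based paging is a simplification, and pre-fetching is not modelled.

```scala
object PagingSketch {
  /**
   * Returns a lazy iterator over all rows. `fetchPage(offset, pageSize)` is only
   * called when the rows of the previous page have been consumed.
   */
  def pagedIterator[A](pageSize: Int)(fetchPage: (Int, Int) => Seq[A]): Iterator[A] =
    Iterator
      .from(0, pageSize)                           // offsets 0, pageSize, 2 * pageSize, ...
      .map(offset => fetchPage(offset, pageSize))  // one request per page, made on demand
      .takeWhile(_.nonEmpty)                       // stop at the first empty page
      .flatMap(_.iterator)
}
```

Because every stage is an `Iterator`, building the pipeline issues no requests at all; a consumer that stops early never pays for the pages it did not read.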

- 59. Converting Columns to a Type

      val table = sc.cassandraTable[CassandraRow]("db", "tweets")
      val row: CassandraRow = table.first
      val userName = row.getString("user_name")
      val tweetId = row.getUUID("row_id")

- 60. Converting Columns To a Type

      val row: CassandraRow = table.first
      val date1 = row.get[java.util.Date]("publication_date")
      val date2: org.joda.time.DateTime = row.get[org.joda.time.DateTime]("publication_date")
      val date3: org.joda.time.DateTime = row.getDateTime("publication_date")
      val date5: Option[DateTime] = row.getDateTimeOption("publication_date")

      val list1 = row.get[Set[UUID]]("collection")
      val list2 = row.get[List[UUID]]("collection")
      val list3 = row.get[Vector[UUID]]("collection")
      val list4 = row.get[Vector[String]]("collection")

      val nullable = row.get[Option[String]]("nullable_column")

- 61. Converting Rows To Objects

      case class Tweet(
        userName: String,
        tweetId: UUID,
        publicationDate: Date,
        message: String)

      val tweets = sc.cassandraTable[Tweet]("db", "tweets")

  | Scala | Cassandra |
  | --- | --- |
  | message | message |
  | column1 | column_1 |
  | userName | user_name |

  | Scala | Cassandra |
  | --- | --- |
  | Message | Message |
  | column1 | column1 |
  | userName | userName |
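The two naming tables on this slide can be reproduced with a short sketch. It is a guess at the rule the tables imply, not the connector's actual mapper: `toColumnName` and `resolve` are hypothetical helpers, and the regular expression is chosen only so that the three example rows come out as shown.

```scala
object ColumnNameSketch {
  /** Converts a camelCase field name to an underscore-separated column name. */
  def toColumnName(field: String): String =
    field.replaceAll("([a-z])([A-Z0-9])", "$1_$2").toLowerCase

  /** Picks the column for a field: the identical name if it exists, else the underscore form. */
  def resolve(field: String, columns: Set[String]): Option[String] =
    Seq(field, toColumnName(field)).find(columns.contains)
}
```

For example, `resolve("userName", Set("user_name", "message"))` selects `user_name`, while a table whose column is literally named `userName` is matched by the identical name first.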

- 62. Converting Cassandra Rows To Tuples

      val tweets = sc
        .cassandraTable[(Int, Date, String)]("mykeyspace", "clustering")
        .select("cluster_id", "time", "cluster_name")
        .where("time > ? and time < ?", "2014-07-12 20:00:01", "2014-07-12 20:00:03")

  When returning tuples, always use select to specify the column order.

- 63. Converting Rows to Key-Value Pairs

      case class Key(...)
      case class Value(...)

      val rdd = sc.cassandraTable[(Key, Value)]("keyspace", "table")

  This is useful when joining tables!

- 64. Writing Data

      cqlsh> CREATE TABLE test.words(word TEXT PRIMARY KEY, count INT);

  Scala:

      sc.parallelize(Seq(("foo", 2), ("bar", 5)))
        .saveToCassandra("test", "words", SomeColumns("word", "count"))

      cqlsh:test> select * from words;

       word | count
      ------+-------
        bar |     5
        foo |     2

- 65. How Writes Are Executed (diagram labels: Client, Node 1, Cassandra Node 1, Other C* Nodes, Network, batch of rows, ack, data)

- 66. Other Sweet Features

- 67. Spark SQL with Cassandra

      val sparkContext = new SparkContext(sparkConf)
      val cc = new CassandraSQLContext(sparkContext)
      cc.setKeyspace("nosql_joins")
      cc.sql("""
        SELECT test1.a, test1.b, test1.c, test2.a
        FROM test1 AS test1
        JOIN test2 AS test2 ON test1.a = test2.a
          AND test1.b = test2.b
          AND test1.c = test2.c
        """).map(Data(_))
        .saveToCassandra("nosql_joins", "table3")

  NOSQL Joins - Across the Spark cluster, to Cassandra (multi-datacenter)

- 68. Spark SQL: Txt, Parquet, JSON Support

      import com.datastax.spark.connector._
      import org.apache.spark.sql.{Row, SQLContext}

      val sql = new SQLContext(sc)
      val json = sc.parallelize(Seq(
        """{"user":"helena","commits":98, "month":12, "year":2014}""",
        """{"user":"pkolaczk", "commits":42, "month":12, "year":2014}"""))

      sql.jsonRDD(json).map(MonthlyCommits(_)).flatMap(doSparkWork())
        .saveToCassandra("githubstats", "monthly_commits")

      sc.cassandraTable[MonthlyCommits]("githubstats", "monthly_commits")
        .collect foreach println

      sc.stop()

- 69. What’s New In Spark? • Petabyte sort record • Myth busting: "Spark is in-memory. It doesn’t work with big data"; "It’s too expensive to buy a cluster with enough memory to fit our data" • Application Integration: Tableau, Trifacta, Talend, ElasticSearch, Cassandra • Ongoing development for Spark 1.2: Python streaming, new MLlib API, YARN scaling…

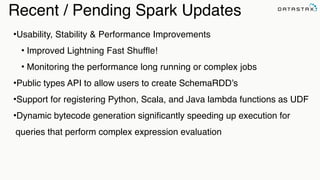

- 70. Recent / Pending Spark Updates • Usability, Stability & Performance Improvements: Improved Lightning Fast Shuffle!; monitoring the performance of long running or complex jobs • Public types API to allow users to create SchemaRDDs • Support for registering Python, Scala, and Java lambda functions as UDFs • Dynamic bytecode generation significantly speeding up execution for queries that perform complex expression evaluation

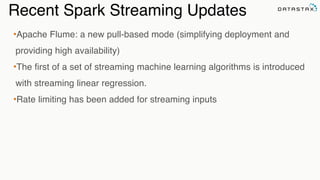

- 71. Recent Spark Streaming Updates • Apache Flume: a new pull-based mode (simplifying deployment and providing high availability) • The first of a set of streaming machine learning algorithms is introduced with streaming linear regression • Rate limiting has been added for streaming inputs

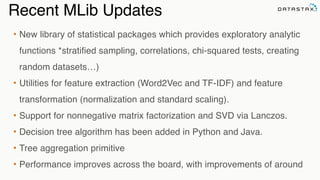

- 72. Recent MLlib Updates • New library of statistical packages which provides exploratory analytic functions (stratified sampling, correlations, chi-squared tests, creating random datasets…) • Utilities for feature extraction (Word2Vec and TF-IDF) and feature transformation (normalization and standard scaling) • Support for nonnegative matrix factorization and SVD via Lanczos • Decision tree algorithm has been added in Python and Java • Tree aggregation primitive • Performance improves across the board, with improvements of around

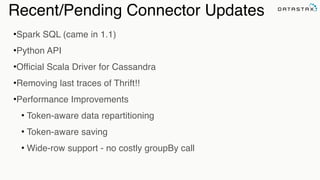

- 73. Recent/Pending Connector Updates • Spark SQL (came in 1.1) • Python API • Official Scala Driver for Cassandra • Removing last traces of Thrift!! • Performance Improvements: Token-aware data repartitioning; Token-aware saving; Wide-row support - no costly groupBy call

- 74. Resources • http://spark.apache.org • https://github.com/datastax/spark-cassandra-connector • http://cassandra.apache.org • http://kafka.apache.org • http://akka.io • https://groups.google.com/a/lists.datastax.com/forum/#!forum/spark-connector-user

- 76. Thanks for listening! Cassandra Summit SEPTEMBER 10 - 11, 2014 | SAN FRANCISCO, CALIF. | THE WESTIN ST. FRANCIS HOTEL

- 77. @helenaedelson • Follow-Up Blog Post • slideshare.net/helenaedelson
