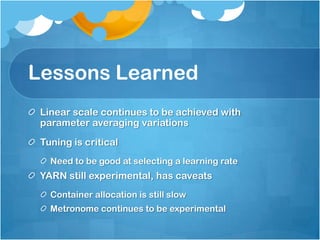

Hadoop Summit EU 2013: Parallel Linear Regression, IterativeReduce, and YARN


Josh Patterson's Hadoop Summit EU 2013 talk on parallel linear regression with IterativeReduce and YARN.


![Hadoop as The Linux of Data

Hadoop has won the Cycle

Gartner: Hadoop will be in 2/3s of advanced analytics products by 2015 [1]

“Hadoop is the kernel of a distributed operating system, and all the other components around the kernel are now arriving on this stage”

---Doug Cutting](https://image.slidesharecdn.com/hadoopsummiteu201327022013final-130324085852-phpapp01/85/Hadoop-Summit-EU-2013-Parallel-Linear-Regression-IterativeReduce-and-YARN-7-320.jpg)

![Distributed Systems Are Hard

Lots of moving parts

Especially as these applications become more complicated

Machine learning can be a non-trivial operation

We need great building blocks that work well together

I agree with Jimmy Lin [3]: “keep it simple”

“make sure costs don’t outweigh benefits”

Minimize “Yet Another Tool To Learn” (YATTL) as much as we can!](https://image.slidesharecdn.com/hadoopsummiteu201327022013final-130324085852-phpapp01/85/Hadoop-Summit-EU-2013-Parallel-Linear-Regression-IterativeReduce-and-YARN-12-320.jpg)



Bighead: Airbnb’s End-to-End Machine Learning Platform with Krishna Puttaswa...

Bighead: Airbnb’s End-to-End Machine Learning Platform with Krishna Puttaswa...Databricks Bighead is Airbnb's machine learning infrastructure that was created to:

- Standardize and simplify the ML development workflow;

- Reduce the time and effort to build ML models from weeks/months to days/weeks; and

- Enable more teams at Airbnb to utilize ML.

It provides shared services and tools for data management, model training/inference, and model management to make the ML process more efficient and production-ready. This includes services like Zipline for feature storage, Redspot for notebook environments, Deep Thought for online inference, and the Bighead UI for model monitoring.

Scaling out Driverless AI with IBM Spectrum Conductor - Kevin Doyle - H2O AI ...

Scaling out Driverless AI with IBM Spectrum Conductor - Kevin Doyle - H2O AI ...Sri Ambati IBM Spectrum Conductor can manage H2O Driverless AI instances at scale across multiple nodes in an enterprise data center. Key benefits include the ability to run multiple Driverless AI instances on the same host using GPUs, failover capabilities if an instance fails, and role-based access control for users. The integration improves productivity by providing a shared file system, workload management, and allowing easy start/stop of Driverless AI instances.

A Modern Interface for Data Science on Postgres/Greenplum - Greenplum Summit ...

A Modern Interface for Data Science on Postgres/Greenplum - Greenplum Summit ...VMware Tanzu This document discusses providing a modern interface for data science on Postgres and Greenplum databases. It introduces Ibis, a Python library that provides a DataFrame abstraction for SQL systems. Ibis allows defining complex data pipelines and transformations using deferred expressions, providing type checking before execution. The document argues that Ibis could be enhanced to support user-defined functions, saving results to tables, and data science modeling abstractions to provide a full-featured interface for data scientists on SQL databases.

When Apache Spark Meets TiDB with Xiaoyu Ma

When Apache Spark Meets TiDB with Xiaoyu Ma Databricks Over the past 10 years, big-data storage layers have mainly focused on analytical use cases. For analytical workloads, users usually offload data onto a Hadoop cluster and query files on HDFS. People struggle to handle modifications on append-only storage and to maintain fragile ETL pipelines.

On the other hand, although Spark SQL has proven to be an effective parallel query processing engine, some tricks common in traditional databases are not available because of the characteristics of the underlying storage. TiSpark sits directly on top of the storage engine of a distributed database (TiDB), extends Spark SQL’s planning with its own extensions, and uses unique features of the database storage engine to achieve functions not possible for Spark SQL on HDFS. With TiSpark, users are able to perform queries directly on changing / fresh data in real time.

The takeaways from this talk are twofold:

— How to integrate Spark SQL with a distributed database engine and the benefit of it

— How to leverage Spark SQL’s experimental methods to extend its capacity.

Using BigDL on Apache Spark to Improve the MLS Real Estate Search Experience ...

Using BigDL on Apache Spark to Improve the MLS Real Estate Search Experience ... Databricks BigDL-enabled deep learning analysis of photos attached to property listings in the Multiple Listings Services database allowed us to extract image features and identify similar-looking properties. We leveraged this information in a real-time property search application to improve the relevancy of user search results. Imagine identifying a property listing photo you like and having the system suggest other listings you should also review. Traditional real-estate MLS (multiple-listings services) search methods rely on SQL-type queries to search and serve real-estate listing results.

However, using BigDL in conjunction with the MLSListings standard APIs allows users to include photos as search parameters in real time, based on both image similarity and semantic feature search. The information extracted from listing images is used to improve the relevancy of the search results. To enable this use case, we implemented several CNNs using the BigDL framework on Microsoft Azure-hosted Apache Spark: an image feature extraction and tagging network, which extracts features from real estate images and classifies them according to Real Estate Standards Organization rules (overall house style, interior and exterior attributes, etc.); and an image similarity network, which compares images that belong to different properties based on their extracted features and creates a similarity score to be used in search results.

We’ll discuss the above networks in detail as well as run a live demo of real-estate search results. Key takeaways: a) Why invest in Spark BigDL from the start. b) Why choose a cloud-based solution from the start. c) Choice of Scala vs Python.

ML at the Edge: Building Your Production Pipeline with Apache Spark and Tens...

ML at the Edge: Building Your Production Pipeline with Apache Spark and Tens... Databricks The explosion of data volume in the years to come challenges the idea of a centralized cloud infrastructure that handles all business needs. Edge computing comes to the rescue by pushing computation and data analysis to the edge of the network, thus avoiding data exchange when that makes sense. One area where data exchange can impose a large overhead is scoring ML models, especially where the data to score are files such as images, e.g. in a computer vision application.

Another concern in some applications is keeping data as private as possible, and this is where keeping things local makes sense. In this talk we will discuss current needs and recent advances in model serving, such as newly introduced formats for pushing models to edge nodes (e.g. mobile phones), and how a unified model serving architecture could cover current and future needs for both data scientists and data engineers. This architecture is based, among other things, on training models in a distributed fashion with TensorFlow and leveraging Spark for cleaning data before training (e.g. using the TensorFlow connector).

Finally, we will describe a microservice-based approach for scoring models back on the cloud infrastructure side (where bandwidth can be high), e.g. using TensorFlow Serving, and for updating models remotely with a pull-model approach for edge devices. We will also talk about implementing the proposed architecture and how that might look in a modern deployment environment, e.g. Kubernetes.

Whats new in_mlflow

Whats new in_mlflowDatabricks In the last several months, MLflow has introduced significant platform enhancements that simplify machine learning lifecycle management. Expanded autologging capabilities, including a new integration with scikit-learn, have streamlined the instrumentation and experimentation process in MLflow Tracking. Additionally, schema management functionality has been incorporated into MLflow Models, enabling users to seamlessly inspect and control model inference APIs for batch and real-time scoring. In this session, we will explore these new features. We will share MLflow’s development roadmap, providing an overview of near-term advancements in the platform.

KFServing, Model Monitoring with Apache Spark and a Feature Store

KFServing, Model Monitoring with Apache Spark and a Feature StoreDatabricks In recent years, MLOps has emerged to bring DevOps processes to the machine learning (ML) development process, aiming at more automation in the execution of repetitive tasks and at smoother interoperability between tools. Among the different stages in the ML lifecycle, model monitoring involves the supervision of model performance over time, involving the combination of techniques in four categories: outlier detection, data drift detection, explainability and adversarial attacks. Most existing model monitoring tools follow a scheduled batch processing approach or analyse model performance using isolated subsets of the inference data. However, for the continuous monitoring of models, stream processing platforms show several advantages, including support for continuous data analytics, scalable processing of large amounts of data and first-class support for window-based aggregations useful for concept drift detection.

In this talk, we present an open-source platform for serving and monitoring models at scale based on Kubeflow’s model serving framework, KFServing, the Hopsworks Online Feature Store for enriching feature vectors with transformer in KFServing, and Spark and Spark Streaming as general purpose frameworks for monitoring models in production.

We also show how Spark Streaming can use the Hopsworks Feature Store to implement continuous data drift detection, where the Feature Store provides statistics on the distribution of feature values in training, and Spark Streaming computes the statistics on live traffic to the model, alerting if the live traffic differs significantly from the training data. We will include a live demonstration of the platform in action.

Saving Energy in Homes with a Unified Approach to Data and AI

Saving Energy in Homes with a Unified Approach to Data and AIDatabricks Energy wastage by residential buildings is a significant contributor to total worldwide energy consumption. Quby, an Amsterdam based technology company, offers solutions to empower homeowners to stay in control of their electricity, gas and water usage.

Plume - A Code Property Graph Extraction and Analysis Library

Plume - A Code Property Graph Extraction and Analysis LibraryTigerGraph See all on-demand Graph + AI Sessions: https://www.tigergraph.com/graph-ai-world-sessions/

Get TigerGraph: https://www.tigergraph.com/get-tigergraph/

Multi runtime serving pipelines for machine learning

Multi runtime serving pipelines for machine learningStepan Pushkarev The talk I gave at Scale By The Bay.

Deploying, Serving and monitoring machine learning models built with different ML frameworks in production. Envoy proxy powered serving mesh. TensorFlow, Spark ML, Scikit-learn and custom functions on CPU and GPU.

A Microservices Framework for Real-Time Model Scoring Using Structured Stream...

A Microservices Framework for Real-Time Model Scoring Using Structured Stream... Databricks Open-source technologies allow developers to build microservice frameworks for myriad real-time applications. One such application is real-time model scoring. In this session, we will showcase how to architect a microservice framework, in particular how to use it to build a low-latency, real-time model scoring system. At the core of the architecture lies Apache Spark’s Structured Streaming capability to deliver low-latency predictions, coupled with Docker and Flask as additional open-source tools for model service. In this session, you will walk away with:

* Knowledge of enterprise-grade model as a service

* Streaming architecture design principles enabling real-time machine learning

* Key concepts and building blocks for real-time model scoring

* Real-time and production use cases across industries, such as IIOT, predictive maintenance, fraud detection, sepsis etc.

Automated Production Ready ML at Scale

Automated Production Ready ML at Scale Databricks In this session you will learn how H&M has created a reference architecture for deploying its machine learning models on Azure using Databricks, following DevOps principles. The architecture is currently used in production and has been iterated on multiple times to solve some of the discovered pain points. The presenting team is currently responsible for ensuring that best practices are implemented on all H&M use cases, covering 100s of models across the entire H&M group.

This architecture will not only let data scientists use notebooks for exploration and modeling but also give engineers a way to build robust production-grade code for deployment. The session will also cover topics such as lifecycle management, traceability, automation, scalability and version control.

Building Intelligent Applications, Experimental ML with Uber’s Data Science W...

Building Intelligent Applications, Experimental ML with Uber’s Data Science W... Databricks In this talk, we will explore how Uber enables rapid experimentation with machine learning models and optimization algorithms through Uber’s Data Science Workbench (DSW). DSW covers a series of stages in a data scientist’s workflow, including data exploration, feature engineering, machine learning model training, testing and production deployment. DSW provides interactive notebooks for multiple languages with on-demand resource allocation, and lets users share their work through community features.

It also has support for notebooks and intelligent applications backed by Spark job servers. Deep learning applications based on TensorFlow and Torch can be brought into DSW smoothly, with resource management taken care of by the system. The environment in DSW is customizable: users can bring their own libraries and frameworks. Moreover, DSW provides support for Shiny and Python dashboards as well as many other in-house visualization and mapping tools.

In the second part of this talk, we will explore the use cases where custom machine learning models developed in DSW are productionized within the platform. Uber applies machine learning extensively to solve some hard problems. Some use cases include calculating the right prices for rides in over 600 cities and applying NLP technologies to customer feedback to offer safe rides and reduce support costs. We will look at various options evaluated for productionizing custom models (server-based and serverless). We will also look at how DSW integrates into Uber’s larger ML ecosystem, e.g. model/feature stores and other ML tools, to realize the vision of a complete ML platform for Uber.

H2O World - Survey of Available Machine Learning Frameworks - Brendan Herger

H2O World - Survey of Available Machine Learning Frameworks - Brendan HergerSri Ambati H2O World 2015 - Brendan Herger of Capital One

- Powered by the open source machine learning software H2O.ai. Contributors welcome at: https://github.com/h2oai

- To view videos on H2O open source machine learning software, go to: https://www.youtube.com/user/0xdata

Viewers also liked (20)

The power of RapidMiner, showing the direct marketing demo

The power of RapidMiner, showing the direct marketing demoWessel Luijben Direct marketing allows businesses to target specific customer demographics like age, gender, and marital status. It has expanded beyond mail to include digital channels like text, email, and online ads. RapidMiner's direct marketing wizard helps businesses invest in the highest converting marketing actions and reduce costs through improved targeting. It provides a table of top customers to target, shows which customer properties most impact response rates, and evaluates the predictive model to determine if more customer data is needed.

Statisticsfor businessproject solution

Statisticsfor businessproject solutionhuynguyenbac CASE 02: SAIGON COOPMART

Logistics and supply chain play an important role in, and are arguably a critical factor for, the success of Saigon Coopmart. Most supermarkets around the world follow the same model, in which a warehouse is placed next to the supermarket for stock storage and the warehouse is roughly the same size as the supermarket. However, due to harsh competition and weak finances, Saigon Coopmart decided to follow a different model with very small warehouses. This allows Saigon Coopmart to open more supermarkets, but in exchange its stock is only enough for a day, or two at most, compared with the ordinary model in which a warehouse can store enough stock for a week or more. As a consequence, Saigon Coopmart has to ship to its supermarkets much more frequently than competitors such as Big C.

Midterm

MidtermWilkes U 1) The team will predict fall undergraduate enrollment at the University of New Mexico using data from 1981 to 2009.

2) They will use simple linear regression to build models predicting enrollment based on January unemployment rates, June high school graduation rates, and monthly per capita income in Albuquerque.

3) The best predictor of enrollment is per capita income, which has an R-squared of 88.86% and standard error of 1366, indicating that as per capita income increases, enrollment also increases.

Chapter 16

Chapter 16Matthew L Levy The document discusses the general linear model (GLM) as an extension of simple and multiple linear regression models. It describes how the GLM allows for modeling more complex relationships between variables by including transformed, squared, and interaction terms. Specifically, it explains how curvilinear relationships can be modeled by adding squared terms and how interaction effects between two variables can be modeled by including an interaction term. The document also discusses transforming the dependent variable to correct for non-constant variance.

Financialmodeling

FinancialmodelingTalal Tahir The student conducted an independent linear regression analysis to model the relationship between the closing prices of the S&P 500 ETF (SPY) and McDonald's (MCD) stock. A linear regression model was fitted with MCD closing price as the response variable and SPY closing price as the predictor variable. The model found a statistically significant linear relationship between the two variables, with SPY price explaining about 75% of the variation in MCD price. When using the model to predict MCD's closing price based on SPY's actual later closing price, the model prediction was within 0.4% of the actual MCD price.

Qam formulas

Qam formulasAshu Jain This document outlines quantitative methods taught in a course on Quantitative Applications in Management. It discusses topics like arithmetic mean, median, mode, standard deviation, correlation, regression, time series analysis, and index numbers. Calculation methods are provided for individual series, discrete series and continuous series. Common statistical measures and their applications in business and management are covered.

Regression

Regression Ali Raza This document presents information about regression analysis. It defines regression as the dependence of one variable on another and lists the objectives as defining regression, describing its types (simple, multiple, linear), assumptions, models (deterministic, probabilistic), and the method of least squares. Examples are provided to illustrate simple regression of computer speed on processor speed. Formulas are given to calculate the regression coefficients and lines for predicting y from x and x from y.

ForecastIT 2. Linear Regression & Model Statistics

ForecastIT 2. Linear Regression & Model Statistics DeepThought, Inc. This lesson begins by explaining the characteristics and uses of the linear regression method, which attempts to fit the best line through the data. Using an example and the forecasting process, we apply the linear regression method to create a model and produce a forecast from it.

Regression: A skin-deep dive

Regression: A skin-deep diveabulyomon A quick introduction to linear and logistic regression using Python. Part of the Data Science Bootcamp held in Amman by the Jordan Open Source Association Dec/Jan 2015. Reference code can be found on Github https://github.com/jordanopensource/data-science-bootcamp/tree/master/MachineLearning/Session1

[Xin yan, xiao_gang_su]_linear_regression_analysis(book_fi.org)

[Xin yan, xiao_gang_su]_linear_regression_analysis(book_fi.org)mohamedchaouche This document provides an overview and summary of linear regression analysis theory and computing. It discusses linear regression models and the goals of regression analysis. It also introduces some key topics that will be covered in the book, including simple and multiple linear regression, model diagnosis, generalized linear models, Bayesian linear regression, and computational methods like least squares estimation. The book aims to serve as a one-semester textbook on fundamental regression analysis concepts for graduate students.

C2.1 intro

C2.1 introDaniel LIAO The document is a presentation on machine learning and simple linear regression. It introduces the concepts of a regression model, fitting a linear regression line to data by minimizing the residual sum of squares, and using the fitted line to make predictions. It discusses representing the linear regression model as an equation relating the output variable (y) to the input or feature (x), with parameters (w0, w1) estimated from training data. The parameters can be estimated by taking the gradient of the residual sum of squares and setting it equal to zero to find the optimal values for w0 and w1 that best fit the data.

Chapt 11 & 12 linear & multiple regression minitab

Chapt 11 & 12 linear & multiple regression minitabBoyu Deng The document discusses linear regression and correlation. It defines linear regression as finding the line of best fit that minimizes the sum of the squared residuals. The regression coefficients (slope and intercept) that achieve this are calculated using sums of squares and cross-products. Hypothesis tests are used to determine if the regression coefficients are statistically significant. Confidence and prediction intervals are also discussed to quantify the uncertainty in the regression line and predicted values.

Simple linear regression project

Simple linear regression projectJAPAN SHAH This document describes a simple linear regression analysis to model the relationship between the number of followers on Twitter (response variable) and years since joining Twitter, number of tweets, photos/videos posted, and people followed (predictor variables) for the top 40 most followed Twitter accounts. The analysis found that years since joining had the strongest linear relationship with followers. The regression equation estimated followers would increase by 12.52 million for each additional year on Twitter. Residual analyses found the model fit the data well although the residuals were not normally distributed.

Simple Linear Regression

Simple Linear RegressionSharlaine Ruth This document provides information about regression analysis and linear regression. It defines regression analysis as using relationships between quantitative variables to predict a dependent variable from independent variables. Linear regression finds the best fitting straight line relationship between variables. The simple linear regression equation is given as Y = a + bX, where a and b are estimated parameters calculated from sample data. An example is worked through, showing how to calculate the regression equation from data, graph the relationship, and use the equation to estimate values.

Statr session 23 and 24

Statr session 23 and 24Ruru Chowdhury This document provides an overview of simple and multiple linear regression analysis. It discusses key concepts such as:

- Dependent and independent variables in bivariate linear regression

- Using scatter plots to explore relationships

- Estimating regression coefficients and equations for simple and multiple regression models

- Using regression models to predict outcomes based on independent variable values

- Conducting statistical tests on overall regression models and individual coefficients

Ch14

Ch14Evil Man This document summarizes key concepts in simple linear regression:

- Simple linear regression models the relationship between a dependent variable y and independent variable x as y = β0 + β1x + ε, seeking to estimate β0 and β1 using the least squares method.

- The least squares method minimizes the sum of squared residuals to calculate the slope b1 and y-intercept b0 of the estimated regression equation ŷ = b0 + b1x.

- The coefficient of determination R2 indicates how well the regression line represents the data, calculated as the proportion of total variation explained by the regression.

- A worked example illustrates finding the estimated regression equation, R2, and correlation

Simple linear regression

Simple linear regression Maria Theresa This Simple Regression presentation is in partial fulfillment of the requirements in PA 297 Research for Public Administrators, presented by Atty. Gayam, Dr. Cabling and Mr. Cagampang

Logistic regression for ordered dependant variable with more than 2 levels

Logistic regression for ordered dependant variable with more than 2 levelsArup Guha This document discusses multinomial logistic regression models. Multinomial logistic regression can handle dependent variables with more than two categories that may be ordinal (ordered categories) or nominal (unordered categories). The document focuses on proportional odds cumulative logit models, which model ordinal dependent variables by considering the natural ordering of categories. It provides an example of using SAS code to fit a proportional odds model to model the impact of radiation exposure on human health.

Simple linear regression

Simple linear regressionAvjinder (Avi) Kaler The document discusses simple linear regression. It defines key terms like regression equation, regression line, slope, intercept, residuals, and residual plot. It provides examples of using sample data to generate a regression equation and evaluating that regression model. Specifically, it shows generating a regression equation from bivariate data, checking assumptions visually through scatter plots and residual plots, and interpreting the slope as the marginal change in the response variable from a one unit change in the explanatory variable.

Chapter13

Chapter13rwmiller The document discusses simple linear regression and correlation methods. It defines deterministic and probabilistic models for describing the relationship between two variables. A simple linear regression model assumes a population regression line with intercept a and slope b, where observations may deviate from the line by some random error e. Key assumptions of the model are that e has a normal distribution with mean 0 and constant variance across values of x, and errors are independent. The slope b estimates the average change in y per unit change in x.


Similar to Hadoop Summit EU 2013: Parallel Linear Regression, IterativeReduce, and YARN (20)

Parallel Linear Regression in Interative Reduce and YARN

Parallel Linear Regression in Interative Reduce and YARNDataWorks Summit Online learning techniques, such as Stochastic Gradient Descent (SGD), are powerful when applied to risk minimization and convex games on large problems. However, their sequential design prevents them from taking advantage of newer distributed frameworks such as Hadoop/MapReduce. In this session, we will take a look at how we parallelized linear regression parameter optimization on the next-gen YARN framework Iterative Reduce.

Strata + Hadoop World 2012: Knitting Boar

Strata + Hadoop World 2012: Knitting BoarCloudera, Inc. In this session, we will introduce “Knitting Boar”, an open-source Java library for performing distributed online learning on a Hadoop cluster under YARN. We will give an overview of how Woven Wabbit works and examine the lessons learned from YARN application construction.

Knitting boar atl_hug_jan2013_v2

Knitting boar atl_hug_jan2013_v2Josh Patterson This document discusses machine learning and the Knitting Boar parallel machine learning library. It provides an introduction to machine learning concepts like classification, recommendation, and clustering. It also introduces Mahout for machine learning on Hadoop. The document describes the Knitting Boar library, which uses YARN to parallelize Mahout's stochastic gradient descent algorithm. It shows how Knitting Boar allows machine learning models to train faster by distributing work across multiple nodes.

KnittingBoar Toronto Hadoop User Group Nov 27 2012

KnittingBoar Toronto Hadoop User Group Nov 27 2012Adam Muise This document discusses machine learning and parallel iterative algorithms. It provides an introduction to machine learning and Mahout. It then describes Knitting Boar, a system for parallelizing stochastic gradient descent on Hadoop YARN. Knitting Boar partitions data among workers that perform online logistic regression in batches. The workers send gradient updates to a master node, which averages the updates to produce a new global model. Experimental results show Knitting Boar achieves roughly linear speedup. The document concludes by discussing developing YARN applications and the Knitting Boar codebase.

Knitting boar - Toronto and Boston HUGs - Nov 2012

Josh Patterson

1) The document discusses machine learning and parallel iterative algorithms like stochastic gradient descent. It introduces the Mahout machine learning library and describes an implementation of parallel SGD called Knitting Boar that runs on YARN.

2) Knitting Boar parallelizes Mahout's SGD algorithm by having worker nodes process partitions of the training data in parallel while a master node merges their results.

3) The author argues that approaches like Knitting Boar and IterativeReduce provide better ways to implement machine learning algorithms for big data compared to traditional MapReduce.

Apache Hadoop India Summit 2011 Keynote talk "Programming Abstractions for Sm...

Yahoo Developer Network: This document discusses programming abstractions for smart applications on clouds. It proposes a new programming model called Deformable Mesh Abstraction (DMA) that addresses limitations in existing models like MapReduce. DMA allows tasks to recursively spawn new tasks at runtime, supports efficient communication through a shared structure, and can operate on changing datasets. The document describes how DMA can model heuristic problem solving and presents case studies applying DMA to AI planners. It also discusses how DMA could be extended to support file systems and integrated with Hadoop.

Introduction to map reduce s. jency jayastina II MSC COMPUTER SCIENCE BON SEC...

jencyjayastina: The document discusses MapReduce, a programming model for processing large datasets in parallel across a distributed cluster. It describes how MapReduce works by specifying computation in terms of mapping and reducing functions. The underlying runtime system automatically parallelizes the computation and handles failures and communication. MapReduce is the processing engine of Apache Hadoop and was derived from Google's MapReduce. It processes huge amounts of data through mapping and reducing steps: the mapping step converts data into key-value pairs, while the reducing step combines the output of the mapping step into smaller sets of tuples. MapReduce is mainly used for parallel processing of large datasets stored in Hadoop clusters.

Hadoop mapreduce and yarn frame work- unit5

RojaT4: The document discusses MapReduce and the Hadoop framework. It provides an overview of how MapReduce works, examples of problems it can solve, and how Hadoop implements MapReduce at scale across large clusters in a fault-tolerant manner using the HDFS distributed file system and YARN resource management.

Characterization of hadoop jobs using unsupervised learning

João Gabriel Lima: This document summarizes research characterizing Hadoop jobs using unsupervised learning techniques. The researchers clustered over 11,000 Hadoop jobs from Yahoo production clusters into 8 groups based on job metrics. The centroids of each cluster represent characteristic jobs and show differences in map/reduce tasks and data processed. Identifying common job profiles can help benchmark and optimize Hadoop performance.

Big Data on Implementation of Many to Many Clustering

paperpublications3: Abstract: With the development of information technology, the scale of data is increasing quickly. This massive data poses a great challenge for data processing and classification. Several algorithms have been proposed to cluster such data efficiently. One of them is the random forest algorithm, which is used for feature subset selection. Feature selection involves identifying a subset of the most useful features that produces results compatible with those of the original, entire set of features. It is achieved by classifying the given data. Efficiency is measured by the time required to find a subset of features, and effectiveness is related to the quality of that subset. The existing system uses a fast clustering-based feature selection algorithm, which is proven to be powerful, but when the size of the dataset increases rapidly, the algorithm becomes less efficient because clustering the datasets takes considerably more time. Hence, a new method of implementation is proposed in this project to cluster the data efficiently and persist it in the back-end database, reducing the time required. This is achieved by a scalable random forest algorithm. The scalable random forest is implemented using MapReduce programming (an implementation of Big Data) to cluster the data efficiently. It works in two phases: the first gathers the datasets and persists them in the datastore, and the second clusters and classifies the data. This process is implemented entirely on Google App Engine’s Hadoop platform, which is a widely used open-source implementation of Google's distributed file system using the MapReduce framework for scalable distributed computing or cloud computing. The MapReduce programming model provides an efficient framework for processing large datasets in a highly parallel manner, and it has become the most popular parallel model for data processing on cloud computing platforms. However, redesigning traditional machine learning algorithms for the MapReduce programming framework is necessary when dealing with massive datasets.

Keywords: Data mining, Hadoop, Map Reduce, Clustering Tree.

Title: Big Data on Implementation of Many to Many Clustering

Author: Ravi. R, Michael. G

ISSN 2350-1022

International Journal of Recent Research in Mathematics Computer Science and Information Technology

Paper Publications

E031201032036

ijceronline: International Journal of Computational Engineering Research (IJCER) is an international online journal published monthly in English. The journal publishes original research work that contributes significantly to scientific knowledge in engineering and technology.

Mapreduce Osdi04

Jyotirmoy Dey: The document describes MapReduce, a programming model and implementation for processing large datasets across clusters of computers. The model uses map and reduce functions to parallelize computations. Map processes key-value pairs to generate intermediate pairs, and reduce merges values with the same intermediate key. The implementation handles parallelization, distribution, and fault tolerance transparently. Hundreds of programs have been implemented using MapReduce at Google, processing terabytes of data on thousands of machines daily.

Hadoop at JavaZone 2010

Matthew McCullough: Hadoop is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Hadoop features include a distributed file system called HDFS that stores data on commodity machines, providing fault tolerance. It also provides a programming model called MapReduce that allows users to write applications as a set of map and reduce functions that can automatically parallelize across a distributed system.

Mapreduce - Simplified Data Processing on Large Clusters

Abhishek Singh: The document describes MapReduce, a programming model and implementation for processing large datasets across clusters of computers. It allows users to write map and reduce functions to parallelize tasks. The MapReduce library automatically parallelizes jobs, distributes data and tasks, handles failures and coordinates communication between machines. It is scalable, processing terabytes of data on thousands of machines, and easy for programmers without parallel experience to use.

map Reduce.pptx

habibaabderrahim1: This document provides an introduction to the MapReduce programming model. It describes how MapReduce, inspired by Lisp functions, works by dividing tasks into mapping and reducing parts that are distributed and processed in parallel. It then gives examples of using MapReduce for word counting and calculating total sales. It also provides details on MapReduce daemons in Hadoop and includes demo code for summing array elements in Java and for word counting on a text file using the Hadoop framework in Python.

Map Reduce

Sri Prasanna: The document provides an overview of MapReduce, including:

1) MapReduce is a programming model and implementation that allows for large-scale data processing across clusters of computers. It handles parallelization, distribution, and reliability.

2) The programming model involves mapping input data to intermediate key-value pairs and then reducing by key to output results.

3) Example uses of MapReduce include word counting and distributed searching of text.
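The word-counting use mentioned in the list above can be sketched in a few lines. This is an in-memory illustration of the map, group-by-key, and reduce steps only; it does not use Hadoop, and the names `map_fn`, `reduce_fn`, and `run_mapreduce` are hypothetical helpers rather than part of any library.

```python
from collections import defaultdict


def map_fn(_key, line):
    """Map step: emit an intermediate (word, 1) pair for every word in a line."""
    for word in line.lower().split():
        yield word, 1


def reduce_fn(word, counts):
    """Reduce step: merge all counts that share the same intermediate key."""
    return word, sum(counts)


def run_mapreduce(records, mapper, reducer):
    """Apply the mapper to each record, group values by key, then reduce.

    A real framework would distribute these phases across machines and
    handle failures; here everything runs in one process.
    """
    groups = defaultdict(list)
    for key, value in records:
        for out_key, out_value in mapper(key, value):
            groups[out_key].append(out_value)
    return dict(reducer(k, vs) for k, vs in sorted(groups.items()))


if __name__ == "__main__":
    lines = ["the quick fox", "The lazy dog the end"]
    print(run_mapreduce(enumerate(lines), map_fn, reduce_fn))
```

The input key (here the line index from `enumerate`) is ignored by the mapper, which mirrors the common pattern where only the record value matters for word counting.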

Next generation analytics with yarn, spark and graph lab

Impetus Technologies: This document provides an overview of next generation analytics with YARN, Spark and GraphLab. It discusses how YARN addressed limitations of Hadoop 1.0 such as scalability, locality awareness and shared cluster utilization. It also describes the Berkeley Data Analytics Stack (BDAS), which includes Spark, and how companies like Ooyala and Conviva use it for tasks like iterative machine learning. GraphLab is presented as ideal for processing natural graphs, and the PowerGraph framework partitions such graphs for better parallelism. PMML is introduced as a standard for defining predictive models, along with how a Naive Bayes model can be defined and scored using PMML with Spark and Storm.

Yarn spark next_gen_hadoop_8_jan_2014

Vijay Srinivas Agneeswaran, Ph.D: This deck was presented at the Spark meetup in Bangalore. The key idea behind the presentation was to focus on the limitations of Hadoop MapReduce and introduce both Hadoop YARN and Spark in this context. An overview of the other aspects of the Berkeley Data Analytics Stack was also provided.

Finalprojectpresentation

SANTOSH WAYAL: The document proposes a system called Twiche that uses caching to improve the efficiency of incremental MapReduce jobs. Twiche indexes cached items from the map phase by their original input and applied operations. This allows it to identify duplicate computations and avoid reprocessing the same data. The experimental results show that Twiche can eliminate all duplicate tasks in incremental MapReduce jobs, reducing execution time and CPU utilization compared to traditional MapReduce.


More from Josh Patterson (20)

Patterson Consulting: What is Artificial Intelligence?

Josh Patterson: A review of the current artificial intelligence industry and what is real in artificial intelligence, deep learning, and applied machine learning.

What is Artificial Intelligence

Josh Patterson: A talk on artificial intelligence from the October 2017 conference "All Things Distributed" in Raleigh, NC.

Smart Data Conference: DL4J and DataVec

Josh Patterson: This document discusses using DL4J and DataVec to build production-ready deep learning workflows for time series and text data. It provides an example of modeling sensor data with recurrent neural networks (RNNs) and character-level text generation with LSTMs. Key points include:

- DL4J is a deep learning framework for Java that runs on Spark and supports CPU/GPU. DataVec is a tool for data preprocessing.

- The document demonstrates loading and transforming sensor time series data with DataVec and training an RNN on the data with DL4J.

- It also shows vectorizing character-level text data from beer reviews with DataVec and using an LSTM in DL4J to generate new

Deep Learning: DL4J and DataVec

Josh Patterson: This document discusses using DL4J and DataVec to build deep learning workflows for modeling time series sensor data with recurrent neural networks. It provides an example of loading and transforming sensor data with DataVec, configuring an RNN with DL4J, and training the model both locally and distributed on Spark. The overall workflow involves extracting, transforming, and loading data with DataVec, vectorizing it, modeling with DL4J, evaluating performance, and deploying trained models for execution on Spark/Hadoop platforms.

Deep Learning and Recurrent Neural Networks in the Enterprise

Josh Patterson: This document discusses deep learning and recurrent neural networks. It provides an overview of deep learning, including definitions, automated feature learning, and popular deep learning architectures. It also describes DL4J, a tool for building deep learning models in Java and Scala, and discusses applications of recurrent neural networks for tasks like anomaly detection using time series data and audio processing.

Modeling Electronic Health Records with Recurrent Neural Networks