
Microsoft Azure Big Data Analytics

- 1. Big Data Analytics in the Cloud Microsoft Azure Cortana Intelligence Suite Mark Kromer Microsoft Azure Cloud Data Architect @kromerbigdata @mssqldude

- 2. What is Big Data Analytics?
  - Tech Target: “… the process of examining large data sets to uncover hidden patterns, unknown correlations, market trends, customer preferences and other useful business information.”
  - Techopedia: “… the strategy of analyzing large volumes of data, or big data. This big data is gathered from a wide variety of sources, including social networks, videos, digital images, sensors, and sales transaction records. The aim in analyzing all this data is to uncover patterns and connections that might otherwise be invisible, and that might provide valuable insights about the users who created it. Through this insight, businesses may be able to gain an edge over their rivals and make superior business decisions.”
  - Requires lots of data wrangling and Data Engineers
  - Requires Data Scientists to uncover patterns from complex raw data
  - Requires Business Analysts to provide business value from multiple data sources
  - Requires additional tools and infrastructure not provided by traditional database and BI technologies
  - Why Cloud for Big Data Analytics?
    - Quick and easy to stand up new, large big data architectures
    - Elastic scale
    - Metered pricing
    - Quickly evolve architectures to rapidly changing landscapes

- 4. Action People Automated Systems Apps Web Mobile Bots Intelligence Dashboards & Visualizations Cortana Bot Framework Cognitive Services Power BI Information Management Event Hubs Data Catalog Data Factory Machine Learning and Analytics HDInsight (Hadoop and Spark) Stream Analytics Intelligence Data Lake Analytics Machine Learning Big Data Stores SQL Data Warehouse Data Lake Store Data Sources Apps Sensors and devices Data

- 5. What it is: Microsoft’s Cloud Platform including IaaS, PaaS and SaaS. When to use it: • Storage and Data • Networking • Security • Services • Virtual Machines • On-demand Resources and Services

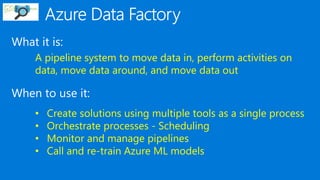

- 6. What it is: A pipeline system to move data in, perform activities on data, move data around, and move data out. When to use it: • Create solutions using multiple tools as a single process • Orchestrate processes (scheduling) • Monitor and manage pipelines • Call and re-train Azure ML models

- 9. Example - Churn. Azure Data Factory stages: Ingest, Transform & Analyze, Publish. Data sets shown: Call Log Files, Customer Table, Customer Call Details, Customers Likely to Churn, Customer Churn Table

- 10. Simple ADF: • Business Goal: Transform and analyze web logs each month • Design Process: Transform raw web logs using a Hive query, storing the results in Blob Storage • Flow: Web logs loaded to Blob → HDInsight Hive query to transform log entries → Files ready for analysis and use in Azure ML

- 11. PowerShell ADF Example
  1. `Add-AzureAccount` and enter the user name and password.
  2. `Get-AzureSubscription` to view all the subscriptions for this account.
  3. `Select-AzureSubscription` to select the subscription that you want to work with.
  4. `Switch-AzureMode AzureResourceManager`
  5. `New-AzureResourceGroup -Name ADFTutorialResourceGroup -Location "West US"`
  6. `New-AzureDataFactory -ResourceGroupName ADFTutorialResourceGroup -Name DataFactory(your alias)Pipeline -Location "West US"`

- 12. Using Visual Studio • Use in mature dev environments • Use when integrated into larger development process

- 13. What it is: A scaling data warehouse service in the cloud • MPP SQL Server in the cloud • Elastic scale data warehousing. When to use it: • When you need a large-data BI solution in the cloud • When you need pause-able scale-out compute

- 14. Elastic scale & performance • Real-time elasticity: resize in <1 minute • On-demand compute: expand or reduce as needed • Pause the data warehouse to save on compute costs, e.g., pause during non-business hours

- 15. Storage can be as big or small as required Users can execute niche workloads without re-scanning data Elastic scale & performance Scale

- 18. SELECT COUNT_BIG(*) FROM dbo.[FactInternetSales]; SELECT COUNT_BIG(*) FROM dbo.[FactInternetSales]; SELECT COUNT_BIG(*) FROM dbo.[FactInternetSales]; SELECT COUNT_BIG(*) FROM dbo.[FactInternetSales]; SELECT COUNT_BIG(*) FROM dbo.[FactInternetSales]; SELECT COUNT_BIG(*) FROM dbo.[FactInternetSales]; Compute Control

- 19. What it is: Data storage (WebHDFS) and distributed data processing engines (Hive, Spark, HBase, Storm, U-SQL). When to use it: • Low-cost, high-throughput data store • Non-relational data • Larger storage limits than Blobs

- 20. Ingest all data regardless of requirements Store all data in native format without schema definition Do analysis Using analytic engines like Hadoop and ADLA Interactive queries Batch queries Machine Learning Data warehouse Real-time analytics Devices

- 21. Azure Data Lake layers (diagram labels): Analytics (ADL Analytics with U-SQL, HDInsight with Hive) • YARN • WebHDFS • Storage (ADL Store)

- 22. Introducing ADLS: Azure Data Lake Store, a hyper-scale repository for big data analytics workloads • No limits to scale • Store any data in its native format • Hadoop File System (HDFS) for the cloud • Optimized for analytic workload performance • Enterprise-grade authentication, access control, audit, encryption at rest

- 23. No limits to scale • No fixed limits on: amount of data stored, how long data can be stored, number of files, size of the individual files, ingestion/egress throughput • Seamlessly scales from a few KBs to several PBs

- 24. No limits to storage • Each file in ADL Store is sliced into blocks • Blocks are distributed across multiple data nodes in the backend storage system • With a sufficient number of backend storage data nodes, files of any size can be stored • Backend storage runs in the Azure cloud, which has virtually unlimited resources • Metadata is stored about each file; there is no limit to metadata either. (Diagram: an Azure Data Lake Store file split into blocks that are spread across backend storage data nodes.)
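
The block slicing described on this slide can be illustrated with a small sketch. This is a toy model only, not how ADL Store is implemented: the block size, the round-robin placement policy, and the names `slice_into_blocks` and `place_blocks` are all assumptions made for illustration.

```python
from collections import defaultdict


def slice_into_blocks(data: bytes, block_size: int) -> list[bytes]:
    """Cut a file's bytes into fixed-size blocks (the last block may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_blocks(block_count: int, node_count: int) -> dict[int, list[int]]:
    """Assign block indexes to data nodes round-robin (hypothetical policy).

    Returns a mapping of node id -> list of block indexes stored on that node.
    """
    placement = defaultdict(list)
    for index in range(block_count):
        placement[index % node_count].append(index)
    return dict(placement)


file_bytes = b"0123456789"                      # a 10-byte "file"
blocks = slice_into_blocks(file_bytes, block_size=4)
placement = place_blocks(len(blocks), node_count=3)

# Per-file metadata: enough to find and reassemble every block later.
metadata = {
    "length": len(file_bytes),
    "block_count": len(blocks),
    "placement": placement,
}
print(metadata)
```

Because the file is only ever addressed through its blocks, adding data nodes raises the maximum file size without changing the slicing logic, which is the point the slide makes.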

- 25. Massive throughput • Through read parallelism, ADL Store provides massive throughput • Each read operation on an ADL Store file results in multiple read operations executed in parallel against the backend storage data nodes. (Diagram: one read operation on an Azure Data Lake Store file fans out to the blocks held on the backend storage data nodes.)
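
A minimal sketch of the read parallelism idea, assuming an in-memory dictionary stands in for the backend data nodes. The `DATA_NODES` layout and the `read_block` and `read_file` helpers are hypothetical; the real service's protocol is not described in the slides.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in for backend storage: node id -> {block index: bytes}.
DATA_NODES = {
    0: {0: b"big ", 3: b"the "},
    1: {1: b"data ", 4: b"cloud"},
    2: {2: b"in "},
}


def read_block(location: tuple[int, int]) -> bytes:
    """Fetch one block from the node that holds it."""
    node_id, block_index = location
    return DATA_NODES[node_id][block_index]


def read_file(block_locations: list[tuple[int, int]]) -> bytes:
    """Issue one read per block in parallel and reassemble the file."""
    with ThreadPoolExecutor(max_workers=len(block_locations)) as pool:
        # Executor.map() yields results in input order, so the blocks are
        # joined in file order even if the reads finish out of order.
        return b"".join(pool.map(read_block, block_locations))


# (node id, block index) pairs in file order.
locations = [(0, 0), (1, 1), (2, 2), (0, 3), (1, 4)]
content = read_file(locations)
print(content.decode())
```

A single logical read becomes five block reads spread over three nodes, so throughput grows with the number of nodes that hold parts of the file.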

- 26. Enterprise grade security • Enterprise-grade security permits even sensitive data to be stored securely • Regulatory compliance can be enforced • Integrates with Azure Active Directory for authentication • Data is encrypted at rest and in flight • POSIX-style permissions on files and directories • Audit logs for all operations

- 27. Enterprise grade availability and reliability • Azure maintains 3 replicas of each data object per region across three fault and upgrade domains • Each create or append operation on a replica is replicated to the other two • Writes are committed to the application only after all replicas are successfully updated • Read operations can go against any replica • Provides ‘read-after-write’ consistency • Data is never lost or unavailable even under failures. (Diagram: a write goes to Replica 1, Replica 2 and Replica 3 across fault/upgrade domains before the commit.)
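
The write path on this slide (update all replicas, then commit) can be sketched as follows. This is a simplified single-process model under stated assumptions: the `ReplicatedObject` class and its methods are hypothetical, and failure handling, fault domains, and networking are left out.

```python
class ReplicatedObject:
    """Toy model of one data object kept as three in-memory replicas."""

    def __init__(self, replica_count: int = 3):
        self.replicas = [bytearray() for _ in range(replica_count)]

    def append(self, payload: bytes) -> bool:
        """Apply the append to every replica, then acknowledge the commit.

        The acknowledgement (the return value) is produced only after the
        loop has updated all replicas, mirroring the rule that writes are
        committed only after all replicas are successfully updated.
        """
        for replica in self.replicas:
            replica.extend(payload)
        return True

    def read(self, replica_index: int) -> bytes:
        """Reads may be served by any replica."""
        return bytes(self.replicas[replica_index])


obj = ReplicatedObject()
committed = obj.append(b"event-1;")
committed = obj.append(b"event-2;") and committed

# Read-after-write: once the append is acknowledged, every replica
# returns the same, fully updated content.
views = {obj.read(i) for i in range(3)}
print(committed, views)
```

Since the acknowledgement is withheld until every replica holds the new bytes, a reader can pick any replica after a commit and still see the latest write.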

- 28. Enterprise-grade • Limitless scale • Productivity from day one • Easy and powerful data preparation • All data

- 29. Developing big data apps: Visual Studio • U-SQL, Hive, & Pig • .NET

- 30. Work across all cloud data Azure Data Lake Analytics Azure SQL DW Azure SQL DB Azure Storage Blobs Azure Data Lake Store SQL DB in an Azure VM

- 31. Simplified management and administration

- 32. What is U-SQL? • A hyper-scalable, highly extensible language for preparing, transforming and analyzing all data • Allows users to focus on the what, not the how, of business problems • Built on familiar languages (SQL and C#) and supported by a fully integrated development environment • Built for data developers & scientists

- 33. REFERENCE ASSEMBLY MyDB.MyAssembly; CREATE TABLE T( cid int, first_order DateTime, last_order DateTime, order_count int, order_amount float ); @o = EXTRACT oid int, cid int, odate DateTime, amount float FROM "/input/orders.txt" USING Extractors.Csv(); @c = EXTRACT cid int, name string, city string FROM "/input/customers.txt" USING Extractors.Csv(); @j = SELECT c.cid, MIN(o.odate) AS firstorder, MAX(o.odate) AS lastorder, COUNT(o.oid) AS ordercnt, SUM(o.amount) AS totalamount FROM @c AS c LEFT OUTER JOIN @o AS o ON c.cid == o.cid WHERE c.city.StartsWith("New") && MyNamespace.MyFunction(o.odate) > 10 GROUP BY c.cid; OUTPUT @j TO "/output/result.txt" USING new MyData.Write(); INSERT INTO T SELECT * FROM @j;

- 35. EXTRACT Expression @s = EXTRACT a string, b int FROM "filepath/file.csv" USING Extractors.Csv(encoding: Encoding.Unicode); • Built-in Extractors: Csv, Tsv, Text with lots of options • Custom Extractors: e.g., JSON, XML, etc. OUTPUT Expression OUTPUT @s TO "filepath/file.csv" USING Outputters.Csv(); • Built-in Outputters: Csv, Tsv, Text • Custom Outputters: e.g., JSON, XML, etc. (see http://usql.io) Filepath URIs • Relative URI to default ADL Storage account: "filepath/file.csv" • Absolute URIs: • ADLS: "adl://account.azuredatalakestore.net/filepath/file.csv" • WASB: "wasb://container@account/filepath/file.csv"
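
The three file path forms listed on this slide can be told apart mechanically. The sketch below uses Python's standard `urllib.parse` only to show which part of each URI carries the account, container, and path; the `describe` helper is hypothetical and is not part of any Azure SDK.

```python
from urllib.parse import urlsplit


def describe(uri: str) -> dict:
    """Classify a U-SQL style file path and pull out its components."""
    parts = urlsplit(uri)
    if not parts.scheme:
        # No scheme: resolved against the default ADL Storage account.
        return {"kind": "relative", "path": uri}
    if parts.scheme == "adl":
        return {"kind": "adls", "account_host": parts.hostname, "path": parts.path}
    if parts.scheme == "wasb":
        # In container@account, the part before '@' parses as the user name.
        return {
            "kind": "wasb",
            "container": parts.username,
            "account": parts.hostname,
            "path": parts.path,
        }
    raise ValueError(f"unsupported scheme: {parts.scheme}")


relative = describe("filepath/file.csv")
adls = describe("adl://account.azuredatalakestore.net/filepath/file.csv")
wasb = describe("wasb://container@account/filepath/file.csv")
print(relative, adls, wasb, sep="\n")
```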

- 36. • Create assemblies • Reference assemblies • Enumerate assemblies • Drop assemblies • Visual Studio makes registration easy! • CREATE ASSEMBLY db.assembly FROM @path; • CREATE ASSEMBLY db.assembly FROM byte[]; • Can also include additional resource files • REFERENCE ASSEMBLY db.assembly; • Referencing .NET Framework assemblies • Always accessible system namespaces: • U-SQL specific (e.g., for SQL.MAP) • All provided by System.dll, System.Core.dll, System.Data.dll, System.Runtime.Serialization.dll, mscorlib.dll (e.g., System.Text, System.Text.RegularExpressions, System.Linq) • Add all other .NET Framework assemblies with: REFERENCE SYSTEM ASSEMBLY [System.XML]; • Enumerating assemblies • PowerShell command • U-SQL Studio Server Explorer • DROP ASSEMBLY db.assembly;

- 37. 'USING' csharp_namespace | Alias '=' csharp_namespace_or_class. Examples: DECLARE @input string = "somejsonfile.json"; REFERENCE ASSEMBLY [Newtonsoft.Json]; REFERENCE ASSEMBLY [Microsoft.Analytics.Samples.Formats]; USING Microsoft.Analytics.Samples.Formats.Json; @data0 = EXTRACT IPAddresses string FROM @input USING new JsonExtractor("Devices[*]"); USING json = [Microsoft.Analytics.Samples.Formats.Json.JsonExtractor]; @data1 = EXTRACT IPAddresses string FROM @input USING new json("Devices[*]");

- 38. Simple pattern language on filename and path @pattern string = "/input/{date:yyyy}/{date:MM}/{date:dd}/{*}.{suffix}"; • Binds two columns date and suffix • Wildcards the filename • Limits on number of files (Current limit 800 and 3000 being increased in next refresh) Virtual columns EXTRACT name string , suffix string // virtual column , date DateTime // virtual column FROM @pattern USING Extractors.Csv(); • Refer to virtual columns in query predicates to get partition elimination (otherwise you will get a warning)
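
To make the virtual-column idea concrete, here is a rough Python imitation of the file set pattern above. It is a sketch under assumptions: the regular expression is a hand translation of `/input/{date:yyyy}/{date:MM}/{date:dd}/{*}.{suffix}`, the sample paths are invented, and the U-SQL engine's actual matching rules may differ.

```python
import re
from datetime import datetime

# Hand-written regex equivalent of the pattern on the slide (an assumption).
FILE_SET = re.compile(
    r"^/input/(?P<yyyy>\d{4})/(?P<MM>\d{2})/(?P<dd>\d{2})/"
    r"(?P<name>[^/]*)\.(?P<suffix>[^/.]+)$"
)


def virtual_columns(path: str):
    """Return the virtual columns bound by the pattern, or None if no match."""
    match = FILE_SET.match(path)
    if match is None:
        return None
    date = datetime(int(match["yyyy"]), int(match["MM"]), int(match["dd"]))
    return {"date": date, "suffix": match["suffix"]}


# Invented sample paths.
paths = [
    "/input/2016/01/15/a.csv",
    "/input/2016/02/03/b.csv",
    "/input/2016/02/04/c.tsv",
    "/other/x.csv",
]

# A predicate on the virtual column `date` decides which files are opened
# at all; files that fail it are never read.
cutoff = datetime(2016, 2, 1)
selected = [
    p for p in paths
    if (cols := virtual_columns(p)) is not None and cols["date"] >= cutoff
]
print(selected)
```

Filtering on the path-derived `date` before any file is opened is the effect the slide calls partition elimination.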

- 39. U-SQL Catalog: Naming, Discovery, Sharing, Securing. Naming • Default database and schema context: master.dbo • Quote identifiers with []: [my table] • Stores data in ADL Storage /catalog folder. Discovery • Visual Studio Server Explorer • Azure Data Lake Analytics Portal • SDKs and Azure PowerShell commands. Sharing • Within an Azure Data Lake Analytics account. Securing • Secured with AAD principals at catalog and database level

- 40. Views CREATE VIEW V AS EXTRACT… CREATE VIEW V AS SELECT … • Cannot contain user-defined objects (e.g. UDF or UDOs)! • Will be inlined Table-Valued Functions (TVFs) CREATE FUNCTION F (@arg string = "default") RETURNS @res [TABLE ( … )] AS BEGIN … @res = … END; • Provides parameterization • One or more results • Can contain multiple statements • Can contain user-code (needs assembly reference) • Will always be inlined • Infers schema or checks against specified return schema

- 41. CREATE PROCEDURE P (@arg string = "default") AS BEGIN …; OUTPUT @res TO …; INSERT INTO T …; END; • Provides parameterization • No result but writes into file or table • Can contain multiple statements • Can contain user-code (needs assembly reference) • Will always be inlined • Can contain DDL (but no CREATE, DROP FUNCTION/PROCEDURE)

- 42. CREATE TABLE T (col1 int , col2 string , col3 SQL.MAP<string,string> , INDEX idx CLUSTERED (col2 ASC) PARTITIONED BY (col1) DISTRIBUTED BY HASH (col2) ); • Structured Data, built-in Data types only (no UDTs) • Clustered Index (needs to be specified): row-oriented • Fine-grained distribution (needs to be specified): • HASH, DIRECT HASH, RANGE, ROUND ROBIN • Addressable Partitions (optional) CREATE TABLE T (INDEX idx CLUSTERED …) AS SELECT …; CREATE TABLE T (INDEX idx CLUSTERED …) AS EXTRACT…; CREATE TABLE T (INDEX idx CLUSTERED …) AS myTVF(DEFAULT); • Infer the schema from the query • Still requires index and distribution (does not support partitioning)

- 43. Benefits of Table clustering and distribution • Faster lookup of data provided by distribution and clustering when right distribution/cluster is chosen • Data distribution provides better localized scale out • Used for filters, joins and grouping Benefits of Table partitioning • Provides data life cycle management (“expire” old partitions) • Partial re-computation of data at partition level • Query predicates can provide partition elimination Do not use when… • No filters, joins and grouping • No reuse of the data for future queries

- 44. ALTER TABLE T ADD COLUMN eventName string; ALTER TABLE T DROP COLUMN col3; ALTER TABLE T ADD COLUMN result string, clientId string, payload int?; ALTER TABLE T DROP COLUMN clientId, result; • Meta-data only operation • Existing rows will get • Non-nullable types: C# data type default value (e.g., int will be 0) • Nullable types: null

- 45. Windowing Expression Window_Function_Call 'OVER' '(' [ Over_Partition_By_Clause ] [ Order_By_Clause ] [ Row_Clause ] ')'. Window_Function_Call := Aggregate_Function_Call | Analytic_Function_Call | Ranking_Function_Call. Windowing Aggregate Functions: ANY_VALUE, AVG, COUNT, MAX, MIN, SUM, STDEV, STDEVP, VAR, VARP. Analytics Functions: CUME_DIST, FIRST_VALUE, LAST_VALUE, PERCENTILE_CONT, PERCENTILE_DISC, PERCENT_RANK, LEAD, LAG. Ranking Functions: DENSE_RANK, NTILE, RANK, ROW_NUMBER
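
For readers new to window functions, the following sketch shows what three of the ranking functions compute over a partition ordered descending. It is plain Python rather than U-SQL, the sample rows are invented, and `rank_within_partitions` is a hypothetical helper that follows the usual SQL definitions of ROW_NUMBER, RANK and DENSE_RANK.

```python
from itertools import groupby
from operator import itemgetter

# Invented sample data.
rows = [
    {"dept": "a", "name": "x", "sales": 100},
    {"dept": "a", "name": "y", "sales": 100},
    {"dept": "a", "name": "z", "sales": 50},
    {"dept": "b", "name": "w", "sales": 70},
]


def rank_within_partitions(rows, partition_key, order_key):
    """Emulate ROW_NUMBER / RANK / DENSE_RANK
    OVER (PARTITION BY partition_key ORDER BY order_key DESC)."""
    result = []
    ordered = sorted(rows, key=itemgetter(partition_key))
    for _, group in groupby(ordered, key=itemgetter(partition_key)):
        members = sorted(group, key=itemgetter(order_key), reverse=True)
        rank = dense_rank = 0
        previous = object()  # sentinel that never equals a real value
        for row_number, row in enumerate(members, start=1):
            if row[order_key] != previous:
                rank = row_number   # RANK jumps to the current row number
                dense_rank += 1     # DENSE_RANK increases by exactly one
                previous = row[order_key]
            result.append({**row, "row_number": row_number,
                           "rank": rank, "dense_rank": dense_rank})
    return result


ranked = {r["name"]: (r["row_number"], r["rank"], r["dense_rank"])
          for r in rank_within_partitions(rows, "dept", "sales")}
print(ranked)
```

Ties share a RANK and leave a gap after them, DENSE_RANK leaves no gap, and ROW_NUMBER is always unique within the partition.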

- 46. • Automatic "in-lining" optimized out-of- the-box • Per job parallelization visibility into execution • Heatmap to identify bottlenecks

- 48. Visualize and replay progress of job • Fine-tune query performance • Visualize physical plan of U-SQL query • Browse metadata catalog • Author U-SQL scripts (with C# code) • Create metadata objects • Submit and cancel U-SQL Jobs • Debug U-SQL and C# code

- 49. Plug-in

- 50. Visual Studio fully supports authoring U-SQL scripts. While editing, it provides: • IntelliSense • Syntax color coding • Syntax checking • … • Contextual Menu

- 51. Custom Processor: C# code to extend U-SQL can be authored and used directly in U-SQL Studio, without first having to create and register an external assembly.

- 52. Jobs can be submitted directly from Visual Studio in two ways. You have to be logged into Azure and have to specify the target Azure Data Lake account.

- 53. Each job is broken into ‘n’ vertices; each vertex is some work that needs to be done. Vertices are organized into stages: vertices in each stage do the same work on the same data, and a vertex in one stage may depend on a vertex in an earlier stage. Stages themselves are organized into an acyclic graph. (Diagram: input flows through 6 stages and 8 vertices to the outputs.)
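
The stage graph described on this slide can be sketched with Python's standard `graphlib` module. Everything named below is an assumption for illustration: the stage names, their dependencies, and the vertex counts are invented to match the slide's totals of 6 stages and 8 vertices, and the real ADL Analytics scheduler is not described in the deck.

```python
from graphlib import TopologicalSorter

# Hypothetical job: stage -> set of stages it depends on (must be acyclic).
stage_dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join": {"extract_orders", "extract_customers"},
    "aggregate": {"join"},
    "sort": {"aggregate"},
    "output": {"sort"},
}

# Hypothetical number of vertices (parallel units of work) per stage.
vertices_per_stage = {
    "extract_orders": 2,
    "extract_customers": 2,
    "join": 1,
    "aggregate": 1,
    "sort": 1,
    "output": 1,
}

sorter = TopologicalSorter(stage_dependencies)
sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle

waves = []
while sorter.is_active():
    # Every stage returned here has all of its dependencies finished,
    # so the stages in one wave could run at the same time.
    ready = sorted(sorter.get_ready())
    waves.append(ready)
    sorter.done(*ready)

total_vertices = sum(vertices_per_stage.values())
print(waves, total_vertices)
```

The two extract stages have no dependencies and form the first wave; each later stage waits for the stage before it, which is why the graph must be acyclic.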

- 54. Job execution graph: After a job is submitted, the progress of the execution of the job as it goes through the different stages is shown and updated continuously. Important stats about the job are also displayed and updated continuously.

- 55. Diagnostics information is shown to help with debugging and performance issues

- 56. ADL Analytics creates and stores a set of metadata objects in a catalog maintained by a metadata service Tables and TVFs are created by DDL statements (CREATE TABLE …) Metadata objects can be created directly through the Server Explorer Azure Data Lake Analytics account Databases – Tables – Table valued functions – Jobs – Schemas Linked storage

- 57. The metadata catalog can be browsed with the Visual Studio Server Explorer Server Explorer lets you: 1. Create new tables, schemas and databases 2. Register assemblies

- 58. What it is: Microsoft’s implementation of Apache Hadoop (as a service) that uses Blobs for persistent storage. When to use it: • When you need to process large scale data (PB+) • When you want to use Hadoop or Spark as a service • When you want to compute data and retire the servers, but retain the results • When your team is familiar with the Hadoop Zoo

- 59. Using the Hadoop Ecosystem to process and query data

- 60. HDInsight Tools for Visual Studio

- 66. What it is: A multi-platform environment and engine to create and deploy Machine Learning models and APIs. When to use it: • When you need to create predictive analytics • When you need to share Data Science experiments across teams • When you need to create callable APIs for ML functions • When you also have R and Python experience on your Data Science team

- 67. Development Environment • Creating Experiments • Sharing a Workspace Deployment Environment • Publishing the Model • Using the API • Consuming in various tools

- 68. Create Workspace → Build and Model (Get/Prepare Data, Build/Edit Experiment, Create/Update Model, Evaluate Model Results) → Deploy Model → Consume Model

- 69. Import Data Preprocess Algorithm Train Model Split Data Score Model
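
The experiment steps named on this slide can be walked through in a few lines of plain Python. This is a conceptual sketch only and does not use Azure Machine Learning: the data set is invented, the "algorithm" is a one-variable least-squares line, and the function names merely echo the slide's labels.

```python
def preprocess(rows):
    """Drop rows with a missing feature and coerce features to float."""
    return [(float(x), y) for x, y in rows if x is not None]


def split_data(rows, train_count):
    """Deterministic split: the first train_count rows train, the rest test."""
    return rows[:train_count], rows[train_count:]


def train_model(rows):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    variance = sum((x - mean_x) ** 2 for x, _ in rows)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def score_model(model, rows):
    """Predict the label for each row's feature."""
    slope, intercept = model
    return [slope * x + intercept for x, _ in rows]


# Import Data (invented sample; one row has a missing feature).
raw = [(1, 3.0), (2, 5.0), (3, 7.0), (None, 1.0), (4, 9.0), (5, 11.0)]

clean = preprocess(raw)                       # Preprocess
train, test = split_data(clean, train_count=3)  # Split Data
model = train_model(train)                    # Algorithm + Train Model
predictions = score_model(model, test)        # Score Model
print(model, predictions)
```

Scoring only the held-out rows keeps the evaluation separate from the data the model was fitted on, which is the reason the split step exists.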

- 72. What it is: Interactive report and visualization creation for computing and mobile platforms. When to use it: • When you need to create and view interactive reports that combine multiple datasets • When you need to embed reporting into an application • When you need customizable visualizations • When you need to create shared datasets, reports, and dashboards that you publish to your team

- 75. Business apps Custom apps Sensors and devices Events Events Transformed Data Raw Events Azure Event Hubs Kafka

- 76. Business apps Custom apps Sensors and devices Bulk Load Azure Data Factory

- 77. Data Transformation Data Collection Presentation and action Queuing System Data Storage 8 Azure Search Data analytics (Excel, Power BI, Looker, Tableau) Web/thick client dashboards Devices to take action Event hub Event & data producers Applications Web and social Devices Live Dashboards DocumentDB MongoDB SQL Azure ADW Hbase Blob StorageKafka/RabbitMQ/ ActiveMQ Event hubs Azure ML Storm / Stream Analytics Hive / U-SQL Data Factory Sensors Pig Cloud gateways (web APIs) Field gateways

Editor's Notes

- #6: What you can do with it: https://azure.microsoft.com/en-us/overview/what-is-azure/ Platform: http://microsoftazure.com Storage: https://azure.microsoft.com/en-us/documentation/services/storage/ Networking: https://azure.microsoft.com/en-us/documentation/services/virtual-network/ Security: https://azure.microsoft.com/en-us/documentation/services/active-directory/ Services: https://azure.microsoft.com/en-us/documentation/articles/best-practices-scalability-checklist/ Virtual Machines: https://azure.microsoft.com/en-us/documentation/services/virtual-machines/windows/ and https://azure.microsoft.com/en-us/documentation/services/virtual-machines/linux/ PaaS: https://azure.microsoft.com/en-us/documentation/services/app-service/

- #7: Azure Data Factory: http://azure.microsoft.com/en-us/services/data-factory/

- #8: Pricing: https://azure.microsoft.com/en-us/pricing/details/data-factory/

- #9: Learning Path: https://azure.microsoft.com/en-us/documentation/articles/data-factory-introduction/ Quick Example: http://azure.microsoft.com/blog/2015/04/24/azure-data-factory-update-simplified-sample-deployment/

- #10: Video of this process: https://azure.microsoft.com/en-us/documentation/videos/azure-data-factory-102-analyzing-complex-churn-models-with-azure-data-factory/

- #11: More options: Prepare System: https://azure.microsoft.com/en-us/documentation/articles/data-factory-build-your-first-pipeline-using-editor/ - Follow steps Another Lab: https://azure.microsoft.com/en-us/documentation/articles/data-factory-samples/

- #12: https://azure.microsoft.com/en-us/documentation/articles/data-factory-build-your-first-pipeline-using-powershell/

- #13: Overview: https://azure.microsoft.com/en-us/documentation/articles/data-factory-build-your-first-pipeline/ Using the Portal: https://azure.microsoft.com/en-us/documentation/articles/data-factory-build-your-first-pipeline-using-editor/

- #14: Azure SQL Data Warehouse: http://azure.microsoft.com/en-us/services/sql-data-warehouse/

- #20: Azure Data Lake: http://azure.microsoft.com/en-us/campaigns/data-lake/

- #29: All data Unstructured, Semi structured, Structured Domain-specific user defined types using C# Queries over Data Lake and Azure Blobs Federated Queries over Operational and DW SQL stores removing the complexity of ETL Productive from day one Effortless scale and performance without need to manually tune/configure Best developer experience throughout development lifecycle for both novices and experts Leverage your existing skills with SQL and .NET Easy and powerful data preparation Easy to use built-in connectors for common data formats Simple and rich extensibility model for adding customer – specific data transformation – both existing and new No limits scale Scales on demand with no change to code Automatically parallelizes SQL and custom code Designed to process petabytes of data Enterprise grade Managing, securing, sharing, and discovery of familiar data and code objects (tables, functions etc.) Role based authorization of Catalogs and storage accounts using AAD security Auditing of catalog objects (databases, tables etc.)

- #31: ADLA allows you to compute on data anywhere and join data from multiple cloud sources.

- #34: Use for language experts

- #59: Azure HDInsight: http://azure.microsoft.com/en-us/services/hdinsight/

- #60: Primary site: https://azure.microsoft.com/en-us/services/hdinsight/ Quick overview: https://azure.microsoft.com/en-us/documentation/articles/hdinsight-hadoop-introduction/ 4-week online course through the edX platform: https://www.edx.org/course/processing-big-data-azure-hdinsight-microsoft-dat202-1x 11 minute introductory video: https://channel9.msdn.com/Series/Getting-started-with-Windows-Azure-HDInsight-Service/Introduction-To-Windows-Azure-HDInsight-Service Microsoft Virtual Academy Training (4 hours) - https://mva.microsoft.com/en-US/training-courses/big-data-analytics-with-hdinsight-hadoop-on-azure-10551?l=UJ7MAv97_5804984382 Learning path for HDInsight: https://azure.microsoft.com/en-us/documentation/learning-paths/hdinsight-self-guided-hadoop-training/ Azure Feature Pack for SQL Server 2016, i.e., SSIS (SQL Server Integration Services): https://msdn.microsoft.com/en-us/library/mt146770(v=sql.130).aspx

- #66: Azure Portal: http://azure.portal.com Provisioning Clusters: https://azure.microsoft.com/en-us/documentation/articles/hdinsight-provision-clusters/ Different clusters have different node types, number of nodes, and node sizes.

- #67: Azure Machine Learning: http://azure.microsoft.com/en-us/services/machine-learning/

- #68: Guided tutorials: https://azure.microsoft.com/en-us/documentation/services/machine-learning/ Microsoft Azure Virtual Academy course: https://mva.microsoft.com/en-US/training-courses/microsoft-azure-machine-learning-jump-start-8425?l=ehQZFoKz_7904984382

- #69: Beginning Series: https://azure.microsoft.com/en-us/documentation/articles/machine-learning-data-science-for-beginners-the-5-questions-data-science-answers/

- #70: Designing an experiment in the Studio: https://azure.microsoft.com/en-us/documentation/articles/machine-learning-what-is-ml-studio/

- #73: Power BI: https://powerbi.microsoft.com/

- #85: Customize yourself or with featured partners
