Mining public datasets using open source tools: Zeppelin, Spark and Juju


There are plenty of public datasets available, and their number keeps growing. This talk showcases a few of the most recent and most useful tools in the big data ecosystem: Apache Zeppelin (incubating), Apache Spark and Juju.
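The typical first step in mining a public dataset from a notebook is a group-and-aggregate query. The sketch below shows that step in plain Python so it runs without a cluster. The CSV sample and its column names (`country`, `year`, `value`) are hypothetical. In a Zeppelin paragraph backed by Spark, the equivalent is roughly `df.groupBy("country").count()` on a DataFrame.

```python
import csv
import io
from collections import Counter

# Hypothetical sample standing in for a downloaded public CSV dataset.
SAMPLE_CSV = """country,year,value
DE,2014,3
FR,2014,5
DE,2015,7
US,2015,2
DE,2015,1
"""


def count_by_column(csv_text, column):
    """Count rows per distinct value of `column` (like GROUP BY ... COUNT(*))."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter(row[column] for row in reader)


def sum_by_column(csv_text, key_column, value_column):
    """Sum an integer column per key (like GROUP BY key, SUM(value))."""
    totals = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        key = row[key_column]
        totals[key] = totals.get(key, 0) + int(row[value_column])
    return totals


if __name__ == "__main__":
    print(count_by_column(SAMPLE_CSV, "country").most_common())
    print(sum_by_column(SAMPLE_CSV, "year", "value"))
```

On real data, Spark's value is that it distributes this same aggregation over files too large for a single machine. The logic of the query stays the same.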




Recommended

Scaling Traffic from 0 to 139 Million Unique Visitors

By Yelp Engineering. This document summarizes the traffic history and infrastructure changes at Yelp from 2005 to the present. It outlines the key milestones and technology changes as Yelp grew from handling around 200k searches per day with a single database in 2005-2007 to serving traffic across 29 countries in 2014. By then it ran a distributed, scalable infrastructure built on technologies like Elasticsearch, Kafka, and Pyleus for real-time processing.

Archiving, E-Discovery, and Supervision with Spark and Hadoop with Jordan Volz

By Databricks. This document discusses using Hadoop for archiving, e-discovery, and supervision. It outlines the key components of each task and highlights the shortcomings of traditional approaches. Hadoop offers strengths like speed, ease of use, and security. An architectural overview shows how Hadoop can be used for ingestion, processing, analysis, and machine learning. Examples demonstrate surveillance use cases. Some obstacles remain, but partners can help with areas like user interfaces and compliance storage.

Big data pipeline with scala by Rohit Rai, Tuplejump - presented at Pune Scal...

By Thoughtworks. The document discusses Tuplejump, a data engineering startup whose vision is to simplify data engineering. It summarizes Tuplejump's big data pipeline platform, which collects, transforms, predicts, stores, explores and visualizes data using tools like Hydra, Spark, Cassandra, MinerBot, Shark, UberCube and Pissaro. It advocates Scala as the primary language because of its object-oriented and functional capabilities. It also covers the advantages of Tuplejump's platform and how it uses tools like Akka, Spark, Play, SBT, ScalaTest, Shapeless and Scalaz.

Future of data visualization

By hadoopsphere. Apache Zeppelin is an emerging open-source tool for interactive data analytics and visualization. It provides a web-based notebook interface where users write and execute code in languages like SQL and Scala. The tool offers built-in visualization, pivot tables, dynamic forms, and collaboration features. Zeppelin works with backends like Apache Spark and uses interpreters to connect to different data processing systems. The author predicts that it will shape big data visualization in the coming years.

Stream All Things—Patterns of Modern Data Integration with Gwen Shapira

By Databricks. This document discusses patterns for modern data integration using streaming data. It traces an evolution from data warehouses to data lakes to streaming data. It then describes four key patterns: 1) stream all things (data) in one place, 2) keep schemas compatible and process data on, 3) enable ridiculously parallel single message transformations, and 4) perform streaming data enrichment to add context to events. Examples show how Apache Kafka and Kafka Connect implement these patterns for a large hotel chain that integrates various data sources and runs real-time analytics on customer events.
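Patterns 3 and 4 can be illustrated without Kafka at all. A single message transformation depends only on the one event it receives, which is why it parallelizes trivially. Enrichment joins each event against reference data. This minimal sketch uses Python generators in place of Kafka Connect transforms and is not the Kafka Connect API. The event fields and the `customers` lookup table are hypothetical.

```python
from typing import Dict, Iterable, Iterator


def mask_email(event: Dict) -> Dict:
    """Single message transformation: the output depends only on this event."""
    out = dict(event)
    if "email" in out:
        user, _, domain = out["email"].partition("@")
        out["email"] = user[:1] + "***@" + domain
    return out


def enrich(events: Iterable[Dict], customers: Dict[str, Dict]) -> Iterator[Dict]:
    """Streaming enrichment: attach context from a lookup table to each event."""
    for event in events:
        profile = customers.get(event["customer_id"], {})
        yield {**event, "loyalty_tier": profile.get("tier", "unknown")}


if __name__ == "__main__":
    customers = {"c1": {"tier": "gold"}}  # hypothetical reference data
    stream = [
        {"customer_id": "c1", "email": "alice@example.com", "action": "check_in"},
        {"customer_id": "c2", "email": "bob@example.com", "action": "booking"},
    ]
    # map() applies the per-message transform lazily; enrich() consumes it.
    for e in enrich(map(mask_email, stream), customers):
        print(e)
```

`mask_email` needs no shared state, so copies of it could run in any number of workers. `enrich` needs the lookup table, so in practice that table has to be replicated or partitioned alongside the stream.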

Large Scale Lakehouse Implementation Using Structured Streaming

By Databricks. Business leads, executives, analysts, and data scientists rely on up-to-date information to make business decisions, adjust to the market, meet the needs of their customers, or run effective supply chain operations.

Come hear how Asurion used Delta, Structured Streaming, AutoLoader and SQL Analytics to improve production data latency from day-minus-one to near real time. Asurion's technical team will share battle-tested tips and tricks that you only learn at a certain scale. Asurion executes 4000+ streaming jobs and hosts over 4000 tables in its production data lake on AWS.

Scala eXchange: Building robust data pipelines in Scala

By Alexander Dean. Over the past couple of years, Scala has become a go-to language for building data processing applications, as evidenced by the emerging ecosystem of frameworks and tools including LinkedIn's Kafka, Twitter's Scalding and our own Snowplow project (https://github.com/snowplow/snowplow).

In this talk, Alex will draw on his experiences at Snowplow to explore how to build rock-solid data pipelines in Scala, highlighting a range of techniques including:

* Translating the Unix stdin/out/err pattern to stream processing

* "Railway oriented" programming using the Scalaz Validation

* Validating data structures with JSON Schema

* Visualizing event stream processing errors in ElasticSearch

The talk draws on two and a half years of Alex's work with event streams in Scala at Snowplow, and on his recent work writing Unified Log Processing, a book for Manning.
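The first two techniques in the list can be combined in a short sketch. Railway-oriented validation accumulates every error instead of stopping at the first one. Routing then sends valid events to an "out" stream and invalid ones, with their reasons, to an "err" stream, following the Unix stdout/stderr split. The sketch below is a Python analogue of the idea and does not use the Scalaz `Validation` API. `Success`, `Failure`, the checks, and the event fields are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple, Union


@dataclass(frozen=True)
class Success:
    value: Any


@dataclass(frozen=True)
class Failure:
    errors: List[str]


Result = Union[Success, Failure]
Check = Callable[[Dict], Result]


def require_field(name: str) -> Check:
    def check(event: Dict) -> Result:
        if event.get(name) not in (None, ""):
            return Success(event[name])
        return Failure([f"missing field: {name}"])
    return check


def positive_int(name: str) -> Check:
    def check(event: Dict) -> Result:
        v = event.get(name)
        if isinstance(v, int) and v > 0:
            return Success(v)
        return Failure([f"{name} must be a positive int, got {v!r}"])
    return check


def validate_all(event: Dict, checks: List[Check]) -> Result:
    """Run every check and accumulate all errors (no fail-fast), in the
    spirit of an applicative Validation; succeed only if all checks pass."""
    errors: List[str] = []
    values: List[Any] = []
    for check in checks:
        result = check(event)
        if isinstance(result, Failure):
            errors.extend(result.errors)
        else:
            values.append(result.value)
    return Failure(errors) if errors else Success(values)


def route(events: List[Dict], checks: List[Check]) -> Tuple[List[Dict], List[Dict]]:
    """Split a stream into 'out' (valid) and 'err' (invalid plus reasons)."""
    out, err = [], []
    for event in events:
        result = validate_all(event, checks)
        if isinstance(result, Success):
            out.append(event)
        else:
            err.append({"event": event, "errors": result.errors})
    return out, err
```

Collecting every error, rather than only the first, gives the error stream enough detail to be indexed and visualized later. That is the motivation behind the last item in the list.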

Spark Summit EU talk by Oscar Castaneda

By Spark Summit. This document discusses running an Elasticsearch server inside a Spark cluster for development purposes. It presents the problem: running Elasticsearch outside of Spark makes development cumbersome. The solution is to start an Elasticsearch server inside the Spark cluster that Spark jobs can access natively. Data can then be written to and read from this local Elasticsearch server. Snapshots of the Elasticsearch indices can also be stored in S3 for backup and restoration. A demo application indexes tweets in real time to the local Elasticsearch server running inside the Spark cluster.

Event & Data Mesh as a Service: Industrializing Microservices in the Enterpri...

By HostedbyConfluent. Kafka is widely positioned as the proverbial "central nervous system" of the enterprise. In this session, we explore how this central nervous system can be used to build a mesh topology and a unified catalog of enterprise-wide events, enabling development teams to build event-driven architectures faster and better.

The session also borrows idioms from API management, service meshes, workflow management and service orchestration, and compares how these approaches can be harmonized with Kafka.

We will also touch on how this relates to Domain-Driven Design, CQRS and other microservice patterns.

Some potential takeaways for the discerning audience:

1. Opportunities in a platform approach to Event Driven Architecture in the enterprise

2. Adopting a product mindset around Data & Event Streams

3. Seeking harmony with allied enterprise applications

Ignite Your Big Data With a Spark!

By Progress. Progress® DataDirect® Spark SQL ODBC and JDBC drivers deliver the fastest, high-performance connectivity so that your existing BI and analytics applications can access big data in Apache Spark.

Lessons Learned - Monitoring the Data Pipeline at Hulu

By DataWorks Summit. This document summarizes lessons learned about monitoring a data pipeline at Hulu. It explains how the initial monitoring approach fell short, both for users and for detecting problems. The proposed new approach uses a graph data structure for contextual troubleshooting, connecting each issue to its impact on business units and user needs. Troubleshooting then becomes a matter of querying the relationships between components and resources. This approach would also make small independent services easier to create and maintain.
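The core query behind graph-based troubleshooting can be sketched like this: when a component fails, walk its dependents to find everything downstream, including the business units affected. The example below is a minimal breadth-first search over a hypothetical dependency graph. The node names and the `BUSINESS_UNITS` set are invented for illustration and do not describe Hulu's actual pipeline or tooling.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical graph: an entry A -> [B, ...] means "B depends on A",
# so a failure in A can impact B.
DEPENDENTS: Dict[str, List[str]] = {
    "kafka_ingest": ["hourly_etl"],
    "hdfs": ["hourly_etl", "daily_report_job"],
    "hourly_etl": ["ads_dashboard", "daily_report_job"],
    "daily_report_job": ["finance_team"],
    "ads_dashboard": ["ad_sales_team"],
}
BUSINESS_UNITS = {"finance_team", "ad_sales_team"}


def impacted_by(failed: str, graph: Dict[str, List[str]]) -> Set[str]:
    """Breadth-first search over dependents: everything downstream of `failed`."""
    seen: Set[str] = set()
    queue = deque([failed])
    while queue:
        node = queue.popleft()
        for dep in graph.get(node, []):
            if dep not in seen:
                seen.add(dep)
                queue.append(dep)
    return seen


def business_impact(failed: str) -> Set[str]:
    """Only the business units that a failure in `failed` reaches."""
    return impacted_by(failed, DEPENDENTS) & BUSINESS_UNITS


if __name__ == "__main__":
    print(sorted(business_impact("hdfs")))
```

The `seen` set keeps the search linear in the size of the graph even when dependencies converge. In this example, `daily_report_job` is reachable from `hdfs` both directly and through `hourly_etl`.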

Taboola Road To Scale With Apache Spark

By tsliwowicz. Taboola's data processing architecture has evolved from writing directly to databases to using Apache Spark for scalable real-time processing. Spark lets Taboola process terabytes of data daily across multiple data centers for real-time recommendations, analytics, and algorithm calibration. Key parts of Taboola's architecture are Cassandra for event storage, Spark for distributed computing, Mesos for cluster management, and Zookeeper for coordination across a large Spark cluster.

Lambda architecture: from zero to One

By Serg Masyutin. The story of how one project's architecture evolved from zero to a Lambda Architecture. It also covers how we scaled the cluster once the architecture was in place.

It includes performance charts after every architecture change.

10 Things About Spark

By Roger Brinkley. A presentation prepared for Data Stack as part of their interview process on July 20.

This 15 presentation in Ignite format features 10 things you might not know about the Spark V1.0 release.

Big Data Platform at Pinterest

By Qubole. This document discusses Pinterest's data architecture and its use of Pinball for workflow management. Pinterest processes 3 petabytes of data daily from its 60 billion pins and 1 billion boards on a 2000-node Hadoop cluster. It uses Kafka, Secor and Singer to ingest event data. Pinball manages workflows at Pinterest's scale of hundreds of workflows and thousands of jobs, with 500+ jobs in some workflows. Pinball provides simple abstractions, extensibility, reliability, debuggability and horizontal scalability for workflow execution.

Data science lifecycle with Apache Zeppelin

By DataWorks Summit/Hadoop Summit. This document discusses Apache Zeppelin, an open-source web-based notebook for interactive data analytics. It gives an overview of Zeppelin's history and architecture, including its pluggable interpreters and notebook storage. The document also outlines Zeppelin's roadmap for improving enterprise support through features like multi-tenancy, impersonation, job management and frontend performance.

Cloud-Based Event Stream Processing Architectures and Patterns with Apache Ka...

By HostedbyConfluent. The Apache Kafka ecosystem is rich with components for designing and implementing secure, efficient, fault-tolerant and scalable event stream processing (ESP) systems. Using real-world examples, this talk covers:

- why Apache Kafka is an excellent choice for cloud-native and hybrid architectures;
- how to design, implement and maintain ESP systems;
- best practices and patterns for migrating to the cloud or to hybrid configurations;
- when to choose PaaS or IaaS;
- what options exist for running Kafka in cloud or hybrid environments;
- what you need to build and maintain successful ESP systems that are secure, performant, reliable, highly available and scalable.

Azuresatpn19 - An Introduction To Azure Data Factory

By Riccardo Perico. Slide deck of my session at Azure Saturday 2019 in Pordenone.

We spoke about Azure Data Factory v2.

Real-Time Machine Learning with Redis, Apache Spark, Tensor Flow, and more wi...

By Databricks. Predictive intelligence from machine learning could change almost every part of our day-to-day lives, including education, entertainment, travel, healthcare, business and leisure. Modern ML frameworks are batch by nature and cannot pivot on the fly to changing user data or situations. Many simple ML applications, such as those that enhance the user experience, can benefit from real-time, robust predictive models that adapt on the fly.

Join this session to learn how Redis modules can substantially accelerate and radically simplify common machine learning practices, such as running a trained model in production. These modules natively store and execute common models generated by Spark ML and TensorFlow algorithms. We will also discuss how to implement simple, real-time feed-forward neural networks with Neural Redis, and which scenarios benefit from such efficient, accelerated artificial intelligence.

Real-life implementations of these new techniques at a large consumer credit company for fraud analytics, at an online e-commerce provider for user recommendations and at a large media company for targeting content will also be discussed.

Beyond Relational

By Lynn Langit. The document discusses building data pipelines in the cloud. It covers serverless data pipeline patterns using services like BigQuery, Cloud Storage, Cloud Dataflow, and Cloud Pub/Sub, and compares Cloud Dataflow with Cloud Dataproc for ETL workflows. It examines key questions about ingestion and ETL in terms of the volume, variety, velocity and veracity of data. It also compares cloud vendor offerings for streaming and ETL.

SparkOscope: Enabling Apache Spark Optimization through Cross Stack Monitorin...

By Databricks. For the past year, the IBM Research Ireland team has been using Apache Spark to analyze large volumes of sensor data. These applications run daily, so the team needed to understand Spark's resource utilization. Manually consuming and inspecting the CSV files of metrics generated at the Spark worker nodes proved cumbersome.

An external monitoring system like Ganglia would automate this process, but it still would not let them derive temporal associations between system-level metrics (e.g. CPU utilization) and the job-level metrics reported by Spark (e.g. job or stage ID). For instance, they could not trace a peak in HDFS reads or CPU usage back to the code in their Spark application that caused the bottleneck.

To overcome these limitations, they developed SparkOScope. To take advantage of the job-level information already available and to minimize source-code pollution, they use the existing Spark Web UI to monitor and visualize a Spark application's job-level metrics (e.g. completion time). More importantly, they extend the Web UI with a palette of system-level metrics from the server/VM/container on which each of the Spark job's executors ran. With SparkOScope, you can open any completed application and identify application-logic bottlenecks in the plots. These plots show in-depth time series for every relevant system-level metric of the Spark executors and make it easy to associate them with the stages, jobs and even source code lines that cause the bottleneck.
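The "temporal association" described above is essentially a join on time: each system-level sample is attributed to the stage whose time window contains it. The following is a minimal sketch of that join. It is not SparkOScope's implementation. The `(stage_id, start, end)` and `(timestamp, cpu_percent)` tuple layouts are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical inputs: stage time windows (as Spark reports them per stage)
# and executor CPU samples (as a system-level metrics sink might record them).
Stage = Tuple[int, float, float]  # (stage_id, start_ts, end_ts)
Sample = Tuple[float, float]      # (timestamp, cpu_percent)


def attribute_samples(stages: List[Stage], samples: List[Sample]) -> Dict[int, List[float]]:
    """Assign each sample to every stage whose [start, end) window contains it.
    Samples outside all windows are dropped."""
    by_stage: Dict[int, List[float]] = {sid: [] for sid, _, _ in stages}
    for ts, value in samples:
        for sid, start, end in stages:
            if start <= ts < end:
                by_stage[sid].append(value)
    return by_stage


def stage_with_peak(stages: List[Stage], samples: List[Sample]) -> int:
    """Return the first stage running when the highest sample was taken."""
    ts, _ = max(samples, key=lambda s: s[1])
    for sid, start, end in stages:
        if start <= ts < end:
            return sid
    raise ValueError("peak sample falls outside every stage window")


if __name__ == "__main__":
    stages = [(0, 0.0, 10.0), (1, 10.0, 25.0)]
    samples = [(1.0, 20.0), (5.0, 35.0), (12.0, 95.0), (20.0, 60.0), (30.0, 5.0)]
    print(attribute_samples(stages, samples))
    print("peak CPU during stage", stage_with_peak(stages, samples))
```

The nested loop is quadratic, which is fine for a sketch. For long-running applications, sorting both lists by time and sweeping through them once would scale better.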

They have made SparkOScope available as a standalone module and have also extended the available sinks (MongoDB, MySQL).

Teaching Apache Spark Clusters to Manage Their Workers Elastically: Spark Sum...

By Spark Summit. DevOps engineers have put a great deal of creativity and energy into inventing tools that automate infrastructure management, so that capable, functional applications can be deployed. For data-driven applications running on Apache Spark, the details of instantiating and managing the backing Spark cluster can distract from the application logic. In the spirit of DevOps, automating Spark cluster management tasks lets engineers focus on the application code that provides value to end users.

Using OpenShift Origin as a laboratory, we implemented a platform where Apache Spark applications create their own clusters and then dynamically manage their own scale via host-platform APIs. This makes it possible to launch a fully elastic Spark application with little more than the click of a button.

We will present a live demo of turn-key deployment for elastic Apache Spark applications, and share what we’ve learned about developing Spark applications that manage their own resources dynamically with platform APIs.

The audience for this talk will be anyone looking for ways to streamline their Apache Spark cluster management, reduce the workload for Spark application deployment, or create self-scaling elastic applications. Attendees can expect to learn about leveraging APIs in the Kubernetes ecosystem that enable application deployments to manipulate their own scale elastically.

MLflow: Infrastructure for a Complete Machine Learning Life Cycle

By Databricks. ML development brings many complexities beyond the traditional software development lifecycle. Unlike traditional software developers, ML developers want to try multiple algorithms, tools and parameters to get the best results, and they need to track this information to reproduce their work. Developers also need many distinct systems to productionize models. To address these problems, many companies are building custom "ML platforms" that automate this lifecycle. Even these platforms, however, support only a few algorithms and are tied to each company's internal infrastructure.

In this talk, we will present MLflow, a new open source project from Databricks that aims to design an open ML platform where organizations can use any ML library and development tool of their choice to reliably build and share ML applications. MLflow introduces simple abstractions to package reproducible projects, track results, and encapsulate models that can be used with many existing tools, accelerating the ML lifecycle for organizations of any size.

Data streaming

By Alberto Paro. The document profiles Alberto Paro and his background: a Master's degree in Computer Science Engineering from Politecnico di Milano, a role as Big Data Practice Leader at NTTDATA Italia, four books on Elasticsearch, and expertise in technologies like Apache Spark, Playframework, Apache Kafka, and MongoDB. He is also an evangelist for the Scala and Scala.JS languages.

The document then provides an overview of data streaming architectures, popular message brokers like Apache Kafka, RabbitMQ, and Apache Pulsar, streaming frameworks including Apache Spark, Apache Flink, and Apache NiFi, and streaming libraries such as Reactive Streams.

Streaming Data in the Cloud with Confluent and MongoDB Atlas | Robert Walters...

By HostedbyConfluent. This document discusses streaming data between Confluent Cloud and MongoDB Atlas. It gives an overview of MongoDB Atlas and its fully managed cloud database capabilities. It then demonstrates how to stream data from a Python generator application to MongoDB Atlas using Confluent Cloud and its connectors. The presentation concludes with a reference architecture for connecting Confluent Platform to MongoDB.

The Key to Machine Learning is Prepping the Right Data with Jean Georges Perrin

By Databricks. The document discusses preparing data for machine learning by applying data quality techniques in Spark. It introduces the concepts of data quality and machine learning data formats. The main part shows how User Defined Functions (UDFs) in Spark SQL can automate the transformation of raw data into the formats that machine learning requires, making the process more efficient and reproducible.
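A data-quality UDF is at heart a pure, row-level function from a raw value to a clean one. That purity is what makes it reproducible and easy to test outside Spark. The sketch below is a plain Python function of that kind. The missing-value markers and the assumption that "," is a thousands separator and "." the decimal point are illustrative choices, not rules from the talk. In PySpark, a function like this could be wrapped with `pyspark.sql.functions.udf` and a `DoubleType()` return type.

```python
from typing import Optional

# Illustrative set of strings treated as "no value"; adjust per dataset.
MISSING_MARKERS = {"", "na", "n/a", "nan", "null", "none", "-"}


def clean_numeric(raw: Optional[str]) -> Optional[float]:
    """Data-quality rule: turn a raw text cell into a float, or None if unusable.

    Strips whitespace, maps common missing-value markers to None and removes
    comma thousands separators (assumes '.' is the decimal point)."""
    if raw is None:
        return None
    text = raw.strip()
    if text.lower() in MISSING_MARKERS:
        return None
    try:
        return float(text.replace(",", ""))
    except ValueError:
        # Unparseable values become missing rather than failing the whole job.
        return None


if __name__ == "__main__":
    for cell in [" 1,234.5 ", "N/A", "42", "abc", None]:
        print(repr(cell), "->", clean_numeric(cell))
```

Returning `None` instead of raising an exception keeps one bad cell from failing a distributed job. The trade-off is that rejected values should be counted or logged somewhere so data-quality problems stay visible.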

An overview of Amazon Athena

By Julien SIMON. Amazon Athena is a serverless query service for running interactive SQL queries on data stored in Amazon S3 without first loading the data into a database. It uses Presto to run ANSI SQL queries on data in formats like CSV, JSON, and columnar formats like Parquet and ORC. On a dataset of 1 billion rows of sales data stored in S3, Athena queries performed comparably to a basic Redshift cluster, and much faster than loading the data into Redshift and then querying it. This makes Athena a cost-effective choice for ad-hoc queries on data in S3.

Kick-Start with SMACK Stack

By Knoldus Inc. SMACK is a combination of Spark, Mesos, Akka, Cassandra and Kafka. It is used to build pipelined data architectures for real-time data analysis, with each technology placed where it belongs in an efficient data pipeline.

963

By Annu Ahmed. This document gives an overview of real-time big data processing using Apache Kafka, Spark Streaming, Scala, and Elastic Search. It defines key concepts such as big data and real-time big data, and describes how Hadoop, Apache Kafka, Spark Streaming, Scala, and Elastic Search can be used together for real-time big data processing. It also gives details about each technology and how it fits into an overall real-time big data architecture.

2015 Data Science Summit @ dato Review

By Hang Li. This document summarizes a data science summit the author attended. It briefly describes the author's travel to and from the event. The main body summarizes the sessions and topics, including machine learning platforms and tools like Dato, IBM System ML, Apache Flink, PredictionIO, and DeepLearning4J. Sessions focused on scalable data processing, stream and batch processing, graph processing, and machine learning algorithms. The document links to several of the platforms and tools discussed.


Data streaming

Data streamingAlberto Paro - The document profiles Alberto Paro and his experience including a Master's Degree in Computer Science Engineering from Politecnico di Milano, experience as a Big Data Practise Leader at NTTDATA Italia, authoring 4 books on ElasticSearch, and expertise in technologies like Apache Spark, Playframework, Apache Kafka, and MongoDB. He is also an evangelist for the Scala and Scala.JS languages.

The document then provides an overview of data streaming architectures, popular message brokers like Apache Kafka, RabbitMQ, and Apache Pulsar, streaming frameworks including Apache Spark, Apache Flink, and Apache NiFi, and streaming libraries such as Reactive Streams.

Streaming Data in the Cloud with Confluent and MongoDB Atlas | Robert Walters...

Streaming Data in the Cloud with Confluent and MongoDB Atlas | Robert Walters...HostedbyConfluent This document discusses streaming data between Confluent Cloud and MongoDB Atlas. It provides an overview of MongoDB Atlas and its fully managed database capabilities in the cloud. It then demonstrates how to stream data from a Python generator application to MongoDB Atlas using Confluent Cloud and its connectors. The presentation concludes by providing a reference architecture for connecting Confluent Platform to MongoDB.

The Key to Machine Learning is Prepping the Right Data with Jean Georges Perrin

The Key to Machine Learning is Prepping the Right Data with Jean Georges Perrin Databricks The document discusses preparing data for machine learning by applying data quality techniques in Spark. It introduces concepts of data quality and machine learning data formats. The main part shows how to use User Defined Functions (UDFs) in Spark SQL to automate transforming raw data into the required formats for machine learning, making the process more efficient and reproducible.

An overview of Amazon Athena

An overview of Amazon AthenaJulien SIMON Amazon Athena is a serverless query service that allows users to run interactive SQL queries on data stored in Amazon S3 without having to load the data into a database. It uses Presto to allow ANSI SQL queries on data in formats like CSV, JSON, and columnar formats like Parquet and ORC. For a dataset of 1 billion rows of sales data stored in S3, Athena queries performed comparably to a basic Redshift cluster and much faster than loading and querying the data in Redshift, making Athena a cost-effective solution for ad-hoc queries on data in S3.

Kick-Start with SMACK Stack

Kick-Start with SMACK StackKnoldus Inc. SMACK is a combination of Spark, Mesos, Akka, Cassandra and Kafka. It is used for pipelined data architecture which is required for the real time data analysis and to integrate all the technology at the right place to efficient data pipeline.



Mining public datasets using opensource tools: Zeppelin, Spark and Juju

- 1. Mining Public Datasets Using Open Source Tools: At Scale & on a Budget, by Alexander

- 2. Alexander: Software Engineer at NFLabs, Seoul, South Korea; co-organizer of SeoulTech Society; committer and PPMC member of Apache Zeppelin (incubating). @seoul_engineer, github.com/bzz

- 3. IS DATA IMPORTANT? Content streaming services, taxi, housing, web search, Kuaidi, OpenHouse, IoT

- 4. CONTEXT: Even public data is huge and growing. More research, applications and data products could be built using that data. The quality and number of free tools available to the public for crunching that data are constantly improving. The cloud brings affordable computation at scale.

- 5. PUBLIC DATA = OPPORTUNITY

- 8. DATASETS • Internet archives • Web application logs (Wikipedia activity, GitHub activity) • Genomes • Ad clicks • Web server access logs • Network traffic • Scientific datasets • Images, songs (Million Song Dataset) • Reviews • Social media • Flight timetables • Taxis • n-gram language models

- 9. DATASETS • 300 GB compressed • A collaboration between Google and GitHub engineers • Events on PRs, repos, issues and comments, in JSON http://githubarchive.org
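The GitHub Archive dumps are gzipped, newline-delimited JSON with one event per line. The minimal sketch below counts events by type using only the Python standard library. It assumes each event carries a top-level `type` field (such as `PushEvent`); the three sample records are made up for illustration.

```python
import gzip
import io
import json
from collections import Counter


def count_event_types(lines):
    """Count events per "type" field in newline-delimited JSON.

    Assumes one JSON object per line with a top-level "type" key,
    as in the GitHub Archive dumps. Blank lines are skipped.
    """
    counts = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        counts[event.get("type", "UnknownEvent")] += 1
    return counts


def read_gzipped_lines(raw_bytes):
    """Decompress a .json.gz payload held in memory and yield text lines."""
    with gzip.open(io.BytesIO(raw_bytes), mode="rt", encoding="utf-8") as fh:
        yield from fh


# Hypothetical sample records standing in for one downloaded file.
sample = "\n".join([
    json.dumps({"type": "PushEvent", "repo": {"name": "apache/zeppelin"}}),
    json.dumps({"type": "PullRequestEvent", "repo": {"name": "apache/spark"}}),
    json.dumps({"type": "PushEvent", "repo": {"name": "apache/spark"}}),
]).encode("utf-8")

counts = count_event_types(read_gzipped_lines(gzip.compress(sample)))
print(counts.most_common())
```

On a cluster, the same aggregation would look roughly like `spark.read.json(path).groupBy("type").count()`, assuming a SparkSession named `spark` (Spark 1.x used a `SQLContext` instead) and with `path` as a placeholder for the downloaded files.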

- 14. Common Crawl https://commoncrawl.org: nonprofit, by Factual. Stored on AWS S3 in WARC and WAT formats; monthly since 2013: ~150 TB compressed, 2+ billion URLs
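A WARC file is a sequence of records: a `WARC/1.0` version line, `Name: value` header lines, a blank line, `Content-Length` bytes of content, then two CRLFs. Below is a stdlib-only sketch of a reader, assuming uncompressed input and the exact header spelling `Content-Length`. It is an illustration of the format, not the API of any particular WARC library, and `make_record` is a hypothetical helper for building test data.

```python
import io


def iter_warc_records(stream):
    """Yield (headers, payload) pairs from a binary WARC stream.

    Minimal sketch: version line, header lines, blank line,
    Content-Length bytes of payload, then CRLF CRLF.
    """
    while True:
        version = stream.readline()
        if not version:
            return  # end of stream
        if not version.strip():
            continue  # tolerate stray blank lines between records
        if not version.startswith(b"WARC/"):
            raise ValueError(f"unexpected line: {version!r}")
        headers = {}
        for line in iter(stream.readline, b""):
            line = line.rstrip(b"\r\n")
            if not line:
                break  # blank line ends the header block
            name, _, value = line.partition(b":")
            headers[name.decode().strip()] = value.decode().strip()
        payload = stream.read(int(headers["Content-Length"]))
        stream.read(4)  # skip the trailing CRLF CRLF
        yield headers, payload


def make_record(rec_type, uri, body):
    """Build one uncompressed WARC record (hypothetical test helper)."""
    head = (
        "WARC/1.0\r\n"
        f"WARC-Type: {rec_type}\r\n"
        f"WARC-Target-URI: {uri}\r\n"
        f"Content-Length: {len(body)}\r\n"
        "\r\n"
    ).encode()
    return head + body + b"\r\n\r\n"


data = (make_record("response", "http://example.com/", b"<html>hi</html>")
        + make_record("request", "http://example.com/", b"GET / HTTP/1.1\r\n"))
records = list(iter_warc_records(io.BytesIO(data)))
for headers, payload in records:
    print(headers["WARC-Type"], headers["WARC-Target-URI"], len(payload))
```

Common Crawl's `.warc.gz` files compress each record as a separate gzip member, and Python's `gzip.open(path, "rb")` reads concatenated members transparently, so that file object can be passed straight to `iter_warc_records`. For `response` records the payload still begins with the HTTP status line and headers.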

- 15. URL Index by Ilya Kreymer of @webrecorder_io http://index.commoncrawl.org/
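The URL index answers "where is this URL stored?", so a single record can be fetched with an HTTP range request instead of downloading whole WARC files. The sketch assumes a CDX-server-style API that accepts `url` and `output=json` query parameters and returns one JSON object per line with `filename`, `offset` and `length` fields. The collection name `CC-MAIN-2015-48-index` and the sample index line are placeholders, not verified values.

```python
import json
from urllib.parse import urlencode


def cdx_query_url(collection, url_pattern, server="http://index.commoncrawl.org"):
    """Build a query URL for a CDX-style URL index.

    `collection` is a placeholder; the available collections are listed
    on the index server's front page.
    """
    params = urlencode({"url": url_pattern, "output": "json"})
    return f"{server}/{collection}?{params}"


def range_header(cdx_line):
    """Map one JSON line of index output to (WARC filename, Range header).

    Assumes "filename", "offset" and "length" fields that locate a single
    gzipped record inside a large WARC file.
    """
    entry = json.loads(cdx_line)
    start = int(entry["offset"])
    end = start + int(entry["length"]) - 1  # HTTP byte ranges are inclusive
    return entry["filename"], f"bytes={start}-{end}"


q = cdx_query_url("CC-MAIN-2015-48-index", "commoncrawl.org/*")
print(q)

# Hypothetical index line; real lines carry more fields (urlkey, timestamp, ...).
line = '{"filename": "crawl-data/example.warc.gz", "offset": "100", "length": "50"}'
print(range_header(line))
```

Requesting only the `bytes=start-end` slice of the named file returns one gzip member, which can then be decompressed and parsed as a single WARC record.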

- 19. TOOLS OVERVIEW • Generic (grep, Python, Ruby, JVM): all good, but hard to scale beyond a single machine or data format • High-performance (MPI, Hadoop, HPC): awesome, but complex, not easy, problem-specific and not very accessible • New, scalable (Spark, Flink, Zeppelin): easy (not simple) and robust

- 21. Apache Software Foundation • 1999: 21 founders of 1 project • 2016: 9-member Board of Directors, 600 foundation members, 2000+ committers on 171 projects (+55 incubating) • Keywords: meritocracy, community over code, consensus • Provides: infrastructure, legal support, a way of building software http://www.apache.org/foundation/

- 22. NEW, SCALABLE TOOLS • Apache Spark: Scala, Python, R • Apache Zeppelin: modern web GUI, plays nicely with Spark, Flink, Elasticsearch, etc.; easy to set up • Warcbase: Spark library for saved crawl data (WARC) • Juju: scaling, integration with Spark, Zeppelin, AWS, Ganglia

- 23. APACHE SPARK • From Berkeley AMPLab, since 2010 • Its creators founded Databricks in 2013; Apache top-level project since 2014 • 1000+ contributors • REPL + Java, Scala, Python, R APIs http://spark.apache.org

- 24. APACHE SPARK • Parallel collections API (similar to FlumeJava, Crunch, Cascading) • Has much more: GraphX, MLlib, SQL https://spark.apache.org/examples.html http://spark.apache.org
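The parallel collections style chains transformations over a dataset. To show the shape of the classic word count without a cluster, here is a single-machine emulation in plain Python; `flat_map` and `reduce_by_key` are hypothetical helpers named after the Spark operations, not Spark itself.

```python
from collections import defaultdict
from functools import reduce


def flat_map(f, items):
    """Apply f to each item and flatten the resulting iterables."""
    return [y for x in items for y in f(x)]


def reduce_by_key(f, pairs):
    """Group (key, value) pairs by key and fold each group's values with f."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return {key: reduce(f, values) for key, values in groups.items()}


lines = ["spark and zeppelin", "spark and juju"]
words = flat_map(str.split, lines)           # like rdd.flatMap(...)
pairs = [(w, 1) for w in words]              # like rdd.map(...)
counts = reduce_by_key(lambda a, b: a + b, pairs)  # like rdd.reduceByKey(...)
print(sorted(counts.items()))
```

In PySpark the same pipeline would read roughly `sc.textFile(path).flatMap(lambda l: l.split()).map(lambda w: (w, 1)).reduceByKey(lambda a, b: a + b)`, with `sc` a SparkContext and `path` a placeholder. Spark partitions the data and performs the per-key reduction across the cluster instead of in one in-memory dictionary.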

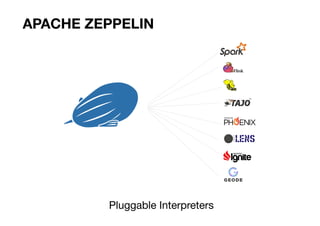

- 25. APACHE ZEPPELIN (INCUBATING) • Notebook-style GUI on top of a backend processing system • Plays nicely with the whole ecosystem: Spark, Flink, SQL, Elasticsearch, etc. • Easy to set up http://zeppelin.incubator.apache.org

- 27. APACHE ZEPPELIN PROJECT TIMELINE • 12.2012: commercial app using AMPLab Shark 0.5 • 08.2013: NFLabs internal project, Hive/Shark • 10.2013: prototype, Hive/Shark • 12.2014: enters ASF incubation • 01.2016: 3 major releases http://zeppelin.incubator.apache.org

- 30. WARCBASE: Spark library for WARC (Web ARChive) data processing • Text analysis • Site link structure https://github.com/lintool/warcbase http://lintool.github.io/warcbase-docs
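Site link structure analysis comes down to extracting the outgoing links of each archived page and aggregating them into a graph. The sketch below does that with Python's `html.parser`, counting host-to-host edges from `(page URL, HTML)` pairs. It illustrates the idea only and is not Warcbase's API; the example hosts and pages are made up.

```python
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collect the href values of <a> tags in one HTML document."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser passes lowercased tag and attribute names.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def host_links(pages):
    """Count host-to-host links over (page_url, html) pairs.

    Relative links are resolved against the page URL; links that stay on
    the same host, or have no host (mailto:, javascript:), are dropped.
    """
    edges = Counter()
    for page_url, html in pages:
        parser = LinkExtractor()
        parser.feed(html)
        parser.close()
        src = urlparse(page_url).netloc
        for href in parser.hrefs:
            dst = urlparse(urljoin(page_url, href)).netloc
            if dst and dst != src:
                edges[(src, dst)] += 1
    return edges


# Hypothetical pages standing in for archived responses.
pages = [
    ("http://a.example/", '<a href="http://b.example/x">b</a> <a href="/about">about</a>'),
    ("http://b.example/x", '<a href="http://a.example/">home</a> <a href="//a.example/p">p</a>'),
]
edges = host_links(pages)
for (src, dst), n in sorted(edges.items()):
    print(f"{src} -> {dst}: {n}")
```

At crawl scale the per-page extraction is the part that would be distributed (for example, one Spark task per WARC file), with the edge counts summed per key afterwards.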

- 31. JUJU • Service modeling at scale • Deployment/configuration automation + integration with Spark, Zeppelin, Ganglia, etc. + AWS, GCE, Azure, LXC, etc. https://jujucharms.com/

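- 32. JUJU http://bigdata.juju.solutions/getstarted

```shell
# Install the Juju client and quickstart plugin on Ubuntu...
$ sudo apt-get install juju-core juju-quickstart
# ...or on macOS with Homebrew
$ brew install juju juju-quickstart
# Generate a config template for LXC, AWS, GCE, Azure, VMWare, OpenStack
$ juju generate-config
# Bootstrap the environment
$ juju bootstrap
# Deploy the Hadoop + Spark + Zeppelin bundle
$ juju quickstart apache-hadoop-spark-zeppelin
# Make the Spark and Zeppelin services reachable from outside
$ juju expose spark zeppelin
# Scale out: add four more units of the "slave" service
$ juju add-unit -n4 slave
```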

- 33. JUJU: a 7-node cluster designed to scale out http://bigdata.juju.solutions/getstarted

- 35. APPROACH: SCALE AND BUDGET • Prototype: your laptop, 1 core • Estimate the cost: AWS spot instances, 10s of PCs • Scale out: deployment automation, 1000 instances
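The "estimate the cost" step can be a back-of-the-envelope extrapolation: time a prototype run on a sample, scale linearly to the full input, and multiply by an hourly instance price. All inputs below are hypothetical placeholders (the sample timing, the 4-core instance and the $0.05/hour spot price); only the ~150 TB size echoes the Common Crawl figure quoted earlier.

```python
import math


def estimate_cost(sample_gb, sample_core_hours, total_gb,
                  cores_per_instance, price_per_instance_hour):
    """Extrapolate a prototype run to the full dataset on rented instances.

    Assumes work grows linearly with input size, an optimistic simplification
    (shuffles, data skew and I/O limits usually add overhead).
    Returns (instance_hours rounded up, estimated cost).
    """
    core_hours = sample_core_hours * (total_gb / sample_gb)
    instance_hours = math.ceil(core_hours / cores_per_instance)
    return instance_hours, instance_hours * price_per_instance_hour


# Hypothetical inputs: 1 GB processed in 0.5 core-hours on a 1-core laptop,
# ~150 TB (150,000 GB) in total, 4-core instances at $0.05 per hour.
hours, cost = estimate_cost(1, 0.5, 150_000, 4, 0.05)
print(f"{hours} instance-hours, about ${cost:,.2f}")
```

Spot prices vary over time and by region, so the price argument should come from a current quote; the instance-hour figure also says nothing about wall-clock time until it is divided by the number of instances launched.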

- 36. TAKEAWAY: There are plenty of free tools out there to crunch the data for fun and profit. They are easy (not simple) to learn and generic enough.

- 37. Thank you