High Availability & Replica Sets with mongoDB

A short presentation with some discussion about mongoDB, why I use it and how to set up Replica Sets for a fully HA/failover enabled system.

Add the member nodes

- You'll need to run 'mongo' to get the mongo shell, then:

      rs.initiate({_id: 'yoursetname', members: [
        {_id: 0, host: '192.168.1.1:27017'},
        {_id: 1, host: '192.168.1.2:27017'},
        {_id: 2, host: '192.168.1.3:27017'}
      ]})

- This will spin up the set
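The configuration document passed to `rs.initiate()` on this slide can also be built programmatically, which may help when the host list comes from a deployment script. The following is a minimal sketch in plain JavaScript, not part of the original presentation: `buildReplicaSetConfig` and `majority` are hypothetical helper names, and the sketch assumes every member is a voting member and that each `mongod` was already started with a matching replica set name.

```javascript
// Hypothetical helper (not a MongoDB API): builds the document that
// rs.initiate() expects from a set name and a list of 'host:port' strings.
function buildReplicaSetConfig(setName, hosts) {
  if (hosts.length === 0) {
    throw new Error('at least one host is required');
  }
  return {
    _id: setName,
    // Member _id values are assigned from the position in the list: 0, 1, 2, ...
    members: hosts.map((host, index) => ({ _id: index, host: host })),
  };
}

// Hypothetical helper: how many members must be reachable to elect a
// primary, assuming every member in the config has a vote.
function majority(config) {
  return Math.floor(config.members.length / 2) + 1;
}

// Same set name and addresses as on the slide.
const config = buildReplicaSetConfig('yoursetname', [
  '192.168.1.1:27017',
  '192.168.1.2:27017',
  '192.168.1.3:27017',
]);

console.log(JSON.stringify(config, null, 2));
console.log('members needed for a majority:', majority(config));

// In the mongo shell, the same object would be passed as rs.initiate(config);
// rs.status() can then be used to check the state of each member.
```

With the three members from the slide, a majority is two, so the set can still elect a primary after losing a single node.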

MongoDB Replica SetsMongoDB - Replica sets in MongoDB allow for replication across multiple servers, with one server acting as the primary and able to accept writes, and other secondary servers replicating the primary.

- If the primary fails, the replica set will automatically elect a new primary from the secondary servers and continue operating without interruption.

- The replica set configuration specifies the members, their roles, and settings like heartbeat frequency to monitor member health and elect a primary if needed.

Getting started with replica set in MongoDB

Getting started with replica set in MongoDBKishor Parkhe The document provides instructions for setting up and administering replica sets and sharded clusters in MongoDB. It describes initializing and configuring replica sets, adding members, and handling failures. It also explains the components of sharded clusters, requirements for sharding, and steps for enabling and administering sharding, including adding shards, sharding data, and commands for viewing sharding status.

Back to Basics: Build Something Big With MongoDB

Back to Basics: Build Something Big With MongoDB MongoDB 1. Replica sets allow for high availability and redundancy by creating copies of data across multiple nodes. The replica set lifestyle involves creation, initialization, handling failures and failovers, and recovery from failures.

2. When developing with replica sets, developers must consider consistency models such as strong consistency, delayed consistency, and write concerns to determine how and when data is written and acknowledged. Tagging and read preferences also allow control over where data is read from and written to.

3. Sharding provides horizontal scalability by partitioning data across multiple machines or replica sets. The data is split into chunks based on a user-defined shard key and distributed across shards. A config server stores metadata about chunk mappings and locations,

Advanced Replication

Advanced ReplicationMongoDB In this session we will cover wide area replica sets and using tags for backup. Attendees should be well versed in basic replication and familiar with concepts in the morning's basic replication talk. No beginner topics will be covered in this session

MongoDB Replication (Dwight Merriman)

MongoDB Replication (Dwight Merriman)MongoSF This document discusses MongoDB replication using replica sets. It describes how to configure and administer replica sets, which allow for asynchronous master-slave replication and automatic failover between members. Replica sets maintain multiple copies of data across multiple servers, provide redundancy and high availability, and can elect a new primary if one fails. The document outlines different replication topologies and member types in a replica set, and how replica sets integrate with sharded clusters in MongoDB.

MongoDB vs Mysql. A devops point of view

MongoDB vs Mysql. A devops point of viewPierre Baillet A fotopedia presentation made at the MongoDay 2012 in Paris at Xebia Office.

Talk by Pierre Baillet and Mathieu Poumeyrol.

French Article about the presentation:

http://www.touilleur-express.fr/2012/02/06/mongodb-retour-sur-experience-chez-fotopedia/

Video to come.

Multi Data Center Strategies

Multi Data Center StrategiesSteven Francia This document discusses strategies for using MongoDB in a distributed environment across multiple data centers. It describes setting up MongoDB replica sets in three different locations to allow for local writes and reads while minimizing remote data access. It also covers features like write concern, read preferences, and geo-aware sharding that can be used to control data routing and consistency for scenarios like social networks, analytics, authentication, and administration systems.

CouchDB Vs MongoDB

CouchDB Vs MongoDBGabriele Lana This document discusses NoSQL databases and provides an example of using MongoDB to calculate a total sum from documents. Key points:

- MongoDB is a document-oriented NoSQL database where data is stored in JSON-like documents within collections. It uses map-reduce functions to perform aggregations.

- The example shows saving ticket documents with an ID and checkout amount to the tickets collection.

- A map-reduce operation is run to emit the checkout amount from each document. These are summed by the reduce function to calculate a total of 430 across all documents.


High Availability & Replica Sets with mongoDB

- 1. High Availability & Replica Sets with MongoDB
  - Gareth Davies
  - @ShaolinTiger
  - www.shaolintiger.com

- 2. Who am I?
  - Blogger (shaolintiger.com/darknet.org.uk)
  - Community Starter (security-forums.com/shutterasia.com)
  - Geek/Sys-admin
  - WordPress/Web Publishing Scaling Expert
  - Recent MongoDB user
  - Currently working at Mindvalley

- 3. Why I <3 MongoDB
  - It's FAST
  - It's relatively easy to set up
  - It's a LOT easier to scale than, say, MySQL
  - Does anyone know about scaling MySQL?

- 4. Scaling MySQL Is Like...

- 5. Basic Concepts – Master Slave

- 6. The Next Level – Replica Set

- 7. Master Slave vs Replica Set

- 8. Replica Sets – Things to Grok
  - The primary (AKA master) is auto-elected
  - Drivers and mongos can detect the primary
  - Replica sets provide you:
    - Data Redundancy
    - Automated Failover (AKA High Availability)
    - Distributed Read Load/Read Scaling
    - Disaster Recovery

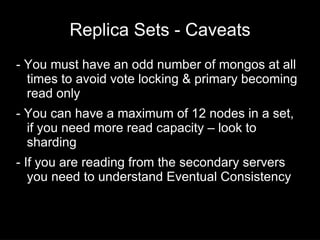

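- 9. Replica Sets - Caveats
  - Keep an odd number of voting members (mongod instances) at all times. With an even split neither side has a majority of votes, no primary can be elected, and the set becomes read-only
  - You can have a maximum of 12 nodes in a set (the limit at the time of this talk; later MongoDB releases raised it). If you need more read capacity, look to sharding
  - If you are reading from the secondary servers you need to understand Eventual Consistency: a secondary may briefly return older data than the primary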
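The odd-number caveat comes down to majority arithmetic. Here is a minimal sketch, assuming every member has exactly one vote; the function names are made up for illustration and are not part of MongoDB:

```javascript
// Sketch: why an even member count adds no extra fault tolerance.
// Assumes every member has exactly one vote.

// Votes needed to elect a primary: a strict majority of all voting members.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// How many members can be lost while a primary can still be elected.
function faultTolerance(votingMembers) {
  return votingMembers - majority(votingMembers);
}

for (const n of [2, 3, 4, 5]) {
  console.log(`${n} members: majority ${majority(n)}, tolerates ${faultTolerance(n)} failure(s)`);
}
```

Under these assumptions a fourth member buys nothing over three: both sizes survive only a single failure, which is why the set is normally grown in odd steps.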

- 10. Getting Started
  - I <3 Linode!
  - Easy scaling/Nodebalancers/Cloning/Fast Roll-up/Fastest IO in the industry/Ubuntu 12.04LTS etc
  - Examples are done on Linode

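- 11. Add 3 new Nodes
  - Choose your location: put 2 in your primary DC and 1 in a different geographical DC
  - In my case this would be 2 servers in Atlanta, GA and 1 in Dallas, TX
  - This keeps a copy of your data available if a whole datacenter goes down. Note that a primary needs a majority of votes (2 of 3): losing Dallas leaves a working primary in Atlanta, while losing Atlanta leaves the Dallas node as a read-only secondary until a second member is back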
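A quick way to reason about a 2 + 1 layout like this is to check which datacenter failures still leave a voting majority. This is a rough sketch assuming one vote per member; the `layout` object and the function name are hypothetical:

```javascript
// Hypothetical layout from the slide: two members in Atlanta, one in Dallas.
const layout = { atlanta: 2, dallas: 1 };

// True if the members outside the failed datacenter still form a majority.
function canElectPrimaryWithout(members, failedDc) {
  const total = Object.values(members).reduce((a, b) => a + b, 0);
  const surviving = total - (members[failedDc] || 0);
  return surviving >= Math.floor(total / 2) + 1;
}

for (const dc of Object.keys(layout)) {
  console.log(`${dc} down -> primary possible: ${canElectPrimaryWithout(layout, dc)}`);
}
```

If writes must continue through the loss of either site, one option is a third location holding one member, so that no single datacenter holds the majority on its own.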

- 12. Select The OS
  - Ubuntu 12.04LTS 64-bit: this gives you package support for the next 5 years
  - 64-bit also lets you grow your MongoDB instance above 2GB safely (32-bit builds of MongoDB are limited to roughly 2GB of data)

- 13. Do your basic shizzles
  - Set the hostname/local IP address etc
  - Disable Swap
  - Change SSH port
  - Remove password based login
  - Block root SSH access
  - aptitude update; aptitude safe-upgrade
  - Install base packages (munin/iotop/sysstat etc)
  - Configure unattended security updates

- 14. Install MongoDB
  - I don't recommend installing from the regular repo, as it will be out of date after some time
  - Install direct from the 10gen repo:
    - sudo apt-key adv --keyserver keyserver.ubuntu.com --recv 7F0CEB10
    - sudo nano /etc/apt/sources.list and add this line: deb http://downloads-distro.mongodb.org/repo/ubuntu-upstart dist 10gen
    - aptitude update; aptitude install mongodb-10gen
  - That's it, it's installed!

- 15. Clone that bad boy!
  - Bear in mind you only have to do all of that stuff once! When it's done, just clone it over to your two new nodes
  - Remember to delete the config & disk images from your target first, and power down the initial machine
  - Do note that copying to the remote DC will take quite a long time

- 16. Get the Replica Set Started
  - Do: sudo nano /etc/mongodb.conf
  - Find the lines like so:
    - # in replica set configuration, specify the name of the replica set
    - # replSet = setname
  - Change them to:
    - # in replica set configuration, specify the name of the replica set
    - replSet = yoursetname
  - Do this on all your MongoDB nodes, using the same set name on each, and then restart them: sudo service mongodb restart
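If you would rather script that edit than open nano on every node, the change is a one-line substitution. The sketch below works on the config text only; the function name is made up, and reading and writing the real file is left out on purpose:

```javascript
// Sketch: turn the commented-out "# replSet = setname" line into an active setting.
// The sample text mimics the two lines of /etc/mongodb.conf quoted on the slide.
function enableReplSet(configText, setName) {
  return configText
    .split("\n")
    .map((line) => (/^\s*#?\s*replSet\s*=/.test(line) ? `replSet = ${setName}` : line))
    .join("\n");
}

const sample = [
  "# in replica set configuration, specify the name of the replica set",
  "# replSet = setname",
].join("\n");

console.log(enableReplSet(sample, "yoursetname"));
```

The explanatory comment line is left untouched because it does not contain `replSet =`, and running the function twice gives the same result as running it once.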

- 17. Configure the Replica Set
  - After restarting, if you check the logs you'll see replica set start-up messages (shown as a screenshot on the original slide)
  - This basically means the replica set is running, but it's not yet aware of the other nodes

- 18. Add the member nodes
  - You'll need to run 'mongo' on one of the nodes to get the mongo shell, then:
    - rs.initiate({_id: 'yoursetname', members: [{_id: 0, host: '192.168.1.1:27017'}, {_id: 1, host: '192.168.1.2:27017'}, {_id: 2, host: '192.168.1.3:27017'}]})
  - This will spin up the set
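The config document passed to rs.initiate() is easy to get wrong by hand once there are more than a few hosts. One possible way to generate it is sketched below; `buildReplSetConfig` is a made-up helper, and the addresses are the placeholder ones from the slide:

```javascript
// Sketch: build the document passed to rs.initiate() from a list of hosts.
function buildReplSetConfig(setName, hosts) {
  return {
    _id: setName, // must match the replSet name in mongodb.conf
    members: hosts.map((host, index) => ({ _id: index, host })),
  };
}

const config = buildReplSetConfig("yoursetname", [
  "192.168.1.1:27017",
  "192.168.1.2:27017",
  "192.168.1.3:27017",
]);

// `rs` only exists inside the mongo shell; elsewhere just print the config.
if (typeof rs !== "undefined") {
  rs.initiate(config);
} else {
  console.log(JSON.stringify(config, null, 2));
}
```

Run inside the mongo shell on one node, this should behave like the literal call on the slide; run anywhere else, it only prints the document so you can check it first.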

- 19. Check that it worked
  - I suggest running tail -f on the logs on one of the other nodes; you'll see a bunch of messages about replSet & rsStart (hopefully)
  - If you see all that in /var/log/mongodb/mongodb.log, you're good!
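Besides tailing the logs, the mongo shell helper rs.status() reports the state of every member. Below is a small sketch that condenses that output; `summarizeStatus` is a made-up helper and the sample document is invented for illustration, not real output:

```javascript
// Sketch: summarise rs.status() to see each member's state at a glance.
// Uses the members[].name, members[].stateStr and members[].health fields.
function summarizeStatus(status) {
  const lines = status.members.map((m) => `${m.name}: ${m.stateStr} (health ${m.health})`);
  const primaries = status.members.filter((m) => m.stateStr === "PRIMARY").length;
  const allUp = status.members.every((m) => m.health === 1);
  return { lines, healthy: primaries === 1 && allUp };
}

// Invented sample data, used only when not running inside the mongo shell.
const sampleStatus = {
  set: "yoursetname",
  members: [
    { name: "192.168.1.1:27017", stateStr: "PRIMARY", health: 1 },
    { name: "192.168.1.2:27017", stateStr: "SECONDARY", health: 1 },
    { name: "192.168.1.3:27017", stateStr: "SECONDARY", health: 1 },
  ],
};

const status = typeof rs !== "undefined" ? rs.status() : sampleStatus;
const summary = summarizeStatus(status);
summary.lines.forEach((line) => console.log(line));
console.log(`healthy: ${summary.healthy}`);
```

A set that has just been initiated may take a little while to elect a primary, so a first result of `healthy: false` is not necessarily a problem.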

- 20. That's It!
  - Yah I know, too easy right?
  - That's how hard it is to set up a fully scalable, high availability database cluster with MongoDB

- 21. Things to Consider
  - ALWAYS monitor; base decisions on statistics and numbers, not on assumptions
  - I like (and very actively use) munin
  - munin works well with MongoDB and a myriad of other software

- 22. Further Learnings
  - Think about security (Bind address/IPTables/Authentication/Cluster Keys)
  - If you have a write heavy application and you need to scale writes, look to sharding
  - Sharding and replica sets work well together (but each shard needs its own replica set)
  - Try to give your MongoDB instance enough RAM to keep the hot indexes in memory

- 23. THE END!
  - Questions?
  - This presentation will be available at http://slideshare.net/shaolintiger