04 using and_configuring_bash

- 1. Using & Configuring BASH

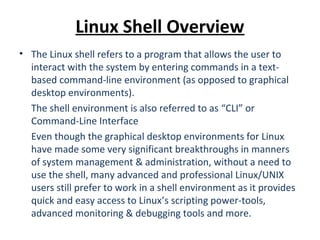

- 2. Linux Shell Overview • The Linux shell is a program that lets the user interact with the system by entering commands in a text-based command-line environment (as opposed to graphical desktop environments). The shell environment is also referred to as the “CLI”, or Command-Line Interface. • Even though Linux graphical desktop environments have made very significant progress in system management and administration without the need for a shell, many advanced and professional Linux/UNIX users still prefer to work in a shell environment, as it provides quick and easy access to Linux’s scripting power tools, advanced monitoring and debugging tools, and more.

- 3. Aliases • The primary focus in this course will be on BASH (the Bourne-Again SHell), a modern, actively developed shell and the successor of the commonly used SH (Bourne shell) in both Linux and UNIX systems. • Shell aliases provide a way to: substitute short commands for long ones; turn a series of commands into a single command that executes them; create alternate forms of existing commands; add options to commands and use those syntaxes as the default. • To view the aliases for the current user, run: alias • Create a new alias with the command: alias aliasname=value (quote the value if it contains spaces, e.g. alias ll='ls -l') • To remove an alias, use: unalias aliasname
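A minimal sketch of the alias commands above, written so it can also be run as a script. The alias names `ll`, `rm` and `here` are only examples. The `shopt -s expand_aliases` line is an assumption for the script case: interactive shells expand aliases automatically, while non-interactive scripts do not unless this option is set.

```shell
#!/bin/bash
# Scripts do not expand aliases by default; interactive shells do,
# so this line is only needed when trying the example as a script.
shopt -s expand_aliases

# Substitute a short command for a long one ("ll" is just an example name).
alias ll='ls -l'

# Make an option the default: "rm" will now always ask before deleting.
alias rm='rm -i'

# Turn a series of commands into one (hypothetical name "here").
alias here='pwd; ls'

# With no arguments, "alias" lists every alias currently defined.
alias

# Remove an alias that is no longer wanted.
unalias rm
```

Aliases created this way last only for the current shell; to keep them, the same `alias` lines can be placed in ~/.bashrc.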

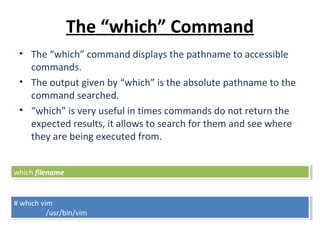

- 4. The “which” Command • The “which” command displays the pathname of accessible commands. • The output given by “which” is the absolute pathname of the command searched for, found by looking through the directories listed in the PATH variable. • “which” is very useful when commands do not return the expected results: it lets you check where a command is actually being executed from. • Syntax: which filename • Example: # which vim /usr/bin/vim
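A short sketch of using “which” both interactively and inside a script. It assumes the `which` program is installed (some minimal systems omit it); `no-such-command-xyz` is a deliberately made-up name used to show the not-found case.

```shell
#!/bin/bash
# Print the absolute path of the program that runs when we type "ls".
which ls

# Capture the path in a variable to use it later.
LS_PATH=$(which ls)
echo "ls is executed from: $LS_PATH"

# For a name that is not found in any PATH directory, "which" prints
# no path and returns a non-zero exit status.
which no-such-command-xyz > /dev/null 2>&1 || echo "no-such-command-xyz: not found"
```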

- 5. Quoting • Shell metacharacters, as discussed before, are interpreted in a special way by the shell. • There are a number of ways to override these special meanings and make these characters behave like any other regular character; this is done by quoting: ‘ ’ - single quotes cancel the special meaning of ALL metacharacters within them. “ ” - double quotes cancel the special meanings of all metacharacters, except for $, ` (backquote) and \ (backslash). \ - a backslash cancels the special meaning of the character that immediately follows it. • Note that quotes are metacharacters themselves as well.
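The three quoting styles can be compared side by side. This is a small sketch; the variable names are illustrative only. The `echo` lines quote their arguments so the `*` is not expanded into file names.

```shell
#!/bin/bash
NAME=world

# Single quotes: every character is literal, so $NAME is not expanded.
SINGLE='Hello $NAME'

# Double quotes: $ still expands, but the space and * are taken literally.
DOUBLE="Hello $NAME *"

# Inside double quotes, a backslash can still cancel the $.
MIXED="Hello \$NAME, from $NAME"

# Backslash outside quotes: only the next character loses its meaning.
ESCAPED=Hello\ \$NAME

echo "$SINGLE"    # Hello $NAME
echo "$DOUBLE"    # Hello world *
echo "$MIXED"     # Hello $NAME, from world
echo "$ESCAPED"   # Hello $NAME
```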

- 6. Command History • The BASH shell saves a history of the commands executed from the command line. • The history is saved into a file, located in: ~/.bash_history • By default, BASH keeps the last 500 commands (set by the HISTSIZE variable; many distributions raise it to 1000); this value and the location in which the history is saved (the HISTFILE variable) can be customized. • To display the command history, run: “history [options]” Running “history 3” will display the last 3 commands entered (the history command itself is one of them).
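Customizing the history is done with ordinary shell variables, usually set in ~/.bashrc. The fragment below is a sketch: the variable names are the standard Bash ones, while the numbers and the file name `~/.my_bash_history` are example values only.

```shell
# Possible additions to ~/.bashrc; the numbers and file name are examples only.
HISTSIZE=1000                  # commands kept in memory for the current session
HISTFILESIZE=2000              # lines kept in the history file on disk
HISTFILE=~/.my_bash_history    # where the history is saved (default: ~/.bash_history)
```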

- 7. Command History • The history can be searched for specific strings in several ways: Pressing CTRL-r opens the history search line; type the command or string you wish to search for, and once it is in, pressing CTRL-r again jumps to the next (older) match. Another search method is to display the history list with the “history” command and look for the command there (or filter it, e.g. history | grep cd): # history … 40 id 41 ls 42 cd myDir

- 8. Shell Variables • Variables are placeholders for information. • Two types of variables exist: Local – these variables affect the current shell session only. Environment – these variables are also passed on to every process started from the current shell, including new shells; the standard ones are set automatically every time a new session starts. • Shell variables can be either user-defined or built into the system; they can also be pre-defined and then customized later. • When a variable is created, it is local and effective only within the shell that created it. • For a variable to be available in child processes (programs and sub-shells started from this shell) as well, it must be exported.

- 9. Shell Variables • By convention, variables in Linux are named in upper-case characters; this is not a must though, lower-case characters work just as well. • This example creates a variable named MAILLOG and assigns the value “/var/log/maillog” to it: MAILLOG=/var/log/maillog • Once the variable is defined, we can use it in commands, such as “vim $MAILLOG”, which starts vim with the argument “/var/log/maillog” (a file). • To display the value of an existing variable, use: “echo $MAILLOG” • Keep in mind: Linux IS case-sensitive, so $MAILLOG and $maillog are different variables.

- 10. Shell Variables • The “$” sign is a metacharacter meaning “substitute with value”; it is used to expand a variable to its assigned value. • When expanding a variable, the shell looks in both its local and environment variable lists and finds the value assigned to the variable we have used, $MAILLOG in our case.

- 11. Local Shell Variables • User-defined variables let the user choose both the variable name and its value. • The syntax for creating a new variable is: VAR=value • Make sure there are no spaces on either side of the “=” sign. • The “unset” command removes a variable; the syntax is: unset VAR • All currently set variables and their values can be displayed with the “set” command.
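The life cycle of a local variable (create, expand, list, remove) fits in a few lines. This sketch reuses the MAILLOG example from the slides; the lower-case `maillog` only illustrates case sensitivity.

```shell
#!/bin/bash
# Create a local variable. There must be no spaces around "=":
# "MAILLOG = /var/log/maillog" would try to run a command named MAILLOG.
MAILLOG=/var/log/maillog

# "$" substitutes the value of the variable.
echo "$MAILLOG"          # /var/log/maillog

# Names are case-sensitive: $maillog is a different (unset) variable.
echo "[$maillog]"        # []

# "set" prints all variables; grep picks out the one created above.
set | grep '^MAILLOG='   # MAILLOG=/var/log/maillog

# Remove the variable; expanding it now gives an empty string.
unset MAILLOG
echo "[$MAILLOG]"        # []
```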

- 12. Environment Variables • Environment variables are copied to child processes when those processes are created. • Every process has its own copy and no process can touch another’s memory, so a change made in a child process does not affect its parent. • To turn a local variable into an environment variable, use the “export” command; there are two ways of doing this: First method: create a local variable, then export it, in two commands: MAILLOG=/var/log/maillog ; export MAILLOG Second method: create the new variable while exporting it: export MAILLOG=/var/log/maillog
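The difference between a local and an exported variable shows up as soon as a child process is started. In this sketch, `bash -c` plays the role of the child, and the names `LOCAL_ONLY` and `SHARED` are made up for the example.

```shell
#!/bin/bash
LOCAL_ONLY=local-value          # a plain local variable
export SHARED=exported-value    # created and exported in one step

# "bash -c" starts a child process. The single quotes stop the parent
# from expanding the variables, so the child expands them itself.
bash -c 'echo "child: LOCAL_ONLY=[$LOCAL_ONLY] SHARED=[$SHARED]"'
# child: LOCAL_ONLY=[] SHARED=[exported-value]

# The child only changes its own copy; the parent's value is untouched.
bash -c 'SHARED=changed-in-child; echo "child changed it to [$SHARED]"'
echo "parent: SHARED=[$SHARED]"
# parent: SHARED=[exported-value]
```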

- 13. Environment Variables • Linux lets the user change and customize the values of the default environment variables. • Environment variables can be modified temporarily, for the current shell session only; the change is lost when the session is closed. • To make environment variable changes permanent, they need to be set in the initialization files. • The environment variables can be viewed by running the command: “env”.

- 14. The PATH Variable • The PATH variable holds a colon-separated list of directories; the shell searches them for commands in the order they are listed. • To add a new directory to the end of the PATH variable, use the following command: PATH=$PATH:/new/directory/here/ • PATH is already exported, so there is no need to export it again after adding to it.
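To see the effect of extending PATH, the sketch below creates a throwaway directory containing one tiny executable and then appends that directory to PATH. The command name `mytool` and the temporary directory are assumptions for the demonstration; a real setup would typically use a fixed directory such as a personal bin folder.

```shell
#!/bin/bash
# Create a scratch directory holding one tiny executable ("mytool" is a
# made-up name); it is deleted again when the script exits.
MYBIN=$(mktemp -d)
trap 'rm -rf "$MYBIN"' EXIT
printf '#!/bin/bash\necho "hello from mytool"\n' > "$MYBIN/mytool"
chmod +x "$MYBIN/mytool"

# Not on the PATH yet, so the shell cannot find it by name.
mytool 2> /dev/null || echo "mytool: command not found"

# Append the directory; it is searched after all existing PATH entries.
PATH=$PATH:$MYBIN
mytool                 # hello from mytool

# Show the directories one per line, in search order.
echo "$PATH" | tr ':' '\n'
```

This change lasts only for the current shell; adding the same `PATH=$PATH:...` line to ~/.bashrc makes it permanent.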

- 15. Variables & Command Expansion • Command expansion (Bash calls it “command substitution”) is the ability to use the output of a command anywhere when writing shell commands. • Use the “$( )” metacharacters to declare a command expansion block: $( command ; command ; … ) • Example: # ls dir1 file1 file2 # VAR=$( ls ) # echo $VAR dir1 file1 file2
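A few more uses of `$( )`, sketched under the assumption of a standard Linux system with /etc present. The variable names are illustrative. Note that the commands inside `$( )` run in a sub-shell, so the `cd` does not move the calling shell.

```shell
#!/bin/bash
# $( ) runs the commands inside it and is replaced by their output.
TODAY=$(date +%Y-%m-%d)
echo "Today is $TODAY"

# Several commands can be combined inside one substitution. They run in a
# sub-shell, so the "cd" does not change the current directory of this shell.
ETC_ENTRIES=$(cd /etc && ls | wc -l)
echo "/etc contains $ETC_ENTRIES entries; we are still in $(pwd)"

# A substitution can be used directly as an argument to another command.
echo "The kernel release is $(uname -r)"
```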

- 16. The Initialization Files • Initialization files contain commands and variable settings that are executed whenever a shell is started. • There are two levels of initialization files: System-wide: /etc/profile – can be edited only by the system administrator (root). User-specific: ~/.bash_profile and ~/.bashrc – editable by the owning user. The .bash_profile file is read once, at the beginning of every login session (by the login shell). .bashrc is read every time a new interactive, non-login shell is opened, typically when opening a terminal window from the graphical desktop environment. Neither of these files has to exist, but if they do exist, they will be read and applied by the shell. The owning user can use these two files to customize their own working environment.
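Because login shells read ~/.bash_profile and other interactive shells read ~/.bashrc, a common convention is to have the first file load the second so both kinds of shell end up with the same settings. The fragment below is a sketch of that convention, not a required layout; the `EDITOR=vim` line is only an example of a per-session setting.

```shell
# ~/.bash_profile - read once per login session by the login shell.

# Also load ~/.bashrc, so login shells get the same settings as other
# interactive shells (a common convention, not a requirement).
if [ -f ~/.bashrc ]; then
    . ~/.bashrc
fi

# Settings that only need to be made once per session; the value is an example.
export EDITOR=vim
```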

- 17. The /etc/profile File • When a user logs in, the login shell first reads and applies everything from the /etc/profile file into that user’s environment, and only then reads the user’s ~/.bash_profile (which commonly loads ~/.bashrc in turn). • The /etc/profile file is maintained by the system administrator and typically: Exports environment variables. Exports PATH, the default command search path. Sets the variable TERM for the default terminal type. Displays the contents of the /etc/motd file. Sets the default file creation permissions (umask).

- 18. BASH Tab Completion • BASH can auto-complete command, directory and file names when the TAB key is pressed. • As long as the string we wish to auto-complete is not ambiguous, pressing TAB once will get the job done. • When the string is ambiguous, pressing TAB twice lists all of the options that begin with the string typed on the command line. • Adding characters that make the string unique allows a single TAB to auto-complete it.

- 19. Shell Scripts • Shell commands can be run as an individual set of tasks, or as a unified ‘flow’ of tasks. The latter is usually called a “Shell Script”. • A shell script can simply be a serial set of commands, such as “ls ; df ; ps”, and can also include flow control, arithmetic operators, variables and functions. • The most basic qualifier for a shell script is that it is saved in a file. The first line of this file should start with “#!” (the “shebang”), followed by the path of the interpreter that should run the script, for example “#!/bin/bash”; the commands follow on the next lines.
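The whole workflow (write the file, make it executable, run it) can be sketched in one go. The script name `sysinfo.sh` is made up for the example, and it is written in a fresh scratch directory so no existing files are touched.

```shell
#!/bin/bash
# Work in a fresh scratch directory so existing files are not touched.
cd "$(mktemp -d)" || exit 1

# Save a serial set of commands in a file; sysinfo.sh is a made-up name.
# Quoting 'EOF' stops $(pwd) from being expanded while the file is written.
cat > sysinfo.sh <<'EOF'
#!/bin/bash
echo "Working directory: $(pwd)"
ls ; df -h . ; ps
EOF

chmod +x sysinfo.sh   # allow the file to be executed
./sysinfo.sh          # "#!/bin/bash" makes the system run it with bash
```

Without `chmod +x`, the same file can still be run explicitly with `bash sysinfo.sh`.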

- 20. Shell Scripts - Conditions • To run two or more commands in a serial manner, we use the ‘;’ metacharacter. • By using the “OR” (||) or “AND” (&&) metacharacters, we can add the appropriate logical condition to our command set: with &&, the next command runs only if the previous one succeeded; with ||, it runs only if the previous one failed. The decision is based on the “Exit Status” of the previous command, which can also be read from the special variable “$?”. An exit status of 0 means success, or ‘true’. Any value greater than 0 is treated as ‘false’. • Example: # ls -l file && echo "My file exists" -rw-r--r-- 1 user staff 4 Jul 22 13:00 file My file exists
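The sketch below checks `$?` directly and then uses `&&` and `||` on the same two commands. It creates `file` in a scratch directory so that `file` exists and `nosuchfile` does not; the exact non-zero status returned by a failing `ls` may differ between systems.

```shell
#!/bin/bash
cd "$(mktemp -d)" || exit 1
touch file                         # "file" exists, "nosuchfile" does not

ls file > /dev/null
echo "exit status of ls file: $?"          # 0

ls nosuchfile 2> /dev/null
echo "exit status of ls nosuchfile: $?"    # non-zero (exact value depends on ls)

# &&: the second command runs only if the first one succeeded.
ls file > /dev/null && echo "file exists"

# ||: the second command runs only if the first one failed.
ls nosuchfile 2> /dev/null || echo "nosuchfile is missing"
```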

- 21. Shell Scripts - Conditions • To run multiple commands after a logical condition, we can use the “{ }” metacharacters to declare a ‘Command Set’ • Example: # ls file && { > echo "My file exists" > ls -l file > echo "This is good news" > } file My file exists -rw-r--r-- 1 shaycohen staff 4 Jul 22 13:00 file This is good news
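The same command set can be written on a single line, which is where the syntax rules for `{ }` matter most. This is a sketch in a scratch directory; the messages mirror the slide's example.

```shell
#!/bin/bash
cd "$(mktemp -d)" || exit 1
touch file

# The same command set on one line: "{" needs a space after it, and every
# command inside (including the last) must end with ";" before "}".
ls file > /dev/null && { echo "My file exists"; ls -l file; echo "This is good news"; }

# Without braces, only the first command after && depends on the condition:
ls nosuchfile 2> /dev/null && echo "not printed"; echo "always printed"
```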

- 22. Shell Scripts - Conditions • Another way of using conditions is the ‘if’ command: if expression then command else command fi • Example: # if ls nosuchfile > then > echo "My file exists" > else > echo "My file does not exist" > fi ls: nosuchfile: No such file or directory My file does not exist
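Any command can serve as the `if` expression, because `if` only looks at its exit status. The sketch below uses `grep -q` (silent search) and the slide's `ls nosuchfile` test with its error message hidden; `RESULT` is an illustrative variable name, and `then` is shown both on its own line and after `;`.

```shell
#!/bin/bash
# "if" runs a command and branches on its exit status (0 = true).
if grep -q '^root:' /etc/passwd
then
    echo "root has an entry in /etc/passwd"
else
    echo "no root entry found"
fi

# Output can be silenced while still using the exit status.
if ls nosuchfile > /dev/null 2>&1; then
    RESULT="exists"
else
    RESULT="missing"
fi
echo "nosuchfile is $RESULT"
```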

- 23. Shell Scripts - Conditions • Besides plain commands, there are two main types of expressions: – Logical Expression “[[ expression ]]”: expression can use the operators of the “test” command (for example -f, -z, =, -eq). – Arithmetic Expression “(( expression ))”: expression is an integer arithmetic expression, similar to those evaluated by the “expr” command. Use the manual pages to find out how to use the ‘expr’ and ‘test’ commands. Q: What will the following command return as output? # expr 1+1
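Both expression types in action, as a small sketch. `FILE`, `NAME` and `COUNT` are example variables, and /etc/passwd is assumed to exist (it does on standard Linux systems).

```shell
#!/bin/bash
FILE=/etc/passwd
NAME=""
COUNT=7

# [[ ]] accepts the operators of "test": -f is true for an existing regular file.
if [[ -f $FILE ]]; then
    echo "$FILE is a regular file"
fi

# -z is true for an empty string; [[ ]] also works with && and ||.
[[ -z $NAME ]] && echo "NAME is empty"

# (( )) evaluates integer arithmetic; a non-zero result counts as true.
if (( COUNT > 5 )); then
    echo "$COUNT is greater than 5"
fi

# $(( )) substitutes the result of the arithmetic as text.
echo "COUNT doubled is $(( COUNT * 2 ))"   # COUNT doubled is 14
```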

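- 24. Shell Scripts - Loops • Bash supports three main types of loops: – List loop: “for” – Conditional loops: “while” | “until” • for syntax: for VAR in “value1” “value2” … do command $VAR done • while syntax: while expression do command done (“until” uses the same form, but keeps looping while the expression is false)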
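The two conditional loops differ only in when they stop. This sketch counts from 1 to 3 with each, using an arithmetic expression as the condition; `N` is an example counter.

```shell
#!/bin/bash
# while: repeat as long as the expression is true.
N=1
while (( N <= 3 )); do
    echo "while pass $N"
    (( N++ ))
done

# until: repeat as long as the expression is false (until it becomes true).
N=1
until (( N > 3 )); do
    echo "until pass $N"
    (( N++ ))
done
```

Both loops print three lines and leave `N` at 4.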

- 25. Shell Scripts - Loops • Example: # ls dir1 file1 file2 # for FILE in file1 file2 > do > ls $FILE && echo "$FILE Exists" > done

- 26. Command Line Parsing • Before running a command, Bash parses the command and its arguments and replaces any metacharacters with their evaluated values. • Run Bash with the ‘-x’ flag (or use “set -x” inside a shell) to get detailed information about the result of parsing every command. • Example (COUNT starts unset, so $((COUNT++)) first evaluates to 0): # for FILE in $(ls) > do > echo "$((COUNT++)) - $FILE" > done 0 - dir1 1 - file1 2 - file2 • After parsing, the commands Bash actually runs are: for FILE in dir1 file1 file2, then echo “0 - dir1”, echo “1 - file1”, echo “2 - file2”
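A sketch of both ways to turn on tracing. The traced lines go to standard error and start with "+" (the default value of the PS4 prompt), showing each command after expansion. The loop values and the `NAME` variable are examples only.

```shell
#!/bin/bash
# "bash -x" runs a command (here a -c string) in a new shell and prints each
# command to stderr, prefixed with "+", after expansion and before it runs.
bash -x -c 'for FILE in dir1 file1; do echo "$((COUNT++)) - $FILE"; done'

# Inside a script or an interactive shell, "set -x" turns tracing on
# and "set +x" turns it off again.
set -x
NAME=world
echo "Hello $NAME"
set +x
```

For a whole script file, `bash -x script.sh` traces every line without editing the script.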

- 27. Exercise • Write your first shell script: hello.sh. The script should print “Hello World” to the terminal.

Editor's Notes

- #2: Discussion: The importance of the command line for communicating with a computer. - Why is it important? - Learning the language is the first step on the way to becoming a computing specialist. - Linux, Windows, Mac, cellphones: a vast market
