01_pytorch_workflow

- 1. Workflow

- 2. What we’re going to cover: a PyTorch workflow (one of many)

- 3. Where can you get help?
  - Follow along with the code
  - Try it for yourself
  - Press SHIFT + CMD + SPACE to read the docstring
  - Search for it
  - Try again
  - Ask: https://www.github.com/mrdbourke/pytorch-deep-learning/discussions
  - Motto #1: “If in doubt, run the code”

- 4. Let’s code!

- 5. Machine learning: a game of two parts
  - Inputs → Numerical encoding → Learns representation (patterns/features/weights) → Representation outputs → Outputs
  - Numerical encoding: [[116, 78, 15], [117, 43, 96], [125, 87, 23], …
  - Representation outputs: [[0.983, 0.004, 0.013], [0.110, 0.889, 0.001], [0.023, 0.027, 0.985], …
  - Outputs: Ramen, Spaghetti; Not spam; “Hey Siri, what’s the weather today?”

- 6. Part 1: Turn data into numbers. Part 2: Build model to learn patterns in numbers.
  - Inputs → Numerical encoding → Learns representation (patterns/features/weights) → Representation outputs → Outputs
  - Numerical encoding: [[116, 78, 15], [117, 43, 96], [125, 87, 23], …
  - Representation outputs: [[0.983, 0.004, 0.013], [0.110, 0.889, 0.001], [0.023, 0.027, 0.985], …
  - Outputs: Ramen, Spaghetti; Not spam; “Hey Siri, what’s the weather today?”

- 7. Three datasets (possibly the most important concept in machine learning…)
  - Course materials (training set): model learns patterns from here
  - Practice exam (validation set): tune model patterns
  - Final exam (test set): see if the model is ready for the wild
  - Generalization: the ability for a machine learning model to perform well on data it hasn’t seen before.
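
A minimal sketch of carving one dataset into the three sets. The toy straight-line data, the 60/20/20 proportions, and the unshuffled sequential split are all assumptions made for illustration; the slides do not prescribe them.

```python
import torch

# Hypothetical toy data: 50 points on the line y = 0.7 * x + 0.3
X = torch.arange(50, dtype=torch.float32).unsqueeze(dim=1) / 50  # shape [50, 1]
y = 0.7 * X + 0.3

# Assumed proportions: 60% training, 20% validation, 20% test
n = len(X)
train_end = n * 6 // 10
val_end = n * 8 // 10

# Training set ("course materials"): the model learns patterns from here
X_train, y_train = X[:train_end], y[:train_end]
# Validation set ("practice exam"): used to tune the model
X_val, y_val = X[train_end:val_end], y[train_end:val_end]
# Test set ("final exam"): held back to check generalization
X_test, y_test = X[val_end:], y[val_end:]

print(len(X_train), len(X_val), len(X_test))
```

Real datasets are usually shuffled before splitting; the sequential slice here only keeps the sketch short.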

- 8. Subclass nn.Module (this contains all the building blocks for neural networks)
  - Any subclass of nn.Module needs to override forward() (this defines the forward computation of the model)
  - Initialise model parameters to be used in various computations (these could be different layers from torch.nn, single parameters, hard-coded values or functions)
  - requires_grad=True means PyTorch will track the gradients of this specific parameter for use with torch.autograd and gradient descent (for many torch.nn modules, requires_grad=True is set by default)
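
The points above can be sketched as a small model. The class name `LinearRegressionModel`, the single weight and bias, and the seed are assumptions for illustration, not necessarily the code shown on the slide.

```python
import torch
from torch import nn


class LinearRegressionModel(nn.Module):  # hypothetical name; subclasses nn.Module
    def __init__(self):
        super().__init__()
        # Initialise parameters with random values; requires_grad=True asks
        # PyTorch to track gradients for these tensors
        self.weight = nn.Parameter(torch.randn(1), requires_grad=True)
        self.bias = nn.Parameter(torch.randn(1), requires_grad=True)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # forward() defines the computation: here, the line y = weight * x + bias
        return self.weight * x + self.bias


torch.manual_seed(42)  # arbitrary seed so the random start is repeatable
model = LinearRegressionModel()
params = list(model.parameters())
output = model(torch.ones(3, 1))
print(params)
```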

- 9. PyTorch essential neural network building modules

  | PyTorch module | What does it do? |
  | --- | --- |
  | torch.nn | Contains all of the building blocks for computational graphs (essentially a series of computations executed in a particular way). |
  | torch.nn.Module | The base class for all neural network modules; all the building blocks for neural networks are subclasses of it. If you're building a neural network in PyTorch, your models should subclass nn.Module. Requires a forward() method to be implemented. |
  | torch.optim | Contains various optimization algorithms (these tell the model parameters stored in nn.Parameter how to best change to improve gradient descent and in turn reduce the loss). |
  | torch.utils.data.Dataset | Represents a map between key (label) and sample (features) pairs of your data, such as images and their associated labels. |
  | torch.utils.data.DataLoader | Creates a Python iterable over a torch Dataset (allows you to iterate over your data). |

  See more: https://pytorch.org/tutorials/beginner/ptcheat.html
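
To make the Dataset and DataLoader entries concrete, here is a small sketch using `TensorDataset`, a ready-made Dataset for in-memory tensors. The toy data and the batch size of 4 are assumptions for illustration.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical toy data: 10 samples (features) with matching labels
X = torch.arange(10, dtype=torch.float32).unsqueeze(dim=1)
y = 2 * X + 1

# Dataset: maps an index to a (sample, label) pair
dataset = TensorDataset(X, y)

# DataLoader: a Python iterable over the Dataset, here in batches of 4
loader = DataLoader(dataset, batch_size=4, shuffle=False)

batch_shapes = [(xb.shape, yb.shape) for xb, yb in loader]
print(batch_shapes)
```

Because 10 is not divisible by 4, the last batch is smaller than the others; `drop_last=True` would discard it instead.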

- 10. torch.optim, torch.nn, torch.nn.Module, torchvision.models, torchmetrics, torch.utils.data.Dataset, torch.utils.data.DataLoader, torchvision.transforms, torch.utils.tensorboard. See more: https://pytorch.org/tutorials/beginner/ptcheat.html

- 11. Mean absolute error (MAE)
  - Difference (y_pred[0] - y_test[0]) = 0.4618
  - Mean absolute error (MAE) = repeat for all and take the mean
  - MAE_loss = torch.mean(torch.abs(y_pred - y_test)) or MAE_loss = torch.nn.L1Loss()(y_pred, y_test)
  - See more: https://pytorch.org/tutorials/beginner/ptcheat.html#loss-functions
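
A short sketch showing that the manual formula and `nn.L1Loss` agree. The prediction and target values are made up for illustration and are not the ones behind the 0.4618 figure on the slide.

```python
import torch
from torch import nn

# Hypothetical predictions and targets
y_pred = torch.tensor([0.5, 1.0, 2.0])
y_test = torch.tensor([1.0, 1.0, 1.0])

# Manual MAE: absolute difference per element, then the mean
mae_manual = torch.mean(torch.abs(y_pred - y_test))

# Built-in MAE: nn.L1Loss must be instantiated, then called on the tensors
loss_fn = nn.L1Loss()
mae_builtin = loss_fn(y_pred, y_test)

print(mae_manual.item(), mae_builtin.item())
```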

- 12. Source: @mrdbourke Twitter & see the video version on YouTube.

- 13. PyTorch training loop
  - Loop over the data for a number of epochs (e.g. 100 for 100 passes of the data)
  - Zero the optimizer gradients (they accumulate by default, so zero them to start fresh on each pass)
  - Pass the data through the model; this will perform the forward() method located within the model object
  - Calculate the loss value (how wrong the model’s predictions are)
  - Perform backpropagation on the loss (compute the gradient of every parameter with requires_grad=True)
  - Step the optimizer to update the model’s parameters with respect to the gradients calculated by loss.backward()
  - Note: all of this can be turned into a function
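
The training steps can be sketched as follows. The toy data, the `nn.Linear` stand-in model, the SGD optimizer, the learning rate of 0.01 and the seed are assumptions for illustration, not necessarily what the slide's code uses.

```python
import torch
from torch import nn

torch.manual_seed(42)  # arbitrary seed for a repeatable random start

# Hypothetical training data on the line y = 0.7 * x + 0.3
X_train = torch.arange(40, dtype=torch.float32).unsqueeze(dim=1) / 50
y_train = 0.7 * X_train + 0.3

model = nn.Linear(in_features=1, out_features=1)  # stand-in model
loss_fn = nn.L1Loss()                             # mean absolute error
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

epochs = 100
losses = []
for epoch in range(epochs):
    model.train()                    # put the model in training mode
    optimizer.zero_grad()            # clear gradients left from the last pass
    y_pred = model(X_train)          # forward pass (calls forward())
    loss = loss_fn(y_pred, y_train)  # how wrong are the predictions?
    loss.backward()                  # backpropagation: compute gradients
    optimizer.step()                 # update parameters using the gradients
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f} | last loss: {losses[-1]:.4f}")
```

Calling `optimizer.zero_grad()` anywhere before `loss.backward()` has the same effect; placing it first simply follows the order of the list.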

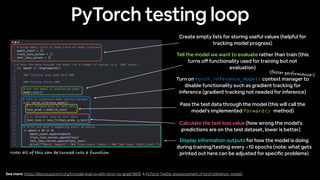

- 14. PyTorch testing loop
  - Create empty lists for storing useful values (helpful for tracking model progress)
  - Tell the model we want to evaluate rather than train (this turns off functionality used for training but not evaluation)
  - Turn on the torch.inference_mode() context manager to disable functionality such as gradient tracking (gradient tracking is not needed for inference)
  - Pass the test data through the model (this will call the model’s implemented forward() method)
  - Calculate the test loss value (how wrong the model’s predictions are on the test dataset, lower is better)
  - Display information outputs for how the model is doing during training/testing every ~10 epochs (note: what gets printed out here can be adjusted for specific problems)
  - See more: https://discuss.pytorch.org/t/model-eval-vs-with-torch-no-grad/19615 & PyTorch Twitter announcement of torch.inference_mode() (faster performance!)
  - Note: all of this can be turned into a function
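
A minimal sketch of a single evaluation pass. The untrained `nn.Linear` stand-in model and the toy test data are assumptions for illustration; in practice this block would sit inside the epoch loop after the training step.

```python
import torch
from torch import nn

torch.manual_seed(42)  # arbitrary seed for repeatability

# Hypothetical test data on the line y = 0.7 * x + 0.3
X_test = torch.arange(40, 50, dtype=torch.float32).unsqueeze(dim=1) / 50
y_test = 0.7 * X_test + 0.3

model = nn.Linear(in_features=1, out_features=1)  # untrained stand-in model
loss_fn = nn.L1Loss()
test_loss_values = []  # list for tracking progress across evaluations

model.eval()                   # evaluation mode (affects layers such as dropout)
with torch.inference_mode():   # disable gradient tracking for inference
    test_pred = model(X_test)  # forward pass on the test data
    test_loss = loss_fn(test_pred, y_test)

test_loss_values.append(test_loss.item())
print(f"Test loss: {test_loss.item():.4f}")
```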

- 16. Linear regression model with nn.Parameter; linear regression model with nn.Linear
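
For comparison with hand-written `nn.Parameter` weights, here is a sketch of the `nn.Linear` variant. The class name `LinearRegressionModelV2` and the attribute name `linear_layer` are hypothetical.

```python
import torch
from torch import nn


class LinearRegressionModelV2(nn.Module):  # hypothetical name
    def __init__(self):
        super().__init__()
        # nn.Linear creates and initialises the weight and bias itself
        self.linear_layer = nn.Linear(in_features=1, out_features=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.linear_layer(x)


model = LinearRegressionModelV2()
state = model.state_dict()       # parameter names mapped to their tensors
output = model(torch.ones(5, 1))
print(list(state.keys()))
```

Both variants learn one weight and one bias; `nn.Linear` just removes the need to declare them by hand.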

- 17. Supervised learning (overview)
  - Inputs → Numerical encoding → Learns representation (patterns/features/weights) → Representation outputs → Outputs
  - Numerical encoding: [[116, 78, 15], [117, 43, 96], [125, 87, 23], …
  - Representation outputs: [[0.983, 0.004, 0.013], [0.110, 0.889, 0.001], [0.023, 0.027, 0.985], …
  - Outputs: Ramen, Spaghetti
  - Further values shown on the slide: [[0.092, 0.210, 0.415], [0.778, 0.929, 0.030], [0.019, 0.182, 0.555], …
  - Steps:
    1. Initialise with random weights (only at beginning)
    2. Show examples
    3. Update representation outputs
    4. Repeat with more examples

- 18. Neural Networks
  - Inputs → Numerical encoding (before data gets used with an algorithm, it needs to be turned into numbers) → Learns representation (patterns/features/weights; choose the appropriate neural network for your problem) → Representation outputs → Outputs (a human can understand these)
  - Outputs: Ramen, Spaghetti; Not spam; “Hey Siri, what’s the weather today?”
  - Numerical encoding: [[116, 78, 15], [117, 43, 96], [125, 87, 23], …
  - Representation outputs: [[0.983, 0.004, 0.013], [0.110, 0.889, 0.001], [0.023, 0.027, 0.985], …
  - These are tensors!
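
As a small illustration of "These are tensors!", the visible rows from the slide can be written as `torch.Tensor` objects. Treating each row of the representation outputs as per-class scores, and picking the largest one, is an assumption made for this sketch.

```python
import torch

# Numerical encoding (the visible rows from the slide)
encoded = torch.tensor([[116, 78, 15],
                        [117, 43, 96],
                        [125, 87, 23]])

# Representation outputs (the visible rows from the slide)
outputs = torch.tensor([[0.983, 0.004, 0.013],
                        [0.110, 0.889, 0.001],
                        [0.023, 0.027, 0.985]])

# Assumption: each row holds one score per class, so the index of the
# largest value is the predicted class for that row
predicted = outputs.argmax(dim=1)

print(encoded.shape, encoded.dtype)
print(outputs.shape, outputs.dtype)
print(predicted)
```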

- 19. Inputs → Numerical encoding → Learns representation (patterns/features/weights) → Representation outputs → Outputs
  - Outputs: Ramen, Spaghetti
  - Numerical encoding: [[116, 78, 15], [117, 43, 96], [125, 87, 23], …
  - Representation outputs: [[0.983, 0.004, 0.013], [0.110, 0.889, 0.001], [0.023, 0.027, 0.985], …
  - These are tensors!

- 20. How to approach this course 1. Code along Motto #1: if in doubt, run the code! 2. Explore and experiment Motto #2: Experiment, experiment, experiment! 3. Visualize what you don’t understand Motto #3: Visualize, visualize, visualize! 4. Ask questions 🛠 5. Do the exercises 🤗 6. Share your work (including the “dumb” ones)

- 21. How not to approach this course Avoid: 🧠 🔥 🔥 🔥 “I can’t learn ______”

- 22. Resources
  - This course
    - Course materials: https://www.github.com/mrdbourke/pytorch-deep-learning
    - Course Q&A: https://www.github.com/mrdbourke/pytorch-deep-learning/discussions
    - Course online book: https://learnpytorch.io
  - All things PyTorch
    - PyTorch website & forums

- 23. Difference (y_pred[0] - y_test[0]) = 0.4618

![Slide 5: Machine learning: a game of two parts](https://image.slidesharecdn.com/01pytorchworkflow-250118055025-8644afb2/85/01_pytorch_workflow-jutedssd-huge-hhgggdf-5-320.jpg)

![Slide 6: Part 1: Turn data into numbers. Part 2: Build model to learn patterns in numbers](https://image.slidesharecdn.com/01pytorchworkflow-250118055025-8644afb2/85/01_pytorch_workflow-jutedssd-huge-hhgggdf-6-320.jpg)

![Slide 11: Mean absolute error (MAE)](https://image.slidesharecdn.com/01pytorchworkflow-250118055025-8644afb2/85/01_pytorch_workflow-jutedssd-huge-hhgggdf-11-320.jpg)

![Slide 17: Supervised learning (overview)](https://image.slidesharecdn.com/01pytorchworkflow-250118055025-8644afb2/85/01_pytorch_workflow-jutedssd-huge-hhgggdf-17-320.jpg)

![Slide 18: Neural Networks](https://image.slidesharecdn.com/01pytorchworkflow-250118055025-8644afb2/85/01_pytorch_workflow-jutedssd-huge-hhgggdf-18-320.jpg)

![Slide 19: These are tensors!](https://image.slidesharecdn.com/01pytorchworkflow-250118055025-8644afb2/85/01_pytorch_workflow-jutedssd-huge-hhgggdf-19-320.jpg)

![Slide 23: Difference (y_pred[0] - y_test[0]) = 0.4618](https://image.slidesharecdn.com/01pytorchworkflow-250118055025-8644afb2/85/01_pytorch_workflow-jutedssd-huge-hhgggdf-23-320.jpg)