10 basic terms so you can talk to data engineer


This document defines 16 basic terms related to data engineering:

1. Apache Airflow is an open-source workflow management platform that uses directed acyclic graphs to manage workflow orchestration.

2. Batch processing involves processing large amounts of data at once, such as in ETL steps or bulk operations on digital images.

3. Cold data storage stores old, hardly used data on low-power servers, making retrieval slower.

4. A cluster groups several computers together to perform a single task.
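To make the "directed acyclic graph" idea concrete, here is a minimal sketch in plain Python. It does not use Airflow's own API; it only shows how a set of task dependencies determines a valid run order, using the standard-library `graphlib` module (Python 3.9+). The task names are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical task names; each key maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}

def run_order(deps):
    """Return one valid execution order for the tasks in a DAG."""
    # static_order() raises graphlib.CycleError if the graph has a cycle,
    # which is why a workflow graph must be acyclic.
    return list(TopologicalSorter(deps).static_order())

order = run_order(dependencies)
print(order)
```

A workflow orchestrator adds scheduling, retries, and monitoring on top of this ordering step, but the dependency graph is the core idea.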


Recommended

Hadoop Maharajathi,II-M.sc.,Computer Science,Bonsecours college for women

By maharajothip1. This document provides an overview of Hadoop, an open-source software framework for distributed storage and processing of large datasets across commodity hardware. It discusses Hadoop's history and goals, describes its core architectural components including HDFS, MapReduce and their roles, and gives examples of how Hadoop is used at large companies to handle big data.

Basic Hadoop Architecture V1 vs V2

By VIVEKVANAVAN. In this presentation I explain the basic differences between Hadoop architecture 1 and Hadoop architecture 2. I took reference material from the website for the preparation.

Improve Presto Architectural Decisions with Shadow Cache

By Alluxio, Inc. Alluxio Day VI

October 12, 2021

https://www.alluxio.io/alluxio-day/

Speakers:

Ke Wang, Facebook

Zhenyu Song, Princeton University

Improving Presto performance with Alluxio at TikTok

By Alluxio, Inc. This document discusses improving the performance of Presto queries on Hive data stored in HDFS by leveraging Alluxio caching. It describes how TikTok integrated Presto with Alluxio to cache the most frequently accessed data partitions, reducing the median query latency by 41.2% and average latency by over 20% for cache hits. Custom caching strategies were developed to identify and prioritize caching the partitions consuming the most IO to maximize resource utilization and minimize cache space requirements.

Hedvig & ClusterHQ - Persistent, portable storage for Docker

By Eric Carter. Hedvig (http://www.hedviginc.com) and Flocker for persistent, portable storage for Docker containers and microservices. Presentation given around VMworld 2015.

Hybrid collaborative tiered storage with alluxio

By Thai Bui. This document discusses using Alluxio and ZFS together to provide a hybrid collaborative tiered storage solution with Amazon S3. Alluxio acts as a distributed data storage layer that can mount S3 and HDFS, providing data locality. ZFS works at the kernel level to accelerate read/write speeds by caching data in RAM and automatically promoting and demoting blocks between storage tiers like RAM, SSD, and S3. Benchmark results show the combination of ZFS and NVMe SSDs provides up to 10x faster read speeds and 4x faster write speeds compared to using just Amazon EBS, and up to 15x faster performance than directly accessing data from S3. This hybrid approach provides improved performance for analytic queries in

Hadoop introduction

By Rabindra Nath Nandi. Hadoop is an open-source software platform for distributed storage and processing of large datasets across clusters of computers. It was designed to scale up from single servers to thousands of machines, with very high fault tolerance. The document outlines the history of Hadoop, why it was created, its core components HDFS for storage and MapReduce for processing, and provides an example word count problem. It also includes information on installing Hadoop and additional resources.

Lodstats: The Data Web Census Dataset. Kobe, Japan, 2016

By Ivan Ermilov. Lodstats: The Data Web Census Dataset, a presentation for the ISWC conference in Kobe, Japan. Presented on 20 October 2016.

The Path to Migrating off MapR

By Alluxio, Inc. Alluxio Tech Talk

Dec 2019

Speaker:

Madan Kumar, Alluxio

If you’re a MapR user, you might have concerns with your existing data stack. Whether it’s the complexity of Hadoop, financial instability and no future MapR product roadmap, or no flexibility when it comes to co-locating storage and compute, MapR may no longer be working for you.

Alluxio can help you migrate to a modern, disaggregated data stack using any object store, with performance similar to Hadoop's plus significant cost savings.

Join us for this tech talk where we’ll discuss how to separate your compute and storage on-prem and architect a new data stack that makes your object store the core. We’ll show you how to offload your MapR/HDFS compute to any object store and how to run all of your existing jobs as-is on Alluxio + object store.

Apache cassandra

By Adnan Siddiqi. This document provides an overview of Apache Cassandra including its history, architecture, data modeling concepts, and how to install and use it with Python. Key points include that Cassandra is a distributed, scalable NoSQL database designed without single points of failure. It discusses Cassandra's architecture including nodes, datacenters, clusters, commit logs, memtables, and SSTables. Data modeling concepts explained are keyspaces, column families, and designing for even data distribution and minimizing reads. The document also provides examples of creating a keyspace, reading data using the Python driver, and demoing data clustering.

Tachyon: An Open Source Memory-Centric Distributed Storage System

By Tachyon Nexus, Inc. Tachyon talk at Strata and Hadoop World 2015 at New York City, given by Haoyuan Li, Founder & CEO of Tachyon Nexus. If you are interested, please do not hesitate to contact us at [email protected] . You are welcome to visit our website ( www.tachyonnexus.com ) as well.

Big data and hadoop

By Roushan Sinha. Big data and Hadoop are frameworks for processing and storing large datasets. Hadoop uses HDFS for distributed storage and MapReduce for distributed processing. HDFS stores large files across multiple machines for redundancy and parallel access. MapReduce divides jobs into map and reduce tasks that run in parallel across a cluster. Hadoop provides scalable and fault-tolerant solutions to problems like processing terabytes of data from jet engines or scaling to Google's data processing needs.

Data Center Operating System

By Keshav Yadav. The document discusses the need for an operating system for datacenters to manage resources and provide shared services across applications and users in the datacenter. It describes how today's datacenter OS, like Hadoop MapReduce, provides some resource sharing and data sharing capabilities but lacks advanced features. The document envisions a future datacenter OS that enables more efficient resource sharing, standardized interfaces for data sharing, useful programming abstractions, and improved debugging tools to better manage and program large datacenters.

Features of Hadoop

By Dr. C.V. Suresh Babu. This presentation discusses the following features of Hadoop:

Open source

Fault Tolerance

Distributed Processing

Scalability

Reliability

High Availability

Economic

Flexibility

Easy to use

Data locality

Conclusion

HADOOP

By Harinder Kaur. Hadoop is an open-source framework that allows for the distributed processing of large data sets across clusters of computers. It addresses problems like massive data storage needs and scalable processing of large datasets. Hadoop uses the Hadoop Distributed File System (HDFS) for storage and MapReduce as its processing engine. HDFS stores data reliably across commodity hardware and MapReduce provides a programming model for distributed computing of large datasets.

GlusterFS Presentation FOSSCOMM2013 HUA, Athens, GR

By Theophanis Kontogiannis. This document discusses using GlusterFS, an open source distributed file system, to store large amounts of non-structured data in a scalable way using commodity hardware. GlusterFS can scale to thousands of petabytes by clustering storage components over a network and managing data in a single global namespace. It uses a stackable translator architecture and self-healing capabilities to deliver high performance and reliability for diverse workloads. Red Hat acquired Gluster Inc. in 2011 and continues to develop and support GlusterFS as part of their storage product offerings.

RaptorX: Building a 10X Faster Presto with hierarchical cache

By Alluxio, Inc. RaptorX is a new product from Facebook that provides a 10x performance improvement over Presto for querying large datasets stored in remote object storage. It achieves this through an intelligent hierarchical caching system that caches metadata, file lists, file descriptors, data fragments, and query results at various points in the query processing pipeline. This caching approach significantly reduces the latency of queries by minimizing the number of remote storage requests. RaptorX has been deployed at Facebook on over 10,000 servers to power interactive analytics workloads querying over 1 exabyte of data stored in remote object storage.

ETL DW-RealTime

By Adriano Patrick Cunha. Presentation on the methods applied in the ETL process, with a deeper look at the CDC methods used in ETL for real-time data warehouses.

Introducing gluster filesystem by aditya

By Aditya Chhikara. GlusterFS is an open-source clustered file system that aggregates disk resources from multiple servers into a single global namespace. It scales to several petabytes and thousands of clients. GlusterFS clusters storage over RDMA or TCP/IP, managing data through a unified namespace. Its stackable userspace design delivers high performance for diverse workloads.

Blue Pill / Red Pill : The Matrix of thousands of data streams - Himanshu Gup...

By Tech Triveni. Designing a streaming application that has to process data from 1 or 2 streams is easy. Any streaming framework that provides scalability, high throughput, and fault tolerance would work. But when the number of streams grows into the 100s or 1000s, managing them can be daunting. How would you share resources among 1000s of streams with all of them running 24x7? How would you manage their state, apply advanced streaming operations, and add or delete streams without restarting? This talk explains common scenarios and shows techniques that can handle thousands of streams using Spark Structured Streaming.

Pros and Cons of Erasure Coding & Replication vs. RAID in Next-Gen Storage

By Eric Carter. Session slides on how to protect data with modern, software-defined storage (like Hedvig). Given at SNIA developer conference 2015.

Optimizing Latency-Sensitive Queries for Presto at Facebook: A Collaboration ...

By Alluxio, Inc. Alluxio Global Online Meetup

May 7, 2020

For more Alluxio events: https://www.alluxio.io/events/

Speakers:

Rohit Jain, Facebook

Yutian "James" Sun, Facebook

Bin Fan, Alluxio

For many latency-sensitive SQL workloads, Presto is often bound by retrieving distant data. In this talk, Rohit Jain and James Sun from Facebook and Bin Fan from Alluxio will introduce their teams’ collaboration on adding a local on-SSD Alluxio cache inside Presto workers to improve unsatisfactory Presto latency.

This talk will focus on:

- Insights into the Presto workloads at Facebook w.r.t. cache effectiveness

- API and internals of the Alluxio local cache, from design trade-offs (e.g. caching granularity, concurrency level, etc.) to performance optimizations.

- Initial performance analysis and timeline to deliver this feature for general Presto users.

- Discussion on our future work to optimize cache performance with deeper integration with Presto

Cassandra no sql ecosystem

By Sandeep Sharma IIMK Smart City,IoT,Bigdata,Cloud,BI,DW. The document discusses Cassandra's data model and how it replaces HDFS services. It describes:

1) Two column families - "inode" and "sblocks" - that replace the HDFS NameNode and DataNode services respectively, with "inode" storing metadata and "sblocks" storing file blocks.

2) CFS reads involve reading the "inode" info to find the block and subblock, then directly accessing the data from the Cassandra SSTable file on the node where it is stored.

3) Keyspaces are containers for column families in Cassandra, and the NetworkTopologyStrategy places replicas across data centers to enable local reads and survive failures.

What's New in Alluxio 2.3

By Alluxio, Inc. Alluxio Community Office Hour

July 14, 2020

For more Alluxio events: https://www.alluxio.io/events/

Speakers:

Calvin Jia, Alluxio

Bin Fan, Alluxio

Alluxio 2.3 was just released at the end of June 2020. Calvin and Bin will go over the new features and integrations available and share learnings from the community. Any questions about the release and on-going community feature development are welcome.

In this Office Hour, we will go over:

- Glue Under Database integration

- Under Filesystem mount wizard

- Tiered Storage Enhancements

- Concurrent Metadata Sync

- Delegated Journal Backups

Introducing to Datamining vs. OLAP - مقدمه و مقایسه ای بر داده کاوی و تحلیل ...

By y-asgari. This file is an introduction to identifying and comparing data mining and online analysis (OLAP). By identifying the similarities and correspondences between these two tools, it addresses the complementary relationship between the two disciplines and techniques.

Big data

By Alisha Roy. This presentation is meant to help students learn about big data, Hadoop, HDFS, MapReduce, architecture of HDFS,

Using Alluxio as a Fault-tolerant Pluggable Optimization Component of JD.com'...

By Alluxio, Inc. JD.com is China's largest retailer that uses Alluxio as a fault-tolerant optimization component in its computation frameworks. Alluxio improves JDPresto performance by 10x on 100+ nodes by enabling data caching and reducing remote reads. Ongoing exploration includes running Alluxio on YARN for resource management, using Alluxio as a shuffle service to address disk I/O bottlenecks, and separating computing and storage across clusters for further optimization. JD has also contributed various features and fixes to Alluxio, including a new WebUI, eviction strategies, JVM monitoring, shell commands, and tests.

Shaping the Role of a Data Lake in a Modern Data Fabric Architecture

By Denodo.

Data lakes have been both praised and loathed. They can be incredibly useful to an organization, but they can also be the source of major headaches. The ease of scaling their storage at minimal cost has opened the door to many new solutions, but also to a proliferation of runaway objects that gave rise to the term data swamp.

However, the addition of an MPP engine, based on Presto, to Denodo’s logical layer can change the way you think about the role of the data lake in your overall data strategy.

Watch this session on demand to learn:

- The new MPP capabilities that Denodo includes

- How to use them to your advantage to improve security and governance of your lake

- New scenarios and solutions where your data fabric strategy can evolve

Transforming Data Architecture Complexity at Sears - StampedeCon 2013

By StampedeCon. At the StampedeCon 2013 Big Data conference in St. Louis, Justin Sheppard discussed Transforming Data Architecture Complexity at Sears. High ETL complexity and costs, data latency and redundancy, and batch window limits are just some of the IT challenges caused by traditional data warehouses. Gain an understanding of big data tools through the use cases and technology that enables Sears to solve the problems of the traditional enterprise data warehouse approach. Learn how Sears uses Hadoop as a data hub to minimize data architecture complexity – resulting in a reduction of time to insight by 30-70% – and discover “quick wins” such as mainframe MIPS reduction.

An Introduction To Oracle Database

By Meysam Javadi. This document provides an overview of Oracle database history, architecture, components, and terminology. It discusses:

- Oracle's release history from 1978 to present.

- The physical and logical structures that make up an Oracle database, including data files, control files, redo logs, tablespaces, segments, and blocks.

- The Oracle instance and its memory components like the SGA and PGA. It describes the various background processes.

- How clients connect to Oracle using the listener, tnsnames.ora file, and naming resolution.

- Common Oracle tools for accessing and managing databases like SQL*Plus, SQL Developer, and views for monitoring databases.


Similar to 10 basic terms so you can talk to data engineer (20)


1. Briefly describe the major components of a data warehouse archi.docx

By monicafrancis71118.

1. Briefly describe the major components of a data warehouse architecture.

Components in data warehouse

A data warehouse contains a collection of data used for decision making and business intelligence.

· Its data is subject-oriented, integrated, time-variant, and non-updateable.

· The three components in the architecture of the data warehouse are:

· Operational data

· Reconciled data

· Derived data

Diagrammatic representation of architecture of data warehouse is shown below:

Components in the data warehouse architecture:

Operational data:

· It maintains the data from the operational system throughout the organization.

Reconciled data

· It is a data stored in the enterprise data warehouse and an operational data store.

· it contains a current and detailed data and authoritative sources for decision support application.

Derived data

· Derives data is a data obtained from the data mart that is used for the end user decision support application.

· It contains the selected, formatted, and aggregated data.

· It is the data stored in every mart.

Types of metadata in the data warehouse architecture:

There are three types of metadata. They are,

· Operational metadata.

· Enterprises data warehouse (EDW)metadata.

· Data mart metadata.

Operational metadata:

It describes the data in the operational system that provides for the enterprise data warehouse.

It is available in various formats, but the quality is poor.

Enterprises data warehouse (EDW)metadata:

It describes the data of reconciled layer.

It provides the rules for converting the operational data into reconciled data.

It extracts from the enterprise data model.

Data mart metadata:

It describes the data of derived data layer.

It provides the rules for converting the reconciled data into derived data.

2. Explain how the volatility of a data warehouse is different from the volatility of a database for an operational information system?

Data warehouse

· Data warehouse contains the collection of data that are used for decision making and used business intelligence.

· It is a unique kind of database, so it focuses on business intelligence, time variant data, and external data.

· The term data warehouse usually denotes to the grouping of many different database across an entire enterprise.

· It is a subject-oriented, integrated, time- variant, and non-updateable data.

Operational database:

An operational database is the database which is usually accessed and restructured on a regular basis and generally handles the daily transactions for a business.

It is used to manage the dynamic data and modification in the real-time data.

Volatility of a data warehouse and operational database:

A key dissimilarity between a data warehouse and an operational system is the data stored type.

Data warehouse is based on the use of periodic data operational system is based on the use of the transient data.

A change in the existing record present in the stores that overwrites the previous reco.

Oracle 11gR2 plain servers vs Exadata - 2013

Oracle 11gR2 plain servers vs Exadata - 2013Connor McDonald This document discusses Oracle's Exadata engineered systems and how they provide several advantages over traditional database systems. Exadata systems are optimized end-to-end by Oracle engineers to improve performance, simplify administration, and reduce costs. Key benefits include orders of magnitude faster data transfer speeds, higher database throughput, automated storage management, database-level security and compression, and the ability to run mixed workloads simultaneously on a single cloud platform.

Accelerate Spark Workloads on S3

Accelerate Spark Workloads on S3Alluxio, Inc. This document discusses accelerating Spark workloads on Amazon S3 using Alluxio. It describes the challenges of running Spark interactively on S3 due to its eventual consistency and expensive metadata operations. Alluxio provides a data caching layer that offers strong consistency, faster performance, and API compatibility with HDFS and S3. It also allows data outside of S3 to be analyzed. The document demonstrates how to bootstrap Alluxio on an AWS EMR cluster to accelerate Spark workloads running on S3.

Unit 6 - Compression and Serialization in Hadoop.pptx

Unit 6 - Compression and Serialization in Hadoop.pptxmuhweziart Unit 6 - Compression and Serialization in Hadoop.

How the Development Bank of Singapore solves on-prem compute capacity challen...

How the Development Bank of Singapore solves on-prem compute capacity challen...Alluxio, Inc. The Development Bank of Singapore (DBS) has evolved its data platforms over three generations to address big data challenges and the explosion of data. It now uses a hybrid cloud model with Alluxio to provide a unified namespace across on-prem and cloud storage for analytics workloads. Alluxio enables "zero-copy" cloud bursting by caching hot data and orchestrating analytics jobs between on-prem and cloud resources like AWS EMR and Google Dataproc. This provides dynamic scaling of compute capacity while retaining data locality. Alluxio also offers intelligent data tiering and policy-driven data migration to cloud storage over time for cost efficiency and management.

Speed Up Your Queries with Hive LLAP Engine on Hadoop or in the Cloud

Speed Up Your Queries with Hive LLAP Engine on Hadoop or in the Cloudgluent. Hive was the first popular SQL layer built on Hadoop and has long been known as a heavyweight SQL engine suitable mainly for long-running batch jobs. This has greatly changed since Hive was announced to the world over 8 years ago. Hortonworks and the open source community have evolved Apache Hive into a fast, dynamic SQL on Hadoop engine capable of running highly concurrent query workloads over large datasets with sub-second response time.

The latest Hortonworks and Azure HDInsight platform versions fully support Hive with LLAP execution engine for production use. In this webinar, we will go through the architecture of Hive + LLAP engine and explain how it differs from previous Hive versions. We will then dive deeper and show how features like query vectorization and LLAP columnar caching bring further automatic performance improvements.

In the end, we will show how Gluent brings these new performance benefits to traditional enterprise database platforms via transparent data virtualization, allowing even your largest databases to benefit from all this without changing any application code. Join this webinar to learn about significant improvements in modern Hive architecture and how Gluent and Hive LLAP on Hortonworks or Azure HDInsight platforms can accelerate cloud migrations and greatly improve hybrid query performance!

A Reference Architecture for ETL 2.0

A Reference Architecture for ETL 2.0 DataWorks Summit More and more organizations are moving their ETL workloads to a Hadoop based ELT grid architecture. Hadoop`s inherit capabilities, especially it`s ability to do late binding addresses some of the key challenges with traditional ETL platforms. In this presentation, attendees will learn the key factors, considerations and lessons around ETL for Hadoop. Areas such as pros and cons for different extract and load strategies, best ways to batch data, buffering and compression considerations, leveraging HCatalog, data transformation, integration with existing data transformations, advantages of different ways of exchanging data and leveraging Hadoop as a data integration layer. This is an extremely popular presentation around ETL and Hadoop.

getFamiliarWithHadoop

getFamiliarWithHadoopAmirReza Mohammadi This document provides an introduction to big data and Hadoop. It discusses how the volume of data being generated is growing rapidly and exceeding the capabilities of traditional databases. Hadoop is presented as a solution for distributed storage and processing of large datasets across clusters of commodity hardware. Key aspects of Hadoop covered include MapReduce for parallel processing, the Hadoop Distributed File System (HDFS) for reliable storage, and how data is replicated across nodes for fault tolerance.

Building the Data Lake with Azure Data Factory and Data Lake Analytics

Building the Data Lake with Azure Data Factory and Data Lake AnalyticsKhalid Salama In essence, a data lake is commodity distributed file system that acts as a repository to hold raw data file extracts of all the enterprise source systems, so that it can serve the data management and analytics needs of the business. A data lake system provides means to ingest data, perform scalable big data processing, and serve information, in addition to manage, monitor and secure the it environment. In these slide, we discuss building data lakes using Azure Data Factory and Data Lake Analytics. We delve into the architecture if the data lake and explore its various components. We also describe the various data ingestion scenarios and considerations. We introduce the Azure Data Lake Store, then we discuss how to build Azure Data Factory pipeline to ingest the data lake. After that, we move into big data processing using Data Lake Analytics, and we delve into U-SQL.

The Marriage of the Data Lake and the Data Warehouse and Why You Need Both

The Marriage of the Data Lake and the Data Warehouse and Why You Need BothAdaryl "Bob" Wakefield, MBA In the past few years, the term "data lake" has leaked into our lexicon. But what exactly IS a data lake? Some IT managers confuse data lakes with data warehouses. Some people think data lakes replace data warehouses. Both of these conclusions are false. Their is room in your data architecture for both data lakes and data warehouses. They both have different use cases and those use cases can be complementary.

Todd Reichmuth, Solutions Engineer with Snowflake Computing, has spent the past 18 years in the world of Data Warehousing and Big Data. He spent that time at Netezza and then later at IBM Data. Earlier in 2018 making the jump to the cloud at Snowflake Computing.

Mike Myer, Sales Director with Snowflake Computing, has spent the past 6 years in the world of Security and looking to drive awareness to better Data Warehousing and Big Data solutions available! Was previously at local tech companies FireMon and Lockpath and decided to join Snowflake due to the disruptive technology that's truly helping folks in the Big Data world on a day to day basis.

Hadoop seminar

Hadoop seminarKrishnenduKrishh Hadoop is an open-source framework for distributed storage and processing of large datasets across clusters of commodity hardware. It addresses problems posed by large and complex datasets that cannot be processed by traditional systems. Hadoop uses HDFS for storage and MapReduce for distributed processing of data in parallel. Hadoop clusters can scale to thousands of nodes and petabytes of data, providing low-cost and fault-tolerant solutions for big data problems faced by internet companies and other large organizations.

Hadoop project design and a usecase

Hadoop project design and a usecasesudhakara st Fundamentals of Big Data, Hadoop project design and case study or Use case

General planning consideration and most necessaries in Hadoop ecosystem and Hadoop projects

This will provide the basis for choosing the right Hadoop implementation, Hadoop technologies integration, adoption and creating an infrastructure.

Building applications using Apache Hadoop with a use-case of WI-FI log analysis has real life example.

Spark Driven Big Data Analytics

Spark Driven Big Data Analyticsinoshg This document provides an overview of Spark driven big data analytics. It begins by defining big data and its characteristics. It then discusses the challenges of traditional analytics on big data and how Apache Spark addresses these challenges. Spark improves on MapReduce by allowing distributed datasets to be kept in memory across clusters. This enables faster iterative and interactive processing. The document outlines Spark's architecture including its core components like RDDs, transformations, actions and DAG execution model. It provides examples of writing Spark applications in Java and Java 8 to perform common analytics tasks like word count.

Delivering rapid-fire Analytics with Snowflake and Tableau

Delivering rapid-fire Analytics with Snowflake and TableauHarald Erb Until recently, advancements in data warehousing and analytics were largely incremental. Small innovations in database design would herald a new data warehouse every

2-3 years, which would quickly become overwhelmed with rapidly increasing data volumes. Knowledge workers struggled to access those databases with development intensive BI tools designed for reporting, rather than exploration and sharing. Both databases and BI tools were strained in locally hosted environments that were inflexible to growth or change.

Snowflake and Tableau represent a fundamentally different approach. Snowflake’s multi-cluster shared data architecture was designed for the cloud and to handle logarithmically larger data volumes at blazing speed. Tableau was made to foster an interactive approach to analytics, freeing knowledge workers to use the speed of Snowflake to their greatest advantage.

Vargas polyglot-persistence-cloud-edbt

Vargas polyglot-persistence-cloud-edbtGenoveva Vargas-Solar This document discusses polyglot persistence and multi-cloud data management solutions. It begins by noting the huge amounts of data being generated and stored globally, such as the billions of pieces of content shared daily on social media platforms. It then discusses challenges in storing and accessing these massive datasets, which can range from the petabyte to exabyte scale. The document introduces the concept of polyglot persistence, where enterprises use a variety of data storage technologies suited to different types of data, rather than assuming a single relational database. It also discusses using NoSQL databases and deploying databases across multiple cloud platforms.

History of Oracle and Databases

History of Oracle and DatabasesConnor McDonald The document traces the history and evolution of Oracle Database from its beginnings in 1977 through version 12c. It discusses key milestones like the first commercial SQL database in 1979, the introduction of transactions and multi-versioning in version 6 in 1988, and the development of Real Application Clusters and Automatic Storage Management. It focuses on how the multitenant architecture of Oracle Database 12c allows for increased consolidation through pluggable databases that share resources.

Big Data Unit 4 - Hadoop

Big Data Unit 4 - HadoopRojaT4 Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of computers. It allows for the reliable, scalable, and distributed processing of large data sets across commodity hardware. The core of Hadoop consists of HDFS for storage and MapReduce for processing data in parallel on multiple nodes. The Hadoop ecosystem includes additional projects that extend the functionality of the core components.

The Marriage of the Data Lake and the Data Warehouse and Why You Need Both

The Marriage of the Data Lake and the Data Warehouse and Why You Need BothAdaryl "Bob" Wakefield, MBA

Ad

10 basic terms so you can talk to data engineer

- 1. 16 basic terms so you can talk to data engineers, by Alex Pongpech

- 2. 1. Airflow: ● Apache Airflow is an open-source workflow management platform. ● It started at Airbnb in October 2014 as a solution to manage the company's increasingly complex workflows. ● Airflow uses directed acyclic graphs (DAGs) to manage workflow orchestration.
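To make the DAG idea concrete, here is a minimal sketch that uses only Python's standard library (`graphlib`, Python 3.9+). It is not Airflow's API; the task names and the `execution_order` helper are hypothetical and only illustrate how a directed acyclic graph fixes the order in which tasks may run.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each key maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}

def execution_order(deps):
    """Return one valid run order in which every task follows its dependencies."""
    return list(TopologicalSorter(deps).static_order())

order = execution_order(dependencies)
print(order)
```

If the dependencies formed a cycle, `static_order()` would raise `graphlib.CycleError`, which is why the graph has to be acyclic for a scheduler to produce a run order.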

- 3. 2. Batch Processing: ● Processing large amounts of data at once. ● Usage: ○ The extract, transform, load (ETL) step in populating data warehouses. ○ Performing bulk operations on digital images, such as resizing, converting, watermarking, or otherwise editing a group of image files. ○ Converting computer files from one format to another. For example, a batch job may convert proprietary and legacy files to common standard formats for end-user queries and display.
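The file-conversion use case can be sketched as a small batch job. This is an illustration only: the file names and contents are made up, and the "files" live in memory so the example stays self-contained.

```python
import csv
import io
import json

def csv_to_json(csv_text):
    """Convert one CSV document (with a header row) to a JSON array string."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    return json.dumps(rows)

def run_batch(files):
    """Convert every file in the batch in a single run; `files` maps name -> CSV text."""
    return {
        name.replace(".csv", ".json"): csv_to_json(text)
        for name, text in files.items()
    }

# Hypothetical batch of two small CSV files.
batch = {
    "users.csv": "id,name\n1,Ann\n2,Bob\n",
    "orders.csv": "id,total\n10,9.50\n",
}
converted = run_batch(batch)
print(sorted(converted))
```

The point is that the whole group of files is collected first and then processed in one run, rather than each file being handled as it arrives.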

- 4. 3. Cold data storage– ● storing old data that is hardly used on low-power servers. Retrieving the data will take longer 4

- 5. 4. Cluster: ● several computers (or virtual machines or node) grouped together to perform a single task. 5

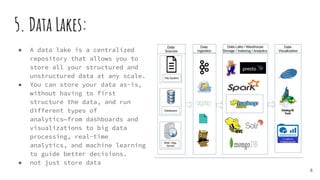

- 6. 5. Data Lakes: ● A data lake is a centralized repository that allows you to store all your structured and unstructured data at any scale. ● You can store your data as-is, without having to first structure it, and run different types of analytics, from dashboards and visualizations to big data processing, real-time analytics, and machine learning, to guide better decisions. ● A data lake does more than just store data.

- 7. 6. Data Ingestion: ● The process of obtaining and importing data for immediate use or storage in a database. To ingest something is to "take something in or absorb something." ● Data can be streamed in real time or ingested in batches.

- 8. 7. Data Pipeline: ● A broader term that encompasses ETL as a subset. ● It refers to a system for moving data from one system to another. The data may or may not be transformed, and it may be processed in real time (streaming) instead of in batches.

- 9. 8. Data Streaming: ● Processing data in small chunks, one at a time, rather than processing all data at once. ● Streaming is necessary for processing infinite event streams. ● It is also useful for processing large amounts of data, because it prevents memory overflows during processing.
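A minimal sketch of the streaming idea, using Python generators. The event source and its fields are hypothetical; the point is that only one event and one running total are held in memory at a time, so the same code would work on a stream that never ends.

```python
def event_stream(n):
    """Hypothetical source: yields events one at a time instead of building a list."""
    for i in range(n):
        yield {"id": i, "value": i * 2}

def running_total(events):
    """Consume the stream one event at a time, keeping only the running sum in memory."""
    total = 0
    for event in events:
        total += event["value"]
        yield total

# Five events are enough for a demonstration; the consumer never needs the full stream.
totals = list(running_total(event_stream(5)))
print(totals)
```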

- 10. 9. Distributed Data Processing: ● Breaking up data into partitions so that large amounts of data can be processed by many machines simultaneously.
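The partition-then-process pattern can be sketched on a single machine. In this illustration, threads stand in for the separate machines of a real cluster, which is an assumption made to keep the example self-contained; the function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def partition(data, n_parts):
    """Split data into n_parts slices by taking every n-th element (round-robin)."""
    return [data[i::n_parts] for i in range(n_parts)]

def process_partition(part):
    """Work done independently on each partition: here, a sum of squares."""
    return sum(x * x for x in part)

def distributed_sum_of_squares(data, n_workers=4):
    """Process each partition in its own worker, then combine the partial results."""
    parts = partition(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_results = list(pool.map(process_partition, parts))
    return sum(partial_results)

result = distributed_sum_of_squares(list(range(10)))
print(result)
```

Each partition is processed without knowledge of the others, and only the small partial results are combined at the end. That independence is what lets real systems spread the partitions across many machines.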

- 11. 10. Extract, Transform and Load (ETL): ● A process in databases and data warehousing: extracting data from various sources, transforming it to fit operational needs, and loading it into the target database.
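The three ETL steps can be sketched with an in-memory SQLite database as the target. The source rows, table name, and cleaning rules are hypothetical; real pipelines would read from files, APIs, or other databases.

```python
import sqlite3

def extract():
    """Hypothetical source rows; in practice these might come from files or APIs."""
    return [{"name": " ann ", "amount": "10.5"}, {"name": "BOB", "amount": "3"}]

def transform(rows):
    """Clean rows to fit the target schema: trimmed, title-cased names and numeric amounts."""
    return [(r["name"].strip().title(), float(r["amount"])) for r in rows]

def load(rows, conn):
    """Write the already-transformed rows into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (name TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract()), conn)
rows = conn.execute("SELECT name, amount FROM sales ORDER BY name").fetchall()
print(rows)
```

Note that the data is cleaned before it reaches the database, so the target only ever holds transformed rows.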

- 12. 11. Extract, Load, Transform (ELT): ● In contrast to ETL, in ELT models the data is not transformed on entry to the data lake but is stored in its original raw format; transformation happens later, inside the target system.
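A minimal ELT sketch, with an in-memory SQLite database standing in for the data lake (an assumption for illustration; the table names and rows are hypothetical). The raw values are loaded untouched, and the transformation runs afterwards as SQL inside the store.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Load: store the raw values exactly as they arrived, as text.
conn.execute("CREATE TABLE raw_sales (name TEXT, amount TEXT)")
conn.executemany(
    "INSERT INTO raw_sales VALUES (?, ?)",
    [(" ann ", "10.5"), ("bob", "3")],
)

# Transform: derive a cleaned table later, inside the store, from the raw data.
conn.execute(
    """
    CREATE TABLE clean_sales AS
    SELECT TRIM(name) AS name, CAST(amount AS REAL) AS amount
    FROM raw_sales
    """
)

raw = conn.execute("SELECT name, amount FROM raw_sales ORDER BY rowid").fetchall()
clean = conn.execute("SELECT name, amount FROM clean_sales ORDER BY name").fetchall()
print(raw)
print(clean)
```

Because the raw table is kept, a different transformation can be run later without re-extracting from the source.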

- 13. 12. Hadoop Distributed File System (HDFS): ● HDFS is the primary data storage layer used by Hadoop applications. ● It employs a NameNode and DataNode architecture.

- 14. 13. Metadata: ● Metadata is "data that provides information about other data". ● Many distinct types of metadata exist, including descriptive metadata, structural metadata, administrative metadata, reference metadata and statistical metadata.

- 15. 14. Real-time Processing: ● Real-time data processing is the processing of data within a short time period, providing near-instantaneous output. ● The processing is done as the data is input, so it needs a continuous stream of input data in order to provide continuous output.

- 16. 15. Scalable: ● Scalable hardware or software can expand to support increasing workloads. This capability allows computer equipment and software programs to grow over time, rather than needing to be replaced.

- 17. 16. Workflow Orchestration: ● An orchestration workflow, which is based on a Business Process Manager Business Process Definition, defines a logical flow of activities or tasks from a Start event to an End event to accomplish a specific service.