18 Data Streams

Course "Machine Learning and Data Mining" for the degree of Computer Engineering at the Politecnico di Milano. In this lecture we overview the mining of data streams.

Recommended

Lecture6 introduction to data streams

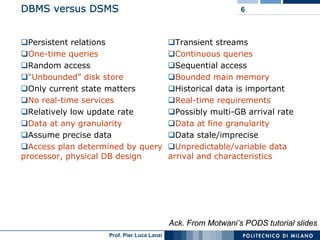

By hktripathy. There are three main points about data streams and stream processing:

1) A data stream is a continuous, ordered sequence of data items that arrives too rapidly to be stored fully. Common sources include sensors, web traffic, and social media.

2) Data stream management systems process continuous queries over streams in real-time using bounded memory. They provide summaries of historical data rather than storing entire streams.

3) Challenges of stream processing include limited memory, complex continuous queries, and unpredictable data rates and characteristics. Approximate query processing techniques like windows, sampling, and load shedding help address these challenges.
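Sampling is one of the approximation techniques named above. Reservoir sampling is a standard way to keep a uniform random sample of fixed size k from a stream of unknown length using O(k) memory. The following is a minimal Python sketch of it. It is not code from the lecture, and the function name `reservoir_sample` is hypothetical.

```python
import random
from typing import Iterable, List, Optional, TypeVar

T = TypeVar("T")


def reservoir_sample(stream: Iterable[T], k: int,
                     rng: Optional[random.Random] = None) -> List[T]:
    """Return a uniform random sample of k items from a stream of unknown length."""
    rng = rng or random.Random()
    reservoir: List[T] = []
    for i, item in enumerate(stream):
        if i < k:
            # The first k items fill the reservoir directly.
            reservoir.append(item)
        else:
            # Item i (0-based) is kept with probability k / (i + 1),
            # replacing a uniformly chosen slot.
            j = rng.randint(0, i)
            if j < k:
                reservoir[j] = item
    return reservoir


# Usage: sample 5 values from a million-element stream while storing only 5.
print(reservoir_sample(range(1_000_000), 5, random.Random(42)))
```

The stream is read exactly once and never stored. This is what makes the technique fit the bounded-memory setting described above.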

5.1 mining data streams

By Krish_ver2. This document discusses techniques for mining data streams. It begins by defining different types of streaming data, such as time-series data and sequence data. It then discusses the characteristics of data streams: their huge volume, fast-changing nature, and requirement for real-time processing. The key challenges in stream query processing are unbounded memory requirements and the need for approximate query answering. The document outlines several synopsis data structures and techniques used for mining data streams, including random sampling, histograms, sketches, and randomized algorithms. It also discusses architectures for stream query processing and classification of dynamic data streams.
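Sketches are one of the synopsis structures listed above. As an illustration (not taken from the deck), here is a minimal Python sketch of a Count-Min sketch, a well-known structure for approximate frequency counts in fixed memory. The class name, the default width and depth, and the use of keyed BLAKE2b as the per-row hash are all assumptions made for this example.

With width w = ⌈e/ε⌉ and depth d = ⌈ln(1/δ)⌉, an estimate exceeds the true count by at most ε·N with probability at least 1 - δ, where N is the total count added. The defaults below correspond roughly to ε ≈ 0.01 and δ ≈ 0.007.

```python
import hashlib
from typing import List


class CountMinSketch:
    """Approximate item frequencies in width * depth counters."""

    def __init__(self, width: int = 272, depth: int = 5) -> None:
        self.width = width
        self.depth = depth
        self.table: List[List[int]] = [[0] * width for _ in range(depth)]

    def _index(self, item: str, row: int) -> int:
        # A separate hash per row: BLAKE2b keyed with the row number.
        h = hashlib.blake2b(item.encode("utf-8"), digest_size=8,
                            key=row.to_bytes(8, "little"))
        return int.from_bytes(h.digest(), "little") % self.width

    def add(self, item: str, count: int = 1) -> None:
        # Increment one counter in every row.
        for row in range(self.depth):
            self.table[row][self._index(item, row)] += count

    def estimate(self, item: str) -> int:
        # Collisions only inflate counters, so the row minimum is the best estimate.
        return min(self.table[row][self._index(item, row)]
                   for row in range(self.depth))


cms = CountMinSketch()
for word in ["a", "b", "a", "c", "a"]:
    cms.add(word)
print(cms.estimate("a"))  # at least 3
```

Because counts are only ever added, the estimate never undercounts an item. It can overcount only through hash collisions.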

Big Data Analytics with Hadoop

By Philippe Julio. Hadoop: a flexible and available architecture for large-scale computation and data processing on a network of commodity hardware.

Introduction to Data streaming - 05/12/2014

By Raja Chiky. Raja Chiky is an associate professor whose research interests include data stream mining, distributed architectures, and recommender systems. The document outlines data streaming concepts, including what a data stream is, data stream management systems, and basic approximate algorithms for processing massive, high-velocity data streams. It also discusses challenges in distributed systems and the use of semantic technologies for data streaming.

Dynamic Itemset Counting

By Tarat Diloksawatdikul. Dynamic Itemset Counting (DIC) is an algorithm for efficiently mining frequent itemsets from transactional data that improves upon the Apriori algorithm. DIC starts counting an itemset as soon as it is suspected to be frequent, rather than waiting until the end of each pass as Apriori does. DIC uses markings such as solid/dashed boxes and circles to track the counting status of itemsets. It can generate frequent itemsets and association rules (using conviction) in fewer passes over the data than Apriori.

Ensemble learning

By Haris Jamil. Ensemble learning is a technique that creates multiple models and then combines them to produce improved results.

Ensemble learning usually produces more accurate solutions than a single model would.
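The summary does not say how the models are combined. One common choice for classification is plurality (majority) voting. The following minimal Python sketch assumes each base model is simply a callable that returns a label. The function name `majority_vote` and the toy threshold "models" are hypothetical.

```python
from collections import Counter
from typing import Callable, Hashable, Sequence, TypeVar

X = TypeVar("X")
Y = TypeVar("Y", bound=Hashable)


def majority_vote(models: Sequence[Callable[[X], Y]], x: X) -> Y:
    """Combine base models by plurality vote over their predicted labels."""
    votes = Counter(model(x) for model in models)
    # most_common(1) returns the top label; ties keep first-encountered order.
    return votes.most_common(1)[0][0]


# Three toy "models": threshold rules on a number (hypothetical).
models = [lambda x: x > 0, lambda x: x > 5, lambda x: x > 10]
print(majority_vote(models, 7))  # prints True (two of three vote True)
```

Other combination schemes, such as weighted voting or averaging predicted probabilities, fit the same interface.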

Spatial data mining

By MITS Gwalior. Spatial data mining involves discovering patterns from large spatial datasets. It differs from traditional data mining due to properties of spatial data such as spatial autocorrelation and heterogeneity. Key spatial data mining tasks include clustering, classification, trend analysis, and association rule mining. Clustering algorithms such as PAM and CLARA are useful for grouping spatial data objects. Trend analysis can identify global or local trends by analyzing attributes of spatially related objects. Future areas of research include spatial data mining in object-oriented databases and the use of parallel processing to improve computational efficiency for large spatial datasets.

Map reduce in BIG DATA

By GauravBiswas9. MapReduce is a programming framework that allows for distributed and parallel processing of large datasets. It consists of a map step that processes key-value pairs in parallel, and a reduce step that aggregates the outputs of the map step. As an example, a word counting problem is presented where words are counted by mapping each word to a key-value pair of the word and 1, and then reducing by summing the counts of each unique word. MapReduce jobs are executed on a cluster in a reliable way using YARN to schedule tasks across nodes, restarting failed tasks when needed.
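The word-count example above can be simulated in a single Python process to show the three phases: map, the shuffle that groups values by key, and reduce. This is only a sketch of the data flow. It does not use Hadoop or YARN. Lowercasing and whitespace tokenization are assumptions, and all function names are hypothetical.

```python
from collections import defaultdict
from itertools import chain
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    # Emit (word, 1) for every word in the input line.
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    # Group intermediate values by key, as the framework does between map and reduce.
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    # Sum the partial counts for one word.
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    intermediate = chain.from_iterable(map_phase(line) for line in lines)
    return dict(reduce_phase(k, v) for k, v in shuffle(intermediate).items())


print(word_count(["the cat sat", "The dog sat"]))
# prints {'the': 2, 'cat': 1, 'sat': 2, 'dog': 1}
```

On a real cluster, the map and reduce calls run in parallel on different nodes, and the shuffle moves data over the network.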

HML: Historical View and Trends of Deep Learning

By Yan Xu. The document provides a historical view and trends of deep learning. It explains that deep learning has evolved in several waves since the 1940s, with key developments including the backpropagation algorithm in 1986 and deep belief networks with pretraining in 2006. Current trends include growing datasets, increasing numbers of neurons and connections per neuron, and higher accuracy on tasks involving vision, NLP, and games. Research trends focus on generative models, domain alignment, meta-learning, using graphs as inputs, and program induction.

Hadoop & MapReduce

By Newvewm. This is a deck of slides from a recent meetup of AWS Usergroup Greece, presented by Ioannis Konstantinou from the National Technical University of Athens.

The presentation gives an overview of the MapReduce framework and a description of its open-source implementation (Hadoop). Amazon's own Elastic MapReduce (EMR) service is also mentioned. With the growing interest in big data, this is a good introduction to the subject.

5.3 mining sequential patterns

By Krish_ver2. The document discusses sequential pattern mining, which involves finding frequently occurring ordered sequences or subsequences in sequence databases. It covers key concepts like sequential patterns, sequence databases, support count, and subsequences. It also describes several algorithms for sequential pattern mining, including GSP (Generalized Sequential Patterns) which uses a candidate generation and test approach, SPADE which works on a vertical data format, and PrefixSpan which employs a prefix-projected sequential pattern growth approach without candidate generation.
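GSP, SPADE and PrefixSpan all rest on the same support count. A pattern ⟨s1 s2 ...⟩ is contained in a sequence if each itemset si is a subset of some element of the sequence, with the matched elements in strictly increasing order. The following minimal Python sketch computes that count directly. It is not any of the three algorithms. The single-letter items, the helper `seq`, and the small database are hypothetical.

```python
from typing import FrozenSet, List, Sequence

Itemset = FrozenSet[str]


def seq(*elements: str) -> List[Itemset]:
    """Build a sequence from strings of single-letter items, e.g. seq("a", "bc")."""
    return [frozenset(e) for e in elements]


def is_subsequence(pattern: Sequence[Itemset], sequence: Sequence[Itemset]) -> bool:
    """True if each pattern itemset is contained in a strictly later sequence element."""
    pos = 0
    for element in pattern:
        # Greedily match the earliest containing element; matching early never
        # rules out a match for the remaining pattern elements.
        while pos < len(sequence) and not element <= sequence[pos]:
            pos += 1
        if pos == len(sequence):
            return False
        pos += 1
    return True


def support_count(pattern: Sequence[Itemset],
                  database: Sequence[Sequence[Itemset]]) -> int:
    """Number of sequences in the database that contain the pattern."""
    return sum(is_subsequence(pattern, s) for s in database)


database = [
    seq("a", "abc", "ac", "d", "cf"),
    seq("ad", "c", "bc", "ae"),
    seq("ef", "ab", "df", "c", "b"),
    seq("e", "g", "af", "c", "b", "c"),
]
print(support_count(seq("ab", "c"), database))  # prints 2
```

A pattern is a sequential pattern when this count reaches the minimum support threshold.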

Data preprocessing using Machine Learning

By Gopal Sakarkar. Data preprocessing plays an important role in data cleaning.

Good data preparation is key to producing valid and reliable machine learning models.

3. mining frequent patterns

By Azad public school. The document discusses frequent pattern mining and the Apriori algorithm. It introduces frequent patterns as frequently occurring sets of items in transaction data. The Apriori algorithm is described as a seminal method for mining frequent itemsets via multiple passes over the data, generating candidate itemsets and pruning those that are not frequent. Challenges with Apriori include multiple database scans and the large number of candidate sets generated.
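The level-wise Apriori procedure can be sketched compactly in Python. Each level generates candidates from the frequent itemsets of the previous level. It then prunes any candidate with an infrequent subset (the Apriori property) and counts the survivors in one scan of the data.

This is an illustrative sketch, not the deck's code. To keep it short, the join step unions every pair of frequent (k-1)-itemsets instead of using the textbook prefix-based join. It produces the same candidates after pruning, at some extra cost. The function name and data are hypothetical.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Set


def apriori(transactions: List[Set[str]], min_support: int) -> Dict[FrozenSet[str], int]:
    """Return every itemset whose support count is at least min_support."""
    # Pass 1: count single items.
    counts: Dict[FrozenSet[str], int] = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    frequent = {s: c for s, c in counts.items() if c >= min_support}
    result = dict(frequent)
    k = 2
    while frequent:
        prev = list(frequent)
        # Join: unions of two frequent (k-1)-itemsets that form a k-itemset.
        candidates = {a | b for a, b in combinations(prev, 2) if len(a | b) == k}
        # Prune: every (k-1)-subset of a candidate must itself be frequent.
        candidates = {c for c in candidates
                      if all(frozenset(s) in frequent for s in combinations(c, k - 1))}
        # One scan over the transactions counts all surviving candidates.
        counts = {c: sum(1 for t in transactions if c <= t) for c in candidates}
        frequent = {s: c for s, c in counts.items() if c >= min_support}
        result.update(frequent)
        k += 1
    return result


baskets = [
    {"bread", "milk"},
    {"bread", "diaper", "beer", "eggs"},
    {"milk", "diaper", "beer", "cola"},
    {"bread", "milk", "diaper", "beer"},
    {"bread", "milk", "diaper", "cola"},
]
for itemset, count in apriori(baskets, min_support=3).items():
    print(sorted(itemset), count)
```

The `while` loop makes one pass over the data per level. This is the repeated-scan cost that the summary lists as a challenge.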

Mining Data Streams

By SujaAldrin. This document discusses concepts related to data streams and real-time analytics. It begins with introductions to stream data models and sampling techniques. It then covers filtering, counting, and windowing queries on data streams. The document discusses challenges of stream processing, such as bounded memory, and proposes solutions such as sampling and sketching. It provides examples of applications in various domains and tools for real-time data streaming and analytics.
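Windowing queries restrict an aggregate to the most recent part of the stream. The following minimal Python sketch maintains an exact sum and mean over the last N elements (a count-based sliding window). It uses O(N) memory and O(1) time per update. Approximate window schemes exist that use less memory, but they are not shown here. The class name is hypothetical.

```python
from collections import deque
from typing import Deque


class SlidingWindowSum:
    """Exact sum of the last `size` stream values, updated in O(1) per item."""

    def __init__(self, size: int) -> None:
        if size <= 0:
            raise ValueError("window size must be positive")
        self.size = size
        self.window: Deque[float] = deque()
        self.total = 0.0

    def add(self, value: float) -> float:
        self.window.append(value)
        self.total += value
        if len(self.window) > self.size:
            # Expire the oldest value so only the last `size` values count.
            self.total -= self.window.popleft()
        return self.total

    def mean(self) -> float:
        return self.total / len(self.window) if self.window else 0.0


w = SlidingWindowSum(3)
print([w.add(x) for x in [1, 2, 3, 4, 5]])  # prints [1.0, 3.0, 6.0, 9.0, 12.0]
```

Keeping a running total avoids re-summing the window on every arrival. With floating-point inputs, small rounding drift can build up over very long streams.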

PAC Learning

By Sanghyuk Chun. This document provides an overview of PAC (Probably Approximately Correct) learning theory. It discusses how PAC learning relates the probability of successful learning to the number of training examples, the complexity of the hypothesis space, and the accuracy of approximating the target function. Key concepts explained include training error vs. true error, overfitting, the VC dimension as a measure of hypothesis space complexity, and how PAC learning bounds can be derived for finite and infinite hypothesis spaces from factors such as the training size and the VC dimension.
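For a finite hypothesis space H and a learner that always outputs a hypothesis consistent with the training data, the standard bound is m ≥ (1/ε)(ln|H| + ln(1/δ)). That many examples suffice for true error at most ε with probability at least 1 - δ. The following small Python helper evaluates this bound. The function name is hypothetical. The example assumes H is the set of conjunctions over 10 boolean variables, where each variable appears positive, negated, or not at all, so |H| = 3^10.

```python
import math


def pac_sample_size(hypothesis_count: int, epsilon: float, delta: float) -> int:
    """Examples sufficient for a consistent learner over a finite hypothesis space."""
    if hypothesis_count < 1 or not 0 < epsilon < 1 or not 0 < delta < 1:
        raise ValueError("need |H| >= 1 and 0 < epsilon, delta < 1")
    # m >= (1/epsilon) * (ln|H| + ln(1/delta)), rounded up to a whole example.
    return math.ceil((math.log(hypothesis_count) + math.log(1 / delta)) / epsilon)


print(pac_sample_size(3 ** 10, epsilon=0.1, delta=0.05))  # prints 140
```

The bound grows only logarithmically in |H| and 1/δ but linearly in 1/ε. Tightening the accuracy requirement is therefore the expensive direction.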

Data streaming fundamentals

By Mohammed Fazuluddin. This document provides an overview of data streaming fundamentals and tools. It discusses how data streaming processes unbounded, continuous data streams in real time, as opposed to static datasets. The key aspects covered include data streaming architecture, specifically the lambda architecture, and popular open-source data streaming tools such as Apache Spark, Apache Flink, Apache Samza, Apache Storm, Apache Kafka, Apache Flume, Apache NiFi, Apache Ignite, and Apache Apex.

Artificial Neural Networks for Data Mining

By Amity University | FMS - DU | IMT | Stratford University | KKMI International Institute | AIMA | DTU. This presentation covers Data Mining: Classification and Prediction; Neural Network Representation; Neural Network Application Development; Benefits and Limitations of Neural Networks; Neural Networks; Real Estate Appraiser; Kinds of Data Mining Problems; Data Mining Techniques; Learning in ANN; Elements of ANN; Neural Network Architectures; Recurrent Neural Networks; and ANN Software.

Data cube computation

By Rashmi Sheikh. Data cube computation involves precomputing aggregations to enable fast query performance. There are different materialization strategies like full cubes, iceberg cubes, and shell cubes. Full cubes precompute all aggregations but require significant storage, while iceberg cubes only store aggregations that meet a threshold. Computation strategies include sorting and grouping to aggregate similar values, caching intermediate results, and aggregating from smallest child cuboids first. The Apriori pruning method can efficiently compute iceberg cubes by avoiding computing descendants of cells that do not meet the minimum support threshold.

Inductive bias

By swapnac12. This document discusses inductive bias in machine learning. It defines inductive bias as the assumptions that allow an inductive learning system to generalize beyond its training data. Without some biases, a learning system cannot rationally classify new examples. The document compares different learning algorithms based on the strength of their inductive biases, from weak biases like rote learning to stronger biases like preferring more specific hypotheses. It argues that all inductive learning systems require some inductive biases to generalize at all.

State space search

By chauhankapil. This document discusses state space search in artificial intelligence. It defines state space search as consisting of a tree of symbolic states generated by iteratively applying operations to represent a problem. It discusses the initial, intermediate, and final states in state space search. As an example, it describes the water jug problem and its state representation. It also outlines different types of state space search algorithms, including heuristic search algorithms like A* and uninformed search algorithms like breadth-first search.
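The water jug problem and breadth-first search from the summary fit together in a short Python sketch. The summary does not give jug sizes, so this assumes the classic version: a 4-unit jug A, a 3-unit jug B, and the goal of 2 units in jug A. A state is the pair (A, B). The operators are fill, empty, and pour in each direction. The function names are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

State = Tuple[int, int]  # (amount in jug A, amount in jug B)


def successors(state: State, cap_a: int, cap_b: int) -> List[State]:
    a, b = state
    pour_ab = min(a, cap_b - b)  # how much A can pour into B
    pour_ba = min(b, cap_a - a)  # how much B can pour into A
    return [
        (cap_a, b), (a, cap_b),      # fill A, fill B
        (0, b), (a, 0),              # empty A, empty B
        (a - pour_ab, b + pour_ab),  # pour A into B
        (a + pour_ba, b - pour_ba),  # pour B into A
    ]


def solve_water_jug(cap_a: int, cap_b: int, goal: int) -> Optional[List[State]]:
    """Breadth-first search from (0, 0) to any state with `goal` units in jug A."""
    start: State = (0, 0)
    parent: Dict[State, Optional[State]] = {start: None}
    frontier = deque([start])
    while frontier:
        state = frontier.popleft()
        if state[0] == goal:
            # Walk parent links back to the start to recover the path.
            path: List[State] = []
            node: Optional[State] = state
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in successors(state, cap_a, cap_b):
            if nxt not in parent:  # visit each state at most once
                parent[nxt] = state
                frontier.append(nxt)
    return None


print(solve_water_jug(4, 3, 2))
```

Because BFS expands states in order of depth, the returned path uses the fewest operations. A* would explore the same graph but order the frontier with a heuristic.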

Data preprocessing

By ankur bhalla. Data preprocessing involves transforming raw data into an understandable and consistent format. It includes data cleaning, integration, transformation, and reduction. Data cleaning aims to fill missing values, smooth noise, and resolve inconsistencies. Data integration combines data from multiple sources. Data transformation handles tasks like normalization and aggregation to prepare the data for mining. Data reduction techniques obtain a reduced representation of data that maintains analytical results but reduces volume, such as through aggregation, dimensionality reduction, discretization, and sampling.

Using prior knowledge to initialize the hypothesis, KBANN

By swapnac12. 1) The KBANN algorithm uses a domain theory represented as Horn clauses to initialize an artificial neural network before training it with examples. This helps the network generalize better than random initialization when training data is limited.

2) KBANN constructs a network matching the domain theory's predictions exactly, then refines it with backpropagation to fit examples. This balances theory and data when they disagree.

3) In experiments on promoter recognition, KBANN achieved a 4% error rate compared to 8% for backpropagation alone, showing the benefit of prior knowledge.

Schemas for multidimensional databases

By yazad dumasia. This document discusses different types of schemas used in multidimensional databases and data warehouses. It describes star schemas, snowflake schemas, and fact constellation schemas. A star schema contains one fact table connected to multiple dimension tables. A snowflake schema is similar but with some normalized dimension tables. A fact constellation schema contains multiple fact tables that can share dimension tables. The document provides examples and comparisons of each schema type.

Data mining: Classification and prediction

By DataminingTools Inc. This document discusses various machine learning techniques for classification and prediction. It covers decision tree induction, tree pruning, Bayesian classification, Bayesian belief networks, backpropagation, association rule mining, and ensemble methods such as bagging and boosting. Classification involves predicting categorical labels, while prediction estimates continuous values. Key steps in preparing data include cleaning and transformation, and the different methods are compared on accuracy, speed, robustness, scalability, and interpretability.

Data Mining: Concepts and Techniques chapter 07 : Advanced Frequent Pattern M...

By Salah Amean. The slides contain:

Pattern Mining: A Road Map

Pattern Mining in Multi-Level, Multi-Dimensional Space

Constraint-Based Frequent Pattern Mining

Mining High-Dimensional Data and Colossal Patterns

Mining Compressed or Approximate Patterns

Sequential Pattern Mining

Graph Pattern Mining

by Jiawei Han, Micheline Kamber, and Jian Pei, University of Illinois at Urbana-Champaign & Simon Fraser University

©2013 Han, Kamber & Pei. All rights reserved.

Introduction to Recurrent Neural Network

By Knoldus Inc. The document provides an introduction to recurrent neural networks (RNNs). It discusses how RNNs differ from feedforward neural networks in that they have internal memory and can use their output from the previous time step as input. This allows RNNs to process sequential data like time series. The document outlines some common RNN types and explains the vanishing gradient problem that can occur in RNNs due to multiplication of small gradient values over many time steps. It discusses solutions to this problem like LSTMs and techniques like weight initialization and gradient clipping.

2.4 rule based classification

By Krish_ver2. This document discusses rule-based classification. It describes how rule-based classification models use if-then rules to classify data. It covers extracting rules from decision trees and directly from training data. Key points include using sequential covering algorithms to iteratively learn rules that each cover positive examples of a class, and measuring rule quality based on both coverage and accuracy to determine the best rules.
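Rule coverage and accuracy are commonly defined as follows. Coverage is the fraction of all tuples that satisfy the rule's condition. Accuracy is the fraction of those covered tuples that belong to the class the rule predicts. The following minimal Python sketch computes both. The rule and the five records are hypothetical, and conditions are written as plain predicates.

```python
from typing import Callable, Dict, List, Tuple

Record = Dict[str, str]


def rule_quality(condition: Callable[[Record], bool], predicted: str,
                 data: List[Tuple[Record, str]]) -> Tuple[float, float]:
    """Return (coverage, accuracy) of the rule IF condition THEN class = predicted."""
    covered = [label for record, label in data if condition(record)]
    # coverage = tuples covered by the rule / all tuples in the data set
    coverage = len(covered) / len(data) if data else 0.0
    # accuracy = covered tuples with the predicted class / tuples covered
    accuracy = (sum(label == predicted for label in covered) / len(covered)
                if covered else 0.0)
    return coverage, accuracy


# Hypothetical rule: IF age = youth AND student = yes THEN buys_computer = yes
def youth_student(r: Record) -> bool:
    return r["age"] == "youth" and r["student"] == "yes"


data = [
    ({"age": "youth", "student": "yes"}, "yes"),
    ({"age": "youth", "student": "yes"}, "yes"),
    ({"age": "youth", "student": "yes"}, "no"),
    ({"age": "youth", "student": "no"}, "no"),
    ({"age": "senior", "student": "yes"}, "yes"),
]
print(rule_quality(youth_student, "yes", data))  # coverage 0.6, accuracy about 0.667
```

The two measures pull in different directions. A very specific rule can be highly accurate yet cover almost nothing, which is why the summary says rule quality uses both.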

Machine Learning and Real-World Applications

By MachinePulse. This presentation was created by Ajay, Machine Learning Scientist at MachinePulse, to present at a Meetup on Jan. 30, 2015. These slides provide an overview of widely used machine learning algorithms. The slides conclude with examples of real world applications.

Ajay Ramaseshan is a Machine Learning Scientist at MachinePulse. He holds a Bachelor's degree in Computer Science from NITK Surathkal and a Master's degree in Machine Learning and Data Mining from Aalto University School of Science, Finland. He has extensive experience in the machine learning domain and has dealt with various real-world problems.

Jewei Hans & Kamber Chapter 8

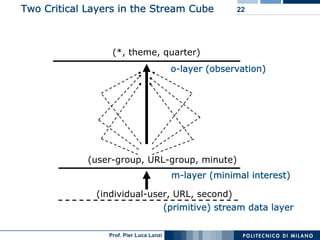

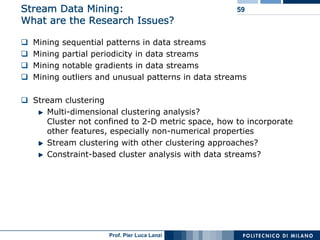

By Houw Liong The. This document discusses mining data streams. It begins by defining stream data and how it differs from traditional database management systems in terms of characteristics like continuous arrival of huge volumes of data that require fast real-time response. It then covers challenges in processing stream data like limited memory and approximate query answering. Common techniques for mining stream data are also introduced, such as random sampling, histograms, sliding windows, and sketches. Finally, the document discusses challenges in mining dynamics from data streams and provides examples of multi-dimensional stream analysis.

Semantics in Sensor Networks

By Oscar Corcho. Invited talk at the FIS2009 (Future Internet Symposium) Workshop on Semantics and Future Internet. September 1st, 2009

Similar to 18 Data Streams

Cyber Analytics Applications for Data-Intensive Computing

By Mike Fisk. This document discusses applying data-intensive computing techniques to cyber security problems. It describes three characteristic cyber problems: query and retrieval of large datasets, time-series anomaly detection to find unusual patterns, and non-local graph analysis to examine global and local properties of network activity. A file-oriented MapReduce approach called FileMap is proposed to enable parallel processing of large datasets across multiple nodes in a way that is compatible with existing analysis tools. This approach aims to make distributed querying and iterative analysis more efficient.

Performance and predictability

By RichardWarburton. These days, fast code needs to operate in harmony with its environment. At the deepest level, this means working well with hardware: RAM, disks, and SSDs. A unifying theme is treating memory access patterns in a uniform and predictable way that is sympathetic to the underlying hardware. For example, writing to and reading from RAM and hard disks can be significantly sped up by operating sequentially on the device rather than accessing the data randomly.

In this talk we’ll cover why access patterns are important, what kind of speed gain you can get, and how you can write simple high-level code that works well with these kinds of patterns.

Chapter 08 Data Mining Techniques

By Houw Liong The. This document discusses mining data streams. It describes stream data as continuous, ordered, and fast changing: traditional databases store finite data sets, while stream data may be infinite. The document outlines the challenges of mining stream data, including processing queries and finding patterns continuously with limited memory. It proposes using synopses to compute approximate answers within a small error range.

Spark

By Srinath Reddy. This document discusses the Spark analytics platform and its advantages over existing Hadoop-based platforms. Spark provides a unified data processing engine for batch, interactive, and streaming workloads. It includes components like Spark Core for distributed computing, Spark Streaming for real-time data processing, Shark for SQL and analytics, GraphX for graph processing, and MLlib for machine learning. Spark aims to be up to 100x faster than Hadoop for interactive queries by keeping data in memory using its Resilient Distributed Datasets (RDDs). It also leverages other projects like Mesos for resource management and Tachyon for a fault-tolerant shared storage layer.

Evaluating Classification Algorithms Applied To Data Streams Esteban Donato

By Esteban Donato. This document summarizes and evaluates several algorithms for classifying data streams: VFDTc, UFFT, and CVFDT. It describes how they handle concept drift and how they detect outliers and noise. The algorithms were tested on synthetic data streams generated with configurable attributes such as drift frequency and noise percentage. Results show that VFDTc and UFFT performed best in accuracy, while CVFDT and UFFT were fastest. The study aims to help choose algorithms suited to different data stream characteristics, such as gradual vs. sudden drift or frequent vs. infrequent drift.

Tsinghua invited talk_zhou_xing_v2r0

By Joe Xing. Invited talk at Tsinghua University on "Applications of Deep Neural Network". As the tech lead of the deep learning task force at NIO USA INC, I was invited to give this colloquium talk on general applications of deep neural networks.

Interactive Data Analysis for End Users on HN Science Cloud

By Helix Nebula The Science Cloud. The document provides a status report on testing the Helix Nebula Science Cloud for interactive data analysis by end users of the TOTEM experiment. It summarizes the deployment of a "Science Box" platform on the Helix Nebula Cloud using technologies like EOS, CERNBox, SWAN, and SPARK. Initial tests of the platform were successful in 2017 using a single VM. Current tests involve a scalable deployment with Kubernetes and use SPARK as the computing engine. Synthetic benchmarks and a TOTEM data analysis example show the platform is functioning well, with room to scale out storage and computing resources for larger datasets and analyses.

Network-aware Data Management for High Throughput Flows Akamai, Cambridge, ...

By balmanme. The document discusses Mehmet Balman's work on network-aware data management for large-scale distributed applications. It provides background on Balman, including his employment at VMware and his affiliations. The presentation outline covers VSAN and VVOL storage performance in virtualized environments, data streaming in high-bandwidth networks, the Climate100 100Gbps networking demo, and other topics related to network-aware data management.

Reflections on Almost Two Decades of Research into Stream Processing

By Kyumars Sheykh Esmaili. This is the slide deck that I used during my tutorial presentation at the ACM DEBS Conference (http://www.debs2017.org/), held in Barcelona from June 19 to June 23, 2017.

The tutorial paper itself can be accessed here: http://dl.acm.org/citation.cfm?id=3095110

Lecture 24

By Shani729. This document discusses parallelism in data warehousing. It explains that parallelism can improve performance for large table scans, joins, indexing, and data loading/modification operations. It also discusses Amdahl's law, which shows that the potential speedup from parallelism is limited by the fraction of operations that must run sequentially. Additionally, the document provides an overview of parallel hardware architectures such as SMP, distributed memory, and NUMA systems, and of software architectures such as shared disk, shared nothing, and shared everything.
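The speedup limit mentioned in this summary is usually written as Amdahl's law. In the form below (our notation, not necessarily the deck's), p is the fraction of the work that can run in parallel and N is the number of processors:

```latex
S(N) = \frac{1}{(1 - p) + \dfrac{p}{N}},
\qquad
\lim_{N \to \infty} S(N) = \frac{1}{1 - p}.
```

For example, with p = 0.9 and N = 8, S(8) = 1 / (0.1 + 0.1125) ≈ 4.71, and no number of processors can push the speedup above 1 / 0.1 = 10.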

Mining Adaptively Frequent Closed Unlabeled Rooted Trees in Data Streams

By Albert Bifet. This document discusses mining frequent closed unlabeled rooted trees in data streams. It introduces the problem of finding frequent closed trees in a data stream of unlabeled rooted trees. It describes some of the challenges of data streams: the sequence is potentially infinite, the high amount of data requires sublinear space, and the high speed of arrival requires sublinear time per example. The document outlines an approach using ADWIN, an adaptive sliding window algorithm, to detect concept drift and adapt the window size accordingly.

Distributed Systems: scalability and high availability

By Renato Lucindo. Distributed systems use multiple computers that interact over a network to achieve common goals such as scalability and high availability. They handle increasing loads either by scaling up individual nodes or by scaling out with more nodes. However, distributed systems face challenges in balancing consistency, availability, and partition tolerance, as described by the CAP theorem. Techniques like caching, queues, logging, and understanding failure modes can help address these challenges.

Stream Reasoning - where we got so far 2011.1.18 Oxford Key Note

By Emanuele Della Valle. This document discusses stream reasoning and the key achievements in exploring continuous semantics for reasoning over data streams on the Semantic Web. It presents the motivation for stream reasoning, which is to make sense of real-time data streams, and discusses challenges such as query languages, reasoning, and dealing with incomplete data. The document outlines research that developed an architecture for a stream reasoner, the concept of RDF streams, and the Continuous SPARQL query language (C-SPARQL) for querying RDF streams.

Proactive Data Containers (PDC): An Object-centric Data Store for Large-scale...

By Globus. These slides were presented by Suren Byna from Lawrence Berkeley National Lab (LBNL) at the AGU Fall Meeting 2018 in a session titled "Scalable Data Management Practices in Earth Sciences", convened by Ian Foster, Globus co-founder and director of Argonne's data science and learning division.

Computation and Knowledge

By Ian Foster. The document discusses how computation can accelerate the generation of new knowledge by enabling large-scale collaborative research and extracting insights from vast amounts of data. It provides examples from astronomy, physics simulations, and biomedical research where computation has allowed more data and researchers to be incorporated, advancing these fields more quickly over time. Computation allows for data sharing, analysis, and hypothesis generation at scales not previously possible.

The Seven Main Challenges of an Early Warning System Architecture

By streamspotter. Talk by J. Moßgraber, F. Chaves, S. Middleton, Z. Zlatev, and R. Tao on "The Seven Main Challenges of an Early Warning System Architecture" at ISCRAM 2013 in Baden-Baden.

10th International Conference on Information Systems for Crisis Response and Management

12-15 May 2013, Baden-Baden, Germany

Toward Real-Time Analysis of Large Data Volumes for Diffraction Studies by Ma...

By EarthCube. Talk at the EarthCube End-User Domain Workshop for Rock Deformation and Mineral Physics Research.

By Martin Kunz, Lawrence Berkeley National Laboratory

More from Pier Luca Lanzi

11 Settembre 2021 - Giocare con i Videogiochi

By Pier Luca Lanzi. Presentation for the engineering festival organized by the Politecnico di Milano.

Breve Viaggio al Centro dei Videogiochi

By Pier Luca Lanzi. Presentation given at Bergamo Scienza on April 1, 2017.

Global Game Jam 19 @ POLIMI - Morning Welcome

By Pier Luca Lanzi. These are the slides presented at the morning welcome of the Global Game Jam 2019 at the Politecnico di Milano on January 25, 2019.

Data Driven Game Design @ Campus Party 2018

By Pier Luca Lanzi. Prof. Pier Luca Lanzi discusses using data-driven game design and machine learning techniques, such as player modeling and gameplay analysis tools, to balance multiplayer first-person shooters. He proposes using the distribution of kills and scores among players as a proxy to evaluate balancing. His research also looks at using AI to automatically design game maps and levels to improve balancing, as well as generative adversarial networks to generate new Doom levels.

GGJ18 al Politecnico di Milano - Presentazione che precede la presentazione d...

By Pier Luca Lanzi. Presentation given before the reveal of the Global Game Jam 2018 theme.

GGJ18 al Politecnico di Milano - Presentazione di apertura

By Pier Luca Lanzi. Opening presentation of the Global Game Jam 2018, hosted at the Politecnico di Milano.

Presentation for UNITECH event - January 8, 2018

By Pier Luca Lanzi. Presentation on game design, its use in applied games, its influence on gamification, and free-to-play games.

DMTM Lecture 20 Data preparation

By Pier Luca Lanzi. Slides for the 2016/2017 edition of the Data Mining and Text Mining Course at the Politecnico di Milano. The course is also part of the joint program with the University of Illinois at Chicago.

DMTM Lecture 19 Data exploration

By Pier Luca Lanzi. Slides for the 2016/2017 edition of the Data Mining and Text Mining Course at the Politecnico di Milano. The course is also part of the joint program with the University of Illinois at Chicago.

DMTM Lecture 18 Graph mining

By Pier Luca Lanzi. Slides for the 2016/2017 edition of the Data Mining and Text Mining Course at the Politecnico di Milano. The course is also part of the joint program with the University of Illinois at Chicago.

DMTM Lecture 17 Text mining

By Pier Luca Lanzi. Slides for the 2016/2017 edition of the Data Mining and Text Mining Course at the Politecnico di Milano. The course is also part of the joint program with the University of Illinois at Chicago.

DMTM Lecture 16 Association rules

By Pier Luca Lanzi. Slides for the 2016/2017 edition of the Data Mining and Text Mining Course at the Politecnico di Milano. The course is also part of the joint program with the University of Illinois at Chicago.

DMTM Lecture 15 Clustering evaluation

By Pier Luca Lanzi. Slides for the 2016/2017 edition of the Data Mining and Text Mining Course at the Politecnico di Milano. The course is also part of the joint program with the University of Illinois at Chicago.

DMTM Lecture 14 Density based clustering

By Pier Luca Lanzi. Slides for the 2016/2017 edition of the Data Mining and Text Mining Course at the Politecnico di Milano. The course is also part of the joint program with the University of Illinois at Chicago.

DMTM Lecture 13 Representative based clustering

By Pier Luca Lanzi. Slides for the 2016/2017 edition of the Data Mining and Text Mining Course at the Politecnico di Milano. The course is also part of the joint program with the University of Illinois at Chicago.

DMTM Lecture 12 Hierarchical clustering

By Pier Luca Lanzi. Slides for the 2016/2017 edition of the Data Mining and Text Mining Course at the Politecnico di Milano. The course is also part of the joint program with the University of Illinois at Chicago.

DMTM Lecture 11 Clustering

By Pier Luca Lanzi. Slides for the 2016/2017 edition of the Data Mining and Text Mining Course at the Politecnico di Milano. The course is also part of the joint program with the University of Illinois at Chicago.

DMTM Lecture 10 Classification ensembles

By Pier Luca Lanzi. Slides for the 2016/2017 edition of the Data Mining and Text Mining Course at the Politecnico di Milano. The course is also part of the joint program with the University of Illinois at Chicago.

DMTM Lecture 09 Other classification methods

By Pier Luca Lanzi. This document discusses Naive Bayes classifiers and k-nearest neighbors (kNN) algorithms. It begins with an overview of Naive Bayes, including its strong assumption that attributes are independent of one another given the class. Several examples are provided to illustrate Naive Bayes classification. The document then covers kNN, explaining that it is an instance-based learning method that classifies new examples based on their similarity to training examples. Parameters such as the number of neighbors k and the distance metric are discussed.

DMTM Lecture 08 Classification rules

By Pier Luca Lanzi. Slides for the 2016/2017 edition of the Data Mining and Text Mining Course at the Politecnico di Milano. The course is also part of the joint program with the University of Illinois at Chicago.
