
2014 sept 4_hadoop_security

- 1. Securing Hadoop: Hadoop Security Demystified… and then made more confusing. Presenter: Adam Muise. Content: Balaji Ganesan, Adam Muise. Page 1 © Hortonworks Inc. 2014

- 2. What do we mean by Security? Say you have a house guest…
  - Authentication: who gets in the door
  - Authorization: how far they are allowed into the house and which rooms they may enter
  - Auditing: follow them around
  - Encryption: when all else fails, lock it up

- 3. Insecurity – Not just for Teenagers
  - Security is really about risk mitigation
  - No perfect solution exists unless you locate your datacenter in the hull of the Titanic and cut all communications
  - The risks are:
    - Inappropriate access to data by internal resources
    - External data theft
    - Service outages
    - No knowledge of theft or inappropriate access
  - Hadoop’s value to a business is that it centralizes their data, which can make leaks more detrimental than a DDoS or stolen laptops

- 4. Attention to Hadoop security on the rise…
  - As Hadoop adoption grows and more sensitive production data goes into clusters, more attention is being paid to security
  - Intel/Cloudera working on Project Rhino
  - Hortonworks introduces Apache Knox
  - Cloudera buys Gazzang
  - Hortonworks buys XASecure and turns it into Apache Argus
  - HBase gets cell level security
  - … the list goes on

- 5. Watch out for those malicious attacks…

- 6. Layers Of Hadoop Security
  - Perimeter Level Security
    - Network Security (i.e. Firewalls)
    - Apache Knox (i.e. Gateways)
  - Authentication
    - Kerberos
    - Delegation Tokens
  - Authorization
    - Argus Security Policies
  - OS Security
    - File Permissions
    - Process Isolation
  - Data Protection
    - Transport
    - Storage
    - Access

- 7. Typical Hadoop Security: Vanilla Hadoop

- 8. Hadoop out of the box
  - While a lot of security is built into Hadoop, not much of it is turned on out of the box
  - Without strong authentication, anyone with sufficient access to the underlying OS can impersonate users
  - Often paired with gateway nodes that provide stronger access restrictions
  - HDFS/YARN/Hive
    - Authentication: derived from OS users local to the box the task/request is submitted from
    - Authorization: dependent on each project/service

- 9. Typical Flow – Hive Access (diagram: Beeline Client, HiveServer2, HDFS holding A, B, C)

- 10. Typical Hadoop Security: Strong Authentication through Kerberos

- 11. Kerberos Primer (diagram: Client with its Kerberos ticket cache, KDC, NN, DN)
  1. kinit: log in and get a Ticket Granting Ticket (TGT)
  2. Client stores the TGT in its ticket cache
  3. Get a NameNode Service Ticket (NN-ST)
  4. Client stores the NN-ST in its ticket cache
  5. Read/write a file given the NN-ST and file name; returns block locations, block IDs and Block Access Tokens if access is permitted
  6. Read/write a block given the Block Access Token and block ID
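
The six steps of the primer can be traced in a toy model. This is only a sketch of the message flow: `ToyKDC`, `ToyNameNode`, `ToyDataNode` and `read_file` are hypothetical names, and the "tickets" are plain tuples checked against in-memory sets, whereas real Kerberos tickets and Block Access Tokens are cryptographically protected and verified by each service with its own keys.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class ToyKDC:
    """Hypothetical stand-in for a KDC (no real cryptography)."""
    passwords: dict
    issued: set = field(default_factory=set)

    def login(self, user, password):
        # Step 1: kinit, exchange credentials for a Ticket Granting Ticket.
        if self.passwords.get(user) != password:
            raise PermissionError("bad credentials")
        return self._issue("TGT", user, "krbtgt")

    def service_ticket(self, tgt, service):
        # Step 3: present the TGT to obtain a ticket for one service.
        if tgt not in self.issued or tgt[0] != "TGT":
            raise PermissionError("invalid TGT")
        return self._issue("ST", tgt[1], service)

    def _issue(self, kind, user, service):
        ticket = (kind, user, service, secrets.token_hex(8))
        self.issued.add(ticket)
        return ticket


@dataclass
class ToyNameNode:
    kdc: ToyKDC
    readers: dict  # path -> set of users allowed to read
    blocks: dict   # path -> list of block IDs
    tokens: set = field(default_factory=set)

    def open(self, ticket, path):
        # Step 5: check the NN service ticket, then authorize the user.
        # (Toy shortcut: asks the KDC's set instead of verifying with a key.)
        kind, user, service, _ = ticket
        if ticket not in self.kdc.issued or (kind, service) != ("ST", "nn"):
            raise PermissionError("invalid service ticket")
        if user not in self.readers.get(path, set()):
            raise PermissionError("access denied")
        grants = [(block_id, secrets.token_hex(8)) for block_id in self.blocks[path]]
        self.tokens.update(grants)
        return grants


@dataclass
class ToyDataNode:
    namenode: ToyNameNode
    data: dict  # block ID -> bytes

    def read_block(self, block_id, token):
        # Step 6: the DataNode only looks at the Block Access Token.
        if (block_id, token) not in self.namenode.tokens:
            raise PermissionError("invalid block access token")
        return self.data[block_id]


def read_file(kdc, namenode, datanode, user, password, path):
    cache = {}                                             # client's ticket cache
    cache["tgt"] = kdc.login(user, password)               # steps 1-2
    cache["nn"] = kdc.service_ticket(cache["tgt"], "nn")   # steps 3-4
    grants = namenode.open(cache["nn"], path)              # step 5
    return b"".join(datanode.read_block(b, t) for b, t in grants)  # step 6
```

Note that the DataNode never sees the user's password or TGT; it only needs a token the NameNode handed out, which is the property the primer is illustrating.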

- 12. Kerberos Summary
  - Provides strong authentication
  - Establishes identity for users, services and hosts
  - Prevents unauthorized impersonation of accounts
  - Supports token delegation model
  - Works with existing directory services
  - Basis for authorization

- 13. Hadoop Authentication
  - Users authenticate with the services
    - CLI & API: Kerberos kinit or keytab
    - Web UIs: Kerberos SPNego or custom plugin (e.g. SSO)
  - Services authenticate with each other
    - Prepopulated Kerberos keytab
    - e.g. DN->NN, NM->RM
  - Services propagate authenticated user identity
    - Authenticated trusted proxy service
    - e.g. Oozie->RM, Knox->WebHCat
  - Job tasks present delegated user’s identity/access
    - Delegation tokens
    - e.g. Job task -> NN, Job task -> JT/RM
  - Strong authentication is the basis for authorization
  - Diagram labels: Client to Name Node, (User) Kerberos or Custom; Data Node to Name Node, (Service) Kerberos; Oozie to Job Tracker, (Service) Kerberos + (User) doas; Task to Name Node, (User) Delegation Token

- 14. User Management
  - Most implementations use LDAP for user info
    - LDAP guarantees that user information is consistent across the cluster
    - An easy way to manage users & groups
    - The standard user to group mapping comes from the OS on the NameNode
  - Kerberos provides authentication
    - PAM can automatically log user into Kerberos

- 15. Kerberos + Active Directory (diagram: Client, Hadoop Cluster, KDC, AD / LDAP, Cross Realm Trust)
  - Users: [email protected]
  - Hosts: [email protected]
  - Services: hdfs/[email protected]
  - User Store: use existing directory tools to manage users
  - Authentication: use Kerberos tools to manage host + service principals

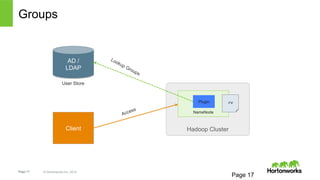

- 16. Groups
  - Define groups for each required role
  - Hadoop has a pluggable interface
    - Mapping from user to group is not stored within Hadoop
  - Defaults to the OS information on the master node
    - Typically driven from LDAP on Linux
    - Existing plugins
      - ShellBasedUnixGroupsMapping: /bin/id
      - JniBasedUnixGroupsMapping: system call
      - LdapGroupsMapping: LDAP call
      - CompositeGroupsMapping: combines Unix & LDAP group mapping
  - Strong authentication and role-based groups provide the protections that enable shared clusters
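
The idea of a composite group mapping, where several lookup plugins are consulted in turn, can be sketched in a few lines. This is not Hadoop's implementation: `static_provider` and `composite_groups` are hypothetical names, the providers are plain dictionaries standing in for the Unix and LDAP lookups, and the `combined` switch is an assumption about how one might choose between "first provider that answers" and "merge all answers".

```python
from typing import Callable, Dict, List

# A provider maps a user name to a list of group names.
GroupProvider = Callable[[str], List[str]]


def static_provider(table: Dict[str, List[str]]) -> GroupProvider:
    """Hypothetical provider backed by a dict (stand-in for Unix or LDAP)."""
    return lambda user: list(table.get(user, []))


def composite_groups(user: str, providers: List[GroupProvider],
                     combined: bool = False) -> List[str]:
    """Ask each provider in order.

    combined=False: return the groups from the first provider that knows the user.
    combined=True:  merge the groups from every provider, keeping first-seen order.
    """
    found: List[str] = []
    for provider in providers:
        groups = provider(user)
        if groups and not combined:
            return groups
        for group in groups:
            if group not in found:
                found.append(group)
    return found


if __name__ == "__main__":
    unix = static_provider({"alice": ["staff"]})
    ldap = static_provider({"alice": ["analysts"], "bob": ["etl"]})
    print(composite_groups("alice", [unix, ldap]))
    print(composite_groups("alice", [unix, ldap], combined=True))
```

Because the mapping lives outside Hadoop, swapping the provider list changes who belongs to which role without touching any data or policy.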

- 17. Groups (diagram: Client, Hadoop Cluster with NameNode and group-mapping Plugin, AD / LDAP User Store; label: rw)

- 18. Kerberos FAQ
  - Where do I install the KDC?
    - On a master type node
  - User provisioning
    - Hook up to corporate AD/LDAP to leverage existing user provisioning
  - Growing a cluster
    - Provision new services and nodes in the MIT KDC, copy keytabs to new nodes
  - Is Kerberos a SPOF?
    - Kerberos supports HA, and delegation tokens reduce the load on the KDC

- 19. Typical Flow – Authenticate through Kerberos (diagram: Beeline Client, KDC, HiveServer2, HDFS holding A, B, C)
  - Client gets service ticket for Hive
  - Use Hive ST, submit query
  - Hive gets NameNode (NN) service ticket
  - Hive creates MapReduce job using NN ST

- 20. Typical Hadoop Security: Strong Authentication + Cross-cutting Authorization

- 21. Apache Argus (aka HDP Security) Capabilities: Hadoop and Argus
  - Authentication
    - Cross Platform Security: Kerberos, integration with AD
    - Gateway for REST APIs: Knox for HTTP, REST APIs
  - Role Based Authorizations
    - Fine grained access control: HDFS (Folder, File); Hive (Database, Table, Column, UDFs); HBase (Table, Column Family, Column)
    - Wildcard Resource Names: Yes
    - Permission Support: HDFS (Read, Write, Execute); Hive (Select, Update, Create, Drop, Alter, Index, Lock); HBase (Read, Write, Create)

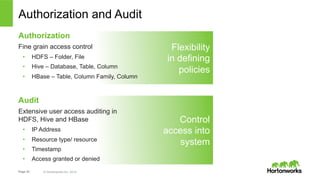

- 22. Authorization and Audit
  - Authorization: fine-grained access control
    - HDFS: Folder, File
    - Hive: Database, Table, Column
    - HBase: Table, Column Family, Column
  - Audit: extensive user access auditing in HDFS, Hive and HBase
    - IP Address
    - Resource type/resource
    - Timestamp
    - Access granted or denied
  - Callouts: Flexibility in defining policies; Control access into system
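
To make the pairing of fine-grained authorization and auditing concrete, here is a minimal sketch of a policy check that writes one audit record per decision. It is not the Argus API: `Policy`, `authorize` and the `hive:database/table/column` resource notation are all made up for illustration, and wildcard matching is delegated to Python's `fnmatch`. The audit record carries the fields the slide lists (IP address, resource, timestamp, granted or denied).

```python
import fnmatch
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Policy:
    resource: str           # wildcard pattern, e.g. "hive:sales/orders/*"
    groups: frozenset       # groups the policy applies to
    permissions: frozenset  # e.g. {"select", "update"}


def authorize(policies, audit_log, user, groups, ip, resource, permission):
    """Return True if any policy grants the permission; always append an audit record."""
    granted = any(
        fnmatch.fnmatchcase(resource, policy.resource)
        and permission in policy.permissions
        and bool(policy.groups & set(groups))
        for policy in policies
    )
    audit_log.append({
        "user": user,
        "ip": ip,
        "resource": resource,
        "permission": permission,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "granted": granted,
    })
    return granted


if __name__ == "__main__":
    policies = [Policy("hive:sales/orders/*", frozenset({"analysts"}), frozenset({"select"}))]
    log = []
    authorize(policies, log, "alice", ["analysts"], "10.0.0.5", "hive:sales/orders/amount", "select")
    authorize(policies, log, "alice", ["analysts"], "10.0.0.5", "hive:sales/orders/amount", "update")
    for record in log:
        print(record)
```

Denied requests are logged as well as granted ones, which is what addresses the "no knowledge of theft or inappropriate access" risk raised earlier in the deck.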

- 23. Central Security Administration: Apache Argus
  - Delivers a ‘single pane of glass’ for the security administrator
  - Centralizes administration of security policy
  - Ensures consistent coverage across the entire Hadoop stack

- 24. Set Up Authorization Policies (screenshot callouts: file level access control, flexible definition; control permissions)

- 25. Monitor through Auditing

- 26. Authorization and Auditing with Argus (architecture diagram)
  - Argus Administration Portal, Argus Policy Server, Argus Audit Server, RDBMS
  - Enterprise Users, Legacy Tools, Integration API
  - Hadoop components with an Argus Agent: HDFS, HBase, Hive Server2
  - Hadoop components marked Argus Agent* (future integration): Knox, Falcon, Storm
  - YARN: Data Operating System, running on the Hadoop distributed file system (HDFS)

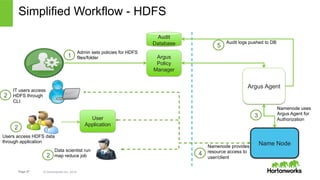

- 27. Simplified Workflow - HDFS (diagram: Argus Policy Manager, Argus Agent, Name Node, User Application, Audit Database; steps are numbered 1 to 5, with three entry points sharing step 2)
  - Admin sets policies for HDFS files/folders
  - Users access HDFS data through an application
  - Data scientist runs a MapReduce job
  - IT users access HDFS through the CLI
  - NameNode uses the Argus Agent for authorization
  - NameNode provides resource access to the user/client
  - Audit logs are pushed to the DB

- 28. Simplified Workflow - Hive (diagram: Argus Policy Manager, Argus Agent, Hive Server2, User Application, Audit Database; steps are numbered 1 to 5, with two entry points sharing step 2)
  - Admin sets policies for Hive databases/tables/columns
  - Users access Hive data using JDBC/ODBC
  - IT users access Hive via the beeline command tool
  - Hive authorizes with the Argus Agent
  - HiveServer2 provides data access to users
  - Audit logs are pushed to the DB

- 29. Simplified Workflow - HBase (diagram: Argus Policy Manager, Argus Agent, HBase Server, User Application, Audit Database; steps are numbered 1 to 5, with three entry points sharing step 2)
  - Admin sets policies for HBase tables/column families/columns
  - Users access HBase data using the Java API
  - Data scientist runs a MapReduce job
  - IT users access HBase via HBShell
  - HBase authorizes with the Argus Agent
  - HBase server provides data access to users
  - Audit logs are pushed to the DB

- 30. Typical Flow – Add Authorization through Argus (diagram: Beeline Client, KDC, HiveServer2 with Argus, HDFS holding A, B, C)
  - Client gets service ticket for Hive
  - Use Hive ST, submit query
  - Hive gets NameNode (NN) service ticket
  - Hive creates MapReduce job using NN ST

- 31. Typical Hadoop Security: Strong Authentication + Cross-cutting Authorization + Perimeter Security

- 32. What does Perimeter Security really mean? (diagram: User, REST API, Firewall, Gateway, REST API, Hadoop Services)
  - Firewall required at perimeter (today)
  - Knox Gateway controls all Hadoop REST API access through the firewall
  - Hadoop cluster mostly unaffected
  - Firewall only allows connections through specific ports from the Knox host

- 33. Why Knox?
  - Simplified Access
    - Kerberos encapsulation
    - Extends API reach
    - Single access point
    - Multi-cluster support
    - Single SSL certificate
  - Centralized Control
    - Central REST API auditing
    - Service-level authorization
    - Alternative to SSH “edge node”
  - Enterprise Integration
    - LDAP integration
    - Active Directory integration
    - SSO integration
    - Apache Shiro extensibility
    - Custom extensibility
  - Enhanced Security
    - Protect network details
    - Partial SSL for non-SSL services
    - WebApp vulnerability filter

- 34. Current Hadoop Client Model (diagram: User connects over SSH to an Edge Node, which talks to Hadoop)
  - FileSystem and MapReduce Java APIs
  - HDFS, Pig, Hive and Oozie clients (that wrap the Java APIs)
  - Typical use of APIs is via an “Edge Node” that is “inside” the cluster
  - Users SSH to the Edge Node and execute API commands from the shell

- 35. Hadoop REST APIs
  - Services and their APIs
    - WebHDFS: supports HDFS user operations including reading files, writing to files, making directories, changing permissions and renaming
    - WebHCat: job control for MapReduce, Pig and Hive jobs, and HCatalog DDL commands
    - Hive: Hive REST API operations
    - HBase: HBase REST API operations
    - Oozie: job submission and management, and Oozie administration
  - Useful for connecting to Hadoop from outside the cluster
  - Useful when more client language flexibility is required, i.e. a Java binding is not an option
  - Challenges
    - Client must have knowledge of cluster topology
    - Ports must be opened outside the cluster (in some cases, on every host)
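
The topology challenge shows up directly in the URLs a client has to build. The sketch below only constructs URLs and makes no network calls. The host names are placeholders, port 50070 (NameNode HTTP) and port 8443 (Knox) are common defaults assumed here, `sandbox` is borrowed from the topology file name used later in the deck, and the `/gateway/{cluster}/webhdfs/v1` layout should be checked against the Knox version in use, since the path layout has changed between releases.

```python
from urllib.parse import quote, urlencode


def webhdfs_url(namenode_host, path, op, port=50070, **params):
    """Direct WebHDFS call: the client must know which host is the NameNode."""
    query = urlencode({"op": op, **params})
    return f"http://{namenode_host}:{port}/webhdfs/v1{quote(path)}?{query}"


def knox_webhdfs_url(gateway_host, cluster, path, op, port=8443, **params):
    """Same operation through a gateway: one HTTPS endpoint, cluster chosen by name."""
    query = urlencode({"op": op, **params})
    return f"https://{gateway_host}:{port}/gateway/{cluster}/webhdfs/v1{quote(path)}?{query}"


if __name__ == "__main__":
    print(webhdfs_url("nn1.example.com", "/tmp/data", "LISTSTATUS"))
    print(knox_webhdfs_url("knox.example.com", "sandbox", "/tmp/data", "LISTSTATUS"))
```

With the gateway form, only the Knox host and port need to be reachable from outside, and the NameNode's address stays hidden from the client.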

- 36. Knox Deployment with Hadoop Cluster (diagram: Application Tier; DMZ with LB; Web Tier with Knox; Hadoop CLIs; switches connecting Master Nodes (NN, SNN) in Rack 1 and Slave Nodes (DN) in Rack 2 through Rack N)

- 37. Hadoop REST API Security: Drill-Down (diagram: REST Client; Firewalls around a DMZ containing an LB and the Knox Gateway (GW); Enterprise Identity Provider (LDAP/AD); Edge Node/Hadoop CLIs; Hadoop Cluster 1 and Hadoop Cluster 2, each with Masters and Slaves running NN, RM, Web, Oozie, HCat, DN, NM, HBase, HS2; link labels: HTTP, LDAP, RPC)

- 38. OpenLDAP Configuration. In sandbox.xml:

      <param>
        <name>main.ldapRealm</name>
        <value>org.apache.shiro.realm.ldap.JndiLdapRealm</value>
      </param>
      <param>
        <name>main.ldapRealm.userDnTemplate</name>
        <value>uid={0},ou=people,dc=hadoop,dc=apache,dc=org</value>
      </param>
      <param>
        <name>main.ldapRealm.contextFactory.url</name>
        <value>ldap://localhost:33389</value>
      </param>
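
The `userDnTemplate` value is a pattern in which `{0}` is replaced by the login name to form the LDAP bind DN. The sketch below reads the three parameters from the slide and expands the template. The wrapping `<provider>` element is added here only so the fragment parses as a single XML document, and `read_params` and `bind_dn` are hypothetical helper names, not part of Knox or Shiro.

```python
import xml.etree.ElementTree as ET

# The three <param> entries from the slide, wrapped in one root element for parsing.
FRAGMENT = """
<provider>
  <param>
    <name>main.ldapRealm</name>
    <value>org.apache.shiro.realm.ldap.JndiLdapRealm</value>
  </param>
  <param>
    <name>main.ldapRealm.userDnTemplate</name>
    <value>uid={0},ou=people,dc=hadoop,dc=apache,dc=org</value>
  </param>
  <param>
    <name>main.ldapRealm.contextFactory.url</name>
    <value>ldap://localhost:33389</value>
  </param>
</provider>
"""


def read_params(xml_text):
    """Collect <param> name/value pairs into a dict."""
    root = ET.fromstring(xml_text.strip())
    return {p.findtext("name"): p.findtext("value") for p in root.iter("param")}


def bind_dn(params, username):
    """Expand the {0} placeholder to get the DN used for the LDAP bind."""
    return params["main.ldapRealm.userDnTemplate"].replace("{0}", username)


if __name__ == "__main__":
    params = read_params(FRAGMENT)
    print(params["main.ldapRealm.contextFactory.url"])
    print(bind_dn(params, "guest"))
```

A login as `guest` therefore binds as `uid=guest,ou=people,dc=hadoop,dc=apache,dc=org` against the LDAP server at the configured URL.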

- 39. Service level authorization Configuration. In <cluster.xml>:

      <provider>
        <role>authorization</role>
        <name>AclsAuthz</name>
        <enabled>true</enabled>
        <param>
          <name>webhdfs.acl.mode</name>
          <value>OR</value>
        </param>
        <param>
          <name>webhdfs.acl</name>
          <!-- format: user(s);groups;ipaddress -->
          <value>guest;*;*</value>
        </param>
        <param>
          <name>webhcat.acl</name>
          <value>hdfs;admin;127.0.0.2,127.0.0.3</value>
        </param>
      </provider>
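
How a `users;groups;ipaddress` ACL might be evaluated can be sketched as follows. This is a simplified model and not Knox's code: `parse_acl` and `acl_allows` are hypothetical names, and two behaviours are assumptions to verify against the Knox documentation for your version, namely that the default mode is AND (all three parts must match) and that in OR mode a `*` entry does not count as a match unless all three parts are `*`.

```python
def parse_acl(acl):
    """Split 'users;groups;ips' into three sets of comma-separated entries."""
    users, groups, ips = (set(part.split(",")) for part in acl.split(";"))
    return users, groups, ips


def acl_allows(acl, mode, user, groups, ip):
    """Evaluate one service ACL for a request (simplified model, see assumptions)."""
    users, acl_groups, ips = parse_acl(acl)
    any_user, any_group, any_ip = "*" in users, "*" in acl_groups, "*" in ips
    user_ok = user in users
    group_ok = bool(acl_groups & set(groups))
    ip_ok = ip in ips
    if mode == "OR":
        if any_user and any_group and any_ip:
            return True  # '*;*;*' is open to everyone
        # Assumption: wildcards are ignored so that OR does not admit everyone.
        return user_ok or group_ok or ip_ok
    # AND mode: every part must match, and '*' matches anything.
    return (any_user or user_ok) and (any_group or group_ok) and (any_ip or ip_ok)


if __name__ == "__main__":
    # Values taken from the slide's webhdfs.acl and webhcat.acl parameters.
    print(acl_allows("guest;*;*", "OR", "guest", [], "10.1.1.1"))
    print(acl_allows("hdfs;admin;127.0.0.2,127.0.0.3", "AND", "hdfs", ["admin"], "127.0.0.2"))
```

Under these assumptions the WebHDFS ACL admits only the user `guest`, while the WebHCat ACL requires the user `hdfs`, membership in `admin`, and one of the two listed addresses all at once.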

- 40. Typical Flow – Firewall, Route through Knox Gateway (diagram: Beeline Client, Knox Gateway, KDC, HiveServer2 with Argus, HDFS holding A, B, C)
  - Original request with user id/password
  - Knox gets service ticket for Hive
  - Knox runs as proxy user using Hive ST
  - Use Hive ST, submit query
  - Hive gets NameNode (NN) service ticket
  - Hive creates MapReduce job using NN ST
  - Client gets query result

- 41. Optionally - Add Wire and File Encryption (diagram: Beeline Client, Knox Gateway, KDC, HiveServer2 with Argus, HDFS holding A, B, C; links labeled SSL and SASL)
  - Original request with user id/password
  - Knox gets service ticket for Hive
  - Knox runs as proxy user using Hive ST
  - Use Hive ST, submit query
  - Hive gets NameNode (NN) service ticket
  - Hive creates MapReduce job using NN ST
  - Client gets query result

- 42. Security Features: Hadoop with Argus
  - Auditing
    - Configurable audit: yes, auditing can be controlled through policy
    - Resource access auditing: user id, request type, repository, access resource, IP address, timestamp, access granted/denied
    - Admin auditing: changes to policies, login sessions and agent monitoring
  - Data Protection
    - Over the wire: SASL for RPC, SSL for MR shuffle, WebHDFS
    - Data at rest: LUKS for volume encryption, partners
  - Manage User/Group mapping: local, sync with LDAP/AD, sync with Unix
  - Delegated administration: delegate policy administration to groups or users

- 43. Data Protection

- 44. Data Protection: HDP allows you to apply data protection policy at three different layers across the Hadoop stack
  - Storage. What: encrypt data while it is at rest. How: 3rd party, future Hadoop improvements
  - Transmission. What: encrypt data as it moves. How: already in Hadoop
  - Upon Access. What: apply restrictions when accessed. How: 3rd party

- 45. Points of Communication (diagram: Client and Hadoop Cluster nodes; numbered channels 1 to 4 labeled WebHDFS, DataTransferProtocol, RPC, JDBC/ODBC and M/R Shuffle)

- 46. Data Transmission Protection in HDP 2.1
  - WebHDFS
    - Provides read/write access to HDFS
    - Optionally enable HTTPS
    - Authenticated using SPNEGO (Kerberos for HTTP) filter
    - SSL based wire encryption
  - RPC
    - Communications between NNs, DNs, etc. and clients
    - SASL based wire encryption
    - DTP encryption with SASL
  - JDBC/ODBC
    - SSL based wire encryption
    - SASL based encryption also available
  - Shuffle
    - Mapper to Reducer over HTTP(S) with SSL
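
Each channel above is switched on through configuration. The sketch below renders Hadoop-style `<configuration>` XML for one commonly used property per channel. The property names and values are given from memory for Hadoop 2.x and Hive of that era, so treat them as assumptions and confirm them against the documentation for your distribution. Keystores, truststores and certificates, which SSL also requires, are left out, and `render` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Assumed property names, grouped by the file they normally live in.
WIRE_ENCRYPTION = {
    "core-site.xml": {
        "hadoop.rpc.protection": "privacy",       # SASL encryption for RPC
    },
    "hdfs-site.xml": {
        "dfs.encrypt.data.transfer": "true",      # DataTransferProtocol (DTP)
        "dfs.http.policy": "HTTPS_ONLY",          # WebHDFS over HTTPS
    },
    "mapred-site.xml": {
        "mapreduce.shuffle.ssl.enabled": "true",  # mapper-to-reducer shuffle
    },
    "hive-site.xml": {
        "hive.server2.use.SSL": "true",           # JDBC/ODBC to HiveServer2
    },
}


def render(properties):
    """Render a dict of properties as a Hadoop <configuration> document."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    for filename, properties in WIRE_ENCRYPTION.items():
        print(filename)
        print(render(properties))
```

Keeping the settings in one table like this makes it easier to review which channels are actually encrypted before the files are pushed to the cluster.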

- 47. Data Storage Protection
  - Encrypt at the physical file system level (e.g. dm-crypt)
  - Encrypt via custom HDFS “compression” codec
  - Encrypt at application level (including security service/device)
  - Diagram: an ETL App uses a Security Service (e.g. Voltage) to ENCRYPT and DECRYPT values (ABC, DEF, 1a3d) stored in HDFS

- 48. Current Open Source Initiatives
  - HDFS Encryption
    - Transparent encryption of data at rest in HDFS via encryption zones; being worked on in the community
    - Dependency on Key Management Server and Keyshell
  - Hive Column Level Encryption
  - HBase Column Level Encryption
    - Transparent column encryption, needs more testing/validation
  - Key Management Server
  - Key Provider API
  - Command line key operations

- 49. And remember….