Ad

2018/12/28 LiDARで取得した道路上点群に対するsemantic segmentation

- 2. 自己紹介 2 株式会社ビジョン&ITラボ 代表取締役 皆川 卓也(みながわ たくや) 「コンピュータビジョン勉強会@関東」主催 博士(工学) 略歴: 1999-2003年 日本HP(後にアジレント・テクノロジーへ分社)にて、ITエンジニアとしてシステム構築、プリ セールス、プロジェクトマネジメント、サポート等の業務に従事 2004-2009年 コンピュータビジョンを用いたシステム/アプリ/サービス開発等に従事 2007-2010年 慶應義塾大学大学院 後期博士課程にて、コンピュータビジョンを専攻 単位取得退学後、博士号取得(2014年) 2009年-現在 フリーランスとして、コンピュータビジョンのコンサル/研究/開発等に従事(2018年法人化) お問い合わせ:http://visitlab.jp

- 3. 本資料について 本資料は主にLiDARから取得した道路上の点群データ に対しSemantic Segmentationを行う技術について行いま した。 点群に対するSemantic Segmentationは歴史も古く、論文 数もとても多いため、主に以下の観点で選定した研究を 紹介します。 ここ数年の比較的新しいアプローチ 屋外や道路環境を想定したもの 有名会議/論文誌で発表されたもの 引用数が多いもの ベンチマークで好成績

- 4. 点群に対するSemantic Segmentation 今回調査した内容: データセット LiDARで取得したデータに対するSemantic Segmentation 点群に対する畳み込みニューラルネットワーク 汎用的に使うことを目的にしてますが、主要なものと屋外を対 象としたものを紹介

- 5. 関連資料 LiDAR-Camera Fusionによる道路上の物体検出サーベイ https://www.slideshare.net/takmin/object-detection-with- lidarcamera-fusion-survey-updated LiDARによる道路上の物体検出サーベイ https://www.slideshare.net/takmin/20181130-lidar-object- detection-survey

- 6. データセット Oakland 3D Point Cloud Dataset Munoz, D., Bagnell, J.A.,Vandapel, N., & Hebert, M. (2009). Contextual Classification with Functional Max-Margin Markov Networks. In IEEE Conference on ComputerVision and Pattern Recognition. Paris-rue-Madame Serna,A., Marcotegui, B., Goulette, F., & Deschaud, J.-E. (2014). Paris- rue-Madame database : a 3D mobile laser scanner dataset for benchmarking urban detection , segmentation and classification methods. In International Conference on Pattern Recognition Applications and Methods (ICPRAM). IQmulus Bredif, M.,Vallet, B., Serna,A., Marcotegui, B., & Paparoditis, N. (2015). TERRAMOBILITA/IQMULUS URBAN POINT CLOUD ANALYSIS BENCHMARK. Computers and Graphics, 49, 126–133.

- 7. データセット Semantic 3D Hackel,T., Savinov, N., Ladicky, L.,Wegner, J. D., Schindler, K., & Pollefeys, M. (2017). SEMANTIC3D.NET:A New Large-Scale Point Cloud Classification. ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, IV-1-W1, 91– 98. Paris-Lille-3D Roynard, X., Deschaud, J., & Goulette, F. (2018). Paris-Lille-3D : a large and high-quality ground truth urban point cloud dataset for automatic segmentation and classification. In IEEE Conference on ComputerVision and Pattern Recognition Workshop

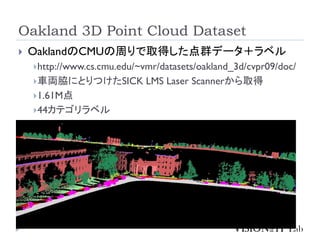

- 8. Oakland 3D Point Cloud Dataset OaklandのCMUの周りで取得した点群データ+ラベル http://www.cs.cmu.edu/~vmr/datasets/oakland_3d/cvpr09/doc/ 車両脇にとりつけたSICK LMS Laser Scannerから取得 1.61M点 44カテゴリラベル

- 9. Paris-rue-Madame パリのrue-Madameの約160mの区間で、 Mobile Laser System(MLS)により取得した点群およびラベル http://www.cmm.mines- paristech.fr/~serna/rueMadameDataset.html 20M点 17クラス Object label Object class

- 10. IQmulus 2013年ParisでMobile Laser System(MLS)により取得した 点群およびラベル http://data.ign.fr/benchmarks/UrbanAnalysis/ インスタンスレベルでセグメンテーション 12M点 22クラス

- 11. Semantic 3D 点群のSemantic Segmentationのためのベンチマーク http://www.semantic3d.net/ 固定LiDARで取得 評価用ツールも提供 1660M点 8クラス

- 12. Paris-Lille-3D Mobile Laser System (MLS)を用いてParisとLilleで取得した 点群+ラベルデータセット http://npm3d.fr/paris-lille-3d 全長1940m 143.1M点 50クラス

- 13. LiDARを用いたSemantic Segmentation [Hackel2016]Hackel,T.,Wegner, J. D., & Schindler, K. (2016). FAST SEMANTIC SEGMENTATION of 3D POINT CLOUDS with STRONGLYVARYING DENSITY. ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 3(July) [Thomas2018] Thomas, H., & Marcotegui, J. D. B. (2018). Semantic Classification of 3D Point Clouds with Multiscale Spherical Neighborhoods. International Conference on 3DVision (3DV). [Tchapmi2017]Tchapmi, L. P., Choy, C. B.,Armeni, I., Gwak, J., & Savarese, S. (2017). SEGCloud : Semantic Segmentation of 3D Point Clouds. In International Conference of 3DVision (3DV). [Dewan2017] Dewan,A., Oliveira, G. L., & Burgard,W. (2017). Deep Semantic Classification for 3D LiDAR Data. In International Conference on Intelligent Robots and Systems. [Boulch2017]Boulch,A., Saux, B. Le, & Audebert, N. (2017). Unstructured point cloud semantic labeling using deep segmentation networks. In EurographicsWorkshop on 3D Object Retrieval.

- 14. LiDARを用いたSemantic Segmentation [Roynard2018] Roynard, X., Deschaud, J., Goulette, F., Roynard, X., Deschaud, J., Goulette, F., … Goulette, F. (2018). Classification of Point Cloud Scenes with MultiscaleVoxel Deep Network. ArXiv, 1804.03583. [Landrieu2018]Landrieu, L., & Simonovsky, M. (2018). Large-scale Point Cloud Semantic Segmentation with Superpoint Graphs. IEEE Conference on ComputerVision and Pattern Recognition. [Wu2018]Wu, B.,Wan,A.,Yue, X., & Keutzer, K. (2018). SqueezeSeg: Convolutional Neural Nets with Recurrent CRF for Real-Time Road- Object Segmentation from 3D LiDAR Point Cloud. IEEE International Conference on Robotics and Automation (ICRA). [Wu2018_2] Wu, B., Zhou, X., Zhao, S.,Yue, X., Keutzer, K., & Berkeley, U. C. (2018). SqueezeSegV2 : Improved Model Structure and Unsupervised Domain Adaptation for Road-Object Segmentation from a LiDAR Point Cloud. [Ye2018]Ye, X., Li, J., Du, L., & Zhang, X. (2018). 3D Recurrent Neural Networks with Context Fusion for Point Cloud Semantic Segmentation. In European Conference on ComputerVision.

- 15. [Hackel2016]TMLC-MSR (1/2) 点群をマルチスケール化し、各スケールで近傍点を設定することで、 密度の異なる点群を高速に処理 1. 点群をマルチスケールでVoxel Filter 2. 各スケールで各点のk近傍(k=10程度)と、その固有値/固有ベクトル 算出 3. 各スケールで特徴量(下表+SHOT/3D Shape Context)を算出 4. Random Forestで各点を識別 SHOT / 3D Shape Context

- 19. [Tchapmi2017]SEGCloud (1/3) Fully Convolutional Neural Networkによる推定能力に、 Trilinear Interpolation(TI)とCRFを組み合わせ、細部での 性能向上 Voxel化した点群に対し3D FCNNでラベル割り当て TIでVoxelについたラベルを点群へ変換し、3D CRFで最終ラベ ルを調整

- 20. [Tchapmi2017]SEGCloud (2/3) 3D FCNN 点群をVoxel化し、OccupancyチャネルとRGBやIntensityなどのチャネ ルに対して3次元畳み込み Trilinear Interpolation 各点のラベルを周囲の8近 傍ボクセルのラベルスコア の重み付き和で決定

- 22. [Dewan2017]Deep Semantic Classification for LiDAR Data (1/4) 点群をMovable, Non-movable, Dynamic(今動いている)の 3タイプにラベル付け 点群を3チャネルの画像(デプス、高さ、輝度)へ投影し、 CNN(Fast-Net)でObjectnessを判別 2枚の点群からRigid flowを用いて、点ごとの動き(6自由度)を 推定 Objectnessと点の動きをもとにBayes Filterでラベル推定

- 23. [Dewan2017]Deep Semantic Classification for LiDAR Data (2/4) Fast-Net Oliveira, G. L., Burgard,W., & Brox,T. (2016). Efficient Deep Models for Monocular Road Segmentation. In International Conference on Intelligent Robots and Systems. Rigid Flow Dewan,A., Caselitz,T.,Tipaldi, G. D., & Burgard,W. (2016). Rigid Scene Flow for 3D LiDAR Scans. In International Conference on Intelligent Robots and Systems. 2つのPoint Cloud間で以下の𝜙を最大化するように各点の6自 由度の動き𝝉𝑖を算出 近傍点の動きの差を小さく 2つの点群の対応点の 特徴が近くなるように

- 24. [Dewan2017]Deep Semantic Classification for LiDAR Data (3/4) Bayes Filter 時刻𝑡において、各点がラベル𝑥𝑡 = ሼ ሽ dynamic, movable, non − movable をとる確率分布を求める 動き Objectness物体かどうかラベル それぞれモデル化(元論文参照) 前フレームの情報を伝播させることで逐次的に計算可能

- 25. [Dewan2017]Deep Semantic Classification for LiDAR Data (4/4) KITTI 3D Object Detection Benchmark 物体ラベルからMovableとNon-Movableラベルを取得 点群にMovable、Non-Movable、Dynamicラベルを付与したデータセット Ayush Dewan,Tim Caselitz, Gian Diego Tipaldi, and Wolfram Burgard.Motion-based detection and tracking in 3d lidar scans. In IEEE International Conference on Robotics and Automation (ICRA), 2016.

- 26. [Boulch2017]SnapNet (1/2) 点群を複数の画像に落とし込み、CNNによってセグメン テーションする手法 1. 点群をメッシュ化 2. ランダムにカメラ位置と向きを複数設定し、RGB画像とデプ スやノイズ、法線情報などをもとにしたComposite画像を生 成 3. 複数画像に対してSegnetやUnetなどをもとにSemantic Segmentation 4. Segmentationした結果を点群に戻す

- 28. [Roynard2018]Multiscale Voxel Deep Network (1/2) ラベルごとの点の数の不均衡を是正することで精度向上 各エポックごとに、各クラスからN個の点をランダムサンプリングして、 シャッフル サンプリングした点を中心にその周辺をマルチスケールでボクセル グリッド化し、畳み込み

- 30. [Landrieu2018]SPGraph (1/4) 巨大な点群に対し、Superpoint graph (SPG)と呼ばれる構造を 用いて効率的にSemantic Segmentation 1. 点群同士をHand-craftedな特徴を用いてSegmentation (Superpoint) 2. Superpoint間のエッジを生成(点群のVoronoi隣接グラフから) 3. 各Superpointに対しPointNetでラベルを付与 4. Gated Recurrent Unit (GRU)を用いて、グラフ間でメッセージを伝 播することで、コンテクストを考慮した最終的なラベルを算出

- 31. [Landrieu2018]SPGraph (2/4) Superpointの作成 1. 各点から10近傍を結んでグラフを作成 2. 各点からHand-craftedな特徴(Linearity, Planarity, scattering + vertical feature, elevation)を算出 3. 以下の式を𝑙0-cut pursuit algorithmで最小化することで、グ ラフを切断し、Superpointとその特徴を作成 argmin 𝑔∈ℝ 𝑑𝑔 𝑖∈𝐶 𝑔𝑖 − 𝑓𝑖 2 + 𝜇 (𝑖,𝑗)∈𝐸 𝑛𝑛 𝑤𝑖,𝑗 𝑔𝑖 − 𝑔𝑗 ≠ 0 Superedgeの作成 入力点群からVoronoi隣接グラフを作り、もし2つのSuperpoint 間に点同士のエッジが存在するなら、そのSuperedgeを作成 Superppoint間のエッジに13次元特徴量 Superpoint特徴 点の特徴 エッジの距離に 反比例した重み 隣り合うノードが 同じ特徴なら0

- 32. [Landrieu2018]SPGraph (3/4) Superedgeの特徴量 GRU 隣接ノードとエッジの特徴量からメッセージを生成 隣接ノードからのメッセージと現ノードの特徴量から、入力 ゲート、更新ゲート、忘却ゲートなどを使用してSuperpointの特 k超量を更新 ラベルの予測は過去のすべてのSuperpointの特徴量をもとに 行う

- 34. [Wu2018]SqueezeSeg (1/3) End-to-Endに学習可能な高速かつ高精度なネットワーク LiDARデータをシリンダ上に投影し、画像(x,y,z,intensity,rangeの5 チャネル)として扱う 画像をSqueezeNetで解析することにより、精度を維持しつつ高速処 理 CRF as RNNを用いてSegmentationの精度を向上

- 35. [Wu2018]SqueezeSeg (2/3) FireModuleおよびFireDeconvモジュールで1x1 Convを使 用してチャネル数と3x3 kernelの数を減らすことで高速に 畳み込み(FireDeconvモジュールでアップサンプリング) RNNでデータ項を予測ラベル、平滑化項を周辺との特徴 量差分とした平均場近似を算出し、ラベル修正 ソースコード https://github.com/BichenWuUCB/SqueezeSeg

- 36. [Wu2018]SqueezeSeg (3/3) 実験 KITTI 3D Object Detection DatasetからSegmentaion用データ セットを作成 Titan X GPUでCRFありで13.5ms、なしで8.7ms

- 37. [Wu2018_2]SqueezeSeg V2 (1/4) SqueezeSegを以下の改良により6.0-8.6%の精度向上 Context Aggregation Module (CAM)でDropout Noiseに頑健 に Focal Loss、Batch Normalization、LiDAR mask channelで精度 向上 ゲーム(CG)動画からのDomain Adaptation

- 38. [Wu2018_2]SqueezeSeg V2 (2/4) Context Aggregation Module (CAM) LiDARで取得した点群にはセンサーのレンジや鏡、ジッタなどのノイ ズが多い(Dropout Noise) 大きいサイズのMax Poolingを使用して穴埋め Focal Loss 学習データの不均衡(ほとんど点が背景)を是正するために、Cross Entropy Lossから変更 𝐹𝐿 𝑝𝑡 = − 1 − 𝑝𝑡 𝛾 log 𝑝𝑡 Batch Normalizationを各畳み込み層に挿入 データの欠損した領域のマスク(LiDAR Mask)を入力チャネル に追加 Context Aggregation Module

- 39. [Wu2018_2]SqueezeSeg V2 (3/4) Domain Adaptation CGではIntensityのデータが ないため、x、y、z、depthから 推定するネットワークを Unlabelな実データで学習し、 CGデータで推論(a) CG DataにIntensityを加えて Focal LossにGeodesic Loss (実データバッチとCGバッチ との出力分布の距離)を加え て学習(b) 各層の偏りをなくすため、 Unlabelな実データを入力とし、 下の階層から平均0分散1と なるように Batch Normalizationのパラメータを 更新していく(c)

- 40. [Wu2018_2]SqueezeSeg V2 (4/4) KITTIから作成したデータセットを用いて評価

- 41. [Ye2018]3D RNN with Context Fusion (1/4) end-to-endで局所的な空間構造および大局的なコンテクスト両 方を考慮したSemantic Segmentationを学習 1. 空間内を床面と平行にブロック(マルチスケール)へ分割 2. Pointwise Pyramid Poolingで、MLPで求めた点の特徴を統合 してブロックの特徴を算出し、点とブロックの特徴を結合 3. Two-direction RNNによって空間全体のコンテクストを学習/ 認識 4. 2と3それぞれの特徴を統合し、各点のラベルを算出

- 42. [Ye2018]3D RNN with Context Fusion (2/4) Pointwise Pyramid Pooling PointNetと同様にMLPで各点ごとに特徴量を取得済み 各点を中心にマルチスケールにPoolingして統合 近傍計算をマルチサイズのCuboidを用いて近似 スケールに合わせた数の点をCuboid内でランダムサンプリン グしてPooling

- 43. [Ye2018]3D RNN with Context Fusion (3/4) Two-Direction RNN 点ごとにMLPで求めた特徴と、Pointwise Pyramid Pooling で求めた特徴を結合したものを入力 ブロック単位で、まずはx方向のRNN、次はy方向のRNN を用いることで、コンテクストの情報を学習 RNNで取得した特徴量をもとにMLPでラベル付与

- 44. [Ye2018]3D RNN with Context Fusion (4/4) vKITTIでの結果 KITTIでの結果例

- 45. 点群に対するニューラルネットワーク ここ数年、点群をディープラーニングで扱うための研究が 増えています。これらの研究はほとんどのケースで Semantic Segmentationに応用可能です。 点群に対するDeep Learningサーベイはこちらが参考になり ます。 三次元点群を取り扱うニューラルネットワークサーベイ (東北大橋本研) https://www.slideshare.net/naoyachiba18/ss-120302579 CVPR2018の点群畳み込み研究まとめ+SPLATNet https://www.slideshare.net/takmin/cvpr2018pointcloudcnnsplatn et

- 46. 点群に対する畳み込みニューラルネットワーク ここでは重要、または屋外環境に適応した事例があるものに絞って紹 介します。 [Qi2017]Qi, C. R., Su, H., Mo, K., & Guibas, L. J. (2017). PointNet : Deep Learning on Point Sets for 3D Classification and Segmentation Big Data + Deep Representation Learning. IEEE Conference on ComputerVision and Pattern Recognition. [Qi2017_2]Qi, C. R.,Yi, L., Su, H., & Guibas, L. J. (2017). PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. Conference on Neural Information Processing Systems. [Tatarchenko2018]Tatarchenko, M., Park, J., Koltun,V., & Zhou, Q. (2018).Tangent convolutions for dense prediction in 3D. IEEE Conference on ComputerVision and Pattern Recognition. [Wang2018]Wang, S., Suo, S., Ma,W., & Urtasun, R. (2018). Deep Parametric Continuous Convolutional Neural Networks. IEEE Conference on ComputerVision and Pattern Recognition

- 47. [Qi2017]PointNet (1/2) 47 各点群の点を独立に畳み込む Global Max Poolingで点群全体の特徴量を取得 T-Netによって点群を回転させて正規化 コード: https://github.com/charlesq34/pointnet 各点を個別 に畳み込み アフィン変換 各点の特徴を統合

- 48. [Qi2017]PointNet (2/2) T-NetはLoss関数に以下のような正規化項を用いること で、直行行列に近くなるよう変換行列を学習 𝐿 𝑟𝑒𝑔 = 𝑰 − 𝑨𝑨 𝑇 𝐹 2 Segmentationは各点 ごとの特徴に、 Global Max Pooling によって取得した全 体特徴を結合した特 徴に対して行う

- 49. [Qi2017_2]PointNet++ (1/2) 49 PointNetを階層的に適用 点群をクラスタ分割→PointNet→クラスタ内で統合を繰り返す Segmentationの各点の特徴量は周辺の点から補間し、対応する set abstraction層の出力と結合 k近傍の特徴量に対し、距離に応じた重み付き和

- 50. [Qi2017_2]PointNet++ (2/2) 点群の分割 Farthest Point Samplingでサンプリングし、半径r内の点全部 (上限K)を1つのPoint Setとする(オーバーラップあり) PointNetに入力される各Point Set内の点は、セントロイドを原 点とする座標に変換されてから入力 Grouping 異なる密度の点群に対応するため、 異なるスケール(半径r)で取得した PointNet特徴を結合(MSG)、また は異なる層のPointNet特徴を結合 (MRG)する コード https://github.com/charlesq34/point net

- 51. [Tatarchenko2018]Tangent Convolutions (1/3) 51 巨大な点群に対しても適用可能 点群以外の3Dデータフォーマットにも適用可能 Tangent Convolutions 点pの接平面(tangent plane)に近傍点を投影 接平面を画像とみなし、画素を最近傍やGaussianで補間 入力を𝑁 × 𝐿 × 𝐶𝑖𝑛として1 × 𝐿のカーネルで畳み込む 𝑁:点の数、 𝐿:接平面を1次元にした長さ( = 𝑙2 )、 𝐶𝑖𝑛:チャネル数 近傍点の接平面への投影 投影接平面 画像 最近傍で補 間 混合ガウス 補間 Top-3近傍で 混合ガウス補 間

- 52. [Tatarchenko2018]Tangent Convolutions (2/3) 計算の効率化のため、以下を事前計算 接平面の各位置𝒖に対する近傍点𝑔𝑖 𝒖 を事前計算 接平面の各位置𝒖における値𝑆 𝒖 を決定するために、 𝑔𝑖 𝒖 の距 離に応じた重み𝑤𝑖 𝒖 Pooling 3D Gridに点群を分割し、Grid内でAverage Pooling ネットワーク構造

- 53. [Tatarchenko2018]Tangent Convolutions (3/3) ソースコード https://github.com/tatarchm/tangent_conv D: Depth H: Height N: Normal RGB: Red Green Blue

- 54. [Wang2018]Deep Parametric Continuous CNN カーネルを離散ではなく、パラメトリックな連続関数として表現 (ここではMulti-Layer Perceptron) 任意の構造の入力に対して、任意の個所の出力が計算可能 ℎ 𝑛 = 𝑚=−𝑀 𝑀 𝑓 𝑛 − 𝑚 𝑔[𝑚] ℎ 𝒙 = න −∞ ∞ 𝑓 𝒚 𝑔 𝒙 − 𝒚 ⅆ𝑦 ≈ 𝑖 𝑁 1 𝑁 𝑓 𝒚𝑖 𝑔(𝒙 − 𝒚𝑖) 連続カーネル離散カーネル

- 55. [Wang2018]Deep Parametric Continuous CNN Continuous Convolution Layer 各点の k近傍 k近傍 点座標 近傍点への カーネル重み 畳み込み Semantic Segmentation Network

- 56. [Wang2018]Deep Parametric Continuous CNN 車両の屋根に搭載したVelodyne-64で取得したデータセットに 対して評価

- 57. まとめ Point CloudのSemantic Segmentationはここでは紹介しき れないほど、まだまだ多くの手法があります。 点ごとに特徴量を求めるアプローチ、画像へ投影するア プローチ、Voxelに対して畳み込むアプローチ、RNNを用 いるアプローチについて紹介しました。 Point Cloudに対するCNNについても様々な手法が提案 されており、それらの大半はSemantic Segmentationに直 接応用可能です。 PointNetおよびPointNet++が現時点で最も多く利用されてい ます。 Uber/トロント大のグループがContinuous Convolutionを利用し た研究をいくつか発表しています

- 58. 付録 [Zehng2015]Zehng, S., Jayasumana, S., Romera-Paredes, B., Vineet,V., Su, Z., Du, D., …Torr, P. H. S. (2015). Conditional Random Fields as Recurrent Neural Networks. In IEEE Conference on ComputerVision and Pattern Recognition. [Iandola2016]Iandola, F. N., Han, S., Moskewicz, M.W., Ashraf, K., Dally,W. J., & Keutzer, K. (2016). SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size. ArXiv, 1602.07360.

- 59. [Zheng2015]CRF as RNN Fully Connected CRFの平均場近似による学習と等価なRNNを構築 特徴抽出部分にFCN(Fully Convolutional Networks)を用いることで、 end to endで誤差逆伝播法による学習が行えるネットワークを構築 平均場近似の一回のIterationを表すCNN ネットワークの全体像 ソースコード https://github.com/torrvisi on/crfasrnn (Caffe)

- 60. [Iandola2016]SqueezeNet AlexNetと同等の性能を1/510のパラメータサイズで実現 畳み込み処理を、1x1畳み込みでチャネル数を減らし (squeeze)、3x3と1x1畳み込みを併用する(expand)ことでカー ネルサイズを減らすことで、全体のパラメータ数削減 Downsample(PoolingやStride>1の畳み込み)を後ろの層で行 うことで精度向上

![LiDARを用いたSemantic Segmentation

[Hackel2016]Hackel,T.,Wegner, J. D., & Schindler, K. (2016). FAST

SEMANTIC SEGMENTATION of 3D POINT CLOUDS with

STRONGLYVARYING DENSITY. ISPRS Annals of the Photogrammetry,

Remote Sensing and Spatial Information Sciences, 3(July)

[Thomas2018] Thomas, H., & Marcotegui, J. D. B. (2018). Semantic

Classification of 3D Point Clouds with Multiscale Spherical

Neighborhoods. International Conference on 3DVision (3DV).

[Tchapmi2017]Tchapmi, L. P., Choy, C. B.,Armeni, I., Gwak, J., &

Savarese, S. (2017). SEGCloud : Semantic Segmentation of 3D Point

Clouds. In International Conference of 3DVision (3DV).

[Dewan2017] Dewan,A., Oliveira, G. L., & Burgard,W. (2017). Deep

Semantic Classification for 3D LiDAR Data. In International Conference

on Intelligent Robots and Systems.

[Boulch2017]Boulch,A., Saux, B. Le, & Audebert, N. (2017).

Unstructured point cloud semantic labeling using deep segmentation

networks. In EurographicsWorkshop on 3D Object Retrieval.](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-13-320.jpg)

![LiDARを用いたSemantic Segmentation

[Roynard2018] Roynard, X., Deschaud, J., Goulette, F., Roynard, X.,

Deschaud, J., Goulette, F., … Goulette, F. (2018). Classification of Point

Cloud Scenes with MultiscaleVoxel Deep Network. ArXiv, 1804.03583.

[Landrieu2018]Landrieu, L., & Simonovsky, M. (2018). Large-scale Point

Cloud Semantic Segmentation with Superpoint Graphs. IEEE Conference

on ComputerVision and Pattern Recognition.

[Wu2018]Wu, B.,Wan,A.,Yue, X., & Keutzer, K. (2018). SqueezeSeg:

Convolutional Neural Nets with Recurrent CRF for Real-Time Road-

Object Segmentation from 3D LiDAR Point Cloud. IEEE International

Conference on Robotics and Automation (ICRA).

[Wu2018_2] Wu, B., Zhou, X., Zhao, S.,Yue, X., Keutzer, K., & Berkeley, U. C.

(2018). SqueezeSegV2 : Improved Model Structure and Unsupervised

Domain Adaptation for Road-Object Segmentation from a LiDAR Point

Cloud.

[Ye2018]Ye, X., Li, J., Du, L., & Zhang, X. (2018). 3D Recurrent Neural

Networks with Context Fusion for Point Cloud Semantic Segmentation. In

European Conference on ComputerVision.](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-14-320.jpg)

![[Hackel2016]TMLC-MSR (1/2)

点群をマルチスケール化し、各スケールで近傍点を設定することで、

密度の異なる点群を高速に処理

1. 点群をマルチスケールでVoxel Filter

2. 各スケールで各点のk近傍(k=10程度)と、その固有値/固有ベクトル

算出

3. 各スケールで特徴量(下表+SHOT/3D Shape Context)を算出

4. Random Forestで各点を識別

SHOT / 3D Shape Context](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-15-320.jpg)

![[Hackel2016]TMLC-MSR (2/2)

Paris-Rue-CasseteおよびParis-Rue-Madameで評価](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-16-320.jpg)

![[Thomas2018]RF-MSSF (1/2)

TMLC-MSRではマルチスケールのk近傍を求めていたが、

それをk-NNの代わりにマルチスケールの球の中にあるk

個のサンプルを使用する形に変更

形状の特徴がより適切に取得できる](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-17-320.jpg)

![[Thomas2018]RF-MSSF (2/2)

評価結果](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-18-320.jpg)

![[Tchapmi2017]SEGCloud (1/3)

Fully Convolutional Neural Networkによる推定能力に、

Trilinear Interpolation(TI)とCRFを組み合わせ、細部での

性能向上

Voxel化した点群に対し3D FCNNでラベル割り当て

TIでVoxelについたラベルを点群へ変換し、3D CRFで最終ラベ

ルを調整](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-19-320.jpg)

![[Tchapmi2017]SEGCloud (2/3)

3D FCNN

点群をVoxel化し、OccupancyチャネルとRGBやIntensityなどのチャネ

ルに対して3次元畳み込み

Trilinear Interpolation

各点のラベルを周囲の8近

傍ボクセルのラベルスコア

の重み付き和で決定](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-20-320.jpg)

![[Tchapmi2017]SEGCloud (3/3)

Semantic3Dで評価](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-21-320.jpg)

![[Dewan2017]Deep Semantic Classification

for LiDAR Data (1/4)

点群をMovable, Non-movable, Dynamic(今動いている)の

3タイプにラベル付け

点群を3チャネルの画像(デプス、高さ、輝度)へ投影し、

CNN(Fast-Net)でObjectnessを判別

2枚の点群からRigid flowを用いて、点ごとの動き(6自由度)を

推定

Objectnessと点の動きをもとにBayes Filterでラベル推定](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-22-320.jpg)

![[Dewan2017]Deep Semantic Classification

for LiDAR Data (2/4)

Fast-Net

Oliveira, G. L., Burgard,W., & Brox,T. (2016). Efficient Deep

Models for Monocular Road Segmentation. In International

Conference on Intelligent Robots and Systems.

Rigid Flow

Dewan,A., Caselitz,T.,Tipaldi, G. D., & Burgard,W. (2016). Rigid

Scene Flow for 3D LiDAR Scans. In International Conference on

Intelligent Robots and Systems.

2つのPoint Cloud間で以下の𝜙を最大化するように各点の6自

由度の動き𝝉𝑖を算出

近傍点の動きの差を小さく

2つの点群の対応点の

特徴が近くなるように](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-23-320.jpg)

![[Dewan2017]Deep Semantic Classification

for LiDAR Data (3/4)

Bayes Filter

時刻𝑡において、各点がラベル𝑥𝑡 = ሼ

ሽ

dynamic, movable, non −

movable をとる確率分布を求める

動き Objectness物体かどうかラベル

それぞれモデル化(元論文参照)

前フレームの情報を伝播させることで逐次的に計算可能](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-24-320.jpg)

![[Dewan2017]Deep Semantic Classification

for LiDAR Data (4/4)

KITTI 3D Object Detection Benchmark

物体ラベルからMovableとNon-Movableラベルを取得

点群にMovable、Non-Movable、Dynamicラベルを付与したデータセット

Ayush Dewan,Tim Caselitz, Gian Diego Tipaldi, and Wolfram

Burgard.Motion-based detection and tracking in 3d lidar scans. In IEEE

International Conference on Robotics and Automation (ICRA), 2016.](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-25-320.jpg)

![[Boulch2017]SnapNet (1/2)

点群を複数の画像に落とし込み、CNNによってセグメン

テーションする手法

1. 点群をメッシュ化

2. ランダムにカメラ位置と向きを複数設定し、RGB画像とデプ

スやノイズ、法線情報などをもとにしたComposite画像を生

成

3. 複数画像に対してSegnetやUnetなどをもとにSemantic

Segmentation

4. Segmentationした結果を点群に戻す](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-26-320.jpg)

![[Boulch2017]SnapNet (2/2)](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-27-320.jpg)

![[Roynard2018]Multiscale Voxel Deep

Network (1/2)

ラベルごとの点の数の不均衡を是正することで精度向上

各エポックごとに、各クラスからN個の点をランダムサンプリングして、

シャッフル

サンプリングした点を中心にその周辺をマルチスケールでボクセル

グリッド化し、畳み込み](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-28-320.jpg)

![[Roynard2018]Multiscale Voxel Deep

Network (2/2)](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-29-320.jpg)

![[Landrieu2018]SPGraph (1/4)

巨大な点群に対し、Superpoint graph (SPG)と呼ばれる構造を

用いて効率的にSemantic Segmentation

1. 点群同士をHand-craftedな特徴を用いてSegmentation

(Superpoint)

2. Superpoint間のエッジを生成(点群のVoronoi隣接グラフから)

3. 各Superpointに対しPointNetでラベルを付与

4. Gated Recurrent Unit (GRU)を用いて、グラフ間でメッセージを伝

播することで、コンテクストを考慮した最終的なラベルを算出](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-30-320.jpg)

![[Landrieu2018]SPGraph (2/4)

Superpointの作成

1. 各点から10近傍を結んでグラフを作成

2. 各点からHand-craftedな特徴(Linearity, Planarity, scattering +

vertical feature, elevation)を算出

3. 以下の式を𝑙0-cut pursuit algorithmで最小化することで、グ

ラフを切断し、Superpointとその特徴を作成

argmin

𝑔∈ℝ 𝑑𝑔

𝑖∈𝐶

𝑔𝑖 − 𝑓𝑖

2

+ 𝜇

(𝑖,𝑗)∈𝐸 𝑛𝑛

𝑤𝑖,𝑗 𝑔𝑖 − 𝑔𝑗 ≠ 0

Superedgeの作成

入力点群からVoronoi隣接グラフを作り、もし2つのSuperpoint

間に点同士のエッジが存在するなら、そのSuperedgeを作成

Superppoint間のエッジに13次元特徴量

Superpoint特徴

点の特徴

エッジの距離に

反比例した重み

隣り合うノードが

同じ特徴なら0](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-31-320.jpg)

![[Landrieu2018]SPGraph (3/4)

Superedgeの特徴量

GRU

隣接ノードとエッジの特徴量からメッセージを生成

隣接ノードからのメッセージと現ノードの特徴量から、入力

ゲート、更新ゲート、忘却ゲートなどを使用してSuperpointの特

k超量を更新

ラベルの予測は過去のすべてのSuperpointの特徴量をもとに

行う](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-32-320.jpg)

![[Landrieu2018]SPGraph (4/4)](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-33-320.jpg)

![[Wu2018]SqueezeSeg (1/3)

End-to-Endに学習可能な高速かつ高精度なネットワーク

LiDARデータをシリンダ上に投影し、画像(x,y,z,intensity,rangeの5

チャネル)として扱う

画像をSqueezeNetで解析することにより、精度を維持しつつ高速処

理

CRF as RNNを用いてSegmentationの精度を向上](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-34-320.jpg)

![[Wu2018]SqueezeSeg (2/3)

FireModuleおよびFireDeconvモジュールで1x1 Convを使

用してチャネル数と3x3 kernelの数を減らすことで高速に

畳み込み(FireDeconvモジュールでアップサンプリング)

RNNでデータ項を予測ラベル、平滑化項を周辺との特徴

量差分とした平均場近似を算出し、ラベル修正

ソースコード

https://github.com/BichenWuUCB/SqueezeSeg](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-35-320.jpg)

![[Wu2018]SqueezeSeg (3/3)

実験

KITTI 3D Object Detection DatasetからSegmentaion用データ

セットを作成

Titan X GPUでCRFありで13.5ms、なしで8.7ms](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-36-320.jpg)

![[Wu2018_2]SqueezeSeg V2 (1/4)

SqueezeSegを以下の改良により6.0-8.6%の精度向上

Context Aggregation Module (CAM)でDropout Noiseに頑健

に

Focal Loss、Batch Normalization、LiDAR mask channelで精度

向上

ゲーム(CG)動画からのDomain Adaptation](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-37-320.jpg)

![[Wu2018_2]SqueezeSeg V2 (2/4)

Context Aggregation Module (CAM)

LiDARで取得した点群にはセンサーのレンジや鏡、ジッタなどのノイ

ズが多い(Dropout Noise)

大きいサイズのMax Poolingを使用して穴埋め

Focal Loss

学習データの不均衡(ほとんど点が背景)を是正するために、Cross

Entropy Lossから変更

𝐹𝐿 𝑝𝑡 = − 1 − 𝑝𝑡

𝛾

log 𝑝𝑡

Batch Normalizationを各畳み込み層に挿入

データの欠損した領域のマスク(LiDAR Mask)を入力チャネル

に追加

Context Aggregation Module](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-38-320.jpg)

![[Wu2018_2]SqueezeSeg V2 (3/4)

Domain Adaptation

CGではIntensityのデータが

ないため、x、y、z、depthから

推定するネットワークを

Unlabelな実データで学習し、

CGデータで推論(a)

CG DataにIntensityを加えて

Focal LossにGeodesic Loss

(実データバッチとCGバッチ

との出力分布の距離)を加え

て学習(b)

各層の偏りをなくすため、

Unlabelな実データを入力とし、

下の階層から平均0分散1と

なるように Batch

Normalizationのパラメータを

更新していく(c)](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-39-320.jpg)

![[Wu2018_2]SqueezeSeg V2 (4/4)

KITTIから作成したデータセットを用いて評価](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-40-320.jpg)

![[Ye2018]3D RNN with Context Fusion (1/4)

end-to-endで局所的な空間構造および大局的なコンテクスト両

方を考慮したSemantic Segmentationを学習

1. 空間内を床面と平行にブロック(マルチスケール)へ分割

2. Pointwise Pyramid Poolingで、MLPで求めた点の特徴を統合

してブロックの特徴を算出し、点とブロックの特徴を結合

3. Two-direction RNNによって空間全体のコンテクストを学習/

認識

4. 2と3それぞれの特徴を統合し、各点のラベルを算出](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-41-320.jpg)

![[Ye2018]3D RNN with Context Fusion (2/4)

Pointwise Pyramid Pooling

PointNetと同様にMLPで各点ごとに特徴量を取得済み

各点を中心にマルチスケールにPoolingして統合

近傍計算をマルチサイズのCuboidを用いて近似

スケールに合わせた数の点をCuboid内でランダムサンプリン

グしてPooling](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-42-320.jpg)

![[Ye2018]3D RNN with Context Fusion (3/4)

Two-Direction RNN

点ごとにMLPで求めた特徴と、Pointwise Pyramid Pooling

で求めた特徴を結合したものを入力

ブロック単位で、まずはx方向のRNN、次はy方向のRNN

を用いることで、コンテクストの情報を学習

RNNで取得した特徴量をもとにMLPでラベル付与](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-43-320.jpg)

![[Ye2018]3D RNN with Context Fusion (4/4)

vKITTIでの結果

KITTIでの結果例](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-44-320.jpg)

![点群に対する畳み込みニューラルネットワーク

ここでは重要、または屋外環境に適応した事例があるものに絞って紹

介します。

[Qi2017]Qi, C. R., Su, H., Mo, K., & Guibas, L. J. (2017). PointNet :

Deep Learning on Point Sets for 3D Classification and Segmentation

Big Data + Deep Representation Learning. IEEE Conference on

ComputerVision and Pattern Recognition.

[Qi2017_2]Qi, C. R.,Yi, L., Su, H., & Guibas, L. J. (2017). PointNet++:

Deep Hierarchical Feature Learning on Point Sets in a Metric Space.

Conference on Neural Information Processing Systems.

[Tatarchenko2018]Tatarchenko, M., Park, J., Koltun,V., & Zhou, Q.

(2018).Tangent convolutions for dense prediction in 3D. IEEE

Conference on ComputerVision and Pattern Recognition.

[Wang2018]Wang, S., Suo, S., Ma,W., & Urtasun, R. (2018). Deep

Parametric Continuous Convolutional Neural Networks. IEEE

Conference on ComputerVision and Pattern Recognition](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-46-320.jpg)

![[Qi2017]PointNet (1/2)

47

各点群の点を独立に畳み込む

Global Max Poolingで点群全体の特徴量を取得

T-Netによって点群を回転させて正規化

コード:

https://github.com/charlesq34/pointnet

各点を個別

に畳み込み

アフィン変換

各点の特徴を統合](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-47-320.jpg)

![[Qi2017]PointNet (2/2)

T-NetはLoss関数に以下のような正規化項を用いること

で、直行行列に近くなるよう変換行列を学習

𝐿 𝑟𝑒𝑔 = 𝑰 − 𝑨𝑨 𝑇

𝐹

2

Segmentationは各点

ごとの特徴に、

Global Max Pooling

によって取得した全

体特徴を結合した特

徴に対して行う](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-48-320.jpg)

![[Qi2017_2]PointNet++ (1/2)

49

PointNetを階層的に適用

点群をクラスタ分割→PointNet→クラスタ内で統合を繰り返す

Segmentationの各点の特徴量は周辺の点から補間し、対応する

set abstraction層の出力と結合

k近傍の特徴量に対し、距離に応じた重み付き和](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-49-320.jpg)

![[Qi2017_2]PointNet++ (2/2)

点群の分割

Farthest Point Samplingでサンプリングし、半径r内の点全部

(上限K)を1つのPoint Setとする(オーバーラップあり)

PointNetに入力される各Point Set内の点は、セントロイドを原

点とする座標に変換されてから入力

Grouping

異なる密度の点群に対応するため、

異なるスケール(半径r)で取得した

PointNet特徴を結合(MSG)、また

は異なる層のPointNet特徴を結合

(MRG)する

コード

https://github.com/charlesq34/point

net](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-50-320.jpg)

![[Tatarchenko2018]Tangent Convolutions

(1/3)

51

巨大な点群に対しても適用可能

点群以外の3Dデータフォーマットにも適用可能

Tangent Convolutions

点pの接平面(tangent plane)に近傍点を投影

接平面を画像とみなし、画素を最近傍やGaussianで補間

入力を𝑁 × 𝐿 × 𝐶𝑖𝑛として1 × 𝐿のカーネルで畳み込む

𝑁:点の数、 𝐿:接平面を1次元にした長さ( = 𝑙2 )、 𝐶𝑖𝑛:チャネル数

近傍点の接平面への投影

投影接平面

画像

最近傍で補

間

混合ガウス

補間

Top-3近傍で

混合ガウス補

間](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-51-320.jpg)

![[Tatarchenko2018]Tangent Convolutions

(2/3)

計算の効率化のため、以下を事前計算

接平面の各位置𝒖に対する近傍点𝑔𝑖 𝒖 を事前計算

接平面の各位置𝒖における値𝑆 𝒖 を決定するために、 𝑔𝑖 𝒖 の距

離に応じた重み𝑤𝑖 𝒖

Pooling

3D Gridに点群を分割し、Grid内でAverage Pooling

ネットワーク構造](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-52-320.jpg)

![[Tatarchenko2018]Tangent Convolutions

(3/3)

ソースコード

https://github.com/tatarchm/tangent_conv

D: Depth

H: Height

N: Normal

RGB: Red Green Blue](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-53-320.jpg)

![[Wang2018]Deep Parametric Continuous

CNN

カーネルを離散ではなく、パラメトリックな連続関数として表現

(ここではMulti-Layer Perceptron)

任意の構造の入力に対して、任意の個所の出力が計算可能

ℎ 𝑛 =

𝑚=−𝑀

𝑀

𝑓 𝑛 − 𝑚 𝑔[𝑚] ℎ 𝒙 = න

−∞

∞

𝑓 𝒚 𝑔 𝒙 − 𝒚 ⅆ𝑦 ≈

𝑖

𝑁

1

𝑁

𝑓 𝒚𝑖 𝑔(𝒙 − 𝒚𝑖)

連続カーネル離散カーネル](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-54-320.jpg)

![[Wang2018]Deep Parametric Continuous

CNN

Continuous Convolution Layer

各点の

k近傍

k近傍

点座標

近傍点への

カーネル重み

畳み込み

Semantic Segmentation Network](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-55-320.jpg)

![[Wang2018]Deep Parametric Continuous

CNN

車両の屋根に搭載したVelodyne-64で取得したデータセットに

対して評価](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-56-320.jpg)

![付録

[Zehng2015]Zehng, S., Jayasumana, S., Romera-Paredes, B.,

Vineet,V., Su, Z., Du, D., …Torr, P. H. S. (2015). Conditional

Random Fields as Recurrent Neural Networks. In IEEE

Conference on ComputerVision and Pattern Recognition.

[Iandola2016]Iandola, F. N., Han, S., Moskewicz, M.W.,

Ashraf, K., Dally,W. J., & Keutzer, K. (2016). SqueezeNet:

AlexNet-level accuracy with 50x fewer parameters and

<0.5MB model size. ArXiv, 1602.07360.](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-58-320.jpg)

![[Zheng2015]CRF as RNN

Fully Connected CRFの平均場近似による学習と等価なRNNを構築

特徴抽出部分にFCN(Fully Convolutional Networks)を用いることで、

end to endで誤差逆伝播法による学習が行えるネットワークを構築

平均場近似の一回のIterationを表すCNN

ネットワークの全体像

ソースコード

https://github.com/torrvisi

on/crfasrnn (Caffe)](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-59-320.jpg)

![[Iandola2016]SqueezeNet

AlexNetと同等の性能を1/510のパラメータサイズで実現

畳み込み処理を、1x1畳み込みでチャネル数を減らし

(squeeze)、3x3と1x1畳み込みを併用する(expand)ことでカー

ネルサイズを減らすことで、全体のパラメータ数削減

Downsample(PoolingやStride>1の畳み込み)を後ろの層で行

うことで精度向上](https://image.slidesharecdn.com/20181228lidarsemanticsegsurvey-190108044055/85/2018-12-28-LiDAR-semantic-segmentation-60-320.jpg)

![[DL輪読会]Dense Captioning分野のまとめ](https://cdn.slidesharecdn.com/ss_thumbnails/dlseminar-201202012355-thumbnail.jpg?width=560&fit=bounds)

![SSII2022 [SS1] ニューラル3D表現の最新動向〜 ニューラルネットでなんでも表せる?? 〜](https://cdn.slidesharecdn.com/ss_thumbnails/ss1ssii2022hkatoneural3drepresentationhiroharukato-220607054619-fadc6480-thumbnail.jpg?width=560&fit=bounds)

![[DL輪読会]Learning Transferable Visual Models From Natural Language Supervision](https://cdn.slidesharecdn.com/ss_thumbnails/dlkobayashi0115-210115012308-thumbnail.jpg?width=560&fit=bounds)

![[DL輪読会]GQNと関連研究,世界モデルとの関係について](https://cdn.slidesharecdn.com/ss_thumbnails/20180817-180827085537-thumbnail.jpg?width=560&fit=bounds)

![[DL輪読会]PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metr...](https://cdn.slidesharecdn.com/ss_thumbnails/181214dlpointnet-181214053349-thumbnail.jpg?width=560&fit=bounds)