A Gentle Introduction to Locality Sensitive Hashing with Apache Spark

23 likes16,926 views

An Implementation war story of locality sensitive hashing with Apache Spark, with performance lessons.

1 of 33

Downloaded 600 times

![LOOKUP

def findCandidates(record: Iterable[String], hashers: Array[Int => Int],

mBands: BandType) = {

val hash = getHash(record, hashers)

val subArrays = partitionArray(hash).zipWithIndex

subArrays.flatMap { case (band, bandIndex) =>

val hashedBucket = mBands.lookup(bandIndex).

headOption.

flatMap{_.get(band)}

hashedBucket

}.flatten.toSet

}

19](https://image.slidesharecdn.com/hashing-150821160417-lva1-app6892/85/A-Gentle-Introduction-to-Locality-Sensitive-Hashing-with-Apache-Spark-19-320.jpg)

Ad

Recommended

Finding similar items in high dimensional spaces locality sensitive hashing

Finding similar items in high dimensional spaces locality sensitive hashingDmitriy Selivanov This document discusses using locality sensitive hashing (LSH) to efficiently find similar items in high-dimensional spaces. It describes how LSH works by first representing items as sets of shingles/n-grams, then using minhashing to map these sets to compact signatures while preserving similarity. It explains that LSH further hashes the signatures into "bands" to generate candidate pairs that are likely similar and need direct comparison. The number of bands can be tuned to tradeoff between finding most similar pairs vs few dissimilar pairs.

Locality sensitive hashing

Locality sensitive hashingSameera Horawalavithana Locality Sensitive Hashing (LSH) is a technique for solving near neighbor queries in high dimensional spaces. It works by using random projections to map similar data points to the same "buckets" with high probability, allowing efficient retrieval of nearest neighbors. The key properties required of the hash functions used are that they are locality sensitive, meaning nearby points are hashed to the same value more often than distant points. LSH allows solving near neighbor queries approximately in sub-linear time versus expensive exact algorithms like kd-trees that require at least linear time.

LSH

LSHHsiao-Fei Liu Locality sensitive hashing (LSH) is a technique to improve the efficiency of near neighbor searches in high-dimensional spaces. LSH works by hash functions that map similar items to the same buckets with high probability. The document discusses applications of near neighbor searching, defines the near neighbor reporting problem, and introduces LSH. It also covers techniques like gap amplification to improve LSH performance and parameter optimization to minimize query time.

3 - Finding similar items

3 - Finding similar itemsViet-Trung TRAN Locality Sensitive Hashing (LSH) is a technique for finding similar items in large datasets. It works in 3 steps:

1. Shingling converts documents to sets of n-grams (sequences of tokens). This represents documents as high-dimensional vectors.

2. MinHashing maps these high-dimensional sets to short signatures or sketches, in a way that preserves similarity according to the Jaccard coefficient. It uses random permutations to select the minimum value in each permutation.

3. LSH partitions the signature matrix into bands and hashes each band separately, so that similar signatures are likely to hash to the same buckets. Candidate pairs are those that share buckets in one or more bands, reducing

Project - Deep Locality Sensitive Hashing

Project - Deep Locality Sensitive HashingGabriele Angeletti In this project I use a stack of denoising autoencoders to learn low-dimensional

representations of images. These encodings are used as input to a locality sensitive

hashing algorithm to find images similar to a given query image. The results clearly

shows that this approach outperforms basic LSH by far.

CPM2013-tabei201306

CPM2013-tabei201306Yasuo Tabei This document summarizes research presented at the 24th Annual Symposium on Combinatorial Pattern Matching. It discusses three open problems in optimally encoding Straight Line Programs (SLPs), which are compressed representations of strings. The document presents information theoretic lower bounds on SLP size and describes novel techniques for building optimal encodings of SLPs in close to minimal space. It also proposes a space-efficient data structure for the reverse dictionary of an SLP.

Dstar Lite

Dstar LiteAdrian Sotelo This document discusses dynamic pathfinding algorithms. It begins with an overview of A* pathfinding and how it works. It then explains how dynamic pathfinding algorithms differ by modifying search data when the graph connections change, rather than recomputing the entire path from scratch. The document focuses on the dynamic pathfinding algorithms D* Lite and LPA*, explaining how they use node inconsistency checks and priority queue reordering to efficiently handle changes in the graph structure during a search.

Ch03 Mining Massive Data Sets stanford

Ch03 Mining Massive Data Sets stanfordSakthivel C R This document describes a technique called MinHashing that can be used to efficiently find near-duplicate documents among a large collection. MinHashing works in three steps: 1) it converts documents to sets of shingles, 2) it computes signatures for the sets using MinHashing to preserve similarity, 3) it uses Locality-Sensitive Hashing to focus on signature pairs likely to be from similar documents, finding candidates efficiently. This avoids comparing all possible document pairs.

Rehashing

Rehashingrajshreemuthiah The document discusses how hash maps work and the process of rehashing. It explains that inserting a key-value pair into a hash map involves: 1) Hashing the key to get an index, 2) Searching the linked list at that index for an existing key, updating its value if found or adding a new node. Rehashing is done when the load factor increases above a threshold, as that increases lookup time. Rehashing doubles the size of the array and rehashes all existing entries to maintain a low load factor and constant time lookups.

Concept of hashing

Concept of hashingRafi Dar This document discusses hashing techniques for storing data in a hash table. It describes hash collisions that can occur when multiple keys map to the same hash value. Two primary techniques for dealing with collisions are chaining and open addressing. Open addressing resolves collisions by probing to subsequent table indices, but this can cause clustering issues. The document proposes various rehashing functions that incorporate secondary hash values or quadratic probing to reduce clustering in open addressing schemes.

Hashing

Hashingamoldkul Hashing is a common technique for implementing dictionaries that provides constant-time operations by mapping keys to table positions using a hash function, though collisions require resolution strategies like separate chaining or open addressing. Popular hash functions include division and cyclic shift hashing to better distribute keys across buckets. Both open hashing using linked lists and closed hashing using linear probing can provide average constant-time performance for dictionary operations depending on load factor.

Hashing PPT

Hashing PPTSaurabh Kumar This document discusses hashing and different techniques for implementing dictionaries using hashing. It begins by explaining that dictionaries store elements using keys to allow for quick lookups. It then discusses different data structures that can be used, focusing on hash tables. The document explains that hashing allows for constant-time lookups on average by using a hash function to map keys to table positions. It discusses collision resolution techniques like chaining, linear probing, and double hashing to handle collisions when the hash function maps multiple keys to the same position.

Graph Regularised Hashing

Graph Regularised HashingSean Moran Hashing has witnessed an increase in popularity over the

past few years due to the promise of compact encoding and fast query

time. In order to be effective hashing methods must maximally preserve

the similarity between the data points in the underlying binary representation.

The current best performing hashing techniques have utilised

supervision. In this paper we propose a two-step iterative scheme, Graph

Regularised Hashing (GRH), for incrementally adjusting the positioning

of the hashing hypersurfaces to better conform to the supervisory signal:

in the first step the binary bits are regularised using a data similarity

graph so that similar data points receive similar bits. In the second

step the regularised hashcodes form targets for a set of binary classifiers

which shift the position of each hypersurface so as to separate opposite

bits with maximum margin. GRH exhibits superior retrieval accuracy to

competing hashing methods.

From Trill to Quill: Pushing the Envelope of Functionality and Scale

From Trill to Quill: Pushing the Envelope of Functionality and ScaleBadrish Chandramouli In this talk, I overview Trill, describe two projects that expand Trill's functionality, and describe Quill, a new multi-node offline analytics system I have been working on at MSR.

DASH: A C++ PGAS Library for Distributed Data Structures and Parallel Algorit...

DASH: A C++ PGAS Library for Distributed Data Structures and Parallel Algorit...Menlo Systems GmbH DASH is a C++ PGAS library that provides distributed data structures and parallel algorithms. It offers a global address space without a custom compiler. DASH partitions data across multiple units through various distribution patterns. It supports distributed multidimensional arrays and efficient local and global access to data. DASH includes many parallel algorithms ported from STL and supports asynchronous communication. It aims to simplify programming distributed applications through its data-oriented approach.

Introduction to Ultra-succinct representation of ordered trees with applications

Introduction to Ultra-succinct representation of ordered trees with applicationsYu Liu The document summarizes a paper on ultra-succinct representations of ordered trees. It introduces tree degree entropy, a new measure of information in trees. It presents a succinct data structure that uses nH*(T) + O(n log log n / log n) bits to represent an ordered tree T with n nodes, where H*(T) is the tree degree entropy. This representation supports computing consecutive bits of the tree's DFUDS representation in constant time. It also supports computing operations like lowest common ancestor, depth, and level-ancestor in constant time using an auxiliary structure of O(n(log log n)2 / log n) bits.

K10692 control theory

K10692 control theorysaagar264 This document discusses sampled-data systems and provides examples of:

1. Models of analog-to-digital and digital-to-analog converters using sampler and zero-order hold blocks.

2. Deriving the discrete-time model from the continuous-time model using a zero-order hold.

3. An example of obtaining the state-space equations and transfer function of a discrete-time system from a continuous-time system.

IOEfficientParalleMatrixMultiplication_present

IOEfficientParalleMatrixMultiplication_presentShubham Joshi This document presents an overview of making matrix multiplication algorithms more I/O efficient. It discusses the parallel disk model and how to incorporate locality into algorithms to minimize I/O steps. Cannon's algorithm for matrix multiplication in a 2D mesh network is described. Loop interchange is discussed as a way to improve cache efficiency when multiplying matrices by exploring different loop orderings. Results are shown for parallel I/O efficient matrix multiplication on different sized matrices, with times ranging from 0.38 to 7 seconds. References on cache-oblivious algorithms and distributed memory matrix multiplication are provided.

Hashing

Hashinggrahamwell Hashing involves using an algorithm to generate a numeric key from input data. It maps keys to integers in order to store and retrieve data more efficiently. A simple hashing algorithm uses the modulus operator (%) to distribute keys uniformly. Hashing is commonly used for file management, comparing complex values, and cryptography. Collisions occur when different keys hash to the same value, requiring strategies like open hashing, closed hashing, or deleting data. Closed hashing supplements the hash table with linked lists to store colliding entries outside the standard table.

Hashing

HashingDinesh Vujuru This document provides an overview of hashing techniques. It defines hashing as transforming a string into a shorter fixed-length value to represent the original string. Collisions occur when two different keys map to the same address. The document then describes a simple hashing algorithm involving three steps: representing the key numerically, folding and adding the numerical values, and dividing by the address space size. It also discusses predicting the distribution of records among addresses and estimating collisions for a full hash table.

Data Structure and Algorithms Hashing

Data Structure and Algorithms HashingManishPrajapati78 The document discusses hashing techniques for storing and retrieving data from memory. It covers hash functions, hash tables, open addressing techniques like linear probing and quadratic probing, and closed hashing using separate chaining. Hashing maps keys to memory addresses using a hash function to store and find data independently of the number of items. Collisions may occur and different collision resolution methods are used like open addressing that resolves collisions by probing in the table or closed hashing that uses separate chaining with linked lists. The efficiency of hashing depends on factors like load factor and average number of probes.

Spatial search with geohashes

Spatial search with geohashesLucidworks (Archived) The document discusses using geohashes to enable spatial search within Apache Solr. It describes how geohashes encode latitude and longitude data hierarchically and can be used to filter search results by geographic bounding boxes through indexing documents by their geohash prefixes. The author outlines his strategy for implementing geohash-based spatial search in Solr by calculating overlapping geohash prefixes, developing a filtering query, and indexing multi-precision geohashes for more efficient searching.

Tech talk Probabilistic Data Structure

Tech talk Probabilistic Data StructureRishabh Dugar This document provides an overview of probabilistic data structures including Bloom filters, Cuckoo filters, Count-Min sketch, majority algorithm, linear counting, LogLog, HyperLogLog, locality sensitive hashing, minhash, and simhash. It discusses how Bloom filters work using hash functions to insert elements into a bit array and can tell if an element is likely present or definitely not present. It also explains how Count-Min sketch tracks frequencies in a stream using hash functions and finding the minimum value in each hash table cell. Finally, it summarizes how HyperLogLog estimates cardinality by tracking the maximum number of leading zeros when hashing random numbers.

Hashing Technique In Data Structures

Hashing Technique In Data StructuresSHAKOOR AB This document discusses different searching methods like sequential, binary, and hashing. It defines searching as finding an element within a list. Sequential search searches lists sequentially until the element is found or the end is reached, with efficiency of O(n) in worst case. Binary search works on sorted arrays by eliminating half of remaining elements at each step, with efficiency of O(log n). Hashing maps keys to table positions using a hash function, allowing searches, inserts and deletes in O(1) time on average. Good hash functions uniformly distribute keys and generate different hashes for similar keys.

Hashing Algorithm

Hashing AlgorithmHayi Nukman This document discusses dictionaries and hashing techniques for implementing dictionaries. It describes dictionaries as data structures that map keys to values. The document then discusses using a direct access table to store key-value pairs, but notes this has problems with negative keys or large memory usage. It introduces hashing to map keys to table indices using a hash function, which can cause collisions. To handle collisions, the document proposes chaining where each index is a linked list of key-value pairs. Finally, it covers common hash functions and analyzing load factor and collision probability.

Mpmc unit-string manipulation

Mpmc unit-string manipulationxyxz The document contains programs demonstrating string and numeric conversion routines in 8086 assembly language. It includes examples of (1) block transfer of strings from one memory location to another, (2) insertion of a string into another string at a specified location, (3) deletion of a substring from a string, and (4) conversions between BCD, hexadecimal, and ASCII numeric representations. The programs utilize common string and arithmetic operations like MOVSB, LODSB, STOSB, DIV, MUL, AND, SHR to manipulate strings and perform conversions.

Hashing

Hashingdebolina13 Hashing is a technique used to store and retrieve data efficiently. It involves using a hash function to map keys to integers that are used as indexes in an array. This improves searching time from O(n) to O(1) on average. However, collisions can occur when different keys map to the same index. Collision resolution techniques like chaining and open addressing are used to handle collisions. Chaining resolves collisions by linking keys together in buckets, while open addressing resolves them by probing to find the next empty index. Both approaches allow basic dictionary operations like insertion and search to be performed in O(1) average time when load factors are low.

geogebra

geogebra TRIPURARI RAI This document contains descriptions of 6 graphs with their corresponding x and y coordinates. Each graph defines a function by mapping x-values from their domain to y-values in their range. The graphs demonstrate different types of functions, including linear, constant, and piecewise functions. Geogebra is also mentioned as a tool that can be used to find the area under curves.

Sketching and locality sensitive hashing for alignment

Sketching and locality sensitive hashing for alignmentssuser2be88c Sketching and locality sensitive hashing techniques are used to speed up large-scale sequence alignment problems by avoiding all-pairs comparisons. Locality sensitive hashing maps similar sequences to the same "buckets" with high probability, allowing near neighbors to be identified quickly. Ordered minhash is a locality sensitive hashing method for edit distance that accounts for k-mer repetition, providing a better approximation of sequence similarity than regular minhash. These techniques enable faster genome assembly and database searches by prioritizing sequence pairs that are more likely to align.

Structures de données exotiques

Structures de données exotiquesSamir Bessalah Devoxx France 2013 talk on Advanced data structures

SkipLists

Tries

Hash Array Mapped Tries

Bloom Filters

Count min sketch

Ad

More Related Content

What's hot (20)

Rehashing

Rehashingrajshreemuthiah The document discusses how hash maps work and the process of rehashing. It explains that inserting a key-value pair into a hash map involves: 1) Hashing the key to get an index, 2) Searching the linked list at that index for an existing key, updating its value if found or adding a new node. Rehashing is done when the load factor increases above a threshold, as that increases lookup time. Rehashing doubles the size of the array and rehashes all existing entries to maintain a low load factor and constant time lookups.

Concept of hashing

Concept of hashingRafi Dar This document discusses hashing techniques for storing data in a hash table. It describes hash collisions that can occur when multiple keys map to the same hash value. Two primary techniques for dealing with collisions are chaining and open addressing. Open addressing resolves collisions by probing to subsequent table indices, but this can cause clustering issues. The document proposes various rehashing functions that incorporate secondary hash values or quadratic probing to reduce clustering in open addressing schemes.

Hashing

Hashingamoldkul Hashing is a common technique for implementing dictionaries that provides constant-time operations by mapping keys to table positions using a hash function, though collisions require resolution strategies like separate chaining or open addressing. Popular hash functions include division and cyclic shift hashing to better distribute keys across buckets. Both open hashing using linked lists and closed hashing using linear probing can provide average constant-time performance for dictionary operations depending on load factor.

Hashing PPT

Hashing PPTSaurabh Kumar This document discusses hashing and different techniques for implementing dictionaries using hashing. It begins by explaining that dictionaries store elements using keys to allow for quick lookups. It then discusses different data structures that can be used, focusing on hash tables. The document explains that hashing allows for constant-time lookups on average by using a hash function to map keys to table positions. It discusses collision resolution techniques like chaining, linear probing, and double hashing to handle collisions when the hash function maps multiple keys to the same position.

Graph Regularised Hashing

Graph Regularised HashingSean Moran Hashing has witnessed an increase in popularity over the

past few years due to the promise of compact encoding and fast query

time. In order to be effective hashing methods must maximally preserve

the similarity between the data points in the underlying binary representation.

The current best performing hashing techniques have utilised

supervision. In this paper we propose a two-step iterative scheme, Graph

Regularised Hashing (GRH), for incrementally adjusting the positioning

of the hashing hypersurfaces to better conform to the supervisory signal:

in the first step the binary bits are regularised using a data similarity

graph so that similar data points receive similar bits. In the second

step the regularised hashcodes form targets for a set of binary classifiers

which shift the position of each hypersurface so as to separate opposite

bits with maximum margin. GRH exhibits superior retrieval accuracy to

competing hashing methods.

From Trill to Quill: Pushing the Envelope of Functionality and Scale

From Trill to Quill: Pushing the Envelope of Functionality and ScaleBadrish Chandramouli In this talk, I overview Trill, describe two projects that expand Trill's functionality, and describe Quill, a new multi-node offline analytics system I have been working on at MSR.

DASH: A C++ PGAS Library for Distributed Data Structures and Parallel Algorit...

DASH: A C++ PGAS Library for Distributed Data Structures and Parallel Algorit...Menlo Systems GmbH DASH is a C++ PGAS library that provides distributed data structures and parallel algorithms. It offers a global address space without a custom compiler. DASH partitions data across multiple units through various distribution patterns. It supports distributed multidimensional arrays and efficient local and global access to data. DASH includes many parallel algorithms ported from STL and supports asynchronous communication. It aims to simplify programming distributed applications through its data-oriented approach.

Introduction to Ultra-succinct representation of ordered trees with applications

Introduction to Ultra-succinct representation of ordered trees with applicationsYu Liu The document summarizes a paper on ultra-succinct representations of ordered trees. It introduces tree degree entropy, a new measure of information in trees. It presents a succinct data structure that uses nH*(T) + O(n log log n / log n) bits to represent an ordered tree T with n nodes, where H*(T) is the tree degree entropy. This representation supports computing consecutive bits of the tree's DFUDS representation in constant time. It also supports computing operations like lowest common ancestor, depth, and level-ancestor in constant time using an auxiliary structure of O(n(log log n)2 / log n) bits.

K10692 control theory

K10692 control theorysaagar264 This document discusses sampled-data systems and provides examples of:

1. Models of analog-to-digital and digital-to-analog converters using sampler and zero-order hold blocks.

2. Deriving the discrete-time model from the continuous-time model using a zero-order hold.

3. An example of obtaining the state-space equations and transfer function of a discrete-time system from a continuous-time system.

IOEfficientParalleMatrixMultiplication_present

IOEfficientParalleMatrixMultiplication_presentShubham Joshi This document presents an overview of making matrix multiplication algorithms more I/O efficient. It discusses the parallel disk model and how to incorporate locality into algorithms to minimize I/O steps. Cannon's algorithm for matrix multiplication in a 2D mesh network is described. Loop interchange is discussed as a way to improve cache efficiency when multiplying matrices by exploring different loop orderings. Results are shown for parallel I/O efficient matrix multiplication on different sized matrices, with times ranging from 0.38 to 7 seconds. References on cache-oblivious algorithms and distributed memory matrix multiplication are provided.

Hashing

Hashinggrahamwell Hashing involves using an algorithm to generate a numeric key from input data. It maps keys to integers in order to store and retrieve data more efficiently. A simple hashing algorithm uses the modulus operator (%) to distribute keys uniformly. Hashing is commonly used for file management, comparing complex values, and cryptography. Collisions occur when different keys hash to the same value, requiring strategies like open hashing, closed hashing, or deleting data. Closed hashing supplements the hash table with linked lists to store colliding entries outside the standard table.

Hashing

HashingDinesh Vujuru This document provides an overview of hashing techniques. It defines hashing as transforming a string into a shorter fixed-length value to represent the original string. Collisions occur when two different keys map to the same address. The document then describes a simple hashing algorithm involving three steps: representing the key numerically, folding and adding the numerical values, and dividing by the address space size. It also discusses predicting the distribution of records among addresses and estimating collisions for a full hash table.

Data Structure and Algorithms Hashing

Data Structure and Algorithms HashingManishPrajapati78 The document discusses hashing techniques for storing and retrieving data from memory. It covers hash functions, hash tables, open addressing techniques like linear probing and quadratic probing, and closed hashing using separate chaining. Hashing maps keys to memory addresses using a hash function to store and find data independently of the number of items. Collisions may occur and different collision resolution methods are used like open addressing that resolves collisions by probing in the table or closed hashing that uses separate chaining with linked lists. The efficiency of hashing depends on factors like load factor and average number of probes.

Spatial search with geohashes

Spatial search with geohashesLucidworks (Archived) The document discusses using geohashes to enable spatial search within Apache Solr. It describes how geohashes encode latitude and longitude data hierarchically and can be used to filter search results by geographic bounding boxes through indexing documents by their geohash prefixes. The author outlines his strategy for implementing geohash-based spatial search in Solr by calculating overlapping geohash prefixes, developing a filtering query, and indexing multi-precision geohashes for more efficient searching.

Tech talk Probabilistic Data Structure

Tech talk Probabilistic Data StructureRishabh Dugar This document provides an overview of probabilistic data structures including Bloom filters, Cuckoo filters, Count-Min sketch, majority algorithm, linear counting, LogLog, HyperLogLog, locality sensitive hashing, minhash, and simhash. It discusses how Bloom filters work using hash functions to insert elements into a bit array and can tell if an element is likely present or definitely not present. It also explains how Count-Min sketch tracks frequencies in a stream using hash functions and finding the minimum value in each hash table cell. Finally, it summarizes how HyperLogLog estimates cardinality by tracking the maximum number of leading zeros when hashing random numbers.

Hashing Technique In Data Structures

Hashing Technique In Data StructuresSHAKOOR AB This document discusses different searching methods like sequential, binary, and hashing. It defines searching as finding an element within a list. Sequential search searches lists sequentially until the element is found or the end is reached, with efficiency of O(n) in worst case. Binary search works on sorted arrays by eliminating half of remaining elements at each step, with efficiency of O(log n). Hashing maps keys to table positions using a hash function, allowing searches, inserts and deletes in O(1) time on average. Good hash functions uniformly distribute keys and generate different hashes for similar keys.

Hashing Algorithm

Hashing AlgorithmHayi Nukman This document discusses dictionaries and hashing techniques for implementing dictionaries. It describes dictionaries as data structures that map keys to values. The document then discusses using a direct access table to store key-value pairs, but notes this has problems with negative keys or large memory usage. It introduces hashing to map keys to table indices using a hash function, which can cause collisions. To handle collisions, the document proposes chaining where each index is a linked list of key-value pairs. Finally, it covers common hash functions and analyzing load factor and collision probability.

Mpmc unit-string manipulation

Mpmc unit-string manipulationxyxz The document contains programs demonstrating string and numeric conversion routines in 8086 assembly language. It includes examples of (1) block transfer of strings from one memory location to another, (2) insertion of a string into another string at a specified location, (3) deletion of a substring from a string, and (4) conversions between BCD, hexadecimal, and ASCII numeric representations. The programs utilize common string and arithmetic operations like MOVSB, LODSB, STOSB, DIV, MUL, AND, SHR to manipulate strings and perform conversions.

Hashing

Hashingdebolina13 Hashing is a technique used to store and retrieve data efficiently. It involves using a hash function to map keys to integers that are used as indexes in an array. This improves searching time from O(n) to O(1) on average. However, collisions can occur when different keys map to the same index. Collision resolution techniques like chaining and open addressing are used to handle collisions. Chaining resolves collisions by linking keys together in buckets, while open addressing resolves them by probing to find the next empty index. Both approaches allow basic dictionary operations like insertion and search to be performed in O(1) average time when load factors are low.

geogebra

geogebra TRIPURARI RAI This document contains descriptions of 6 graphs with their corresponding x and y coordinates. Each graph defines a function by mapping x-values from their domain to y-values in their range. The graphs demonstrate different types of functions, including linear, constant, and piecewise functions. Geogebra is also mentioned as a tool that can be used to find the area under curves.

Similar to A Gentle Introduction to Locality Sensitive Hashing with Apache Spark (20)

Sketching and locality sensitive hashing for alignment

Sketching and locality sensitive hashing for alignmentssuser2be88c Sketching and locality sensitive hashing techniques are used to speed up large-scale sequence alignment problems by avoiding all-pairs comparisons. Locality sensitive hashing maps similar sequences to the same "buckets" with high probability, allowing near neighbors to be identified quickly. Ordered minhash is a locality sensitive hashing method for edit distance that accounts for k-mer repetition, providing a better approximation of sequence similarity than regular minhash. These techniques enable faster genome assembly and database searches by prioritizing sequence pairs that are more likely to align.

Structures de données exotiques

Structures de données exotiquesSamir Bessalah Devoxx France 2013 talk on Advanced data structures

SkipLists

Tries

Hash Array Mapped Tries

Bloom Filters

Count min sketch

Expressing and Exploiting Multi-Dimensional Locality in DASH

Expressing and Exploiting Multi-Dimensional Locality in DASHMenlo Systems GmbH DASH is a realization of the PGAS (partitioned global address space) programming model in the form of a C++ template library. It provides a multidimensional array abstraction which is typically used as an underlying container for stencil- and dense matrix operations.

Efficiency of operations on a distributed multi-dimensional array highly depends on the distribution of its elements to processes and the communication strategy used to propagate values between them. Locality can only be improved by employing an optimal distribution that is specific to the implementation of the algorithm, run-time parameters such as node topology, and numerous additional aspects. Application developers do not know these implications which also might change in future releases of DASH.

In the following, we identify fundamental properties of distribution patterns that are prevalent in existing HPC applications.

We describe a classification scheme of multi-dimensional distributions based on these properties and demonstrate how distribution patterns can be optimized for locality and communication avoidance automatically and, to a great extent, at compile time.

Cluster Drm

Cluster DrmHong ChangBum This document provides information about various Linux clusters and distributed resource managers available at the Center for Genome Science and NIH. It lists several Linux cluster machines including KHAN with 94 nodes, KGENE with 28 nodes, and LOGIN and LOGINDB servers. Details are provided about the KHAN cluster including its node configuration, storage space, and available software. It also briefly mentions distributed resource managers.

Cluster Drm

Cluster DrmHong ChangBum This document provides information about various Linux clusters and distributed resource managers available at the Center for Genome Science and NIH. It lists several Linux cluster machines including KHAN, KGENE, LOGIN, LOGINDB, and DEV. Details are provided about the KHAN cluster, which has a total of 94 nodes, including specifications of the nodes and storage and software information. It also briefly mentions distributed resource managers.

Massively Scalable Real-time Geospatial Data Processing with Apache Kafka and...

Massively Scalable Real-time Geospatial Data Processing with Apache Kafka and...Paul Brebner Geospatial data makes it possible to leverage location, location, location! Geospatial data is taking off, as companies realize that just about everyone needs the benefits of geospatially aware applications. As a result there are no shortages of unique but demanding use cases of how enterprises are leveraging large-scale and fast geospatial big data processing. The data must be processed in large quantities - and quickly - to reveal hidden spatiotemporal insights vital to businesses and their end users. In the rush to tap into geospatial data, many enterprises will find that representing, indexing and querying geospatially-enriched data is more complex than they anticipated - and might bring about tradeoffs between accuracy, latency, and throughput.

This presentation will explore how we added location data to a scalable real-time anomaly detection application, built around Apache Kafka, and Cassandra. Kafka and Cassandra are designed for time-series data, however, it’s not so obvious how they can process geospatial data. In order to find location-specific anomalies, we need a way to represent locations, index locations, and query locations. We explore alternative geospatial representations including: Latitude/Longitude points, Bounding Boxes, Geohashes, and go vertical with 3D representations, including 3D Geohashes. To conclude we measure and compare the query throughput of some of the solutions, and summarise the results in terms of accuracy vs. performance to answer the question “Which geospatial data representation and Cassandra implementation is best?”

Massively Scalable Real-time Geospatial Data Processing with Apache Kafka and...

Massively Scalable Real-time Geospatial Data Processing with Apache Kafka and...Paul Brebner This presentation will explore how we added location data to a scalable real-time anomaly detection application, built around Apache Kafka, and Cassandra.

Kafka and Cassandra are designed for time-series data, however, it’s not so obvious how they can process geospatial data. In order to find location-specific anomalies, we need a way to represent locations, index locations, and query locations.

We explore alternative geospatial representations including: Latitude/Longitude points, Bounding Boxes, Geohashes, and go vertical with 3D representations, including 3D Geohashes.

For each representation we also explore possible Cassandra implementations including: Clustering columns, Secondary indexes, Denormalized tables, and the Cassandra Lucene Index Plugin.

To conclude we measure and compare the query throughput of some of the solutions, and summarise the results in terms of accuracy vs. performance to answer the question “Which geospatial data representation and Cassandra implementation is best?”

Updated version of presentation for 30 April 2020 Melbourne Distributed Meetup (online)

Enterprise Scale Topological Data Analysis Using Spark

Enterprise Scale Topological Data Analysis Using SparkAlpine Data This document discusses scaling topological data analysis (TDA) using the Mapper algorithm to analyze large datasets. It describes how the authors built the first open-source scalable implementation of Mapper called Betti Mapper using Spark. Betti Mapper uses locality-sensitive hashing to bin data points and compute topological summaries on prototype points to achieve an 8-11x performance improvement over a naive Spark implementation. The key aspects of Betti Mapper that enable scaling to enterprise datasets are locality-sensitive hashing for sampling and using prototype points to reduce the distance matrix computation.

Enterprise Scale Topological Data Analysis Using Spark

Enterprise Scale Topological Data Analysis Using SparkSpark Summit This document discusses scaling topological data analysis (TDA) using the Mapper algorithm to analyze large datasets. It introduces Mapper and its computational bottlenecks. It then describes Betti Mapper, the authors' open-source scalable implementation of Mapper on Spark that achieves an 8-11x performance improvement over a naive version. Betti Mapper uses locality-sensitive hashing to bin data points and compute topological networks on prototype points to analyze large datasets enterprise-scale.

Hadoop Overview kdd2011

Hadoop Overview kdd2011Milind Bhandarkar In KDD2011, Vijay Narayanan (Yahoo!) and Milind Bhandarkar (Greenplum Labs, EMC) conducted a tutorial on "Modeling with Hadoop". This is the first half of the tutorial.

Faster persistent data structures through hashing

Faster persistent data structures through hashingJohan Tibell This document summarizes work to optimize persistent hash map data structures for faster performance. It begins by describing a use case needing fast lookups of string keys. Various implementations are evaluated, including binary search trees, hash tables, and Milan Straka's IntMap approach using hashing. The document then introduces the hash-array mapped trie (HAMT) data structure, describes an optimized Haskell implementation, and benchmarks showing it outperforms the IntMap approach with up to 76% faster lookups and 39-44% faster mutations. Memory usage is also improved over the IntMap. Overall, the HAMT provides the best combination of performance and memory efficiency for this persistent hash map use case.

Svm map reduce_slides

Svm map reduce_slidesSara Asher Support Vector Machines in MapReduce presented an overview of support vector machines (SVMs) and how to implement them in a MapReduce framework to handle large datasets. The document discussed the theory behind basic linear SVMs and generalized multi-classification SVMs. It explained how to parallelize SVM training using stochastic gradient descent and randomly distributing samples across mappers and reducers. The document also addressed handling non-linear SVMs using kernel methods and approximations that allow SVMs to be treated as a linear problem in MapReduce. Finally, examples were given of large companies using SVMs trained on MapReduce to perform customer segmentation and improve inventory value.

Cassandra talk @JUG Lausanne, 2012.06.14

Cassandra talk @JUG Lausanne, 2012.06.14Benoit Perroud Apache Cassandra is a highly scalable, distributed, decentralized, open-source NoSQL database. It provides availability, partition tolerance, and scalability without sacrificing consistency. Cassandra's data model is a keyed column store and it uses consistent hashing to distribute data across nodes. It also uses an eventual consistency model to provide high availability even in the presence of network issues.

Distributed approximate spectral clustering for large scale datasets

Distributed approximate spectral clustering for large scale datasetsBita Kazemi The document proposes a distributed approximate spectral clustering (DASC) algorithm to process large datasets in a scalable way. DASC uses locality sensitive hashing to group similar data points and then approximates the kernel matrix on each group to reduce computation. It implements DASC using MapReduce and evaluates it on real and synthetic datasets, showing it can achieve similar clustering accuracy to standard spectral clustering but with an order of magnitude better runtime by distributing the computation across clusters.

Hashing and Hash Tables

Hashing and Hash Tablesadil raja The document provides an introduction to hashing techniques and their applications. It discusses hashing as a technique to distribute dataset entries across an array of buckets using a hash function. It then describes various hashing techniques like separate chaining and open addressing to resolve collisions. Some applications discussed include how Dropbox uses hashing to check for copyrighted content sharing and how subtree caching is used in symbolic regression.

HACC: Fitting the Universe Inside a Supercomputer

HACC: Fitting the Universe Inside a Supercomputerinside-BigData.com In this deck from the DOE CSGF Program Review meeting, Nicholas Frontiere from the University of Chicago presents: HACC - Fitting the Universe Inside a Supercomputer.

"In response to the plethora of data from current and future large-scale structure surveys of the universe, sophisticated simulations are required to obtain commensurate theoretical predictions. We have developed the Hardware/Hybrid Accelerated Cosmology Code (HACC), capable of sustained performance on powerful and architecturally diverse supercomputers to address this numerical challenge. We will investigate the numerical methods utilized to solve a problem that evolves trillions of particles, with a dynamic range of a million to one."

Watch the video: https://wp.me/p3RLHQ-i4l

Learn more: https://www.krellinst.org/csgf/conf/2017/

Sign up for our insideHPC Newsletter: http://insidehpc.com/newsletter

Strava Labs: Exploring a Billion Activity Dataset from Athletes with Apache S...

Strava Labs: Exploring a Billion Activity Dataset from Athletes with Apache S...Databricks At Strava we have extensively leveraged Apache Spark to explore our data of over a billion activities, from tens of millions of athletes. This talk will be a survey of the more unique and exciting applications: A Global Heatmap gives a ~2 meter resolution density map of one billion runs, rides, and other activities consisting of three trillion GPS points from 17 billion miles of exercise data. The heatmap was rewritten from a non-scalable system into a highly scalable Spark job enabling great gains in speed, cost, and quality. Locally sensitive hashing for GPS traces was used to efficiently cluster 1 billion activities. Additional processes categorize and extract data from each cluster, such as names and statistics. Clustering gives an automated process to extract worldwide geographical patterns of athletes.

Applications include route discovery, recommendation systems, and detection of events and races. A coarse spatiotemporal index of all activity data is stored in Apache Cassandra. Spark streaming jobs maintain this index and compute all space-time intersections (“flybys”) of activities in this index. Intersecting activity pairs are then checked for spatiotemporal correlation, indicated by connected components in the graph of highly correlated pairs form “Group Activities”, creating a social graph of shared activities and workout partners. Data from several hundred thousand runners was used to build an improved model of the relationship between running difficulty and elevation gradient (Grade Adjusted Pace).

Cascading Map-Side Joins over HBase for Scalable Join Processing

Cascading Map-Side Joins over HBase for Scalable Join ProcessingAlexander Schätzle One of the major challenges in large-scale data processing with MapReduce is the smart computation of joins. Since Semantic Web datasets published in RDF have increased rapidly over the last few years, scalable join techniques become an important issue for SPARQL query processing as well.

In this paper, we introduce the Map-Side Index Nested Loop Join (MAPSIN join) which combines scalable indexing capabilities of NoSQL data stores like HBase, that suffer from an insufficient distributed processing layer, with MapReduce, which in turn does not provide appropriate storage structures for efficient large-scale join processing.

While retaining the flexibility of commonly used reduce-side joins, we leverage the effectiveness of map-side joins without any changes to the underlying framework. We demonstrate the significant benefits of MAPSIN joins for the processing of SPARQL basic graph patterns on large RDF datasets by an evaluation with the LUBM and SP2Bench benchmarks.

For selective queries, MAPSIN join based query execution outperforms reduce-side join based execution by an order of magnitude.

snarks <3 hash functions

snarks <3 hash functionsRebekah Mercer How to make hash functions go fast inside snarks, aka a guided tour through arithmetisation friendly hash functions (useful for all cryptographic protocols where cost is dominated by multiplications -- e.g. anything using R1CS; secret sharing based multiparty computation protocols; etc)

Basics of Distributed Systems - Distributed Storage

Basics of Distributed Systems - Distributed StorageNilesh Salpe The document discusses distributed systems. It defines a distributed system as a collection of computers that appear as one computer to users. Key characteristics are that the computers operate concurrently but fail independently and do not share a global clock. Examples given are Amazon.com and Cassandra database. The document then discusses various aspects of distributed systems including distributed storage, computation, synchronization, consensus, messaging, load balancing and serialization.

Ad

More from François Garillot (8)

Growing Your Types Without Growing Your Workload

Growing Your Types Without Growing Your WorkloadFrançois Garillot Growing Your Types Without Growing Your Workload: a talk about the expression problem in Rust, given to the Montreal Rust meetup in January 2020

Deep learning on a mixed cluster with deeplearning4j and spark

Deep learning on a mixed cluster with deeplearning4j and sparkFrançois Garillot Deep learning models can be distributed across a cluster to speed up training time and handle large datasets. Deeplearning4j is an open-source deep learning library for Java that runs on Spark, allowing models to be trained in a distributed fashion across a Spark cluster. Training a model involves distributing stochastic gradient descent (SGD) across nodes, with the key challenge being efficient all-reduce communication between nodes. Engineering high performance distributed training, such as with parameter servers, is important to reduce bottlenecks.

Mobility insights at Swisscom - Understanding collective mobility in Switzerland

Mobility insights at Swisscom - Understanding collective mobility in SwitzerlandFrançois Garillot Swisscom is the leading mobile-service provider in Switzerland, with a market share high enough to enable us to model and understand the collective mobility in every area of the country. To accomplish that, we built an urban planning tool that helps cities better manage their infrastructure based on data-based insights, produced with Apache Spark, YARN, Kafka and a good dose of machine learning. In this talk, we will explain how building such a tool involves mining a massive amount of raw data (1.5E9 records/day) to extract fine-grained mobility features from raw network traces. These features are obtained using different machine learning algorithms. For example, we built an algorithm that segments a trajectory into mobile and static periods and trained classifiers that enable us to distinguish between different means of transport. As we sketch the different algorithmic components, we will present our approach to continuously run and test them, which involves complex pipelines managed with Oozie and fuelled with ground truth data. Finally, we will delve into the streaming part of our analytics and see how network events allow Swisscom to understand the characteristics of the flow of people on roads and paths of interest. This requires making a link between network coverage information and geographical positioning in the space of milliseconds and using Spark streaming with libraries that were originally designed for batch processing. We will conclude on the advantages and pitfalls of Spark involved in running this kind of pipeline on a multi-tenant cluster. Audiences should come back from this talk with an overall picture of the use of Apache Spark and related components of its ecosystem in the field of trajectory mining.

Delivering near real time mobility insights at swisscom

Delivering near real time mobility insights at swisscomFrançois Garillot A use case developed at Swisscom to help urban planners understand their cities and to measure speeds on Swiss highways.

Spark Streaming : Dealing with State

Spark Streaming : Dealing with StateFrançois Garillot These are the slides for the http://www.meetup.com/Big-Data-Romandie/events/230345605/

Most of the interesting bits are in the attached notebooks though :

https://gist.github.com/huitseeker/a868af0dd8064cfe9806f4974a955386

https://gist.github.com/huitseeker/3c6d1246178eea56d958a4757a8cadbd

To be used with :

https://github.com/andypetrella/spark-notebook

Ramping up your Devops Fu for Big Data developers

Ramping up your Devops Fu for Big Data developersFrançois Garillot This document discusses ramping up DevOps for Big Data developers using Apache Mesos and Spark. It provides an overview of Mesos and its principles as a cluster manager and resource manager. It then discusses Spark and its advantages over Hadoop, including its speed, flexibility, and fault tolerance. The document also covers automating deployment of Mesos, HDFS and Spark using tools like Ansible, Packer and Tinc. It discusses ongoing work to further integrate Mesos and Spark.

Diving In The Deep End Of The Big Data Pool

Diving In The Deep End Of The Big Data PoolFrançois Garillot A team of 4 data science PhDs worked with an organization's data for 3 weeks to answer a question. They used unsupervised clustering on web browsing history to identify new customer segments. Due to computational limitations, they implemented locality-sensitive hashing to cluster over 22 million nodes and 123 million edges representing customers' web browsing histories. On the last day, they obtained final results identifying singles as a customer segment, which aligned with existing marketing articles about the rise of singles as an economic segment. The key lessons were to lay a bare minimum pipeline first before refining, that distributed systems are difficult so focus on the problem, and that fuel and social support from colleagues are essential when tackling big challenges.

Scala Collections : Java 8 on Steroids

Scala Collections : Java 8 on SteroidsFrançois Garillot A small talk comparing Java 8 & Scala Collections on functionality, design & goals.

psug-44.eventbrite.fr

Ad

Recently uploaded (20)

Cryptocurrency Exchange Script like Binance.pptx

Cryptocurrency Exchange Script like Binance.pptxriyageorge2024 This SlideShare dives into the process of developing a crypto exchange platform like Binance, one of the world’s largest and most successful cryptocurrency exchanges.

FlakyFix: Using Large Language Models for Predicting Flaky Test Fix Categorie...

FlakyFix: Using Large Language Models for Predicting Flaky Test Fix Categorie...Lionel Briand Journal-first presentation at ICST 2025

Proactive Vulnerability Detection in Source Code Using Graph Neural Networks:...

Proactive Vulnerability Detection in Source Code Using Graph Neural Networks:...Ranjan Baisak As software complexity grows, traditional static analysis tools struggle to detect vulnerabilities with both precision and context—often triggering high false positive rates and developer fatigue. This article explores how Graph Neural Networks (GNNs), when applied to source code representations like Abstract Syntax Trees (ASTs), Control Flow Graphs (CFGs), and Data Flow Graphs (DFGs), can revolutionize vulnerability detection. We break down how GNNs model code semantics more effectively than flat token sequences, and how techniques like attention mechanisms, hybrid graph construction, and feedback loops significantly reduce false positives. With insights from real-world datasets and recent research, this guide shows how to build more reliable, proactive, and interpretable vulnerability detection systems using GNNs.

Odoo ERP for Education Management to Streamline Your Education Process

Odoo ERP for Education Management to Streamline Your Education ProcessiVenture Team LLP Odoo ERP for Education Management can streamline your education process such as admission, staff, lecture, payment and much more.

Kubernetes_101_Zero_to_Platform_Engineer.pptx

Kubernetes_101_Zero_to_Platform_Engineer.pptxCloudScouts Presentacion de la primera sesion de Zero to Platform Engineer

Get & Download Wondershare Filmora Crack Latest [2025]![Get & Download Wondershare Filmora Crack Latest [2025]](https://cdn.slidesharecdn.com/ss_thumbnails/revolutionizingresidentialwi-fi-250422112639-60fb726f-250429170801-59e1b240-thumbnail.jpg?width=560&fit=bounds)

![Get & Download Wondershare Filmora Crack Latest [2025]](https://cdn.slidesharecdn.com/ss_thumbnails/revolutionizingresidentialwi-fi-250422112639-60fb726f-250429170801-59e1b240-thumbnail.jpg?width=560&fit=bounds)

![Get & Download Wondershare Filmora Crack Latest [2025]](https://cdn.slidesharecdn.com/ss_thumbnails/revolutionizingresidentialwi-fi-250422112639-60fb726f-250429170801-59e1b240-thumbnail.jpg?width=560&fit=bounds)

![Get & Download Wondershare Filmora Crack Latest [2025]](https://cdn.slidesharecdn.com/ss_thumbnails/revolutionizingresidentialwi-fi-250422112639-60fb726f-250429170801-59e1b240-thumbnail.jpg?width=560&fit=bounds)

Get & Download Wondershare Filmora Crack Latest [2025]saniaaftab72555 Copy & Past Link 👉👉

https://dr-up-community.info/

Wondershare Filmora is a video editing software and app designed for both beginners and experienced users. It's known for its user-friendly interface, drag-and-drop functionality, and a wide range of tools and features for creating and editing videos. Filmora is available on Windows, macOS, iOS (iPhone/iPad), and Android platforms.

Not So Common Memory Leaks in Java Webinar

Not So Common Memory Leaks in Java WebinarTier1 app This SlideShare presentation is from our May webinar, “Not So Common Memory Leaks & How to Fix Them?”, where we explored lesser-known memory leak patterns in Java applications. Unlike typical leaks, subtle issues such as thread local misuse, inner class references, uncached collections, and misbehaving frameworks often go undetected and gradually degrade performance. This deck provides in-depth insights into identifying these hidden leaks using advanced heap analysis and profiling techniques, along with real-world case studies and practical solutions. Ideal for developers and performance engineers aiming to deepen their understanding of Java memory management and improve application stability.

Microsoft Excel Core Points Training.pptx

Microsoft Excel Core Points Training.pptxMekonnen This is a short and precise training PowerPoint about Microsoft excel.

Avast Premium Security Crack FREE Latest Version 2025

Avast Premium Security Crack FREE Latest Version 2025mu394968 🌍📱👉COPY LINK & PASTE ON GOOGLE https://dr-kain-geera.info/👈🌍

Avast Premium Security is a paid subscription service that provides comprehensive online security and privacy protection for multiple devices. It includes features like antivirus, firewall, ransomware protection, and website scanning, all designed to safeguard against a wide range of online threats, according to Avast.

Key features of Avast Premium Security:

Antivirus: Protects against viruses, malware, and other malicious software, according to Avast.

Firewall: Controls network traffic and blocks unauthorized access to your devices, as noted by All About Cookies.

Ransomware protection: Helps prevent ransomware attacks, which can encrypt your files and hold them hostage.

Website scanning: Checks websites for malicious content before you visit them, according to Avast.

Email Guardian: Scans your emails for suspicious attachments and phishing attempts.

Multi-device protection: Covers up to 10 devices, including Windows, Mac, Android, and iOS, as stated by 2GO Software.

Privacy features: Helps protect your personal data and online privacy.

In essence, Avast Premium Security provides a robust suite of tools to keep your devices and online activity safe and secure, according to Avast.

Adobe Marketo Engage Champion Deep Dive - SFDC CRM Synch V2 & Usage Dashboards

Adobe Marketo Engage Champion Deep Dive - SFDC CRM Synch V2 & Usage DashboardsBradBedford3 Join Ajay Sarpal and Miray Vu to learn about key Marketo Engage enhancements. Discover improved in-app Salesforce CRM connector statistics for easy monitoring of sync health and throughput. Explore new Salesforce CRM Synch Dashboards providing up-to-date insights into weekly activity usage, thresholds, and limits with drill-down capabilities. Learn about proactive notifications for both Salesforce CRM sync and product usage overages. Get an update on improved Salesforce CRM synch scale and reliability coming in Q2 2025.

Key Takeaways:

Improved Salesforce CRM User Experience: Learn how self-service visibility enhances satisfaction.

Utilize Salesforce CRM Synch Dashboards: Explore real-time weekly activity data.

Monitor Performance Against Limits: See threshold limits for each product level.

Get Usage Over-Limit Alerts: Receive notifications for exceeding thresholds.

Learn About Improved Salesforce CRM Scale: Understand upcoming cloud-based incremental sync.

Why Orangescrum Is a Game Changer for Construction Companies in 2025

Why Orangescrum Is a Game Changer for Construction Companies in 2025Orangescrum Orangescrum revolutionizes construction project management in 2025 with real-time collaboration, resource planning, task tracking, and workflow automation, boosting efficiency, transparency, and on-time project delivery.

The Significance of Hardware in Information Systems.pdf

The Significance of Hardware in Information Systems.pdfdrewplanas10 The Significance of Hardware in Information Systems: The Types Of Hardware and What They Do

Revolutionizing Residential Wi-Fi PPT.pptx

Revolutionizing Residential Wi-Fi PPT.pptxnidhisingh691197 Discover why Wi-Fi 7 is set to transform wireless networking and how Router Architects is leading the way with next-gen router designs built for speed, reliability, and innovation.

Expand your AI adoption with AgentExchange

Expand your AI adoption with AgentExchangeFexle Services Pvt. Ltd. AgentExchange is Salesforce’s latest innovation, expanding upon the foundation of AppExchange by offering a centralized marketplace for AI-powered digital labor. Designed for Agentblazers, developers, and Salesforce admins, this platform enables the rapid development and deployment of AI agents across industries.

Email: [email protected]

Phone: +1(630) 349 2411

Website: https://www.fexle.com/blogs/agentexchange-an-ultimate-guide-for-salesforce-consultants-businesses/?utm_source=slideshare&utm_medium=pptNg

Creating Automated Tests with AI - Cory House - Applitools.pdf

Creating Automated Tests with AI - Cory House - Applitools.pdfApplitools In this fast-paced, example-driven session, Cory House shows how today’s AI tools make it easier than ever to create comprehensive automated tests. Full recording at https://applitools.info/5wv

See practical workflows using GitHub Copilot, ChatGPT, and Applitools Autonomous to generate and iterate on tests—even without a formal requirements doc.

Automation Techniques in RPA - UiPath Certificate

Automation Techniques in RPA - UiPath CertificateVICTOR MAESTRE RAMIREZ Automation Techniques in RPA - UiPath Certificate

How can one start with crypto wallet development.pptx

How can one start with crypto wallet development.pptxlaravinson24 This presentation is a beginner-friendly guide to developing a crypto wallet from scratch. It covers essential concepts such as wallet types, blockchain integration, key management, and security best practices. Ideal for developers and tech enthusiasts looking to enter the world of Web3 and decentralized finance.

DVDFab Crack FREE Download Latest Version 2025

DVDFab Crack FREE Download Latest Version 2025younisnoman75 ⭕️➡️ FOR DOWNLOAD LINK : http://drfiles.net/ ⬅️⭕️

DVDFab is a multimedia software suite primarily focused on DVD and Blu-ray disc processing. It offers tools for copying, ripping, creating, and editing DVDs and Blu-rays, as well as features for downloading videos from streaming sites. It also provides solutions for playing locally stored video files and converting audio and video formats.

Here's a more detailed look at DVDFab's offerings:

DVD Copy:

DVDFab offers software for copying and cloning DVDs, including removing copy protections and creating backups.

DVD Ripping:

This allows users to rip DVDs to various video and audio formats for playback on different devices, while maintaining the original quality.

Blu-ray Copy:

DVDFab provides tools for copying and cloning Blu-ray discs, including removing Cinavia protection and creating lossless backups.

4K UHD Copy:

DVDFab is known for its 4K Ultra HD Blu-ray copy software, allowing users to copy these discs to regular BD-50/25 discs or save them as 1:1 lossless ISO files.

DVD Creator:

This tool allows users to create DVDs from various video and audio formats, with features like GPU acceleration for faster burning.

Video Editing:

DVDFab includes a video editing tool for tasks like cropping, trimming, adding watermarks, external subtitles, and adjusting brightness.

Video Player:

A free video player that supports a wide range of video and audio formats.

All-In-One:

DVDFab offers a bundled software package, DVDFab All-In-One, that includes various tools for handling DVD and Blu-ray processing.

PRTG Network Monitor Crack Latest Version & Serial Key 2025 [100% Working]![PRTG Network Monitor Crack Latest Version & Serial Key 2025 [100% Working]](https://cdn.slidesharecdn.com/ss_thumbnails/revolutionizingresidentialwi-fi-250422112639-60fb726f-250504182022-6534c7c0-thumbnail.jpg?width=560&fit=bounds)

![PRTG Network Monitor Crack Latest Version & Serial Key 2025 [100% Working]](https://cdn.slidesharecdn.com/ss_thumbnails/revolutionizingresidentialwi-fi-250422112639-60fb726f-250504182022-6534c7c0-thumbnail.jpg?width=560&fit=bounds)

![PRTG Network Monitor Crack Latest Version & Serial Key 2025 [100% Working]](https://cdn.slidesharecdn.com/ss_thumbnails/revolutionizingresidentialwi-fi-250422112639-60fb726f-250504182022-6534c7c0-thumbnail.jpg?width=560&fit=bounds)

![PRTG Network Monitor Crack Latest Version & Serial Key 2025 [100% Working]](https://cdn.slidesharecdn.com/ss_thumbnails/revolutionizingresidentialwi-fi-250422112639-60fb726f-250504182022-6534c7c0-thumbnail.jpg?width=560&fit=bounds)

PRTG Network Monitor Crack Latest Version & Serial Key 2025 [100% Working]saimabibi60507 Copy & Past Link 👉👉

https://dr-up-community.info/

PRTG Network Monitor is a network monitoring software developed by Paessler that provides comprehensive monitoring of IT infrastructure, including servers, devices, applications, and network traffic. It helps identify bottlenecks, track performance, and troubleshoot issues across various network environments, both on-premises and in the cloud.

A Gentle Introduction to Locality Sensitive Hashing with Apache Spark

- 1. A GENTLE INTRODUCTION TO APACHE SPARK AND LOCALITY-SENSITIVE HASHING 1

- 3. LOCALITY-SENSITIVE HASHING ▸ A story : Why LSH ▸ How it works & hash families ▸ LSH distribution ▸ Beware : WIP 3

- 4. SPARK TENETS ▸ broadcast variables ▸ per-partition commands ▸ shuffle sparsely 4

- 5. 5

- 6. 6

- 7. 7

- 8. SEGMENTATION ▸ small sample: 289421 users ▸ larger sample : 5684403 users 46K websites, ultimately users 4 personal laptops, 4 provided laptops 8

- 9. K-MEANS COMPLEXITY Find with the 'elbow method' on within-cluster sum of squares. Then 9

- 10. EM - GAUSSIAN MIXTURE With dimensions, mixtures, 10

- 11. LOCALITY-SENSITIVE HASHING FUNCTIONS A family H of hashing functions is -sensitive if: ▸ if then ▸ if then 11

- 12. DISTANCES ! (THOSE AND MANY OTHER) ▸ Hamming distance : where is a randomly chosen index ▸ Jaccard : ▸ Cosine distance: 12

- 14. EARTH MOVER'S DISTANCE Find optimal F minimizing: Then: 14

- 15. A WORD ON MODULARITY LSH for EMD introduced by Charikar in the Simhash paper (2002). Yet no place to plug your LSH family in implementation (e.g. scikit, mrsqueeze) ! 15

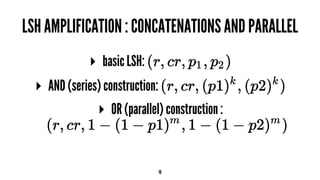

- 16. LSH AMPLIFICATION : CONCATENATIONS AND PARALLEL ▸ basic LSH: ▸ AND (series) construction: ▸ OR (parallel) construction : 16

- 17. 17

- 18. BASIC LSH val hashCollection = records.map(s => (getId(s), s)). mapValues(s => getHash(s, hashers)) val subArray = hashCollection.flatMap { case (recordId, hash) => hash.grouped(hashLength / numberBands).zipWithIndex.map{ case (band, bandIndex) => (bandIndex, (band, sentenceId)) } } 18

- 19. LOOKUP def findCandidates(record: Iterable[String], hashers: Array[Int => Int], mBands: BandType) = { val hash = getHash(record, hashers) val subArrays = partitionArray(hash).zipWithIndex subArrays.flatMap { case (band, bandIndex) => val hashedBucket = mBands.lookup(bandIndex). headOption. flatMap{_.get(band)} hashedBucket }.flatten.toSet } 19

- 20. getHash(record,hashers) DISTRIBUTE RANDOM SEEDS, NOT PERMUTATION FUNCTIONS records.mapPartitions { iter => val rng = new Scala.util.random() iter.map(x => hashers.flatMap{h => getHashFunction(rng, h)(x)}) } 20

- 21. AND YET, OOM 21

- 22. BASIC LSH WITH A 2-STABLE GAUSSIAN DISTRIBUTION With data points, choose and , to solve the problem 22

- 23. WEB LOGS ARE SPARSE Input : hits per user, over 6 months, 2x50-ish integers/user (4GB) Output of length 1000 integers per user : 10 (parallel) bands, 100 (concatenated) hashes 64-bit integers : 40 GB Yet ! 23

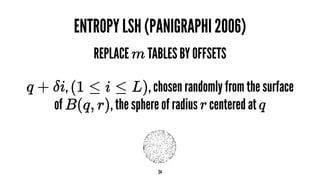

- 24. ENTROPY LSH (PANIGRAPHI 2006) REPLACE TABLES BY OFFSETS , , chosen randomly from the surface of , the sphere of radius centered at 24

- 25. ENTROPY LSH WITH A 2-STABLE GAUSSIAN DISTRIBUTION With data points, choose and , to solve the problem with as few as hash tables 25

- 26. BUT ... NETWORK COSTS ▸ Basic LSH : look up buckets, ▸ Entropy LSH : search for offsets 26

- 27. LAYERED LSH (BAHMANI ET AL. 2012) Output of your LSH family is in , with e.g. a cosine norm. For closer points, the chance of hashes hashing to the same bucket is high! 27

- 28. LAYERED LSH Have an LSH family for your norm on Likely that for all offsets 28

- 29. LAYERED LSH Output of hash generation is (GH(p), (H(p), p)) for all p. In Spark, group, or custom partitioner for (H(p), p) RDD. Network cost : 29

- 30. PERFORMANCE 30

- 31. FUTURE WORK HAVE A (BIG) WEBLOG ? ▸ Weve ▸ Yandex 31

- 32. FUTURE WORK LOCALITY-SENSITIVE HASHING FORESTS ! 32