A modern approach to AI AI_02_agents_Strut.pptx

- 1. Artificial Intelligence Intelligent Agents AIMA Chapter 2 Image: "Robot at the British Library Science Fiction Exhibition" by BadgerGravling

- 4. What is an Agents? • An agent is anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators. • Control theory: A closed-loop control system (= feedback control system) is a set of mechanical or electronic devices that automatically regulate a process variable to a desired state or set point without human interaction. The agent is called a controller. • Softbot: Agent is a software program that runs on a host device.

- 5. Agent Function and Agent Program 𝑓 ∶ 𝑃∗ → 𝐴 • Sensors • Memory • Computational power 𝑎 = 𝑓(𝑝) 𝑎 The agent program is a concrete implementation of this function for a given physical system. Agent = architecture (hardware) + agent program (implementation of 𝑓) The agent function maps from the set of all possible percept sequences 𝑃∗ to the set of actions 𝐴 formulated as an abstract mathematical function. 𝑝

- 6. Agents Definition of Agent: Agent is anything that can be viewed as perceiving the environment through sensors and acting upon the environment through actuators. - The human agent has - Eyes, ears, and other organs for sensors - Hands, legs , vocal tract for actuators - A Robotic agent has - cameras and infrared range finders for sensors - Variou motors for actuators - A software agent - receives keystrokes, file contents and network packets as sensory inputs - Acts on the environment by displaying on the screen, writing files and sending network packets Percept vs Percept Sequence:- Percent is the agent’s perceptual input at any given instant and percept sequence is the complete history of everything the agent has ever perceived

- 7. Agent Function Vs Agent Program - The Vacuum cleaner world - Has jus two locations : Square A and B - The Vacuum Agent - Perceives which square it is in and whether there is dirt in the square - Can choose to move left, move right, such up the dirt or do nothing - Agent Function - If the current square is dirty, then suck; otherwise move to the other square

- 8. Example: Vacuum-cleaner World • Percepts: Location and status, e.g., [A, Dirty] • Actions: Left, Right, Suck, NoOp Implemented agent program: function Vacuum-Agent([location, status]) returns an action 𝑎 if status = Dirty then return Suck else if location = A then return Right else if location = B then return Left Agent function: 𝑓 ∶ 𝑃∗ → 𝐴 Percept Sequence Action Right Suck Left [A, Clean] [A, Dirty] … [A, Clean], [B, Clean] … Most recent Percept 𝑝 [A, Clean], [B, Clean], [A, Dirty] Suck … Problem: This table can become infinitively large!

- 9. Agent Function vs Agent Program -Agent function- describes agent’s behavior by mapping any given percept sequence to an action. - To describe any given agent , we have to tabulate the agent function- and this will typically be a very large table(potentially infinitely large table) - We can , in principle , construct this table by trying out all possible percept sequence and recording which action the agent does in response - This table is external characterization of the agent - Agent function abstract mathematical description - Agent program- is an internal implementation of the agent function for an artificial agent - It is a concrete implementation running within sum physical system.

- 11. Good Behavior: The Concept of Rationality We learnt the we should design agents that “ act rational” How do we define action rationally so we can write programs? Should we consider the environment where the agent will be deployed?

- 12. Good Behavior: The Concept of Rationality - A rational agent is one that does the right thing(not a definition of rational agent) - In other words, every entry in the table for the agent function is filled out correctly - What does it mean to do the right thing? - We consider the consequences of the agent’s behavior - When an agent is plunked down in an environment, it generates a sequence of actions according to the percept it receives. - The sequence of actions causes the environment to go through a sequence of states - If this sequence of environment is desirable , the agent has performed well

- 13. Good Behavior: The Concept of Rationality - We are interested in environment states not agent states - We should not define success in terms of the agent’s opinion - The agent could achieve a perfect rationality simply by deluding itself its performance was perfect - Human agents for example are notorious for “ sour grapes”- believing that they did not really want something after not getting it.

- 14. Performance Measure of a Rational vacuum cleaner agent - Amount of dirt cleaned up, - Amount of time taken - Amount of electricity consumed - Amount of noise generated etc.

- 15. Rational Agents: What is Good Behavior? Foundation • Consequentialism: Evaluate behavior by its consequences. • Utilitarianism: Maximize happiness and well-being. Definition of a rational agent: “For each possible percept sequence, a rational agent should select an action that maximizes its expected performance measure, given the evidence provided by the percept sequence and the agent’s built-in knowledge.” • Performance measure: An objective criterion for success of an agent's behavior (often called utility function or reward function). • Expectation: Outcome averaged over all possible situations that may arise. Rule: Pick the action that maximize the expected utility 𝑎 = argmax𝑎∈A 𝐸 𝑈 𝑎)

- 16. Rational Agents This means: • Rationality is an ideal – it implies that no one can build a better agent • Rationality ≠ Omniscience – rational agents can make mistakes if percepts and knowledge do not suffice to make a good decision • Rationality ≠ Perfection – rational agents maximize expected outcomes not actual outcomes • It is rational to explore and learn – I.e., use percepts to supplement prior knowledge and become autonomous • Rationality is often bounded by available memory, computational power, available sensors, etc. Rule: Pick the action that maximize the expected utility 𝑎 = argmax𝑎∈A 𝐸 𝑈 𝑎)

- 17. Example: Vacuum-cleaner World • Percepts: Location and status, e.g., [A, Dirty] • Actions: Left, Right, Suck, NoOp Implemented agent program: function Vacuum-Agent([location, status]) returns an action if status = Dirty then return Suck else if location = A then return Right else if location = B then return Left Agent function: Percept Sequence Action Right Suck Left [A, Clean] [A, Dirty] … [A, Clean], [B, Clean] … What could be a performance measure? Is this agent program rational?

- 19. Problem Specification: PEAS Performance measure Performance measure Environment Actuators Sensors Defines utility and what is rational Components and rules of how actions affect the environment. Defines available actions Defines percepts

- 20. Example: Automated Taxi Driver Performance measure • Safe • fast • legal • comfortable trip • maximize profits Environment • Roads • other traffic • pedestrians • customers Actuators • Steering wheel • acceler ator • brake • signal • horn Sensors • Cameras • sonar • speedometer • GPS • Odometer • engine sensors • keyboa rd

- 21. Example: Spam Filter Performance measure • Accuracy: Minimizing false positives, false negatives Environment • A user’s email account • email server Actuators • Mark as spam • delete • etc. Sensors • Incoming messages • other information about user’s account

- 24. Types of Environment 1. Observability : Fully ( complete) , Partial , Hidden 2. Predictability: Deterministic , Strategic, Stochastic 3. Interaction: Episodic / Sequential 4. Agents: Single-Agent, Multi-agent(Competitive, Cooperative) 5. Time: Static/Dynamic 6. State:- Discrete, Continuous(Time , Percept, actions)

- 25. Environment Types vs. vs. vs. Fully observable: The agent's sensors give it access to the complete state of the environment. The agent can “see” the whole environment. Partially observable: The agent cannot see all aspects of the environment. E.g., it can’t see through walls Deterministic: Changes in the environment is completely determined by the current state of the environment and the agent’s action. Stochastic: Changes cannot be determined from the current state and the action (there is some randomness). Strategic: The environment is stochastic and adversarial. It chooses actions strategically to harm the agent. E.g., a game where the other player is modeled as part of the environment. Known: The agent knows the rules of the environment and can predict the outcome of actions. Unknown: The agent cannot predict the outcome of actions.

- 26. Environment Types vs. Continuous: Percepts, actions, state variables or vs. time are continuous leading to an infinite state, percept or action space. vs. vs. Static: The environment is not changing while agent is deliberating. Semidynamic: the environment is static, but the agent's performance score depends on how fast it acts. Dynamic: The environment is changing while the agent is deliberating. Discrete: The environment provides a fixed number of distinct percepts, actions, and environment states. Time can also evolve in a discrete or continuous fashion. Episodic: Episode = a self-contained sequence of actions. The agent's choice of action in one episode does not affect the next episodes. The agent does the same task repeatedly. Single agent: An agent operating by itself in an environment. Sequential: Actions now affect the outcomes later. E.g., learning makes problems sequential. Multi-agent: Agent cooperate or compete in the same environment.

- 35. Examples of Different Environments Observable Deterministic Episodic? Static Discrete Single agent Partially Partially Stochastic +Strategi c Stochastic Fully Determ. game Mechanics + Strategic* Episodic Semidynamic Sequential Dynamic Episodic Static Discrete Discrete Continuous Multi* Episodic Fully Deterministic Static Discrete Single Multi* Multi* Chess with a clock Scrabble Taxi driving Word jumble solver * Can be models as a single agent problem with the other agent(s) in the environment.

- 37. Designing a Rational Agent Remember the definition of a rational agent: “For each possible percept sequence, a rational agent should select an action that maximizes its expected performance measure, given the evidence provided by the percept sequence and the agent’s built-in knowledge.” Agent Function • Assess performance measure • Remember percept sequence • Built-in knowledge � � � � Percept to the agent function Action from the agent function Note: Everything outside the agent function can be seen as the environment. action

- 38. Hierarchy of Agent Types Utility-based agents Goal-based agents Model-based reflex agents Simple reflex agents Learning Agents

- 40. Simple Reflex Agent • Uses only built-in knowledge in the form of rules that select action only based on the current percept. This is typically very fast! • The agent does not know about the performance measure! But well-designed rules can lead to good performance. • The agent needs no memory and ignores all past percepts. The interaction is a sequence: 𝑝0, 𝑎0, 𝑝1, 𝑎1, 𝑝2, 𝑎2, … 𝑝𝑡, 𝑎𝑡, … Example: A simple vacuum cleaner that uses rules based on its current sensor input. Observe the world, choose an action, implement action, done Problems if environment is not fully-observable 𝑎 = 𝑓(𝑝)

- 42. Model-based Reflex Agent The interaction is a sequence: 𝑠0, 𝑎0, 𝑝1, 𝑠1, 𝑎1, 𝑝2, 𝑠2, 𝑎2, 𝑝3, … , 𝑝𝑡, 𝑠𝑡, 𝑎𝑡, … Example: A vacuum cleaner that remembers were it has already cleaned. Information about how the world evolves independently of the agent Information about how the agent’s own actions affect the world • Maintains a state variable to keeps track of aspects of the environment that cannot be currently observed. I.e., it has memory and knows how the environment reacts to actions (called transition function). • The state is updated using the percept. • There is now more information for the rules to make better decisions. 𝑠 𝑠′ = 𝑇(𝑠, 𝑎) 𝑎 = 𝑓(𝑝, 𝑠)

- 43. Transition Function • The environment is modeled as a discrete dynamical system. • Changed in the environment are a sequence of states 𝑠0, 𝑠1, … 𝑠𝑇, where the index is the time step. • Example of a state diagram: switch off • States change because of: a. System dynamics of the environment. b. The actions of the agent. • Both types of changes are represented by the transition function written as 𝑠𝑠 = 𝑇(𝑠, 𝑎) 𝑇: 𝑆 × 𝐴 → 𝑆 � � � � … set of states … set of available actions 𝑎 ∈ 𝐴 … an action 𝑠 ∈ 𝑆 … current state 𝑠′ ∈ 𝑆 … next state or Light is off Light is on switch on

- 44. State Representation States help to keep track of the environment and the agent in the environment. This is often also called the system state. The representation can be • Atomic: Just a label for a black box. E.g., A, B • Factored: A set of attribute values called fluents. E.g., [location = left, status = clean, temperature = 75 deg. F] We often construct atomic labels from factored information. E.g.: If the agent’s state is the coordinate x = 7 and y = 3, then the atomic state label could be the string “(7, 3)”. With the atomic representation, we can only compare if two labels are the same. With the factored state representation, we can reason more and calculate the distance between states! The set of all possible states is called the state space 𝑆. This set is typically very large! Action causes transition Variables describing the system state are called “fluents”

- 45. Old-school vs. Smart Thermostat Smart thermostat Percepts Old-school thermostat Percepts States States

- 46. Old-school vs. Smart Thermostat Smart thermostat temperature: Low, ok, high Percepts • Temp: deg. F • Outside temp. • Weather report • Energy curtailment • Someone walking by • Someone changes temp. • Day & time • … Old-school thermostat Percepts States No states need States Factored states • Estimated time to cool the house • Someone home? • How long till someone is coming home? • A/C: on, off Set temperature range Change temperatur e when you are too cold/warm.

- 48. Goal-based Agent • The agent has the task to reach a defined goal state and is then finished. • The agent needs to move towards the goal. It can use search algorithms to plan actions that lead to the goal. • Performance measure: the cost to reach the goal. 𝑎 = argmin𝑎0∈A 𝑇 � 𝑐𝑡 � 𝑠𝑇 ∈ 𝑆𝑔 𝑔𝑜𝑎𝑙 𝑡=0 Sum of the cost of a planed sequence of actions that leads to a goal state The interaction is a sequence: 𝑠0, 𝑎0, 𝑝1, 𝑠1, 𝑎1, 𝑝2, 𝑠2, 𝑎2, … , 𝑠𝑔𝑔𝑜𝑎𝑙 cost plan

- 50. Utility-based Agent • The agent uses a utility function to evaluate the desirability of each possible states. This is typically expressed as the reward of being in a state 𝑅(𝑠). • Choose actions to stay in desirable states. • Performance measure: The discounted sum of expected utility over time. ∞ 𝑎 = arg𝑚𝑎𝑥𝑎 D � 𝛾𝛾𝑡𝑟 0∈A 𝑡 𝑡=0 Utility is the expected future discounted reward Techniques: Markov decision processes, reinforcement learning

- 51. Agents that Learn The learning element modifies the agent program (reflex-based, goal- based, or utility-based) to improve its performance. Agent program How is the agent currently performing? Exploration Update the agent program

- 52. Example: Smart Thermostat Smart thermostat Percepts • Temp: deg. F • Outside temp. • Weather report • Energy curtailment • Someone walking by • Someone changes temp. • Day & time • … States Factored states • Estimated time to cool the house • Someone home? • How long till someone is coming home? • A/C: on, off Change temperature when you are too cold/warm.

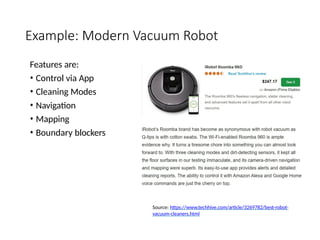

- 53. Example: Modern Vacuum Robot Features are: • Control via App • Cleaning Modes • Navigation • Mapping • Boundary blockers Source: https://www.techhive.com/article/3269782/best-robot- vacuum-cleaners.html

- 54. PEAS Description of a Modern Robot Vacuum Performance measure Environment Actuators Sensors

- 55. What Type of Intelligent Agent is a Modern Robot Vacuum? Utility-based agents Goal-based agents Model-based reflex agents Simple reflex agents Does it collect utility over time? How would the utility for each state be defined? Does it have a goal state? Does it store state information. How would they be defined (atomic/factored)? Does it use simple rules based on the current percepts? Is it learning? Check what applies

- 56. What Type of Intelligent Agent is this?

- 57. PEAS Description of ChatGPT Performance measure Environment Actuators Sensors

- 58. How does ChatGPT work?

- 59. What Type of Intelligent Agent is ChatGPT? Utility-based agents Goal-based agents Model-based reflex agents Simple reflex agents Does it store state information. How would they be defined (atomic/factored)? Does it have a goal state? Does it collect utility over time? How would the utility for each state be defined? Is it learning? Answer the following questions: • Does ChatGPT pass the Touring test? • Is ChatGPT a rational agent? Why? Check what applies Does it use simple rules based on the current percepts? We will talk about knowledge-based agents later.

- 60. Intelligent Systems as Sets of Agents: Self-driving Car Utility-based agents Goal-based agents Model-based reflex agents Simple reflex agents Remember where every other car is and calculate where they will be in the next few seconds. React to unforeseen issues like a child running in front of the car quickly. Make sure the passenger has a pleasant drive (not too much sudden breaking = utility) Plan the route to the destination. It should learn! High-level planning Low-level planning

- 61. Some Environment Types Revisited vs. vs. vs. Fully observable: The agent’s sensors always show the whole state. Partially observable: The agent only perceives part of the state and needs to remember or infer the test. Deterministic: Percepts are 100% reliable and changes in the environment is completely determined by the current state of the environment and the agent’s action. Stochastic: • Percepts are unreliable (noise distribution, sensor failure probability, etc.). This is called a stochastic sensor model. • The transition function is stochastic leading to transition probabilities and a Markov process. Known: The agent knows the transition function. Unknown: The needs to learn the transition function by trying actions. We will spend the whole semester on discussing algorithms that can deal with environments that have different combinations of these three properties.

- 62. Conclusion Search for a goal (e.g., navigation). Optimize functions (e.g., utility). Stay within given constraints (constraint satisfaction problem; e.g., reach the goal without running out of power) Deal with uncertainty (e.g., current traffic on the road). Learn a good agent program from data and improve over time (machine learning). Sensing (e.g, natural language processing, vision) Intelligent agents inspire the research areas of modern AI

- 63. Different types of AI agents: 1. Simple Reflex Agents: These agents make decisions based solely on the current percept (immediate stimuli from the environment). They ignore the rest of the percept history. For example, a simple reflex agent might apply the brakes if it detects the tail light of the vehicle in fr ont of it is on 1 . 2. Goal-Based Agents: Goal-based agents have a specific goal they aim to achieve. They select actions that improve progress toward that goal. For instance, a goal-based reflex agent would take actions to reach its goal, even if they are not neces sarily the best actions 2 . 3. Learning Agents: Learning agents adapt and improve their behavior over time by learning from

- 64. 4. Hierarchical Agents: These agents operate in a hierarchical manner, with different levels of decision-making. Higher-level goals guide lower- level actions. For example, a robot navigating a maze might hav e high-level goals (reach the exit) and low-level acti ons (move forward, turn left, etc.) 3 . 5. Collaborative Agents: Collaborative agents work together in multi-agent systems to achieve common goals. They coordinate actions and communicate with each other. Examples include teams of robots working togeth er or multiplayer game characters cooperating 1 . 6. Agent Program: An agent program implements the agent’s decision-making function. It maps

- 65. Simple Reflex Agent def simple_reflex_agent(percept): if percept == "tail light on": return "Apply brakes" else: return "Continue driving" # Example usage: current_percept = "tail light on" action = simple_reflex_agent(current_percept) print(f"Action: {action}")

- 66. class SimpleReflexAgent: def __init__(self): self.rules = { 'dirty': self.clean, 'clean': self.no_op } self.location = None def perceive(self, environment): self.location, status = environment return status def act(self, status): action = self.rules.get(status, self.no_op) return action() def clean(self): print(f"Agent cleaned the room at location {self.location}.") return "clean“ def no_op(self): print(f"Agent did nothing in the room at location {self.location}.") return "no_op"

- 67. if __name__ == "__main__": environment_state = ("A", "dirty") agent = SimpleReflexAgent() while True: percept = agent.perceive(environment_state) action_result = agent.act(percept) if action_result == "clean": environment_state = ("A", "clean") else: break

- 68. Goal Based Agent class GoalBasedAgent: def __init__(self, goal): self.goal = goal def select_action(self, percept): # Implement your logic here based on the goal and percept # For demonstration purposes, let's assume a simple action: return "Take a step towards the goal" # Example usage: goal_agent = GoalBasedAgent("Reach the exit") current_percept = "Obstacle ahead" action = goal_agent.select_action(current_percept) print(f"Action: {action}")

Editor's Notes

- #1: https://slideplayer.com/slide/4380559/

- #4: How do AI agents work? AI agents range from simple task-specific programs to sophisticated systems that combine perception, reasoning, and action capabilities. The most advanced agents in use today present the full potential of this technology, operating through a cycle of processing inputs, making decisions, and executing actions while continuously updating their knowledge. Perception and input processing AI agents begin by gathering and processing input from their environment. This could include parsing text commands, analyzing data streams, or receiving sensor data. The perception module converts raw inputs into a format the agent can understand and process. For example, when a customer submits a support request, an AI agent could process the ticket by analyzing text content, user history, and metadata like priority level and timestamp. Decision-making and planning Using machine learning models like NLP, sentiment analysis, and classification algorithms, agents evaluate their inputs against their objectives. These models work together: NLP first processes and understands the input text, sentiment analysis evaluates its tone and intent, and classification algorithms determine which category of response is most appropriate. This layered approach enables agents to process complex inputs and respond appropriately. They generate possible actions, assess potential outcomes, and select the most appropriate response based on their programming and current context. For instance, when handling the support ticket, the AI agent could evaluate content and urgency to determine whether to handle it directly or escalate to a human agent. Knowledge management Agents maintain and use knowledge bases that contain domain-specific information, learned patterns, and operational rules. Through Retrieval-Augmented Generation (RAG), agents can dynamically access and incorporate relevant information from their knowledge base when forming responses. In our support ticket example, the agent uses RAG to pull information from product documentation, past cases, and company policies to generate accurate, contextual solutions rather than relying solely on its training data. Action execution Once a decision is made, agents execute actions through their output interfaces. This could involve generating text responses, updating databases, triggering workflows, or sending commands to other systems. The action module ensures the chosen response is properly formatted and delivered. Continuing our example, the customer support agent might then send automated troubleshooting steps, route the ticket to a specialized department, or flag it for immediate human attention. Learning and adaptation Advanced AI agents can improve their performance over time through feedback loops and learning mechanisms. They analyze the outcomes of their actions, update their knowledge bases, and refine their decision-making processes based on success metrics and user feedback. Using reinforcement learning techniques, these agents develop optimal policies by balancing exploration (trying new approaches) with exploitation (using proven successful strategies). In the support scenario, the agent learns from resolution success rates and satisfaction scores to improve its future responses and routing decisions, treating each interaction as a learning opportunity to refine its decision-making model. Types of AI agents Businesses have a rich but complex landscape of AI agent options, ranging from simple task-specific automation tools to sophisticated multi-purpose assistants that can transform entire workflows. The choice or development of an AI agent depends on several factors—including technical complexity, implementation costs, and specific use cases—with some organizations opting for ready-to-use solutions while others invest in custom agents tailored to their unique needs. 1. Simple reflex agents Simple reflex agents are one of the most basic forms of artificial intelligence. These agents make decisions based solely on their current sensory input, responding immediately to environmental stimuli without needing memory or learning processes. Their behavior is governed by predefined condition-action rules, which specify how to react to particular inputs. Though they are limited in complexity, this straightforward approach makes them highly efficient and easy to implement, especially in environments where the range of possible actions is limited. Key components: Sensors: Much like human senses, these gather information from the environment. For a simple reflex agent, sensors are typically basic input devices that detect specific environmental conditions like temperature, light, or motion. Condition-action rules: These predefined rules determine how the agent responds to specific inputs. The logic is direct—if the agent detects a specific condition, it immediately performs a corresponding action. Actuators: These execute the decisions made by the agent, translating them into physical or digital responses that alter the environment in some way, such as activating a heating system or turning on lights. Use cases: Simple reflex agents are ideal for transparent, predictable environments with limited variables. Industrial safety sensors that immediately shut down machinery when detecting an obstruction in the work area. Automated sprinkler systems that activate based on smoke detection. Email auto-responders that send predefined messages based on specific keywords or sender addresses. 2. Model-based reflex agents Model-based reflex agents are a more advanced form of intelligent agents designed to operate in partially observable environments. Unlike simple reflex agents, which react solely based on current sensory input, model-based agents maintain an internal representation, or model, of the world. This model tracks how the environment evolves, allowing the agent to infer unobserved aspects of the current state. While these agents don’t actually “remember” past states in the way more advanced agents do, they use their world model to make better decisions about the current state. Key components: State tracker: Maintains information about the current state of the environment based on the world model and sensor history. World model: Contains two key types of knowledge, how the environment evolves independent of the agent, and how the agent’s actions affect the environment. Reasoning component: Uses the world model and current state to determine appropriate actions based on condition-action rules. Use cases: These agents are suitable for environments where the current state isn’t fully observable from sensor data alone. Smart home security systems: Using models of normal household activity patterns to distinguish between routine events and potential security threats. Quality control systems: Monitoring manufacturing processes by maintaining a model of normal operations to detect deviations. Network monitoring tools: Tracking network state and traffic patterns to identify potential issues or anomalies. 3. Goal-based agents Goal-based agents are designed to pursue specific objectives by considering the future consequences of their actions. Unlike reflex agents that act based on rules or world models, goal-based agents plan sequences of actions to achieve desired outcomes. They use search and planning algorithms to find action sequences that lead to their goals. Key components: Goal state: A clear description of what the agent aims to achieve Planning mechanism: The ability to search through possible sequences of actions that could lead to the goal. State evaluation: Methods to assess whether potential future states move closer to or further from the goal. Action selection: The process of choosing actions based on their predicted contribution toward reaching the goal. World model: Understanding of how actions change the environment, used for planning. Use cases: Goal-based agents are suited for tasks with clear, well-defined objectives and predictable action outcomes. Industrial robots: Following specific sequences to assemble products. Automated warehouse systems: Planning optimal paths to retrieve items. Smart heating systems: Planning temperature adjustments to reach desired comfort levels efficiently. Inventory management systems: Planning reorder schedules to maintain target stock levels. Task scheduling systems: Organizing sequences of operations to meet completion deadlines. 4. Learning agents A learning agent is an artificial intelligence system capable of improving its behavior over time by interacting with its environment and learning from its experiences. These agents modify their behavior based on feedback and experience, using various learning mechanisms to optimize their performance. Unlike simpler agent types, they can discover how to achieve their goals through experience rather than purely relying on pre-programmed knowledge. Key components: Performance element: The component that selects external actions, similar to the decision-making modules in simpler agents. Critic: Provides feedback on the agent’s performance by evaluating outcomes against standards, often using a reward or performance metric. Learning element: Uses critic’s feedback to improve the performance element, determining how to modify behavior to do better in the future. Problem generator: Suggests exploratory actions that might lead to new experiences and better future decisions. Use cases: Learning agents are suited for environments where optimal behavior isn’t known in advance and must be learned through experience. Industrial process control: Learning optimal settings for manufacturing processes through trial and error. Energy management systems: Learning patterns of usage to optimize resource consumption. Customer service chatbots: Improving response accuracy based on interaction outcomes. Quality control systems: Learning to identify defects more accurately over time. 5. Utility-based agents A utility-based agent makes decisions by evaluating the potential outcomes of its actions and choosing the one that maximizes overall utility. Unlike goal-based agents that aim for specific states, utility-based agents can handle tradeoffs between competing goals by assigning numerical values to different outcomes. Key components: Utility function: A mathematical function that maps states to numerical values, representing the desirability of each state. State evaluation: Methods to assess current and potential future states in terms of their utility. Decision mechanism: Processes for selecting actions that are expected to maximize utility. Environment model: Understanding of how actions affect the environment and resulting utilities. Use cases: Utility-based agents are suited for scenarios requiring balance between multiple competing objectives. Resource allocation systems: Balancing machine usage, energy consumption, and production goals. Smart building management: Optimizing between comfort, energy efficiency, and maintenance costs. Scheduling systems: Balancing task priorities, deadlines, and resource constraints. 6. Hierarchical agents Hierarchical agents are structured in a tiered system, where higher-level agents manage and direct the actions of lower-level agents. This architecture breaks down complex tasks into manageable subtasks, allowing for more organized control and decision-making. Key components: Task decomposition: Breaks down complex tasks into simpler subtasks that can be managed by lower-level agents. Command hierarchy: Defines how control and information flow between different levels of agents. Coordination mechanisms: Ensures different levels of agents work together coherently. Goal delegation: Translates high-level objectives into specific tasks for lower-level agents. Use cases: Hierarchical agents are best suited for systems with clear task hierarchies and well-defined subtasks. Manufacturing control systems: Coordinating different stages of production processes. Building automation: Managing basic systems like HVAC and lighting through layered control. Robotic task planning: Breaking down simple robotic tasks into basic movements and actions. 7. Multi-agent System (MAS) A multi-agent system involves multiple autonomous agents interacting within a shared environment, working independently or cooperatively to achieve individual or collective goals. While often confused with more advanced AI systems, traditional MAS focuses on relatively simple agents interacting through basic protocols and rules. Types of multi-agent systems: Cooperative systems: Agents share information and resources to achieve common goals. For example, multiple robots working together on basic assembly tasks. Competitive systems: Agents compete for resources following defined rules. Like multiple bidding agents in a simple auction system. Mixed systems: Combines both cooperative and competitive behaviors, such as agents sharing some information while competing for limited resources. Key components: Communication protocols: Define how agents exchange information. Interaction rules: Specify how agents can interact and what actions are permitted. Resource management: Methods for handling shared resources between agents. Coordination mechanisms: Systems for organizing agent activities and preventing conflicts. Use cases: MAS is best suited for scenarios with clear interaction rules and relatively simple agent behaviors. Warehouse management: Multiple robots coordinating to move and sort items. Basic manufacturing: Coordinating simple assembly tasks between multiple machines. Resource allocation: Managing shared resources like processing time or storage space.

- #15: Rational Agents and Ethical Foundations in Decision-Making When designing rational agents in artificial intelligence, key ethical principles and decision-making concepts guide their behavior. These include consequentialism, utilitarianism, and specific definitions related to rational agency. 1. Consequentialism Consequentialism evaluates actions based on their outcomes. For AI systems, this means assessing decisions by the results they produce rather than the intentions or processes behind them. Example: A delivery drone chooses a flight path that avoids high-traffic areas. The decision is deemed rational and ethical because it minimizes the risk of accidents (positive consequence). 2. Utilitarianism Utilitarianism, a subset of consequentialism, focuses on maximizing happiness or well-being for the greatest number of people. In AI, this principle can be applied to design systems that prioritize outcomes beneficial to society. Example: A healthcare scheduling AI allocates hospital resources to maximize the number of patients treated effectively. It chooses strategies that improve overall patient outcomes, aligning with utilitarian principles. 3. Definition of a Rational Agent A rational agent is defined as: "For each possible percept sequence, a rational agent should select an action that maximizes its expected performance measure, given the evidence provided by the percept sequence and the agent’s built-in knowledge." Key terms in this definition include: Percept Sequence: The history of inputs or observations received by the agent. Example: For a self-driving car, a percept sequence includes sensor readings like traffic data, road conditions, and GPS signals. Action Selection: The agent evaluates possible actions and selects the one that maximizes its expected performance measure. Maximizing Expected Performance: The goal is to choose actions that, on average, lead to the best outcomes under uncertainty. 4. Performance Measure A performance measure is an objective criterion for evaluating the success of an agent’s behavior. Often referred to as a utility function or reward function, it defines what the agent aims to achieve. Example: A chess-playing AI might use a performance measure based on winning, drawing, or losing games. The utility function assigns higher rewards to strategies that increase the likelihood of winning. 5. Expectation Expectation represents the average outcome across all possible situations that might arise, accounting for uncertainty and variability in the environment. Example: A weather-predicting AI calculates the expected rainfall by averaging data from multiple models and historical trends. It uses this expectation to guide decisions like irrigation schedules. Connecting Rational Agents to Ethical Foundations Rational agents embody the principles of consequentialism and utilitarianism when their performance measures align with ethical goals: Consequentialism in Rational Agents: Agents evaluate actions by their consequences using performance measures. Utilitarianism in Rational Agents: Agents prioritize actions that maximize aggregate utility (e.g., happiness, well-being). Practical Applications and Challenges In designing rational agents: Performance Measure Selection: Designers must carefully define performance measures to reflect ethical and societal goals. Poorly defined measures can lead to unintended consequences. Example: An AI trained to maximize profits might exploit users unless fairness and well-being are included in the utility function. Handling Uncertainty: Rational agents rely on expectations, which can be influenced by incomplete or biased data. By combining rationality with ethical foundations like consequentialism and utilitarianism, AI systems can act in ways that are both intelligent and aligned with societal values.

- #16: 1. Rationality as an Ideal Rationality is an ideal standard of behavior for agents. It implies that an agent is designed to make the best possible decisions based on its goals, knowledge, and capabilities. However, this does not mean it is possible to design an agent that no one could ever improve upon. Example: Imagine a chess-playing AI like AlphaZero. While it may play at a superhuman level, someone might still develop a better chess AI in the future. Rationality, in this context, is about maximizing performance within current constraints, not achieving unassailable perfection. 2. Rationality ≠ Omniscience Being rational does not mean the agent is all-knowing. Mistakes can occur if the agent lacks sufficient information or if the percepts (inputs) it receives are ambiguous or incomplete. Rationality is about making the best decision based on the available information, not guaranteeing flawless outcomes. Example: A self-driving car may encounter a situation where sensors fail to detect a hidden obstacle due to poor lighting. Based on the percepts it receives, the car makes a rational decision, but it could still make a mistake because it is not omniscient. 3. Rationality ≠ Perfection A rational agent is designed to maximize expected outcomes, not necessarily achieve the best possible outcome in every single instance. This distinction highlights the probabilistic nature of decision-making under uncertainty. Example: A delivery drone chooses a route to avoid predicted bad weather. Despite this rational choice, unexpected storms may still delay the delivery. The decision was rational because it maximized the expected outcome based on available weather forecasts. 4. Exploration and Learning are Rational A rational agent should explore and learn to enhance its decision-making capabilities over time. Using percepts (observations) to supplement prior knowledge allows the agent to adapt to changing environments and become more autonomous. Example: A robotic vacuum cleaner might initially map a new house incorrectly. Over time, as it gathers perceptual data about the layout, it refines its map and becomes more efficient at cleaning. This process of exploration and learning is rational behavior. 5. Bounded Rationality Rationality is often constrained by the agent's physical and computational limitations, such as memory capacity, processing power, or sensor accuracy. This concept, known as bounded rationality, acknowledges that agents operate within practical limitations. Example: A smartphone assistant like Siri cannot process complex, multi-sentence user queries instantly due to hardware and software constraints. It operates rationally within these limits, providing the best response it can based on its programming and resources. In summary, rationality is an idealized concept guiding the design of intelligent agents. It focuses on making the best possible decisions given the agent's goals, knowledge, and constraints, without requiring omniscience or perfection. Rational agents are designed to explore, learn, and adapt within their bounded capacities.

- #17: Analysis of the Vacuum-Cleaner World 1. Performance Measure The performance measure defines the success of the agent's behavior. In the context of the vacuum-cleaner world, possible performance measures could include: The total amount of dirt cleaned over time. Minimizing the time taken to clean all dirty locations. Energy efficiency, such as minimizing unnecessary moves or actions. For example: Performance Measure = Total Dirt Cleaned: The agent's score increases each time it sucks up dirt. Performance Measure = Cleanliness of the Environment: The agent aims to keep both locations consistently clean. Performance Measure = Efficiency: The agent minimizes actions while ensuring both locations are clean. 2. Rationality of the Agent Program To determine if the agent program is rational, we evaluate whether it selects actions that maximize the expected performance measure based on its percepts and built-in knowledge. Agent Behavior Analysis: If the agent perceives that the current location is dirty, it executes the Suck action. If the location is clean and the agent is at A, it moves to B (action = Right). If the location is clean and the agent is at B, it moves to A (action = Left). Strengths: The agent reacts appropriately to dirt by cleaning it (Suck when the status is dirty). The agent ensures it visits both locations by moving back and forth between them when no dirt is detected. Limitations: The agent does not adapt dynamically if a location becomes dirty after it has been cleaned. The program may waste energy by continuously moving between A and B, even when the environment is clean. Conclusion on Rationality The agent program is rational if the performance measure is focused solely on cleaning dirt when it is detected. In that case, the agent maximizes cleanliness based on its percepts and knowledge. However, the program is not rational if the performance measure includes energy efficiency or minimizing unnecessary actions, as it moves between locations even when no dirt is present. Improvements for Better Rationality To enhance the rationality of the agent: Include a NoOp Action: The agent could remain idle if no dirt is detected, conserving energy. Example: if location is clean and no new dirt is detected, return NoOp. Incorporate Memory: The agent could remember previously cleaned locations and revisit them only if percepts indicate new dirt. Example: Maintain a "clean status" for each location in memory. Dynamic Exploration: The agent could periodically check locations for dirt instead of continuously moving. With these improvements, the agent would better align with performance measures that value efficiency and adaptivity. def Vacuum_Agent(location, status): """ Vacuum-Agent function that takes the current location and status as input and returns an appropriate action. :param location: Current location of the vacuum ('A' or 'B') :param status: Current status of the location ('Clean' or 'Dirty') :return: Action to be performed ('Suck', 'Right', or 'Left') """ if status == "Dirty": return "Suck" elif location == "A": return "Right" elif location == "B": return "Left" # Example usage location = "A" status = "Dirty" action = Vacuum_Agent(location, status) print(f"Location: {location}, Status: {status} -> Action: {action}") location = "A" status = "Clean" action = Vacuum_Agent(location, status) print(f"Location: {location}, Status: {status} -> Action: {action}") location = "B" status = "Clean" action = Vacuum_Agent(location, status) print(f"Location: {location}, Status: {status} -> Action: {action}")

- #19: PEAS: Performance, Environment, Actuators, and Sensors The PEAS framework specifies the problem a rational agent is designed to solve. It helps define the task environment for an agent by breaking it into four components: Performance Measure (P): Specifies the criteria used to evaluate the agent's success. Environment (E): Describes the external setting or world in which the agent operates. Actuators (A): The mechanisms or tools the agent uses to perform actions in the environment. Sensors (S): The mechanisms or tools the agent uses to perceive the environment. Example: Vacuum-Cleaner World 1. Performance Measure (P): The agent is evaluated based on: Amount of dirt cleaned. Minimizing energy usage (e.g., minimizing unnecessary moves). Maintaining a clean environment over time. Example: A higher score is achieved by cleaning all dirt quickly and efficiently. 2. Environment (E): A simplified grid world with two locations: A and B. Each location can have a status of Dirty or Clean. The agent operates in this deterministic and observable environment. 3. Actuators (A): The agent has the following actuators: Suck: Cleans dirt from the current location. Move Right: Moves to the location on the right (from A to B). Move Left: Moves to the location on the left (from B to A). NoOp: Performs no action (useful when the environment is clean). 4. Sensors (S): The agent's sensors provide: Location: The current position of the agent (either A or B). Status: The cleanliness of the current location (Dirty or Clean). PEAS Summary for Vacuum-Cleaner World ComponentDescriptionPerformance MeasureMaximize cleanliness, minimize energy usage and unnecessary movements.EnvironmentTwo locations (A, B), each either Dirty or Clean.ActuatorsSuck, Move Left, Move Right, NoOp.SensorsLocation (A or B), Status (Clean or Dirty).This specification ensures clarity in designing and evaluating the agent's behavior.

- #20: PEAS Example: Automated Taxi Driver The PEAS framework for an automated taxi driver defines the problem space and helps structure its design. Here's how the PEAS framework applies: 1. Performance Measure (P): The performance measure evaluates the success of the taxi driver. Examples include: Passenger satisfaction (e.g., smooth and safe ride). Minimized travel time for passengers. Fuel efficiency (e.g., minimizing fuel consumption or battery usage for electric taxis). Adherence to traffic rules to avoid accidents or penalties. Maximizing profits (e.g., efficient routing to pick up more passengers). A composite score could combine these factors, balancing safety, efficiency, and customer satisfaction. 2. Environment (E): The environment describes the world in which the taxi operates: Urban city environment with streets, traffic signals, pedestrians, and other vehicles. Dynamic conditions, such as changing weather, traffic jams, and road closures. Passenger-related elements, such as pick-up and drop-off locations. Geographical boundaries, like the taxi’s operating area. 3. Actuators (A): The actuators are the components that allow the taxi to act in its environment: Steering wheel: For navigating the taxi. Accelerator and brakes: For controlling speed and stopping. Gear system: For moving forward or reversing. Indicators and horn: For communication with other road users. Doors: For allowing passengers to enter and exit. Display screen: To communicate with passengers (e.g., route, fare, and time). 4. Sensors (S): The sensors provide the taxi with information about the environment and its internal state: GPS: For location tracking and navigation. Cameras: For detecting road signs, lanes, pedestrians, and vehicles. LiDAR or Radar: For sensing nearby objects and distances. Speedometer: To measure the taxi's speed. Fuel gauge: To monitor fuel or battery levels. Microphones: To detect audio signals (e.g., honking or verbal instructions from passengers). Internal sensors: For detecting passenger presence (e.g., weight sensors on seats). PEAS Summary for Automated Taxi Driver ComponentDescriptionPerformance MeasurePassenger satisfaction, safe driving, efficient fuel usage, rule adherence, profits.EnvironmentUrban streets, traffic, pedestrians, passengers, dynamic weather, and road conditions.ActuatorsSteering wheel, accelerator, brakes, gears, indicators, horn, doors, display.SensorsGPS, cameras, LiDAR, radar, speedometer, fuel gauge, microphones, internal sensors.This PEAS specification helps guide the development of an automated taxi system by clearly defining what it needs to achieve, the challenges it faces, and the tools it can use.

- #21: PEAS Example: Spam Filter The PEAS framework applied to a spam filter provides a structured approach to understanding its design and function. 1. Performance Measure (P): The performance measure evaluates the effectiveness of the spam filter. Examples include: Accuracy: Correctly classifying emails as spam or non-spam. Precision: Minimizing false positives (legitimate emails marked as spam). Recall: Minimizing false negatives (spam emails marked as legitimate). Efficiency: Speed of processing and classifying emails. User satisfaction: Ensuring minimal user intervention for reclassification. 2. Environment (E): The environment represents the system and external factors the spam filter interacts with: Email inboxes: Containing legitimate and spam emails. Users: Who may manually classify or correct spam decisions. Email servers: Providing incoming email streams. Attackers: Sending spam emails with evolving techniques (e.g., obfuscation, phishing). 3. Actuators (A): The actuators are the actions the spam filter can perform: Marking emails: Classify emails as either "Spam" or "Not Spam." Moving emails: Relocating spam emails to a designated spam folder. Alerting users: Notifying users about potentially harmful emails (e.g., phishing attempts). Learning updates: Adapting its classification model based on user feedback. 4. Sensors (S): The sensors provide the spam filter with the necessary input to make decisions: Email content: Text, links, and attachments in the email body. Email metadata: Sender information, subject lines, and timestamps. User feedback: Corrections to the spam classification (e.g., marking spam emails as legitimate and vice versa). Spam databases: External sources for known spam patterns or blacklisted senders. Behavior patterns: Frequency of emails from certain senders, suspicious keywords, or abnormal activity. PEAS Summary for Spam Filter ComponentDescriptionPerformance MeasureHigh accuracy, low false positives/negatives, speed, user satisfaction.EnvironmentEmail inbox, users, email servers, spammers, evolving spam techniques.ActuatorsMark emails, move to spam folder, notify users, update learning models.SensorsEmail content, metadata, user feedback, spam databases, behavior patterns.This PEAS framework helps design a spam filter that is effective, adaptable to new threats, and user-friendly while minimizing errors and improving classification accuracy over time.

- #24: Explanation with Real-Time Examples 1. Observability Fully Observable: The agent has complete and accurate knowledge of the environment at any given time. Example: A chess game where the board and positions of all pieces are visible to both players. Partially Observable: The agent has limited information about the environment due to missing data or constraints. Example: A poker game where players can see their cards but not their opponents’ cards. Hidden (Unobservable): The agent has no direct information about the environment and must infer it entirely. Example: Diagnosing a malfunction in a system without any direct sensors or logs, relying solely on external symptoms. 2. Predictability Deterministic: The outcome of actions is entirely predictable and has no uncertainty. Example: A calculator performing mathematical operations (e.g., 2 + 2 always equals 4). Strategic: The outcome depends on the actions of other agents in the environment. Example: A game of chess where the next move depends on the strategy of the opponent. Stochastic: The outcome involves randomness or uncertainty. Example: Weather forecasting, where outcomes are influenced by probabilistic factors. 3. Interaction Episodic: The agent's actions in one episode do not affect future episodes. Each task is independent. Example: Image classification tasks where each image is evaluated independently. Sequential: The agent's actions in one step influence future states and actions. Example: Autonomous driving, where current decisions (e.g., turning left) affect future scenarios (e.g., encountering traffic). 4. Agents Single-Agent: The agent operates in an environment without interaction with other agents. Example: A robot vacuum cleaner operating in a house without interference from other robots. Multi-Agent: Multiple agents interact in the environment. Competitive: Agents compete against each other to achieve their goals. Example: A soccer match where teams compete to score goals. Cooperative: Agents work together to achieve a shared objective. Example: Multiple robots collaborating to move a heavy object. 5. Time Static: The environment remains unchanged unless acted upon by the agent. Example: A crossword puzzle where the clues and grid remain static. Dynamic: The environment changes over time, even if the agent takes no action. Example: A stock trading environment where prices fluctuate constantly. 6. State Discrete: The environment consists of a finite number of states, actions, or percepts. Example: A board game like tic-tac-toe where there are limited positions and moves. Continuous: The environment has an infinite number of possible states, actions, or percepts. Example: Controlling a drone in a 3D space where position and velocity are continuous variables. Real-Time Example Summary CharacteristicReal-Time ExampleObservabilityFully (Chess), Partial (Poker), Hidden (System Diagnosis).PredictabilityDeterministic (Calculator), Strategic (Chess), Stochastic (Weather).InteractionEpisodic (Image Classification), Sequential (Autonomous Driving).AgentsSingle-Agent (Vacuum Cleaner), Multi-Agent (Soccer Match, Collaboration).TimeStatic (Crossword Puzzle), Dynamic (Stock Market).StateDiscrete (Tic-Tac-Toe), Continuous (Drone Control).This classification aids in designing agents that are tailored to specific environments and tasks.

- #38: Artificial Intelligence (AI) agents are designed to perform specific tasks by making decisions based on data and predefined rules. These agents vary in complexity and functionality, depending on their design and purpose. Let’s explore the different types of AI agents, including Learning Agents, with real-time examples to make the concepts clearer: 1. Simple Reflex Agents Description: These are the most basic AI agents that operate based on current data and predefined condition-action rules. They don’t have memory or the ability to learn from past experiences. Real-Time Example: Smart Thermostat: A smart thermostat adjusts the temperature based on the current room temperature. If the room is too hot, it turns on the cooling system; if it’s too cold, it turns on the heating system. It doesn’t consider past temperatures or future predictions—it simply reacts to the current condition. 2. Model-Based Reflex Agents Description: These agents are more advanced than simple reflex agents. They maintain an internal model of the world, which helps them make decisions even when the environment is partially observable. Real-Time Example: Self-Driving Cars: A self-driving car uses sensors and cameras to create an internal model of its surroundings. If a pedestrian suddenly crosses the road, the car uses its internal model to predict the pedestrian’s movement and decides whether to brake or steer away. It doesn’t just react to the current sensor data but also considers the context of the situation. 3. Goal-Based Agents Description: These agents make decisions based on how close they are to achieving a specific goal. They plan and take actions to reach that goal. Real-Time Example: Fitness App: A fitness app like MyFitnessPal tracks your daily calorie intake and exercise. If your goal is to lose 10 pounds in a month, the app suggests workouts and meal plans based on your progress. It constantly evaluates how close you are to your goal and adjusts its recommendations accordingly. 4. Utility-Based Agents Description: These agents go beyond just achieving goals—they also consider the quality of the outcomes. They use utility functions to evaluate different states and choose actions that maximize expected utility. Real-Time Example: Investment Advisor Algorithm: Platforms like Betterment or Wealthfront use utility-based AI agents to manage investments. They don’t just aim to grow your portfolio; they also consider factors like risk tolerance, market conditions, and tax implications. The algorithm evaluates different investment options and chooses the one that maximizes your financial well-being while minimizing risk. 5. Learning Agents Description: These agents are the most advanced type of AI agents. They have the ability to learn from past experiences and improve their performance over time. They consist of four main components: Learning Element: Responsible for improving the agent’s performance. Performance Element: Makes decisions based on what the agent has learned. Critic: Provides feedback on the agent’s actions. Problem Generator: Suggests new actions to explore and learn from. Real-Time Example: Recommendation Systems: Platforms like Netflix or Spotify use learning agents to suggest movies, shows, or music based on your past behavior. For example, if you frequently watch action movies, the system learns your preferences and recommends similar content. Over time, it improves its recommendations by analyzing your feedback (e.g., whether you liked or skipped a suggestion). Comparison of AI Agents in a Real-Time Scenario: Smart Home System Imagine a smart home system that uses different types of AI agents to manage various tasks: Simple Reflex Agent: Example: A motion sensor light turns on when it detects movement in a room and turns off after a few minutes of inactivity. It doesn’t consider past movements or future predictions—it simply reacts to the current condition. Model-Based Reflex Agent: Example: A smart security camera detects a person at the door. It uses an internal model to determine if the person is a family member (based on facial recognition) or a stranger. If it’s a stranger, it sends an alert to the homeowner. Goal-Based Agent: Example: A smart thermostat aims to maintain a comfortable temperature while minimizing energy usage. It plans ahead by learning your daily routine and adjusting the temperature before you wake up or return home. Utility-Based Agent: Example: A smart energy management system evaluates the cost of electricity at different times of the day. It decides when to run appliances like the washing machine or dishwasher to minimize energy costs while ensuring your tasks are completed on time. Learning Agent: Example: A smart home assistant like Amazon Alexa learns your daily habits over time. If you regularly ask for the weather forecast at 7 AM, it starts providing the information automatically without being asked. It also improves its responses based on your feedback and usage patterns. Conclusion AI agents come in various forms, each suited for different tasks and levels of complexity. Simple reflex agents are ideal for straightforward tasks, while model-based, goal-based, and utility-based agents handle more complex scenarios by considering internal models, goals, and the quality of outcomes. Learning agents take this a step further by improving their performance over time through experience. By understanding these types of agents, we can design smarter systems that improve efficiency, accuracy, and user experience in real-world applications like smart homes, fitness tracking, financial management, and personalized recommendations.

- #40: Simple reflex agents The simplest kind of agent is the simple reflex agent. These agents select actions on the basis SIMPLE REFLEX AGENT of the current percept, ignoring the rest of the percept history. For example, the vacuum agent whose agent function is tabulated in Figure 2.3 is a simple reflex agent, because its decision is based only on the current location and on whether that contains dirt. An agent program for this agent is shown in Figure 2.8. Notice that the vacuum agent program is very small indeed compared to the corresponding table. The most obvious reduction comes from ignoring the percept history, which cuts down the number of possibilities from 4 T to just 4. A further, small reduction comes from the fact that, when the current square is dirty, the action does not depend on the location. Imagine yourself as the driver of the automated taxi. If the car in front brakes, and its brake lights come on, then you should notice this and initiate braking. In other words, some processing is done on the visual input to establish the condition we call “The car in front is braking.” Then, this triggers some established connection in the agent program to the action “initiate braking.” We call such a connection a condition–action rule, 7 written as CONDITION–ACTION RULE if car-in-front-is-braking then initiate-braking. Humans also have many such connections, some of which are learned responses (as for driving) and some of which are innate reflexes (such as blinking when something approaches the eye). In the course of the book, we will see several different ways in which such connections can be learned and implemented.

- #42: Model-based reflex agents The most effective way to handle partial observability is for the agent to keep track of the part of the world it can’t see now. That is, the agent should maintain some sort of internal INTERNAL STATE state that depends on the percept history and thereby reflects at least some of the unobserved aspects of the current state. For the braking problem, the internal state is not too extensive— just the previous frame from the camera, allowing the agent to detect when two red lights at the edge of the vehicle go on or off simultaneously. For other driving tasks such as changing lanes, the agent needs to keep track of where the other cars are if it can’t see them all at once. Updating this internal state information as time goes by requires two kinds of knowledge to be encoded in the agent program. First, we need some information about how the world evolves independently of the agent—for example, that an overtaking car generally will be closer behind than it was a moment ago. Second, we need some information about how the agent’s own actions affect the world—for example, that when the agent turns the steering wheel clockwise, the car turns to the right or that after driving for five minutes northbound on the freeway one is usually about five miles north of where one was five minutes ago. This knowledge about “how the world works”—whether implemented in simple Boolean circuits or in complete scientific theories—is called a model of the world. An agent that uses such a model is called a model-based agent.

- #48: Goal-based agents Knowing about the current state of the environment is not always enough to decide what to do. For example, at a road junction, the taxi can turn left, turn right, or go straight on. The correct decision depends on where the taxi is trying to get to. In other words, as well GOAL as a current state description, the agent needs some sort of goal information that describes situations that are desirable—for example, being at the passenger’s destination. The agent program can combine this with information about the results of possible actions (the same information as was used to update internal state in the reflex agent) in order to choose actions that achieve the goal. Figure 2.13 shows the goal-based agent’s structure. Sometimes goal-based action selection is straightforward, when goal satisfaction results immediately from a single action. Sometimes it will be more tricky, when the agent has to consider long sequences of twists and turns to find a way to achieve the goal. Search (Chapters 3 to 6) and planning (Chapters 11 and 12) are the subfields of AI devoted to finding action sequences that achieve the agent’s goals. Notice that decision making of this kind is fundamentally different from the condition– action rules described earlier, in that it involves consideration of the future—both “What will happen if I do such-and-such?” and “Will that make me happy?” In the reflex agent designs, this information is not explicitly represented, because the built-in rules map directly from percepts to actions. The reflex agent brakes when it sees brake lights. A goal-based agent, in principle, could reason that if the car in front has its brake lights on, it will slow down. Given the way the world usually evolves, the only action that will achieve the goal of not hitting other cars is to brake. Although the goal-based agent appears less efficient, it is more flexible because the knowledge that supports its decisions is represented explicitly and can be modified. If it starts to rain, the agent can update its knowledge of how effectively its brakes will operate; this will automatically cause all of the relevant behaviors to be altered to suit the new conditions. For the reflex agent, on the other hand, we would have to rewrite many condition–action rules. The goal-based agent’s behavior can easily be changed to go to a different location. The reflex agent’s rules for when to turn and when to go straight will work only for a single destination; they must all be replaced to go somewhere new

- #50: Utility-based agents Goals alone are not really enough to generate high-quality behavior in most environments. For example, there are many action sequences that will get the taxi to its destination (thereby achieving the goal) but some are quicker, safer, more reliable, or cheaper than others. Goals just provide a crude binary distinction between “happy” and “unhappy” states, whereas a more general performance measure should allow a comparison of different world states according to exactly how happy they would make the agent if they could be achieved. Because “happy” does not sound very scientific, the customary terminology is to say that if one world state is preferred to another, then it has higher utility for the agent.8 UTILITY UTILITY FUNCTION A utility function maps a state (or a sequence of states) onto a real number, which describes the associated degree of happiness. A complete specification of the utility function allows rational decisions in two kinds of cases where goals are inadequate. First, when there are conflicting goals, only some of which can be achieved (for example, speed and safety), the utility function specifies the appropriate tradeoff. Second, when there are several goals that the agent can aim for, none of which can be achieved with certainty, utility provides a way in which the likelihood of success can be weighed up against the importance of the goals. In Chapter 16, we will show that any rational agent must behave as if it possesses a utility function whose expected value it tries to maximize. An agent that possesses an explicit utility function therefore can make rational decisions, and it can do so via a general-purpose algorithm that does not depend on the specific utility function being maximized. In this way, the “global” definition of rationality—designating as rational those agent functions that have the highest performance—is turned into a “local” constraint on rational-agent designs that can be expressed in a simple program. The utility-based agent structure appears in Figure 2.14. Utility-based agent programs appear in Part V, where we design decision making agents that must handle the uncertainty inherent in partially observable environments.

- #51: Learning agents We have described agent programs with various methods for selecting actions. We have not, so far, explained how the agent programs come into being. In his famous early paper, Turing (1950) considers the idea of actually programming his intelligent machines by hand. He estimates how much work this might take and concludes “Some more expeditious method seems desirable.” The method he proposes is to build learning machines and then to teach them. In many areas of AI, this is now the preferred method for creating state-of-the-art systems. Learning has another advantage, as we noted earlier: it allows the agent to operate in initially unknown environments and to become more competent than its initial knowledge alone might allow. In this section, we briefly introduce the main ideas of learning agents. In almost every chapter of the book, we will comment on opportunities and methods for learning in particular kinds of agents. Part VI goes into much more depth on the various learning algorithms themselves. A learning agent can be divided into four conceptual components, as shown in FigLEARNING ELEMENT ure 2.15. The most important distinction is between the learning element, which is responsible for making improvements, and the performance element, which is responsible for PERFORMANCE ELEMENT selecting external actions. The performance element is what we have previously considered to be the entire agent: it takes in percepts and decides on actions. The learning element uses CRITIC feedback from the critic on how the agent is doing and determines how the performance element should be modified to do better in the future. The design of the learning element depends very much on the design of the performance element. When trying to design an agent that learns a certain capability, the first question is not “How am I going to get it to learn this?” but “What kind of performance element will my agent need to do this once it has learned how?” Given an agent design, learning mechanisms can be constructed to improve every part of the agent. The critic tells the learning element how well the agent is doing with respect to a fixed performance standard. The critic is necessary because the percepts themselves provide no indication of the agent’s success. For example, a chess program could receive a percept indicating that it has checkmated its opponent, but it needs a performance standard to know that this is a good thing; the percept itself does not say so. It is important that the performance standard be fixed. Conceptually, one should think of it as being outside the agent altogether, because the agent must not modify it to fit its own behavior. The last component of the learning agent is the problem generator. It is responsible PROBLEM GENERATOR for suggesting actions that will lead to new and informative experiences. The point is that if the performance element had its way, it would keep doing the actions that are best, given what it knows. But if the agent is willing to explore a little, and do some perhaps suboptimal actions in the short run, it might discover much better actions for the long run. The problem

![Example:

Vacuum-cleaner World

• Percepts:

Location and status,

e.g., [A, Dirty]

• Actions:

Left, Right, Suck,

NoOp

Implemented agent program:

function Vacuum-Agent([location, status])

returns an action 𝑎

if status = Dirty then return Suck else

if location = A then return Right else

if location = B then return Left

Agent function: 𝑓 ∶ 𝑃∗ →

𝐴

Percept Sequence Action

Right

Suck

Left

[A, Clean]

[A, Dirty]

…

[A, Clean], [B, Clean]

…

Most recent

Percept 𝑝

[A, Clean], [B, Clean], [A, Dirty] Suck

…

Problem: This table can become infinitively large!](https://image.slidesharecdn.com/ai02agentsstrut-250106084509-cc37ef39/85/A-modern-approach-to-AI-AI_02_agents_Strut-pptx-8-320.jpg)

![Example:

Vacuum-cleaner World

• Percepts:

Location and status,

e.g., [A, Dirty]

• Actions:

Left, Right, Suck,

NoOp

Implemented agent program:

function Vacuum-Agent([location, status])

returns an action

if status = Dirty then return Suck else

if location = A then return Right else

if location = B then return Left

Agent function:

Percept Sequence Action

Right

Suck

Left

[A, Clean]

[A, Dirty]

…

[A, Clean], [B, Clean]

…

What could be a performance measure?

Is this agent program rational?](https://image.slidesharecdn.com/ai02agentsstrut-250106084509-cc37ef39/85/A-modern-approach-to-AI-AI_02_agents_Strut-pptx-17-320.jpg)

![State Representation

States help to keep track of the environment and the agent in the environment. This is

often also called the system state. The representation can be

• Atomic: Just a label for a black box. E.g., A, B

• Factored: A set of attribute values called fluents.

E.g., [location = left, status = clean, temperature = 75 deg. F]

We often construct atomic labels from factored information. E.g.: If the agent’s state is

the coordinate x = 7 and y = 3, then the atomic state label could be the string “(7, 3)”.

With the atomic representation, we can only compare if two labels are the same. With

the factored state representation, we can reason more and calculate the distance

between states!

The set of all possible states is called the state space 𝑆. This set is typically very large!

Action causes

transition

Variables describing the

system state are called

“fluents”](https://image.slidesharecdn.com/ai02agentsstrut-250106084509-cc37ef39/85/A-modern-approach-to-AI-AI_02_agents_Strut-pptx-44-320.jpg)