
A Modern Approach to Performance Monitoring

- 1. A Modern Approach to Performance Monitoring

- 2. Cliff Crocker @cliffcrocker SOASTA VP Product, mPulse

- 3. 1. How fast am I? 2. How fast should I be? 3. How do I get there?

- 5. Synthetic 101: Synthetic monitoring (for purposes of this discussion) refers to the use of automated agents (bots) to measure your website from different physical locations. • A set ‘path’ or URL is defined • Tests are run either ad hoc or on a schedule, and data is collected

- 6. RUM 101: Real User Measurement (RUM) is a technology for collecting performance metrics directly from the browser of an end user. • Involves instrumentation of your website via JavaScript • Measurements are fired across the network to a collection point through a small request object (beacon)
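The beacon idea on this slide can be sketched as follows. This is a minimal illustration, not how any particular RUM library does it: the collector URL and the function names `buildBeaconUrl` and `fireBeacon` are assumptions made for the example, and real libraries typically add batching, sampling and payload size limits.

```javascript
// Hypothetical collector endpoint (an assumption for this sketch).
const COLLECTOR_URL = 'https://collector.example.com/beacon';

// Serialize a flat object of metrics into a beacon URL (query string).
function buildBeaconUrl(baseUrl, metrics) {
  const params = new URLSearchParams();
  for (const [name, value] of Object.entries(metrics)) {
    params.append(name, String(value));
  }
  return baseUrl + '?' + params.toString();
}

// In a browser, requesting an image is a classic way to fire the beacon.
// Outside a browser (no Image constructor) this function does nothing.
function fireBeacon(metrics) {
  if (typeof Image !== 'undefined') {
    new Image().src = buildBeaconUrl(COLLECTOR_URL, metrics);
  }
}
```

Keeping the URL construction separate from the network call makes the payload easy to inspect before anything is sent.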

- 7. RUM: Cast a wide net • Identify key areas of concern • Understand real user impact • Map performance to human behavior & $$ Synthetic: Diagnostic tool • Identify issues in a ‘lab’/remove variables • Reproduce a problem found with RUM Image credits: http://www.flickr.com/photos/84338444@N00/with/3780079044/ http://www.flickr.com/photos/ezioman/

- 8. The Early Days of RUM • Round-trip time • Start/stop timers via JavaScript • Early contributors: • Steve Souders/Episodes • Philip Tellis/Boomerang.js • Both widely in use today
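The start/stop timer technique mentioned on this slide can be illustrated with a small sketch. It is written in the spirit of those early libraries but is not the Episodes or Boomerang API; `createTimers` and its methods are hypothetical names, and the clock is injectable only so the example can be exercised deterministically.

```javascript
// Minimal start/stop timer registry (hypothetical helper, not a library API).
function createTimers(now = Date.now) {
  const started = {};
  const measured = {};
  return {
    // Record the start time for a named timer.
    start(name) {
      started[name] = now();
    },
    // Record and return the elapsed milliseconds for a started timer.
    stop(name) {
      if (name in started) {
        measured[name] = now() - started[name];
      }
      return measured[name];
    },
    // Return a copy of all completed measurements.
    results() {
      return { ...measured };
    },
  };
}
```

A page would call `start('hero_image')` before requesting an asset and `stop('hero_image')` in its load handler, then send `results()` on a beacon.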

- 10. Browser Support for Navigation Timing

- 11. © 2014 SOASTA CONFIDENTIAL - All rights reserved. Navigation Timing: DNS (Domain Lookup Time) `function getPerfStats() { var timing = window.performance.timing; return { dns: timing.domainLookupEnd - timing.domainLookupStart }; }`

- 12. Navigation Timing: TCP (Connection Time to Server) `function getPerfStats() { var timing = window.performance.timing; return { connect: timing.connectEnd - timing.connectStart }; }`

- 13. Navigation Timing: TTFB (Time to First Byte) `function getPerfStats() { var timing = window.performance.timing; return { ttfb: timing.responseStart - timing.connectEnd }; }`

- 14. Navigation Timing: Base Page `function getPerfStats() { var timing = window.performance.timing; return { basePage: timing.responseEnd - timing.responseStart }; }`

- 15. Navigation Timing: Front-end Time `function getPerfStats() { var timing = window.performance.timing; return { frontEnd: timing.loadEventStart - timing.responseEnd }; }`

- 16. Navigation Timing: Page Load Time `function getPerfStats() { var timing = window.performance.timing; return { load: timing.loadEventStart - timing.navigationStart }; }`
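The six per-slide snippets can be combined into one function. The sketch below uses the same subtractions as the slides; the only change is that the timing object is passed in as a parameter (an assumption made so the function can also run outside a browser) rather than read from `window.performance.timing` directly.

```javascript
// Combine the per-slide Navigation Timing calculations into one result.
// `timing` is expected to look like window.performance.timing
// (millisecond timestamps).
function getPerfStats(timing) {
  return {
    dns: timing.domainLookupEnd - timing.domainLookupStart,
    connect: timing.connectEnd - timing.connectStart,
    ttfb: timing.responseStart - timing.connectEnd,
    basePage: timing.responseEnd - timing.responseStart,
    frontEnd: timing.loadEventStart - timing.responseEnd,
    load: timing.loadEventStart - timing.navigationStart,
  };
}

// Browser usage, once the load event has started:
//   var stats = getPerfStats(window.performance.timing);
```

Calling it before the load event would give meaningless `frontEnd` and `load` values, because `loadEventStart` is still 0 at that point.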

- 17. Measuring Assets • Strength of synthetic • Full visibility into asset performance • Images • JavaScript • CSS • HTML • A lot of which is served by third-parties • CDN!

- 18. Object Level RUM

- 19. Browser Support for Resource Timing

- 20. CORS: Cross-Origin Resource Sharing `Timing-Allow-Origin = "Timing-Allow-Origin" ":" origin-list-or-null | "*"` Start/End time only unless the Timing-Allow-Origin HTTP response header is defined
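One practical consequence of this restriction: for a cross-origin resource served without `Timing-Allow-Origin`, the browser reports the detailed timestamps as zero, so only the overall duration is usable. The sketch below guards against that. The helper names `hasDetailedTiming` and `summarizeEntry` are hypothetical, and treating `requestStart > 0` as the signal is a heuristic assumed for this example.

```javascript
// Heuristic: restricted cross-origin entries report requestStart as 0.
function hasDetailedTiming(entry) {
  return entry.requestStart > 0;
}

// Always report the overall load time; add the component breakdown
// only when the browser exposed it.
function summarizeEntry(entry) {
  const summary = { name: entry.name, loadTime: entry.duration };
  if (hasDetailedTiming(entry)) {
    summary.dns = entry.domainLookupEnd - entry.domainLookupStart;
    summary.tcp = entry.connectEnd - entry.connectStart;
    summary.waiting = entry.responseStart - entry.requestStart;
    summary.fetch = entry.responseEnd - entry.responseStart;
  }
  return summary;
}
```

Without the guard, the subtractions would yield misleading values for restricted entries rather than an obvious gap.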

- 21. Resource Timing `var rUrl = 'http://www.akamai.com/images/img/cliff-crocker-dualtone-150x150.png'; var me = performance.getEntriesByName(rUrl)[0]; var timings = { loadTime: me.duration, dns: me.domainLookupEnd - me.domainLookupStart, tcp: me.connectEnd - me.connectStart, waiting: me.responseStart - me.requestStart, fetch: me.responseEnd - me.responseStart };`

- 22. Resource Timing • Slowest resources • Time to first image • Response time by domain • Time a group of assets • Response time by initiator type (element type) • Cache-hit ratio for resources For examples see: http://www.slideshare.net/bluesmoon/beyond-page-level-metrics
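Two of the ideas on this slide, slowest resources and response time by domain, can be sketched as plain functions over resource timing entries. The function names are hypothetical, and the input is assumed to be an array shaped like the result of `performance.getEntriesByType('resource')`, where `name` is the resource URL and `duration` is in milliseconds.

```javascript
// Return the `count` entries with the longest duration, slowest first.
function slowestResources(entries, count) {
  return [...entries].sort((a, b) => b.duration - a.duration).slice(0, count);
}

// Average duration per hostname, keyed by hostname.
function meanDurationByDomain(entries) {
  const totals = {};
  for (const entry of entries) {
    const host = new URL(entry.name).hostname;
    if (!totals[host]) {
      totals[host] = { sum: 0, count: 0 };
    }
    totals[host].sum += entry.duration;
    totals[host].count += 1;
  }
  const means = {};
  for (const host of Object.keys(totals)) {
    means[host] = totals[host].sum / totals[host].count;
  }
  return means;
}

// Browser usage:
//   var entries = performance.getEntriesByType('resource');
//   slowestResources(entries, 5);
```

The same grouping approach extends to initiator type by keying on `entry.initiatorType` instead of the hostname.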

- 23. Resource Timing • PerfMap - https://github.com/zeman/perfmap • Mark Zeman • Waterfall.js - https://github.com/andydavies/waterfall • Andy Davies

- 24. 1. How fast am I? 2. How fast should I be? 3. How do I get there?

- 25. Picking a Number • Industry benchmarks? • Apdex? • Analyst report? • Case studies?

- 26. “Synthetic monitoring shows you how you relate to your competitors, RUM shows you how you relate to your customers.” – Buddy Brewer

- 27. Benchmarking

- 29. Benchmarking • Page construction: requests, images, size • Other important metrics: Speed Index, Start Render, PageSpeed/YSlow scoring

- 30. Performance is a Business Problem

- 31. Yahoo! - 2008 Increase of 400ms causes 5-9% increase in user abandonment http://www.slideshare.net/stubbornella/designing-fast-websites-presentation

- 32. Shopzilla - 2009 A reduction in Page Load time of 5s increased site conversion 7-12%! http://assets.en.oreilly.com/1/event/29/Shopzilla%27s%20Site%20Redo%20- %20You%20Get%20What%20You%20Measure%20Presentation.ppt

- 33. Walmart - 2012 http://minus.com/msM8y8nyh#1e SF WebPerf – 2012 Up to 2% conversion drop for every additional second

- 34. So What?

- 35. SIMULATION

- 38. How Fast Should You Be? • Use synthetic measurement for benchmarking your competitors • Understand how fast your site needs to be to reach business goals/objectives with RUM • You must look at your own data

- 39. 1. How fast am I? 2. How fast should I be? 3. How do I get there?

- 40. Real users are not normal

- 42. Page Load Times: Median 2.76s • 95th percentile (p95) 10.45s • 98th percentile (p98) 17.26s

- 43. Page Load Times

  | Platform | Median | 95th Percentile | 98th Percentile |
  | --- | --- | --- | --- |
  | Mobile | 3.62s | 12.53s | 20.04s |
  | Desktop | 2.44s | 9.31s | 15.86s |

- 44. Page Load Times

  | OS | Median | 95th Percentile | 98th Percentile |
  | --- | --- | --- | --- |
  | Windows 7 | 2.41s | 9.29s | 15.89s |
  | Mac OS X/10 | 2.30s | 8.11s | 13.45s |
  | iOS7 | 3.27s | 10.64s | 15.79s |
  | Android 4 | 4.06s | 14.30s | 27.93s |
  | iOS8 | 3.53s | 11.54s | 19.72s |
  | Windows 8 | 2.67s | 10.75s | 18.74s |
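Median and percentile figures like the ones above can be computed from raw page load samples. The sketch below uses the nearest-rank method, which is one of several common percentile definitions and is an assumption here; the slides do not say which method produced their numbers.

```javascript
// Nearest-rank percentile: the smallest sample such that at least
// p percent of all samples are less than or equal to it.
function percentile(samples, p) {
  if (samples.length === 0) {
    return undefined;
  }
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  // Clamp so that p = 0 maps to the smallest sample.
  return sorted[Math.max(rank, 1) - 1];
}

// Summarize load times the way the slides do: median, p95, p98.
function summarizeLoadTimes(samples) {
  return {
    median: percentile(samples, 50),
    p95: percentile(samples, 95),
    p98: percentile(samples, 98),
  };
}
```

Reporting the high percentiles alongside the median is what exposes the long tail; an average alone would hide it.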

- 45. Other Factors • Geography • User Agent • Connection Type • Carrier/ISP • Device Type

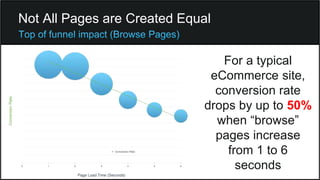

- 46. Not All Pages are Created Equal For a typical eCommerce site, conversion rate drops by up to 50% when “browse” pages increase from 1 to 6 seconds

- 47. Not All Pages are Created Equal However, there is much less impact to conversion when “checkout” pages degrade

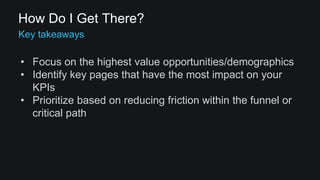

- 48. How Do I Get There? • Focus on the highest value opportunities/demographics • Identify key pages that have the most impact on your KPIs • Prioritize based on reducing friction within the funnel or critical path

- 49. Thank You!

- 50. Images 1. Modern (Title Slide): https://www.flickr.com/photos/looking4poetry/3477854720/in/photolist-6ijV3q-9t3sjb-cj8VWE-MNjPA- 4yBqug-2rk5he-4nZVzJ-4yE45J-9t3Fvj-2rptr5-69ymdr-6XVTH-6QZ4dg-9t3HN5-8geUkB-6oEXs5-eH9zS-H2XTt-cKdJvb- 7GNKWx-cKdKZd-5Rw3qt-jfT5Dx-Jfiuy-bTop6R-2m8kAB-Jfiuu-7E8eMf-9jqD6-9hYAvd-Jfius-gsqr7U-7KiNAZ-8kzG9V-euXdb- 44qJNN-47jBuY-nsxZrZ-7cGjQL-4cfHKq-cGzbSC-aQtWPR-8y3sR3-6okjAW-5A7BSC-6aRsvh-eSMHkX-kxvdyK-9t3udu-c8EkYu 2. Man vs. Machine: https://www.flickr.com/photos/eogez/3289851965/in/photolist-61HmnB-9nkQu3-dfinF1-4GjK24-4GjL6Z- 4GoV6C-dibVCU-4GoTXo-4GjF3k-4GjH7Z-4GoR6h-ek6eT9-ek6eUU-4fADUx-4fEDV9-65JA6W-65Jsxu-65JDnj-4fAE12- 65JDTh-65JBZu-65Ek9M-65JtDu-65JuiN-65JzFS-65EfiH-65Jv63-65JxX7-65Enyc-65Eh4c-65Jxjm-65JwmU-65EhUP-65JCML- 65EjxP-65JBQf-65Ee4Z-65JD4b-65JBF1-65Ec1r-65EkPB-65Jy4o-65Eg96-65JAjb-65Emfe-65Efoz-65JBam-65Eniv-65JCrU- 65Eed2 3. https://www.flickr.com/photos/bradybd/2818154005/ 4. https://www.flickr.com/photos/perspective/149321089/ 5. Dartboard: http://en.wikipedia.org/wiki/Darts#mediaviewer/File:Darts_in_a_dartboard.jpg References: 1. http://calendar.perfplanet.com/2011/a-practical-guide-to-the-navigation-timing-api/ 2. http://www.slideshare.net/bluesmoon/beyond-page-level-metrics

Editor's Notes

- #3: Who am I? I’m Cliff Crocker, VP of Product at SOASTA, where I focus on a Real User Measurement product called mPulse. I have been working in performance for the last 10+ years: first at a tools vendor, focused on synthetic monitoring and front-end performance consulting, then in the private sector, building the Performance and Reliability team at @walmartlabs. After getting a taste for RUM, I sought out SOASTA to build a real user monitoring product called mPulse. I work with a lot of companies, helping them transition to a new approach to performance monitoring. I’ve found that it’s helpful to break the conversation down into three parts.

- #4: How fast am I? - What metrics should I look at? - What do these metrics mean? - What is a good default metric? - What additional components should I measure? - What is the best reflection of the user experience? How fast should I be? - How fast is fast enough? - How do I compare to others in my industry? - How fast do I need to be to achieve my business goals? How do I get there? - Where do I focus to get the most value? - Which demographics have the most impact on my business? - Where should I start?

- #5: For the purposes of simplifying this talk, when I discuss performance monitoring I’m looking specifically at measuring the front-end performance of an application. Today, this is done two ways: Synthetically: A simulation of the user experience Real User: Measurement of the user through their browser or device. There has been debate around which is “better” – I’m of the opinion you need both.

- #6: A few quick definitions for this talk: When I talk about synthetic monitoring, I’m speaking specifically about “Real Browser” technology. The use of agents to ‘puppet’ a real browser while executing a defined set of actions and capture/record performance timing data for those targets.

- #7: A very quick primer: for those of you who need a definition, this is a description of what RUM is. RUM is also known as Real User Monitoring, Real User Measurement, or Real User Metrics. JavaScript is executed in the browser to collect performance timing information about your website. This data is then fired back to a collection point for processing in the form of a beacon (a request object used for transferring the payload).

- #8: This is how I tend to think about RUM vs. Synthetic. Clearly key to have both.

- #9: The origins of RUM: around 2008, Steve Souders open-sourced Episodes; in 2010, Yahoo! open-sourced Boomerang. Boomerang is the most widely used and supported library for RUM today. It is supported by Philip Tellis (now of SOASTA), is used on thousands of sites worldwide, and is also the preferred library for tool vendors (such as SOASTA). Prior to the IE9 release in 2010, only round-trip timing through the use of JavaScript was supported. This was a great indication of perceived user experience, but lacked key metrics like network timing, Time to First Byte, DOM Complete, etc.

- #10: On the same day that boomerang was open-sourced, IE9 Beta announced support for a new browser API called Navigation Timing. This recommendation was introduced by the newly formed W3C Performance Working Group. This now gave us the ability to get precise measurements for a number of performance timers, directly from the browser across the entire user population.

- #11: Other browser vendors quickly followed suit in providing access to the Navigation Timing API. With the exception of one… Safari (desktop and iOS) was silent for the last 4 years. This caused serious problems for the performance community. The issue was not so much desktop Safari, since we had good representation from other browsers. The glaring hole has been mobile web sites, due to iOS market share. On mobile, Navigation Timing metrics have only been available for Android 4… until now! As of the release of iOS8 a few weeks ago and Safari 8 (any day now), Navigation Timing is supported by all major browser vendors!

- #12: The following are a few examples of what is now possible with RUM through use of the performance.timing object. DNS: domain lookup time.

- #13: TCP: The time it takes to establish a connection with the server

- #14: Time to First Byte – sometimes referred to as server-time or backend time or time to first packet.

- #15: Base Page: The time it takes to serve the base html document

- #16: Front-End Time: Time FROM last byte to fully loaded

- #17: Page Load time: Time from the start of navigation to the onload event (start or end, take your pick ;))

- #18: OK… Thanks for the history lesson, but I already knew all of that from 2010! One of the major advantages that synthetic monitoring has had is the ability to capture details at the object level. Your page is composed of many parts. Assets include images, JavaScript, CSS, etc. Third-party contributors can have a significant impact on performance. CDNs (like Akamai) are largely responsible for this content.

- #19: Enter resource timing. Second(?) recommendation introduced by the newly formed W3C Performance Working Group. Visibility (with component breakdown) into performance of assets

- #20: Now supported by all major browser vendors…..except one….4 more years?

- #21: One important note: only start/end times are available for assets served from a different origin (including third parties, of course), unless that host defines the Timing-Allow-Origin HTTP header. Encourage your partners to do this!

- #22: Here is an example of how you would measure a single asset/resource from the browser using resource timing. For examples see: http://www.slideshare.net/bluesmoon/beyond-page-level-metrics

- #23: Here are some of the other examples discussed at Velocity and WebPerf Days in NY a few weeks ago: slowest resources for a page; time to first image; time to product image for retailers (heavily used by our customers); response time by domain; timing a group of assets (for timing Twitter, Facebook, etc.); response time by asset type; cache-hit ratio for resources. The main takeaway is that with Navigation Timing and Resource Timing together, there is a lot that RUM provides you to answer the question of “How fast am I?”. Demo of PerfMap.

- #24: DEMO

- #25: Now that you have what you need to understand how fast you are, the question comes of ‘how fast should you be?’.

- #26: (Poll audience) How many of you are setting front-end performance SLAs or goals today? I’ve seen very few organizations that are doing this in practice, at least officially. Those that are often look at these resources: Industry benchmarks provided by synthetic vendors (great insight). Apdex (http://en.wikipedia.org/wiki/Apdex): an open standard, created by a collaboration of companies, for reporting and comparing application performance in a consistent way. Analyst reports: Forrester and Akamai (great paper). Other case studies from Velocity Conference, THIS conference or tools vendors.
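For readers unfamiliar with Apdex, the score is computed from a target threshold T: samples at or below T count as satisfied, samples above T but at or below 4T count as tolerating, and the score is (satisfied + tolerating / 2) / total. A minimal sketch follows; the function name and the sample values in the usage comment are illustrative assumptions, not data from the talk.

```javascript
// Apdex score for an array of response times and a target threshold T
// (same unit for both). Returns a value between 0 and 1.
function apdex(samples, threshold) {
  if (samples.length === 0) {
    return undefined;
  }
  let satisfied = 0;
  let tolerating = 0;
  for (const value of samples) {
    if (value <= threshold) {
      satisfied += 1;
    } else if (value <= 4 * threshold) {
      tolerating += 1;
    }
    // Anything slower than 4T counts as frustrated and adds nothing.
  }
  return (satisfied + tolerating / 2) / samples.length;
}

// Example: apdex([1, 2, 3, 5, 20], 2) counts 2 satisfied, 2 tolerating
// and 1 frustrated sample.
```

The choice of T is the hard part, which is the point of this section of the talk: the threshold should come from your own data and goals.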

- #27: Starting with competitive benchmarking – here’s a quote from a friend and co-worker Buddy Brewer

- #28: Synthetic offerings like Keynote, Gomez and SpeedCurve have specialized competitive indices that show you just how you relate to your competition. This is a great use of synthetic measurement. Until we can find a way to hack into all of our competitors’ websites to collect RUM data, it’s really the only viable option.

- #29: That said, we collect and report high-level statistics based on RUM that can be found at http://soasta.com/mpulseux

- #30: One thing to take note of, however, is that it isn’t just about comparing page load time. Focus instead on other metrics like HTTP request count, image count and size, and JavaScript count. Other great metrics you can gather are: Speed Index, introduced in WebPagetest, which allows you to compare the visual completeness of a page; Start Render, also in WPT; and PageSpeed/YSlow scores. Don’t just make it about speed; try to understand what your competitors are doing differently than you are. Also, note the free options for benchmarking such as WebPagetest and HTTPArchive, which is a great source of data for 1M+ websites measured globally.

- #31: The key concept I find to understanding how fast you should be is how it relates to your business. Performance most certainly is a business problem.

- #32: Here are some case studies that demonstrate the impact of performance on human behavior. In 2008, Yahoo! shared findings of a substantial increase in user abandonment when page load time increased by 400ms.

- #33: The following year, Shopzilla shared how a 5s reduction in load times (synthetic, I believe) increased site conversion by 7-12%.

- #34: In 2012, I along with my colleagues at @Walmartlabs published a study that showed substantial drops in conversion when item pages slowed from 1 to 6 seconds.

- #35: So what? These are not your businesses. These are not your stories. You have to look at your own data.

- #36: In the work that I’m lucky enough to do on a daily basis, we help organizations determine ‘what if’? What if they improved the page load time by 1sec? What would that do for them? How fast do they need to be to achieve business targets? This type of discovery and experimentation is what is needed to determine: HOW FAST DO YOUR CUSTOMERS NEED YOU TO BE?

- #37: In this example, I’ve shown the impact of performance (page load time) on the bounce rate for two different groups of sites. Site A: a collection of user experiences for specialty goods eCommerce sites (luxury goods). Site B: a collection of user experiences for general merchandise (GM) eCommerce sites (commodity goods). Notice the patience levels of the users after 6s for each site. Users of a specialty goods site, who have fewer options, tend to be more patient. Meanwhile, users of a GM site, who have other options, continue to abandon at the same rate.

- #38: The relationship between speed and behavior is even more noticeable when we look at conversion rates between the two sites. Notice how quickly users will decide to abandon a purchase for Site A, versus B.

- #40: Finally, step three. “How do you get there?” Obviously, this is where our community has spent most of their time discussing technology, best practices and techniques to get you there. This is not quite what we dive into for this discussion. How we get there when discussing RUM is more about where we start, where we focus our efforts.

- #41: First and foremost, we need to understand that Real Users are NOT Normal.

- #42: Here is an example of a normal distribution. Similar to what you might see in a controlled environment where there is little variation in data.

- #43: When looking at RUM data, we see the distribution is log normal. The ‘long tail’ is due to the multitude of factors, both inside and outside of our control, that introduce variability into the data set. This data set represents a number of different user experiences across a multitude of sites over the month of September.

- #44: When segmenting into two groups (mobile vs. desktop) the differences aren’t as profound as you might think...

- #45: However, when looking at different OS versions, a different story starts to take shape

- #46: The great thing about RUM is that you can take a multi-dimensional approach and recognize hot-spots and key areas you should focus on for your business.

- #47: Another important aspect is to realize that all pages are not created equal. In this study of retail, we found that pages at the top of the funnel (browse pages) such as Home, Search and Product were extremely sensitive to user dissatisfaction. As these pages slowed from 1 to 6s, we saw a 50% decrease in the conversion rate!

- #48: However, when we looked at pages deeper in the funnel like Login, Billing (checkout pages) – users were more patient and committed to the transaction.
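An analysis like the one described in these two notes, conversion rate as a function of page load time, can be sketched by bucketing sessions into whole-second load time bands. The session shape used here (`loadTime` in seconds, `converted` as a boolean) and the function name are assumptions made for illustration; this is not the method or the data behind the study.

```javascript
// Group sessions into 1-second load time buckets and compute the
// conversion rate for each bucket.
function conversionByLoadTime(sessions) {
  const buckets = {};
  for (const session of sessions) {
    const bucket = Math.floor(session.loadTime);
    if (!buckets[bucket]) {
      buckets[bucket] = { sessions: 0, conversions: 0, rate: 0 };
    }
    buckets[bucket].sessions += 1;
    if (session.converted) {
      buckets[bucket].conversions += 1;
    }
  }
  for (const key of Object.keys(buckets)) {
    buckets[key].rate = buckets[key].conversions / buckets[key].sessions;
  }
  return buckets;
}
```

Running the same function separately per page group (for example browse pages versus checkout pages) is one way to compare how sensitive each group is to slowdowns.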
