A survey of software testing

- 1. A Survey of Software Testing. Tao He, Software Engineering Laboratory, Department of Computer Science, Sun Yat-sen University. October 11, 2010, A203. 1/50

- 2. Outline: Background, Framework, Classification, Research Directions

- 3. Background

- 4. What is Software Testing? Software testing is:
  - The process of operating a system or component under specified conditions, observing or recording the results, and making an evaluation of some aspect of the system or component.
  - The process of analyzing a software item to detect the differences between existing and required conditions (that is, bugs) and to evaluate the features of the software items.
  - Source: IEEE. IEEE Standard Glossary of Software Engineering Terminology, IEEE Std 610.12-1990 (Revision and redesignation of IEEE Std 792-1983).

- 5. What is not software testing?
  - Formal verification and analysis
  - Code review
  - Debugging: testing is NOT debugging, and debugging is NOT testing

- 6. Basic Terminology
  - Mistake: a human action that produces an incorrect result.
  - Fault: an incorrect step, process, or data definition in a computer program. In common usage, the terms "error" and "bug" are used to express this meaning.
  - Error: the difference between a computed, observed, or measured value or condition and the true, specified, or theoretically correct value or condition.
  - Failure: the inability of a system or component to fulfill its required functions within specified performance requirements.
  - Source: IEEE. IEEE Standard Glossary of Software Engineering Terminology (IEEE Std 610.12-1990). Technical Report, IEEE, 1990.

- 7. A few more definitions
  - Test Case: set of inputs, execution conditions, and expected results developed for a particular objective.
  - Test Sequence: specific order of related actions or steps that comprise a test procedure or test run.
  - Test Suite: collection of test cases, typically related by a testing goal or an implementation dependency.
  - Test Driver: class or utility program that applies test cases.
  - Test Harness: system of test drivers and other tools that support test execution.
  - Test Strategy: algorithm or heuristic to create test cases from a representation, an implementation, or a test model.
  - Oracle: means to check that the output of a program is correct for the given input.
  - Stub: partial, temporary implementation of a component (usually required for a component to operate).
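The terms above can be made concrete with a minimal sketch using Python's standard `unittest` module. The component `order_total` and the stub `price_lookup_stub` are hypothetical names invented for illustration; they are not from the slides.

```python
import unittest


def price_lookup_stub(item):
    """Stub: partial, temporary stand-in for a pricing component (hypothetical)."""
    return {"apple": 3, "pear": 5}.get(item, 0)


def order_total(items, lookup=price_lookup_stub):
    """Component under test (hypothetical): sums the prices of the given items."""
    return sum(lookup(item) for item in items)


class OrderTotalTests(unittest.TestCase):
    """Each method is one test case: inputs, conditions, and an expected result."""

    def test_empty_order(self):
        # assertEqual plays the role of the oracle: it checks the output.
        self.assertEqual(order_total([]), 0)

    def test_two_items(self):
        self.assertEqual(order_total(["apple", "pear"]), 8)


def run_suite():
    """Test driver: collects the test cases into a test suite and applies them."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(OrderTotalTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    result = run_suite()
    print("pass" if result.wasSuccessful() else "no pass")
```

Here `unittest` itself, together with the runner, acts as a small test harness; a larger harness would add tools such as coverage measurement and reporting.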

- 8. Effectiveness vs. Efficiency
  - Test Effectiveness: relative ability of a testing strategy to find bugs in the software.
  - Test Efficiency: relative cost of finding a bug in the software under test.

- 9. What is a successful test?
  - Pass: status of a completed test case whose actual results are the same as the expected results.
  - No Pass: status of a completed software test case whose actual results differ from the expected ones. This is the "successful" test (i.e., we want this to happen).

- 10. What is a good test suite?
  - Black-box testing: features in the requirements
  - White-box testing: statement coverage, branch coverage, expression coverage, path coverage, data-flow coverage
  - Data-flow testing: c-use/DU pairs, p-use, and all-uses
  - Random testing
  - Fuzziness
  - Complexity
  - Distance between inputs
  - Isomorphism
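The difference between statement coverage and branch coverage can be shown with a small sketch. The function `absolute` and the hand-written instrumentation are assumptions made for illustration; a real project would use a coverage tool instead. The single input `-3` executes every statement of `absolute`, yet it exercises only one of the two outcomes of the decision `x < 0`.

```python
def absolute(x, outcomes):
    """Function under test (hypothetical), instrumented by hand."""
    negative = x < 0
    outcomes.add(negative)  # record which outcome of the decision was taken
    if negative:
        x = -x
    return x


def branch_coverage(inputs):
    """Fraction of the two decision outcomes (True, False) exercised by the inputs."""
    outcomes = set()
    for x in inputs:
        absolute(x, outcomes)
    return len(outcomes) / 2


print(branch_coverage([-3]))     # 0.5: all statements run, but only one branch outcome
print(branch_coverage([-3, 4]))  # 1.0: both branch outcomes are exercised
```

A suite that is adequate for statement coverage is therefore not necessarily adequate for branch coverage.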

- 11. What are the goals of testing?
  - Validation testing: to demonstrate to the developer and the system customer that the software meets its requirements. A successful test shows that the system operates as intended.
  - Defect testing: to discover faults or defects in the software where its behavior is incorrect or not in conformance with its specification. A successful test is one that makes the system perform incorrectly and so exposes a defect in the system.

- 12. What does testing show?
  - Errors
  - Requirements conformance
  - Performance
  - An indication of quality
  - BUT: testing can only prove the presence of bugs, never their absence.

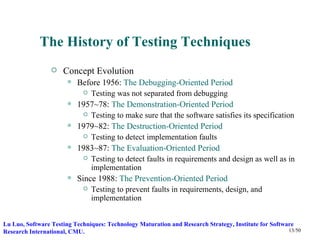

- 13. The History of Testing Techniques: Concept Evolution
  - Before 1956, the Debugging-Oriented Period: testing was not separated from debugging.
  - 1957-1978, the Demonstration-Oriented Period: testing to make sure that the software satisfies its specification.
  - 1979-1982, the Destruction-Oriented Period: testing to detect implementation faults.
  - 1983-1987, the Evaluation-Oriented Period: testing to detect faults in requirements and design as well as in implementation.
  - Since 1988, the Prevention-Oriented Period: testing to prevent faults in requirements, design, and implementation.
  - Source: Lu Luo. Software Testing Techniques: Technology Maturation and Research Strategy. Institute for Software Research International, CMU.

- 14. Technology Maturation: Research Strategies for Testing Techniques. Source: Lu Luo. Software Testing Techniques: Technology Maturation and Research Strategy. Institute for Software Research International, CMU.

- 15. Framework

- 16. Overview (diagram of the testing process). Components: System Under Test (P), Test Adequacy Criterion (C), Test Case Specification, Test Case Descriptions, Test Case Generation, Executable Test Cases (T1, T2, T3), Test Execution, Testing Results, Test Adequacy Evaluation, Testing Report. Source: Gregory M. Kapfhammer. Software Testing. Department of Computer Science, Allegheny College.

- 17. Input of Software Testing: System Under Test (P); Test Adequacy Criterion (C)

- 18. Output of Software Testing: Testing Report

- 19. Processes of Testing
  - Designing test cases
  - Executing the software with inputs in an environment (e.g. host byte order)
  - Comparing resulting and expected outputs and states: pass or not?
  - Measuring execution characteristics: memory used, time consumed, code coverage
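The execute, compare, and measure steps above can be sketched with the Python standard library alone. The helper name `run_test_case` and the shape of its report are assumptions made for illustration; code coverage is left out because it needs an external tool.

```python
import time
import tracemalloc


def run_test_case(func, args, expected):
    """Execute func with the given inputs, compare against the expected output,
    and measure time consumed and peak memory used during the call."""
    tracemalloc.start()
    start = time.perf_counter()
    actual = func(*args)
    elapsed = time.perf_counter() - start
    _, peak = tracemalloc.get_traced_memory()  # returns (current, peak) in bytes
    tracemalloc.stop()
    return {
        "passed": actual == expected,  # pass or not?
        "actual": actual,
        "seconds": elapsed,
        "peak_bytes": peak,
    }


report = run_test_case(sorted, ([3, 1, 2],), [1, 2, 3])
print(report["passed"], report["seconds"], report["peak_bytes"])
```

The timing and memory figures vary between runs and machines, so only the pass/no-pass verdict is deterministic here.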

- 20. Which processes could be automated?
  - Test case generation and optimization
    - Input (e.g. program, specification, test cases, spectra)
    - Techniques (e.g. search, formal verification and analysis, data mining, symbolic execution)
    - Criteria (e.g. correctness, fuzziness, coverage, nonisomorphic)
  - Oracle
  - Test execution
    - Environment simulation (e.g. mobile, sensor networks)
    - Automation (e.g. GUI, Web interaction)
    - Monitor
  - Testing vs. testing techniques

- 21. Evaluation of Automated Testing Techniques: Benchmarks
  - Siemens suite
    - Small size, large number of test cases
    - 7 correct programs, 132 faulty versions
    - One injected fault in each faulty version
  - Data structures from standard libraries
  - Open source projects (large size, real faults)

- 22. Taxonomy

- 23. The Taxonomy of Testing
  - Functional testing (black-box testing): testing that ignores the internal mechanism of a system or component and focuses solely on the outputs generated in response to selected inputs and execution conditions.
  - Structural testing (white-box testing): testing that takes into account the internal mechanism of a system or component. Types include branch testing, path testing, and statement testing.
  - Source: IEEE. IEEE Standard Glossary of Software Engineering Terminology (IEEE Std 610.12-1990). Technical Report, IEEE, 1990.

- 24. Testing Scope
  - Requirements phase testing
  - Design phase testing
  - "Testing in the small" (unit testing): exercising the smallest executable units of the system
  - "Testing the build" (integration testing): finding problems in the interaction between components
  - "Testing in the large" (system testing, α-test): putting the entire system to test
  - "Testing in the real" (acceptance testing, β-test): operating the system in the user environment
  - "Testing after the change" (regression testing)

- 25. Hierarchy of Software Testing Techniques. Source: Gregory M. Kapfhammer. Software Testing. Department of Computer Science, Allegheny College.

- 26. My Classification of Testing Techniques
  - By input and by output: program, specification, test cases, execution, mutant, criteria
  - By techniques in process: search, formal verification and analysis, data mining, symbolic execution

- 27. My Classification of Testing Techniques (cont'd), e.g.

  | Paper | Input | Output | Process |
  | --- | --- | --- | --- |
  | [GGJ+10] | Specification | Test Cases | Search |
  | [DGM10] | Test Cases | Test Cases | Symbolic Execution |
  | [HO09] | Test Cases | Test Cases | ILP solvers |

  - [GGJ+10] Milos Gligoric, Tihomir Gvero, Vilas Jagannath, Sarfraz Khurshid, Viktor Kuncak, Darko Marinov. Test generation through programming in UDITA. Proceedings of the 32nd ACM/IEEE International Conference on Software Engineering, Volume 1, ICSE 2010, Cape Town, South Africa, 1-8 May 2010.
  - [DGM10] Brett Daniel, Tihomir Gvero, Darko Marinov. On test repair using symbolic execution. Proceedings of the 19th International Symposium on Software Testing and Analysis, ISSTA '10, Trento, Italy, July 12-16, 2010. ACM, New York, NY, pp. 207-218.
  - [HO09] Hwa-You Hsu, Alessandro Orso. MINTS: A general framework and tool for supporting test-suite minimization. IEEE 31st International Conference on Software Engineering, ICSE 2009, pp. 419-429, 16-24 May 2009.

- 28. Research Directions

- 29. Test Generation
  - Input: program, specification
  - Output: test cases
  - Process: search, formal verification and analysis, data mining, symbolic execution

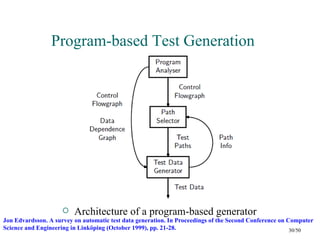

- 30. Program-based Test Generation: architecture of a program-based generator. Source: Jon Edvardsson. A survey on automatic test data generation. In Proceedings of the Second Conference on Computer Science and Engineering in Linköping (October 1999), pp. 21-28.

- 31. Program-based Test Generation. Source: Jon Edvardsson. A survey on automatic test data generation. In Proceedings of the Second Conference on Computer Science and Engineering in Linköping (October 1999), pp. 21-28.

- 32. Program-based Test Generation
  - Static and dynamic test data generation
  - Random test data generation
  - Goal-oriented test data generation
  - Path-oriented test data generation
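As a minimal sketch of random test data generation steered by a goal, the code below draws random inputs until one drives the program to a chosen outcome. The program `classify_triangle`, the helper `random_test_data`, and the input range are all hypothetical choices made for illustration. Real goal-oriented and path-oriented generators use the program structure to guide the search rather than blind sampling.

```python
import random


def classify_triangle(a, b, c):
    """Program under test (hypothetical): classifies a triangle by its side lengths."""
    if a + b <= c or a + c <= b or b + c <= a:
        return "invalid"
    if a == b == c:
        return "equilateral"
    if a == b or b == c or a == c:
        return "isosceles"
    return "scalene"


def random_test_data(goal, tries=10_000, seed=0, low=1, high=10):
    """Draw random inputs until one reaches the goal outcome; None if none is found."""
    rng = random.Random(seed)  # fixed seed keeps the generated data reproducible
    for _ in range(tries):
        data = tuple(rng.randint(low, high) for _ in range(3))
        if classify_triangle(*data) == goal:
            return data
    return None


for goal in ("invalid", "equilateral", "isosceles", "scalene"):
    print(goal, random_test_data(goal))
```

Rare outcomes such as "equilateral" need many more draws than common ones, which is the usual weakness of purely random generation.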

- 33. Program-based Test Generation: Issues
  - Arrays and pointers
  - Objects
  - Loops
  - …

- 34. Specification-based Test Generation: Issues
  - Efficiency (search-based)
  - Effectiveness
  - Complexity
  - Correctness
  - Ease of writing the specification
  - Source: Milos Gligoric, Tihomir Gvero, Vilas Jagannath, Sarfraz Khurshid, Viktor Kuncak, Darko Marinov. Test generation through programming in UDITA. Proceedings of the 32nd ACM/IEEE International Conference on Software Engineering, Volume 1, ICSE 2010, Cape Town, South Africa, 1-8 May 2010.

- 35. Symbolic Execution
  - Definition: symbolic execution is a program analysis technique that allows execution of programs using symbolic input values, instead of actual data, and represents the values of program variables as symbolic expressions. As a result, the outputs computed by a program are expressed as a function of the symbolic inputs.
  - Input: program
  - Output: symbolic execution tree
  - Scope: semantic parsing
  - Source: King, J. C. 1976. Symbolic execution and program testing. Commun. ACM 19, 7 (Jul. 1976), 385-394.

- 36. Symbolic Execution: an example. Code that swaps two integers, and its symbolic execution tree. Source: Corina S. Păsăreanu, Willem Visser. A survey of new trends in symbolic execution for software testing and analysis. STTT 11(4): 339-353, 2009.
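The slide's code is not reproduced in this transcript, so the sketch below assumes the common arithmetic swap (`x = x + y; y = x - y; x = x - y`, guarded by `x > y` and followed by a check that `x > y` no longer holds). It tracks each variable as a linear expression over the symbolic inputs X and Y and collects a path condition per branch. The class `Sym` and the brute-force `feasible` check over a small integer range are illustrative stand-ins for a real symbolic engine and constraint solver.

```python
from itertools import product


class Sym:
    """Linear symbolic expression a*X + b*Y over the symbolic inputs X and Y."""

    def __init__(self, a, b):
        self.a, self.b = a, b

    def __add__(self, other):
        return Sym(self.a + other.a, self.b + other.b)

    def __sub__(self, other):
        return Sym(self.a - other.a, self.b - other.b)

    def value(self, X, Y):
        return self.a * X + self.b * Y

    def __repr__(self):
        return f"{self.a}*X + {self.b}*Y"


def feasible(path_condition, domain=range(-3, 4)):
    """Bounded brute-force check (an assumption, not a solver): does some concrete
    (X, Y) in the domain satisfy every (lhs > rhs) == truth constraint?"""
    return any(
        all((lhs.value(X, Y) > rhs.value(X, Y)) == truth
            for lhs, rhs, truth in path_condition)
        for X, Y in product(domain, repeat=2)
    )


def symbolic_swap():
    """Explore every path of the swap code; return (path condition, x, y, feasible)."""
    x, y = Sym(1, 0), Sym(0, 1)  # initially x = X and y = Y
    paths = []
    # Path 1: the guard x > y is false, so nothing is executed.
    pc = [(x, y, False)]
    paths.append((pc, x, y, feasible(pc)))
    # Paths 2 and 3: the guard x > y is true, so the swap runs.
    pc = [(x, y, True)]
    x = x + y  # x = X + Y
    y = x - y  # y = X
    x = x - y  # x = Y
    for truth in (True, False):  # inner check: if (x > y) assert false
        inner = pc + [(x, y, truth)]
        paths.append((inner, x, y, feasible(inner)))
    return paths


for pc, x, y, ok in symbolic_swap():
    print("x =", x, "| y =", y, "| feasible:", ok)
```

The path that would reach the failing assertion has the path condition X > Y and Y > X, which no input satisfies, so the assertion is unreachable.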

- 37. Symbolic Execution: another example. Code to sort the first two nodes of a list, and an analysis of this code using a symbolic-execution-based approach. Source: Corina S. Păsăreanu, Willem Visser. A survey of new trends in symbolic execution for software testing and analysis. STTT 11(4): 339-353, 2009.

- 38. Symbolic execution tree. Source: Corina S. Păsăreanu, Willem Visser. A survey of new trends in symbolic execution for software testing and analysis. STTT 11(4): 339-353, 2009.

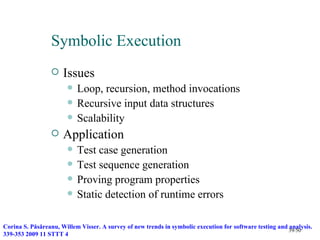

- 39. Symbolic Execution
  - Issues: loops, recursion, and method invocations; recursive input data structures; scalability
  - Applications: test case generation; test sequence generation; proving program properties; static detection of runtime errors
  - Source: Corina S. Păsăreanu, Willem Visser. A survey of new trends in symbolic execution for software testing and analysis. STTT 11(4): 339-353, 2009.

- 40. Symbolic Execution: JPF, Java PathFinder. Source: Edgewall Software. What is JPF? http://babelfish.arc.nasa.gov/trac/jpf/wiki/intro/what_is_jpf

- 41. Symbolic Execution: JPF, testing vs. model checking. Source: Edgewall Software. Testing vs. Model Checking. http://babelfish.arc.nasa.gov/trac/jpf/wiki/intro/testing_vs_model_checking

- 42. Symbolic Execution: JPF, testing vs. model checking. Source: Edgewall Software. Testing vs. Model Checking. http://babelfish.arc.nasa.gov/trac/jpf/wiki/intro/testing_vs_model_checking

- 43. Symbolic Execution: JPF, Random example. Source: Edgewall Software. Example: java.util.Random. http://babelfish.arc.nasa.gov/trac/jpf/wiki/intro/random_example

- 44. Future Work
  - IPO
  - Effectiveness
  - Efficiency
  - Ease of use
    - Change the habits of users
    - A small amount of user effort may greatly improve effectiveness or efficiency
  - Methods of evaluation
    - New, convincing benchmarks
  - Import existing techniques from other areas

- 45. Is Testing a Hot Topic? ICSE 2010 submission topics. Source: ICSE 2010. Opening slides with the statistics on the attendance and the paper acceptances. ICSE 2010, Cape Town.

- 46. Is Testing a Hot Topic? ICSE 2009 topics. Source: Carlo Ghezzi. Reflections on 40+ years of software engineering research and beyond: an insider's view. 31st International Conference on Software Engineering, Vancouver, Canada, May 16-24, 2009.

- 47. But… we all think that testing is:
  - trivial
  - non-technical
  - boring

- 48. Why is Testing a Hot Topic? Suppose you were in the 1940s; you might think that programming is:
  - trivial
  - non-technical
  - boring

- 49. Why is Testing a Hot Topic?
  - ENIAC, Von Neumann architecture, assembly language, Backus–Naur Form, C, Java, …
  - Data Link Layer, TCP, sockets, Petri nets, CORBA, MapReduce, GFS, Bigtable, …
  - Manual testing, unit testing, …
  - When do we need standards in testing? Can we develop a language with testing constraints?

- 50. Thank you!

Editor's Notes

- #50: Do we need standards and formal methods in testing? Can we develop a language with testing constraints?
