Advanced Drupal 8 Caching

- 2. Pantheon.io: Defining Measurable Success
  - Meet project requirements (e.g., blogging, ecommerce, HTTPS)
  - Have a good time to first byte (TTFB)
    - Accelerates requests for other resources
    - Better search ranking
  - Have a good time to first paint (TTFP)
    - Better user experience and conversion rates
  - Stay online during load spikes (no timeouts or errors)

- 3. Step One: Triage. “Are you sure you have a problem?”

- 4. Meeting Project Requirements
  It’s important to establish project goals early. These needs can affect performance as well as which optimizations are possible.
  - HTTPS
    - To the browser only, or end-to-end?
    - Needs an EV certificate?
  - Compliance
    - Where can data be cached?
  - Dynamic Pages
    - Which features require them?
    - How often are they used?

- 5. Know When Performance Is Good Enough
  The more numerous and complex your sites, the more you need to pick your battles.
  - “...a clear correlation was identified between decreasing search rank and increasing time to first byte.” (“How Website Speed Actually Impacts Search Ranking,” Moz, 2013)
  - Good Enough: TTFB < 400 ms
  - “If your website takes longer than three seconds to load, you could be losing nearly half of your visitors...” (“How Page Load Time Affects Conversion Rates: 12 Case Studies,” HubSpot, 2017)
  - Good Enough: TTFP < 2.4 s

- 6. Collect Basic Performance Data
  Test the site using WebPageTest.org.
  - TTFP < 3 s?
  - TTFB < 0.4 s?
  - No errors?
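WebPageTest.org reports TTFB directly, but a scripted spot check can help between full tests. The sketch below assumes PHP with the curl extension; `measureTtfb()`, `medianSeconds()`, and `ttfbIsGoodEnough()` are hypothetical helper names, and the 0.4 s default mirrors the target above. It only measures TTFB; TTFP needs a real browser, such as the one WebPageTest.org drives.

```php
<?php
// Sketch (assumes PHP's curl extension): sample TTFB for a URL several times
// and compare the median to the 0.4 s target. Function names are hypothetical.

/** One TTFB sample in seconds: curl's time until the first byte was about to transfer. */
function measureTtfb(string $url): float
{
    $ch = curl_init($url);
    if ($ch === false) {
        throw new RuntimeException("Could not initialize curl for $url");
    }
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, true); // Capture the body instead of printing it.
    curl_exec($ch);
    return (float) curl_getinfo($ch, CURLINFO_STARTTRANSFER_TIME);
}

/** Median of the samples, so one slow cold-cache run doesn't dominate. */
function medianSeconds(array $samples): float
{
    if ($samples === []) {
        throw new InvalidArgumentException('Need at least one sample.');
    }
    sort($samples);
    $n = count($samples);
    $mid = intdiv($n, 2);
    return $n % 2 === 1
        ? (float) $samples[$mid]
        : ($samples[$mid - 1] + $samples[$mid]) / 2;
}

function ttfbIsGoodEnough(array $samples, float $thresholdSeconds = 0.4): bool
{
    return medianSeconds($samples) < $thresholdSeconds;
}

// Usage (makes real requests; example.com is a placeholder):
// $samples = array_map(fn() => measureTtfb('https://example.com/'), range(1, 5));
// var_dump(ttfbIsGoodEnough($samples));
```

The median is used rather than the mean so that a single cache-miss run does not hide an otherwise healthy page, or vice versa.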

- 7. Collect Uptime Data
  Monitor the site with a service like Pingdom.

- 8. Revisit Measures of Success
  - Does the site meet business value requirements?
  - Is the TTFB good enough?
  - Is the TTFP good enough?
  - Is the site staying online?
  Don’t create unnecessary work for yourself.

- 9. Step Two: Diagnosis. “So, I take it that things aren’t great?”

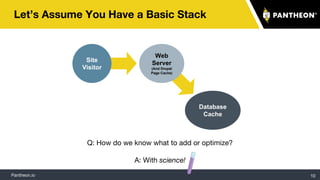

- 10. Let’s Assume You Have a Basic Stack
  - Stack diagram: Site Visitor, Web Server (and Drupal Page Cache), Database, Cache
  - Q: How do we know what to add or optimize?
  - A: With science!

- 11. Collect Performance Data
  Find bottlenecks with an APM tool like New Relic.

- 12. Where’s the Bottleneck?
  From the frontend to deep in the backend:
  - Review scores on WebPageTest.org.
  - Review regional load times in Pingdom.
  - Review slow transactions in New Relic.
  - Configure and download PHP slow logs.
  - Profile slow pages using New Relic, APD, Xdebug, XHProf, or Blackfire.
  - Configure and download MySQL slow query logs.

- 13. Symptoms: Resource Bottleneck
  - Good TTFB, Bad TTFP
  - Recursive Resource Dependencies
    - Look for: Cascading bars on WebPageTest.org before the “Start Render” marker
    - Example: JavaScript with multiple levels of dependencies
  - Huge Resources
    - Look for: Long bars for items on WebPageTest.org before the “Start Render” marker
    - Example: Multi-megabyte images
  - Blocking, External Resources
    - Look for: Many different domains for items on WebPageTest.org before the “Start Render” marker
    - Examples: Analytics tools, web fonts, chat tools, marketing optimization tools
  - Figure callout: ▲ Cascading Bars

- 14. Symptoms: Database Bottleneck
  - Bad TTFB
  - Database Timeout Errors
  - Slow Page Loads for Authenticated Users
  - Slow Queries
    - Look for: Queries to non-cache tables in the MySQL slow query log
    - Example: Uncached Views

- 15. Symptoms: Object Cache Bottleneck
  - Bad TTFB
  - Timeout Errors
  - Slow Page Loads for Anyone
  - Heavy Object Cache Queries
    - Look for: Heavy aggregate queries to the non-page cache tables in New Relic
  - Heavy Network Egress from the Database Server
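To sort slow-query-log entries into the buckets these symptom slides use, a rough classifier can key off table names. This is a sketch assuming Drupal 8's database cache backend (one `cache_<bin>` table per bin, plus `cachetags` for tag checksums) and no table prefix; treating `cache_page` and `cache_dynamic_page_cache` as the page cache tables is an assumption, the regex is a heuristic rather than a SQL parser, and all function names are hypothetical.

```php
<?php
// Sketch: bucket the tables a SQL statement touches into page cache, object
// cache, or non-cache. Assumes "cache_<bin>" table names and no table prefix.

const PAGE_CACHE_TABLES = ['cache_page', 'cache_dynamic_page_cache'];

/** Table names following FROM/JOIN/UPDATE/INTO (heuristic, not a parser). */
function tablesInQuery(string $sql): array
{
    preg_match_all('/\b(?:FROM|JOIN|UPDATE|INTO)\s+`?(\w+)`?/i', $sql, $matches);
    return array_values(array_unique($matches[1]));
}

function classifyTable(string $table): string
{
    if (in_array($table, PAGE_CACHE_TABLES, true)) {
        return 'page cache';
    }
    // Other cache bins, plus the tag checksum table the cache backends consult.
    if ($table === 'cachetags' || strpos($table, 'cache_') === 0) {
        return 'object cache';
    }
    return 'non-cache';
}

/** Counts how many statements touch each bucket. */
function summarizeQueries(array $statements): array
{
    $counts = ['page cache' => 0, 'object cache' => 0, 'non-cache' => 0];
    foreach ($statements as $sql) {
        $buckets = array_unique(array_map('classifyTable', tablesInQuery($sql)));
        foreach ($buckets as $bucket) {
            $counts[$bucket]++;
        }
    }
    return $counts;
}
```

Many non-cache statements in the slow log point toward a database bottleneck; heavy traffic against `cache_*` tables points toward the object cache.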

- 16. Symptoms: Page Cache Bottleneck
  - Consistently Bad TTFB
    - Look for: On the “Summary” tab of WebPageTest.org, even the second and later runs have a long bar for request #1.
  - Slow Page Loads for Anonymous Users
  - Heavy Page Cache Queries
    - Look for: Heavy aggregate queries to the page cache tables in New Relic
  - Overloading with Cacheable Requests
    - Look for: Many GET requests for the same URLs from different IPs in web server logs
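The last symptom can be spotted by tallying the web server's access log. A minimal sketch, assuming Common or Combined Log Format lines; `repeatedGetRequests()` and its `$minDistinctIps` threshold are hypothetical.

```php
<?php
// Sketch: find URLs that several different clients request with GET, a sign
// that cacheable traffic is reaching the web server. Assumes Common/Combined
// Log Format; adjust the regex for other formats.

function repeatedGetRequests(array $logLines, int $minDistinctIps = 2): array
{
    $stats = [];
    foreach ($logLines as $line) {
        if (!preg_match('/^(\S+) \S+ \S+ \[[^\]]*\] "(\S+) (\S+)[^"]*"/', $line, $m)) {
            continue; // Skip lines that don't match the expected format.
        }
        [, $ip, $method, $path] = $m;
        if ($method !== 'GET') {
            continue;
        }
        $stats[$path]['requests'] = ($stats[$path]['requests'] ?? 0) + 1;
        $stats[$path]['ips'][$ip] = true; // Array keys act as a set of client IPs.
    }

    $report = [];
    foreach ($stats as $path => $s) {
        $distinct = count($s['ips']);
        if ($distinct >= $minDistinctIps) {
            $report[$path] = ['requests' => $s['requests'], 'distinct_ips' => $distinct];
        }
    }
    // Most-requested URLs first.
    uasort($report, fn(array $a, array $b): int => $b['requests'] <=> $a['requests']);
    return $report;
}
```

URLs near the top of this report are the best candidates for a proxy or CDN page cache.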

- 17. Step Three: Treatment. “What do I do about my bottleneck?”

- 18. Treatment: Resource Bottleneck
  - Cache-Based Treatments
    - Deploy a CDN to cache resources closer to site visitors.
    - Tune Drupal’s image styles to produce files sized for their use. (Drupal’s image style system is, at heart, a cache of images processed in various ways.)
  - Non-Cache Treatments
    - Deploy HTTP/2 (easiest via a CDN) to improve parallelism.
    - Without HTTP/2, aggregate CSS and JS to reduce round trips.
    - Change where resources load so they are non-blocking (and loaded after first paint).

- 19. Treatment: Database Bottleneck
  - Cache-Based Treatments
    - Move object caching out of the database (or otherwise reduce its load).
    - Move page caching to a layer in front of the web server (as a proxy or CDN).
    - Make the InnoDB buffer pool as large as the server’s memory allows.
    - MySQL’s query cache can actually be too big: the bigger it is, the more overhead every data change incurs. While Drupal 7 relied heavily on this cache (for the “system” table), Drupal 8 does not.
  - Non-Cache Treatments
    - Out of scope for today

- 20. Treatment: Object Cache Bottleneck
  - Drupal 8 ships a “null” cache backend, registered in `sites/development.services.yml`. It’s sometimes useful in production. Load it with: `$settings['container_yamls'][] = DRUPAL_ROOT . '/sites/development.services.yml';`
  - If you use a CDN or proxy cache, don’t cache pages: `$settings['cache']['bins']['dynamic_page_cache'] = 'cache.backend.null';`
  - If the site mostly has anonymous users and certain bins are mostly used to generate pages that will be cached anyway, don’t cache those bins: `$settings['cache']['bins']['render'] = 'cache.backend.null';`
  - If using an external cache (Redis/memcached), use a sensible size:
    - In Redis, too large a cache size will make snapshots a bottleneck.
    - Drupal shouldn’t need more than 1 GB of cache. Going larger can be less efficient.
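The bin overrides above work because Drupal 8 picks a backend for each bin by precedence: a per-bin setting first, then any default the bin's service declares, then `$settings['cache']['default']`, then the database backend. The standalone function below is a simplified sketch of that lookup order for illustration, not Drupal's own code; `resolveCacheBackend()` and `$binDefaults` are hypothetical names, and `cache.backend.redis` assumes the contributed Redis module is installed.

```php
<?php
// Simplified sketch of the order in which a Drupal 8 cache backend is chosen
// for a bin. Illustration only; not Drupal's implementation.
// $binDefaults stands in for per-bin defaults declared by service tags.

function resolveCacheBackend(string $bin, array $settings, array $binDefaults = []): string
{
    $cache = $settings['cache'] ?? [];
    // 1. An explicit per-bin override, e.g. $settings['cache']['bins']['render'].
    if (isset($cache['bins'][$bin])) {
        return $cache['bins'][$bin];
    }
    // 2. A default requested by the bin's own service definition.
    if (isset($binDefaults[$bin])) {
        return $binDefaults[$bin];
    }
    // 3. The site-wide default, e.g. a Redis or Memcache backend.
    if (isset($cache['default'])) {
        return $cache['default'];
    }
    // 4. Fall back to the database backend.
    return 'cache.backend.database';
}

// Settings resembling the slide: null backend for two bins, Redis for the rest.
$settings = [];
$settings['cache']['bins']['dynamic_page_cache'] = 'cache.backend.null';
$settings['cache']['bins']['render'] = 'cache.backend.null';
$settings['cache']['default'] = 'cache.backend.redis'; // Assumes the contrib Redis module.
```

Because per-bin overrides win, nulling `render` and `dynamic_page_cache` leaves every other bin on the external cache.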

- 21. Treatment: Page Cache Bottleneck
  - Move page caching in front of the web server, ideally to a CDN.
    - Deploy Varnish in front of Drupal, or use a CDN with an origin shield.
  - Configure Drupal to allow page caching for at least 10 minutes.
  - Ensure repeated anonymous requests for the same page start “hitting.”
    - Look for: Responses with “Cache-Control” headers that define a “max-age” and contain neither “private” nor “no-store.”
    - Look for: Responses with “Age” headers greater than zero.
    - Look for: Responses with CDN-specific headers showing a “hit.”
    - Look for: Responses without “Set-Cookie” headers.
    - Look for: Responses whose “Vary” contains nothing beyond “cookie,” “accept-encoding,” and “accept-language.” Varying on other headers can severely reduce cache hit rates.
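The header checks above can be scripted against a repeated anonymous request. A sketch, assuming the response headers have already been collected into an array with lowercase names (as `curl -v` prints them over HTTP/2); `pageCacheProblems()` is a hypothetical name, and the CDN-specific “hit” check is left out because each CDN names that header differently.

```php
<?php
// Sketch: apply the page-cache checklist to one response's headers.
// Expects lowercase header names; returns a list of human-readable problems.

function pageCacheProblems(array $headers): array
{
    $problems = [];

    $cc = strtolower($headers['cache-control'] ?? '');
    if (!preg_match('/\bmax-age=(\d+)/', $cc, $m) || (int) $m[1] === 0) {
        $problems[] = 'Cache-Control lacks a positive max-age';
    }
    if (preg_match('/\b(private|no-store)\b/', $cc)) {
        $problems[] = 'Cache-Control contains private or no-store';
    }
    // Only meaningful on a repeated request; the first fetch after a purge has Age 0.
    if ((int) ($headers['age'] ?? 0) <= 0) {
        $problems[] = 'Age is missing or zero (not served from cache)';
    }
    if (isset($headers['set-cookie'])) {
        $problems[] = 'Response sets a cookie';
    }
    $allowedVary = ['cookie', 'accept-encoding', 'accept-language'];
    $vary = array_filter(array_map('trim', explode(',', strtolower($headers['vary'] ?? ''))));
    $extra = array_diff($vary, $allowedVary);
    if ($extra !== []) {
        $problems[] = 'Vary includes ' . implode(', ', $extra);
    }
    return $problems;
}
```

For the ten-minute guidance on the Drupal side, Drupal 8 reads the page max-age from `$config['system.performance']['cache']['page']['max_age']` (the “Browser and proxy cache maximum age” setting), so `600` in settings.php corresponds to ten minutes.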

- 22. A Decent Drupal 8 Stack for Caching
  - Diagram: Site Visitor → CDN or Proxy → Web Server → Dedicated Cache

- 23. Step Four: Advanced Page Caching. “What if that’s not enough?”

- 24. Does Your Site Suffer From...
  - Downtime when the entire CDN or proxy cache gets cleared?
  - Frustrating tradeoffs between delivering pages that are fast versus fresh?
  - Wanting to crank up Drupal’s page cache time but fearing the consequences?
  - Frequent, manual cache clearing to get new content out?
  - Inconsistent content, where some pages show what’s new but others don’t?
  - Load times that are sometimes great but awful when the cache misses?
  - Good control of your CDN or proxy, but stale browser caches?
  - Heavy loads while different proxies or CDN POPs warm up after a cache clear?

- 25. ...Then You Need Smarter Page Caching
  In the world of Varnish (and Fastly):
  - stale-while-revalidate
  - stale-if-error
  - Surrogate-Control
  - Surrogate-Key

- 26. Cache-Control: stale-while-revalidate=SECONDS
  - Semi-standardized: part of the informational RFC 5861
  - The directive goes into the Cache-Control header.
    - SECONDS sets how long the response remains usable after it expires.
  - Built on the “grace mode” capabilities of Varnish.
  - Allows the page cache to “hit” on stale content.
  - Triggers an asynchronous refresh of the content in the background.
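A sketch of the decision a cache makes with these two numbers, assuming a response sent with `Cache-Control: max-age=600, stale-while-revalidate=30` (example values). The function and state names are hypothetical, and the sketch only classifies the stored copy; it does not perform the background refresh.

```php
<?php
// Sketch: classify a cached response by age using max-age and
// stale-while-revalidate (RFC 5861). State names are illustrative.

/** Parses "a, b=1, c=\"x\"" into ['a' => true, 'b' => '1', 'c' => 'x']. */
function cacheControlDirectives(string $header): array
{
    $directives = [];
    foreach (explode(',', $header) as $part) {
        $part = trim($part);
        if ($part === '') {
            continue;
        }
        [$name, $value] = array_pad(explode('=', $part, 2), 2, true);
        $directives[strtolower($name)] = is_string($value) ? trim($value, '" ') : $value;
    }
    return $directives;
}

function freshnessState(string $cacheControl, int $ageSeconds): string
{
    $d = cacheControlDirectives($cacheControl);
    $maxAge = (int) ($d['max-age'] ?? 0);
    $swr = (int) ($d['stale-while-revalidate'] ?? 0);

    if ($ageSeconds < $maxAge) {
        return 'fresh';            // Serve from cache.
    }
    if ($ageSeconds < $maxAge + $swr) {
        return 'stale-revalidate'; // Serve the stale copy now; refresh in the background.
    }
    return 'expired';              // Fetch from the origin before responding.
}
```

With these values, visitors in the 30 seconds after expiry still get a cache hit while one background request refreshes the page.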

- 27. Cache-Control: stale-if-error=SECONDS
  - Semi-standardized: part of the informational RFC 5861
  - Mostly similar to stale-while-revalidate.
  - Used to return stale content instead of an error when the backend is inaccessible or returning errors.
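For stale-if-error, a similar sketch, assuming `null` stands for an origin that could not be reached and treating any 5xx status as an error (RFC 5861 names 500, 502, 503, and 504; the broader check is a simplification). Function names and the example header values are hypothetical.

```php
<?php
// Sketch: may the cache answer with its stale copy because the origin failed?
// $originStatus is null when the origin could not be reached at all.

function maxAgeAndStaleIfError(string $cacheControl): array
{
    $maxAge = preg_match('/\bmax-age=(\d+)/i', $cacheControl, $m) ? (int) $m[1] : 0;
    $sie = preg_match('/\bstale-if-error=(\d+)/i', $cacheControl, $m) ? (int) $m[1] : 0;
    return [$maxAge, $sie];
}

function serveStaleOnError(string $cacheControl, int $ageSeconds, ?int $originStatus): bool
{
    $originFailed = $originStatus === null || $originStatus >= 500;
    if (!$originFailed) {
        return false; // The origin answered normally; use its response.
    }
    [$maxAge, $sie] = maxAgeAndStaleIfError($cacheControl);
    // Stale content stays usable for $sie seconds past its normal lifetime.
    return $ageSeconds < $maxAge + $sie;
}
```

A long stale-if-error window (a day in the tests) is what lets a site stay online through origin outages.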

- 28. Surrogate-Control: max-age=SECONDS
  - Semi-standard: part of the W3C’s Edge Architecture Specification
  - Same syntax as Cache-Control
  - Used instead of Cache-Control by some CDNs when present
  - Stripped before the response leaves the CDN
  - Allows storing responses for different durations in the CDN and in the browser cache
    - Mostly useful for retaining things a long time in the CDN and explicitly invalidating them
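A sketch of the split this enables, assuming the origin sends `Surrogate-Control: max-age=86400` alongside `Cache-Control: max-age=300` (example values): the CDN keeps the page for a day and relies on explicit invalidation, while browsers revalidate after five minutes. CDNs differ in the details; `cdnTtl()` and `headersForBrowser()` are hypothetical names that model only “prefer Surrogate-Control, then strip it.”

```php
<?php
// Sketch: how a CDN honoring Surrogate-Control might choose its own TTL and
// what it forwards to the browser. Expects lowercase header names.

function maxAgeOf(?string $header): ?int
{
    if ($header !== null && preg_match('/\bmax-age=(\d+)/i', $header, $m)) {
        return (int) $m[1];
    }
    return null;
}

/** TTL the CDN stores the response for: Surrogate-Control wins when present. */
function cdnTtl(array $headers): int
{
    return maxAgeOf($headers['surrogate-control'] ?? null)
        ?? maxAgeOf($headers['cache-control'] ?? null)
        ?? 0;
}

/** Headers passed on to the browser: Surrogate-Control is removed. */
function headersForBrowser(array $headers): array
{
    unset($headers['surrogate-control']);
    return $headers;
}
```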

- 29. Surrogate-Key: frontpage node-1
  - Non-standard: only in Varnish (with xkey) and Fastly
    - Equivalents exist for Akamai, Cloudflare (Enterprise-only), and KeyCDN
  - A space-delimited list of keys identifying the ingredients of the page
  - Allows later, explicit invalidation of cached pages when their content is updated
  - Drupal 8 makes this easy because it has widespread cache tags we can repurpose as page keys.
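Conceptually, the CDN keeps an index from each key to the cached URLs that carry it, so purging one key evicts only the pages built from that ingredient. The in-memory class below is a hypothetical sketch of that idea, not any CDN's implementation; `SurrogateKeyIndex` and `surrogateKeyHeader()` are illustrative names, and the sample URLs and tags mirror the front-page demo in this deck.

```php
<?php
// Sketch of a surrogate-key index: each cached URL is recorded under every
// key in its Surrogate-Key header, so a purge by key evicts just those pages.

final class SurrogateKeyIndex
{
    /** @var array<string, array<string, true>> key => set of URLs */
    private array $urlsByKey = [];

    /** Record a cached response under the keys in its Surrogate-Key header. */
    public function store(string $url, string $surrogateKeyHeader): void
    {
        foreach (preg_split('/\s+/', trim($surrogateKeyHeader), -1, PREG_SPLIT_NO_EMPTY) as $key) {
            $this->urlsByKey[$key][$url] = true;
        }
    }

    /** Purge every URL tagged with $key; returns the purged URLs, sorted. */
    public function purgeKey(string $key): array
    {
        $urls = array_keys($this->urlsByKey[$key] ?? []);
        // Drop purged URLs from every key so later purges don't repeat them.
        foreach ($this->urlsByKey as $k => $set) {
            $this->urlsByKey[$k] = array_diff_key($set, array_flip($urls));
        }
        unset($this->urlsByKey[$key]);
        sort($urls);
        return $urls;
    }
}

/** Joins cache tags (such as node:1 in the demo) into a space-delimited header value. */
function surrogateKeyHeader(array $cacheTags): string
{
    return implode(' ', array_unique($cacheTags));
}
```

Purging `node:2` in this model clears the front page and node 2's own page but leaves `/node/1` cached, which is the behavior the demo slides show.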

- 30. Pantheon + Global CDN + Drupal Modules

- 31. Our Front Page Has Two Nodes

- 32. And the Response Is Cached and Keyed Up
  `[straussd@t560 d8-granular]$ curl -v https://dev-d8-granular.pantheonsite.io/ > /dev/null`
  `[...]`
  `< surrogate-key-raw: block_view config:block.block.bartik_account_menu config:block.block.bartik_branding config:block.block.bartik_breadcrumbs config:block.block.bartik_content config:block.block.bartik_footer config:block.block.bartik_help config:block.block.bartik_local_actions config:block.block.bartik_local_tasks config:block.block.bartik_main_menu config:block.block.bartik_messages config:block.block.bartik_page_title config:block.block.bartik_powered config:block.block.bartik_search config:block.block.bartik_tools config:block_emit_list config:color.theme.bartik config:filter.format.basic_html config:search.settings config:system.menu.account config:system.menu.footer config:system.menu.main config:system.menu.tools config:system.site config:user.role.anonymous config:views.view.frontpage http_response node:1 node:2 node_emit_list node_view rendered user:1 user_view`
  `< age: 10`
  `[...]`
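To check whether a page carries a given key, saved `curl -v` output can be searched for the `surrogate-key-raw` header shown in this demo. A sketch; the helper names are hypothetical, and other setups may expose keys under a different header name.

```php
<?php
// Sketch: extract the keys from saved `curl -v` output and test whether a page
// depends on a given cache tag (i.e. would be cleared when that tag is purged).

function surrogateKeysFromCurlVerbose(string $curlOutput): array
{
    // curl -v prints received headers on lines starting with "< ".
    if (!preg_match('/^< surrogate-key-raw:\s*(.+)$/mi', $curlOutput, $m)) {
        return [];
    }
    // trim() also drops a trailing "\r" left over from CRLF line endings.
    return preg_split('/\s+/', trim($m[1]), -1, PREG_SPLIT_NO_EMPTY);
}

function pageDependsOn(array $keys, string $tag): bool
{
    return in_array($tag, $keys, true);
}
```

Against the front-page output above, `pageDependsOn($keys, 'node:2')` would be true, so editing node 2 should clear that page.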

- 33. If I Alter Node 2... Only the Pages Containing Node 2 Content Get Cleared!
  `[straussd@t560 d8-granular]$ curl -v https://dev-d8-granular.pantheonsite.io/`
  `[...]`
  `< age: 0`
  `[...]`
  `[straussd@t560 d8-granular]$ curl -v https://dev-d8-granular.pantheonsite.io/node/1`
  `[...]`
  `< age: 0`
  `[...]`
  `[straussd@t560 d8-granular]$ curl -v https://dev-d8-granular.pantheonsite.io/node/1`
  `[...]`
  `< age: 141`
  `[...]`

- 34. Origin Shielding: Consolidating Caching

- 35. A Best-Practice Drupal 8 Stack for Caching
  - Diagram: Site Visitor → CDN or Proxy → Origin Cache “Shield” → Web Server → Dedicated Cache

