AI & ML: INTRODUCTION TO AI AND ML FOR LEARNING BASICS

- 1. BCA-V Artificial Intelligence and Machine Learning BCA504A Mrs. Ashu Nayak Assistant Professor Department of CS & IT Kalinga University Naya Raipur (C.G.), India BCA504A Artificial Intelligence & Machine Learning 1

- 2. CONTENTS
  - Kernel Method
  - Support Vector Machine Algorithm
  - Hyperplane and Support Vectors in the SVM algorithm
  - Ensemble learning
  - Architecture of Stacking
  - Ada Boost algorithm
  - Cross-Validation in Machine Learning
  - Dimensionality Reduction
  - Feature Selection
  - Principal Component Analysis
  - Multidimensional Scaling
  - Linear Discriminant Analysis

- 3. LECTURE PLAN

  | Lecture No. | Topics to be covered | Slide No. |
  |---|---|---|
  | L-1 | Kernel Method | 8-14 |
  | L-2 | Support Vector Machine Algorithm | 15-18 |
  | L-3 | Hyperplane and Support Vectors in the SVM algorithm | 19-20 |
  | L-4 | Ensemble learning | 21-25 |
  | L-5 | Architecture of Stacking | 26-28 |
  | L-6 | Ada Boost algorithm | 29-30 |
  | L-7 | Cross-Validation in Machine Learning | 31-32 |
  | L-8 | Dimensionality Reduction | 33-34 |
  | L-9 | Feature Selection | 35-37 |
  | L-10 | Principal Component Analysis | 38 |
  | L-11 | Multidimensional Scaling | 39-40 |
  | L-12 | Linear Discriminant Analysis | 41-42 |
  | | Quiz | 43-53 |

- 4. Unit-5

- 5. What is the Kernel Method?

  Kernel methods are a set of machine learning techniques used for classification, regression, and other prediction problems. They are built around kernels: functions that measure how similar two data points are to one another, computed as an inner product in a (typically high-dimensional) feature space.

- 6. Characteristics of Kernel Function
  - Mercer's condition: A kernel function must satisfy Mercer's condition to be valid. This condition requires the kernel to be positive semi-definite: for any finite set of points, the Gram matrix with entries K(x_i, x_j) must be symmetric and have no negative eigenvalues. It does not mean that every kernel value is greater than or equal to zero.
  - Positive definiteness: A kernel function is strictly positive definite if, for any set of distinct points, the sum over i and j of c_i * c_j * K(x_i, x_j) is greater than zero for every non-zero choice of coefficients c.
  - Non-negativity: Some kernels, such as the Gaussian and Laplacian kernels, produce non-negative values for all inputs. This is not required of a valid kernel; the linear kernel, for example, can take negative values.

- 7. Characteristics of Kernel Function (continued)
  - Symmetry: A kernel function is symmetric, meaning that it produces the same value regardless of the order in which the inputs are given: K(x, y) = K(y, x).
  - Reproducing property: A kernel function satisfies the reproducing property if the value of a function f at a point x equals the inner product of f with K(x, ·) in the associated feature space (the reproducing kernel Hilbert space).
  - Smoothness: A kernel function is said to be smooth if it produces a smooth transformation of the input data into the feature space.
  - Complexity: The complexity of a kernel function is an important consideration, as more complex kernel functions may lead to overfitting and reduced generalization performance.
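
Mercer's condition and symmetry can be checked numerically on a finite sample. The sketch below is only an illustration, not a proof: it assumes the Gaussian kernel exp(-gamma * ||x - y||^2) as the example kernel, and the sample points, the `gamma` value, and the helper names are hypothetical.

```python
import numpy as np

def rbf_kernel(x, y, gamma=0.5):
    """Gaussian (RBF) kernel: exp(-gamma * ||x - y||^2)."""
    diff = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return float(np.exp(-gamma * np.dot(diff, diff)))

def gram_matrix(kernel, points):
    """Gram matrix G with G[i, j] = kernel(points[i], points[j])."""
    n = len(points)
    G = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            G[i, j] = kernel(points[i], points[j])
    return G

# Hypothetical sample points, chosen only for illustration.
points = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [3.0, 1.0]])
G = gram_matrix(rbf_kernel, points)

# Symmetry: K(x, y) == K(y, x) for every pair.
is_symmetric = bool(np.allclose(G, G.T))

# Positive semi-definiteness: no eigenvalue below zero (up to round-off).
eigenvalues = np.linalg.eigvalsh(G)
is_psd = bool(np.all(eigenvalues >= -1e-10))

print(is_symmetric, is_psd)
```

Passing this check on one sample does not establish that a kernel is valid in general; failing it on any sample does show that it is not.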

- 8. Linear Kernel
  - A linear kernel is a kernel function used in machine learning, including in SVMs (Support Vector Machines). It is the simplest kernel function: it is the dot product of the input vectors in the original feature space.
  - The linear kernel can be defined as:
  - K(x, y) = x · y
  - Where x and y are the input feature vectors. The dot product of the input vectors is a measure of their similarity in the original feature space.

- 9. Polynomial Kernel
  - A polynomial kernel is a kernel function used in machine learning, including in SVMs (Support Vector Machines). It is a nonlinear kernel function that uses polynomial functions to map the input data into a higher-dimensional feature space.
  - The polynomial kernel can be defined as:
  - K(x, y) = (x · y + c)^d
  - Where x and y are the input feature vectors, c is a constant term, and d is the degree of the polynomial. The constant term is added to the dot product of the input vectors, and the result is raised to the degree of the polynomial.

- 10. Gaussian (RBF) Kernel
  - The Gaussian kernel, also known as the radial basis function (RBF) kernel, is a popular kernel function used in machine learning, particularly in SVMs (Support Vector Machines). It is a nonlinear kernel function that maps the input data into a higher-dimensional feature space using a Gaussian function.
  - The Gaussian kernel can be defined as:
  - K(x, y) = exp(-gamma * ||x - y||^2)
  - Where x and y are the input feature vectors, gamma is a parameter that controls the width of the Gaussian function, and ||x - y||^2 is the squared Euclidean distance between the input vectors.

- 11. Laplace Kernel
  - The Laplacian kernel, also known as the Laplace kernel or the exponential kernel, is a kernel function used in machine learning, including in SVMs (Support Vector Machines). It is a nonlinear kernel that measures the similarity between two input feature vectors.
  - The Laplacian kernel can be defined as:
  - K(x, y) = exp(-gamma * ||x - y||_1)
  - Where x and y are the input feature vectors, gamma is a parameter that controls the width of the Laplacian function, and ||x - y||_1 is the L1 norm (Manhattan distance) between the input vectors.
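
The four kernel formulas above can be written directly in NumPy. This is a minimal sketch: the function names, the default values of `c`, `d`, and `gamma`, and the two sample vectors are assumptions made for illustration only.

```python
import numpy as np

def linear_kernel(x, y):
    """K(x, y) = x . y"""
    return float(np.dot(x, y))

def polynomial_kernel(x, y, c=1.0, d=2):
    """K(x, y) = (x . y + c)^d"""
    return float((np.dot(x, y) + c) ** d)

def gaussian_kernel(x, y, gamma=0.5):
    """K(x, y) = exp(-gamma * ||x - y||^2), squared Euclidean distance."""
    diff = x - y
    return float(np.exp(-gamma * np.dot(diff, diff)))

def laplacian_kernel(x, y, gamma=0.5):
    """K(x, y) = exp(-gamma * ||x - y||_1), L1 (Manhattan) distance."""
    return float(np.exp(-gamma * np.sum(np.abs(x - y))))

# Hypothetical input vectors.
x = np.array([1.0, 2.0])
y = np.array([3.0, 0.0])

for kernel in (linear_kernel, polynomial_kernel, gaussian_kernel, laplacian_kernel):
    print(kernel.__name__, kernel(x, y))
```

For these two vectors the dot product is 3, the squared Euclidean distance is 8, and the Manhattan distance is 4, so each result can be checked by hand against its formula.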

- 12. Support Vector Machine Algorithm
  - Support Vector Machine (SVM) is one of the most popular supervised learning algorithms. It is used for both classification and regression problems, but primarily for classification.
  - The goal of the SVM algorithm is to find the best line or decision boundary that separates n-dimensional space into classes, so that new data points can be placed in the correct category. This best decision boundary is called a hyperplane.


- 14. On the basis of the support vectors, the model classifies the new example as a cat. [Diagram not reproduced]

  The SVM algorithm can be used for face detection, image classification, text categorization, etc.

- 15. Types of SVM
  - Linear SVM: Linear SVM is used for linearly separable data. If a dataset can be separated into two classes by a single straight line, the data is called linearly separable, and the classifier used is called a linear SVM classifier.
  - Non-linear SVM: Non-linear SVM is used for data that is not linearly separable. If a dataset cannot be separated by a straight line, the data is called non-linear, and the classifier used is called a non-linear SVM classifier.

- 16. Hyperplane and Support Vectors in the SVM algorithm
  - Hyperplane: There can be multiple lines or decision boundaries that separate the classes in n-dimensional space, but we need to find the best decision boundary for classifying the data points: the one with the largest margin between the classes. This best boundary is known as the hyperplane of the SVM.

- 17. Support Vectors
  - The data points or vectors that are closest to the hyperplane, and that affect its position, are called support vectors. They are so named because they support the hyperplane.
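
The relationship between a hyperplane, its margin, and the support vectors can be sketched numerically. The example below does not train an SVM: the hyperplane `w`, `b` is assumed rather than learned, and the data points, labels, and function name are hypothetical. It only shows how the closest points to a given hyperplane are identified.

```python
import numpy as np

# Hypothetical 2-D points with labels +1 / -1, for illustration only.
X = np.array([[2.0, 2.0], [3.0, 3.0], [4.0, 2.0],
              [0.0, 0.0], [-1.0, 0.0], [0.0, -1.0]])
y = np.array([1, 1, 1, -1, -1, -1])

# Assumed separating hyperplane w . x + b = 0 (given, not learned here).
w = np.array([1.0, 1.0])
b = -2.0

def signed_distance(X, w, b):
    """Signed Euclidean distance of each row of X from the hyperplane."""
    return (X @ w + b) / np.linalg.norm(w)

# Multiplying by the label makes the distance positive on the correct side.
dist = y * signed_distance(X, w, b)

margin = dist.min()                                      # distance to the closest point
support_idx = np.flatnonzero(np.isclose(dist, margin))   # points that sit on the margin

print("margin:", margin)
print("support vectors:", X[support_idx])
```

Moving any point other than the ones in `support_idx` slightly would leave the margin unchanged, which is the sense in which only the support vectors determine the hyperplane.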

- 18. What is Ensemble learning in Machine Learning?
  - Ensemble learning is a widely used machine learning technique that combines the output of two or more models (often weak learners) to solve a particular problem. For example, a Random Forest is an ensemble of decision trees.
  - "An ensemble model is a machine learning model that combines the predictions from two or more models."

- 19. The three most common ensemble learning methods in machine learning are:
  - Bagging
  - Boosting
  - Stacking

- 20. 1. Bagging
  - Bagging is an ensemble modeling method primarily used to solve supervised machine learning problems. It is generally completed in two steps:
  - Bootstrapping: A random sampling method that draws samples from the data with replacement. A base learning algorithm is then trained on each of these random samples.
  - Aggregation: The outputs of all base models are combined to produce an aggregate prediction with greater accuracy and reduced variance.
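
The two bagging steps can be sketched with a very simple base learner. This is an illustration under stated assumptions, not a reference implementation: the 1-D data, the 10% label noise, the threshold "stump" used as base model, the number of models, and all function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 1-D data: the true label is 1 when x > 5, with 10% label noise.
X = rng.uniform(0.0, 10.0, size=200)
y = (X > 5).astype(int)
flip = rng.random(200) < 0.1
y_noisy = np.where(flip, 1 - y, y)

def fit_stump(X, y):
    """Base learner: pick the threshold t minimising training error of 'x > t'."""
    candidates = np.sort(X)
    errors = [np.mean((X > t).astype(int) != y) for t in candidates]
    return candidates[int(np.argmin(errors))]

def bagging_fit(X, y, n_models=25):
    """Bootstrapping: train one stump per sample drawn with replacement."""
    n = len(X)
    thresholds = []
    for _ in range(n_models):
        idx = rng.integers(0, n, size=n)   # indices sampled with replacement
        thresholds.append(fit_stump(X[idx], y[idx]))
    return np.array(thresholds)

def bagging_predict(thresholds, X_new):
    """Aggregation: majority vote over all stumps."""
    votes = (np.asarray(X_new, dtype=float)[:, None] > thresholds[None, :]).astype(int)
    return (votes.mean(axis=1) > 0.5).astype(int)

thresholds = bagging_fit(X, y_noisy)
pred = bagging_predict(thresholds, [1.0, 9.0])
print(thresholds.round(2))
print(pred)
```

Each stump sees a slightly different sample and so learns a slightly different threshold; the vote averages out that variation.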

- 21. 2. Boosting
  - Boosting is an ensemble method in which each member learns from the mistakes of the preceding member in order to make better predictions. Unlike bagging, boosting arranges the weak base learners sequentially, so that each one can correct the errors of its predecessor. In this way, the weak learners are combined into a strong learner with significantly improved predictive performance.

- 22. 3. Stacking
  - Stacking is a popular ensemble modeling technique in machine learning. Several base learners are trained in parallel, and a meta-learner combines their outputs to produce better predictions.

- 23. Architecture of Stacking

- 24. Architecture of Stacking (continued)
  - The architecture of a stacking model consists of two or more base (learner) models and a meta-model that combines the predictions of the base models. The base models are called level 0 models, and the meta-model is known as the level 1 model. The stacking ensemble method therefore includes the original (training) data, primary-level models, primary-level predictions, a secondary-level model, and the final prediction.
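
The level 0 / level 1 structure can be sketched with least-squares models in NumPy. Everything specific here is an assumption for illustration: the synthetic regression data, the choice of two deliberately limited base models (one sees only x, the other only x^2), the simple hold-out split used to train the meta-model, and the function names.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical regression data: y = 2x + 0.5x^2 plus small noise.
x = rng.uniform(-3.0, 3.0, size=300)
y = 2.0 * x + 0.5 * x**2 + rng.normal(0.0, 0.1, size=300)

# First half trains the level 0 models; second half trains the level 1 model.
x_base, y_base = x[:150], y[:150]
x_meta, y_meta = x[150:], y[150:]

def fit_on_feature(feature, x_train, y_train):
    """Least-squares line fitted on feature(x); returns a predictor function."""
    slope, intercept = np.polyfit(feature(x_train), y_train, 1)
    return lambda x_new: slope * feature(x_new) + intercept

# Level 0 (base) models.
base_models = [
    fit_on_feature(lambda v: v, x_base, y_base),      # uses only x
    fit_on_feature(lambda v: v**2, x_base, y_base),   # uses only x^2
]

def level0_predictions(x_new):
    """Primary-level predictions: one column per base model."""
    x_new = np.asarray(x_new, dtype=float)
    return np.column_stack([m(x_new) for m in base_models])

def with_intercept(P):
    return np.column_stack([P, np.ones(len(P))])

# Level 1 (meta) model: linear combination of the base predictions.
meta_weights, *_ = np.linalg.lstsq(
    with_intercept(level0_predictions(x_meta)), y_meta, rcond=None)

def stacked_predict(x_new):
    """Final prediction: meta-model applied to the base predictions."""
    return with_intercept(level0_predictions(x_new)) @ meta_weights

def mse(pred, target):
    return float(np.mean((pred - target) ** 2))

base_mse = [mse(m(x_meta), y_meta) for m in base_models]
stacked_mse = mse(stacked_predict(x_meta), y_meta)
print(base_mse, stacked_mse)
```

The meta-model is trained on data the base models did not see, which is a simplified stand-in for the out-of-fold predictions commonly used in stacking.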

- 25. Ada Boost algorithm in Machine Learning
  - AdaBoost is a boosting algorithm introduced by Yoav Freund and Robert Schapire in 1996. It belongs to a class of ensemble learning methods that aim to improve the overall performance of machine learning models by combining the outputs of multiple weaker models, known as weak learners or base learners. The fundamental idea behind AdaBoost is to give greater weight to the training instances that are misclassified by the current model, thereby focusing on the samples that are hard to classify.
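
The re-weighting idea can be shown for a single boosting round. This sketch uses the standard AdaBoost update for labels in {-1, +1}; the five labels, the weak learner's predictions, and the function name are hypothetical, and the code assumes the weighted error is strictly between 0 and 1.

```python
import numpy as np

def adaboost_round(weights, y_true, y_pred):
    """One AdaBoost round: return updated sample weights and the learner weight alpha.

    Assumes labels in {-1, +1} and a weighted error strictly between 0 and 1.
    """
    miss = y_true != y_pred
    err = np.sum(weights[miss])                      # weighted error of the weak learner
    alpha = 0.5 * np.log((1.0 - err) / err)          # learner's say in the final vote
    # Misclassified points are scaled up by exp(alpha), correct ones down by exp(-alpha).
    new_weights = weights * np.exp(np.where(miss, alpha, -alpha))
    return new_weights / new_weights.sum(), alpha    # renormalise to sum to 1

# Hypothetical labels and weak-learner predictions with one mistake (index 1).
y_true = np.array([1, 1, -1, -1, 1])
y_pred = np.array([1, -1, -1, -1, 1])
w0 = np.full(5, 0.2)                                 # uniform initial weights

w1, alpha = adaboost_round(w0, y_true, y_pred)
print(w1, alpha)
```

After this round the single misclassified point carries half of the total weight, so the next weak learner is pushed to classify it correctly.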

- 26. Advantages of AdaBoost
  - 1. Improved Accuracy: AdaBoost can notably improve the accuracy of weak learners, even when simple models are used. By focusing on misclassified instances, it adapts to the difficult regions of the data distribution.
  - 2. Versatility: AdaBoost can be used with a variety of base learners, making it a flexible algorithm that can be applied to different types of problems.
  - 3. Feature Selection: It automatically selects the most informative features, reducing the need for extensive feature engineering.
  - 4. Resistance to Overfitting: AdaBoost tends to be less prone to overfitting than some other ensemble methods, thanks to its focus on misclassified instances.

- 27. Cross-Validation in Machine Learning

  Cross-validation is a technique for validating model performance by training the model on a subset of the input data and testing it on a previously unseen subset. In other words, it checks how well a statistical model generalizes to an independent dataset.

  The basic steps of cross-validation are:
  - Reserve a subset of the dataset as a validation set.
  - Train the model using the training dataset.
  - Evaluate model performance using the validation set. If the model performs well on the validation set, proceed to the next step; otherwise, check for issues.

- 28. Methods used for Cross-Validation
  - Validation Set Approach
  - Leave-P-out cross-validation
  - Leave-one-out cross-validation
  - K-fold cross-validation
  - Stratified k-fold cross-validation
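
K-fold cross-validation repeats the reserve, train, and evaluate steps so that every sample is used for validation exactly once. The sketch below is a plain-Python illustration: the function name, the toy target values, and the trivial "predict the training mean" model are assumptions, and the data is not shuffled, which is usually done first in practice.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size

# Hypothetical target values; the "model" simply predicts the training mean.
y = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]

scores = []
for train, val in k_fold_indices(len(y), 3):
    mean = sum(y[i] for i in train) / len(train)              # train on the training folds
    mse = sum((y[i] - mean) ** 2 for i in val) / len(val)     # evaluate on the held-out fold
    scores.append(mse)

cv_score = sum(scores) / len(scores)   # average validation error over the folds
print(scores, cv_score)
```

Setting `k` equal to the number of samples turns this into leave-one-out cross-validation.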

- 29. What is Dimensionality Reduction?
  - The number of input features, variables, or columns present in a dataset is known as its dimensionality, and the process of reducing these features is called dimensionality reduction.
  - Dimensionality reduction can be defined as "a way of converting a higher-dimensional dataset into a lower-dimensional dataset while ensuring that it provides similar information." These techniques are widely used in machine learning to obtain a better-fitting predictive model for classification and regression problems.


- 31. Feature Selection Techniques in Machine Learning

- 32. Need for Feature Selection
  - It helps in avoiding the curse of dimensionality.
  - It simplifies the model so that it can be more easily interpreted by researchers.
  - It reduces the training time.
  - It reduces overfitting and hence improves generalization.


- 34. Principal Component Analysis (PCA)
  - Principal Component Analysis is an unsupervised learning algorithm used for dimensionality reduction in machine learning. It is a statistical procedure that converts observations of correlated features into a set of linearly uncorrelated features by means of an orthogonal transformation. These new transformed features are called the principal components, and they are ordered by the amount of variance they capture.
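
The orthogonal transformation can be sketched through an eigendecomposition of the covariance matrix, which is one common way to compute PCA. The synthetic two-feature data and the function name are assumptions for illustration.

```python
import numpy as np

def pca(X, n_components):
    """PCA via eigendecomposition of the covariance matrix.

    Returns the projected data, the component directions (as columns),
    and the fraction of total variance explained by each kept component.
    """
    X_centered = X - X.mean(axis=0)
    cov = np.cov(X_centered, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)        # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]             # reorder: largest variance first
    keep = order[:n_components]
    components = eigvecs[:, keep]
    explained = eigvals[keep] / eigvals.sum()
    return X_centered @ components, components, explained

# Hypothetical data: two strongly correlated features.
rng = np.random.default_rng(0)
t = rng.normal(size=200)
X = np.column_stack([t, 2.0 * t + rng.normal(scale=0.1, size=200)])

scores, components, explained = pca(X, n_components=2)
print(explained)
print(np.cov(scores, rowvar=False).round(6))      # off-diagonal entries are ~0
```

The covariance matrix of the projected data is diagonal, which is what "linearly uncorrelated" means here, and almost all of the variance falls on the first component because the two original features are nearly redundant.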

- 35. Multidimensional Scaling (MDS)
  - Multidimensional scaling (MDS) is a dimensionality reduction technique used to project high-dimensional data onto a lower-dimensional space while preserving the pairwise distances between the data points as much as possible. MDS is based on the concept of distance and aims to find a projection of the data that minimizes the differences between the distances in the original space and the distances in the lower-dimensional space.

- 36. Features of Multidimensional Scaling (MDS)
  - MDS can be applied to a wide range of data types, including numerical, categorical, and mixed data, as long as a pairwise distance or dissimilarity can be defined between data points.
  - MDS is often implemented using numerical optimization algorithms, such as gradient descent or simulated annealing, to minimize the difference between the distances in the original and lower-dimensional spaces.
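
As a small illustration of distance preservation, the sketch below uses classical (Torgerson) MDS, which has a closed-form solution through an eigendecomposition. Note that this is a different variant from the iterative optimization described above, chosen here only because it is short. The sample points and function names are hypothetical.

```python
import numpy as np

def pairwise_distances(X):
    """Euclidean distance matrix between all rows of X."""
    diff = X[:, None, :] - X[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

def classical_mds(D, n_components=2):
    """Classical MDS: embed points so that Euclidean distances approximate D."""
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n           # centering matrix
    B = -0.5 * J @ (D ** 2) @ J                   # double-centered squared distances
    eigvals, eigvecs = np.linalg.eigh(B)
    order = np.argsort(eigvals)[::-1][:n_components]
    scale = np.sqrt(np.clip(eigvals[order], 0.0, None))   # guard against round-off negatives
    return eigvecs[:, order] * scale

# Hypothetical 3-D points that all lie on the plane z = x + y.
X = np.array([[0.0, 0.0, 0.0],
              [1.0, 0.0, 1.0],
              [0.0, 1.0, 1.0],
              [1.0, 1.0, 2.0],
              [2.0, 1.0, 3.0]])

D = pairwise_distances(X)
Y = classical_mds(D, n_components=2)              # 2-D embedding

print(np.abs(pairwise_distances(Y) - D).max())    # distance distortion
```

Because these points happen to lie on a plane, two dimensions are enough to reproduce every pairwise distance; for general data the embedding only approximates them.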

- 37. What is Linear Discriminant Analysis (LDA)?
  - Linear Discriminant Analysis is one of the most popular dimensionality reduction techniques for supervised classification problems in machine learning. It is also used as a pre-processing step in machine learning and pattern classification applications, because it models the differences between classes.
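
For two classes, the direction that LDA projects onto can be sketched with Fisher's linear discriminant, which solves for w in S_w w = m1 - m0 (S_w is the within-class scatter matrix, m0 and m1 the class means). The synthetic Gaussian data and the function name are assumptions for illustration.

```python
import numpy as np

def fisher_lda_direction(X0, X1):
    """Two-class Fisher discriminant: unit vector w proportional to S_w^{-1} (m1 - m0)."""
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    # Within-class scatter: summed scatter of each class around its own mean.
    Sw = (X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)
    w = np.linalg.solve(Sw, m1 - m0)
    return w / np.linalg.norm(w)

# Hypothetical data: two correlated Gaussian classes with different means.
rng = np.random.default_rng(0)
cov = [[1.0, 0.8], [0.8, 1.0]]
X0 = rng.multivariate_normal([0.0, 0.0], cov, size=100)
X1 = rng.multivariate_normal([2.0, 0.0], cov, size=100)

w = fisher_lda_direction(X0, X1)

# Project both classes onto the 1-D discriminant axis.
z0, z1 = X0 @ w, X1 @ w
print(w)
print(z0.mean(), z1.mean())
```

Unlike PCA, this projection uses the class labels: it reduces two features to one while keeping the classes as separated as possible along the new axis.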

- 38. Applications of Linear Discriminant Analysis (LDA)

  Some of the common real-world applications of Linear Discriminant Analysis are given below:
  - Face recognition
  - Medical
  - Customer identification
  - Predictions
  - Learning

- 39. Unit-5 (MCQs)
  - Question 1: What is the primary objective of Support Vector Machines (SVM)?
    - A) Dimensionality reduction
    - B) Model complexity minimization
    - C) Maximize margin between classes
    - D) Regression accuracy improvement
    - Correct answer: C
  - Question 2: Which ensemble method emphasizes reducing variance by training models on different subsets of data?
    - A) Stacking
    - B) Boosting
    - C) Bagging
    - D) Mixture Models
    - Correct answer: C

- 40. Unit-5 (MCQs)
  - Question 3: In AdaBoost, what happens to the weights of incorrectly classified data points in subsequent iterations?
    - A) They are increased
    - B) They are decreased
    - C) They remain unchanged
    - D) They are set to zero
    - Correct answer: A
  - Question 4: What does the kernel trick in SVM allow?
    - A) Mapping data to a higher-dimensional space
    - B) Reducing computational complexity
    - C) Enhancing visualization
    - D) Improving model accuracy
    - Correct answer: A

- 41. Unit-5 (MCQs)
  - Question 5: What is the primary goal of Dimensionality Reduction techniques?
    - A) Increase model complexity
    - B) Improve model interpretability
    - C) Reduce number of features
    - D) Enhance model training time
    - Correct answer: C
  - Question 6: Which technique in Dimensionality Reduction creates a linear combination of features to maximize class separability?
    - A) Principal Components Analysis (PCA)
    - B) Multidimensional Scaling (MDS)
    - C) Linear Discriminant Analysis (LDA)
    - D) Subset Selection
    - Correct answer: C

- 42. Unit-5 (MCQs)
  - Question 7: How does Bagging differ from Boosting?
    - A) Bagging uses weighted data points
    - B) Boosting trains models sequentially
    - C) Bagging focuses on reducing model complexity
    - D) Boosting combines multiple models
    - Correct answer: B
  - Question 8: What is the main advantage of using Ensemble Methods?
    - A) Lower computational complexity
    - B) Higher model bias
    - C) Improved model generalization
    - D) Reduced model variance
    - Correct answer: C

- 43. Unit-5 (MCQs)
  - Question 9: Which Ensemble Method uses a weighted average of predictions from multiple models?
    - A) Stacking
    - B) Boosting
    - C) Bagging
    - D) Mixture Models
    - Correct answer: B
  - Question 10: What does AdaBoost aim to improve in each iteration?
    - A) Model bias
    - B) Model complexity
    - C) Model performance on misclassified data
    - D) Model interpretability
    - Correct answer: C

- 44. Unit-5 (MCQs)
  - Question 11: How does the Soft Margin Hyperplane handle non-separable data in SVM?
    - A) By ignoring misclassified points
    - B) By penalizing misclassified points
    - C) By reducing the number of support vectors
    - D) By increasing the margin width
    - Correct answer: B
  - Question 12: What is a potential drawback of using AdaBoost in practice?
    - A) Sensitivity to noisy data
    - B) Difficulty in training large datasets
    - C) Overfitting
    - D) Slow convergence
    - Correct answer: A

- 45. Unit-5 (MCQs)
  - Question 13: Which technique in Dimensionality Reduction aims to find the best linear combination of features?
    - A) PCA
    - B) MDS
    - C) LDA
    - D) Subset Selection
    - Correct answer: C
  - Question 14: How does Multidimensional Scaling (MDS) differ from PCA?
    - A) It focuses on variance maximization
    - B) It deals with categorical data
    - C) It visualizes data relationships
    - D) It doesn't involve feature reduction
    - Correct answer: C

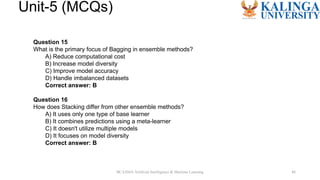

- 46. Unit-5 (MCQs)
  - Question 15: What is the primary focus of Bagging in ensemble methods?
    - A) Reduce computational cost
    - B) Increase model diversity
    - C) Improve model accuracy
    - D) Handle imbalanced datasets
    - Correct answer: B
  - Question 16: How does Stacking differ from other ensemble methods?
    - A) It uses only one type of base learner
    - B) It combines predictions using a meta-learner
    - C) It doesn't utilize multiple models
    - D) It focuses on model diversity
    - Correct answer: B

- 47. Unit-5 (MCQs)
  - Question 17: Which ensemble method is prone to overfitting if not carefully tuned?
    - A) Stacking
    - B) Bagging
    - C) Boosting
    - D) Mixture Models
    - Correct answer: C
  - Question 18: What does PCA prioritize when selecting principal components?
    - A) Features with high variance
    - B) Features with low variance
    - C) All features equally
    - D) Features with high correlation
    - Correct answer: A

- 48. Unit-5 (MCQs)
  - Question 19: When is Linear Discriminant Analysis (LDA) preferred over PCA?
    - A) When class separation is important
    - B) When feature reduction is the goal
    - C) When dimensionality is high
    - D) When data is noisy
    - Correct answer: A
  - Question 20: How does boosting handle misclassified data points in each subsequent model iteration?
    - A) Increases their weights
    - B) Decreases their weights
    - C) Ignores them
    - D) Randomizes their weights
    - Correct answer: A
