Algorithm Using Divide And Conquer

- 2. Introduction: Divide-and-conquer is a top-down technique for designing algorithms. It divides a problem into smaller sub-problems whose solutions should be easier to find, then composes the partial solutions into a solution of the original problem.

- 3. Divide-and-conquer: (1) Divide: Break the problem into sub-problems of the same type. (2) Conquer: Recursively solve these sub-problems. (3) Combine: Combine the solutions of the sub-problems.

- 4. Divide-and-conquer example: assuming that each divide step creates two sub-problems.

- 6. Divide-and-conquer example: a divide step may also split the problem into more than two sub-problems.

- 8. Divide-and-conquer Detail: Divide/Break. This step involves breaking the problem into smaller sub-problems. Each sub-problem should represent a part of the original problem. This step generally takes a recursive approach, dividing the problem until no sub-problem is further divisible. At this stage, sub-problems become atomic in nature but still represent some part of the actual problem.

- 9. Divide-and-conquer Detail: Conquer/Solve. This step receives many smaller sub-problems to be solved. Generally, at this level, the problems are considered 'solved' on their own.

- 10. Divide-and-conquer Detail: Merge/Combine. When the smaller sub-problems are solved, this stage recursively combines them until they form a solution of the original problem. The approach works recursively, and the conquer and merge steps work so closely together that they appear as one.
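The three steps can be seen in a very small example. The sketch below finds the largest value in a list; the function name `dc_max` and the choice of problem are assumptions made for illustration, not taken from the slides.

```python
def dc_max(values, lo=0, hi=None):
    """Return the largest element of values[lo:hi] by divide and conquer."""
    if hi is None:
        hi = len(values)
    if hi - lo <= 0:
        raise ValueError("dc_max() needs at least one element")
    # Atomic sub-problem: a single element is its own maximum.
    if hi - lo == 1:
        return values[lo]
    # Divide: split the range into two halves.
    mid = (lo + hi) // 2
    # Conquer: solve each half recursively.
    left_max = dc_max(values, lo, mid)
    right_max = dc_max(values, mid, hi)
    # Combine: the answer is the larger of the two partial answers.
    return max(left_max, right_max)


print(dc_max([3, 9, 2, 7]))
```

Note how the combine step is a single line executed as the recursion unwinds, which is why conquer and merge can appear as one step.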

- 11. Standard Algorithms based on D & C: The following algorithms are based on the divide-and-conquer algorithm design paradigm: Merge Sort, Quick Sort, Binary Search, Strassen's Matrix Multiplication, Closest Pair (points), and the Cooley–Tukey Fast Fourier Transform (FFT) algorithm.

- 12. Advantages of D & C: (1) Solving difficult problems: Divide and conquer is a powerful tool for solving conceptually difficult problems: all it requires is a way of breaking the problem into sub-problems, of solving the trivial cases, and of combining the sub-problem solutions into a solution of the original problem. (2) Parallelism: Divide-and-conquer algorithms are naturally adapted for execution on multi-processor machines, especially shared-memory systems where the communication of data between processors does not need to be planned in advance, because distinct sub-problems can be executed on different processors.

- 13. Advantages of D & C: (3) Memory access: Divide-and-conquer algorithms naturally tend to make efficient use of memory caches. The reason is that once a sub-problem is small enough, it and all its sub-problems can, in principle, be solved within the cache, without accessing the slower main memory. (4) Roundoff control: In computations with rounded arithmetic, e.g. with floating-point numbers, a divide-and-conquer algorithm may yield more accurate results than a superficially equivalent iterative method.

- 14. Advantages of D & C: Divide and conquer is a powerful tool for solving difficult problems such as the Tower of Hanoi. It results in efficient algorithms. Divide-and-conquer algorithms are adapted for execution on multi-processor machines. It results in algorithms that use the memory cache efficiently.

- 15. Limitations of D & C: Recursion adds function-call overhead and can be slow. For a very simple problem, a divide-and-conquer solution may be more complicated than an iterative approach. Example: adding n numbers.
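The "adding n numbers" limitation can be made concrete by writing both versions side by side. This is a minimal sketch; the function names are hypothetical and chosen only for this illustration.

```python
def sum_iterative(values):
    """Add the numbers with a plain loop: one pass, no recursion."""
    total = 0
    for value in values:
        total += value
    return total


def sum_divide_and_conquer(values, lo=0, hi=None):
    """Add values[lo:hi] by splitting the range in half recursively."""
    if hi is None:
        hi = len(values)
    if hi - lo == 0:
        return 0
    if hi - lo == 1:
        return values[lo]
    mid = (lo + hi) // 2
    # Two recursive calls plus one addition to combine the partial sums.
    return (sum_divide_and_conquer(values, lo, mid)
            + sum_divide_and_conquer(values, mid, hi))


numbers = list(range(1, 101))
print(sum_iterative(numbers), sum_divide_and_conquer(numbers))
```

Both functions perform the same n - 1 additions, but the recursive one also makes roughly 2n function calls and is longer to write, so for this problem the iterative loop is the simpler choice.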

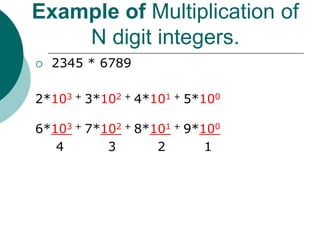

- 16. Example of Multiplication of N-digit integers. Multiplication can be performed using the divide-and-conquer technique. First, recall the decimal positional system: the number 3754 is $3 \times 10^3 + 7 \times 10^2 + 5 \times 10^1 + 4 \times 10^0$.

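- 17. Example of Multiplication of N-digit integers. 2345 * 6789, where 2345 = $2 \times 10^3 + 3 \times 10^2 + 4 \times 10^1 + 5 \times 10^0$ and 6789 = $6 \times 10^3 + 7 \times 10^2 + 8 \times 10^1 + 9 \times 10^0$ (digit positions 4, 3, 2, 1 from left to right).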
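The slides stop at the decimal expansion, so the following is only one possible way to turn it into a divide-and-conquer multiplication, under the assumption that each number is split into a high and a low half: x = a·10^m + b and y = c·10^m + d, so x·y = ac·10^(2m) + (ad + bc)·10^m + bd. The function name `dc_multiply` is hypothetical.

```python
def dc_multiply(x, y):
    """Multiply two non-negative integers by splitting their decimal digits."""
    # Trivial case: a single-digit factor is multiplied directly.
    if x < 10 or y < 10:
        return x * y
    # Divide: split both numbers at half the length of the longer one.
    m = max(len(str(x)), len(str(y))) // 2
    base = 10 ** m
    a, b = divmod(x, base)  # x = a * 10^m + b
    c, d = divmod(y, base)  # y = c * 10^m + d
    # Conquer: four smaller multiplications.
    ac = dc_multiply(a, c)
    ad = dc_multiply(a, d)
    bc = dc_multiply(b, c)
    bd = dc_multiply(b, d)
    # Combine: shift the partial products into place and add them.
    return ac * base * base + (ad + bc) * base + bd


print(dc_multiply(2345, 6789))
```

For 2345 * 6789 the first split gives a = 23, b = 45, c = 67, d = 89. With four sub-products per level this sketch does no less digit work than schoolbook multiplication; it only illustrates the decomposition.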

- 20. Closest-Pair Problem: Divide and Conquer. The brute-force approach requires comparing every point with every other point. Given n points, we must perform $1 + 2 + 3 + \dots + (n-2) + (n-1) = \sum_{k=1}^{n-1} k = \frac{n(n-1)}{2}$ comparisons, so brute force is $O(n^2)$. The divide-and-conquer algorithm yields $O(n \log n)$. Reminder: if n = 1,000,000 then $n^2$ = 1,000,000,000,000 whereas $n \log_2 n \approx$ 20,000,000.
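As a baseline, the brute-force approach can be sketched in a few lines. The function name and the sample points are assumptions for illustration; the counter simply confirms the n(n-1)/2 comparison count.

```python
from itertools import combinations
from math import dist, inf


def closest_pair_brute_force(points):
    """Return (smallest distance, number of comparisons) over all pairs."""
    best = inf
    comparisons = 0
    # combinations() yields every unordered pair exactly once.
    for p, q in combinations(points, 2):
        comparisons += 1
        best = min(best, dist(p, q))
    return best, comparisons


# 14 hypothetical sample points, matching the point count used in the slides.
sample = [(i, i * i) for i in range(14)]
distance, count = closest_pair_brute_force(sample)
print(distance, count)
```

With 14 points the counter reaches 13 * 14 / 2 = 91.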

- 21. Closest-Pair Algorithm Given: A set of points in 2-D

- 22. Closest-Pair Algorithm Step 1: Sort the points in one dimension.

- 23. Closest-Pair Algorithm: Let's sort on the x-axis, which takes O(n log n) using quicksort (on average) or mergesort. [Figure: 14 points, labelled 1 to 14 in x order]

- 24. Closest-Pair Algorithm Step 2: Split the points, i.e., draw a line at the mid-point between points 7 and 8. [Figure: points 1 to 7 form Sub-Problem 1, points 8 to 14 form Sub-Problem 2]

- 25. Closest-Pair Algorithm Advantage: Normally, we'd have to compare each of the 14 points with every other point: (n-1)n/2 = 13*14/2 = 91 comparisons.

- 26. Closest-Pair Algorithm Advantage: Now we have two sub-problems of half the size. Thus, we have to do 6*7/2 = 21 comparisons twice, which is 42 comparisons. If d1 and d2 are the closest distances found in Sub-Problem 1 and Sub-Problem 2, the candidate solution is d = min(d1, d2).

- 27. Closest-Pair Algorithm Advantage: With just one split we cut the number of comparisons by more than half. We gain an even greater advantage if we split the sub-problems again, with d = min(d1, d2) at each level.

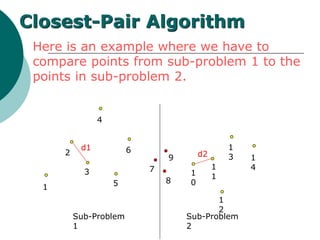

- 28. Closest-Pair Algorithm Problem: However, what if the two closest points come from different sub-problems?

- 29. Closest-Pair Algorithm: Here is an example where we have to compare points from Sub-Problem 1 with points in Sub-Problem 2.

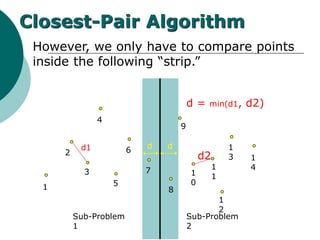

- 30. Closest-Pair Algorithm: However, we only have to compare points inside the following "strip", which extends a distance d on each side of the dividing line, where d = min(d1, d2).

- 31. Closest-Pair Algorithm Step 3: In fact, we can continue to split until each sub-problem is trivial, i.e., takes one comparison.

- 32. Closest-Pair Algorithm Finally: The solutions to the sub-problems are combined until the final solution is obtained.

- 33. Closest-Pair Algorithm Finally: On the last step the "strip" will likely be very small. Thus, combining the two largest sub-problems won't require much work.

- 34. Closest-Pair Algorithm: In this example, it takes 22 comparisons to find the closest pair. The brute-force algorithm would have taken 91 comparisons. But the real advantage occurs when there are millions of points.

- 35. Closest-Pair Algo

          float DELTA-LEFT, DELTA-RIGHT;
          float DELTA;
          if n = 2 then
              return distance from p(1) to p(2);
          else
              P-LEFT <- (p(1), p(2), ..., p(n/2));
              P-RIGHT <- (p(n/2 + 1), p(n/2 + 2), ..., p(n));
              DELTA-LEFT <- Closest_pair(P-LEFT, n/2);
              DELTA-RIGHT <- Closest_pair(P-RIGHT, n/2);
              DELTA <- minimum(DELTA-LEFT, DELTA-RIGHT);

- 36. Closest-Pair Algo (continued, over the s points q[1..s] in the strip)

          for i in 1..s do
              for j in i+1..s do
                  if (|x[i] - x[j]| > DELTA and |y[i] - y[j]| > DELTA) then
                      exit;
                  end
                  if (distance(q[i], q[j]) < DELTA) then
                      DELTA <- distance(q[i], q[j]);
                  end
              end
          end
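The two pseudocode fragments above can be combined into a runnable sketch. It is not a line-by-line translation: it assumes a base case of up to three points (so odd-sized halves are handled), sorts the strip by y at every level, and leaves the inner loop as soon as the y gap reaches the current best distance. All function names are hypothetical.

```python
from math import dist, inf


def closest_pair(points):
    """Return the smallest distance between any two 2-D points."""
    return _closest(sorted(points))  # Step 1: sort by x (ties broken by y)


def _closest(pts):
    n = len(pts)
    # Trivial case (assumed here): up to three points are compared directly.
    if n <= 3:
        return min((dist(pts[i], pts[j])
                    for i in range(n) for j in range(i + 1, n)), default=inf)
    # Step 2: split at the middle of the x-sorted list.
    mid = n // 2
    mid_x = pts[mid][0]
    delta = min(_closest(pts[:mid]), _closest(pts[mid:]))
    # Only points closer than delta to the dividing line can form a better
    # pair across the two halves; sort them by y to allow an early exit.
    strip = sorted((p for p in pts if abs(p[0] - mid_x) < delta),
                   key=lambda p: p[1])
    for i in range(len(strip)):
        for j in range(i + 1, len(strip)):
            if strip[j][1] - strip[i][1] >= delta:
                break  # every later point is even further away in y
            delta = min(delta, dist(strip[i], strip[j]))
    return delta


print(closest_pair([(0, 0), (5, 5), (1, 0), (9, 9)]))
```

Because the strip is re-sorted at every level, this sketch runs in O(n log² n) rather than the O(n log n) quoted in the slides; reaching that bound requires carrying a y-sorted list through the recursion instead of sorting again.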

- 37. Merge Sort

- 38. Merge Sort Algo (merge step)

          array a[], b[];
          integer h, i, j, k;
          h <- low;
          i <- low;
          j <- mid + 1;
          while (h <= mid and j <= high) do
              if (a[h] <= a[j]) then
                  b[i] <- a[h];
                  h <- h + 1;

- 39. Merge Sort Algo (merge step, continued)

              else
                  b[i] <- a[j];
                  j <- j + 1;
              end
              i <- i + 1;
          end
          if (h > mid) then
              for (k <- j to high) do
                  b[i] <- a[k];
                  i <- i + 1;
              end

- 40. Merge Sort Algo (merge step, continued)

          else
              for (k <- h to mid) do
                  b[i] <- a[k];
                  i <- i + 1;
              end
          end
          for (k <- low to high) do
              a[k] <- b[k];
          end
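The pseudocode on the three slides above merges two sorted runs, a[low..mid] and a[mid+1..high]. A minimal Python sketch of that merge, together with the recursive driver that the slides do not show (an assumption added here to make the example complete), could look like this:

```python
def merge(a, low, mid, high):
    """Merge the sorted runs a[low..mid] and a[mid+1..high] in place."""
    b = []                      # temporary buffer, as array b[] in the slides
    h, j = low, mid + 1
    while h <= mid and j <= high:
        if a[h] <= a[j]:        # <= keeps equal elements in their original order
            b.append(a[h])
            h += 1
        else:
            b.append(a[j])
            j += 1
    # At most one of the two runs still has elements; copy them over.
    b.extend(a[h:mid + 1])
    b.extend(a[j:high + 1])
    a[low:high + 1] = b         # copy the merged result back into a


def merge_sort(a, low=0, high=None):
    """Sort list a in place between indices low and high (inclusive)."""
    if high is None:
        high = len(a) - 1
    if low < high:
        mid = (low + high) // 2
        merge_sort(a, low, mid)         # conquer the left half
        merge_sort(a, mid + 1, high)    # conquer the right half
        merge(a, low, mid, high)        # combine


data = [5, 2, 9, 1, 5, 6]
merge_sort(data)
print(data)
```

The two `extend` calls replace the `if (h > mid) ... else ...` branches of the pseudocode: whichever run is already exhausted contributes an empty slice.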

- 41. Timing Analysis: The running time of a D&C algorithm is mainly affected by three factors: (1) the number of sub-instances (α) into which a problem is split; (2) the ratio of the initial problem size to the sub-problem size (β); (3) the number of steps required to divide the initial instance and to combine the sub-solutions, expressed as a function γ(n) of the input size n.

- 42. Timing Analysis: Let P be a D&C algorithm that splits an instance into α sub-instances, each of size n/β. Let $T_P(n)$ denote the number of steps taken by P on instances of size n. Then $T_P(n_0) = \text{constant}$ (recursive base) and $T_P(n) = \alpha\,T_P(n/\beta) + \gamma(n)$, where α is the number of sub-instances, β is the ratio of problem size to sub-problem size, and γ(n) is the number of steps needed to divide and combine.
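As a worked instance of this recurrence, take merge sort as an assumed example: it splits an instance into α = 2 sub-instances of size n/2 (β = 2), and merging costs about cn steps. The constant c, the base size n₀ = 1, and the restriction to n = 2^k are assumptions made to keep the unrolling simple.

```latex
\begin{aligned}
T(n) &= 2\,T(n/2) + cn \\
     &= 4\,T(n/4) + 2cn \\
     &= \dots \\
     &= 2^{k}\,T(n/2^{k}) + k\,cn \\
     &= n\,T(1) + cn\log_2 n \qquad (k = \log_2 n) \\
     &= O(n \log n)
\end{aligned}
```

Each level of the recursion contributes cn steps in total, and there are log₂ n levels, which is where the n log n bound comes from.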
