Ad

Apache HBase 1.0 Release

- 1. Apache HBase 1.0 Release Nick Dimiduk, Hortonworks @xefyr n10k.com February 20, 2015

- 2. Release 1.0 “The theme of (eventual) 1.0 release is to become a stable base for future 1.x series of releases. 1.0 release will aim to achieve at least the same level of stability of 0.98 releases without introducing too many new features.” Enis Söztutar HBase 1.0 Release Manager

- 3. Agenda • A Brief History of HBase • What is HBase • Major Changes for 1.0 • Upgrade Path

- 4. A BRIEF HISTORY OF HBASE How we got here

- 5. The Early Years • 2006: BigTable paper published by Google • 2006: HBase development starts • 2007: HBase added Hadoop contrib • 2007: Release Hadoop 0.15.0 • 2008: Hadoop graduates Incubator • 2008: HBase becomes Hadoop sub-‐project • 2008: Release HBase 0.18.1 • 2009: Release HBase 0.19.0 • 2009: Release HBase 0.20.0

- 6. Into Produc_on • 2010: HBase becomes Apache top-‐level project • 2011: Release HBase 0.90.0 • 2011: Release HBase 0.92.0 • 2011: HBase: The Defini1ve Guide published • 2012: Release HBase 0.94.0 • 2012: First HBaseCon • 2012: HBase Administra1on Cookbook published • 2012: HBase In Ac1on published

- 7. Modern HBase • 2013: HBaseCon 2013 • 2013: Release HBase 0.96.0 • 2013: Apache Phoenix enters Incubator • 2014: Release HBase 0.98.0 • 2014: HBaseCon 2014 • 2014: Apache Phoenix graduates Incubator • 2015: Release HBase 1.0 … • 2016: Release HBase 2.0?

- 8. WHAT IS HBASE HBase architecture in 5 minutes or less

- 9. Data Model 1368387247 [3.6 kb png data]"thumb"cf2b a cf1 1368394583 7 1368394261 "hello" "bar" 1368394583 22 1368394925 13.6 1368393847 "world" "foo" cf2 1368387684 "almost the loneliest number"1.0001 1368396302 "fourth of July""2011-07-04" Table A rowkey column family column qualifier timestamp value Rows Column Families

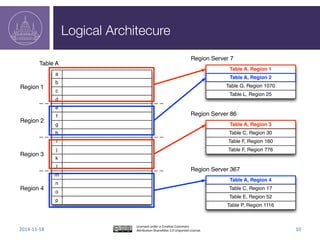

- 10. Logical Architecture a b d c e f h g i j l k m n p o Table A Region 1 Region 2 Region 3 Region 4 Region Server 7 Table A, Region 1 Table A, Region 2 Table G, Region 1070 Table L, Region 25 Region Server 86 Table A, Region 3 Table C, Region 30 Table F, Region 160 Table F, Region 776 Region Server 367 Table A, Region 4 Table C, Region 17 Table E, Region 52 Table P, Region 1116

- 11. Physical Architecture system and can therefore host any region (figure 3.8). By physically collocating Data Nodes and RegionServers, you can use the data locality property; that is, RegionServ ers can theoretically read and write to the local DataNode as the primary DataNode. You may wonder where the TaskTrackers are in this scheme of things. In some HBase deployments, the MapReduce framework isn’t deployed at all if the workload i primarily random reads and writes. In other deployments, where the MapReduce pro cessing is also a part of the workloads, TaskTrackers, DataNodes, and HBase Region Servers can run together. DataNode RegionServer DataNode RegionServer DataNode RegionServer Figure 3.7 HBase RegionServer and HDFS DataNode processes are typically collocated on the same host system and can therefore host any region (figure 3.8) Nodes and RegionServers, you can use the data locali ers can theoretically read and write to the local DataN You may wonder where the TaskTrackers are in t HBase deployments, the MapReduce framework isn’t d primarily random reads and writes. In other deployme cessing is also a part of the workloads, TaskTrackers, Servers can run together. DataNode RegionServer DataNode RegionServer Figure 3.7 HBase RegionServer and HDFS DataNode processes are system and can therefore host any region (figure 3.8). By physically colloca Nodes and RegionServers, you can use the data locality property; that is, R ers can theoretically read and write to the local DataNode as the primary D You may wonder where the TaskTrackers are in this scheme of thing HBase deployments, the MapReduce framework isn’t deployed at all if the w primarily random reads and writes. In other deployments, where the MapR cessing is also a part of the workloads, TaskTrackers, DataNodes, and HBa Servers can run together. DataNode RegionServer DataNode RegionServer DataNode Reg Figure 3.7 HBase RegionServer and HDFS DataNode processes are typically collocated on th system and can therefore host any region (figure 3.8). By physica Nodes and RegionServers, you can use the data locality property ers can theoretically read and write to the local DataNode as the p You may wonder where the TaskTrackers are in this scheme HBase deployments, the MapReduce framework isn’t deployed at primarily random reads and writes. In other deployments, where cessing is also a part of the workloads, TaskTrackers, DataNodes Servers can run together. DataNode RegionServer DataNode RegionServer Dat Figure 3.7 HBase RegionServer and HDFS DataNode processes are typically col Region Server Data Node Region Server Data Node Region Server Data Node Region Server Data Node ... Nodes and RegionServers, you can use th ers can theoretically read and write to the You may wonder where the TaskTrac HBase deployments, the MapReduce fram primarily random reads and writes. In oth cessing is also a part of the workloads, T Servers can run together. DataNode RegionServer DataNode Figure 3.7 HBase RegionServer and HDFS DataNo Master Zoo Keeper Given that the underlying data is stored in HDFS, which is available to all clients as a single namespace, all RegionServers have access to the same persisted files in the file system and can therefore host any region (figure 3.8). By physically collocating Data- Nodes and RegionServers, you can use the data locality property; that is, RegionServ- ers can theoretically read and write to the local DataNode as the primary DataNode. You may wonder where the TaskTrackers are in this scheme of things. In some HBase deployments, the MapReduce framework isn’t deployed at all if the workload is primarily random reads and writes. In other deployments, where the MapReduce pro- cessing is also a part of the workloads, TaskTrackers, DataNodes, and HBase Region- Servers can run together. DataNode RegionServer DataNode RegionServer DataNode RegionServer Name Node cessing is also a part of the workloads, TaskTrackers, DataNode Servers can run together. DataNode RegionServer DataNode RegionServer Da Figure 3.7 HBase RegionServer and HDFS DataNode processes are typically co Licensed to Nick Dimiduk <[email protected]> HBase Client HDFS HBase

- 12. MAJOR CHANGES FOR 1.0 What’s all the excitement about?

- 13. Stability: Co-‐Locate Meta with Master • Simplify, Improve region assignment reliability – Fewer components involved in upda_ng “truth” • Master embeds a RegionServer – Will host only system tables – Baby step towards combining RS/Master into a single hbase daemon • Backup masters unchanged – Can be configured to host user tables while in standby • Plumbing is all there, off by default hip://issues.apache.org/jira/browse/HBASE-‐10569

- 14. Availability: Region Replicas • Mul_ple RegionServers host a Region – One is “primary”, others are “replicas” – Only primary accepts writes • Client reads against primary only or any – Results marked as appropriate • Baby step toward quorum reads, writes hip://issues.apache.org/jira/browse/HBASE-‐10070 hip://www.slideshare.net/HBaseCon/features-‐session-‐1

- 15. Usability: Client API Cleanup • Improved self-‐consistency • Simpler seman_cs • Easier to maintain • Obvious @InterfaceAudience annota_ons hip://issues.apache.org/jira/browse/HBASE-‐10602 hip://s.apache.org/hbase-‐1.0-‐api hips://github.com/ndimiduk/hbase-‐1.0-‐api-‐examples

- 16. New and Noteworthy • Greatly expanded hbase.apache.org/book.html • Truncate table shell command • Automa_c tuning of global MemStore and BlockCache sizes • Basic backpressure mechanism • BucketCache easier to configure • Compressed BlockCache • Pluggable replica_on endpoint • A Dockerfile to easily run HBase from source

- 17. Under the Covers • ZooKeeper abstrac_ons • Meta table used for assignment • Cell-‐based read/write path • Combining mvcc/seqid • Sundry security, tags, labels improvements

- 18. Groundwork for 2.0 • More, Smaller Regions – Millions, 1G or less – Less write amplifica_on – Splinng hbase:meta • Performance – More off-‐heap – Less resource conten_on – Faster region failover/recovery – Mul_ple WALs – QoS/Quotas/Mul_-‐tenancy • Rigging – Faster, more intelligent assignment – Procedure bus – Resumable, query-‐able opera_ons • Other possibili_es – Quorum/consensus reads, writes? – Hydrabase, mul_-‐DC consensus? – Streaming RPCs? – High level coprocessor API

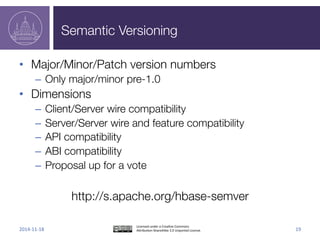

- 19. Seman_c Versioning • Major/Minor/Patch version numbers – Only major/minor pre-‐1.0 • Dimensions – Client/Server wire compa_bility – Server/Server wire and feature compa_bility – API compa_bility – ABI compa_bility • Proposal up for a vote hip://s.apache.org/hbase-‐semver

- 20. UPGRADE PATH Tell it to me straight, how bad is it?

- 21. Online/Wire Compa_bility • Direct migra_on from 0.94 supported – Looks a lot like upgrade from 0.94 to 0.96: requires down_me – Not tested yet, will be before release • RPC is backward-‐compa_ble to 0.96 – Enabled mixing clients and servers across versions – So long as no new features are enabled • Rolling upgrade "out of the box" from 0.98 • Rolling upgrade "with some massaging" from 0.96 – IE, 0.96 cannot read HFileV3, the new default – not tested yet, will be before release

- 22. Client Applica_on Compa_bility • API is backward compa_ble to 0.96 – No code change required – You’ll start genng new depreca_on warnings – We recommend you start using new APIs • ABI is NOT backward compa_ble – Cannot drop current applica_on jars onto new run_me – Recompile your applica_on vs. 1.0 jars – Just like 0.96 to 0.98 upgrade

- 23. Hadoop Versions • Hadoop 1.x is NOT supported – Bite the bullet; you’ll enjoy the performance benefits • Hadoop 2.x only – Most thoroughly tested on 2.4.x, 2.5.x – Probably works on 2.2.x, 2.3.x, but less thoroughly tested hips://hbase.apache.org/book/configura_on.html#hadoop

- 24. Java Versions • JDK 6 is NOT supported! • JDK 7 is the target run_me • JDK 8 support is experimental hips://hbase.apache.org/book/configura_on.html#hadoop

- 25. 1.0.0 RCs Available Now! • Release Candidate vo_ng has commenced • Last chance to catch show-‐stopping bugs RELEASE CANDIDATES NOT FOR PRODUCTION USE • Try out the new features • Help us test your upgrade path • Be a part of history in the making! • 1.0.0rc5 available 2015-‐02-‐19 hip://search-‐hadoop.com/m/DHED40Ih5n

- 26. Thanks! M A N N I N G Nick Dimiduk Amandeep Khurana FOREWORD BY Michael Stack hbaseinac_on.com Nick Dimiduk github.com/ndimiduk @xefyr n10k.com hip://www.apache.org/dyn/closer.cgi/hbase/

Editor's Notes

- #2: Now with 1000% more Orca!

- #3: Stable Reliable Performant

- #14: Improving the distributed system “rigging” Consider enabling in highly volatile environments (like EC2)

- #18: “paving the way for new features and 2.0”

- #21: How to get from here to there

- #27: hbaseugcf (43% off HBase in Action, all formats, valid through Nov 20)

![Data

Model

1368387247 [3.6 kb png data]"thumb"cf2b

a

cf1

1368394583 7

1368394261 "hello"

"bar"

1368394583 22

1368394925 13.6

1368393847 "world"

"foo"

cf2

1368387684 "almost the loneliest number"1.0001

1368396302 "fourth of July""2011-07-04"

Table A

rowkey

column

family

column

qualifier

timestamp value

Rows

Column Families](https://image.slidesharecdn.com/hbase1-141020165501-conversion-gate01/85/Apache-HBase-1-0-Release-9-320.jpg)