Apache MetaModel - unified access to all your data points


Slides from a presentation at Apache: Big Data 2015 in Budapest: an introduction to Apache MetaModel and how it helps in Big Data ingestion scenarios.


![Slide 12: MetaModel - Queries via metadata model (2015 © GMC)](https://image.slidesharecdn.com/2015-09-29apachemetamodel-unifiedaccesstoallyourdatapoints-151005070545-lva1-app6892/85/Apache-MetaModel-unified-access-to-all-your-data-points-12-320.jpg)

```java
DataContext dc = …

// Walk the metadata model: default schema -> tables -> primary-key columns
Table[] tables = dc.getDefaultSchema().getTables();
Table table = tables[0];
Column[] primaryKeys = table.getPrimaryKeys();

// Build a query from the metadata objects themselves...
dc.query().from(table).selectAll().where(primaryKeys[0]).eq(42);

// ...or address the table and column by name
dc.query().from("person").selectAll().where("id").eq(42);
```
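The slide above navigates the metadata model (schema, tables, primary keys) and builds a query from the objects it finds, or alternatively from plain table and column names. The sketch below imitates that idea in plain Java so it runs without MetaModel on the classpath. `SimpleTable`, `SimpleColumn`, and `selectAllWhereEq` are hypothetical names invented for this illustration, not part of MetaModel's API, and the "data source" is just an in-memory list of rows. It assumes Java 16+ for records.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical, minimal metadata model written for illustration; NOT MetaModel's API.
public class MetadataQuerySketch {

    // A column knows its name and whether it is part of the primary key.
    record SimpleColumn(String name, boolean primaryKey) {}

    // A table exposes its column metadata alongside its (in-memory) rows.
    record SimpleTable(String name, List<SimpleColumn> columns, List<Map<String, Object>> rows) {
        List<SimpleColumn> primaryKeys() {
            List<SimpleColumn> keys = new ArrayList<>();
            for (SimpleColumn c : columns) {
                if (c.primaryKey()) {
                    keys.add(c);
                }
            }
            return keys;
        }
    }

    // "SELECT * FROM table WHERE column = value", with the column passed as a metadata object.
    static List<Map<String, Object>> selectAllWhereEq(SimpleTable table, SimpleColumn column, Object value) {
        List<Map<String, Object>> result = new ArrayList<>();
        for (Map<String, Object> row : table.rows()) {
            if (value.equals(row.get(column.name()))) {
                result.add(row);
            }
        }
        return result;
    }

    // The same query addressed by names, resolved against the schema metadata first.
    static List<Map<String, Object>> selectAllWhereEq(List<SimpleTable> schema, String tableName,
                                                      String columnName, Object value) {
        for (SimpleTable table : schema) {
            if (!table.name().equals(tableName)) {
                continue;
            }
            for (SimpleColumn column : table.columns()) {
                if (column.name().equals(columnName)) {
                    return selectAllWhereEq(table, column, value);
                }
            }
            throw new IllegalArgumentException("No column '" + columnName + "' in table '" + tableName + "'");
        }
        throw new IllegalArgumentException("No table '" + tableName + "'");
    }

    public static void main(String[] args) {
        SimpleTable person = new SimpleTable(
                "person",
                List.of(new SimpleColumn("id", true), new SimpleColumn("name", false)),
                List.of(Map.of("id", 41, "name", "Ann"), Map.of("id", 42, "name", "Bob")));
        List<SimpleTable> schema = List.of(person);

        // Metadata-driven: first table of the schema, first primary-key column of that table.
        SimpleTable table = schema.get(0);
        SimpleColumn pk = table.primaryKeys().get(0);
        System.out.println(selectAllWhereEq(table, pk, 42));

        // Name-driven: the counterpart of from("person").selectAll().where("id").eq(42).
        System.out.println(selectAllWhereEq(schema, "person", "id", 42));
    }
}
```

Both calls print the single row whose `id` is 42. The key order inside each printed row is not guaranteed, because `Map.of` does not define an iteration order. Resolving names against the metadata first means a misspelled table or column fails loudly instead of silently matching nothing.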

![Slide 29: Query language examples](https://image.slidesharecdn.com/2015-09-29apachemetamodel-unifiedaccesstoallyourdatapoints-151005070545-lva1-app6892/85/Apache-MetaModel-unified-access-to-all-your-data-points-29-320.jpg)

SQL:

```sql
SELECT * FROM customers
WHERE country_code = 'GB'
   OR country_code IS NULL
```

MongoDB:

```javascript
db.customers.find({
  $or: [
    {"country.code": "GB"},
    {"country.code": {$exists: false}}
  ]
})
```

CSV (pseudocode):

```
for (line : customers.csv) {
    values = parse(line);
    country = values[country_index];
    if (country == null || "GB".equals(country)) {
        emit(line);
    }
}
```
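The CSV variant on the slide is pseudocode: `parse`, `emit`, and `country_index` are placeholders. Below is one possible runnable Java reading of it, not MetaModel code. It assumes a comma-separated file with a header row and no quoted fields, locates the column by the header name `country_code` (borrowed from the SQL variant, so an assumption for CSV), and treats an empty or missing field as the CSV counterpart of NULL, since plain CSV has no null marker. The class name and sample rows are invented for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Sketch: the slide's CSV filter (country_code = 'GB' OR country_code IS NULL) made runnable.
// Assumptions: header row present, comma separator, no quoted fields,
// and an empty or missing field is treated as NULL.
public class CsvCountryFilter {

    static List<String> filterGbOrMissing(List<String> lines, String columnName) {
        List<String> result = new ArrayList<>();
        if (lines.isEmpty()) {
            return result;
        }
        // Locate the column by name in the header instead of using a hard-coded index.
        List<String> header = Arrays.asList(lines.get(0).split(",", -1));
        int countryIndex = header.indexOf(columnName);
        if (countryIndex < 0) {
            throw new IllegalArgumentException("No column named " + columnName);
        }
        for (String line : lines.subList(1, lines.size())) {
            String[] values = line.split(",", -1); // limit -1 keeps trailing empty fields
            String country = countryIndex < values.length ? values[countryIndex] : null;
            if (country == null || country.isEmpty() || "GB".equals(country)) {
                result.add(line); // the pseudocode's emit(line)
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> csv = List.of(
                "id,name,country_code",
                "1,Ann,GB",
                "2,Bob,DK",
                "3,Cai,");
        // prints "1,Ann,GB" then "3,Cai,"
        filterGbOrMissing(csv, "country_code").forEach(System.out::println);
    }
}
```

The column lookup, the null convention, and the parsing all have to be decided by hand here, which illustrates the kind of per-source detail that the SQL and MongoDB variants express declaratively. A split-based parser like this one would also break on quoted fields that contain commas.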



In this talk, I will describe what is APache Hudi and its architectural design, and then deep dive to improving data operations by providing features such as data versioning, time travel.

We will also go over how Hudi brings kappa architecture to big data systems and enables efficient incremental processing for near real time use cases.

Speaker: Satish Kotha (Uber)

Apache Hudi committer and Engineer at Uber. Previously, he worked on building real time distributed storage systems like Twitter MetricsDB and BlobStore.

website: https://www.aicamp.ai/event/eventdetails/W2021043010

Easy, Scalable, Fault-tolerant stream processing with Structured Streaming in...

Easy, Scalable, Fault-tolerant stream processing with Structured Streaming in...DataWorks Summit Last year, in Apache Spark 2.0, we introduced Structured Steaming, a new stream processing engine built on Spark SQL, which revolutionized how developers could write stream processing application. Structured Streaming enables users to express their computations the same way they would express a batch query on static data. Developers can express queries using powerful high-level APIs including DataFrames, Dataset and SQL. Then, the Spark SQL engine is capable of converting these batch-like transformations into an incremental execution plan that can process streaming data, while automatically handling late, out-of-order data, and ensuring end-to-end exactly-once fault-tolerance guarantees.

Since Spark 2.0 we've been hard at work building first class integration with Kafka. With this new connectivity, performing complex, low-latency analytics is now as easy as writing a standard SQL query. This functionality in addition to the existing connectivity of Spark SQL make it easy to analyze data using one unified framework. Users can now seamlessly extract insights from data, independent of whether it is coming from messy / unstructured files, a structured / columnar historical data warehouse or arriving in real-time from pubsub systems like Kafka and Kinesis.

We'll walk through a concrete example where in less than 10 lines, we read Kafka, parse JSON payload data into separate columns, transform it, enrich it by joining with static data and write it out as a table ready for batch and ad-hoc queries on up-to-the-last-minute data. We'll use techniques including event-time based aggregations, arbitrary stateful operations, and automatic state management using event-time watermarks.

20140908 spark sql & catalyst

20140908 spark sql & catalystTakuya UESHIN This document introduces Spark SQL and the Catalyst query optimizer. It discusses that Spark SQL allows executing SQL on Spark, builds SchemaRDDs, and optimizes query execution plans. It then provides details on how Catalyst works, including its use of logical expressions, operators, and rules to transform query trees and optimize queries. Finally, it outlines some interesting open issues and how to contribute to Spark SQL's development.

Introduction to Sqoop Aaron Kimball Cloudera Hadoop User Group UK

Introduction to Sqoop Aaron Kimball Cloudera Hadoop User Group UKSkills Matter In this talk of Hadoop User Group UK meeting, Aaron Kimball from Cloudera introduces Sqoop, the open source SQL-to-Hadoop tool. Sqoop helps users perform efficient imports of data from RDBMS sources to Hadoop's distributed file system, where it can be processed in concert with other data sources. Sqoop also allows users to export Hadoop-generated results back to an RDBMS for use with other data pipelines.

After this session, users will understand how databases and Hadoop fit together, and how to use Sqoop to move data between these systems. The talk will provide suggestions for best practices when integrating Sqoop and Hadoop in your data processing pipelines. We'll also cover some deeper technical details of Sqoop's architecture, and take a look at some upcoming aspects of Sqoop's development roadmap.

Reshape Data Lake (as of 2020.07)

Reshape Data Lake (as of 2020.07)Eric Sun SF Big Analytics 2020-07-28

Anecdotal history of Data Lake and various popular implementation framework. Why certain tradeoff was made to solve the problems, such as cloud storage, incremental processing, streaming and batch unification, mutable table, ...

Ingesting data at scale into elasticsearch with apache pulsar

Ingesting data at scale into elasticsearch with apache pulsarTimothy Spann Ingesting data at scale into elasticsearch with apache pulsar

FLiP

Flink, Pulsar, Spark, NiFi, ElasticSearch, MQTT, JSON

data ingest

etl

elt

sql

timothy spann

elasticsearch community conference

2/11/2022

developer advocate at streamnative

Machine Learning by Example - Apache Spark

Machine Learning by Example - Apache SparkMeeraj Kunnumpurath This document provides an agenda for a presentation on using machine learning with Apache Spark. The presentation introduces Apache Spark and its architecture, Scala notebooks in Spark, machine learning components in Spark, pipelines for machine learning tasks, and examples of regression, classification, clustering, collaborative filtering, and model tuning in Spark. It aims to provide a hands-on, example-driven introduction to applying machine learning using Apache Spark without deep dives into Spark architecture or algorithms.

Four Things to Know About Reliable Spark Streaming with Typesafe and Databricks

Four Things to Know About Reliable Spark Streaming with Typesafe and DatabricksLegacy Typesafe (now Lightbend)



Apache MetaModel - unified access to all your data points

- 1. 2015- © GMC - 1 with Apache MetaModel Unified access to all your data points

- 2. Who am I? Kasper Sørensen, dad, geek, guitarist … @kaspersor. Long-time developer and PMC member of: Also founder of another nice open source project: Principal Software Engineer @

- 3. Session agenda: - Introduction to Apache MetaModel - Use case: Query composition - The role of Apache MetaModel in Big Data - (New) architectural possibilities with Apache MetaModel

- 5. The Apache journey: 2011-xx: MetaModel founded (outside of Apache); 2013-06: Incubation of Apache MetaModel starts; 2014-12: Apache MetaModel is officially an Apache TLP; 2015-08: Latest stable release, 4.3.6; 2015-09: Latest RC, 4.4.0-RC1. We're still a small project, just 10 committers (plus some extra contributors), so we have room for you!

- 6. Helicopter view: You can look at Apache MetaModel through its formal description: "… a uniform connector and query API to many very different datastore types …" But let's start with a problem to solve ...

- 7. A problem: How to process multiple data sources while: - Staying agnostic to the database engine. - Respecting the metadata of the underlying database. - Not repeating yourself. - Avoiding fragility towards metadata changes. - Mutating the data structure when needed. We used to rely on ORM frameworks to handle the bulk of these ...

- 8. ORM - Queries via domain models: public class Person { public String getName() {...} } ORM.query(Person.class).where(Person::getName).eq("John Doe");

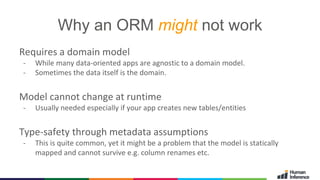

- 9. Why an ORM might not work: Requires a domain model - Many data-oriented apps are agnostic to any domain model. - Sometimes the data itself is the domain. Model cannot change at runtime - Runtime changes are often needed, especially if your app creates new tables/entities. Type-safety through metadata assumptions - This is quite common, yet it can be a problem that the model is statically mapped and cannot survive changes such as column renames.

- 10. Alternative to ORM: Use JDBC. Metadata is discoverable via java.sql.DatabaseMetaData. Queries can be assembled safely with a bit of String magic: "SELECT " + columnNames + " FROM " + tableName + … ...

- 11. Alternative to ORM: Use JDBC. Wrong! It turns out that … - not all databases have the same SQL "dialect". - they also don't all implement DatabaseMetaData the same way. - you cannot use this on anything other than relational databases that speak SQL. - What about NoSQL, various file formats, web services etc.?
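Slide 10's "String magic" runs into slide 11's dialect problem even at the level of identifier quoting. The sketch below assumes the caller passes in the quote string a driver reports through `java.sql.DatabaseMetaData#getIdentifierQuoteString()`. Per the JDBC documentation, that method returns a single space when quoting is unsupported. `SelectBuilder` is a hypothetical helper and is not part of JDBC or MetaModel.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical helper, not part of JDBC or MetaModel. The quoteString argument is
// meant to be whatever DatabaseMetaData#getIdentifierQuoteString() returned.
class SelectBuilder {

    static String quote(String identifier, String quoteString) {
        // JDBC reports " " (a single space) when identifier quoting is unsupported.
        if (quoteString == null || quoteString.trim().isEmpty()) {
            return identifier;
        }
        // Note: embedded quote characters are NOT escaped in this sketch.
        return quoteString + identifier + quoteString;
    }

    static String buildSelect(List<String> columns, String table, String quoteString) {
        String columnList = columns.stream()
                .map(column -> quote(column, quoteString))
                .collect(Collectors.joining(", "));
        return "SELECT " + columnList + " FROM " + quote(table, quoteString);
    }
}
```

The sketch still ignores every dialect difference beyond quoting, such as paging syntax, functions and types. That remaining gap is what the slide describes.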

- 12. MetaModel - Queries via metadata model: DataContext dc = … Table[] tables = dc.getDefaultSchema().getTables(); Table table = tables[0]; Column[] primaryKeys = table.getPrimaryKeys(); dc.query().from(table).selectAll().where(primaryKeys[0]).eq(42); dc.query().from("person").selectAll().where("id").eq(42);
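Slide 12 builds the query from metadata objects (schema, table, primary key columns) rather than from hard-coded SQL text. The toy below only illustrates that shape using the JDK. `ToyTable` and `ToyQuery` are hypothetical classes, not MetaModel's `Table` or query builder, and they evaluate a single equality filter over in-memory rows.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;

// Hypothetical toy classes, NOT Apache MetaModel's API.
// A table that carries its own metadata (column names, primary keys) and in-memory rows.
class ToyTable {
    final String name;
    final List<String> columns;
    final List<String> primaryKeys;
    final List<Object[]> rows = new ArrayList<>();

    ToyTable(String name, List<String> columns, List<String> primaryKeys) {
        this.name = name;
        this.columns = columns;
        this.primaryKeys = primaryKeys;
    }

    // Metadata lookup: unknown columns are rejected instead of silently matching nothing.
    int indexOf(String column) {
        int index = columns.indexOf(column);
        if (index < 0) {
            throw new IllegalArgumentException("No such column in " + name + ": " + column);
        }
        return index;
    }
}

// Fluent builder with the same shape as the slide: from(...).selectAll().where(...).eq(...)
class ToyQuery {
    private final ToyTable table;
    private String whereColumn;
    private Object operand;

    private ToyQuery(ToyTable table) {
        this.table = table;
    }

    static ToyQuery from(ToyTable table) {
        return new ToyQuery(table);
    }

    ToyQuery selectAll() {
        return this; // the toy always returns every column
    }

    ToyQuery where(String column) {
        table.indexOf(column); // validate against the table's metadata right away
        this.whereColumn = column;
        return this;
    }

    ToyQuery eq(Object value) {
        this.operand = value;
        return this;
    }

    // Evaluates the single equality filter over the in-memory rows.
    List<Object[]> execute() {
        List<Object[]> result = new ArrayList<>();
        int index = whereColumn == null ? -1 : table.indexOf(whereColumn);
        for (Object[] row : table.rows) {
            if (index < 0 || Objects.equals(row[index], operand)) {
                result.add(row);
            }
        }
        return result;
    }
}
```

`where(...)` checks the column against the table's metadata. A misspelled column therefore fails while the query is being built, not deep inside a SQL string.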

- 13. MetaModel updates: UpdateableDataContext dc = … dc.executeUpdate(new UpdateScript() { public void run(UpdateCallback callback) { /* multiple updates go here; transactional characteristics (ACID, synchronization etc.) are as per the capabilities of the concrete DataContext implementation */ } }); dc.executeUpdate(new BatchUpdateScript() { public void run(UpdateCallback callback) { /* multiple "batch/bulk" (non-atomic) updates go here */ } }); /* if I only want to do a single update, there are convenience classes for this */ InsertInto insert = new InsertInto(table).value(nameColumn, "John Doe"); dc.executeUpdate(insert);
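The update scripts on slide 13 hand a callback to the data context, and the context decides how atomic the changes are. The sketch below assumes a store that offers all-or-nothing semantics. Under that assumption, a toy context can run the script against a working copy and publish the copy only if the script completes. `ToyUpdateCallback`, `ToyUpdateScript` and `ToyUpdateableContext` are hypothetical names. Real MetaModel implementations inherit whatever guarantees the underlying datastore provides.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical names modelled loosely on the slide's callback shape; not MetaModel classes.
interface ToyUpdateCallback {
    void insert(String value);
}

interface ToyUpdateScript {
    void run(ToyUpdateCallback callback);
}

class ToyUpdateableContext {
    private final List<String> rows = new ArrayList<>();

    // Runs the script against a working copy and publishes it only if the script
    // completes normally; an exception leaves the stored rows untouched.
    synchronized void executeUpdate(ToyUpdateScript script) {
        List<String> working = new ArrayList<>(rows);
        script.run(working::add);
        rows.clear();
        rows.addAll(working);
    }

    synchronized List<String> snapshot() {
        return new ArrayList<>(rows);
    }
}
```

A batch-style context would instead write straight through and accept partial results. That trade-off is the distinction the slide draws between `UpdateScript` and `BatchUpdateScript`.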

- 14. MetaModel - Connectivity: Sometimes we have SQL, sometimes we have another native query engine (e.g. Lucene) and sometimes we use MetaModel's own query engine. (A few more connectors are available via the MetaModel-extras (LGPL) project.)

- 15. Tables, Columns and SQL – that doesn't sound right. This is one of our trade-offs to make life easier! We map NoSQL models (doc, key/value etc.) to a table-based model. As a user you have some choices: • Supply your own mapping (non-dynamic). • Use schema inference provided by Apache MetaModel. • Provide schema inference instructions (e.g. turn certain key/value pairs into separate tables).
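Schema inference for document stores, as mentioned on slide 15, can start with a scan of sample documents. The sketch below assumes documents arrive as `Map<String, Object>`. The columns are the union of the documents' keys. A column keeps a concrete Java type only when all its non-null values agree; otherwise it widens to `Object`. This is an illustrative rule of thumb, not MetaModel's actual inference algorithm, and `SchemaInference` is a hypothetical name.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Toy schema inference (hypothetical, not MetaModel's algorithm).
class SchemaInference {

    static Map<String, Class<?>> inferColumns(List<Map<String, Object>> documents) {
        Map<String, Class<?>> columns = new LinkedHashMap<>(); // keeps first-seen order
        for (Map<String, Object> doc : documents) {
            for (Map.Entry<String, Object> entry : doc.entrySet()) {
                String key = entry.getKey();
                Object value = entry.getValue();
                Class<?> observed = value == null ? null : value.getClass();
                if (!columns.containsKey(key)) {
                    columns.put(key, observed);          // first sighting of this key
                } else if (observed != null) {
                    Class<?> known = columns.get(key);
                    if (known == null) {
                        columns.put(key, observed);      // only nulls seen so far
                    } else if (!known.equals(observed)) {
                        columns.put(key, Object.class);  // conflicting types: widen
                    }
                }
            }
        }
        // Columns that only ever held nulls get the most general type.
        columns.replaceAll((key, type) -> type == null ? Object.class : type);
        return columns;
    }
}
```

A user-supplied mapping, the first option on the slide, would simply replace this inferred map.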

- 16. Other key characteristics of MetaModel: • Non-intrusive: use it when you want to. • It's just a library: no need to run a separate service. • Easy to test, stub and mock. Inject a replacement DataContext instead of the real one (typically PojoDataContext). • Easy to implement a new DataContext for your curious database or data format; reuse our abstract implementations.
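The "easy to test, stub and mock" bullet on slide 16 amounts to dependency injection. Code that depends on a data-access interface can be handed an in-memory implementation in tests. In this sketch, `RowSource`, `CustomerReport` and `InMemoryRowSource` are hypothetical stand-ins for `DataContext`, your application code and `PojoDataContext` respectively.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a data-access interface such as DataContext.
interface RowSource {
    List<String[]> rows(String table);
}

// Application code depends only on the interface, so any implementation can be injected.
class CustomerReport {
    private final RowSource source;

    CustomerReport(RowSource source) {
        this.source = source;
    }

    // Counts customers whose second field (assumed to be the country code) matches.
    long countByCountry(String countryCode) {
        return source.rows("customers").stream()
                .filter(row -> countryCode.equals(row[1]))
                .count();
    }
}

// In-memory stub playing the role the slide gives to PojoDataContext in tests.
class InMemoryRowSource implements RowSource {
    private final List<String[]> customers = new ArrayList<>();

    InMemoryRowSource add(String name, String countryCode) {
        customers.add(new String[] {name, countryCode});
        return this;
    }

    @Override
    public List<String[]> rows(String table) {
        if ("customers".equals(table)) {
            return customers;
        }
        return new ArrayList<>();
    }
}
```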

- 18. MetaModel schema model

- 19. MetaModel query and schema model

- 20. Query representation for developers? Query as a string: "SELECT * FROM foo" - Easy to write. - Easy to read. - Prone to parsing errors. - Prone to typos etc. - Fails at runtime. Query as an object: Query q = new Query(); q.from(table).selectAll(); - More involved code to read and write. - Lends itself to inspection and mutation. - Fails at compile time.

- 21. Context-based Query optimization: You might not always need to pass data around between components … Sometimes you can just pass the query around! In the diagram, the STATUS=DELIVERED filter never actually executes; it just updates the query on the ORDERS table.
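Slide 21's optimization relies on the query being an object that downstream components can inspect and extend. The sketch below uses hypothetical classes; `ToyQueryObject` is not MetaModel's `Query`. The `StatusFilter` component never sees a row. It only adds its condition to the ORDERS query, so the datastore does the filtering.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical query object (not MetaModel's Query): it records the table and the
// conditions, so other components can inspect and extend it before anything runs.
class ToyQueryObject {
    final String table;
    final List<String> conditions = new ArrayList<>();

    ToyQueryObject(String table) {
        this.table = table;
    }

    ToyQueryObject where(String column, String value) {
        // Naive rendering for illustration only; real code must use parameters/escaping.
        conditions.add(column + " = '" + value + "'");
        return this;
    }

    String toSql() {
        StringBuilder sql = new StringBuilder("SELECT * FROM ").append(table);
        if (!conditions.isEmpty()) {
            sql.append(" WHERE ").append(String.join(" AND ", conditions));
        }
        return sql.toString();
    }
}

// A filter component that, instead of filtering rows, pushes its condition upstream.
class StatusFilter {
    private final String status;

    StatusFilter(String status) {
        this.status = status;
    }

    ToyQueryObject optimize(ToyQueryObject upstream) {
        return upstream.where("STATUS", status);
    }
}
```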

- 22. The role of Apache MetaModel in Big Data

- 23. So how does this all relate to Big Data?

- 24. Big Data and the need for metadata: Variety - Not only structured data - Social - Sensors - Many new sources, but also the “old”: • Relational • NoSQL • Files • Cloud

- 25. Volume

- 26. Volume Velocity

- 29. Query language examples: SQL: SELECT * FROM customers WHERE country_code = 'GB' OR country_code IS NULL; MongoDB: db.customers.find({ $or: [ {"country.code": "GB"}, {"country.code": {$exists: false}} ] }); CSV (pseudocode): for (line : customers.csv) { values = parse(line); country = values[country_index]; if (country == null || "GB".equals(country)) { emit(line); } }
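For comparison with the SQL and MongoDB variants on slide 29, here is the CSV pseudocode as runnable Java. Three assumptions are not on the slide. The first line is a header containing `country_code`. Fields are split on plain commas, with no quoting or escaping. An empty field stands in for `null`, since CSV has no null marker. `CsvCountryFilter` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Runnable version of the slide's CSV pseudocode, under the assumptions stated above.
class CsvCountryFilter {

    static List<String> filter(List<String> lines, String countryCode) {
        List<String> header = Arrays.asList(lines.get(0).split(",", -1));
        int countryIndex = header.indexOf("country_code");
        if (countryIndex < 0) {
            throw new IllegalArgumentException("no country_code column");
        }
        List<String> emitted = new ArrayList<>();
        for (String line : lines.subList(1, lines.size())) {
            String[] values = line.split(",", -1); // -1 keeps trailing empty fields
            String country = countryIndex < values.length ? values[countryIndex] : "";
            // Empty field plays the role of NULL in the SQL version.
            if (country.isEmpty() || countryCode.equals(country)) {
                emitted.add(line);
            }
        }
        return emitted;
    }
}
```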

- 30. Query language examples. Any datastore: dataContext.query().from(customers).selectAll().where(countryCode).eq("GB").or(countryCode).isNull();
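Slide 30's point is that one query definition replaces the three dialects. The sketch below shows one rough way that can work, using hypothetical names. Each source is adapted into flat rows keyed by column name. A single condition, equivalent to `.where(countryCode).eq("GB").or(countryCode).isNull()`, is built once and evaluated against every source. The document adapter reads the nested `country.code` path, so a document lacking it yields `null`. This is not how MetaModel works internally; per slide 14, its connectors can also hand the query to a native engine.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

// Hypothetical sketch of "one query, any datastore"; not MetaModel internals.
class UniformQuerySketch {

    // Counterpart of .where(column).eq(value).or(column).isNull()
    static Predicate<Map<String, Object>> eqOrNull(String column, Object value) {
        Predicate<Map<String, Object>> eq = row -> value.equals(row.get(column));
        Predicate<Map<String, Object>> isNull = row -> row.get(column) == null;
        return eq.or(isNull);
    }

    // Document adapter: flattens the nested "country" -> "code" path into one column;
    // a document without that path ends up with a null value.
    static Map<String, Object> adaptDocument(Map<String, Object> doc) {
        Map<String, Object> row = new HashMap<>();
        Object country = doc.get("country");
        Object code = country instanceof Map ? ((Map<?, ?>) country).get("code") : null;
        row.put("country_code", code);
        return row;
    }

    // Applies the same condition to rows from any adapted source.
    static List<Map<String, Object>> select(List<Map<String, Object>> rows,
                                            Predicate<Map<String, Object>> condition) {
        List<Map<String, Object>> result = new ArrayList<>();
        for (Map<String, Object> row : rows) {
            if (condition.test(row)) {
                result.add(row);
            }
        }
        return result;
    }
}
```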

- 31. Make it easy to ingest data in your lake

- 32. Metadata to enable automation: Big Data Variety and Veracity mean we will be handling: ● Different physical formats of the same data ● Different query engines ● Different quality-levels of data. How can we automate data ingestion in such a landscape?

- 33. Metadata to enable automation: Big Data Variety and Veracity mean we will be handling: ● Different physical formats of the same data: we need a uniform metamodel for all the datastores, and enough metadata to infer the ingestion transformations needed. ● Different query engines: we need a uniform query API based on the metamodel. ● Different quality-levels of data: we need our ingestion target to be aware of the ingestion sources.

- 34. Metadata to enable automation: Big Data Variety and Veracity mean we will be handling: ● Different physical formats of the same data: we need a uniform metamodel for all the datastores, and enough metadata to infer the ingestion transformations needed. ● Different query engines: we need a uniform query API based on the metamodel. ● Different quality-levels of data: we need our ingestion target to be aware of the ingestion sources. As an industry we lack more elaborate metadata to support this!

- 35. Traditional view on metadata: user: id (pk) CHAR(32) java.lang.String not null; username VARCHAR(64) java.lang.String not null, unique; real_name VARCHAR(256) java.lang.String not null; address VARCHAR(256) java.lang.String nullable; age INT int nullable.

- 36. Traditional view on metadata: user: id (pk) CHAR(32) java.lang.String not null; username VARCHAR(64) java.lang.String not null, unique; real_name VARCHAR(256) java.lang.String not null; address VARCHAR(256) java.lang.String nullable; age INT int nullable. customer: id (pk) BIGINT long not null; firstname VARCHAR(128) java.lang.String not null; lastname VARCHAR(128) java.lang.String not null; street VARCHAR(64) java.lang.String not null; house number INT int not null.

- 37. A more elaborate metadata view: user: id (pk) CHAR(32) java.lang.String not null [32 char UUID]; username VARCHAR(64) java.lang.String not null, unique; real_name VARCHAR(256) java.lang.String not null [Person full name]; address VARCHAR(256) java.lang.String nullable [Address (unstructured)]; age INT int nullable [Person age; numeric ratio variable]. customer: id (pk) BIGINT long not null; firstname VARCHAR(128) java.lang.String not null [Person first name]; lastname VARCHAR(128) java.lang.String not null [Person last name]; street VARCHAR(64) java.lang.String not null [Address part - street]; house number INT int not null [Address part - house number; numeric nominal variable]. user and customer: same real-world entity?

- 38. Elaborate metadata and querying: SELECT (columns with header 'name') FROM (customer and user); SELECT firstname, lastname FROM customer; SELECT real_name FROM user; SELECT EXTRACT(full name FROM (name columns)) FROM (tables containing person names); SELECT (person name columns) FROM (customer and user). (From Static/Manual to Dynamic/Automated (or supervised).)
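The fully dynamic row on slide 38, `SELECT (person name columns) FROM (customer and user)`, presupposes column metadata that carries semantic tags like the annotations on slide 37. Below is a minimal sketch of such a catalog. `SemanticColumn`, `SemanticCatalog` and the tag strings are hypothetical, since the slides present an elaborate metadata service as an idea being prototyped rather than an existing API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical "elaborate metadata" model (not an existing MetaModel API): besides
// name and SQL type, each column carries semantic tags such as "person name".
class SemanticColumn {
    final String table;
    final String name;
    final String sqlType;
    final List<String> tags;

    SemanticColumn(String table, String name, String sqlType, String... tags) {
        this.table = table;
        this.name = name;
        this.sqlType = sqlType;
        this.tags = Arrays.asList(tags);
    }

    @Override
    public String toString() {
        return table + "." + name;
    }
}

class SemanticCatalog {
    private final List<SemanticColumn> columns = new ArrayList<>();

    void add(SemanticColumn column) {
        columns.add(column);
    }

    // Resolves "(person name columns) FROM (customer and user)" by tag, instead of
    // hard-coding firstname/lastname/real_name per table.
    List<SemanticColumn> columnsTagged(String tag) {
        List<SemanticColumn> result = new ArrayList<>();
        for (SemanticColumn column : columns) {
            if (column.tags.contains(tag)) {
                result.add(column);
            }
        }
        return result;
    }

    // Sample catalog mirroring part of slide 37.
    static SemanticCatalog example() {
        SemanticCatalog catalog = new SemanticCatalog();
        catalog.add(new SemanticColumn("user", "id", "CHAR(32)", "uuid"));
        catalog.add(new SemanticColumn("user", "real_name", "VARCHAR(256)", "person name", "person full name"));
        catalog.add(new SemanticColumn("user", "age", "INT", "person age", "ratio"));
        catalog.add(new SemanticColumn("customer", "firstname", "VARCHAR(128)", "person name", "person first name"));
        catalog.add(new SemanticColumn("customer", "lastname", "VARCHAR(128)", "person name", "person last name"));
        catalog.add(new SemanticColumn("customer", "house number", "INT", "address part", "nominal"));
        return catalog;
    }
}
```

Deciding how to combine the matched columns, for example first name plus last name versus full name, is still left to the caller. That is where the slides' EXTRACT step comes in.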

- 39. Elaborate metadata and querying: SELECT street, house_number FROM customer; SELECT address FROM user; SELECT EXTRACT(street, hno, city, zip FROM (Address part columns)) FROM (tables containing addresses); SELECT (Address part columns) FROM (customer and user). (From Static/Manual to Dynamic/Automated (or supervised).)

- 40. Elaborate metadata and querying: Individual/specific DB connectors; JDBC; Apache MetaModel (today); Apache MetaModel (future). (From Static/Manual to Dynamic/Automated (or supervised).)

- 41. (New) architectural possibilities with Apache MetaModel

- 42. Data Integration scenario: Copy (ETL)

- 43. Data Federation scenario

- 44. What about speed? Performance: - Performance is important in the development of MetaModel. - But not at the expense of uniformity. - In some cases the metadata may benefit performance by automatically tweaking query parameters (e.g. fetching strategies). - We usually expose the native connector objects too. Time to market: - MetaModel makes it easy to cover all the data sources with the same codebase. - Typical 80/20 trade-off scenario. - Avoid premature optimization.

- 45. The future of Apache MetaModel? Stuff currently being built (version 4.4.0): • Pluggable operators in queries • Pluggable functions in queries • Phasing out Java 6 support. Ideas being prototyped: • Elaborate metadata service • Spark module (turn a Query into an RDD or DataFrame) • More connectors: Apache Solr, Neo4j, Couchbase, Riak

- 46. Let's see some code: Query a remote CSV file https://github.com/kaspersorensen/ApacheBigDataMetaModelExample