Apache Spark Internals - Part 2


The goal of this presentation is to explain more about Apache Spark internals: how Spark handles resilience in each component, how shard allocation works with RDDs, and how RDDs abstract away the complexity of data partitioning and distribution across the cluster.



![Shard allocation: Example - Case 1](https://image.slidesharecdn.com/apachespark-part2-170119123923/85/Apache-Spark-Internals-Part-2-20-320.jpg)

Shard allocation

Example - Case 1 - explanation

val = 2.000.000 / 8 = 250.000

Range partition:

[0] -> 2 - 250.000

[1] -> 250.001 - 500.000

[2] -> 500.001 - 750.000

[3] -> 750.001 - 1.000.000

[4] -> 1.000.001 - 1.250.000

[5] -> 1.250.001 - 1.500.000

[6] -> 1.500.001 - 1.750.000

[7] -> 1.750.001 - 2.000.000
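The even split of 2.000.000 keys into 8 ranges of 250.000 (the slide's `val`) can be sketched in plain Python without any Spark dependency. This is a hypothetical illustration, not the deck's code. It assumes integer keys that run up to a known maximum and uses a fixed, equal-width division. The helper names `upper_bounds`, `get_partition` and `describe` are invented for the example.

Spark's own `RangePartitioner` derives its bounds by sampling the RDD's keys rather than by a fixed division, so treat this only as a model of the idea. Each partition owns a contiguous, inclusive key range, and a key is routed to the first range whose upper bound is not smaller than the key.

```python
from bisect import bisect_left


def upper_bounds(last_key: int, num_partitions: int) -> list[int]:
    """Return num_partitions - 1 inclusive upper bounds that split keys up to
    last_key into equal-width ranges (the slide's "val" step).
    Assumption: keys are integers and roughly uniform up to last_key."""
    width = last_key // num_partitions  # e.g. 2_000_000 // 8 == 250_000
    return [width * (i + 1) for i in range(num_partitions - 1)]


def get_partition(key: int, bounds: list[int]) -> int:
    """Index of the first range whose upper bound is >= key.
    Keys above every bound fall into the last partition."""
    return bisect_left(bounds, key)


def describe(first_key: int, last_key: int, num_partitions: int) -> list[tuple[int, int, int]]:
    """Return (partition, low, high) for every range, like the slide's listing."""
    bounds = upper_bounds(last_key, num_partitions)
    lows = [first_key] + [b + 1 for b in bounds]  # each range starts just after the previous bound
    highs = bounds + [last_key]                   # the last range ends at the maximum key
    return [(i, lo, hi) for i, (lo, hi) in enumerate(zip(lows, highs))]


if __name__ == "__main__":
    for p, lo, hi in describe(2, 2_000_000, 8):
        print(f"[{p}] -> {lo:,} - {hi:,}")
    b = upper_bounds(2_000_000, 8)
    print(get_partition(250_000, b), get_partition(250_001, b))
```

Like `RangePartitioner`, the sketch keeps only `numPartitions - 1` bounds, so every key maps to an index between 0 and 7. `bisect_left` gives an O(log n) lookup per key; with only eight partitions, a linear scan over the bounds would work just as well.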


Samantha Wang [InfluxData] | Best Practices on How to Transform Your Data Usi...![Samantha Wang [InfluxData] | Best Practices on How to Transform Your Data Usi...](https://cdn.slidesharecdn.com/ss_thumbnails/influxdaysnorthamerica2020-201005185945-thumbnail.jpg?width=560&fit=bounds)

![Samantha Wang [InfluxData] | Best Practices on How to Transform Your Data Usi...](https://cdn.slidesharecdn.com/ss_thumbnails/influxdaysnorthamerica2020-201005185945-thumbnail.jpg?width=560&fit=bounds)

![Samantha Wang [InfluxData] | Best Practices on How to Transform Your Data Usi...](https://cdn.slidesharecdn.com/ss_thumbnails/influxdaysnorthamerica2020-201005185945-thumbnail.jpg?width=560&fit=bounds)

![Samantha Wang [InfluxData] | Best Practices on How to Transform Your Data Usi...](https://cdn.slidesharecdn.com/ss_thumbnails/influxdaysnorthamerica2020-201005185945-thumbnail.jpg?width=560&fit=bounds)

Samantha Wang [InfluxData] | Best Practices on How to Transform Your Data Usi...InfluxData Samantha Wang [InfluxData] | Best Practices on How to Transform Your Data Using Telegraf and Flux | InfluxDays Virtual Experience NA 2020

Tulsa techfest Spark Core Aug 5th 2016

Tulsa techfest Spark Core Aug 5th 2016Mark Smith Spark DataFrames provide a more optimized way to work with structured data compared to RDDs. DataFrames allow skipping unnecessary data partitions when querying, such as only reading data partitions that match certain criteria like date ranges. DataFrames also integrate better with storage formats like Parquet, which stores data in a columnar format and allows skipping unrelated columns during queries to improve performance. The code examples demonstrate loading a CSV file into a DataFrame, finding and removing duplicate records, and counting duplicate records by key to identify potential duplicates.

クラウドDWHとしても進化を続けるPivotal Greenplumご紹介

クラウドDWHとしても進化を続けるPivotal Greenplumご紹介Masayuki Matsushita MPP型データベースとして世界初でオープンソース化されたGreenplumは、PostgreSQLを祖先に持ち、コミュニティの力も借りながら、クラウドDWH(マルチクラウド対応・データ分析プラットフォーム)としても進化を続けています。

このセッションでは、マルチクラウド対応の方向性・メリットを始め、アドバンストアナリティクス(地理・空間情報、グラフ分析、テキスト分析)やOSS系モジュール(Hadoop(HDFS)、SPARK、Kafka)への対応など、「Pivotal Greenplum」の最新情報をご紹介します。

Introduction to Cache-Oblivious Algorithms

Introduction to Cache-Oblivious AlgorithmsChristopher Gilbert In today's world developers are faced with the problem of writing high-performing algorithms that scale efficiently across a range of multi-core processors. Traditional blocked algorithms need to be tuned to each processor, but the discovery of cache-oblivious algorithms give developers new tools to tackle this emerging challenge. In this talk you will learn about the external memory model, the cache-oblivious model, and how to use these tools to create faster, scalable algorithms.

RAPIDS: ускоряем Pandas и scikit-learn на GPU Павел Клеменков, NVidia

RAPIDS: ускоряем Pandas и scikit-learn на GPU Павел Клеменков, NVidiaMail.ru Group Все мы знаем, что наш любимый Pandas исключительно однопоточный, а модели из scikit-learn часто учатся не очень быстро даже в несколько процессов. Поэтому в докладе я расскажу о проекте RAPIDS - наборе библиотек для анализа данных и построения предиктивных моделей с использованием NVIDIA GPU. В докладе я предложу подискутировать о том, что закон Мура больше не выполняется, рассмотрю принципы работы архитектуры CUDA. Разберу библиотеки cuDF и cuML, а также постараюсь предельно честно рассказать о том, ждать ли чуда от перехода на GPU и в каких случаях чудо неизбежно.

Lecture12

Lecture12tt_aljobory This document provides an introduction and overview of ScaLAPACK, a library of linear algebra routines for solving dense linear algebra problems in parallel. ScaLAPACK relies on BLAS, LAPACK, BLACS, and PBLAS to perform operations on dense matrices distributed across multiple processors using a 2D block cyclic distribution. Example code is provided to initialize the processor grid with BLACS, distribute a matrix and vector among processes, and solve a system of linear equations using ScaLAPACK routines.

User biglm

User biglmjohnatan pladott This document discusses strategies for analyzing moderately large data sets in R when the total number of observations (N) times the total number of variables (P) is too large to fit into memory all at once. It presents several approaches including loading data incrementally from files or databases, using randomized algorithms, and outsourcing computations to SQL. Specific examples discussed include linear regression on large data sets and whole genome association studies.

Inferno Scalable Deep Learning on Spark

Inferno Scalable Deep Learning on SparkDataWorks Summit/Hadoop Summit This document summarizes a presentation on Inferno, a system for scalable deep learning on Apache Spark. Inferno allows deep learning models built with Blaze, La Trobe University's deep learning system, to be trained faster using a Spark cluster. It coordinates distributed training of Blaze models across worker nodes, with optimized communication of weights and hyperparameters. Evaluation shows Inferno can train ResNet models on ImageNet up to 4-5 times faster than a single GPU. The presentation provides an overview of deep learning and Spark, demonstrates how Blaze allows easy model building, and explains Inferno's architecture for distributed deep learning training on Spark.

Apache Flink: API, runtime, and project roadmap

Apache Flink: API, runtime, and project roadmapKostas Tzoumas The document provides an overview of Apache Flink, an open source stream processing framework. It discusses Flink's programming model using DataSets and transformations, real-time stream processing capabilities, windowing functions, iterative processing, and visualization tools. It also provides details on Flink's runtime architecture, including its use of pipelined and staged execution, optimizations for iterative algorithms, and how the Flink optimizer selects execution plans.

Workshop "Can my .NET application use less CPU / RAM?", Yevhen Tatarynov

Workshop "Can my .NET application use less CPU / RAM?", Yevhen TatarynovFwdays In most cases it’s very hard to predict the number of resources needed for your .NET application. But If you spot some abnormal CPU or RAM usage, how to answer the question “Can my application use less?”.

Let’s see samples from real projects, where optimal resource usage by the application became one of the values for the product owner and see how less resource consumption can be.

The workshop will be actual for .NET developers who are interested in optimization of .NET applications, QA engineers who involved performance testing of .NET applications. It also will be interesting to everyone who "suspected" their .NET applications of non-optimal use of resources, but for some reason did not start an investigation.

Ad

Apache Spark Internals - Part 2

- 2. Resilience (diagram: a Driver and an active Master running Jobs, plus three Workers, each hosting an Executor that runs two Tasks)

- 3. Resilience: Driver (same cluster diagram). Submit the application with ./spark-submit --deploy-mode cluster --supervise so that the driver runs inside the cluster and is restarted automatically if it fails (standalone cluster mode).

- 4. Resilience: Driver (diagram). In cluster deploy mode, the driver runs inside one of the workers.

- 5. Resilience: Driver (diagram). If that worker fails, the driver is restarted on another worker.

- 6. Resilience: Master (diagram: an active Master and a standby Master coordinated by ZooKeeper, managing Jobs for a Driver and three Workers with Executors and Tasks)

- 7. Resilience: Master (diagram, continued: when the active Master fails, ZooKeeper elects the standby Master as the new leader, which recovers the cluster state and resumes scheduling Jobs)

- 8. Resilience: Worker (diagram: ZooKeeper with active and standby Masters, a Driver and three Workers). If a worker fails, the Driver and Executor running on it are also killed.

- 9. Resilience: Worker (diagram). The worker is relaunched, and the Driver and Executor that ran on it are relaunched as well.

- 10. Resilience: RDD
  - An RDD is an immutable, deterministically re-computable, distributed dataset.
  - Each RDD remembers the lineage of deterministic operations that were applied to a fault-tolerant input dataset to create it.
  - If any partition of an RDD is lost due to a worker node failure, that partition can be recomputed from the original fault-tolerant dataset using the lineage of operations.
  - Assuming all RDD transformations are deterministic, the data in the final transformed RDD will always be the same, regardless of failures in the Spark cluster.
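The lineage idea above can be made concrete with a toy model in plain Scala. It has no Spark dependency, and `ToyLineage` and `LineageDemo` are hypothetical names, not Spark's API. The model describes a dataset by its source partitions plus an ordered list of deterministic per-partition steps, so rebuilding a lost partition means replaying those steps on its source split. In real Spark, `rdd.toDebugString` prints an RDD's lineage.

```scala
// Toy model of RDD lineage in plain Scala (hypothetical names, NOT Spark's API).
final case class ToyLineage(
    source: Vector[Vector[Int]],             // stands in for fault-tolerant input splits (HDFS, S3, ...)
    steps: List[Vector[Int] => Vector[Int]]  // deterministic per-partition transformations, in order
) {
  // Rebuild one partition by replaying every step on its source split.
  def compute(partition: Int): Vector[Int] =
    steps.foldLeft(source(partition))((data, step) => step(data))

  // Compute every partition, as an action such as collect() would.
  def materialize(): Vector[Vector[Int]] = source.indices.map(compute).toVector
}

object LineageDemo {
  val lineage = ToyLineage(
    source = Vector(Vector(1, 2, 3, 4), Vector(5, 6, 7, 8), Vector(9, 10, 11, 12)),
    steps = List(
      (p: Vector[Int]) => p.map(_ * 10),        // analogous to rdd.map(x => x * 10)
      (p: Vector[Int]) => p.filter(_ % 20 == 0) // analogous to rdd.filter(x => x % 20 == 0)
    )
  )

  val materialized: Vector[Vector[Int]] = lineage.materialize()

  // Simulate losing partition 1 together with its worker, then recompute only that partition.
  val damaged: Vector[Vector[Int]] = materialized.updated(1, Vector.empty[Int])
  val repaired: Vector[Vector[Int]] = damaged.updated(1, lineage.compute(1))

  def main(args: Array[String]): Unit =
    println(repaired) // Vector(Vector(20, 40), Vector(60, 80), Vector(100, 120))
}
```

Because every step is deterministic, the repaired dataset is identical to the one computed before the failure.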

- 11. Resilience: RDD (diagram: logLinesRDD, errorsRDD, cleanedRDD and errorMsg1RDD linked by filter(fx), coalesce(2) and cache, with the actions collect(), count() and saveToCassandra(); sample records such as "Error, ts, msg1"). If a partition is damaged, it can be recomputed from its parent; if the parent is no longer in memory, it is reprocessed from disk.
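The recovery rule on this slide can be sketched with another plain-Scala toy model. `ToyRecovery` is a hypothetical name and not Spark's API. A lost child partition is rebuilt from its parent. The parent is served from memory if it was cached, and otherwise it is recomputed from the source "on disk". The `sourceReads` counter exists only to make that difference visible.

```scala
import scala.collection.mutable

// Toy model (hypothetical, NOT Spark's API) of rebuilding a child partition from its parent.
final class ToyRecovery {
  var sourceReads = 0                                      // times we had to go back to "disk"
  val parentCache = mutable.Map.empty[Int, Vector[String]] // cached parent partitions

  // Stand-in for re-reading an input split from HDFS/S3.
  private def readSource(p: Int): Vector[String] = {
    sourceReads += 1
    Vector(s"Error,ts,msg$p", s"info,ts,msg$p", s"Error,ts,msg${p + 10}")
  }

  // Parent partition: from memory if cached, otherwise recomputed from the source.
  def parent(p: Int): Vector[String] = parentCache.getOrElse(p, readSource(p))

  // Roughly like calling cache() on the parent and running an action once.
  def cacheParent(p: Int): Unit = parentCache(p) = readSource(p)

  // Child partition: a deterministic filter over its parent (like errorsRDD).
  def errors(p: Int): Vector[String] = parent(p).filter(_.startsWith("Error"))
}

object RecoveryDemo {
  def main(args: Array[String]): Unit = {
    val r = new ToyRecovery
    r.cacheParent(0)            // one source read to populate the cache
    val fromCache = r.errors(0) // parent is in memory, so no extra read
    println(s"reads after cached recovery: ${r.sourceReads}")   // 1
    r.parentCache.clear()       // parent evicted from memory
    val fromDisk = r.errors(0)  // must go back to the source
    println(s"reads after uncached recovery: ${r.sourceReads}") // 2
    println(fromCache == fromDisk) // true
  }
}
```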

- 12. RDD shard allocation. RDD (Resilient Distributed Dataset) is a data abstraction: it hides the complexity of data partitioning and distribution (diagram: a file on HDFS, S3, etc. divided into partitions of log records such as "Error, ts, msg1" and "info, ts, msg8"). Default algorithm: hash partitioning (the default partitioner Spark uses for key-value shuffle operations).

- 13. RDD shard allocation (diagram: the same file partitions distributed across three Workers, each with an Executor running Tasks). Default algorithm: hash partitioning.
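Hash partitioning picks a record's partition from the hash of its key. The sketch below follows the rule that, as we understand it, Spark's `HashPartitioner` uses: take the non-negative value of `key.hashCode` modulo the number of partitions, and send `null` keys to partition 0. `HashPartitioning` and `partitionFor` are hypothetical names. Keying log lines by their level is an illustrative assumption.

```scala
// Sketch of hash partitioning (hypothetical names; mirrors the idea behind Spark's HashPartitioner).
object HashPartitioning {
  // Map any Int into [0, mod), even when x is negative (x % mod can be negative on the JVM).
  def nonNegativeMod(x: Int, mod: Int): Int = {
    val raw = x % mod
    if (raw < 0) raw + mod else raw
  }

  // Partition index for a key; null keys go to partition 0.
  def partitionFor(key: Any, numPartitions: Int): Int = key match {
    case null => 0
    case k    => nonNegativeMod(k.hashCode, numPartitions)
  }

  def main(args: Array[String]): Unit = {
    val lines = Seq("Error,ts,msg1", "warn,ts,msg2", "info,ts,msg8", "Error,ts,msg5", "info,ts,msg3")
    // Hypothetical key: the log level before the first comma. Equal keys always share a partition.
    val byPartition = lines.groupBy(line => partitionFor(line.takeWhile(_ != ','), 4))
    byPartition.toSeq.sortBy(_._1).foreach { case (p, records) =>
      println(s"partition $p -> ${records.mkString(" | ")}")
    }
  }
}
```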

- 14. Shard allocation: Partition configuration - number of partitions. Specifying the number of partitions: by default, Spark creates one partition for each processor core.
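The "one partition per core" default comes from `spark.default.parallelism`. The sketch below encodes, in plain Scala, the defaults the Spark configuration documentation gives for that setting when an operation such as `parallelize` receives no explicit count: the number of local cores in local mode, and the total number of executor cores or 2, whichever is larger, on most cluster managers. `ClusterMode`, `Local` and `Distributed` are hypothetical names. The default can be overridden per call, e.g. `sc.parallelize(data, numSlices)` or `sc.textFile(path, minPartitions)`, or globally by setting `spark.default.parallelism`.

```scala
// Plain-Scala sketch of the documented defaults for spark.default.parallelism, which
// operations such as parallelize use when no partition count is given.
// ClusterMode, Local and Distributed are hypothetical names for this illustration.
object DefaultParallelismDemo {
  sealed trait ClusterMode
  case object Local extends ClusterMode       // e.g. master = local[*]
  case object Distributed extends ClusterMode // e.g. standalone or YARN

  def defaultParallelism(mode: ClusterMode, totalCores: Int): Int = mode match {
    case Local       => totalCores              // one partition per local core
    case Distributed => math.max(totalCores, 2) // total executor cores, at least 2
  }

  def main(args: Array[String]): Unit = {
    val cores = Runtime.getRuntime.availableProcessors()
    println(s"local[*] on this machine: ${defaultParallelism(Local, cores)} partitions")
    println(s"cluster with 3 executors x 4 cores: ${defaultParallelism(Distributed, 12)}") // 12
    println(s"cluster with a single core: ${defaultParallelism(Distributed, 1)}")         // 2
  }
}
```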

- 15. Shard allocation: Partition configuration - defining partition size. Default settings: ● mapreduce.input.fileinputformat.split.minsize = 1 byte (minSize) ● dfs.block.size = 128 MB (cluster) / fs.local.block.size = 32 MB (local) (blockSize). Calculating the goal size, e.g.: ● Total size of input files = T = 599 MB ● Desired number of partitions = P = 30 (parameter) ● Partition goal size = PGS = T / P = 599 MB / 30 ≈ 19.97 MB (about 19 MB when truncated). Result (local mode): Math.max(minSize, Math.min(PGS, blockSize)) = Math.max(1 byte, Math.min(≈19.97 MB, 32 MB)) ≈ 19.97 MB.
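The same arithmetic can be checked in code. This sketch mirrors the split-size rule of Hadoop's old `mapred` `FileInputFormat`, which `sc.textFile` relies on, with every size in bytes so that the 1-byte `minSize` and the megabyte values share one unit. It assumes a single 599 MB input file and the 32 MB local block size; the split-counting loop follows Hadoop's rule that the last split may be up to 10% larger than the others (`SPLIT_SLOP = 1.1`). `SplitSizeDemo` and `countSplits` are hypothetical names.

```scala
// Sketch of Hadoop's (old mapred API) FileInputFormat split sizing, assuming one file:
// splitSize = max(minSize, min(goalSize, blockSize)), goalSize = totalSize / numSplits.
object SplitSizeDemo {
  val MB: Long = 1024L * 1024L
  val SplitSlop = 1.1 // the last split may be up to 10% larger than splitSize

  def computeSplitSize(goalSize: Long, minSize: Long, blockSize: Long): Long =
    math.max(minSize, math.min(goalSize, blockSize))

  // Cuts splitSize-sized splits while more than 1.1 splits' worth of bytes remain
  def countSplits(fileLength: Long, splitSize: Long): Int = {
    var remaining = fileLength
    var splits = 0
    while (remaining.toDouble / splitSize > SplitSlop) {
      splits += 1
      remaining -= splitSize
    }
    if (remaining != 0) splits += 1 // whatever is left becomes the final split
    splits
  }

  def main(args: Array[String]): Unit = {
    val totalSize = 599 * MB
    val desiredPartitions = 30
    val goalSize = totalSize / desiredPartitions
    val splitSize = computeSplitSize(goalSize, minSize = 1L, blockSize = 32 * MB)
    println(s"split size: $splitSize bytes")                 // split size: 20936567 bytes
    println(s"splits: ${countSplits(totalSize, splitSize)}") // splits: 30
  }
}
```

Here the goal size (about 19.97 MB) stays below the block size, so it becomes the split size and the file is read as 30 partitions. Asking for fewer partitions, say 10, would push the goal size above 32 MB, and the block size would then cap each split.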

- 16. Shard allocation: Trade-offs. Fewer partitions: ● more data in each partition ● less network and disk I/O ● faster access to data ● increased memory pressure ● poor use of parallelism (cores may sit idle). More partitions: ● increased processing parallelism ● less data in each partition ● more network and disk I/O

- 17. Shard allocation Example - Cases - auxiliary function

- 18. Shard allocation Example - Case 1 Correctly distributed between 8 partitions

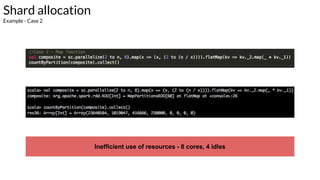

- 19. Shard allocation Example - Case 2: Inefficient use of resources - 8 cores, 4 idle

- 20. Shard allocation Example - Case 1 - explanation: 2.000.000 / 8 = 250.000 numbers per partition. Range partition: [0] -> 2 - 250.000; [1] -> 250.001 - 500.000; [2] -> 500.001 - 750.000; [3] -> 750.001 - 1.000.000; [4] -> 1.000.001 - 1.250.000; [5] -> 1.250.001 - 1.500.000; [6] -> 1.500.001 - 1.750.000; [7] -> 1.750.001 - 2.000.000
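These boundaries correspond to contiguous slicing of the input. Assuming the input was created with something like `sc.parallelize(2 to 2000000, 8)` (the slides' own code is not recoverable here), the sketch below reproduces in plain Scala the index arithmetic that, as far as we know, Spark's `ParallelCollectionRDD` uses to cut a collection into slices. `ParallelizeSlices`, `positions` and `sliceBounds` are hypothetical names. This is slicing by position, not Spark's `RangePartitioner`, which samples keys to pick boundaries.

```scala
// Sketch of the index arithmetic Spark's ParallelCollectionRDD appears to use when
// parallelize slices a collection: slice i covers positions
// [i * length / numSlices, (i + 1) * length / numSlices).
// Assumes the input was built with something like sc.parallelize(2 to 2000000, 8).
object ParallelizeSlices {
  def positions(length: Long, numSlices: Int): Seq[(Int, Int)] =
    (0 until numSlices).map { i =>
      val start = ((i * length) / numSlices).toInt
      val end = (((i + 1) * length) / numSlices).toInt
      (start, end) // end is exclusive
    }

  // First and last value held by each slice of a Range
  def sliceBounds(range: Range, numSlices: Int): Seq[(Int, Int)] =
    positions(range.length.toLong, numSlices).map { case (start, end) =>
      (range(start), range(end - 1))
    }

  def main(args: Array[String]): Unit =
    sliceBounds(2 to 2000000, 8).zipWithIndex.foreach { case ((first, last), i) =>
      println(s"[$i] -> $first - $last") // [0] -> 2 - 250000 ... [7] -> 1750001 - 2000000
    }
}
```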

- 21. Shard allocation Example - Case 2 - explanation: the input is the numbers up to 2.000.000. map() turned each number into a (key, value) pair, where the value was the list of all integers the key had to be multiplied by to find its multiples up to 2 million, e.g.: (2, Range(2, 3, 4, 5, 6, 7, 8, 9, 10, ..., 159, ...)) ... (200013, Range(2, 3, 4, 5, 6, 7, 8, 9)). For half of the keys (all keys greater than 1 million) the value was an empty list. Those keys are exactly the ones in partitions [4] to [7] of Case 1, so four of the eight partitions had no multiples to produce, which matches the four idle cores.
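The imbalance can be reproduced without Spark. The sketch below keeps the eight contiguous slices of 2 to 2.000.000 and counts, per partition, how many multiples the (key, value) pairs generate, taking the value for key x to be `2 to n / x` as in the examples above. `SkewDemo`, `n`, `multiplesCount` and `workPerPartition` are hypothetical names, and the count only stands in for the work a task would do.

```scala
// Toy reproduction of Case 2 without Spark: keep the contiguous slicing of 2..2000000
// into 8 partitions and count how many multiples each partition's (key, value) pairs
// produce, where the value for key x is taken to be (2 to n / x).
object SkewDemo {
  val n = 2000000
  val numSlices = 8

  // Size of (2 to n / x): zero once x > n / 2, i.e. for every key above 1 million
  def multiplesCount(x: Int): Long = math.max(0, n / x - 1).toLong

  def workPerPartition: Seq[Long] = {
    val keys = 2 to n
    val length = keys.length.toLong
    (0 until numSlices).map { i =>
      val start = ((i * length) / numSlices).toInt
      val end = (((i + 1) * length) / numSlices).toInt
      (start until end).iterator.map(idx => multiplesCount(keys(idx))).sum
    }
  }

  def main(args: Array[String]): Unit =
    workPerPartition.zipWithIndex.foreach { case (work, i) =>
      println(s"[$i] -> $work multiples") // [4] to [7] print 0
    }
}
```

Partitions [4] to [7] produce nothing, while partition [0] does by far the most work, so any stage over this data keeps only a few cores busy.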

- 22. Shard allocation Example - Case 3 - fixing it using repartition: repartition shuffles the data, so it is again correctly distributed between 8 partitions.
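In Spark, `repartition(n)` is `coalesce(n, shuffle = true)`: each input partition hands its elements round-robin to the n output partitions, starting at a pseudo-random position, and the shuffle moves them there. The plain-Scala sketch below models only that dealing step. To keep the output deterministic it starts each input partition at its own index instead of a random position, and the skewed partition sizes are made up for illustration; `RoundRobinRepartition` is a hypothetical name.

```scala
import scala.collection.mutable.ArrayBuffer

// Simplified model of repartition(n), i.e. coalesce(n, shuffle = true): every input
// partition deals its elements round-robin over the n output partitions. Spark starts
// each input partition at a pseudo-random position; this sketch starts at the input
// partition's index so that the result is deterministic.
object RoundRobinRepartition {
  def repartition[T](input: Seq[Seq[T]], numPartitions: Int): Vector[Vector[T]] = {
    val buckets = Vector.fill(numPartitions)(ArrayBuffer.empty[T])
    for ((part, index) <- input.zipWithIndex; (elem, j) <- part.zipWithIndex)
      buckets((index + j) % numPartitions) += elem
    buckets.map(_.toVector)
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical skewed sizes: four busy partitions, four empty ones
    val skewed = Seq(100, 50, 20, 10, 0, 0, 0, 0).map(size => Seq.fill(size)("x"))
    val balanced = repartition(skewed, 8)
    println(balanced.map(_.size)) // Vector(22, 23, 24, 24, 23, 22, 21, 21)
  }
}
```

Even though half of the input partitions are empty, every output partition ends up with 21 to 24 elements, at the cost of moving all the data through a shuffle.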