
Apache Spark & Streaming

- 1. Apache Spark. Buenos Aires High Scalability, Buenos Aires, Argentina, Dec 2014. Fernando Rodriguez Olivera, @frodriguez

- 2. Fernando Rodriguez Olivera. Professor at Universidad Austral (Distributed Systems, Compiler Design, Operating Systems, …). Creator of mvnrepository.com. Organizer at Buenos Aires High Scalability Group. Professor at nosqlessentials.com. Twitter: @frodriguez

- 3. Apache Spark. Apache Spark is a fast and general engine for large-scale data processing. In-memory computing primitives. Support for batch, interactive, iterative and stream processing with a unified API.

- 4. Apache Spark. Unified API for multiple kinds of processing: batch (high throughput), interactive (low latency), stream (continuous processing), iterative (results used immediately).

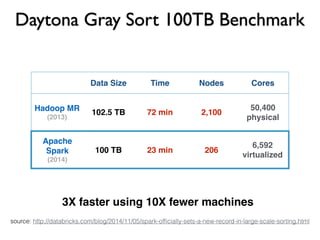

- 5. Daytona Gray Sort 100TB Benchmark

  | | Data Size | Time | Nodes | Cores |
  |---|---|---|---|---|
  | Hadoop MR (2013) | 102.5 TB | 72 min | 2,100 | 50,400 physical |
  | Apache Spark (2014) | 100 TB | 23 min | 206 | 6,592 virtualized |

  Source: http://databricks.com/blog/2014/11/05/spark-officially-sets-a-new-record-in-large-scale-sorting.html

- 6. Daytona Gray Sort 100TB Benchmark. Same figures as slide 5 (Hadoop MR 2013: 102.5 TB in 72 min on 2,100 nodes; Apache Spark 2014: 100 TB in 23 min on 206 nodes), with the conclusion: 3X faster using 10X fewer machines.

- 7. Hadoop vs Spark for Iterative Proc. Logistic regression in Hadoop and Spark. Source: https://spark.apache.org/

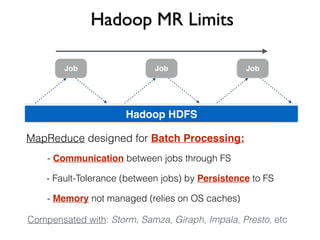

- 8. Hadoop MR Limits. Diagram: a chain of jobs on top of Hadoop HDFS. MapReduce was designed for batch processing: communication between jobs goes through the file system; fault tolerance (between jobs) relies on persistence to the file system; memory is not managed (it relies on OS caches). Compensated with: Storm, Samza, Giraph, Impala, Presto, etc.

- 9. Apache Spark. Components: Apache Spark (Core), Spark SQL, Spark Streaming, MLlib, GraphX. Powered by Scala and Akka. APIs for Java, Scala, Python.

- 10. Resilient Distributed Datasets (RDD). Diagram: an RDD of Strings ("Hello World", "A New Line", "hello", "The End", ...). Immutable collection of objects.

- 11. Resilient Distributed Datasets (RDD). Same diagram, adding: partitioned and distributed.

- 12. Resilient Distributed Datasets (RDD). Same diagram, adding: stored in memory.

- 13. Resilient Distributed Datasets (RDD). Same diagram, adding: partitions recomputed on failure.

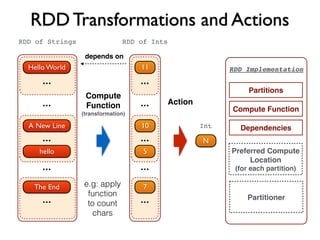

- 14. RDD Transformations and Actions. Diagram: an RDD of Strings ("Hello World", "A New Line", "hello", "The End", ...).

- 15. RDD Transformations and Actions. Adds a compute function (transformation) applied to the RDD of Strings, e.g. a function that counts chars.

- 16. RDD Transformations and Actions. The transformation produces an RDD of Ints (11, 10, 5, 7, ...).

- 17. RDD Transformations and Actions. The RDD of Ints depends on the RDD of Strings.

- 18. RDD Transformations and Actions. An action on the RDD of Ints returns a value (Int N).

- 19. RDD Transformations and Actions. RDD implementation: partitions, compute function, dependencies, preferred compute location (for each partition), partitioner.
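The pieces named on slides 14 to 19 (partitions, a compute function, a dependency on a parent, transformations versus actions) can be modeled in a few lines. The following is a minimal single-process sketch in plain Python, not Spark's API: the class `MiniRDD` and its methods are hypothetical names chosen for illustration, and preferred locations and partitioners are left out.

```python
class MiniRDD:
    """Toy model of an RDD: partitions + compute function + parent dependency."""

    def __init__(self, partitions=None, parent=None, fn=None):
        self._partitions = partitions  # only set on a source dataset
        self.parent = parent           # dependency (one link of the lineage)
        self.fn = fn                   # compute function, applied per partition

    def num_partitions(self):
        if self.parent is None:
            return len(self._partitions)
        return self.parent.num_partitions()

    def map(self, f):
        # Transformation: returns a new MiniRDD and computes nothing yet.
        return MiniRDD(parent=self, fn=lambda part: [f(x) for x in part])

    def compute(self, i):
        # Rebuild partition i by walking the lineage back to the source.
        # The same walk is what makes recomputation after a failure possible.
        if self.parent is None:
            return list(self._partitions[i])
        return self.fn(self.parent.compute(i))

    def count(self):
        # Action: forces the computation of every partition.
        return sum(len(self.compute(i)) for i in range(self.num_partitions()))


lines = MiniRDD(partitions=[["Hello World", "A New Line"], ["hello", "The End"]])
lengths = lines.map(len)      # "RDD of Ints" that depends on `lines`
print(lengths.compute(0))     # [11, 10]
print(lengths.compute(1))     # [5, 7]
print(lengths.count())        # 4
```

Nothing is evaluated when `map` is called; work only happens inside `compute`, which is triggered here by the `count` action.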

- 20. Spark API
  - Scala: `val spark = new SparkContext()`; `val lines = spark.textFile("hdfs://docs/")` (RDD[String]); `val nonEmpty = lines.filter(l => l.nonEmpty)` (RDD[String]); `val count = nonEmpty.count`
  - Java 8: `JavaSparkContext spark = new JavaSparkContext();` `JavaRDD<String> lines = spark.textFile("hdfs://docs/");` `JavaRDD<String> nonEmpty = lines.filter(l -> l.length() > 0);` `long count = nonEmpty.count();`
  - Python: `spark = SparkContext()`; `lines = spark.textFile("hdfs://docs/")`; `nonEmpty = lines.filter(lambda line: len(line) > 0)`; `count = nonEmpty.count()`

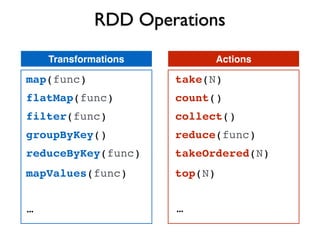

- 21. RDD Operations. Transformations: map(func), flatMap(func), filter(func), groupByKey(), reduceByKey(func), mapValues(func), … Actions: take(N), count(), collect(), reduce(func), takeOrdered(N), top(N), …

- 22. Text Processing Example Top Words by Frequency (Step by step)

- 23. Create RDD from External Data. Diagram: Apache Spark on top of the Hadoop FileSystem, I/O formats and codecs, reaching HDFS, S3, HBase, MongoDB, Cassandra, ElasticSearch, … Spark can read/write from any data source supported by Hadoop. I/O via Hadoop is optional (e.g. the Cassandra connector bypasses Hadoop). Step 1, create an RDD from a Hadoop text file: `val docs = spark.textFile("/docs/")`

- 24. Function map. `.map(line => line.toLowerCase)` turns an RDD[String] ("Hello World", "A New Line", "hello", ..., "The end") into an RDD[String] ("hello world", "a new line", "hello", ..., "the end"). Equivalent short form: `.map(_.toLowerCase)`. Step 2, convert lines to lower case: `val lower = docs.map(line => line.toLowerCase)`

- 25. Functions map and flatMap. Input: RDD[String] ("hello world", "a new line", "hello", ..., "the end").

- 26. Functions map and flatMap. `.map(_.split("\\s+"))` yields an RDD[Array[String]]: ["hello", "world"], ["a", "new", "line"], ["hello"], ..., ["the", "end"].

- 27. Functions map and flatMap. `.flatten` (*) on the RDD[Array[String]] yields an RDD[String]: "hello", "world", "a", "new", "line", ...

- 28. Functions map and flatMap. `.flatMap(line => line.split("\\s+"))` goes from the RDD[String] of lines to the RDD[String] of words in one step, equivalent to `.map( … )` followed by `.flatten` (*).

- 29. Functions map and flatMap. Step 3, split lines into words: `val words = lower.flatMap(line => line.split("\\s+"))`. (*) Note: flatten() is not available in Spark, only flatMap.
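The relationship shown on slides 25 to 29 (flatMap gives the same result as map followed by flatten) can be checked locally. This is a plain-Python sketch over a list, not Spark code; `flat_map` is a hypothetical helper written for the illustration.

```python
import re
from itertools import chain

lower = ["hello world", "a new line", "hello", "the end"]

# map: exactly one output element per input element (a list of words per line)
mapped = [re.split(r"\s+", line) for line in lower]

# flatten: concatenate the per-line lists into one list of words
flattened = list(chain.from_iterable(mapped))


def flat_map(f, items):
    """Apply f to each item and concatenate the resulting sequences."""
    return [y for x in items for y in f(x)]


# flatMap: the same result in a single step
words = flat_map(lambda line: re.split(r"\s+", line), lower)

print(mapped[1])           # ['a', 'new', 'line']
print(words == flattened)  # True
```

Note the regular expression is `\s+` (one or more whitespace characters); in a Scala string literal it is written `"\\s+"`.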

- 30. Key-Value Pairs. `.map(word => Tuple2(word, 1))` turns the RDD[String] of words into an RDD[Tuple2[String, Int]], also written RDD[(String, Int)], a pair RDD: ("hello", 1), ("world", 1), ("a", 1), ("new", 1), ("line", 1), ("hello", 1), ... Equivalent short form: `.map(word => (word, 1))`. Step 4, create key-value pairs: `val counts = words.map(word => (word, 1))`

- 31. Shuffling. Input: RDD[(String, Int)] with ("hello", 1), ("world", 1), ("a", 1), ("new", 1), ("line", 1), ("hello", 1).

- 32. Shuffling. `.groupByKey` yields an RDD[(String, Iterable[Int])]: ("world", [1]), ("a", [1]), ("new", [1]), ("line", [1]), ("hello", [1, 1]).

- 33. Shuffling. `.mapValues(_.reduce((a, b) => a + b))` on the grouped RDD yields an RDD[(String, Int)]: ("world", 1), ("a", 1), ("new", 1), ("line", 1), ("hello", 2).

- 34. Shuffling. `.reduceByKey((a, b) => a + b)` goes from the input RDD[(String, Int)] to the same RDD[(String, Int)] result in one step.

- 35. Shuffling. Step 5, count all words: `val freq = counts.reduceByKey(_ + _)`
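The two routes to the word frequencies on slides 31 to 35 can be compared on a local list. This is a plain-Python sketch of the semantics only (no partitions, no network shuffle); `group_by_key` and `reduce_by_key` are hypothetical helpers, not Spark functions.

```python
from collections import defaultdict
from functools import reduce

counts = [("hello", 1), ("world", 1), ("a", 1),
          ("new", 1), ("line", 1), ("hello", 1)]


def group_by_key(pairs):
    """Collect every value of a key into a list (the groupByKey shape)."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def reduce_by_key(f, pairs):
    """Fold values into one accumulator per key, never building the lists."""
    acc = {}
    for key, value in pairs:
        acc[key] = f(acc[key], value) if key in acc else value
    return acc


# Route 1: groupByKey, then mapValues(_.reduce(...))
grouped = group_by_key(counts)
via_group = {k: reduce(lambda a, b: a + b, vs) for k, vs in grouped.items()}

# Route 2: reduceByKey in one step
freq = reduce_by_key(lambda a, b: a + b, counts)

print(grouped["hello"])   # [1, 1]
print(freq["hello"])      # 2
print(via_group == freq)  # True
```

Because `reduce_by_key` only keeps one running value per key, the same idea lets Spark combine values inside each partition before any data is shuffled, which is why the deck prefers `reduceByKey` for counting.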

- 36. Top N (Prepare data). `.map(_.swap)` turns the RDD[(String, Int)] into an RDD[(Int, String)]: (1, "world"), (1, "a"), (1, "new"), (1, "line"), (2, "hello"). Step 6, swap tuples (partial code): `freq.map(_.swap)`

- 37. Top N (First Attempt). Input: RDD[(Int, String)] with (1, "world"), (1, "a"), (1, "new"), (1, "line"), (2, "hello").

- 38. Top N (First Attempt). `.sortByKey` yields an RDD[(Int, String)] ordered by count (use `sortByKey(false)` for descending): (2, "hello") first, followed by the entries with count 1.

- 39. Top N (First Attempt). `.sortByKey` followed by `.take(N)` returns an Array[(Int, String)]: (2, "hello"), (1, "world").

- 40. Top N. `.top(N)` computes a local top N (*) in each partition, then a reduction merges the local results into an Array[(Int, String)]: (2, "hello"), (1, "line"). (*) Local top N is implemented by bounded priority queues. Step 6, swap tuples (complete code): `val top = freq.map(_.swap).top(N)`
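Slide 40 describes `top(N)` as a local top N per partition, kept in a bounded priority queue, followed by a reduction. A minimal plain-Python sketch of that idea follows; it is not Spark's implementation, and `local_top_n`, `top` and the two-partition layout are assumptions made for the example.

```python
import heapq


def local_top_n(partition, n):
    """Keep the n largest items of one partition in a min-heap of size <= n."""
    heap = []
    for item in partition:
        if len(heap) < n:
            heapq.heappush(heap, item)
        elif item > heap[0]:
            # The smallest kept item is evicted, so the heap never grows.
            heapq.heapreplace(heap, item)
    return heap


def top(partitions, n):
    """Reduction step: merge the local winners and keep the global top n."""
    candidates = [x for part in partitions for x in local_top_n(part, n)]
    return sorted(candidates, reverse=True)[:n]


# (count, word) pairs, as produced by freq.map(_.swap), split in two partitions
partitions = [
    [(1, "world"), (1, "a"), (1, "new")],
    [(1, "line"), (2, "hello")],
]
print(top(partitions, 2))
```

Each partition contributes at most N candidates, so the final merge handles N times the number of partitions instead of the whole dataset, and no full sort is needed. Ties between equal counts are broken here by tuple ordering on the word, which may differ from the order drawn on the slide.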

- 41. Top Words by Frequency (Full Code)
  - `val spark = new SparkContext()`
  - RDD creation from external data source: `val docs = spark.textFile("hdfs://docs/")`
  - Split lines into words: `val lower = docs.map(line => line.toLowerCase)`; `val words = lower.flatMap(line => line.split("\\s+"))`; `val counts = words.map(word => (word, 1))`
  - Count all words (automatic combination): `val freq = counts.reduceByKey(_ + _)`
  - Swap tuples and get top results: `val top = freq.map(_.swap).top(N)`; `top.foreach(println)`

- 42. RDD Persistence (in-memory). `.cache()` (memory only), `.persist()` (memory only), `.persist(storageLevel)`. StorageLevel: MEMORY_ONLY, MEMORY_ONLY_SER, MEMORY_AND_DISK, MEMORY_AND_DISK_SER, DISK_ONLY, … Persistence and caching are lazy.
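The "lazy persistence & caching" remark on slide 42 means that marking a dataset as cached computes nothing; the data is materialized by the first action and reused afterwards. A toy plain-Python sketch of that behavior, with a hypothetical `CachedDataset` class that only mimics the memory-only case:

```python
class CachedDataset:
    """Toy model of .cache(): declared now, materialized by the first action."""

    def __init__(self, compute):
        self._compute = compute   # how to (re)build the data
        self._data = None         # filled on first use
        self.computations = 0     # how many times the data was actually built

    def collect(self):
        # Action: builds the data once, then serves it from memory.
        if self._data is None:
            self.computations += 1
            self._data = self._compute()
        return self._data


lower = CachedDataset(lambda: [s.lower() for s in ["Hello World", "The End"]])
print(lower.computations)  # 0: declaring the cache did no work

first = lower.collect()
second = lower.collect()
print(first)               # ['hello world', 'the end']
print(lower.computations)  # 1: the second action reused the cached data
```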

- 43. RDD Lineage. RDD transformations: `words = sc.textFile("hdfs://large/file/")` (HadoopRDD) `.map(_.toLowerCase)` (MappedRDD) `.flatMap(_.split(" "))` (FlatMappedRDD); `nums = words.filter(_.matches("[0-9]+"))` (FilteredRDD); `alpha = words.filter(_.matches("[a-z]+"))` (FilteredRDD). The lineage is built on the driver by the transformations. Action (runs a job on the cluster): `alpha.count()`

- 44. SchemaRDD & SQL. A SchemaRDD is an RDD of Row plus column metadata. Queries with SQL. Support for reflection, JSON, Parquet, …

- 45. SchemaRDD & SQL. `case class Word(text: String, n: Int)`; `val wordsFreq = freq.map { case (text, count) => Word(text, count) }` (RDD[Word]); `wordsFreq.registerTempTable("wordsFreq")`; `val topWords = sql("select text, n from wordsFreq order by n desc limit 20")` (RDD[Row]); `topWords.collect().foreach(println)`

- 46. Spark Streaming. A DStream is a sequence of RDDs. Data is collected, buffered and replicated by a receiver (one per DStream), then pushed to a stream as small RDDs. Batch intervals are configurable, e.g. 1 second, 5 seconds, 5 minutes. Receivers: e.g. Kafka, Kinesis, Flume, sockets, Akka, etc.
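The micro-batch model on slide 46 (incoming data cut into small datasets, one per batch interval) can be sketched with timestamps and a list. This is plain Python, not the Spark Streaming API; `to_batches` and the `(timestamp, value)` event format are assumptions made for the illustration, and intervals with no data are simply skipped here.

```python
from collections import defaultdict


def to_batches(events, batch_interval):
    """Group (timestamp_seconds, value) events into fixed-interval batches."""
    batches = defaultdict(list)
    for ts, value in events:
        # Events whose timestamps fall in the same interval share a batch.
        batches[int(ts // batch_interval)].append(value)
    return [batches[i] for i in sorted(batches)]


events = [(0.2, "a"), (0.9, "b"), (1.1, "c"), (2.5, "d"), (2.7, "e")]

print(to_batches(events, batch_interval=1))  # [['a', 'b'], ['c'], ['d', 'e']]
print(to_batches(events, batch_interval=5))  # [['a', 'b', 'c', 'd', 'e']]
```

A shorter interval lowers latency but produces more, smaller batches; a longer one does the opposite.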

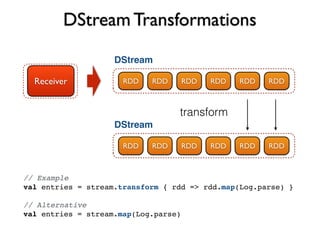

- 47. DStream Transformations. `transform` applies a function to each RDD of a DStream and yields a new DStream. Example: `val entries = stream.transform { rdd => rdd.map(Log.parse) }`. Alternative: `val entries = stream.map(Log.parse)`

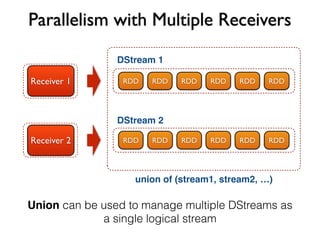

- 48. Parallelism with Multiple Receivers. Diagram: Receiver 1 feeds DStream 1 and Receiver 2 feeds DStream 2, combined as the union of (stream1, stream2, …). Union can be used to manage multiple DStreams as a single logical stream.

- 49. Sliding Windows. Diagram: windows W1, W2, W3 over the RDDs of a DStream fed by a receiver, producing a new DStream. Window length: 3, sliding interval: 1.
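The window on slide 49 (length 3, sliding interval 1, both counted in batches) can be sketched over a list of batches. This is a plain-Python illustration rather than the Spark Streaming API; `sliding_windows` is a hypothetical helper and the batch contents are made up.

```python
def sliding_windows(batches, window_length, slide_interval):
    """Merge the last `window_length` batches, advancing `slide_interval` at a time."""
    windows = []
    for end in range(window_length, len(batches) + 1, slide_interval):
        recent = batches[end - window_length:end]
        windows.append([x for batch in recent for x in batch])
    return windows


# Five consecutive batches, oldest first
batches = [[1], [2, 3], [4], [5], [6, 7]]

for window in sliding_windows(batches, window_length=3, slide_interval=1):
    print(window)
# [1, 2, 3, 4]
# [2, 3, 4, 5]
# [4, 5, 6, 7]
```

With a sliding interval of 1, consecutive windows share two of their three batches, which is the overlap drawn as W1, W2, W3 on the slide.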

- 50. Deployment with Hadoop. Diagram: the client submits the app (mode=cluster); the Spark Master allocates resources (cores and memory); Spark Workers run on Data Nodes 1 to 4 (DN + Spark) and host the driver and the executors of the application; the Name Node and Data Nodes hold /large/file as blocks A, B, C, D with RF 3.

- 51. Fernando Rodriguez Olivera twitter: @frodriguez
