Artificial Neural Networks-Supervised Learning Models

- 1. Soft Computing: Artificial Neural Networks Dr. Baljit Singh Khehra Professor CSE Department Baba Banda Singh Bahadur Engineering College Fatehgarh Sahib-140407, Punjab, India

- 2. Soft Computing: Soft computing is a relatively new field for building the next generation of AI, known as computational intelligence; its goal is to build intelligent machines. Hard computing requires a precisely stated analytical model and often a lot of computation time. Many analytical models are valid only for ideal cases, whereas real-world problems exist in a non-ideal environment. Soft computing is a collection of methodologies that exploit the tolerance for imprecision and uncertainty to achieve tractability, robustness, and low solution cost. The role model for soft computing is the human mind.

- 3. Soft Computing Techniques: Soft computing is defined as a collection of techniques, spanning many fields, that fall under various categories of computational intelligence. It has three main branches:
  - Artificial Neural Networks (ANNs)
  - Fuzzy logic: handles uncertainty (partial information about the problem, unreliable information, or conflicting information from more than one source)
  - Evolutionary computing: optimization algorithms such as the Genetic Algorithm (GA), Ant Colony Optimization (ACO), Biogeography-Based Optimization (BBO), the bacterial foraging optimization algorithm, the gravitational search algorithm, the cuckoo optimization algorithm, Teaching-Learning-Based Optimization (TLBO), and Big Bang-Big Crunch Optimization (BBBCO)

- 4. Neural Networks (NNs): In everyday terms, a network is a group of interconnected people who interact with each other to exchange information. A computer network (CN) is a group of two or more computer systems linked together to exchange information. A neural network is, likewise, a network of neurons. Neurons are the cells in the brain that convey information about the world around us. A human brain has about 86 billion neurons of different kinds. The popular claim that we use only 10% of them is a myth.

- 5. Comparison b/w Real & Artificial Neurons

- 6. Artificial Neural Networks (ANNs): ANNs aim to simulate the behavior of the human brain by mimicking the information-processing capability of the human nervous system. They are computational (mathematical) models of the human brain based on the following assumptions:
  - Information processing occurs at many simple elements called neurons.
  - Signals are passed between neurons over connection links.
  - Each connection link has an associated weight.
  - The output of each neuron is obtained by passing its net input through an activation function.

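- 7. A Simple Artificial Neural Network: The activation function is the binary sigmoid function. The net input is $y_{in} = \sum_{i=1}^{3} x_i w_i = x_1 w_1 + x_2 w_2 + x_3 w_3$, the activation function is $f(x) = \frac{1}{1 + e^{-x}}$, and the output is $y = f(y_{in}) = \frac{1}{1 + e^{-y_{in}}}$.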
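The single-neuron computation on this slide can be sketched in a few lines of Python. This is a minimal illustration, not the author's code: the function names and the input and weight values are assumptions chosen so that the net input comes out to zero.

```python
import math

def binary_sigmoid(x):
    """Binary (unipolar) sigmoid: f(x) = 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron_output(inputs, weights):
    """Net input y_in = sum of x_i * w_i, then output y = f(y_in)."""
    y_in = sum(x * w for x, w in zip(inputs, weights))
    return binary_sigmoid(y_in)

# Hypothetical inputs and weights, chosen only for illustration.
x = [1.0, 0.5, -1.0]
w = [0.2, 0.4, 0.4]

# y_in = 0.2 + 0.2 - 0.4 = 0, and the sigmoid of 0 is 0.5.
y = neuron_output(x, w)
print(y)
```

Because the sigmoid maps any net input into the open interval (0, 1), the output can be read as a graded activation rather than a hard decision.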

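- 8. A Simple Artificial Neural Network with Multiple Layers: Each ANN is composed of a collection of neurons grouped in layers. Note the three layers: input, intermediate (called the hidden layer), and output. Several hidden layers can be placed between the input and output layers. With three inputs and two hidden units, the net input of hidden unit $j$ is $z_{inj} = \sum_{i=1}^{3} x_i w_{ij}$ and its output is $z_j = f(z_{inj})$; the net input of the output unit is $y_{in} = \sum_{j=1}^{2} z_j v_j$ and the network output is $y = f(y_{in})$, where $f(x) = \frac{1}{1 + e^{-x}}$.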
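A forward pass through the three-input, two-hidden-unit, one-output network can be sketched as follows. The layout `w[i][j]` (input `i` to hidden unit `j`) and `v[j]` (hidden unit `j` to output) follows the slide's symbols; the function name and the weight values are assumptions for illustration only.

```python
import math

def sigmoid(x):
    """Binary sigmoid f(x) = 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

def forward(x, w, v):
    """Forward pass: inputs -> hidden layer -> single output unit.

    x: list of inputs, w[i][j]: weight from input i to hidden unit j,
    v[j]: weight from hidden unit j to the output unit.
    """
    z = []
    for j in range(len(v)):
        z_in_j = sum(x[i] * w[i][j] for i in range(len(x)))  # net input of hidden unit j
        z.append(sigmoid(z_in_j))                            # z_j = f(z_inj)
    y_in = sum(z[j] * v[j] for j in range(len(v)))           # net input of output unit
    return sigmoid(y_in), z                                  # y = f(y_in)

# Hypothetical weights: all-zero hidden weights give z = [0.5, 0.5],
# and v = [1, -1] then cancels them, so y_in = 0 and y = 0.5.
x = [1.0, 2.0, 3.0]
w = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
v = [1.0, -1.0]
y, z = forward(x, w, v)
print(y, z)
```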

- 9. Artificial Neural Networks (ANNs): An ANN is characterized by its pattern of connections between neurons (its architecture), its method of determining the weights on the connections (its training or learning algorithm), and its activation function. Features of ANNs: adaptive learning; self-organization; real-time operation; fault tolerance via redundant information coding; local information processing; and distributed memory (long term: the weights; short term: the signals sent).

- 10. Advantages of ANNs: lower interpolation error; good extrapolation capabilities; generalization ability; fast response time in the operational phase; freedom from numerical instability; learning rather than programming; a parallel approach; distributed memory; intelligent behavior; the capability to operate on a multivariate, noisy, or error-prone training data set; and the capability to model non-linear characteristics.

- 11. Applications of ANNs: designing fuzzy logic controllers; parameter estimation for nonlinear systems; optimization methods in real-time traffic control; power system identification and control; power load forecasting; weather forecasting; solving NP-hard problems; VLSI design; learning the topology and weights of neural networks; performance enhancement of neural networks; distributed database design; allocation and scheduling on multi-computers; signature verification; computer-assisted drug design; computer-aided disease diagnosis; CPU job scheduling; pattern recognition; speech recognition; fingerprint recognition; face recognition; character and digit recognition; and signal processing applications in virtual instrumentation systems.

- 12. Basic Building Blocks of ANNs: network architecture, learning algorithms, and activation functions. Network architecture: the arrangement of neurons into layers, together with the pattern of connections within and between layers, is called the architecture of the network. Commonly used network architectures are:

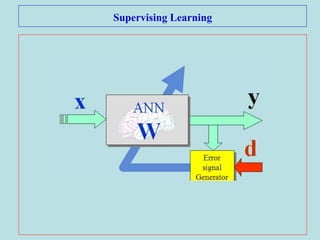

- 13. Learning of ANNs: Learning (training) algorithms are used to set the weights and biases in neural networks. There are two types of learning: supervised and unsupervised. Supervised learning means learning with a teacher, that is, learning by examples from a training set. Examples: Perceptron, ADALINE, MADALINE, Backpropagation, etc.

- 15. Unsupervised Learning: The network is self-organizing. Clustering: form proper clusters by discovering the similarities and dissimilarities among objects. Examples: Kohonen Self-Organizing Map, ART1, ART2, etc.

- 16. Activation Functions: An activation function is used to calculate the output response of a neuron. Various types of activation functions: step function, hard limiter function

- 17. Activation Functions Various types of activation functions Ramp function Unipolar Sigmoid function

- 18. Activation Functions Various types of activation functions Bipolar Sigmoid function
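The formulas for these activation functions did not survive in the transcript, so the sketch below uses common textbook definitions as an assumption. In particular, the choice of returning 1 at exactly zero, and the ramp's saturation range of 0 to 1, are conventions that the original slides may define differently.

```python
import math

def step(x):
    """Binary step: 1 for x >= 0, else 0 (assumed convention at x == 0)."""
    return 1 if x >= 0 else 0

def hard_limiter(x):
    """Bipolar hard limiter: +1 for x >= 0, else -1 (assumed convention)."""
    return 1 if x >= 0 else -1

def ramp(x):
    """Ramp: 0 below 0, linear on [0, 1], saturates at 1 (assumed range)."""
    if x < 0:
        return 0.0
    if x > 1:
        return 1.0
    return x

def unipolar_sigmoid(x):
    """Unipolar (binary) sigmoid with outputs in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def bipolar_sigmoid(x):
    """Bipolar sigmoid with outputs in (-1, 1)."""
    return (1.0 - math.exp(-x)) / (1.0 + math.exp(-x))

for f in (step, hard_limiter, ramp, unipolar_sigmoid, bipolar_sigmoid):
    print(f.__name__, [round(f(x), 3) for x in (-2.0, 0.0, 0.5, 2.0)])
```

The bipolar sigmoid is a rescaled unipolar sigmoid, `2 * unipolar_sigmoid(x) - 1`, which is why the two share the same shape.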

- 19. Rosenblatt's Perceptron: In 1958, Frank Rosenblatt introduced an ANN called the Perceptron, a computational model of the retina of the eye. It has sensory (S), associator (A), and response (R) units. The weights between the S and A units are fixed; the weights between the A and R units are adjusted by the perceptron learning rule. Perceptron learning is supervised. The training algorithm is suitable for either bipolar or binary input with bipolar targets, a fixed threshold, and an adjustable bias.

- 20. Perceptron Training Rule: For each training pattern, the net calculates the response of the output unit and determines whether an error occurred by comparing the calculated output $y$ with the target value $t$. If an error occurred for a particular training pattern ($y \neq t$), the weights and bias are changed according to $w_i(\text{new}) = w_i(\text{old}) + \Delta w_i$ and $b(\text{new}) = b(\text{old}) + \Delta b$, where $\Delta w_i = \alpha t x_i$ and $\Delta b = \alpha t$. Here $t$ is the target output value for the current training example, $y$ is the perceptron output, and $\alpha$ is a small constant (e.g., 0.5) called the learning rate. The role of the learning rate is to moderate the degree to which the weights are changed at each step.

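- 21. Activation Function for Perceptron: The output of the perceptron is given by a step activation function with threshold $\theta$ (called the binary step activation function on the slide): $y = f(y_{in}) = \begin{cases} 1 & \text{if } y_{in} > \theta \\ 0 & \text{if } -\theta \le y_{in} \le \theta \\ -1 & \text{if } y_{in} < -\theta \end{cases}$. A perceptron can only handle tasks that are linearly separable.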

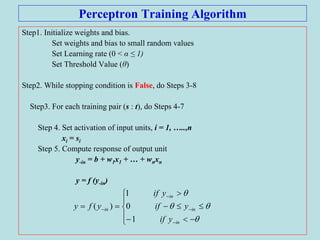

- 22. Perceptron Training Algorithm
  - Step 1. Initialize the weights and bias: set the weights and bias to small random values, set the learning rate $\alpha$ ($0 < \alpha \le 1$), and set the threshold value $\theta$.
  - Step 2. While the stopping condition is false, do Steps 3-7.
  - Step 3. For each training pair $(s : t)$, do Steps 4-6.
  - Step 4. Set the activations of the input units: $x_i = s_i$ for $i = 1, \dots, n$.
  - Step 5. Compute the response of the output unit: $y_{in} = b + w_1 x_1 + \dots + w_n x_n$ and $y = f(y_{in})$, where $f(y_{in}) = 1$ if $y_{in} > \theta$, $0$ if $-\theta \le y_{in} \le \theta$, and $-1$ if $y_{in} < -\theta$.

- 23. Perceptron Training Algorithm (continued)
  - Step 6. Update the weights and bias. If an error occurred for a particular training pattern ($y \neq t$), then $w_i(\text{new}) = w_i(\text{old}) + \Delta w_i$ and $b(\text{new}) = b(\text{old}) + \Delta b$, where $\Delta w_i = \alpha t x_i$ and $\Delta b = \alpha t$; $t$ is the target output value for the current training example, $y$ is the perceptron output, and $\alpha$ is a small constant (e.g., 0.5) called the learning rate. Otherwise, $w_i(\text{new}) = w_i(\text{old})$ and $b(\text{new}) = b(\text{old})$.
  - Step 7. Test the stopping condition.
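The training steps above can be sketched in Python as follows. Two choices here are assumptions, not taken from the slides: the weights start at zero rather than at small random values (so the run is reproducible), and training stops when a full epoch makes no weight change, or after `max_epochs`.

```python
def step(y_in, theta):
    """Three-valued step activation with threshold theta."""
    if y_in > theta:
        return 1
    if y_in < -theta:
        return -1
    return 0

def train_perceptron(samples, targets, alpha=1.0, theta=0.5, max_epochs=100):
    """Perceptron learning rule: on an error, w_i += alpha*t*x_i and b += alpha*t."""
    w = [0.0] * len(samples[0])   # assumption: zero initial weights
    b = 0.0
    epoch = 0
    for epoch in range(1, max_epochs + 1):
        changed = False
        for x, t in zip(samples, targets):
            y_in = b + sum(wi * xi for wi, xi in zip(w, x))
            y = step(y_in, theta)
            if y != t:                      # update only when an error occurred
                w = [wi + alpha * t * xi for wi, xi in zip(w, x)]
                b += alpha * t
                changed = True
        if not changed:                     # assumed stopping condition
            break
    return w, b, epoch

# Bipolar AND function: inputs and targets in {-1, +1}.
samples = [(1, 1), (1, -1), (-1, 1), (-1, -1)]
targets = [1, -1, -1, -1]
w, b, epochs = train_perceptron(samples, targets)
print(w, b, epochs)   # [1.0, 1.0] -1.0 2
```

With these settings the weights settle during the first epoch and the second epoch only confirms that every pattern is classified correctly.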

- 24. Perceptron Testing Algorithm
  - Step 1. Set the weights and bias obtained from the training algorithm, and set the threshold value $\theta$.
  - Step 2. For each input and target $(s : t)$, do Steps 3-5.
  - Step 3. Set the activations of the input units: $x_i = s_i$ for $i = 1, \dots, n$.
  - Step 4. Compute the response of the output unit: $y_{in} = b + w_1 x_1 + \dots + w_n x_n$ and $y = f(y_{in})$, where $f(y_{in}) = 1$ if $y_{in} > \theta$, $0$ if $-\theta \le y_{in} \le \theta$, and $-1$ if $y_{in} < -\theta$.
  - Step 5. Calculate the error $E = t - y$.

- 25. Development of Perceptron for AND Function: With bipolar inputs and targets, the training pairs $(x_1, x_2 : t)$ are $(1, 1 : 1)$, $(1, -1 : -1)$, $(-1, 1 : -1)$, and $(-1, -1 : -1)$.

- 26. Perceptron Training Algorithm for AND function

          x = [1 1 -1 -1; 1 -1 1 -1];   % each column is one bipolar input pattern
          t = [1 -1 -1 -1];             % bipolar AND targets
          w = [0 0];
          b = 0;
          alpha = input('Enter Learning Rate=');
          theta = input('Enter Threshold Value=');
          epoch = 0;
          maxepoch = 100;

- 27. Perceptron Training Algorithm for AND function (continued)

          while epoch < maxepoch
              for i = 1:4
                  % net input: bias plus weighted inputs
                  yin = b + x(1,i)*w(1) + x(2,i)*w(2);
                  if yin > theta
                      y = 1;
                  elseif yin >= -theta
                      y = 0;
                  else
                      y = -1;
                  end
                  if y ~= t(i)          % update only when an error occurred
                      for j = 1:2
                          w(j) = w(j) + alpha*t(i)*x(j,i);
                      end
                      b = b + alpha*t(i);
                  end
              end
              epoch = epoch + 1;
          end

- 28. Perceptron Training Algorithm for AND function (output)

          disp('Perceptron for AND function');
          disp('Final Weight Matrix');
          disp(w);
          disp('Final Bias');
          disp(b);

  OUTPUT: Enter Learning Rate=1, Enter Threshold Value=0.5, Perceptron for AND function, Final Weight Matrix 1 1, Final Bias -1

- 29. Perceptron Testing Algorithm for AND function

          x = [1 1 -1 -1; 1 -1 1 -1];
          w = [1 1];                    % weights obtained from training
          b = -1;                       % bias obtained from training
          theta = 0.5;                  % same threshold as in training
          for i = 1:4
              yin = b + x(1,i)*w(1) + x(2,i)*w(2);
              if yin > theta
                  y(i) = 1;
              elseif yin >= -theta
                  y(i) = 0;
              else
                  y(i) = -1;
              end
          end
          y

  OUTPUT: 1 -1 -1 -1. For each input $(x_1, x_2)$, target and actual output agree: $(1, 1)$: target 1, actual 1; $(1, -1)$: target -1, actual -1; $(-1, 1)$: target -1, actual -1; $(-1, -1)$: target -1, actual -1.

- 30. ADALINE: In 1960, Widrow and Hoff developed ADALINE. It uses bipolar (+1 or -1) activations for its input signals and target output. The weights and bias are updated using the delta rule: $w_i(\text{new}) = w_i(\text{old}) + \Delta w_i$ and $b(\text{new}) = b(\text{old}) + \Delta b$, where $\Delta w_i = \alpha (t - y_{in}) x_i$ and $\Delta b = \alpha (t - y_{in})$. Here $t$ is the target output value for the current training example, $y_{in}$ is the net input of the output unit, and $\alpha$ is the learning rate.

- 31. ADALINE Training Algorithm
  - Step 1. Initialize the weights and bias: set the weights and bias to small random values and set the learning rate $\alpha$ ($0 < \alpha \le 1$).
  - Step 2. While the stopping condition is false, do Steps 3-7.
  - Step 3. For each training pair $(s : t)$, do Steps 4-6.
  - Step 4. Set the activations of the input units: $x_i = s_i$ for $i = 1, \dots, n$.
  - Step 5. Compute the net input of the output unit: $y_{in} = b + w_1 x_1 + \dots + w_n x_n$.
  - Step 6. Update the weights and bias: $w_i(\text{new}) = w_i(\text{old}) + \alpha (t - y_{in}) x_i$ and $b(\text{new}) = b(\text{old}) + \alpha (t - y_{in})$.
  - Step 7. Test the stopping condition.
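A minimal Python sketch of the delta-rule training loop is given below. The zero initial weights, the learning rate of 0.1, and the fixed number of epochs used as the stopping condition are all assumptions made for this illustration; the slides leave these choices open.

```python
def train_adaline(samples, targets, alpha=0.1, epochs=50):
    """Delta rule: after every pattern, w_i += alpha*(t - y_in)*x_i and b += alpha*(t - y_in)."""
    w = [0.0] * len(samples[0])   # assumption: zero initial weights
    b = 0.0
    for _ in range(epochs):       # assumption: fixed epoch count as stopping condition
        for x, t in zip(samples, targets):
            y_in = b + sum(wi * xi for wi, xi in zip(w, x))
            err = t - y_in        # error is measured on the net input, not the thresholded output
            w = [wi + alpha * err * xi for wi, xi in zip(w, x)]
            b += alpha * err
    return w, b

def classify(x, w, b):
    """Bipolar step applied to the net input, as used when testing an ADALINE."""
    y_in = b + sum(wi * xi for wi, xi in zip(w, x))
    return 1 if y_in >= 0 else -1

# Bipolar AND function.
samples = [(1, 1), (1, -1), (-1, 1), (-1, -1)]
targets = [1, -1, -1, -1]
w, b = train_adaline(samples, targets)
print([round(wi, 2) for wi in w], round(b, 2))
print([classify(x, w, b) for x in samples])
```

Unlike the perceptron rule, the delta rule updates the weights on every pattern, so with a constant learning rate the weights keep moving slightly around the least-squares solution instead of freezing once all patterns are classified correctly.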

- 32. ADALINE Testing Algorithm
  - Step 1. Set the weights and bias obtained from the training algorithm.
  - Step 2. For each input and target $(s : t)$, do Steps 3-5.
  - Step 3. Set the activations of the input units: $x_i = s_i$ for $i = 1, \dots, n$.
  - Step 4. Compute the response of the output unit: $y_{in} = b + w_1 x_1 + \dots + w_n x_n$ and $y = f(y_{in})$, where $f(y_{in}) = 1$ if $y_{in} \ge 0$ and $-1$ if $y_{in} < 0$.
  - Step 5. Calculate the error $E = t - y$.

- 33. MADALINE: In 1960, Widrow and Hoff developed MADALINE, which consists of many ADALINEs arranged in a multilayer net. The example here is a MADALINE with two hidden ADALINEs and one output ADALINE. MADALINE uses bipolar (+1 or -1) activations for its input signals and target output. The weights and bias on the output ADALINE are fixed; the weights and biases on the hidden ADALINEs are updated using the Widrow-Hoff rule.

- 34. MADALINE Activation Function: $y = f(y_{in})$, where $f(y_{in}) = 1$ if $y_{in} \ge 0$ and $-1$ if $y_{in} < 0$. The weights and bias on the output ADALINE are fixed: $v_1 = v_2 = b_3 = 0.5$. The weights and biases on the hidden ADALINEs are updated using the Widrow-Hoff rule. If $t = y$, no weights or biases are updated. Otherwise:
  - If $t = 1$, the weights and bias are updated on $z_J$, the unit whose net input is closest to 0: $w_{iJ}(\text{new}) = w_{iJ}(\text{old}) + \alpha (t - z_{inJ}) x_i$ and $b_J(\text{new}) = b_J(\text{old}) + \alpha (t - z_{inJ})$.
  - If $t = -1$, the weights and bias are updated on each unit $z_K$ whose net input is positive: $w_{iK}(\text{new}) = w_{iK}(\text{old}) + \alpha (t - z_{inK}) x_i$ and $b_K(\text{new}) = b_K(\text{old}) + \alpha (t - z_{inK})$.

- 35. MADALINE Training Algorithm
  - Step 1. Initialize the weights and biases: set the weights and bias on the output unit to $v_1 = v_2 = b_3 = 0.5$, set the weights and biases on the hidden ADALINEs to small random values, and set the learning rate $\alpha$ ($0 < \alpha \le 1$).
  - Step 2. While the stopping condition is false, do Steps 3-10.
  - Step 3. For each training pair $(s : t)$, do Steps 4-9.
  - Step 4. Set the activations of the input units: $x_i = s_i$ for $i = 1, \dots, n$.
  - Step 5. Compute the net input of each hidden ADALINE unit: $z_{in1} = b_1 + w_{11} x_1 + w_{21} x_2$ and $z_{in2} = b_2 + w_{12} x_1 + w_{22} x_2$.
  - Step 6. Determine the output of each hidden ADALINE unit: $z_1 = f(z_{in1})$ and $z_2 = f(z_{in2})$.

- 36. MADALINE Training Algorithm (continued)
  - Step 7. Compute the net input of the output ADALINE unit: $y_{in} = b_3 + v_1 z_1 + v_2 z_2$.
  - Step 8. Determine the output of the output ADALINE unit: $y = f(y_{in})$.
  - Step 9. Update the weights and biases using the Widrow-Hoff rule. If $t = y$, no weights or biases are updated. Otherwise, if $t = 1$, update on $z_J$: $w_{iJ}(\text{new}) = w_{iJ}(\text{old}) + \alpha (1 - z_{inJ}) x_i$ and $b_J(\text{new}) = b_J(\text{old}) + \alpha (1 - z_{inJ})$; if $t = -1$, update on $z_K$: $w_{iK}(\text{new}) = w_{iK}(\text{old}) + \alpha (-1 - z_{inK}) x_i$ and $b_K(\text{new}) = b_K(\text{old}) + \alpha (-1 - z_{inK})$.
  - Step 10. Test the stopping condition.

- 37. MADALINE Testing Algorithm
  - Step 1. Set the weights and biases obtained from the training algorithm.
  - Step 2. For each input and target $(s : t)$, do Steps 3-7.
  - Step 3. Set the activations of the input units: $x_i = s_i$ for $i = 1, \dots, n$.
  - Step 4. Compute the net input of each hidden ADALINE unit: $z_{in1} = b_1 + w_{11} x_1 + w_{21} x_2$ and $z_{in2} = b_2 + w_{12} x_1 + w_{22} x_2$.
  - Step 5. Determine the output of each hidden ADALINE unit: $z_1 = f(z_{in1})$ and $z_2 = f(z_{in2})$.
  - Step 6. Compute the response of the output unit: $y_{in} = b_3 + v_1 z_1 + v_2 z_2$ and $y = f(y_{in})$, where $f(y_{in}) = 1$ if $y_{in} \ge 0$ and $-1$ if $y_{in} < 0$.
  - Step 7. Calculate the error $E = t - y$.

- 38. MADALINE Training Algorithm for XOR function: The bipolar XOR training pairs $(s_1, s_2 : t)$ are $(1, 1 : -1)$, $(1, -1 : 1)$, $(-1, 1 : 1)$, and $(-1, -1 : -1)$.
  - Step 1. Initialize: $w_{11} = 0.05$, $w_{21} = 0.2$, $b_1 = 0.3$; $w_{12} = 0.1$, $w_{22} = 0.2$, $b_2 = 0.15$; $v_1 = v_2 = b_3 = 0.5$; $\alpha = 0.5$.
  - Step 2. Begin training; do Steps 3-10.
  - Step 3. For the 1st training pair $(s : t) = (1, 1 : -1)$, do Steps 4-9.
  - Step 4. Set the activations of the input units: $x_i = s_i$, so $x_1 = 1$ and $x_2 = 1$.
  - Step 5. Compute the net input of each hidden ADALINE unit: $z_{in1} = b_1 + w_{11} x_1 + w_{21} x_2 = 0.3 + 0.05 + 0.2 = 0.55$ and $z_{in2} = b_2 + w_{12} x_1 + w_{22} x_2 = 0.15 + 0.1 + 0.2 = 0.45$.
  - Step 6. Determine the output of each hidden ADALINE unit: $z_1 = f(z_{in1}) = 1$ and $z_2 = f(z_{in2}) = 1$.

- 39. MADALINE Training Algorithm for XOR function (continued)
  - Step 7. Compute the net input of the output ADALINE unit: $y_{in} = b_3 + v_1 z_1 + v_2 z_2 = 0.5 + 0.5 + 0.5 = 1.5$.
  - Step 8. Determine the output of the output ADALINE unit: $y = f(y_{in}) = 1$.
  - Step 9. Update the weights and biases, because an error occurred ($t - y = -1 - 1 = -2$). Since $t = -1$, the update is applied to each unit $z_K$ whose net input is positive (here both hidden units): $w_{iK}(\text{new}) = w_{iK}(\text{old}) + \alpha (-1 - z_{inK}) x_i$ and $b_K(\text{new}) = b_K(\text{old}) + \alpha (-1 - z_{inK})$. For the first hidden unit, $b_1(\text{new}) = 0.3 + 0.5(-1 - 0.55) = -0.475$ and $w_{11}(\text{new}) = 0.05 + 0.5(-1 - 0.55)(1) = -0.725$. Similarly, $w_{21}(\text{new}) = -0.575$, $b_2(\text{new}) = -0.575$, $w_{12}(\text{new}) = -0.625$, and $w_{22}(\text{new}) = -0.525$.
  - Step 10. Test the stopping condition.
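The arithmetic of this first training step can be checked with a short Python sketch. The function name `madaline_step` and the nested-list layout `w[i][j]` (input `i` to hidden unit `j`) are assumptions for illustration; the update logic follows the rule stated on the slides, with the output-unit weights held fixed at 0.5.

```python
def bipolar_step(x):
    """f(x) = 1 if x >= 0, else -1."""
    return 1 if x >= 0 else -1

def madaline_step(x, t, w, b, alpha, v=(0.5, 0.5), b3=0.5):
    """One training step for a 2-input, 2-hidden-unit MADALINE; returns new (w, b)."""
    n_hidden = len(b)
    z_in = [b[j] + sum(x[i] * w[i][j] for i in range(len(x))) for j in range(n_hidden)]
    z = [bipolar_step(s) for s in z_in]
    y = bipolar_step(b3 + sum(v[j] * z[j] for j in range(n_hidden)))
    w = [row[:] for row in w]          # copy so the caller's lists are not modified
    b = b[:]
    if y == t:
        return w, b                    # no error: nothing is updated
    if t == 1:
        # update only the hidden unit whose net input is closest to 0
        units = [min(range(n_hidden), key=lambda j: abs(z_in[j]))]
    else:
        # t == -1: update every hidden unit with positive net input
        units = [j for j in range(n_hidden) if z_in[j] > 0]
    for j in units:
        delta = alpha * (t - z_in[j])
        b[j] += delta
        for i in range(len(x)):
            w[i][j] += delta * x[i]
    return w, b

# Initial values from the XOR example; first training pair (1, 1 : -1).
w0 = [[0.05, 0.1], [0.2, 0.2]]         # w0[i][j]: input i -> hidden unit j
b0 = [0.3, 0.15]
w1, b1 = madaline_step([1, 1], -1, w0, b0, alpha=0.5)
print([[round(val, 3) for val in row] for row in w1], [round(val, 3) for val in b1])
# [[-0.725, -0.625], [-0.575, -0.525]] [-0.475, -0.575]
```

Calling `madaline_step` again with the returned weights and the next training pair continues the first epoch in the same way.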

- 40. MADALINE Training Algorithm for XOR function (continued): After the 1st training pair of the 1st iteration, the new weights and biases are $w_{11} = -0.725$, $w_{21} = -0.575$, $b_1 = -0.475$, $w_{12} = -0.625$, $w_{22} = -0.525$, and $b_2 = -0.575$. These values are used for the 2nd training pair $(1, -1 : 1)$ in the 1st iteration to obtain new weights and biases, which are in turn used for the 3rd training pair $(-1, 1 : 1)$, and those results are used for the 4th training pair $(-1, -1 : -1)$. This completes the 1st iteration. The weights and biases obtained at the end of the 1st iteration (from the 4th training pair) are used for the 1st training pair in the 2nd iteration, and so on, testing the stopping condition (Step 10) after each iteration.

- 41. Thanks
