
Associative memory network

- 1. Department of Information Technology, Soft Computing (ITC4256). Dr. C.V. Suresh Babu, Professor, Department of IT, Hindustan Institute of Science & Technology. Associative memory network

- 2. Action Plan
  - Associative Memory Networks
    - Introduction to auto associative memory network
    - Auto associative memory architecture
    - Auto associative memory training and testing algorithm
    - Introduction to hetero associative memory network
    - Hetero associative memory architecture
    - Hetero associative memory training and testing algorithm
  - Quiz at the end of session

- 3. Associative Memory Networks
  - These neural networks work by pattern association: they store a set of patterns and, given an input pattern, output the stored pattern that best matches it.
  - Memories of this type are also called Content-Addressable Memory (CAM).

- 4. Auto Associative Memory - Architecture
  - This is a single-layer neural network in which the input training vector and the output target vector are the same.
  - As shown in the figure, the architecture of the auto associative memory network has n input units and n output units, one for each component of the training and target vectors.

- 5. Auto Associative Memory – Training Algorithm. Training uses the Hebb or delta learning rule.
  - Step 1: Initialize all the weights to zero: $w_{ij} = 0$ for $i = 1$ to $n$, $j = 1$ to $n$.
  - Step 2: Perform steps 3-5 for each input vector.
  - Step 3: Activate each input unit: $x_i = s_i$ ($i = 1$ to $n$).
  - Step 4: Activate each output unit: $y_j = s_j$ ($j = 1$ to $n$).
  - Step 5: Adjust the weights: $w_{ij}(\text{new}) = w_{ij}(\text{old}) + x_i y_j$.
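The Hebb-rule steps above can be sketched in plain Python. This is a minimal illustration rather than the course's reference code: the function name `train_auto_associative` and the sample bipolar pattern are assumptions made for the example.

```python
def train_auto_associative(patterns):
    """Hebb-rule training where each pattern is both input and target."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]        # Step 1: all weights start at zero
    for s in patterns:                     # Step 2: repeat for each input vector
        x = list(s)                        # Step 3: input activations x_i = s_i
        y = list(s)                        # Step 4: output activations y_j = s_j
        for i in range(n):
            for j in range(n):
                w[i][j] += x[i] * y[j]     # Step 5: w_ij(new) = w_ij(old) + x_i * y_j
    return w


# Hypothetical bipolar pattern used only for illustration.
weights = train_auto_associative([[1, 1, -1, -1]])
for row in weights:
    print(row)
```

With a single stored pattern, the weight matrix is simply the outer product of the pattern with itself.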

- 6. Auto Associative Memory – Testing Algorithm
  - Step 1: Set the weights to those obtained during training with Hebb's rule.
  - Step 2: Perform steps 3-5 for each input vector.
  - Step 3: Set the activation of the input units equal to that of the input vector.
  - Step 4: Calculate the net input to each output unit, $j = 1$ to $n$:
    $$y_{in_j} = \sum_{i=1}^{n} x_i w_{ij}$$
  - Step 5: Apply the following activation function to calculate the output:
    $$y_j = f(y_{in_j}) = \begin{cases} +1 & \text{if } y_{in_j} > 0 \\ -1 & \text{if } y_{in_j} \leq 0 \end{cases}$$
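The testing steps above can be sketched as follows. The weight matrix is hard-coded here as the Hebb weights for one hypothetical stored pattern, `[1, 1, -1, -1]`; the function name `recall_auto` and the test vectors are assumptions for illustration.

```python
def recall_auto(weights, x):
    """Compute y_j = f(sum_i x_i * w_ij), with f = +1 if net > 0, else -1."""
    n = len(x)
    output = []
    for j in range(n):
        y_in = sum(x[i] * weights[i][j] for i in range(n))   # Step 4: net input
        output.append(1 if y_in > 0 else -1)                 # Step 5: activation
    return output


# Hebb weights storing the single (assumed) pattern [1, 1, -1, -1].
W = [[1, 1, -1, -1],
     [1, 1, -1, -1],
     [-1, -1, 1, 1],
     [-1, -1, 1, 1]]

stored = recall_auto(W, [1, 1, -1, -1])    # the stored pattern itself
noisy = recall_auto(W, [0, 1, -1, -1])     # first component missing (set to 0)
print(stored, noisy)
```

In this small case the net still reproduces the stored pattern when one component of the input is missing.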

- 7. Hetero Associative Memory
  - Like the auto associative memory network, this is a single-layer neural network, but here the input training vectors and the output target vectors are not the same.
  - The weights are determined so that the network stores a set of pattern associations.

- 8. Hetero Associative Memory - Architecture
  - As shown in the figure, the architecture of the hetero associative memory network has n input units and m output units, matching the n components of each training vector and the m components of each target vector.

- 9. Hetero Associative Memory – Training Algorithm. Training uses the Hebb or delta learning rule.
  - Step 1: Initialize all the weights to zero: $w_{ij} = 0$ for $i = 1$ to $n$, $j = 1$ to $m$.
  - Step 2: Perform steps 3-5 for each input vector.
  - Step 3: Activate each input unit: $x_i = s_i$ ($i = 1$ to $n$).
  - Step 4: Activate each output unit: $y_j = t_j$ ($j = 1$ to $m$), where $t$ is the target vector paired with $s$.
  - Step 5: Adjust the weights: $w_{ij}(\text{new}) = w_{ij}(\text{old}) + x_i y_j$.
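As a rough sketch of the training steps above, the following plain-Python function accumulates the Hebb updates for input/target pairs of different lengths. The name `train_hetero_associative` and the two sample pairs are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
def train_hetero_associative(pairs):
    """Hebb-rule training for (input, target) pairs with n inputs and m outputs."""
    n = len(pairs[0][0])
    m = len(pairs[0][1])
    w = [[0] * m for _ in range(n)]        # Step 1: n x m weights, all zero
    for s, t in pairs:                     # Step 2: repeat for each pair
        for i in range(n):
            for j in range(m):
                w[i][j] += s[i] * t[j]     # Steps 3-5: x_i = s_i, y_j = t_j, add x_i * y_j
    return w


# Two illustrative bipolar associations (assumed data): 4 inputs, 2 outputs.
pairs = [([1, -1, -1, -1], [1, -1]),
         ([-1, -1, -1, 1], [-1, 1])]
hetero_weights = train_hetero_associative(pairs)
print(hetero_weights)
```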

- 10. Hetero Associative Memory – Testing Algorithm
  - Step 1: Set the weights to those obtained during training with Hebb's rule.
  - Step 2: Perform steps 3-5 for each input vector.
  - Step 3: Set the activation of the input units equal to that of the input vector.
  - Step 4: Calculate the net input to each output unit, $j = 1$ to $m$:
    $$y_{in_j} = \sum_{i=1}^{n} x_i w_{ij}$$
  - Step 5: Apply the following activation function to calculate the output:
    $$y_j = f(y_{in_j}) = \begin{cases} +1 & \text{if } y_{in_j} > 0 \\ 0 & \text{if } y_{in_j} = 0 \\ -1 & \text{if } y_{in_j} < 0 \end{cases}$$
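The testing steps above, including the three-valued activation, might look like this in plain Python. The weight matrix is an assumed example (Hebb weights for two hypothetical 4-input, 2-output associations), and `recall_hetero` is a name invented for this sketch.

```python
def activation(net):
    """Three-valued activation: +1, 0 or -1 depending on the sign of the net input."""
    if net > 0:
        return 1
    if net < 0:
        return -1
    return 0


def recall_hetero(weights, x):
    """Compute y_j = f(sum_i x_i * w_ij) for an n x m weight matrix."""
    n, m = len(weights), len(weights[0])
    return [activation(sum(x[i] * weights[i][j] for i in range(n))) for j in range(m)]


# Assumed Hebb weights for the pairs ([1,-1,-1,-1] -> [1,-1]) and ([-1,-1,-1,1] -> [-1,1]).
W = [[2, -2], [0, 0], [0, 0], [-2, 2]]

first = recall_hetero(W, [1, -1, -1, -1])
second = recall_hetero(W, [-1, -1, -1, 1])
undecided = recall_hetero(W, [0, 1, 1, 0])   # carries no information the weights can use
print(first, second, undecided)
```

The last call shows the zero branch of the activation: when the net input is exactly zero, the unit reports 0 rather than committing to either class.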

- 11. Quiz - Questions
  1. What is the other name for associative memory?
  2. In which associative memory network are the input training vector and the output target vector the same? a) auto b) hetero c) iterative d) noniterative
  3. In which associative memory network are the input training vector and the output target vector not the same? a) auto b) hetero c) iterative d) noniterative
  4. In which algorithm do associative memory networks use the Hebb or delta learning rule? a) training b) testing c) processing d) none
  5. In which algorithm do associative memory networks set the activation of the input units equal to that of the input vector? a) training b) testing c) processing d) none

- 12. Quiz - Answers
  1. What is the other name for associative memory? Content-Addressable Memory (CAM)
  2. In which associative memory network are the input training vector and the output target vector the same? a) auto
  3. In which associative memory network are the input training vector and the output target vector not the same? b) hetero
  4. In which algorithm do associative memory networks use the Hebb or delta learning rule? a) training
  5. In which algorithm do associative memory networks set the activation of the input units equal to that of the input vector? b) testing

- 13. Action Plan
  - Associative Memory Networks (Cont…)
    - Introduction to iterative auto associative network
    - Introduction to bidirectional associative network
    - BAM operation
    - BAM stability and storage capacity
  - Quiz at the end of session
  - Assignment 2: Write a detailed note on iterative auto associative memory.

- 14. Iterative Auto Associative Network
  - The net may not respond to an input signal immediately with a stored target pattern; the response may only resemble a stored pattern.
  - In that case, the first response is used as input to the net again.
  - An iterative auto associative network can recover the original stored vector when presented with a test vector close to it.
  - It is also known as a recurrent auto associative network.
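The feedback idea above can be illustrated with a short loop that keeps feeding the response back into the net until it stops changing. Several details here are assumptions not stated on the slide: the stored pattern `[1, 1, 1, -1]`, Hebb weights with the diagonal set to zero, a three-valued activation that leaves a zero net input at 0, and the name `iterate_recall`.

```python
def sign3(net):
    """Assumed activation: +1 for positive, -1 for negative, 0 for a zero net input."""
    return 1 if net > 0 else (-1 if net < 0 else 0)


def iterate_recall(weights, x, max_iters=10):
    """Feed each response back as the next input; return every state visited."""
    n = len(x)
    state = list(x)
    history = [state]
    for _ in range(max_iters):
        response = [sign3(sum(state[i] * weights[i][j] for i in range(n)))
                    for j in range(n)]
        history.append(response)
        if response == state:          # the response reproduces its own input: stop
            break
        state = response
    return history


# Hebb weights for the assumed stored pattern [1, 1, 1, -1], with zero diagonal.
W = [[0, 1, 1, -1],
     [1, 0, 1, -1],
     [1, 1, 0, -1],
     [-1, -1, -1, 0]]

history = iterate_recall(W, [1, 0, 0, 0])   # test vector with three components missing
for step in history:
    print(step)
```

The first response is not the stored pattern, but feeding it back once more recovers it.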

- 15. Bidirectional Associative Memory (BAM)
  - Bidirectional associative memory (BAM), first proposed by Bart Kosko, is a hetero associative network.
  - It associates patterns from one set, set A, with patterns from another set, set B, and vice versa.
  - Human memory is essentially associative: to restore a lost memory, we attempt to establish a chain of associations.

- 16. BAM Operation

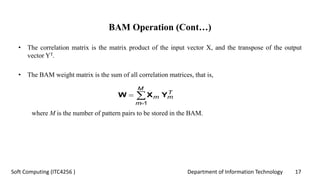

- 17. BAM Operation (Cont…)
  - The correlation matrix of a pattern pair is the matrix product of the input vector $X$ and the transpose of the output vector, $Y^T$.
  - The BAM weight matrix is the sum of all correlation matrices, that is,
    $$W = \sum_{m=1}^{M} X_m Y_m^T$$
    where $M$ is the number of pattern pairs to be stored in the BAM.
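The sum of correlation matrices above can be computed directly, as in this plain-Python sketch. The four bipolar pattern pairs and the name `bam_weights` are illustrative assumptions, not data taken from the slides.

```python
def bam_weights(pairs):
    """Sum the correlation matrices X_m * Y_m^T over all stored pattern pairs."""
    n = len(pairs[0][0])
    k = len(pairs[0][1])
    w = [[0] * k for _ in range(n)]
    for x, y in pairs:
        for i in range(n):
            for j in range(k):
                w[i][j] += x[i] * y[j]     # entry (i, j) of the outer product X * Y^T
    return w


# Assumed example: four associations between 6-component and 3-component patterns.
pairs = [([1, 1, 1, 1, 1, 1], [1, 1, 1]),
         ([-1, -1, -1, -1, -1, -1], [-1, -1, -1]),
         ([1, 1, -1, -1, 1, 1], [1, -1, 1]),
         ([-1, -1, 1, 1, -1, -1], [-1, 1, -1])]
W = bam_weights(pairs)
for row in W:
    print(row)
```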

- 18. BAM Operation (Cont…)
  - The input vector $X(p)$ is applied to the transpose of the weight matrix, $W^T$, to produce an output vector $Y(p)$.
  - The output vector $Y(p)$ is then applied to the weight matrix $W$ to produce a new input vector $X(p+1)$.
  - This process is repeated until the input and output vectors no longer change, in other words, until the BAM reaches a stable state.
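The back-and-forth recall described above can be sketched as a loop. The weight matrix below is an assumed example (the summed correlation matrices of four hypothetical 6-by-3 bipolar pairs), and two conventions are assumptions as well: a sign threshold on each net input, and a unit keeping its previous value when its net input is exactly zero.

```python
def threshold(net, previous):
    """Sign threshold; a unit with zero net input keeps its previous value (assumed)."""
    return [1 if v > 0 else (-1 if v < 0 else p) for v, p in zip(net, previous)]


def bam_recall(w, x, max_iters=20):
    """Alternate Y = f(W^T X) and X = f(W Y) until neither vector changes."""
    n, k = len(w), len(w[0])
    x = list(x)
    y = [1] * k   # arbitrary start; only matters where a net input is exactly zero
    for _ in range(max_iters):
        new_y = threshold([sum(w[i][j] * x[i] for i in range(n)) for j in range(k)], y)
        new_x = threshold([sum(w[i][j] * new_y[j] for j in range(k)) for i in range(n)], x)
        if new_x == x and new_y == y:      # stable state reached
            break
        x, y = new_x, new_y
    return x, y


# Assumed BAM weights storing, among others, the pair ([1]*6, [1, 1, 1]).
W = [[4, 0, 4], [4, 0, 4], [0, 4, 0], [0, 4, 0], [4, 0, 4], [4, 0, 4]]

recalled_x, recalled_y = bam_recall(W, [-1, 1, 1, 1, 1, 1])   # first bit corrupted
print(recalled_x, recalled_y)
```

Starting from an input with one corrupted component, the loop settles on the stored pair after a single correction of the input layer.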

- 19. Stability and Storage Capacity of the BAM
  - The BAM is unconditionally stable.
  - The number of associations stored in the BAM should not exceed the number of neurons in the smaller layer.
  - A more serious problem with the BAM is incorrect convergence: a stable association may be only slightly related to the initial input vector.

- 20. Quiz - Questions
  1. What is the other name for iterative auto associative networks?
  2. BAM is a ------------ associative network.
  3. What has to be created for each pattern pair in order to develop a BAM?
  4. The major issue with BAM is ------------ .
  5. Who first proposed BAM?

- 21. Quiz - Answers
  1. What is the other name for iterative auto associative networks? Recurrent auto associative networks
  2. BAM is a ------------ associative network. Hetero
  3. What has to be created for each pattern pair in order to develop a BAM? Correlation matrix
  4. The major issue with BAM is ------------ . Incorrect convergence
  5. Who first proposed BAM? Bart Kosko

- 22. Action Plan
  - Associative Memory Networks (Cont…)
    - Introduction to Hopfield networks
    - Introduction to discrete Hopfield networks
    - Discrete Hopfield network training and testing algorithm
    - Energy function evaluation
    - Introduction to continuous Hopfield networks
  - Quiz at the end of session

- 23. Hopfield Networks
  - The Hopfield network represents an auto-associative type of memory.
  - The Hopfield neural network was invented by Dr. John J. Hopfield in 1982.
  - It consists of a single layer containing one or more fully connected recurrent neurons.

- 24. Discrete Hopfield Network
  - The network has symmetric weights with no self-connections, i.e., $w_{ij} = w_{ji}$ and $w_{ii} = 0$.
  - Architecture: some important points to keep in mind about the discrete Hopfield network:
    - The model consists of neurons with one inverting and one non-inverting output.
    - The output of each neuron is an input to every other neuron, but not to itself.

- 25. Discrete Hopfield Network (Cont…)
  - Weight (connection strength) is represented by $w_{ij}$.
  - Weights should be symmetric, i.e. $w_{ij} = w_{ji}$.
  - The outputs from $Y_1$ going to $Y_2$, $Y_i$ and $Y_n$ have the weights $w_{12}$, $w_{1i}$ and $w_{1n}$ respectively. The other arcs carry their weights in the same way.

- 26. Discrete Hopfield Network – Training Algorithm
  - During training of the discrete Hopfield network, the weights are updated.
  - The input vectors can be either binary or bipolar, and the weights are computed with the following relation in each case.
  - Case 1: Binary input patterns. For a set of binary patterns $s(p)$, $p = 1$ to $P$, where $s(p) = (s_1(p), s_2(p), \ldots, s_i(p), \ldots, s_n(p))$, the weight matrix is given by
    $$w_{ij} = \sum_{p=1}^{P} [2s_i(p) - 1][2s_j(p) - 1] \quad \text{for } i \neq j$$

- 27. Discrete Hopfield Network – Training Algorithm
  - Case 2: Bipolar input patterns. For a set of bipolar patterns $s(p)$, $p = 1$ to $P$, where $s(p) = (s_1(p), s_2(p), \ldots, s_i(p), \ldots, s_n(p))$, the weight matrix is given by
    $$w_{ij} = \sum_{p=1}^{P} [s_i(p)][s_j(p)] \quad \text{for } i \neq j$$
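Both weight formulas (binary and bipolar patterns) can be covered by one small function, sketched here in plain Python. The function name `hopfield_weights`, its `binary` flag, and the sample pattern are assumptions made for the illustration; the diagonal is left at zero to match the "for i ≠ j" condition.

```python
def hopfield_weights(patterns, binary=True):
    """Sum of outer products over stored patterns, with w_ii kept at 0."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for s in patterns:
        # Case 1 maps binary {0, 1} to bipolar {-1, +1} via 2s - 1; Case 2 uses s as is.
        b = [2 * v - 1 for v in s] if binary else list(s)
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += b[i] * b[j]
    return w


# The same (assumed) pattern written in binary and in bipolar form.
w_binary = hopfield_weights([[1, 1, 1, 0]], binary=True)
w_bipolar = hopfield_weights([[1, 1, 1, -1]], binary=False)
print(w_binary)
```

The two calls produce the same symmetric matrix, since the binary formula only converts the pattern to bipolar form before taking the products.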

- 28. Discrete Hopfield Network – Testing Algorithm
  - Step 1: Initialize the weights to those obtained from the training algorithm using the Hebbian principle.
  - Step 2: Perform steps 3-9 while the activations of the network have not converged.
  - Step 3: For each input vector $X$, perform steps 4-8.
  - Step 4: Set the initial activation of the network equal to the external input vector $X$: $y_i = x_i$ for $i = 1$ to $n$.
  - Step 5: For each unit $Y_i$, perform steps 6-8.

- 29. Discrete Hopfield Network – Testing Algorithm
  - Step 6: Calculate the net input of the unit:
    $$y_{in_i} = x_i + \sum_{j} y_j w_{ji}$$
  - Step 7: Apply the activation function to the net input to calculate the output:
    $$y_i = \begin{cases} 1 & \text{if } y_{in_i} > \theta_i \\ y_i & \text{if } y_{in_i} = \theta_i \\ 0 & \text{if } y_{in_i} < \theta_i \end{cases}$$
    Here $\theta_i$ is the threshold.
  - Step 8: Broadcast this output $y_i$ to all other units.
  - Step 9: Test the network for convergence.
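The testing steps for a single input vector can be sketched as an asynchronous update loop. Some choices here are assumptions rather than part of the slides: units are visited in a fixed order (a random order is also common), all thresholds default to zero, and the weights encode one hypothetical stored binary pattern, `[1, 1, 1, 0]`.

```python
def hopfield_recall(w, x, theta=None, max_sweeps=100):
    """Update units one at a time until a full sweep changes nothing."""
    n = len(x)
    if theta is None:
        theta = [0] * n                    # assumed default thresholds
    y = list(x)                            # Step 4: initial activations y_i = x_i
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):                 # Step 5: visit each unit in turn
            # Step 6: net input uses the most recent outputs of the other units
            y_in = x[i] + sum(y[j] * w[j][i] for j in range(n))
            if y_in > theta[i]:            # Step 7: threshold activation
                new = 1
            elif y_in < theta[i]:
                new = 0
            else:
                new = y[i]
            if new != y[i]:
                y[i] = new                 # Step 8: new output is visible to later units
                changed = True
        if not changed:                    # Step 9: converged
            break
    return y


# Weights storing the assumed binary pattern [1, 1, 1, 0] (symmetric, zero diagonal).
W = [[0, 1, 1, -1],
     [1, 0, 1, -1],
     [1, 1, 0, -1],
     [-1, -1, -1, 0]]

recalled = hopfield_recall(W, [0, 0, 1, 0])   # two components of the pattern missing
print(recalled)
```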

- 30. Energy Function Evaluation
  - An energy function is a bounded, non-increasing function of the state of the system.
  - The energy function $E_f$, also called the Lyapunov function, determines the stability of the discrete Hopfield network and is characterized as follows:
    $$E_f = -\frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} y_i y_j w_{ij} - \sum_{i=1}^{n} x_i y_i + \sum_{i=1}^{n} \theta_i y_i$$
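To make the energy formula concrete, the sketch below evaluates it for two states of a small network. The weights, external input, zero thresholds, and the name `hopfield_energy` are assumptions chosen for illustration; the two states are a starting state and the state the network settles into for that input.

```python
def hopfield_energy(w, x, y, theta):
    """E_f = -1/2 * sum_ij y_i y_j w_ij - sum_i x_i y_i + sum_i theta_i y_i."""
    n = len(y)
    quadratic = sum(y[i] * y[j] * w[i][j] for i in range(n) for j in range(n))
    external = sum(x[i] * y[i] for i in range(n))
    threshold = sum(theta[i] * y[i] for i in range(n))
    return -0.5 * quadratic - external + threshold


# Assumed weights storing the binary pattern [1, 1, 1, 0]; thresholds all zero.
W = [[0, 1, 1, -1],
     [1, 0, 1, -1],
     [1, 1, 0, -1],
     [-1, -1, -1, 0]]
x = [0, 0, 1, 0]
theta = [0, 0, 0, 0]

energy_start = hopfield_energy(W, x, [0, 0, 1, 0], theta)   # initial state y = x
energy_end = hopfield_energy(W, x, [1, 1, 1, 0], theta)     # stored pattern
print(energy_start, energy_end)
```

The energy drops from the initial state to the stored pattern, which is the non-increasing behaviour the definition describes.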

- 31. Continuous Hopfield Network
  - Model: the model or architecture can be built up by adding electrical components such as amplifiers, which map the input voltage to the output voltage through a sigmoid activation function.
  - Energy Function Evaluation:
    $$E_f = \frac{1}{2} \sum_{i=1}^{n} \sum_{\substack{j=1 \\ j \neq i}}^{n} y_i y_j w_{ij} - \sum_{i=1}^{n} x_i y_i + \frac{1}{\lambda} \sum_{i=1}^{n} \sum_{\substack{j=1 \\ j \neq i}}^{n} w_{ij}\, g_{ri} \int_{0}^{y_i} a^{-1}(y)\, dy$$
  - Here $\lambda$ is the gain parameter and $g_{ri}$ is the input conductance.

- 32. Quiz - Questions
  1. The Hopfield network is an ---------- associative type of memory.
  2. A Hopfield network consists of a -------- layer which contains one or more fully connected recurrent neurons. a) single b) double c) triple d) linear
  3. Which principle is used to initialize the weights in the testing algorithm?
  4. What is the other name for the energy function?
  5. The continuous Hopfield network has --------- as a continuous variable. a) weight b) time c) bias d) none

- 33. Quiz - Answers
  1. The Hopfield network is an ---------- associative type of memory. Auto
  2. A Hopfield network consists of a -------- layer which contains one or more fully connected recurrent neurons. a) single
  3. Which principle is used to initialize the weights in the testing algorithm? Hebbian principle
  4. What is the other name for the energy function? Lyapunov function
  5. The continuous Hopfield network has --------- as a continuous variable. b) time

