atc 3rd module compiler and automata.ppt

- 1. COMPILER DESIGN Topic: Lexical Analysis By RANJAN V

- 2. The Role of the Lexical Analyzer • As the first phase of a compiler, the main task of the lexical analyzer is to read the input characters of the source program, group them into lexemes, and produce as output a sequence of tokens for each lexeme in the source program. • It is common for the lexical analyzer to interact with the symbol table as well. When the lexical analyzer discovers a lexeme constituting an identifier, it needs to enter that lexeme into the symbol table.

- 3. The role of lexical analyzer Lexical Analyzer Parser Source program token getNextToken Symbol table To semantic analysis

- 4. Lexical Analyzer continue---- • Since the lexical analyzer is the part of the compiler that reads the source text, it may perform certain other tasks besides identification of lexemes They are: • One such task is stripping out comments and whitespace (blank, newline, tab • Another task is correlating error messages generated by the compiler with the source program. • i.e For instance, the lexical analyzer may keep track of the number of newline characters seen, so it can associate a line number with each error message.

- 5. Sometimes, lexical analyzers are divided into a cascade of two processes a) Scanning consists of the simple processes that do not require tokenization of the input, such as deletion of comments and compaction of consecutive whitespace characters into one. b) b) Lexical analysis proper is the more complex portion, where the scanner produces the sequence of tokens as output

- 6. Why to separate Lexical analysis and parsing(Syntax analyzer) 1. Simplicity of design The separation of lexical and syntactic analysis often allows us to simplify at least one of these tasks. For example, a parser that had to deal with comments and whitespace as syntactic units would be considerably more complex than one that can assume comments and whitespace have already been removed by the lexical analyzer 2. Improving compiler efficiency A separate lexical analyzer allows us to apply specialized techniques that serve only the lexical task, not the job of parsing.

- 7. Continue… 3. Enhancing compiler portability Input-device-specific peculiarities can be restricted to the lexical analyzer

- 8. Tokens, Patterns and Lexemes • A token is a pair a token name and an optional token value • A pattern is a description of the form that the lexemes of a token may take • A lexeme is a sequence of characters in the source program that matches the pattern for a token

- 9. Example Token Informal description Sample lexemes if else comparison id number literal Characters i, f Characters e, l, s, e < or > or <= or >= or == or != Letter followed by letter and digits Any numeric constant Anything but “ sorrounded by “ if else <=, != pi, score, D2 3.14159, 0, 6.02e23 “core dumped” printf(“total = %dn”, score);

- 10. Attributes for tokens • E = M * C ** 2 • <id, pointer to symbol table entry for E> • <assign-op> • <id, pointer to symbol table entry for M> • <mult-op> • <id, pointer to symbol table entry for C> • <exp-op> • <number, integer value 2>

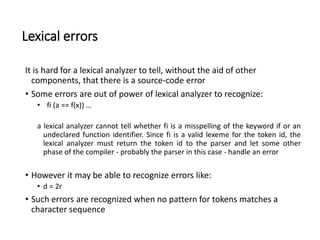

- 11. Lexical errors It is hard for a lexical analyzer to tell, without the aid of other components, that there is a source-code error • Some errors are out of power of lexical analyzer to recognize: • fi (a == f(x)) … a lexical analyzer cannot tell whether fi is a misspelling of the keyword if or an undeclared function identifier. Since fi is a valid lexeme for the token id, the lexical analyzer must return the token id to the parser and let some other phase of the compiler - probably the parser in this case - handle an error • However it may be able to recognize errors like: • d = 2r • Such errors are recognized when no pattern for tokens matches a character sequence

- 12. Error recovery Suppose a situation arises in which the lexical analyzer is unable to proceed because none of the patterns for tokens matches any prefix of the remaining input The simplest recovery strategy is "panic mode" recovery:- • Successive characters are ignored until we reach to a well formed token • Delete one character from the remaining input • Insert a missing character into the remaining input • Replace a character by another character • Transpose two adjacent characters

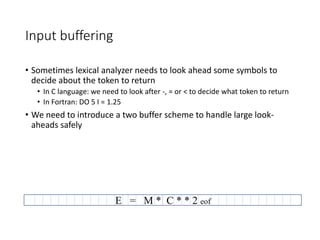

- 13. Input buffering • Sometimes lexical analyzer needs to look ahead some symbols to decide about the token to return • In C language: we need to look after -, = or < to decide what token to return • In Fortran: DO 5 I = 1.25 • We need to introduce a two buffer scheme to handle large look- aheads safely E = M * C * * 2 eof

- 14. Sentinels Switch (*forward++) { case eof: if (forward is at end of first buffer) { reload second buffer; forward = beginning of second buffer; } else if {forward is at end of second buffer) { reload first buffer; forward = beginning of first buffer; } else /* eof within a buffer marks the end of input */ terminate lexical analysis; break; cases for the other characters; } E = M eof * C * * 2 eof eof

- 15. Specification of tokens • In theory of compilation regular expressions are used to formalize the specification of tokens • Regular expressions are means for specifying regular languages • Example: • Letter_(letter_ | digit)* • Each regular expression is a pattern specifying the form of strings

- 16. Regular expressions • Ɛ is a regular expression, L(Ɛ) = {Ɛ} • If a is a symbol in ∑then a is a regular expression, L(a) = {a} • (r) | (s) is a regular expression denoting the language L(r) ∪ L(s) • (r)(s) is a regular expression denoting the language L(r)L(s) • (r)* is a regular expression denoting (L9r))* • (r) is a regular expression denting L(r)

- 17. Regular definitions d1 -> r1 d2 -> r2 … dn -> rn • Example: letter_ -> A | B | … | Z | a | b | … | Z | _ digit -> 0 | 1 | … | 9 id -> letter_ (letter_ | digit)*

- 18. Extensions • One or more instances: (r)+ • Zero of one instances: r? • Character classes: [abc] • Example: • letter_ -> [A-Za-z_] • digit -> [0-9] • id -> letter_(letter|digit)*

- 19. Recognition of tokens • Starting point is the language grammar to understand the tokens: stmt -> if expr then stmt | if expr then stmt else stmt | Ɛ expr -> term relop term | term term -> id | number

- 20. Recognition of tokens (cont.) • The next step is to formalize the patterns: digit -> [0-9] Digits -> digit+ number -> digit(.digits)? (E[+-]? Digit)? letter -> [A-Za-z_] id -> letter (letter|digit)* If -> if Then -> then Else -> else Relop -> < | > | <= | >= | = | <> • We also need to handle whitespaces: ws -> (blank | tab | newline)+

- 21. Transition diagrams • Transition diagram for relop

- 22. Transition diagrams (cont.) • Transition diagram for reserved words and identifiers

- 23. Transition diagrams (cont.) • Transition diagram for unsigned numbers

- 24. Transition diagrams (cont.) • Transition diagram for whitespace

- 25. Architecture of a transition-diagram-based lexical analyzer TOKEN getRelop() { TOKEN retToken = new (RELOP) while (1) { /* repeat character processing until a return or failure occurs */ switch(state) { case 0: c= nextchar(); if (c == ‘<‘) state = 1; else if (c == ‘=‘) state = 5; else if (c == ‘>’) state = 6; else fail(); /* lexeme is not a relop */ break; case 1: … … case 8: retract(); retToken.attribute = GT; return(retToken); }

- 26. Lexical Analyzer Generator - Lex Lexical Compiler Lex Source program lex.l lex.yy.c C compiler lex.yy.c a.out a.out Input stream Sequence of tokens

- 27. Structure of Lex programs declarations %% translation rules %% auxiliary functions Pattern {Action}

- 28. PUMPING LEMMA

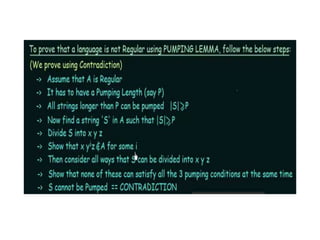

- 32. BY contradiction we can prove that all languages are not regular using pumping lemma

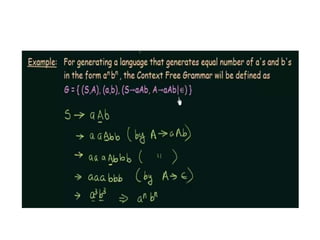

- 33. CFG

- 37. Leftmost and Right Most derivation Take an example of the below grammar

- 38. Production rule should be of the form as mentioned below for CFG

- 41. Example 2 of Leftmost and right most derivation 1. S->AB/€ 2. A->aB 3. B->Sb Derive “abb” from both leftmost and rightmost derivation. Left Most Derivation: Right Most derivation S->AB S->AB ->aBB -> Asb S-> € ->aSbB ->Ab A->aB ->abB ->aBb B-> Sb ->abSb ->aSbb S-> € ->abb ->abb

- 42. Sentential Forms

- 43. •

- 45. Parse tree or Derivation tree • The parse tree is the pictorial representation of derivations. Therefore, it is also known as derivation trees. The derivation tree is independent of the other in which productions are used. • A parse tree is an ordered tree in which nodes are labeled with the left side of the productions and in which the children of a node define its equivalent right parse tree also known as syntax tree, generation tree, or production tree. • A Parse Tree for a CFG G =(V,∑, P,S) is a tree satisfying the following conditions −

- 46. Conditions 1. Root has the label S, where S is the start symbol. 2. Each vertex of the parse tree has a label which can be a variable (V), terminal (Σ), or ε. 3. If A → C1,C2…….Cn is a production, then C1,C2…….Cn are children of node labeled A. 4. Leaf Nodes are terminal (Σ), and Interior nodes are variable (V). 5. The label of an internal vertex is always a variable Yield or result − Yield of Derivation Tree is the concatenation of labels of the leaves in left to right ordering.

- 49. Consider the Grammar given below − E⇒ E+E|E ∗E|id Find Leftmost and Rightmost Derivation for the string. Left Most : E ⇒ E+E ⇒ E+E+E ⇒ id+E+E ⇒ id+id+E ⇒ id+id+id

- 50. Right Most derivation: E ⇒ E+E ⇒ E+E+E ⇒ E+E+id ⇒ E+id+id ⇒ id+id+id

- 51. Example1 − If CFG has productions. S → a A S | a S → Sb A | SS | ba Show that S ⇒ *aa bb aa & construct parse tree whose yield is aa bb aa. Solution • S ⇒lm aAS • ⇒ a Sb A S • ⇒ aa b A S • ⇒ aa bba S ∴ S ⇒ * aa bb aa

- 52. lm S ⇒ aAS ⇒ a Sb A S ⇒ aa b A S ⇒ aa bba S ∴ S ⇒ * aa bb aa

- 54. Example 2 • Let us consider this grammar: E -> E+E|id We can create a 2 parse tree from this grammar to obtain a string id+id+id. The following are the 2 parse trees generated by left-most derivation:

- 55. Recursions

- 60. Left Factoring • Converting the grammar to Non-Deterministic to Deterministic

- 61. By converting the production into form A->αA’ A’->β1| β2 , Look the example below •

- 62. Example 2 A->aAB|aA B->bB|b Solution: Converting the above grammar into below form A->αA’ A’->β1| β2 A->aA’ B->b|B’ A’->AB|A B’->B|€

- 63. Example 3 A->aAB|aA|a Solution: Converting the above grammar into below form A->αA’ A’->β1| β2 A->aA’ A’->AB|A|€

- 64. Examples A->aB/abc Solution :A->aA’ A’->B|bc S->iEtS|iEtSeS|a E->b Solution: S-> iEtSS’ |a S’->€|es E->b

- 65. Examples • S->aSSbS|aSaSb|abb|b • S->aS’|b • S’->SSbS|SaSb|bb • S’’->SS”|bb • S”->SbS|aSb

![Extensions

• One or more instances: (r)+

• Zero of one instances: r?

• Character classes: [abc]

• Example:

• letter_ -> [A-Za-z_]

• digit -> [0-9]

• id -> letter_(letter|digit)*](https://image.slidesharecdn.com/atc3rdmodule-240618050228-8b4a7860/85/atc-3rd-module-compiler-and-automata-ppt-18-320.jpg)

![Recognition of tokens (cont.)

• The next step is to formalize the patterns:

digit -> [0-9]

Digits -> digit+

number -> digit(.digits)? (E[+-]? Digit)?

letter -> [A-Za-z_]

id -> letter (letter|digit)*

If -> if

Then -> then

Else -> else

Relop -> < | > | <= | >= | = | <>

• We also need to handle whitespaces:

ws -> (blank | tab | newline)+](https://image.slidesharecdn.com/atc3rdmodule-240618050228-8b4a7860/85/atc-3rd-module-compiler-and-automata-ppt-20-320.jpg)