Aussenac semanticsnl pwebsem2017-v4

- 1. New convergences between Natural language processing and knowledge engineering: an illustration with the extraction and representation of semantic relations. Nathalie Aussenac-Gilles (IRIT – CNRS, Toulouse, France)

- 2. Outline of the talk • Evolution of the Language and Knowledge duality in AI • Deep learning for NLP • Semantic relations • Finding semantic relations (SEMANTICS - NLP and KE at the era of SemWeb: Semantic relations - Aussenac, 12/09/2017)

- 3. The language / knowledge duality in early AI. Natural Language Processing • Ambitious goal • To produce systems able to fully understand and represent the meaning of language • Target representation: logic • … inspired by linguistics >> computational linguistics. Knowledge Engineering • Ambitious goal • To produce systems able to fully solve problems that « classical » algorithms are not likely to solve • Target representation: logic • … inspired by human problem solving >> expert systems. (Figure labels: Knowledge acquisition, Natural Language Processing, Logic-based representation, KBS, Syntactic parsing / checking, Spelling checking, …)

- 4. The language / knowledge duality in classical AI. Natural Language Processing • To produce systems able to understand language and build representations from it, in order to build systems that perform language-intensive tasks • Target applications – Identifying opinions – Providing abstracts – Translating from one language to another – Extracting information – Answering questions – Managing dialog systems. Knowledge engineering • Collect knowledge from various sources to build representations and knowledge bases, in order to build systems that perform or support knowledge-intensive tasks • Knowledge-based systems – Fault diagnosis – Classification – Repair – Task planning – Simple design, … • Little focus on domain knowledge

- 5. The language / knowledge duality in classical AI. Natural Language Processing • Layered approach to deal with specific issues (layers: OCR / tokenization, morphological / lexical analysis, syntactic analysis, semantic typing, discourse analysis). Knowledge Engineering • Layered models and reusable components, cf. CommonKADS (task model, inference structure, domain model; task library, problem-solving methods, ontologies; 1995)

- 6. The language / knowledge duality in the era of the (semantic) web. What the semantic web provides • Ambition – To make web pages and web data « understandable » by algorithms – To give them a semantics by typing entities and concepts • Standards for knowledge representation – Improve interoperability – Promote knowledge and data reusability • An architecture to reach this goal – Web application composition: web services – Semantic annotation – Linking semantic data and making it open (LOD)

- 7. The language / knowledge duality in the era of the (semantic) web: more knowledge sources, more data and more digital text. Natural Language Processing • Larger corpora enable – Statistics – Probabilistic language models – Machine learning • NLP benefits from new semantic datasets – Ontologies – Large KBs: DBpedia, YAGO – Multilingual lexical KB: BabelNet. Knowledge engineering • Text as a knowledge source – Information extraction techniques – Semantic typing – Relation extraction • Ontology engineering from text • Produce semantic resources – (Domain) ontologies – Large general KBs • Ontology-based applications – Connect services – Make appointments – Adapt processes to context – Answer questions, search for information …

- 8. Linguistic clues for knowledge (October 2014, From natural language to ontologies)

- 9. Linguistic clues for knowledge • Named entity recognition • Relation extraction • Entity typing

- 10. Linguistic clues for knowledge • Name: Sofia Coppola or Sofia Carmina Coppola; Is-a: Person; Born on: 1971, May 14; Born in: New York (USA); Job: movie director, actor; Nationality: American • Name: Francis Ford Coppola; Is-a: Person; Born on: …; Born in: …; Job: movie director; Nationality: American • Relation: Francis Ford Coppola Has-child Sofia Coppola

- 11. Additional linguistic difficulties

- 12. Additional linguistic difficulties • Reference / anaphora • Coordination • Distribution

- 13. Other difficult issues • Short (context-free) texts: headlines or tweets, cf http://www.cs.cmu.edu/~ark/TweetNLP/ • Asserting the value of facts • Parsing non-standard English: neologisms, spelling errors, syntax errors … • Non-literal language: humor, sarcasm … • Segmentation issues • … Examples: • The Pope’s baby steps on gays • The Eiffel Tower is a 324-metre-tall (including the antennas) wrought-iron lattice tower … vs. The Eiffel Tower is 312 metres tall … • Great job @jusInbieber! Were SOO PROUD of what you’ve accomplished! U taught us 2 #neversaynever & you yourself should never give up either ♥ • “Congratulation #lesbleus for your great match!” is ironic if the French soccer team lost the match. • The New York-New Haven Railroad (two possible segmentations)

- 14. Machine learning for NLP • Machine learning requires – Annotating examples (time-consuming) – Selecting (and evaluating) appropriate features to describe the data in a processable way (complex; requires linguistic expertise and resources) – Selecting the appropriate learning algorithm – The ML algorithm then optimizes the weights of the features. Examples annotated for #irony or #humor: “The #NSA wiretapped a whole country. No worries for #Belgium: it is not a whole country.” (positive example); “The Eiffel Tower is 324 metres tall.” (negative example); …

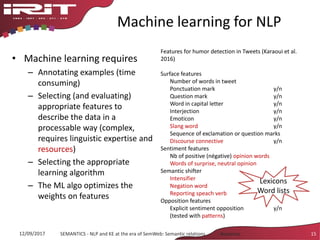

- 15. Machine learning for NLP (continued): features for humor detection in tweets (Karoui et al. 2016) • Surface features: number of words in the tweet; punctuation mark y/n; question mark y/n; word in capital letters y/n; interjection y/n; emoticon y/n; slang word y/n; sequence of exclamation or question marks; discourse connective y/n • Sentiment features: number of positive (negative) opinion words; words of surprise, neutral opinion • Semantic shifters: intensifier y/n; negation word y/n; reporting speech verb y/n • Opposition features: explicit sentiment opposition y/n (tested with patterns) • Lexicons: word lists
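
A few of these surface and shifter features can be sketched in Python to show how a tweet becomes a feature vector. This is a minimal illustration, not the extractor used in the cited work: tokenisation is a simple regular expression, and the word lists `INTERJECTIONS` and `NEGATIONS` are tiny hypothetical stand-ins for real lexicons.

```python
import re

# Hypothetical mini-lexicons; real systems rely on curated word lists.
INTERJECTIONS = {"wow", "oh", "lol", "haha"}
NEGATIONS = {"no", "not", "never"}
EMOTICON_RE = re.compile(r"[:;]-?[()DP]|♥")


def surface_features(tweet: str) -> dict:
    """Return a few boolean/count features describing a tweet."""
    words = re.findall(r"[#@]?\w+", tweet)  # keeps hashtags and mentions whole
    lowered = [w.lower() for w in words]
    return {
        "n_words": len(words),
        "has_punctuation": bool(re.search(r"[.,;:!?]", tweet)),
        "has_question_mark": "?" in tweet,
        "has_capitalised_word": any(w.isupper() and len(w) > 1 for w in words),
        "has_interjection": any(w in INTERJECTIONS for w in lowered),
        "has_emoticon": bool(EMOTICON_RE.search(tweet)),
        "has_mark_sequence": bool(re.search(r"[!?]{2,}", tweet)),
        "has_negation": any(w in NEGATIONS for w in lowered),
    }


ironic = "The #NSA wiretapped a whole country. No worries for #Belgium: it is not a whole country."
print(surface_features(ironic))
```

A learning algorithm then receives one such dictionary per annotated tweet and learns a weight for each feature.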

- 16. Challenging industrial applications … that require NLP AND semantic resources • Search engines (written and spoken) • Online advertisement matching • Automated/assisted translation • Sentiment analysis for marketing or finance • Speech recognition • Chatbots / Dialog (virtual) agents – Customer support – Controlling devices – Technical support to diagnose and repair

- 17. Current challenges according to D. Jurafsky in 2012

- 18. Outline of the talk • The Language and Knowledge duality in AI • Deep learning for NLP • Semantic relations • Finding semantic relations

- 19. Recent shift: Deep Learning (DL) for NLP (from C. Manning and R. Socher, course on NLP with DL, http://web.stanford.edu/class/cs224n/lectures/cs224n-2017-lecture1.pdf) • Representation learning attempts to automatically learn good features or representations – e.g., vectors that represent the distribution of a word in a corpus • Deep learning algorithms attempt to learn (multiple levels of) representation and an output • From “raw” inputs x (e.g., sound, characters, or words) • … not that “raw”: WORD VECTORS are the input

- 20. Recent shift: Deep Learning (DL) for NLP (Manning 2017 tutorial) • Deep NLP = deep learning + NLP • Reach the goals of NLP by combining NLP know-how with representation learning and deep learning methods • Several big improvements in NLP in recent years – Levels: speech, words, syntax, semantics – Tools: part-of-speech tagging, entities, parsing – Applications: machine translation, sentiment analysis, dialogue agents, question answering

- 21. Recent shift: Deep Learning (DL) for NLP. Distributional semantics • Semantic similarity is based on the distributional hypothesis [Harris 1954] • Take a word and its contexts: – tasty sooluceps – sweet sooluceps – stale sooluceps – freshly baked sooluceps • By looking at a word’s contexts, one can infer its meaning: here, sooluceps is some kind of food

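- 22. Recent shift: Deep Learning (DL) for NLP. Distributional semantics • A word vector captures word meaning as the frequencies of co-occurring words • A matrix of such vectors captures word similarities. Co-occurrence counts shown on the slide (three tables):

| Word | Red | Tasty | Rapid | Second-hand | Sweet |
|------|----:|------:|------:|------------:|------:|
| Cherry | 2 | 3 | 0 | 0 | 1 |
| Strawberry | 3 | 1 | 0 | 0 | 2 |
| Car | 2 | 0 | 3 | 2 | 0 |
| Truck | 1 | 0 | 3 | 1 | 0 |

| Word | Red | Tasty | Rapid | Second-hand | Sweet |
|------|----:|------:|------:|------------:|------:|
| Cherry | 52 | 104 | 0 | 0 | 75 |
| Strawberry | 68 | 85 | 0 | 0 | 42 |
| Car | 27 | 0 | 65 | 35 | 0 |
| Truck | 12 | 0 | 43 | 72 | 0 |

| Word | Red | Tasty | Fast | Second-hand | Sweet |
|------|----:|------:|-----:|------------:|------:|
| Cherry | 752 | 604 | 0 | 1 | 575 |
| Strawberry | 868 | 584 | 2 | 0 | 642 |
| Car | 274 | 0 | 465 | 358 | 0 |
| Truck | 126 | 0 | 343 | 172 | 0 |

(Figure: Strawberry, Cherry, Car and Truck plotted along the "red" and "fast" dimensions.)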
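
The similarity matrix can be illustrated with the counts of the first table (contexts red, tasty, rapid, second-hand, sweet). This Python sketch uses cosine similarity, one common choice of measure; the slide does not say which measure was used, and the function names are illustrative.

```python
import math

# Rows of the first co-occurrence table; columns follow CONTEXTS.
CONTEXTS = ["red", "tasty", "rapid", "second-hand", "sweet"]
COUNTS = {
    "cherry": [2, 3, 0, 0, 1],
    "strawberry": [3, 1, 0, 0, 2],
    "car": [2, 0, 3, 2, 0],
    "truck": [1, 0, 3, 1, 0],
}


def cosine(u, v):
    """Cosine of the angle between two count vectors (0.0 if one is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def most_similar(word):
    """Nearest other word in the table according to cosine similarity."""
    others = [w for w in COUNTS if w != word]
    return max(others, key=lambda w: cosine(COUNTS[word], COUNTS[w]))


for word in COUNTS:
    print(word, "->", most_similar(word))
# cherry -> strawberry, strawberry -> cherry, car -> truck, truck -> car
```

Cosine ignores vector length, so it compares the profile of contexts rather than the raw frequency of each word; cherry and strawberry score 11/14 here, while cherry and car score only about 0.26.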

- 23. Deep learning for NLP • Syntactic parsing of sentence structure (figure omitted; surviving label: Input)

- 24. Deep learning for NLP • Could solve many problems – Morphological analysis: morpheme vectors combined with NNs – Semantics: words, phrases and logical expressions are vectors, compared using NNs to evaluate their similarity – Sentiment analysis: combining various analyses with NNs -> recursive NNs – Question answering: vectors of facts compared with NNs – … • But not every NLP problem in every context (e.g., domain-specific corpora …) • Requires large corpora to build word vectors, or reusing existing word vectors (built on Wikipedia and Gigaword) – http://nlp.stanford.edu/projects/glove/ – https://github.com/idio/wiki2vec/ • Requires expertise to define the layers, the vectors and the content of the NN

- 25. Outline of the talk • The Language and Knowledge duality in AI • Deep learning for NLP • Semantic relations • Finding semantic relations

- 26. Semantic relations, what do we mean? • Semantic relation … what do you have in mind? – Binary relations – Hypernymy … meronymy (hierarchical relations) – Causal, temporal, spatial relations – What about other kinds of relations? (Cat, eats, mouse), (« SimplyRed », plays, « The right thing »), (« Eiffel Tower », has-height, « 324 m ») (general relations); (artist, performs, piece of music, date, location) (n-ary relation) • Relation extraction from text: what do we have in mind? – The relation is expressed in a single sentence. – The relation is expressed in tables or tagged XML sections

- 27. Semantic relations, what do we mean? Research field vs. what is a relation • Linguistics (semantic relations, semantic roles, discourse relations): "A tree comprises at least a trunk, roots and branches." is annotated as "A tree [Plants] comprises [meronymy] at least a trunk, roots and branches." (tree = whole; trunk, roots, branches = parts) • Terminology (weak structure; stored in databases or SKOS models): (tree, has_parts, trunk), (tree, has_parts, roots) … in a gardening terminology • Information extraction (small set of classes; gazetteers contain lists of entity labels): looks for relations between instances, e.g.:

| Tree | Plantation year | Species | Branches |
|------|-----------------|---------|----------|
| Tree1 | 1990 | Oak | > 20 |
| Tree2 | 1995 | Oak | 15 |

- 28. Semantic relations, what do we mean? Research field vs. what is a relation • Domain ontology engineering – Formal (logic, RDF, OWL …) – Formal properties (transitivity …) used to infer new knowledge – Each relation is part of a network – May be shared or reused. Example: bot:Tree bot:has_part bot:Branch (diagram: Plant, Fungus, Cereals and Tree linked by is_a; Tree has-part Trunk, Root and Branch) • Semantic web – Independent triples that connect resources – Publicly available in data repositories, in W3C standard formats – Triples are connected with existing ones and with web ontologies. Example: bot:Tree bot:has-part bot:Branch and bot:Plant bot:has-part bot:Root, linked by rdfs:subClassOf
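
To illustrate how a formal property such as transitivity lets an ontology infer new knowledge, here is a small forward-chaining sketch in Python over plain (subject, predicate, object) tuples. bot:Leaf and the triple bot:Branch bot:has_part bot:Leaf are hypothetical additions, and the rule that a subclass inherits the has_part links of its superclass is a simplifying assumption; an OWL reasoner would express this through class restrictions instead.

```python
# Hypothetical triples; bot:Leaf and (Branch has_part Leaf) are added for illustration.
TRIPLES = {
    ("bot:Tree", "bot:has_part", "bot:Branch"),
    ("bot:Tree", "bot:has_part", "bot:Trunk"),
    ("bot:Branch", "bot:has_part", "bot:Leaf"),
    ("bot:Plant", "bot:has_part", "bot:Root"),
    ("bot:Tree", "rdfs:subClassOf", "bot:Plant"),
}


def infer(triples):
    """Apply two rules until no new triple appears (naive forward chaining)."""
    inferred = set(triples)
    while True:
        new = set()
        for s, p, o in inferred:
            for s2, p2, o2 in inferred:
                if o != s2 or p2 != "bot:has_part":
                    continue
                # Rule 1, transitivity: A has_part B, B has_part C => A has_part C
                # Rule 2, inheritance (simplification): A subClassOf B, B has_part C => A has_part C
                if p in ("bot:has_part", "rdfs:subClassOf"):
                    new.add((s, "bot:has_part", o2))
        if new <= inferred:
            return inferred
        inferred |= new


for triple in sorted(infer(TRIPLES) - TRIPLES):
    print(triple)
```

The two inferred triples are that a Tree has a Leaf (through Branch) and a Root (through its superclass Plant); neither is stated explicitly.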

- 29. Example: tree in DBpedia (Figure: dbpedia-owl:tree, dbpedia-owl:Species, dbpedia-owl:Place)

- 30. Example: Plants in DBpedia (Figure: dbpedia-owl:PhysicalEntity, dbpedia-owl:Organism and dbpedia-owl:Plant linked by rdfs:subClassOf; dbpedia-owl:Plant owl:sameAs yago:WordNet_Plant_100017222; instances dbpedia-owl:Acer_Stonebergae, dbpedia-owl:Alopecurus_carolinianus, dbpedia-owl:Alsmithia_longipes, dbpedia-owl:… linked to dbpedia-owl:Plant by rdf:type)

- 31. Outline of the talk • The Language and Knowledge duality in AI • Deep learning for NLP • Semantic relations • Finding semantic relations

- 32. Finding semantic relations, some parameters • Knowledge sources – Human experts, text – Existing semantic resources – Domain-specific vs general knowledge • Text collection(s) – Size, domain-specific vs general – Structure, quality of writing – Textual genre (knowledge-rich text?) • Target representations – Input / output format of the process – Nature of the semantic relation

- 33. Finding semantic relations, some parameters • Extraction techniques from text – “obvious” language regularities, known relations and classes (or entities) -> Patterns – “more implicit” language regularities, medium size corpora, open list of classes/entities -> supervised learning – Very large corpora, unexpected relations -> unsupervised learning • Validation – What makes a relation representation valid? Relevant?

- 34. Historic perspective on relation extraction techniques • Early period: around 1990 – Patterns (Hearst, 1992) to explore definitions – Learning selectional preferences (Resnik) – Machine learning: ASIUM (Faure, Nedellec) – Relations between classes • From 2000 to 2010: more patterns, more learning – Association rules (Maedche & Staab, 2000) – Supervised learning from positive / negative examples – Joint use of various methods (Malaisé, 2005), Text2Onto (Cimiano, 2005), RelExt – Relations between entities • Since 2005: open relation extraction – Semi-supervised learning from small sets of data – Unsupervised learning: KnowItAll (Etzioni et al., 2005), TextRunner (Banko, 2007) – Distant supervision (using a KB); deep learning – Very large corpora (web)

- 35. Pattern-based relation extraction • Hearst, 1992. Patterns for hypernymy in English “Y such as X ((, X)* (, and|or ) X)” “such Y as X” “X or other Y” “X and other Y” “Y including X” “Y, especially X” • A shared list of patterns for French: MAR-REL – CRISTAL project (linguistics and NLP) – 3 types of binary relations: hypernymy, meronymy, cause – UIMA format – Evaluation on various corpora – http://redac.univ-tlse2.fr/misc/mar-rel_fr.html
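
Hearst's patterns can be approximated with regular expressions. This Python sketch is a simplification, not Hearst's implementation or MAR-REL: it assumes every noun phrase is a single word (no chunker or parser), which is exactly the kind of rigidity discussed on the following slides.

```python
import re

# Simplifying assumption: every noun phrase is a single word (no chunker or parser).
W = r"\b(?!(?:and|or|other)\b)[A-Za-z]+"
LIST = rf"{W}(?:, {W})*(?:,? (?:and|or) {W})?"  # "X", "X, X and X", "X, X, or X"

# (regex, group holding the hypernym Y, group holding the hyponym list X)
HEARST = [
    (re.compile(rf"({W}),? such as ({LIST})"), 1, 2),           # Y such as X
    (re.compile(rf"such ({W}) as ({LIST})"), 1, 2),             # such Y as X
    (re.compile(rf"({LIST}),? (?:or|and) other ({W})"), 2, 1),  # X or/and other Y
    (re.compile(rf"({W}),? including ({LIST})"), 1, 2),         # Y including X
    (re.compile(rf"({W}), especially ({LIST})"), 1, 2),         # Y, especially X
]


def hearst_pairs(sentence):
    """Return the (hyponym, hypernym) pairs matched by the patterns."""
    pairs = set()
    for regex, y_group, x_group in HEARST:
        for match in regex.finditer(sentence):
            hypernym = match.group(y_group)
            for hyponym in re.findall(W, match.group(x_group)):
                pairs.add((hyponym, hypernym))
    return pairs


print(hearst_pairs("works by such authors as Herrick, Goldsmith, and Shakespeare"))
```

The word pattern `W` refuses "and", "or" and "other" as list items, so coordinated enumerations are split into individual hyponyms.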

- 36. Tuning a pattern … an endless effort? • On appelle route nationale une route gérée par l’état. ("A road managed by the State is called a national road.") • Sur cette carte, on symbolise par un triangle un sommet de plus de 2000m. ("On this map, a summit higher than 2000 m is symbolised by a triangle.") • Il appelle souvent son chat la nuit. ("He often calls his cat at night.") -> error • On dénommait Louis-Philippe « la poire ». ("Louis-Philippe used to be called 'the pear'.") -> missed • On appellera dans la suite de ce mémoire relation lexicale une relation qui … ("In the rest of this thesis, a relation that … will be called a lexical relation.") -> missed
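
The outcomes above can be reproduced with a deliberately naive regular expression for the French naming pattern "appelle <term> <determiner> <definition>". The pattern is hypothetical (it is not the MAR-REL pattern); it shows how one surface form yields a correct match, a false positive, and misses for a synonym verb or another tense.

```python
import re

# Hypothetical, deliberately naive pattern: "appelle <term> <determiner> <definition>".
NAIVE = re.compile(
    r"\bappelle (?P<term>[\w’' -]+?) (?P<definition>(?:un|une|le|la|les) .+?)\.?$"
)


def match_definition(sentence):
    """Return (term, definition) if the naive pattern matches, else None."""
    m = NAIVE.search(sentence)
    return (m.group("term"), m.group("definition")) if m else None


examples = [
    "On appelle route nationale une route gérée par l’état.",  # correct match
    "Il appelle souvent son chat la nuit.",  # false positive
    "On dénommait Louis-Philippe « la poire ».",  # missed: another verb
    "On appellera dans la suite de ce mémoire relation lexicale une relation qui …",  # missed: future tense
]
for sentence in examples:
    print(match_definition(sentence))
```

Fixing the false positive (e.g., requiring the impersonal subject "on") and the misses (adding verbs and tenses) each need a new pattern variant, which is why tuning never really ends.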

- 37. Pattern-based relation extraction, known issues • A tree comprises at least a trunk, roots and branches. • With branches reaching the ground, the willow is an ornamental tree. • The tree of the neighbor has been delimbed. • He climbs on the branches of the tree. • This tree is wonderful. Its branches reach the ground. • Plant tangerine trees in a sheltered place out of the wind. Issues: • Comprises (verb): lexical variation; the enumeration lists various parts; modality (exactly, at least, at most, often, …) • With: a meronymy marker only in some genres (such as catalogs or biology documents); insertion between the arguments • Delimbed: term and pattern are in the same word; implicitness: background knowledge is required to infer delimbed -> has_part branches (and the branches are cut) • Of: very ambiguous marker; polysemy is reduced in [verb N1 of N2] • Its: reference; two sentences must be taken into account • Out of: negative form, which raises a representation issue

- 38. Pattern-based relation extraction, other issues • Not enough flexibility – Unable to handle (unexpected) variations – Many relations are missed – Need adaptation to be relevant on a new corpus • Matching between the sentence and the pattern is too strict • Generic patterns – Widely used, with poor results (no surprise) – Often used as a baseline • Building relevant domain- or corpus-specific patterns is time-consuming and difficult

- 39. Using ML to learn patterns (1) • Patterns are seen (and stored) as lexicalizations of ontology properties • Patterns are “extracted” from syntactic dependencies between related entities (in triples) • Assumes that patterns are structured around ONE lexical entry • Lemon format for lexical ontologies • Entries can be frames. Reference: S. Walter, C. Unger, P. Cimiano. ATOLL: A framework for the automatic induction of ontology lexica. DKE (94), 148-162 (2014)

- 41. Using ML to learn patterns (2) • Property: Dbpedia:spouse • Example sentences: “Michelle Obama is the wife of Barack Obama, the current president.” “Michelle Obama allegedly told her husband, Barack Obama, to ..” “Michelle Obama, the 44th first lady and wife of President Barack” • Find all lexicalizations of the entities: Michelle Obama, Mrs. Obama, Michelle Robinson …

- 42. Using ML to learn patterns (3) • Pattern = shortest path between the 2 entities in the dependency graph: [MichelleObama (subject), wife (root), of (preposition), BarackObama (object)] • Lexical entry in the ontology
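
The shortest-path idea can be sketched with a breadth-first search over an undirected view of a dependency graph. The parse of "Michelle Obama is the wife of Barack Obama" below is hand-written and hypothetical (labels in the style of Stanford basic dependencies), with each entity already merged into a single node.

```python
from collections import deque

# Hand-written, hypothetical dependency parse: (head, dependent, label).
EDGES = [
    ("wife", "MichelleObama", "nsubj"),
    ("wife", "is", "cop"),
    ("wife", "the", "det"),
    ("wife", "of", "prep"),
    ("of", "BarackObama", "pobj"),
]


def shortest_path(edges, source, target):
    """Breadth-first search ignoring edge direction; returns the node list or None."""
    graph = {}
    for head, dep, _label in edges:
        graph.setdefault(head, []).append(dep)
        graph.setdefault(dep, []).append(head)
    previous = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:  # walk back to the source
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbour in graph.get(node, []):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None


print(shortest_path(EDGES, "MichelleObama", "BarackObama"))
# ['MichelleObama', 'wife', 'of', 'BarackObama']
```

The determiner and copula fall outside the path, and the root of the path ("wife") is the single lexical entry around which the pattern is structured.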

- 43. Finding semantic relations: what can large corpora and machine learning do for you? • Learning patterns – Poor results – Requires very large data sets – Reasonable for general knowledge • Learning relations – Much more relevant – Large variety of approaches in the state of the art – Key step: feature selection

- 44. Using ML to learn relations: hypotheses • A large variety of learning algorithms – Classification – Regression – Probabilistic models (naive Bayes) – Linear separation … • Classification = grouping similar learning objects – Objects are built from the input sentences in which the two arguments occur – Objects are vectors, graphs or lists of features – Similarity measure: cosine or Euclidean distance, or sequence alignment for graphs

- 45. Main stages of the process 1. Preprocessing – Tagging the entities to be considered as arguments – NLP preprocessing 2. Object representation – Collect sentences where pairs co-occur – Identify features – Represent sentences with features 3. Training the algorithm (if supervised) 4. Running the trained model
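
Stages 1 and 2 can be sketched in Python as follows. All function names are hypothetical; a gazetteer lookup stands in for real entity tagging, and the features are simple bags of words between and around the two arguments, with a window parameter defaulting to 3. The resulting objects would then feed stages 3 and 4.

```python
import itertools
import re


def tag_entities(sentence, gazetteer):
    """Stage 1 (simplified): tokenise and mark tokens found in a gazetteer."""
    tokens = re.findall(r"\w+|[^\w\s]", sentence)
    positions = [i for i, tok in enumerate(tokens) if tok.lower() in gazetteer]
    return tokens, positions


def window_features(tokens, i, j, window=3):
    """Stage 2: words between the two arguments plus `window` words on each side."""
    left, right = min(i, j), max(i, j)
    feats = {}
    for tok in tokens[left + 1:right]:
        feats["between=" + tok.lower()] = 1
    for tok in tokens[max(0, left - window):left]:
        feats["before=" + tok.lower()] = 1
    for tok in tokens[right + 1:right + 1 + window]:
        feats["after=" + tok.lower()] = 1
    return feats


def build_objects(sentences, gazetteer, window=3):
    """Collect every pair of tagged entities co-occurring in a sentence, with its features."""
    objects = []
    for sentence in sentences:
        tokens, positions = tag_entities(sentence, gazetteer)
        for i, j in itertools.combinations(positions, 2):
            objects.append(((tokens[i], tokens[j]), window_features(tokens, i, j, window)))
    return objects


objects = build_objects(["A tree comprises at least a trunk, roots and branches."],
                        {"tree", "trunk", "roots", "branches"})
print(objects[0])
```

Four tagged terms in one sentence give six candidate pairs, which illustrates why the number of learning objects grows quickly with entity density.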

- 46. Example 2: learning domain-specific relations using ALVIS-RE (1) 1. Preprocessing (AlvisNLP/ML platform) – Tokenization into words and sentences – Lemmatization, POS tagging using the CCS parser – Named entity tagging (canonical form) – Dependency relations (graph) – Semantic relations are added when known (positive examples) – Word sequence relations (wordPath). Reference: D. Valsamou, Automatic Information Extraction from scientific scholar to build a network of biological regulations involved in the seed development of Arabidopsis Thaliana. ED STIC, Univ. Paris Sud, 2017

- 47. Example 2: learning domain-specific relations using ALVIS-RE (2) 2. Object representation • Representation as a path • 3 experiments: dependencies, surface (wordPath relations) and a combination of the 2 • Find the shortest path between the terms Arg1 and Arg2.

- 48. Example 2: learning domain-specific relations using ALVIS-RE (3) 2. Object representation • Paths are turned into sequences of (word, relation) elements • Empty nodes (gaps) are added where needed, each with a weight (gap penalty) • Weights are assigned to some words
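
Comparing two such paths can be sketched as a global alignment (Needleman-Wunsch style) in which each gap costs a penalty and a matched element scores its weight. This is a generic illustration with hypothetical paths, relation labels and weights; it is not the exact similarity or kernel implemented in ALVIS-RE.

```python
def alignment_score(seq_a, seq_b, gap_penalty=1.0, weights=None):
    """Global alignment score of two paths (sequences of words and relation labels)."""
    weights = weights or {}

    def sim(x, y):
        # A match scores the element's weight (default 1.0); a mismatch scores 0.
        return weights.get(x, 1.0) if x == y else 0.0

    n, m = len(seq_a), len(seq_b)
    score = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = -gap_penalty * i  # prefix of seq_a aligned with gaps only
    for j in range(1, m + 1):
        score[0][j] = -gap_penalty * j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            score[i][j] = max(
                score[i - 1][j - 1] + sim(seq_a[i - 1], seq_b[j - 1]),  # align two elements
                score[i - 1][j] - gap_penalty,  # gap inserted in seq_b
                score[i][j - 1] - gap_penalty,  # gap inserted in seq_a
            )
    return score[n][m]


activates = ["Arg1", "nsubj", "activates", "dobj", "Arg2"]
inhibits = ["Arg1", "nsubj", "inhibits", "dobj", "Arg2"]
shorter = ["Arg1", "nsubj", "activates", "Arg2"]
print(alignment_score(activates, inhibits))  # 4.0
print(alignment_score(activates, shorter))   # 3.0
```

Raising the weight of a discriminative word (e.g., `weights={"activates": 2.0}`) makes paths that share it more similar, which is the role of the word weights on the slide.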

- 49. Example 2: learning domain-specific relations using ALVIS-RE (4) 4. Classification – SVM algorithm – Improved using semantic information • Distributional representations (DISCO or Word2Vec) • Classes manually related to each other • Classes from WordNet • Evaluation on a real corpus

- 50. Example 3: learning relations from enumerative structures (figure: an enumerative structure whose items are linked to the primer by IS_A). Learning relations from a parallel enumerative structure = – a classification task to identify the relation (IS_A, part_Of, other) – term extraction to identify the primer and the items. Reference: J.-P. Fauconnier, M. Kamel. Discovering Hypernymy Relations using Text Layout (regular paper). Joint Conference on Lexical and Computational Semantics (SEM 2015), ACL, 2015.

- 51. Relation extraction: learning relations from enumerative structures • Corpus – 745 enumerative structures from Wikipedia pages – 3 relation types: taxonomic, ontological_non_taxonomic, non_ontological • Classification task – Feature definition – Automatic evaluation of features – 3 algorithms are compared: SVM, Maximum Entropy and a majority baseline – Training of the 2 learning algorithms • Results – 82% F-measure for SVM – Best result with a two-step process (first ontological yes/no, used as a feature, then taxonomic yes/no)

- 52. Example 4: comparing patterns and ML for hypernym relation extraction • Overall objective – Define various relation extraction techniques – Adapted to the various ways relations are expressed • Sempedia project – To enrich the French DBpedia – To extract relations from Wikipedia pages • Experiment on disambiguation pages – Contain definitions and many hypernym relations – General knowledge • Techniques – Patterns – Basic pre-processing (no dependency parsing) – Distantly supervised learning

- 53. Example 4: Wikipedia disambiguation pages • Different ways to express hypernym relations in the same corpus (07/09/2017, Extracting hypernym relations)

- 54. Example 4: application to Wikipedia disambiguation pages • Corpora – Reference corpus: 20 pages; manual annotation (entities and relations linking entities) – Training corpus: all remaining French disambiguation pages (5904 pages) • Semantic resource: BabelNet (www.babelnet.org) – Very large multilingual semantic network with about 14 million entries (Babel synsets) – Connects concepts and named entities with semantic relations – Rich in hypernym relations • Features

- 55. Example 4: processing chain
  1. Preprocessing of the corpus (Wikipedia disambiguation pages), using TTG and a gazetteer of BabelNet terms, produces an annotated corpus.
  2. Term pair extraction yields candidates $(\langle T_1^1, T_1^2\rangle, \mathit{sent}_1)$, $(\langle T_2^1, T_2^2\rangle, \mathit{sent}_2)$, $(\langle T_3^1, T_3^2\rangle, \mathit{sent}_3)$, …
  3. Feature vectors are built and each pair is labelled with the semantic resource (BabelNet): positive examples $\{(\langle T_i^1, T_i^2\rangle, \mathit{sent}_i, \langle f_i^1, \dots, f_i^p\rangle, \mathit{pos})\}_i$ and negative examples $\{(\langle T_j^1, T_j^2\rangle, \mathit{sent}_j, \langle f_j^1, \dots, f_j^p\rangle, \mathit{neg})\}_j$, where $f^1, \dots, f^p$ are the features.
  4. The examples are split into a training set (4000 +, 4000 −) and a test set (2000 +, 2000 −).
  5. A binary logistic regression (MaxEnt) is trained on the training set, giving the learning model.
  6. Evaluation on the test set: precision, recall, F-measure.
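
The binary logistic regression (MaxEnt) step can be sketched in plain Python, trained by stochastic gradient ascent on sparse binary features. The toy feature names and examples are hypothetical, there is no regularisation, and this is not the implementation used in the experiment, which the slide does not detail.

```python
import math


def train_logreg(examples, epochs=200, lr=0.5):
    """Binary logistic regression by stochastic gradient ascent on the log-likelihood.

    examples: list of (set_of_binary_features, label), label 1 (pos) or 0 (neg).
    """
    weights, bias = {}, 0.0
    for _ in range(epochs):
        for feats, label in examples:
            z = bias + sum(weights.get(f, 0.0) for f in feats)
            p = 1.0 / (1.0 + math.exp(-z))  # P(label = 1 | features)
            grad = label - p
            bias += lr * grad
            for f in feats:
                weights[f] = weights.get(f, 0.0) + lr * grad
    return weights, bias


def predict(model, feats):
    """Return 1 (pos) when the linear score is positive, else 0 (neg)."""
    weights, bias = model
    return 1 if bias + sum(weights.get(f, 0.0) for f in feats) > 0 else 0


# Hypothetical toy examples: words found between the two terms of a candidate pair.
TRAIN = [
    ({"between=est", "between=un"}, 1),
    ({"between=est", "between=une"}, 1),
    ({"between=,", "between=un"}, 1),
    ({"between=et"}, 0),
    ({"between=de", "between=et"}, 0),
    ({"between=dans"}, 0),
]
model = train_logreg(TRAIN)
print(predict(model, {"between=est", "between=une"}))
```

Because only the features present in an example are updated, training stays cheap even with the very large, sparse feature spaces produced by POS and lemma windows.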

- 56. Example 4: application to Wikipedia disambiguation pages • Evaluation on the reference corpus – 688 true positive examples and 278 true negative examples – Best results with a window size of 3 – Comparison between 2 baselines and 2 models • Baseline1: generic lexico-syntactic patterns for French • Baseline2: generic patterns AND ad-hoc patterns for the disambiguation pages • Model_POSL: trained with vectors composed of POS and lemma features • Model_AllFeatures: trained with vectors composed of all features
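
Precision, recall and F-measure can be computed by comparing the set of predicted pairs with the reference pairs. In this Python sketch the reference pairs reuse examples quoted on slide 58, while the predicted set, including the wrong pair ("fontaine", "vin"), is hypothetical.

```python
def precision_recall_f1(gold, predicted):
    """Precision, recall and F1 (harmonic mean) of predicted pairs against gold pairs."""
    true_positives = len(gold & predicted)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


gold = {
    ("Louis Babel", "prêtre-missionnaire oblat"),
    ("Louis Babel", "explorateur du Nouveau-Québec"),
    ("fontaine", "robinet de cuivre"),
}
predicted = {
    ("Louis Babel", "prêtre-missionnaire oblat"),
    ("fontaine", "robinet de cuivre"),
    ("fontaine", "vin"),  # hypothetical false positive
}
print(precision_recall_f1(gold, predicted))
```

Here two of the three predictions are correct and two of the three reference pairs are found, so precision, recall and F-measure all equal 2/3.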

- 57. Example 4: application to Wikipedia disambiguation pages, discussion – Number of true positive hypernym relations per type of hypernym expression – Quantitative gain: machine learning identifies more examples at no pattern-development cost, with a systematic and less empirical approach. – Impact of the way relations are expressed: • ML performs as well as patterns on well-written text • Ad-hoc patterns perform (a little) better on poorly written text • ML can identify all forms of relation expressions (current patterns cannot identify relations with head modifiers)

- 58. Example 4: application to Wikipedia disambiguation pages • Examples correctly identified by ML that would require additional ad-hoc patterns (> extra cost): (1) Louis Babel, prêtre-missionnaire oblat et explorateur du Nouveau-Québec (1826-1912). ("Louis Babel, Oblate missionary priest and explorer of Nouveau-Québec (1826-1912).") -> <Louis Babel, prêtre-missionnaire oblat>, <Louis Babel, explorateur du Nouveau-Québec> (2) La fontaine a aussi désigné le “vaisseau de cuivre ou de quelque autre métal, où l’on garde de l’eau dans les maisons”, et encore le robinet de cuivre par où coule l’eau d’une fontaine, ou le vin d’un tonneau, ou quelque autre liqueur que ce soit. ("Fontaine has also designated the 'vessel of copper or some other metal in which water is kept in houses', and also the copper tap through which the water of a fountain, the wine of a barrel, or any other liquor flows.") -> <fontaine, robinet de cuivre>

- 59. Example 5: NN to extract relations from scientific papers • Corpus – Full scientific papers (ISTEX French project) – 15 years of the Nature journal (50 KB of text) • Open relation extraction with distant supervision – Semantic resource: NCIT (thesaurus) – Learning objects: vectors made of the word embedding vectors of a subset of the sentence lemmas (around the arguments) – Learning algorithm: Self-Organizing Maps • Results – 13 classes found, 5 of which are easy to interpret, each containing a majority of relations of one type – 80% accuracy for hypernym relations
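
A self-organizing map can be sketched in a few lines of Python: each unit of a one-dimensional map holds a prototype vector, and every input pulls its best-matching unit, and more weakly that unit's neighbours on the map, towards itself. The 2-D toy vectors stand in for the embedding-based learning objects; map size, learning-rate schedule and linear initialisation are illustrative assumptions, not the settings of the experiment.

```python
import math
import random


def best_unit(units, v):
    """Index of the unit whose prototype is closest (squared Euclidean) to v."""
    return min(range(len(units)), key=lambda k: sum((a - b) ** 2 for a, b in zip(units[k], v)))


def train_som(vectors, n_units=4, epochs=100, lr0=0.5, radius0=1.5, seed=0):
    """Train a 1-D self-organizing map and return the unit prototypes."""
    rng = random.Random(seed)
    dim = len(vectors[0])
    lo = [min(v[d] for v in vectors) for d in range(dim)]
    hi = [max(v[d] for v in vectors) for d in range(dim)]
    # Linear initialisation: prototypes evenly spaced between the data's min and max corners.
    units = [[lo[d] + (hi[d] - lo[d]) * k / (n_units - 1) for d in range(dim)]
             for k in range(n_units)]
    for epoch in range(epochs):
        frac = epoch / epochs
        lr = lr0 * (1.0 - frac)                    # learning rate decays linearly
        radius = max(radius0 * (1.0 - frac), 0.5)  # neighbourhood shrinks over time
        for v in rng.sample(vectors, len(vectors)):  # visit inputs in random order
            winner = best_unit(units, v)
            for k, unit in enumerate(units):
                h = math.exp(-((k - winner) ** 2) / (2 * radius ** 2))  # Gaussian neighbourhood
                for d in range(dim):
                    unit[d] += lr * h * (v[d] - unit[d])
    return units


group_a = [[0.0, 0.0], [0.05, 0.05], [0.1, 0.1]]
group_b = [[0.9, 0.9], [0.95, 0.95], [1.0, 1.0]]
units = train_som(group_a + group_b)
print([best_unit(units, v) for v in group_a + group_b])
```

Each unit then acts as a class; inspecting which relation types fall into each unit is the interpretation step that the next slide lists as a difficulty, and map size directly drives computation time.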

- 60. Example 5: NN to extract relations from scientific papers • Difficulties with SOM – Size of the map > computation time – Interpretation of the resulting classes – Evaluation of the recall • Limitations of supervised learning hypotheses – One sentence may contain more than 2 domain concepts or entities > arbitrary selection of the first 2 – 2 entities may be related by several relations in the semantic resource > which annotation? (figure: Person and Company linked by two relations, Work-in and holds) – The vocabulary in the corpus and in the semantic resource may differ • Supervised learning requires expertise to adjust – The number of iterations needed to get the optimal number of classes – The size of the map or the layers in the NN – The features that form the classified objects

- 61. Towards more complementarity. ML can be used • To learn patterns • To find relations in complex and long sentences • For open relation extraction, when the list of possible relations is not known • With distant supervision, when a domain resource is available • Deep learning makes the process fully automatic but requires very large corpora. Patterns can be used • As input to define features: tag sequences matching a pattern become features • As an "easy method" when regularities are obvious (cf. polysemy pages in Wikipedia) • To bootstrap learning by automatically identifying positive examples
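
Distant supervision, which both the learning and the bootstrapping uses above rely on, can be sketched as labelling every co-occurring entity pair with a knowledge base: a pair linked in the KB yields a positive example, any other pair a negative one. The KB content, sentences and naive substring matching are hypothetical simplifications.

```python
import itertools


def distant_labels(sentences, entities, kb):
    """Label every co-occurring entity pair: 'pos' if the KB links it, 'neg' otherwise.

    kb holds directed (e1, e2) pairs known to stand in the target relation.
    Entity spotting is naive substring matching, kept simple on purpose.
    """
    examples = []
    for sentence in sentences:
        found = sorted((e for e in entities if e in sentence), key=sentence.index)
        for e1, e2 in itertools.combinations(found, 2):
            if (e1, e2) in kb:
                examples.append(((e1, e2), sentence, "pos"))
            elif (e2, e1) in kb:
                examples.append(((e2, e1), sentence, "pos"))
            else:
                examples.append(((e1, e2), sentence, "neg"))
    return examples


KB = {("oak", "tree")}  # hypothetical (hyponym, hypernym) pair from a semantic resource
sentences = ["The oak is a tree.", "The tree grows in the garden."]
for example in distant_labels(sentences, ["oak", "tree", "garden"], KB):
    print(example)
```

The labelling assumes that a sentence mentioning two KB-linked entities expresses their relation, which is precisely the hypothesis whose limits slide 60 lists (several entities per sentence, several relations per pair, vocabulary mismatch).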

- 62. Conclusion • Context – Complexity and diversity of what we call semantic relation extraction – A lot of work has been done in designing and evaluating patterns for semantic relations • Many perspectives for improving relation extraction – Make better use of existing patterns – Collect results about the most relevant features and the most efficient representations to feed ML algorithms – Implement pre-processing chains (even for NN algorithms) for a larger set of languages – Study how well each technique performs on a variety of texts where relations are expressed in many ways – Design a platform where various methods can be used together
