Autoencoders in Deep Learning

1. Autoencoders are unsupervised neural networks that are useful for dimensionality reduction and clustering. They compress the input into a latent-space representation and then reconstruct the output from this representation.

2. Deep autoencoders stack multiple autoencoder layers to learn hierarchical representations of the data. Each layer is trained sequentially.

3. Variational autoencoders use probabilistic encoders and decoders to learn a Gaussian latent space. They can generate new samples from the learned data distribution.
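The compress-then-reconstruct idea in the summary above can be sketched in a few lines of NumPy. This is only an illustrative toy, not the deck's own code: the synthetic data, the layer sizes, the tanh encoder, the learning rate, and all variable names are assumptions chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (assumed): 200 points in 8 dimensions that lie on a 2-D subspace.
latent_true = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 8))
X = latent_true @ mixing

n_in, n_code = X.shape[1], 2              # bottleneck narrower than the input
W_enc = rng.normal(scale=0.1, size=(n_in, n_code))
W_dec = rng.normal(scale=0.1, size=(n_code, n_in))
lr = 0.01                                 # assumed learning rate

losses = []
for step in range(500):
    code = np.tanh(X @ W_enc)             # encoder: input -> latent code
    recon = code @ W_dec                  # decoder: latent code -> reconstruction
    err = recon - X
    losses.append(np.mean(err ** 2))      # mean squared reconstruction error

    # Backpropagate the reconstruction error through decoder and encoder.
    grad_recon = 2 * err / err.size
    grad_W_dec = code.T @ grad_recon
    grad_code = grad_recon @ W_dec.T
    grad_pre = grad_code * (1 - code ** 2)    # derivative of tanh
    grad_W_enc = X.T @ grad_pre

    W_dec -= lr * grad_W_dec
    W_enc -= lr * grad_W_enc

print(f"first loss {losses[0]:.4f}, last loss {losses[-1]:.4f}")
```

No labels are used anywhere: the training target is the input itself, which is what makes the model unsupervised. The two-column `code` array is the latent representation that could then be used for dimensionality reduction or clustering.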

Recommended

Autoencoder

HARISH R: An Autoencoder is a type of Artificial Neural Network used to learn efficient data codings in an unsupervised manner. The aim of an autoencoder is to learn a representation (encoding) for a set of data, typically for dimensionality reduction, by training the network to ignore signal “noise.”

Autoencoders

CloudxLab: An autoencoder is an artificial neural network that is trained to copy its input to its output. It consists of an encoder that compresses the input into a lower-dimensional latent-space encoding, and a decoder that reconstructs the output from this encoding. Autoencoders are useful for dimensionality reduction, feature learning, and generative modeling. When constrained by limiting the size of the latent space or by adding noise to the input, autoencoders are forced to learn efficient representations of the input data. For example, a linear autoencoder trained with mean squared error learns the same subspace as principal component analysis.
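The link between a linear autoencoder and PCA mentioned above can be checked numerically. The sketch below is a hedged illustration on assumed synthetic data: it computes the rank-k PCA reconstruction error from an SVD and trains a bias-free linear autoencoder with mean squared error by plain gradient descent. The sizes, learning rate, and step count are arbitrary choices, and how closely the two errors agree depends on how long training runs.

```python
import numpy as np

rng = np.random.default_rng(1)

# Assumed synthetic data: 300 correlated samples in 5 dimensions.
X = rng.normal(size=(300, 5)) @ rng.normal(size=(5, 5))
X = X - X.mean(axis=0)          # PCA works on centred data
X = X / X.std()                 # rescale so a fixed learning rate is stable
k = 2                           # size of the bottleneck / number of components

# PCA reconstruction using the top-k right singular vectors.
_, _, Vt = np.linalg.svd(X, full_matrices=False)
V_k = Vt[:k].T
pca_err = np.mean((X - X @ V_k @ V_k.T) ** 2)

# Linear autoencoder: no bias, no nonlinearity, mean squared error loss.
W_enc = rng.normal(scale=0.1, size=(5, k))
W_dec = rng.normal(scale=0.1, size=(k, 5))
lr = 0.01
for _ in range(5000):
    code = X @ W_enc
    err = code @ W_dec - X
    grad = 2 * err / err.size
    g_dec = code.T @ grad
    g_enc = X.T @ grad @ W_dec.T
    W_dec -= lr * g_dec
    W_enc -= lr * g_enc

ae_err = np.mean((X @ W_enc @ W_dec - X) ** 2)
print(f"PCA error: {pca_err:.4f}  linear autoencoder error: {ae_err:.4f}")
```

The autoencoder error can never fall below the PCA error, because the truncated SVD is the best possible rank-k approximation; training moves the autoencoder toward that bound. The learned weights need not equal the principal components themselves, only span the same subspace.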

Introduction to Autoencoders

Yan Xu: The document provides an introduction and overview of auto-encoders, including their architecture, learning and inference processes, and applications. It discusses how auto-encoders can learn hierarchical representations of data in an unsupervised manner by compressing the input into a code and then reconstructing the output from that code. Sparse auto-encoders and stacking multiple auto-encoders are also covered. The document uses handwritten digit recognition as an example application to illustrate these concepts.

Convolution Neural Network (CNN)

Suraj Aavula: This presentation explains CNNs using the image classification problem and was prepared with a view to understanding computer vision and its applications. I have tried to explain CNNs as simply as I can, based on my own understanding. The presentation gives beginners a brief idea of the CNN architecture and its different layers, with an example. Please refer to the references on the last slide for a better idea of how CNNs work. I have also discussed some (not all) types of CNN and the applications of computer vision.

Generative Adversarial Network (GAN)

Prakhar Rastogi: Basics of the GAN neural network.

A GAN is an advanced neural network technique for generating new data. The new data is generated based on past experience and raw data.

UNIT-4.pptx

NiharikaThakur32:

- Autoencoders are unsupervised neural networks that are used for dimensionality reduction and feature extraction. They compress the input into a latent-space representation and then reconstruct the output from this representation.

- The architecture of an autoencoder consists of an encoder that compresses the input into a latent space, a decoder that reconstructs the output from the latent space, and a reconstruction loss that is minimized during training.

- There are different types of autoencoders like undercomplete, convolutional, sparse, denoising, contractive, stacked, and deep autoencoders that apply additional constraints or have more complex architectures. Autoencoders can be used for tasks like image compression, anomaly detection, and feature learning.

Object detection presentation

AshwinBicholiya: The document describes a project that aims to develop a mobile application for real-time object and pose detection. The application will take in a real-time image as input and output bounding boxes identifying the objects in the image along with their class. The methodology involves preprocessing the image, then using the YOLO framework for object classification and localization. The goals are to achieve high accuracy detection that can be used for applications like vehicle counting and human activity recognition.

Introduction to Diffusion Models

Sangwoo Mo: The document provides an introduction to diffusion models. It discusses that diffusion models have achieved state-of-the-art performance in image generation, density estimation, and image editing. Specifically, it covers the Denoising Diffusion Probabilistic Model (DDPM) which reparametrizes the reverse distributions of diffusion models to be more efficient. It also discusses the Denoising Diffusion Implicit Model (DDIM) which generates rough sketches of images and then refines them, significantly reducing the number of sampling steps needed compared to DDPM. In summary, diffusion models have emerged as a highly effective approach for generative modeling tasks.

Autoencoder

Mehrnaz Faraz:

1. Autoencoders are unsupervised neural networks that are useful for dimensionality reduction and clustering. They learn an efficient coding of the input in an unsupervised manner.

2. Deep autoencoders, also known as stacked autoencoders, are autoencoders with multiple hidden layers that can learn hierarchical representations of the data. They are trained layer-by-layer to learn increasingly higher level features.

3. Variational autoencoders are a type of autoencoder that are probabilistic models, with the encoder output being the parameters of an assumed distribution such as Gaussian. They can generate new samples from the learned distribution.
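Point 3 says the encoder of a variational autoencoder outputs the parameters of an assumed distribution such as a Gaussian. A common way to use those parameters is the reparameterization trick together with a KL-divergence penalty toward a standard normal prior. The sketch below shows just those two pieces, under the assumption of a diagonal Gaussian parameterized by a mean and a log-variance; the function names and example values are hypothetical, and no encoder or decoder network is included.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z ~ N(mu, exp(log_var)) as mu + sigma * eps, with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), one value per sample."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=-1)

rng = np.random.default_rng(0)

# Assumed encoder outputs for a batch of two inputs and a 2-D latent space.
mu = np.array([[0.0, 0.0], [1.0, -1.0]])
log_var = np.zeros((2, 2))                 # unit variance in every dimension

z = reparameterize(mu, log_var, rng)       # latent samples fed to the decoder
kl = kl_to_standard_normal(mu, log_var)    # regularization term per sample
print(kl)                                  # first entry is 0: it matches the prior
```

Generating new samples then amounts to drawing `z` from the standard normal prior and passing it through the trained decoder.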

Recurrent Neural Network (RNN) | RNN LSTM Tutorial | Deep Learning Course | S...

Simplilearn: This presentation on recurrent neural networks will help you understand what a neural network is, which neural networks are popular, why we need recurrent neural networks, what a recurrent neural network is, how an RNN works, what the vanishing and exploding gradient problems are, and what LSTM (long short-term memory) is. You will also see a use-case implementation of LSTM. Neural networks used in deep learning consist of different layers connected to each other and are modeled on the structure and function of the human brain. They learn from huge volumes of data and use complex algorithms to train a neural net. The recurrent neural network works on the principle of saving the output of a layer and feeding it back to the input in order to predict the output of the layer. Now let's dive into this presentation and understand what an RNN is and how it actually works.

The following topics are explained in this recurrent neural networks tutorial:

1. What is a neural network?

2. Popular neural networks?

3. Why recurrent neural network?

4. What is a recurrent neural network?

5. How does an RNN work?

6. Vanishing and exploding gradient problem

7. Long short term memory (LSTM)

8. Use case implementation of LSTM

Simplilearn’s Deep Learning course will transform you into an expert in deep learning techniques using TensorFlow, the open-source software library designed to conduct machine learning & deep neural network research. With our deep learning course, you'll master deep learning and TensorFlow concepts, learn to implement algorithms, build artificial neural networks and traverse layers of data abstraction to understand the power of data and prepare you for your new role as deep learning scientist.

Why Deep Learning?

TensorFlow is one of the most popular software platforms used for deep learning and contains powerful tools to help you build and implement artificial neural networks.

Advancements in deep learning are being seen in smartphone applications, creating efficiencies in the power grid, driving advancements in healthcare, improving agricultural yields, and helping us find solutions to climate change. With this TensorFlow course, you’ll build expertise in deep learning models, learn to operate TensorFlow to manage neural networks and interpret the results.

And according to payscale.com, the median salary for engineers with deep learning skills tops $120,000 per year.

You can gain in-depth knowledge of Deep Learning by taking our Deep Learning certification training course. With Simplilearn’s Deep Learning course, you will prepare for a career as a Deep Learning engineer as you master concepts and techniques including supervised and unsupervised learning, mathematical and heuristic aspects, and hands-on modeling to develop algorithms. Those who complete the course will be able to:

Learn more at: https://www.simplilearn.com/

Recurrent Neural Networks, LSTM and GRU

ananth: Recurrent Neural Networks have been shown to be very powerful models as they can propagate context over several time steps. Because of this, they can be applied effectively to several problems in Natural Language Processing, such as Language Modelling, Tagging problems, and Speech Recognition. In this presentation we introduce the basic RNN model and discuss the vanishing gradient problem. We describe LSTM (Long Short Term Memory) and Gated Recurrent Units (GRU). We also discuss Bidirectional RNN with an example. RNN architectures can be considered as deep learning systems where the number of time steps can be considered as the depth of the network. It is also possible to build the RNN with multiple hidden layers, each having recurrent connections from the previous time steps that represent the abstraction both in time and space.

Deep neural networks

Si Haem: Deep learning and neural networks are inspired by biological neurons. Artificial neural networks (ANN) can have multiple layers and learn through backpropagation. Deep neural networks with multiple hidden layers did not work well until recent developments in unsupervised pre-training of layers. Experiments on MNIST digit recognition and NORB object recognition datasets showed deep belief networks and deep Boltzmann machines outperform other models. Deep learning is now widely used for applications like computer vision, natural language processing, and information retrieval.

Intro to Deep learning - Autoencoders

Akash Goel: This document provides an overview of autoencoders and their use in unsupervised learning for deep neural networks. It discusses the history and development of neural networks, including early work in the 1940s-1980s and more recent advances in deep learning. It then explains how autoencoders work by setting the target values equal to the inputs, describes variants like denoising autoencoders, and how stacking autoencoders can create deep architectures for tasks like document retrieval, facial recognition, and signal denoising.

Convolutional Neural Networks (CNN)

Gaurav Mittal: A comprehensive tutorial on Convolutional Neural Networks (CNN) which talks about the motivation behind CNNs and Deep Learning in general, followed by a description of the various components involved in a typical CNN layer. It explains the theory involved with the different variants used in practice and also gives a big picture of the whole network by putting everything together.

Next, there's a discussion of the various state-of-the-art frameworks being used to implement CNNs to tackle real-world classification and regression problems.

Finally, the implementation of CNNs is demonstrated by implementing the paper 'Age and Gender Classification Using Convolutional Neural Networks' by Hassner (2015).

Perceptron (neural network)

EdutechLearners:

i. Perceptron: representation and issues, classification, learning

ii. Linear separability

What Is Deep Learning? | Introduction to Deep Learning | Deep Learning Tutori...

Simplilearn: This Deep Learning presentation will help you understand what deep learning is, why we need it, and what its applications are, along with a detailed explanation of neural networks and how they work. Deep learning is inspired by the functioning of the human brain, as modeled by artificial neural networks. These networks, which represent the decision-making process of the brain, use complex algorithms that process data in a non-linear way, learning in an unsupervised manner to make choices based on the input. This Deep Learning tutorial is ideal for professionals with beginner to intermediate levels of experience. Now, let us dive deep into this topic and understand what deep learning actually is.

The following topics are explained in this Deep Learning presentation:

1. What is Deep Learning?

2. Why do we need Deep Learning?

3. Applications of Deep Learning

4. What is Neural Network?

5. Activation Functions

6. Working of Neural Network

There is booming demand for skilled deep learning engineers across a wide range of industries, making this deep learning course with TensorFlow training well-suited for professionals at the intermediate to advanced level of experience. We recommend this deep learning online course particularly for the following professionals:

1. Software engineers

2. Data scientists

3. Data analysts

4. Statisticians with an interest in deep learning

Cnn

Nirthika Rajendran: Convolutional neural networks (CNNs) learn multi-level features and perform classification jointly, and do so better than traditional approaches for image classification and segmentation problems. CNNs have four main components: convolution, nonlinearity, pooling, and fully connected layers. Convolution extracts features from the input image using filters. The nonlinearity, applied as an activation function, lets the network represent non-linear relationships. Pooling reduces dimensionality while retaining important information. The fully connected layer uses high-level features for classification. CNNs are trained end-to-end using backpropagation to minimize output errors by updating weights.
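The four components named above can be traced on a tiny example. The following NumPy sketch is an illustration under assumptions, not code from the deck: the 5x5 input, the 2x2 filter, ReLU as the nonlinearity, 2x2 max pooling, and the random fully connected weights are all made up for the example, and no training is performed.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the filter over the image without padding (cross-correlation)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Nonlinearity: keep positive responses, zero out the rest."""
    return np.maximum(x, 0.0)

def max_pool2d(x, size=2):
    """Non-overlapping max pooling; trailing rows/columns that do not fit are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    trimmed = x[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

rng = np.random.default_rng(0)
image = np.arange(25, dtype=float).reshape(5, 5)     # assumed toy "image"
kernel = np.array([[-1.0, 0.0], [0.0, 1.0]])         # assumed diagonal-difference filter

features = relu(conv2d_valid(image, kernel))         # convolution + nonlinearity -> 4x4
pooled = max_pool2d(features)                        # pooling -> 2x2
fc_weights = rng.normal(size=(pooled.size, 3))       # hypothetical 3-class output layer
scores = pooled.reshape(-1) @ fc_weights             # fully connected layer -> 3 scores
```

In a real CNN the filter values and the fully connected weights are learned by backpropagation rather than fixed by hand, and each layer usually has many filters.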

Machine Learning: Introduction to Neural Networks

Francesco Collova':

1. Machine learning involves developing algorithms that can learn from data and improve their performance over time without being explicitly programmed.

2. Neural networks are a type of machine learning algorithm inspired by the human brain that can perform both supervised and unsupervised learning tasks.

3. Supervised learning involves using labeled training data to infer a function that maps inputs to outputs, while unsupervised learning involves discovering hidden patterns in unlabeled data through techniques like clustering.

Generative adversarial networks

남주 김: Generative Adversarial Networks (GANs) are a class of machine learning frameworks where two neural networks contest with each other in a game. A generator network generates new data instances, while a discriminator network evaluates them for authenticity, classifying them as real or generated. This adversarial process allows the generator to improve over time and generate highly realistic samples that can pass for real data. The document provides an overview of GANs and their variants, including DCGAN, InfoGAN, EBGAN, and ACGAN models. It also discusses techniques for training more stable GANs and escaping issues like mode collapse.

Recurrent neural networks rnn

Kuppusamy P: The document discusses recurrent neural networks (RNNs) and long short-term memory (LSTM) networks. It provides details on the architecture of RNNs including forward and back propagation. LSTMs are described as a type of RNN that can learn long-term dependencies using forget, input and output gates to control the cell state. Examples of applications for RNNs and LSTMs include language modeling, machine translation, speech recognition, and generating image descriptions.

Perceptron

Nagarajan:

1. A perceptron is a basic artificial neural network that can learn linearly separable patterns. It takes weighted inputs, applies an activation function, and outputs a single binary value.

2. Multilayer perceptrons can learn non-linear patterns by using multiple layers of perceptrons with weighted connections between them. They were developed to overcome limitations of single-layer perceptrons.

3. Perceptrons are trained using an error-correction learning rule called the delta rule or the least mean squares algorithm. Weights are adjusted to minimize the error between the actual and target outputs.
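As a concrete illustration of error-correction learning on a linearly separable pattern, the sketch below trains a single perceptron on the logical AND function. It uses the classic perceptron update with a step activation, which is one member of the family the summary refers to; the delta rule / least mean squares variant applies the same kind of update to a linear output instead. The learning rate, epoch count, and zero initialization are assumptions.

```python
import numpy as np

# Truth table for logical AND: linearly separable, so a perceptron can learn it.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1], dtype=float)

w = np.zeros(2)     # one weight per input
b = 0.0             # bias term
lr = 1.0            # assumed learning rate

def predict(x):
    """Weighted sum followed by a step activation, giving a binary output."""
    return 1.0 if x @ w + b > 0 else 0.0

for epoch in range(20):
    for x_i, t_i in zip(X, y):
        error = t_i - predict(x_i)      # target minus actual output
        w += lr * error * x_i           # adjust weights in proportion to the error
        b += lr * error

predictions = np.array([predict(x_i) for x_i in X])
print(predictions)                      # matches y once training has converged
```

Swapping the targets for XOR would never converge with this single unit, which is the limitation that multilayer perceptrons were developed to overcome.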

Optimization for Deep Learning

Sebastian Ruder: A talk on optimization for deep learning, which gives an overview of gradient descent optimization algorithms and highlights some current research directions.

Hyperparameter Tuning

Jon Lederman: The document discusses hyperparameters and hyperparameter tuning in deep learning models. It defines hyperparameters as parameters that govern how the model parameters (weights and biases) are determined during training, in contrast to model parameters which are learned from the training data. Important hyperparameters include the learning rate, number of layers and units, and activation functions. The goal of training is for the model to perform optimally on unseen test data. Model selection, such as through cross-validation, is used to select the optimal hyperparameters. Training, validation, and test sets are also discussed, with the validation set used for model selection and the test set providing an unbiased evaluation of the fully trained model.

Simple Introduction to AutoEncoder

Jun Lang: The document introduces autoencoders, which are neural networks that compress an input into a lower-dimensional code and then reconstruct the output from that code. It discusses how autoencoders can be pre-trained without labels using restricted Boltzmann machines and then trained to minimize the reconstruction error. Autoencoders can be used for dimensionality reduction, document retrieval by compressing documents into codes, and data visualization by compressing high-dimensional data points into 2D for plotting with different categories colored separately.

Feedforward neural network

Sopheaktra YONG: These slides were prepared for the lectures-in-turn challenge within the social informatics study group at Kyoto University.

Support Vector Machines ( SVM )

Mohammad Junaid Khan: Welcome to Supervised Machine Learning and Data Science.

Algorithms for building models: Support Vector Machines.

An explanation of the SVM classification algorithm, with code in Python.

Introduction to Recurrent Neural Network

Knoldus Inc.: The document provides an introduction to recurrent neural networks (RNNs). It discusses how RNNs differ from feedforward neural networks in that they have internal memory and can use their output from the previous time step as input. This allows RNNs to process sequential data like time series. The document outlines some common RNN types and explains the vanishing gradient problem that can occur in RNNs due to multiplication of small gradient values over many time steps. It discusses solutions to this problem like LSTMs and techniques like weight initialization and gradient clipping.
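Gradient clipping, mentioned above as one of the stabilizing techniques, is usually applied to the opposite failure mode, exploding gradients: if the combined gradient norm exceeds a threshold, every gradient is scaled down by the same factor. The sketch below shows clipping by global norm; the function name, the threshold, and the example gradients are hypothetical.

```python
import numpy as np

def clip_by_global_norm(grads, max_norm):
    """Rescale all gradients together so their combined L2 norm is at most max_norm."""
    total_norm = np.sqrt(sum(np.sum(g ** 2) for g in grads))
    scale = min(1.0, max_norm / (total_norm + 1e-12))   # never scale gradients up
    return [g * scale for g in grads], total_norm

# Assumed gradients for two parameter tensors of different shapes.
grads = [np.array([3.0, 0.0]), np.array([[0.0, 4.0]])]
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
norm_after = np.sqrt(sum(np.sum(g ** 2) for g in clipped))
print(norm_before, norm_after)
```

Scaling all tensors by one shared factor keeps the direction of the update unchanged and only limits its length.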

Optimization in Deep Learning

Yan Xu: This document summarizes various optimization techniques for deep learning models, including gradient descent, stochastic gradient descent, and variants like momentum, Nesterov's accelerated gradient, AdaGrad, RMSProp, and Adam. It provides an overview of how each technique works and comparisons of their performance on image classification tasks using MNIST and CIFAR-10 datasets. The document concludes by encouraging attendees to try out the different optimization methods in Keras and provides resources for further deep learning topics.
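To make the difference between some of these update rules concrete, the sketch below applies plain gradient descent, momentum, and Adam to a small quadratic function. It is a toy under stated assumptions, not the deck's Keras experiments: the objective, starting point, step counts, and hyperparameter values are all arbitrary illustrative choices.

```python
import numpy as np

def grad(theta):
    """Gradient of f(theta) = 0.5 * (theta[0]**2 + 10 * theta[1]**2)."""
    return np.array([1.0, 10.0]) * theta

def gradient_descent(theta, steps=200, lr=0.02):
    for _ in range(steps):
        theta = theta - lr * grad(theta)
    return theta

def sgd_momentum(theta, steps=200, lr=0.02, beta=0.9):
    velocity = np.zeros_like(theta)
    for _ in range(steps):
        velocity = beta * velocity - lr * grad(theta)   # decaying sum of past gradients
        theta = theta + velocity
    return theta

def adam(theta, steps=200, lr=0.1, b1=0.9, b2=0.999, eps=1e-8):
    m = np.zeros_like(theta)    # running mean of gradients
    v = np.zeros_like(theta)    # running mean of squared gradients
    for t in range(1, steps + 1):
        g = grad(theta)
        m = b1 * m + (1 - b1) * g
        v = b2 * v + (1 - b2) * g * g
        m_hat = m / (1 - b1 ** t)           # bias correction for the zero start
        v_hat = v / (1 - b2 ** t)
        theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta

theta0 = np.array([1.0, 1.0])
for name, optimizer in [("gd", gradient_descent), ("momentum", sgd_momentum), ("adam", adam)]:
    final = optimizer(theta0.copy())
    print(name, np.linalg.norm(final))      # distance from the minimum at the origin
```

On a deterministic quadratic like this, all three move toward the minimum at the origin; the practical differences discussed in the deck show up on noisy, high-dimensional losses.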

autoencoder-190813144108.pptx

kiran814572:

- Autoencoders are unsupervised artificial neural networks useful for dimensionality reduction and clustering of unlabeled data. They compress the input into a latent-space representation and then reconstruct the output from this representation.

- Deep autoencoders, also known as stacked autoencoders, can learn hierarchical representations of the data by stacking multiple autoencoders together. The features learned by one autoencoder are used as the input for the next autoencoder in the stack.

- Variational autoencoders use probabilistic encoding and decoding to model the data distribution and can generate new samples from this distribution. They have been used for tasks like image generation, data manipulation, and unsupervised learning.

autoencoder-190813145130.pdf

Sameer Gulshan: This document discusses various types of autoencoders, including stacked autoencoders, denoising autoencoders, sparse autoencoders, and variational autoencoders. Autoencoders are unsupervised neural networks that learn efficient data encodings in order to reconstruct input data. They can be used for tasks like dimensionality reduction, feature learning, and generating new data samples. Variational autoencoders additionally use probabilistic encodings and decoders to learn latent space representations of input data.

Ad

More Related Content

What's hot (20)

Autoencoder

AutoencoderMehrnaz Faraz 1. Autoencoders are unsupervised neural networks that are useful for dimensionality reduction and clustering. They learn an efficient coding of the input in an unsupervised manner.

2. Deep autoencoders, also known as stacked autoencoders, are autoencoders with multiple hidden layers that can learn hierarchical representations of the data. They are trained layer-by-layer to learn increasingly higher level features.

3. Variational autoencoders are a type of autoencoder that are probabilistic models, with the encoder output being the parameters of an assumed distribution such as Gaussian. They can generate new samples from the learned distribution.

Recurrent Neural Network (RNN) | RNN LSTM Tutorial | Deep Learning Course | S...

Recurrent Neural Network (RNN) | RNN LSTM Tutorial | Deep Learning Course | S...Simplilearn This presentation on Recurrent Neural Network will help you understand what is a neural network, what are the popular neural networks, why we need recurrent neural network, what is a recurrent neural network, how does a RNN work, what is vanishing and exploding gradient problem, what is LSTM and you will also see a use case implementation of LSTM (Long short term memory). Neural networks used in Deep Learning consists of different layers connected to each other and work on the structure and functions of the human brain. It learns from huge volumes of data and used complex algorithms to train a neural net. The recurrent neural network works on the principle of saving the output of a layer and feeding this back to the input in order to predict the output of the layer. Now lets deep dive into this presentation and understand what is RNN and how does it actually work.

Below topics are explained in this recurrent neural networks tutorial:

1. What is a neural network?

2. Popular neural networks?

3. Why recurrent neural network?

4. What is a recurrent neural network?

5. How does an RNN work?

6. Vanishing and exploding gradient problem

7. Long short term memory (LSTM)

8. Use case implementation of LSTM

Simplilearn’s Deep Learning course will transform you into an expert in deep learning techniques using TensorFlow, the open-source software library designed to conduct machine learning & deep neural network research. With our deep learning course, you'll master deep learning and TensorFlow concepts, learn to implement algorithms, build artificial neural networks and traverse layers of data abstraction to understand the power of data and prepare you for your new role as deep learning scientist.

Why Deep Learning?

It is one of the most popular software platforms used for deep learning and contains powerful tools to help you build and implement artificial neural networks.

Advancements in deep learning are being seen in smartphone applications, creating efficiencies in the power grid, driving advancements in healthcare, improving agricultural yields, and helping us find solutions to climate change. With this Tensorflow course, you’ll build expertise in deep learning models, learn to operate TensorFlow to manage neural networks and interpret the results.

And according to payscale.com, the median salary for engineers with deep learning skills tops $120,000 per year.

You can gain in-depth knowledge of Deep Learning by taking our Deep Learning certification training course. With Simplilearn’s Deep Learning course, you will prepare for a career as a Deep Learning engineer as you master concepts and techniques including supervised and unsupervised learning, mathematical and heuristic aspects, and hands-on modeling to develop algorithms. Those who complete the course will be able to:

Learn more at: https://www.simplilearn.com/

Recurrent Neural Networks, LSTM and GRU

Recurrent Neural Networks, LSTM and GRUananth Recurrent Neural Networks have shown to be very powerful models as they can propagate context over several time steps. Due to this they can be applied effectively for addressing several problems in Natural Language Processing, such as Language Modelling, Tagging problems, Speech Recognition etc. In this presentation we introduce the basic RNN model and discuss the vanishing gradient problem. We describe LSTM (Long Short Term Memory) and Gated Recurrent Units (GRU). We also discuss Bidirectional RNN with an example. RNN architectures can be considered as deep learning systems where the number of time steps can be considered as the depth of the network. It is also possible to build the RNN with multiple hidden layers, each having recurrent connections from the previous time steps that represent the abstraction both in time and space.

Deep neural networks

Deep neural networksSi Haem Deep learning and neural networks are inspired by biological neurons. Artificial neural networks (ANN) can have multiple layers and learn through backpropagation. Deep neural networks with multiple hidden layers did not work well until recent developments in unsupervised pre-training of layers. Experiments on MNIST digit recognition and NORB object recognition datasets showed deep belief networks and deep Boltzmann machines outperform other models. Deep learning is now widely used for applications like computer vision, natural language processing, and information retrieval.

Intro to Deep learning - Autoencoders

Intro to Deep learning - Autoencoders Akash Goel This document provides an overview of autoencoders and their use in unsupervised learning for deep neural networks. It discusses the history and development of neural networks, including early work in the 1940s-1980s and more recent advances in deep learning. It then explains how autoencoders work by setting the target values equal to the inputs, describes variants like denoising autoencoders, and how stacking autoencoders can create deep architectures for tasks like document retrieval, facial recognition, and signal denoising.

Convolutional Neural Networks (CNN)

Convolutional Neural Networks (CNN)Gaurav Mittal A comprehensive tutorial on Convolutional Neural Networks (CNN) which talks about the motivation behind CNNs and Deep Learning in general, followed by a description of the various components involved in a typical CNN layer. It explains the theory involved with the different variants used in practice and also, gives a big picture of the whole network by putting everything together.

Next, there's a discussion of the various state-of-the-art frameworks being used to implement CNNs to tackle real-world classification and regression problems.

Finally, the implementation of the CNNs is demonstrated by implementing the paper 'Age ang Gender Classification Using Convolutional Neural Networks' by Hassner (2015).

Perceptron (neural network)

Perceptron (neural network)EdutechLearners i. Perceptron

Representation & Issues

Classification

learning

ii. linear Separability

What Is Deep Learning? | Introduction to Deep Learning | Deep Learning Tutori...

What Is Deep Learning? | Introduction to Deep Learning | Deep Learning Tutori...Simplilearn This Deep Learning Presentation will help you in understanding what is Deep learning, why do we need Deep learning, applications of Deep Learning along with a detailed explanation on Neural Networks and how these Neural Networks work. Deep learning is inspired by the integral function of the human brain specific to artificial neural networks. These networks, which represent the decision-making process of the brain, use complex algorithms that process data in a non-linear way, learning in an unsupervised manner to make choices based on the input. This Deep Learning tutorial is ideal for professionals with beginners to intermediate levels of experience. Now, let us dive deep into this topic and understand what Deep learning actually is.

Below topics are explained in this Deep Learning Presentation:

1. What is Deep Learning?

2. Why do we need Deep Learning?

3. Applications of Deep Learning

4. What is Neural Network?

5. Activation Functions

6. Working of Neural Network

Cnn

CnnNirthika Rajendran Convolutional neural networks (CNNs) learn multi-level features and perform classification jointly and better than traditional approaches for image classification and segmentation problems. CNNs have four main components: convolution, nonlinearity, pooling, and fully connected layers. Convolution extracts features from the input image using filters. Nonlinearity introduces nonlinearity. Pooling reduces dimensionality while retaining important information. The fully connected layer uses high-level features for classification. CNNs are trained end-to-end using backpropagation to minimize output errors by updating weights.

Machine Learning: Introduction to Neural Networks

Machine Learning: Introduction to Neural NetworksFrancesco Collova' 1. Machine learning involves developing algorithms that can learn from data and improve their performance over time without being explicitly programmed. 2. Neural networks are a type of machine learning algorithm inspired by the human brain that can perform both supervised and unsupervised learning tasks. 3. Supervised learning involves using labeled training data to infer a function that maps inputs to outputs, while unsupervised learning involves discovering hidden patterns in unlabeled data through techniques like clustering.

Generative adversarial networks

Generative adversarial networks남주 김 Generative Adversarial Networks (GANs) are a class of machine learning frameworks where two neural networks contest with each other in a game. A generator network generates new data instances, while a discriminator network evaluates them for authenticity, classifying them as real or generated. This adversarial process allows the generator to improve over time and generate highly realistic samples that can pass for real data. The document provides an overview of GANs and their variants, including DCGAN, InfoGAN, EBGAN, and ACGAN models. It also discusses techniques for training more stable GANs and escaping issues like mode collapse.

Recurrent neural networks rnn

Recurrent neural networks rnnKuppusamy P The document discusses recurrent neural networks (RNNs) and long short-term memory (LSTM) networks. It provides details on the architecture of RNNs including forward and back propagation. LSTMs are described as a type of RNN that can learn long-term dependencies using forget, input and output gates to control the cell state. Examples of applications for RNNs and LSTMs include language modeling, machine translation, speech recognition, and generating image descriptions.

Perceptron

PerceptronNagarajan 1. A perceptron is a basic artificial neural network that can learn linearly separable patterns. It takes weighted inputs, applies an activation function, and outputs a single binary value.

2. Multilayer perceptrons can learn non-linear patterns by using multiple layers of perceptrons with weighted connections between them. They were developed to overcome limitations of single-layer perceptrons.

3. Perceptrons are trained using an error-correction learning rule called the delta rule or the least mean squares algorithm. Weights are adjusted to minimize the error between the actual and target outputs.

Optimization for Deep Learning

Optimization for Deep LearningSebastian Ruder Talk on Optimization for Deep Learning, which gives an overview of gradient descent optimization algorithms and highlights some current research directions.

Hyperparameter Tuning

Hyperparameter TuningJon Lederman The document discusses hyperparameters and hyperparameter tuning in deep learning models. It defines hyperparameters as parameters that govern how the model parameters (weights and biases) are determined during training, in contrast to model parameters which are learned from the training data. Important hyperparameters include the learning rate, number of layers and units, and activation functions. The goal of training is for the model to perform optimally on unseen test data. Model selection, such as through cross-validation, is used to select the optimal hyperparameters. Training, validation, and test sets are also discussed, with the validation set used for model selection and the test set providing an unbiased evaluation of the fully trained model.

Simple Introduction to AutoEncoder

Simple Introduction to AutoEncoderJun Lang The document introduces autoencoders, which are neural networks that compress an input into a lower-dimensional code and then reconstruct the output from that code. It discusses that autoencoders can be trained using an unsupervised pre-training method called restricted Boltzmann machines to minimize the reconstruction error. Autoencoders can be used for dimensionality reduction, document retrieval by compressing documents into codes, and data visualization by compressing high-dimensional data points into 2D for plotting with different categories colored separately.

Feedforward neural network

Feedforward neural networkSopheaktra YONG This slide is prepared for the lectures-in-turn challenge within the study group of social informatics, kyoto university.

Support Vector Machines ( SVM )

Support Vector Machines ( SVM ) Mohammad Junaid Khan Welcome to the Supervised Machine Learning and Data Sciences.

Algorithms for building models. Support Vector Machines.

Classification algorithm explanation and code in Python ( SVM ) .

Introduction to Recurrent Neural Network

Introduction to Recurrent Neural NetworkKnoldus Inc. The document provides an introduction to recurrent neural networks (RNNs). It discusses how RNNs differ from feedforward neural networks in that they have internal memory and can use their output from the previous time step as input. This allows RNNs to process sequential data like time series. The document outlines some common RNN types and explains the vanishing gradient problem that can occur in RNNs due to multiplication of small gradient values over many time steps. It discusses solutions to this problem like LSTMs and techniques like weight initialization and gradient clipping.

Optimization in Deep Learning

Optimization in Deep LearningYan Xu This document summarizes various optimization techniques for deep learning models, including gradient descent, stochastic gradient descent, and variants like momentum, Nesterov's accelerated gradient, AdaGrad, RMSProp, and Adam. It provides an overview of how each technique works and comparisons of their performance on image classification tasks using MNIST and CIFAR-10 datasets. The document concludes by encouraging attendees to try out the different optimization methods in Keras and provides resources for further deep learning topics.

Similar to Autoencoders in Deep Learning (20)

autoencoder-190813144108.pptx

autoencoder-190813144108.pptxkiran814572 - Autoencoders are unsupervised artificial neural networks useful for dimensionality reduction and clustering of unlabeled data. They compress the input into a latent-space representation and then reconstruct the output from this representation.

- Deep autoencoders, also known as stacked autoencoders, can learn hierarchical representations of the data by stacking multiple autoencoders together. The features learned by one autoencoder are used as the input for the next autoencoder in the stack.

- Variational autoencoders use probabilistic encoding and decoding to model the data distribution and can generate new samples from this distribution. They have been used for tasks like image generation, data manipulation, and unsupervised learning.

autoencoder-190813145130.pdf

autoencoder-190813145130.pdfSameer Gulshan This document discusses various types of autoencoders, including stacked autoencoders, denoising autoencoders, sparse autoencoders, and variational autoencoders. Autoencoders are unsupervised neural networks that learn efficient data encodings in order to reconstruct input data. They can be used for tasks like dimensionality reduction, feature learning, and generating new data samples. Variational autoencoders additionally use probabilistic encodings and decoders to learn latent space representations of input data.

Explanation of Autoencoder to Variontal Auto Encoder

Explanation of Autoencoder to Variontal Auto Encoderseshathirid Autoencoder to Variontal Auto Encoder notes

A Comprehensive Overview of Encoder and Decoder Architectures in Deep Learnin...

A Comprehensive Overview of Encoder and Decoder Architectures in Deep Learnin...ShubhamMittal569818 The encoder-decoder architecture is a fundamental framework in deep learning, commonly used in tasks such as sequence-to-sequence modeling, machine translation, and image generation. The encoder processes the input data into a compact representation, capturing essential features, while the decoder reconstructs the output from this encoded representation. This structure enables efficient learning of complex transformations and is widely applied in natural language processing (NLP), computer vision, and generative models.

Autoencoders in Computer Vision: A Deep Learning Approach for Image Denoising...

Autoencoders in Computer Vision: A Deep Learning Approach for Image Denoising...ShubhamMittal569818 Autoencoders are neural networks used for unsupervised learning, designed to encode input data into a lower-dimensional latent representation and then reconstruct it back with minimal loss. They consist of an encoder that compresses the input and a decoder that reconstructs it. In computer vision, autoencoders are widely used for image denoising, anomaly detection, dimensionality reduction, and feature extraction. Variants like denoising autoencoders (DAEs), variational autoencoders (VAEs), and convolutional autoencoders (CAEs) enhance their capabilities for different tasks.

Introduction to Autoencoders: Types and Applications

Introduction to Autoencoders: Types and ApplicationsAmr Rashed Introduction to Autoencoders: Types and Applications

Lec16 - Autoencoders.pptx

Lec16 - Autoencoders.pptxSameer Gulshan Autoencoders are unsupervised neural networks that are trained to reconstruct their input. They compress the input into a latent space encoding and then decode the encoding to reconstruct the original input. Variations include denoising autoencoders, which are trained to reconstruct clean inputs from corrupted versions, and sparse autoencoders, which add regularization to activations to learn a sparse code. Contractive autoencoders add a penalty to make the hidden units invariant to small changes in input.

UNIT-4.pdf

UNIT-4.pdfNiharikaThakur32 - Autoencoders are unsupervised neural networks that compress input data into a latent space representation and then reconstruct the output from this representation. They aim to copy their input to their output with minimal loss of information.

- Autoencoders consist of an encoder that compresses the input into a latent space and a decoder that decompresses this latent space back into the original input space. The network is trained to minimize the reconstruction loss between the input and output.

- Autoencoders are commonly used for dimensionality reduction, feature extraction, denoising images, and generating new data similar to the training data distribution.

Foundations: Artificial Neural Networks

Foundations: Artificial Neural Networksananth Artificial Neural Networks have been very successfully used in several machine learning applications. They are often the building blocks when building deep learning systems. We discuss the hypothesis, training with backpropagation, update methods, regularization techniques.

Seq2Seq (encoder decoder) model

Seq2Seq (encoder decoder) model佳蓉 倪 The document describes the sequence-to-sequence (seq2seq) model with an encoder-decoder architecture. It explains that the seq2seq model uses two recurrent neural networks - an encoder RNN that processes the input sequence into a fixed-length context vector, and a decoder RNN that generates the output sequence from the context vector. It provides details on how the encoder, decoder, and training process work in the seq2seq model.

Autoencoder

AutoencoderWataru Hirota This document discusses autoencoders, which are unsupervised neural networks that learn efficient data encodings. It describes typical autoencoder architectures, including stacked autoencoders, and different types such as denoising autoencoders, sparse autoencoders, and variational autoencoders. It also covers visualizing learned features, unsupervised pretraining, and implementations with TensorFlow.

AUTO ENCODERS (Deep Learning fundamentals)

AUTO ENCODERS (Deep Learning fundamentals)aayanshsingh0401 Deep Learning fundamental. Helps in understanding Auto Encoders

Autoencoder Forest for Anomaly Detection from IoT Time Series

Autoencoder Forest for Anomaly Detection from IoT Time SeriesYiqun Hu My talk for Datacouncil.ai Singapore 2019 https://www.datacouncil.ai/talks/time-based-autoencoder-ensemble-for-anomaly-detection-from-iot-time-series

zkStudyClub: CirC and Compiling Programs to Circuits

zkStudyClub: CirC and Compiling Programs to CircuitsAlex Pruden The programming languages community, the cryptography community, and others rely on translating programs in high-level source languages (e.g., C) to logical constraint representations. Unfortunately, building compilers for this task is difficult and time consuming. In this work, Alex Ozdemir et al present CirC, an infrastructure for building compilers for SNARKs that build upon a common abstraction: stateless, non-deterministic computations called existentially quantified circuits, or EQCs.

201907 AutoML and Neural Architecture Search

201907 AutoML and Neural Architecture SearchDaeJin Kim Brief introduction of NAS

Review of EfficientNet (Google Brain), RandWire (FAIR) papers

NAS flow slide from KihoSuh's slideshare (https://www.slideshare.net/KihoSuh/neural-architecture-search-with-reinforcement-learning-76883153)

[References]

[1] EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks (https://arxiv.org/abs/1905.11946)

[2] Exploring Randomly Wired Neural Networks for Image Recognition (https://arxiv.org/abs/1904.01569)

Anomaly Detection by ADGM / LVAE

Anomaly Detection by ADGM / LVAEPreferred Networks This document discusses anomaly detection using Auxiliary VAE (ADGM) and Ladder VAE (LVAE) models. It summarizes the VAE, ADGM, and LVAE models and how they are used for anomaly detection. It then evaluates the models on the NAB dataset, finding that while the models can detect anomalies based on reconstruction error on MNIST with noise, they have difficulty detecting anomalies in the NAB time-series data.

Autoencoders in Deep Learning

- 1. Auto Encoders • In the name of God • Mehrnaz Faraz, Faculty of Electrical Engineering, K. N. Toosi University of Technology • Milad Abbasi, Faculty of Electrical Engineering, Sharif University of Technology

- 2. Auto Encoders • An unsupervised deep learning algorithm • Are artificial neural networks • Useful for dimensionality reduction and clustering of unlabeled data • Encoder: $z = s(wx + b)$ • Decoder: $\hat{x} = s(w'z + b')$ • $\hat{x}$ is $x$'s reconstruction; $z$ is some latent representation or code, and $s$ is a non-linearity such as the sigmoid [Diagram: $x$ → Encoder → $z$ → Decoder → $\hat{x}$]

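- 3. Auto Encoders • Simple structure: [Diagram: input layer ($x_1, x_2, x_3$) → hidden layer → reconstructed output ($\hat{x}_1, \hat{x}_2, \hat{x}_3$); the encoder maps the input to the hidden layer and the decoder maps the hidden layer to the output]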
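The encoder and decoder maps from slide 2, $z = s(wx + b)$ and $\hat{x} = s(w'z + b')$, can be sketched in plain Python for the 3-input structure of slide 3. This is only an illustrative sketch: the function names, the 2-unit hidden layer, the random untrained weights, and the squared-error measure are assumptions, not taken from the slides.

```python
import math
import random

def sigmoid(v):
    # The non-linearity s; the slides name the sigmoid as one option.
    return 1.0 / (1.0 + math.exp(-v))

def affine(weights, bias, vec):
    # Computes weights @ vec + bias, with weights given as a list of rows.
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, vec)) + b_i
            for row, b_i in zip(weights, bias)]

def encode(x, w, b):
    # z = s(w x + b)
    return [sigmoid(a) for a in affine(w, b, x)]

def decode(z, w_prime, b_prime):
    # x_hat = s(w' z + b')
    return [sigmoid(a) for a in affine(w_prime, b_prime, z)]

# Hypothetical sizes: 3 inputs, 2 hidden units (an undercomplete code).
rng = random.Random(0)
n_in, n_hidden = 3, 2
w = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
b = [0.0] * n_hidden
w_prime = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_in)]
b_prime = [0.0] * n_in

x = [0.2, 0.9, 0.5]
z = encode(x, w, b)                  # latent code
x_hat = decode(z, w_prime, b_prime)  # reconstruction of x
# Squared reconstruction error (an assumed choice of loss).
reconstruction_error = sum((xi - xh) ** 2 for xi, xh in zip(x, x_hat))
```

With untrained weights the reconstruction is poor; training would adjust `w`, `b`, `w_prime`, and `b_prime` to reduce `reconstruction_error` over the data set.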

- 4. Undercomplete AE • Hidden layer is undercomplete if it is smaller than the input layer – Compresses the input – Hidden nodes will be good features for the training [Diagram: $x$ → ($w$) → $z$ → ($w'$) → $\hat{x}$]

- 5. Overcomplete AE • Hidden layer is overcomplete if it is greater than the input layer – No compression in hidden layer. – Each hidden unit could copy a different input component. [Diagram: $x$ → ($w$) → $z$ → ($w'$) → $\hat{x}$]

- 6. Deep Auto Encoders • Deep Auto Encoders (DAE) • Stacked Auto Encoders (SAE)

- 7. Training Deep Auto Encoder • First layer: [Diagram: inputs $x_1 \dots x_4$ → encoder → hidden units $a_1, a_2, a_3$ → decoder → reconstructions $\hat{x}_1 \dots \hat{x}_4$]

- 8. Training Deep Auto Encoder • Features of first layer: [Diagram: inputs $x_1 \dots x_4$ → hidden units $a_1, a_2, a_3$, which are kept as features]

- 9. Training Deep Auto Encoder • Second layer: [Diagram: features $a_1, a_2, a_3$ → hidden units $b_1, b_2$ → reconstructions $\hat{a}_1, \hat{a}_2, \hat{a}_3$]

- 10. Training Deep Auto Encoder • Features of second layer: [Diagram: inputs $x_1 \dots x_4$ → $a_1, a_2, a_3$ → $b_1, b_2$, which are kept as features]

- 11. Using Deep Auto Encoder • Feature extraction • Dimensionality reduction • Classification [Diagram: inputs $x_1 \dots x_4$ → encoder ($a_1, a_2, a_3$ → $b_1, b_2$) → features]

- 12. Using Deep Auto Encoder • Reconstruction [Diagram: encoder $x_1 \dots x_4$ → $a_1, a_2, a_3$ → $b_1, b_2$; decoder $b_1, b_2$ → $\hat{a}_1, \hat{a}_2, \hat{a}_3$ → $\hat{x}_1 \dots \hat{x}_4$]
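The layer-by-layer procedure of slides 7 to 10 (train a first autoencoder on the inputs, keep its hidden features $a$, then train a second autoencoder on $a$ to obtain $b$) can be sketched as below. This is a toy sketch under stated assumptions: the slides do not specify the optimizer, loss, or data, so plain stochastic gradient descent on squared error, the one-hot toy data, and all function and parameter names are illustrative choices.

```python
import math
import random

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def layer(weights, bias, vec):
    # One sigmoid layer: s(weights @ vec + bias).
    return [sigmoid(sum(w * v for w, v in zip(row, vec)) + b)
            for row, b in zip(weights, bias)]

def train_autoencoder(data, n_hidden, epochs=200, lr=0.5, seed=0):
    """Train one sigmoid autoencoder with SGD on squared error (assumed setup).
    Returns the encoder weights, encoder bias, and the mean loss per epoch."""
    rng = random.Random(seed)
    n_in = len(data[0])
    w = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    b = [0.0] * n_hidden
    w_dec = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_in)]
    b_dec = [0.0] * n_in
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x in data:
            z = layer(w, b, x)          # encode
            y = layer(w_dec, b_dec, z)  # decode (reconstruction)
            total += 0.5 * sum((yi - xi) ** 2 for yi, xi in zip(y, x))
            # Backpropagate the squared error through both sigmoid layers.
            d_out = [(yi - xi) * yi * (1 - yi) for yi, xi in zip(y, x)]
            d_hid = [sum(w_dec[i][j] * d_out[i] for i in range(n_in)) * z[j] * (1 - z[j])
                     for j in range(n_hidden)]
            for i in range(n_in):
                for j in range(n_hidden):
                    w_dec[i][j] -= lr * d_out[i] * z[j]
                b_dec[i] -= lr * d_out[i]
            for j in range(n_hidden):
                for k in range(n_in):
                    w[j][k] -= lr * d_hid[j] * x[k]
                b[j] -= lr * d_hid[j]
        losses.append(total / len(data))
    return w, b, losses

# Toy data with 4 inputs, matching x1..x4 on the slides (values are made up).
data = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]

# First layer: 4 inputs -> 3 features (a1..a3).
w1, b1, losses1 = train_autoencoder(data, n_hidden=3)
a_feats = [layer(w1, b1, x) for x in data]

# Second layer: trained on the first layer's features, 3 -> 2 (b1, b2).
w2, b2, losses2 = train_autoencoder(a_feats, n_hidden=2, seed=1)
b_feats = [layer(w2, b2, a) for a in a_feats]
```

Each stage only ever sees the output of the previous encoder, which is what makes the training sequential; the decoders learned along the way are discarded here because only the stacked encoder is needed for feature extraction.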

- 13. Using AE • Denoising • Data compression • Unsupervised learning • Manifold learning • Generative model

- 14. Types of Auto Encoder • Stacked auto encoder (SAE) • Denoising auto encoder (DAE) • Sparse Auto Encoder (SAE) • Contractive Auto Encoder (CAE) • Convolutional Auto Encoder (CAE) • Variational Auto Encoder (VAE)

- 15. Generative Models • Given training data, generate new samples from same distribution – Variational Auto Encoder (VAE) – Generative Adversarial Network (GAN)

- 16. Variational Auto Encoder [Diagram: input $x$ ($x_1 \dots x_4$) → encoder $q_\phi(z|x)$ → latent $z_1, z_2$ → decoder $p_\theta(x|z)$ → output $\hat{x}$ ($\hat{x}_1 \dots \hat{x}_4$)]

- 17. Variational Auto Encoder • Use probabilistic encoding and decoding – Encoder: $q_\phi(z|x)$ – Decoder: $p_\theta(x|z)$ • x: Unknown probability distribution • z: Gaussian probability distribution

- 18. Training Variational Auto Encoder • Latent space: [Diagram: inputs $x_1 \dots x_4$ → hidden units $h_1, h_2, h_3$ → $\mu$ (mean) and $\sigma$ (variance) → $z$; together these form the encoder $q_\phi(z|x)$] • One $\mu$ and one $\sigma$ give a 1-dimensional Gaussian probability distribution • If we have n neurons for $\sigma$ and $\mu$, then we have an n-dimensional distribution

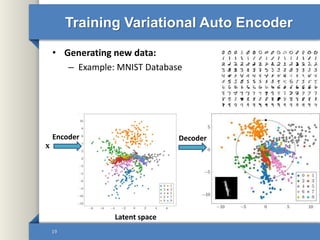

- 19. Training Variational Auto Encoder • Generating new data: – Example: MNIST Database [Diagram: encoder, latent space, decoder → $\hat{x}$]
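Drawing a latent vector from the n-dimensional Gaussian described on slide 18, and drawing one for generation as on slide 19, might look like the sketch below. Two assumptions are worth flagging: `sigma` is treated as a per-dimension standard deviation (the slide labels it "Variance"), and generation samples from a standard normal prior, which is the usual VAE convention but is not stated on the slides. The function names are hypothetical.

```python
import random

def sample_latent(mu, sigma, rng):
    # z_i = mu_i + sigma_i * eps_i with eps_i ~ N(0, 1): one draw from the
    # diagonal Gaussian the encoder describes for a given input x.
    # Assumption: sigma holds standard deviations, not variances.
    return [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mu, sigma)]

def sample_prior(n_latent, rng):
    # For generating new data, z is drawn without any input x.
    # Assumption: the prior is a standard normal N(0, I).
    return [rng.gauss(0.0, 1.0) for _ in range(n_latent)]

rng = random.Random(0)

# Hypothetical encoder outputs for one input, with n = 2 latent neurons.
mu = [0.5, -1.0]
sigma = [1.0, 0.3]
z = sample_latent(mu, sigma, rng)   # would be passed to the decoder in training

# At generation time the encoder is not used at all.
z_new = sample_prior(2, rng)        # would be passed to the decoder to get a new sample

# Averaging many draws recovers the mean the encoder predicted.
draws = [sample_latent(mu, sigma, rng) for _ in range(10000)]
mean_first_dim = sum(d[0] for d in draws) / len(draws)
```

Writing the draw as `mu + sigma * eps` keeps the randomness in `eps`, so `mu` and `sigma` remain ordinary deterministic outputs of the encoder network.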

- 20. Generative Adversarial Network • VAE: $x$ → Encoder → $z$ → Decoder → $\hat{x}$ • GAN: $z$ → Generator → $\hat{x}$; $\hat{x}$ and real $x$ → Discriminator → fake or real? → loss – Can generate samples – Generator and discriminator are trained by competing against each other – Uses neural networks – $z$ is some random noise (Gaussian/Uniform). – $z$ can be thought of as the latent representation of the image.

- 21. GAN’s Architecture • Overview: [Diagram: noise from the latent space → Generator → generated fake samples; real samples and generated fake samples → Discriminator → "Is D correct?" → fine-tune training]
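The "Fake or real? → Loss" step in the GAN diagrams can be made concrete with a small sketch. The slides do not say which loss is used, so the binary cross-entropy form below (with the common non-saturating generator loss) is an assumption, as are the function names and the example probabilities.

```python
import math

def discriminator_loss(d_real, d_fake):
    # d_real = D(x): probability the discriminator assigns "real" to a real sample.
    # d_fake = D(G(z)): probability it assigns "real" to a generated sample.
    # The discriminator wants d_real near 1 and d_fake near 0.
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    # Non-saturating generator loss (assumed variant): the generator wants
    # the discriminator to call its samples real, i.e. d_fake near 1.
    return -math.log(d_fake)

# A discriminator that separates real from fake well has a low loss ...
confident_d = discriminator_loss(d_real=0.9, d_fake=0.1)
# ... while one that cannot tell them apart outputs 0.5 for everything.
fooled_d = discriminator_loss(d_real=0.5, d_fake=0.5)

# The generator's loss falls as its samples fool the discriminator more often.
weak_g = generator_loss(d_fake=0.1)
strong_g = generator_loss(d_fake=0.9)
```

The two losses pull `d_fake` in opposite directions, which is the competition the slides refer to.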

- 22. Using GAN • Image generation:

- 23. Using GAN • Data manipulation:

- 24. Denoising Auto Encoder • Add noise to the input, and train the network to recover the original, noise-free input.

- 25. Denoising Auto Encoder [Diagram, two variants: (1) Input + Gaussian noise → Hidden 1 → Hidden 2 → Hidden 3 → Output; (2) Input → Dropout (randomly switched-off inputs) → Hidden 1 → Hidden 2 → Hidden 3 → Output]
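The two corruption schemes shown for the denoising autoencoder, additive Gaussian noise and dropout-style switching off of inputs, can be sketched as follows. The function names, noise level, and drop probability are illustrative assumptions; the slides give no specific values.

```python
import random

def add_gaussian_noise(x, std, rng):
    # Corrupt every input component with zero-mean Gaussian noise.
    return [xi + rng.gauss(0.0, std) for xi in x]

def dropout_inputs(x, drop_prob, rng):
    # Switch each input component off (set it to 0) with probability drop_prob.
    return [0.0 if rng.random() < drop_prob else xi for xi in x]

rng = random.Random(0)
clean = [0.1, 0.8, 0.4, 0.9]

noisy_gaussian = add_gaussian_noise(clean, std=0.1, rng=rng)
noisy_dropout = dropout_inputs(clean, drop_prob=0.5, rng=rng)

# A denoising autoencoder is fed the corrupted vector but is scored against
# the clean one, so each training pair is (corrupted input, clean target).
training_pairs = [(noisy_gaussian, clean), (noisy_dropout, clean)]
```

Because the target is always the clean vector, the network cannot succeed by copying its input and has to learn structure that survives the corruption.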

- 26. Sparse Auto Encoder • Reduce the number of active neurons in the coding layer. – Add sparsity loss into the cost function. • Sparsity loss: – Kullback-Leibler (KL) divergence is commonly used.

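- 27. Sparse Auto Encoder • $\mathrm{KL}(\rho \,\|\, \hat{\rho}_j) = \rho \log\frac{\rho}{\hat{\rho}_j} + (1-\rho)\log\frac{1-\rho}{1-\hat{\rho}_j}$ • $J_{\text{sparse}}(w, b) = J(w, b) + \beta \sum_j \mathrm{KL}(\rho \,\|\, \hat{\rho}_j)$, where $\rho$ is the target sparsity, $\hat{\rho}_j$ is the mean activation of hidden unit $j$, and $\beta$ weights the sparsity penalty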
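The KL-divergence sparsity loss from the last two slides can be sketched in a few lines. Here `rho` is the target average activation, `rho_hat` is the measured mean activation of a hidden unit over a batch, and `beta` weights the penalty against the reconstruction cost; the symbol names follow the standard formulation, and the default values `rho=0.05` and `beta=3.0` are assumptions chosen only for illustration.

```python
import math

def kl_bernoulli(rho, rho_hat):
    # KL divergence between Bernoulli(rho) and Bernoulli(rho_hat):
    # rho*log(rho/rho_hat) + (1-rho)*log((1-rho)/(1-rho_hat)).
    return (rho * math.log(rho / rho_hat)
            + (1 - rho) * math.log((1 - rho) / (1 - rho_hat)))

def sparsity_penalty(activations, rho):
    # activations: one list of hidden-unit activations per sample, each in (0, 1).
    n_samples = len(activations)
    n_hidden = len(activations[0])
    # Mean activation of each hidden unit j over the batch.
    rho_hats = [sum(a[j] for a in activations) / n_samples for j in range(n_hidden)]
    return sum(kl_bernoulli(rho, rh) for rh in rho_hats)

def sparse_cost(reconstruction_cost, activations, rho=0.05, beta=3.0):
    # J_sparse = J + beta * sum_j KL(rho || rho_hat_j)
    return reconstruction_cost + beta * sparsity_penalty(activations, rho)

# Hidden units that are active about half the time are penalised heavily ...
dense = [[0.5, 0.6], [0.4, 0.5]]
# ... while units whose mean activation matches rho add no penalty.
sparse = [[0.05, 0.05], [0.05, 0.05]]

dense_cost = sparse_cost(1.0, dense)
sparse_only_cost = sparse_cost(1.0, sparse)
```

The penalty is zero only when every unit's mean activation equals `rho`, so minimising the combined cost pushes most coding-layer neurons toward being inactive on most inputs.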