Automation testing basics and tools presentation

- 1. 272: Software Engineering Fall 2008 Instructor: Tevfik Bultan Lecture 10: Testing, Automated Testing

- 2. Finding Errors in Software • We discussed various approaches to finding errors in programs – Static analysis techniques and tools such as • automated theorem proving, ESC/Java • model checking, Java pathfinder – Dynamic monitoring of assertions and contracts • JContractor • JML runtime assertion checker • Although these are interesting and promising research areas the most common way of looking for software errors in industry is testing – Testing: Checking correctness of software by executing the software on some inputs (test cases)

- 3. Software Testing • Goal of testing – finding faults in the software – demonstrating that there are no faults in the software (for the test cases that has been used during testing) • It is not possible to prove that there are no faults in the software using testing • Testing should help locate errors, not just detect their presence – a “yes/no” answer to the question “is the program correct?” is not very helpful • Testing should be repeatable – could be difficult for distributed or concurrent software – effect of the environment, uninitialized variables

- 4. Testing Software is Hard • If you are testing a bridge’s ability to sustain weight, and you test it with 1000 tons you can infer that it will sustain weight 1000 tons • This kind of reasoning does not work for software systems – software systems are not linear nor continuous • Exhaustively testing all possible input/output combinations is too expensive – the number of test cases increase exponentially with the number of input/output variables

- 5. Some Definitions • Let P be a program and let D denote its input domain • A test case d is an element of input domain d D – a test case gives a valuation for all the input variables of the program • A test set T is a finite set of test cases, i.e., a subset of D, T D • The basic difficulty in testing is finding a test set that will uncover the faults in the program • Exhaustive testing corresponds to setting T = D

- 6. Exhaustive Testing is Hard • Number of possible test cases (assuming 32 bit integers) – 232 232 = 264 • Do bigger test sets help? – Test set {(x=3,y=2), (x=2,y=3)} will detect the error – Test set {(x=3,y=2),(x=4,y=3),(x=5,y=1)} will not detect the error although it has more test cases • It is not the number of test cases • But, if T1 T2, then T1 will detect every fault detected by T2 int max(int x, int y) { if (x > y) return x; else return x; }

- 7. Exhaustive Testing • Assume that the input for the max procedure was an integer array of size n – Number of test cases: 232 n • Assume that the size of the input array is not bounded – Number of test cases: • The point is, naive exhaustive testing is pretty hopeless

- 8. Random Testing • Use a random number generator to generate test cases • Derive estimates for the reliability of the software using some probabilistic analysis • Coverage is a problem

- 9. Generating Test Cases Randomly • If we pick test cases randomly it is unlikely that we will pick a case where x and y have the same value • If x and y can take 232 different values, there are 264 possible test cases. In 232 of them x and y are equal – probability of picking a case where x is equal to y is 2-32 • It is not a good idea to pick the test cases randomly (with uniform distribution) in this case • So, naive random testing is pretty hopeless too bool isEqual(int x, int y) { if (x = y) z := false; else z := false; return z; }

- 10. Types of Testing • Functional (Black box) vs. Structural (White box) testing – Functional testing: Generating test cases based on the functionality of the software – Structural testing: Generating test cases based on the structure of the program – Black box testing and white box testing are synonyms for functional and structural testing, respectively. • In black box testing the internal structure of the program is hidden from the testing process • In white box testing internal structure of the program is taken into account • Module vs. Integration testing – Module testing: Testing the modules of a program in isolation – Integration testing: Testing an integrated set of modules

- 11. Functional Testing, Black-Box Testing • Functional testing: – identify the the functions which software is expected to perform – create test data which will check whether these functions are performed by the software – no consideration is given how the program performs these functions, program is treated as a black-box: black-box testing – need an oracle: oracle states precisely what the outcome of a program execution will be for a particular test case. This may not always be possible, oracle may give a range of plausible values • A systematic approach to functional testing: requirements based testing – driving test cases automatically from a formal specification of the functional requirements

- 12. Domain Testing • Partition the input domain to equivalence classes • For some requirements specifications it is possible to define equivalence classes in the input domain • Here is an example: A factorial function specification: – If the input value n is less than 0 then an appropriate error message must be printed. If 0 n < 20, then the exact value n! must be printed. If 20 n 200, then an approximate value of n! must be printed in floating point format using some approximate numerical method. The admissible error is 0.1% of the exact value. Finally, if n > 200, the input can be rejected by printing an appropriate error message. • Possible equivalence classes: D1 = {n<0}, D2 = {0 n < 20}, D3 = {20 n 200}, D4 = {n > 200} • Choose one test case per equivalence class to test

- 13. Equivalence Classes • If the equivalence classes are disjoint, then they define a partition of the input domain • If the equivalence classes are not disjoint, then we can try to minimize the number of test cases while choosing representatives from different equivalence classes • Example: D1 = {x is even}, D2 = {x is odd}, D3 = {x 0}, D4={x > 0} – Test set {x=48, x= –23} covers all the equivalence classes • On one extreme we can make each equivalence class have only one element which turns into exhaustive testing • The other extreme is choosing the whole input domain D as an equivalence class which would mean that we will use only one test case

- 14. Testing Boundary Conditions • For each range [R1, R2] listed in either the input or output specifications, choose five cases: – Values less than R1 – Values equal to R1 – Values greater than R1 but less than R2 – Values equal to R2 – Values greater than R2 • For unordered sets select two values – 1) in, 2) not in • For equality select 2 values – 1) equal, 2) not equal • For sets, lists select two cases – 1) empty, 2) not empty R1 R2

- 15. Testing Boundary Conditions • For the factorial example, ranges for variable n are: – [, 0], [0,20], [20,200], [200, ] – A possible test set: • {n = -5, n=0, n=11, n=20, n= 25, n=200, n= 3000} – If we know the maximum and minimum values that n can take we can also add those n=MIN, n=MAX to the test set.

- 16. Structural Testing, White-Box Testing • Structural Testing – the test data is derived from the structure of the software – white-box testing: the internal structure of the software is taken into account to derive the test cases • One of the basic questions in testing: – when should we stop adding new test cases to our test set? – Coverage metrics are used to address this question

- 17. Coverage Metrics • Coverage metrics – Statement coverage: all statements in the programs should be executed at least once – Branch coverage: all branches in the program should be executed at least once – Path coverage: all execution paths in the program should be executed at lest once • The best case would be to execute all paths through the code, but there are some problems with this: – the number of paths increases fast with the number of branches in the program – the number of executions of a loop may depend on the input variables and hence may not be possible to determine – most of the paths can be infeasible

- 18. Statement Coverage • Choose a test set T such that by executing program P for each test case in T, each basic statement of P is executed at least once • Executing a statement once and observing that it behaves correctly is not a guarantee for correctness, but it is an heuristic – this goes for all testing efforts since in general checking correctness is undecidable bool isEqual(int x, int y) { if (x = y) z := false; else z := false; return z; } int max(int x, int y) { if (x > y) return x; else return x; }

- 19. Statement Coverage areTheyPositive(int x, int y) { if (x >= 0) print(“x is positive”); else print(“x is negative”); if (y >= 0) print(“y is positive”); else print(“y is negative”); } Following test set will give us statement coverage: T1 = {(x=12,y=5), (x= 1,y=35), (x=115,y=13),(x=91,y= 2)} There are smaller test cases which will give us statement coverage too: T2 = {(x=12,y= 5), (x= 1,y=35)} There is a difference between these two test sets though

- 20. Statement vs. Branch Coverage assignAbsolute(int x) { if (x < 0) x := -x; z := x; } Consider this program segment, the test set T = {x=1} will give statement coverage, however not branch coverage (x < 0) x := -x z := x B0 B1 B2 Test set {x=1} does not execute this edge, hence, it does not give branch coverage true false Control Flow Graph:

- 21. Control Flow Graphs (CFGs) • Nodes in the control flow graph are basic blocks – A basic block is a sequence of statements always entered at the beginning of the block and exited at the end • Edges in the control flow graph represent the control flow if (x < y) { x = 5 * y; x = x + 3; } else y = 5; x = x+y; (x < y) x = 5 * y x = x + 3 y = 5 x = x+y B1 B2 B0 B3 • Each block has a sequence of statements • No jump from or to the middle of the block • Once a block starts executing, it will execute till the end Y N

- 22. Branch Coverage • Construct the control flow graph • Select a test set T such that by executing program P for each test case d in T, each edge of P’s control flow graph is traversed at least once (x < 0) x := -x z := x B0 B1 B2 Test set {x=1} does not execute this edge, hence, it does not give branch coverage Test set {x= 1, x=2}gives both statement and branch coverage true false

- 23. Path Coverage • Select a test set T such that by executing program P for each test case d in T, all paths leading from the initial to the final node of P’s control flow graph are traversed

- 24. Path Coverage areTheyPositive(int x, int y) { if (x >= 0) print(“x is positive”); else print(“x is negative”); if (y >= 0) print(“y is positive”); else print(“y is negative”); } (x >= 0) B0 B1 print(“x is p”) B2 print(“x is n”) (y >= 0) B3 B4 print(“y is p”) B5 print(“y is n”) return B6 Test set: T2 = {(x=12,y= 5), (x= 1,y=35)} gives both branch and statement coverage but it does not give path coverage Set of all execution paths: {(B0,B1,B3,B4,B6), (B0,B1,B3,B5,B6), (B0,B2,B3,B4,B6), (B0,B2,B3,B5,B6)} Test set T2 executes only paths: (B0,B1,B3,B5,B6) and (B0,B2,B3,B4,B6) true false true false

- 25. Path Coverage areTheyPositive(int x, int y) { if (x >= 0) print(“x is positive”); else print(“x is negative”); if (y >= 0) print(“y is positive”); else print(“y is negative”); } (x >= 0) B0 B1 print(“x is p”) B2 print(“x is n”) (y >= 0) B3 B4 print(“y is p”) B5 print(“y is n”) return B6 Test set: T1 = {(x=12,y=5), (x= 1,y=35), (x=115,y=13),(x=91,y= 2)} gives both branch, statement and path coverage true false true false

- 26. Path Coverage • Number of paths is exponential in the number of conditional branches – testing cost may be expensive • Note that every path in the control flow graphs may not be executable – It is possible that there are paths which will never be executed due to dependencies between branch conditions • In the presence of cycles in the control flow graph (for example loops) we need to clarify what we mean by path coverage – Given a cycle in the control flow graph we can go over the cycle arbitrary number of times, which will create an infinite set of paths – Redefine path coverage as: each cycle must be executed 0, 1, ..., k times where k is a constant (k could be 1 or 2)

- 27. Condition Coverage • In the branch coverage we make sure that we execute every branch at least once – For conditional branches, this means that, we execute the TRUE branch at least once and the FALSE branch at least once • Conditions for conditional branches can be compound boolean expressions – A compound boolean expression consists of a combination of boolean terms combined with logical connectives AND, OR, and NOT • Condition coverage: – Select a test set T such that by executing program P for each test case d in T, (1) each edge of P’s control flow graph is traversed at least once and (2) each boolean term that appears in a branch condition takes the value TRUE at least once and the value FALSE at least once • Condition coverage is a refinement of branch coverage (part (1) is same as the branch coverage)

- 28. Condition Coverage something(int x) { if (x < 0 || y < x) { y := -y; x := -x; } z := x; } T = {(x=1, y=1), (x=1, y=1)} will achieve statement, branch and path coverage, however T will not achieve condition coverage because the boolean term (y < x) never evaluates to true. This test set satisfies part (1) but does not satisfy part (2). (x < 0 || y < x) y := -y; x := -x; z := x B0 B1 B2 true false Control Flow Graph T = {(x=1, y=1), (x=1, y=0)} will not achieve condition coverage either. This test set satisfies part (2) but does not satisfy part (1). It does not achieve branch coverage since both test cases take the true branch, and, hence, it does not achieve condition coverage by definition. T = {(x=1, y=2), {(x=1, y=1)} achieves condition coverage.

- 29. Multiple Condition Coverage • Multiple Condition Coverage requires that all possible combination of truth assignments for the boolean terms in each branch condition should happen at least once • For example for the previous example we had: x < 0 && y < x • Test set {(x=1, y=2), (x=1, y=1)}, achieves condition coverage: – test case (x=1, y=2) makes term1=true, term2=true, and the whole expression evaluates to true (i.e., we take the true branch) – test case (x=1, y=1) makes term1=false, term2=false, and the whole expression evaluates to false (i.e., we take the false branch) • However, test set {(x=1, y= 2), (x=1, y=1)} does not achieve multiple condition coverage since we did not observe the following truth assignments – term1=true, term2=false – term1=false, term2=true term1 term2

- 30. Types of Testing • Unit (Module) testing – testing of a single module in an isolated environment • Integration testing – testing parts of the system by combining the modules • System testing – testing of the system as a whole after the integration phase • Acceptance testing – testing the system as a whole to find out if it satisfies the requirements specifications

- 31. Types of Testing • Unit (Module) testing – testing of a single module in an isolated environment • Integration testing – testing parts of the system by combining the modules • System testing – testing of the system as a whole after the integration phase • Acceptance testing – testing the system as a whole to find out if it satisfies the requirements specifications

- 32. Unit Testing • Involves testing a single isolated module • Note that unit testing allows us to isolate the errors to a single module – we know that if we find an error during unit testing it is in the module we are testing • Modules in a program are not isolated, they interact with each other. Possible interactions: – calling procedures in other modules – receiving procedure calls from other modules – sharing variables • For unit testing we need to isolate the module we want to test, we do this using two things – drivers and stubs

- 33. Drivers and Stubs • Driver: A program that calls the interface procedures of the module being tested and reports the results – A driver simulates a module that calls the module currently being tested • Stub: A program that has the same interface as a module that is being used by the module being tested, but is simpler. – A stub simulates a module called by the module currently being tested

- 34. Drivers and Stubs Driver Module Under Test Stub procedure call procedure call access to global variables • Driver and Stub should have the same interface as the modules they replace • Driver and Stub should be simpler than the modules they replace

- 35. Integration Testing • Integration testing: Integrated collection of modules tested as a group or partial system • Integration plan specifies the order in which to combine modules into partial systems • Different approaches to integration testing – Bottom-up – Top-down – Big-bang – Sandwich

- 36. Module Structure A C D E F G H • A uses C and D; B uses D; C uses E and F; D uses F, G, H and I; H uses I • Modules A and B are at level 3; Module D is at level 2 Modules C and H are at level 1; Modules E, F, G, I are at level 0 • level 0 components do not use any other components • level i components use at least one component on level i-1 and no component at a level higher than i-1 I B • We assume that the uses hierarchy is a directed acyclic graph. • If there are cycles merge them to a single module level 0 level 1

- 37. Bottom-Up Integration • Only terminal modules (i.e., the modules that do not call other modules) are tested in isolation • Modules at lower levels are tested using the previously tested higher level modules • Non-terminal modules are not tested in isolation • Requires a module driver for each module to feed the test case input to the interface of the module being tested – However, stubs are not needed since we are starting with the terminal modules and use already tested modules when testing modules in the lower levels

- 38. Bottom-up Integration A C D E F G H I B

- 39. Top-down Integration • Only modules tested in isolation are the modules which are at the highest level • After a module is tested, the modules directly called by that module are merged with the already tested module and the combination is tested • Requires stub modules to simulate the functions of the missing modules that may be called – However, drivers are not needed since we are starting with the modules which is not used by any other module and use already tested modules when testing modules in the higher levels

- 40. Top-down Integration A C D E F G H I B

- 41. Other Approaches to Integration • Sandwich Integration – Compromise between bottom-up and top-down testing – Simultaneously begin bottom-up and top-down testing and meet at a predetermined point in the middle • Big Bang Integration – Every module is unit tested in isolation – After all of the modules are tested they are all integrated together at once and tested – No driver or stub is needed – However, in this approach, it may be hard to isolate the bugs!

- 42. System Testing, Acceptance Testing • System and Acceptance testing follows the integration phase – testing the system as a whole • Test cases can be constructed based on the the requirements specifications – main purpose is to assure that the system meets its requirements • Manual testing – Somebody uses the software on a bunch of scenarios and records the results – Use cases and use case scenarios in the requirements specification would be very helpful here – manual testing is sometimes unavoidable: usability testing

- 43. System Testing, Acceptance Testing • Alpha testing is performed within the development organization • Beta testing is performed by a select group of friendly customers • Stress testing – push system to extreme situations and see if it fails – large number of data, high input rate, low input rate, etc.

- 44. Regression testing • You should preserve all the test cases for a program • During the maintenance phase, when a change is made to the program, the test cases that have been saved are used to do regression testing – figuring out if a change made to the program introduced any faults • Regression testing is crucial during maintenance – It is a good idea to automate regression testing so that all test cases are run after each modification to the software • When you find a bug in your program you should write a test case that exhibits the bug – Then using regression testing you can make sure that the old bugs do not reappear

- 45. Test Plan • Testing is a complicated task – it is a good idea to have a test plan • A test plan should specify – Unit tests – Integration plan – System tests – Regression tests

- 46. Mutation Analysis • Mutation analysis is used to figure out the quality of a test set • Mutation analysis creates mutants of a program by making changes to the program (change a condition, change an assignment, etc.) • Each mutant program and the original program are executed using the test set • If a mutant and the original program give different results for a test case then the test set detected that the mutant is different from the original program, hence the mutant is said to be dead • If test set does not detect the difference between the original program and some mutants, these mutants are said to be live • We want the test set to kill as many mutants as possible – Mutant programs can be equivalent to the original program, hence no test set can kill them

- 47. Automated Testing • Automated testing refers to the techniques which generate the test sets automatically • I will talk about two tools on automated testing – TestEra, Korat • TestEra is a specification-based functional (black-box) testing tool – Requires the user to write input/output specifications • Korat is also a kind of functional (black-box) testing tool – Requires the user to write a specification as a method in the class that is being testing • Both tools are used for unit testing – Testing of complex data structures • Both tools automatically generate test cases from specifications – They exhaustively generate all non-isomorphic test cases within a given scope

- 48. TestEra and Korat • The references are: – ``TestEra: Specification-based Testing of Java Programs Using SAT.'' S. Khurshid and D. Marinov. Automated Software Engineering Journal, Volume 11, Number 4. October 2004. – ``Korat: Automated Testing Based on Java Predicates.'' C. Boyapati, S. Khurshid and D. Marinov. ACM/SIGSOFT International Symposium on Software Testing and Analysis (ISSTA 2002), Rome, Italy. Jul 2002.

- 49. TestEra Framework • TestEra is a framework for automated testing of Java programs • TestEra’s main focus is unit testing of complex data structures – Examples: Red-black trees, linked lists etc. • TestEra has also been used in analyzing larger Java programs – Examples: Alloy Analyzer, Intentional Naming System

- 50. TestEra Framework • TestEra automatically generates all non-isomorphic test cases within a given input size – the input size corresponds to the scope in Alloy specifications • TestEra evaluates the correctness criteria for the automatically generated test cases • TestEra uses Alloy and Alloy Analyzer to generate the test cases and to evaluate the correctness criteria • TestEra produces concrete Java inputs as counterexamples to violated correctness criteria

- 51. TestEra Framework • TestEra framework requires the following: – A specification of inputs to a Java program written in Alloy • precondition – A correctness criterion written in Alloy • Class invariant and post-condition – An concretization function • which maps instances of Alloy specifications to concrete Java objects – An abstraction function • which maps the concrete Java objects to instances of Alloy specifications

- 52. TestEra Framework • TestEra generates all the non-isomorphic input instances using Alloy Analyzer – Two instances are isomorphic if there is a one to one mapping between the atoms of the two instances which preserve all the relations • TestEra uses the concretization function to translate the instances of the Alloy specification to Java inputs – These inputs form the test set • TestEra runs the program on the test set • TestEra maps the output produced by the program to Alloy using the abstraction function • Finally, TestEra uses the Alloy Analyzer to check the input and the output against the correctness criteria

- 53. TestEra Framework TestEra spec Alloy input spec Java tester Alloy I/O spec Alloy Analyzer Concretization Run Code Abstraction Alloy instances Alloy input Java input Java output Alloy output counter example Check correctness fail pass

- 54. Steps of the TestEra Framework • Identify a sequence of method calls to analyze • Create an Alloy specification for the inputs of these methods • Create an Alloy specification of the correctness criteria by relating the inputs to the outputs of these methods • Define a concretization translation a2j from an Alloy instance of the input specification to a Java input to these methods • Define an abstraction translation j2a from a Java output to an Alloy instance of the specification of the correctness cretiria that relates inputs to outputs

- 55. TestEra Spec • A TestEra specification is a combination of Alloy and Java code – This specification is split to three files • Alloy input specification • Java code for – translating input instances from Alloy to Java – running the sequence of Java methods to test – translating the Java output back to Alloy • Alloy specification for the correctness criteria which relates the input values to the output values

- 56. TestEra Analysis • TestEra uses Alloy Analyzer to generate all non-isomorphic instances of the Alloy input specification • Each instance is translated to Java input using concretization – this forms the test case for the sequence of Java methods to be tested • The sequence of methods are run on this input • The produced output is translated using abstraction back to Alloy • The input and output instances are checked against the correctness criterion • If the check fails a counter-example is reported – otherwise next Alloy instance is used for testing

- 57. An Example • A recursive method for performing merge sort on acyclic singly linked lists Java: class List { int elem; List next; static List mergeSort(List l) { ... } } Alloy: module list import integer sig List { elem: Integer, next: lone List } Signature declaration introduces the List type with functions: elem: List Integer next: List List next is a partial function which is indicated by the keyword lone

- 58. Input Specification module list import integer sig List { elem: Integer, next: lone List } fun Acyclic(l: List) { all n: l.*next | lone n.~next // at most one parent no l.~next } // head has no parent one sig Input in List {} fact GenerateInputs { Acyclic(Input) } Subsignature Input is a subset of List and it has exactly one atom which is indicated by the keyword one

- 59. Correctness Criteria fun Sorted(l: List) { all n: l.*next | some n.next => n.elem <= n.next.elem } funPerm(l1: List, l2:List) all e: Integer | #(e.~elem & l1.*next) = #(e.~elem & l2.*next) } fun MergeSortOK(i:List, o:List) { Acyclic(o) Sorted(o) Perm(i,o) } one sig Output in List {} fact OutputOK { MergeSortOk(Input, Output) } # denotes cardinality of sets

- 60. Counter-Examples • If an error is inserted in the method for merging where (l1.elem <= l2.elem) is changed to (l1.elem >= l2.elem) • Then TestEra generates a counter example Counterexample found: Input List: 1 -> 1 -> 3 -> 2 Output List: 3 -> 2 -> 1 -> 1

- 61. Abstraction and Concretization Translations • An abstraction function: j2a – translate Java instance to Alloy instance • A concretization function: a2j – translate Alloy instance to Java instance • These functions are written in Java by the user

- 62. Concretization • Concretization is implemented in two stages 1. Create a Java object for each atom in Alloy specification and store this correspondence in a map 2. Establish the relationships among the Java objects created in the first step

- 63. TestEra Case studies • Red-Black trees – Tested the implementation of Red-Black trees in java.util.TreeMap – They introduced some bugs and showed that they can catch them with TestEra framework • Intentional Naming System – A naming architecture for resource discovery and service location in dynamic networks – Found some bugs • Alloy Analyzer – Found some bugs in the Alloy Analyzer using TestEra framework

- 64. Korat • Another automated testing tool – Similar to TestEra but does not require extra Alloy specifications – Application domain is again unit testing of complex data structures • It uses Java predicates to generate the test cases – These are Java methods which return a boolean value – For example pre and post-conditions of methods • Korat generates the test cases from pre and postconditions of methods • There is no need to write an extra specification if the class contract is written as Java predicates (like the JContractor approach)

- 65. Korat • Korat uses the method precondition to automatically generate all nonisomorphic test cases up to a give small size – Given a predicate and a bound on the size of its inputs Korat generates all nonisomorphic inputs for which the predicate returns true • Korat then executes the method on each test case and uses the method postcondition as a test oracle to check the correctness of output – Korat exhaustively explores the bounded input space of the predicate but does so efficiently by monitoring the predicate’s executions and pruning large portions of the search space

- 66. An Example: Binary Tree import java.util.*; class BinaryTree { private Node root; private int size; static class Node { private Node left; private Node right; } public boolean repOk() { // this method checks the class invariant: // checks that empty tree has size zero // checks that the tree has no cycle // checks that the number of nodes in the tree is // equal to its size }

- 67. Finitization • Korat uses a finitization description to specify the finite bounds on the inputs (scope) public static Finitization finBinaryTree(int NUM_node){ Finitization f = new Finitization(BinaryTree.class); ObjSet nodes = f.createObjects(“Node”, NUM_node); nodes.add(null); f.set(“root”, nodes); f.set(“size”, NUM_node); f.set(“Node.left”, nodes); f.set(“Node.right”, nodes); return f; } • Korat automatically generates a finitization skeleton based on the type declarations in the Java code – Developers can restrict or extend this default finitization Creates a set of objects of Type “Node” with NUM_node objects in the set The value of size is set to NUM_node

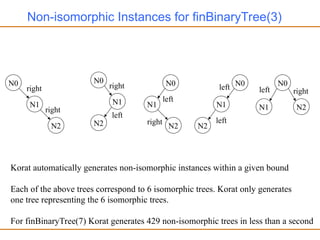

- 68. Non-isomorphic Instances for finBinaryTree(3) N0 N1 N2 N0 N1 N2 N0 N1 N2 N0 N1 N2 N0 N1 N2 right right right left left right left left right left Korat automatically generates non-isomorphic instances within a given bound Each of the above trees correspond to 6 isomorphic trees. Korat only generates one tree representing the 6 isomorphic trees. For finBinaryTree(7) Korat generates 429 non-isomorphic trees in less than a second

- 69. Isomorphic Instances N0 N1 N2 right left N0 N2 N1 right left N1 N2 N0 right left N1 N0 N2 right left N2 N1 N0 right left N2 N0 N1 right left

- 70. Generating test cases • The crucial component of Korat is the test case generation algorithms • Consider the binary tree example with scope 3 – There are three fields: root, left, right – Each of these fields can be assigned a node instance or null – There is one root field and there is one left and one right field for each node instance – Given n node instances, the state space (the set of all possible test cases) for the binary tree example is: (n+1)2n + 1 – Most of these structures are not valid binary trees • They do not satisfy the class invariant – Most of these structures are isomorphic (they are equivalent if we ignore the object identities)

- 71. Generating test cases • There are two techniques Korat uses to generate the test cases efficiently 1. Korat only generates non-isomorphic test cases 2. Korat prunes the state space by eliminating sets of test cases which do not satisfy the class invariant

- 72. Isomorphism • The isomorphism definition used in Korat is the following – O1, O2, ..., On are sets objects from n classes – O = O1 O2 ... On – P: the set of consisting of null and all values of primitive types that the fields of objects in O can contain – r O is a special root object – Given a test case C, OC is the set of objects reachable from r in C • Two test cases C and C’ are isomorphic iff there is a permutation on O, mapping objects from Oi to objects from Oi for all 1 i n, such that o, o’ OC . f fields(o) .p P . o.f == o’ in C iff (o).f == (o’) in C’ and o.f == p in C iff (o).f == p in C’

- 73. Isomorphism • In Korat isomorphism is defined with respect to a root object – for example this • Two test cases are defined to be isomorphic if the parts of their object graphs reachable from the root object are isomorphic • The isomorphism definition partitions the state space (i.e. the input domain) to a set of isomorphism partitions – Korat generates only one test case for each partition class

- 74. Generating Test Cases • Korat only generates the test cases which satisfies the input predicate: class invariant and the precondition • Korat explores the state space efficiently using backtracking – It does not generate all instances one by one and check the input predicate – It prunes its search of the state space based on the evaluation of the input predicate • If the method that checks the input predicate returns false without checking a field then there is no need to generate test cases which assign different values to that field – In order to exploit this, Korat keeps track of the fields that are accessed before the predicate returns false – For this to work well, predicate method should return false as soon as it detects a violation

- 75. Generating Test Cases • Korat orders all the elements in every class domain and every field domain • Each test case is represented as a vector of indices into the corresponding field domains N0 N1 N2 right left size=3 [1,0,2,3,0,0,0,0,] Test Case Corresponding Vector For the Binary Tree example assume that The class domain is ordered as N0 < N1 < N2 The field domains for root, left and right are ordered as null < N0 < N1 < N2 The size domain has one element which is 3 [1,0,2,3,0,0,0,0,] fields of the Binary tree object fields of the Node object N0 fields of the Node object N1

- 76. Generating Test Cases • Search starts with a candidate vector set to all zeros. • For each candidate vectors, Korat sets fields in the objects according to the values in the vector. • Korat then invokes repOk (i.e., class invariant) to check the validity of the current candidate. – During the execution of repOk, Korat monitors the fields that reopOK accesses, it stores the indices of the fields that are accessed by the repOK (field ordering) – For example, for the binary tree example, if the repOK accesses root, N0.left and N0.right, then the field ordering is 0, 2, 3 • Korat generates the next candidate vector by backtracking on the fields accessed by repOk. – First increments the field domain index for the field that is last in the field-ordering – If the field index exceeds the domain size, then Korat resets that index to zero and increments the domain index of the previous field in the field ordering

- 77. Generating Test Cases • Korat achieves non-isomorphic test case generation using the ordering of field domains and the vector representation • While generating the test cases, Korat ensures that the indices of the objects that belong to the same class domain are listed in nondecreasing order in the generated candidate vectors • This means that during backtracking, Korat looks for fields – that precede the field that is accessed last and – that have an object from the same class domain as the field that is accessed last – and makes sure that the object assigned to the field that is accessed last is higher in the ordering then those objects

- 78. Using Contracts in Testing • Korat checks the contracts written in JML on the generated instances //@ public invariant repOk(); //class invariant /*@ requires has(n) // precondition @ ensures !has(n) // postcondition @*/ public void remove(Node n) { ... } • Korat uses JML tool-set to translate JML annotations into runtime Java assertions

- 79. Using Contracts in Testing • Given a finitization, Korat generates all non-isomorphic test cases within the given scope (defined by the finitization) that satisfy the class invariant and the pre-condition • The post-conditions and class invariants provide a test oracle for testing – For each generated test case, execute the method that is being tested and check the class invariant and the post-condition • Korat uses JML tool-set to automatically generate test oracles from method post-conditions written as annotations in JML

- 80. Korat Performance • Checking a BinaryTree implementation with scope 8 takes 1/53 seconds, with scope 11 takes 56.21 seconds, with scope 12 takes 233.59 seconds. • Test case generation with Korat is more efficient than the test case generation with Alloy Analyzer.

![Testing Boundary Conditions

• For each range [R1, R2] listed in either the input or output

specifications, choose five cases:

– Values less than R1

– Values equal to R1

– Values greater than R1 but less than R2

– Values equal to R2

– Values greater than R2

• For unordered sets select two values

– 1) in, 2) not in

• For equality select 2 values

– 1) equal, 2) not equal

• For sets, lists select two cases

– 1) empty, 2) not empty

R1 R2](https://image.slidesharecdn.com/autotest-250404185553-9f8156cf/85/Automation-testing-basics-and-tools-presentation-14-320.jpg)

![Testing Boundary Conditions

• For the factorial example, ranges for variable n are:

– [, 0], [0,20], [20,200], [200, ]

– A possible test set:

• {n = -5, n=0, n=11, n=20, n= 25, n=200, n= 3000}

– If we know the maximum and minimum values that n can take we

can also add those n=MIN, n=MAX to the test set.](https://image.slidesharecdn.com/autotest-250404185553-9f8156cf/85/Automation-testing-basics-and-tools-presentation-15-320.jpg)

![Generating Test Cases

• Korat orders all the elements in every class domain and every field

domain

• Each test case is represented as a vector of indices into the

corresponding field domains

N0

N1 N2

right

left

size=3

[1,0,2,3,0,0,0,0,]

Test Case Corresponding Vector

For the Binary Tree example assume that

The class domain is ordered as N0 < N1 < N2

The field domains for root, left and right are ordered as null < N0 < N1 < N2

The size domain has one element which is 3

[1,0,2,3,0,0,0,0,]

fields of the

Binary tree object

fields of the Node object N0

fields of the

Node object N1](https://image.slidesharecdn.com/autotest-250404185553-9f8156cf/85/Automation-testing-basics-and-tools-presentation-75-320.jpg)