AWR Ambiguity: Performance reasoning when the numbers don't add up


A close look at an AWR report where DB Time is exceeded by the sum of DB CPU and foreground wait time. We recall core Oracle performance principles and instrumentation design on the way to untangling the confusion.
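The anomaly can be stated as simple arithmetic. DB Time is elapsed time spent in foreground database calls, so it should cover all DB CPU and all non-idle foreground wait time. It should also cover any time spent queued for CPU, which neither of those statistics records. The sum of DB CPU and foreground wait time should therefore never exceed DB Time. The sketch below shows this sanity check. It assumes you copy the three totals, in seconds, by hand from the report's Time Model Statistics ("DB time", "DB CPU") and its foreground wait sections. The names `SnapshotTimes`, `unaccounted`, `classify` and the `tolerance` parameter are hypothetical, and so are the sample numbers.

```python
from dataclasses import dataclass


@dataclass
class SnapshotTimes:
    """Seconds accumulated over one AWR snapshot interval.

    Field names are illustrative (hypothetical), not AWR column names.
    """
    db_time: float   # Time Model Statistics: "DB time"
    db_cpu: float    # Time Model Statistics: "DB CPU"
    fg_wait: float   # total non-idle foreground wait time


def unaccounted(t: SnapshotTimes) -> float:
    """DB Time left over after subtracting DB CPU and foreground waits."""
    return t.db_time - (t.db_cpu + t.fg_wait)


def classify(t: SnapshotTimes, tolerance: float = 0.01) -> str:
    """Classify the gap relative to DB Time.

    'consistent'  : gap within +/- tolerance * DB Time
    'unexplained' : DB Time exceeds CPU + waits (often attributed to
                    CPU run-queue time or other uninstrumented time)
    'overcounted' : CPU + waits exceed DB Time, the case this deck examines
    """
    gap = unaccounted(t)
    slack = tolerance * t.db_time
    if gap < -slack:
        return "overcounted"
    if gap > slack:
        return "unexplained"
    return "consistent"


if __name__ == "__main__":
    # Hypothetical numbers, not taken from the report discussed in the deck.
    sample = SnapshotTimes(db_time=1000.0, db_cpu=700.0, fg_wait=450.0)
    print(unaccounted(sample), classify(sample))  # -150.0 overcounted
```

A positive gap has a plausible explanation. A negative gap means two instrumentation sources disagree, and it is the situation the deck sets out to untangle.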


DB Time

• Foreground session time in database calls
• Measured by DB code using clear instrumentation points:
  • [call entry: start timer]
  • [call exit: stop timer]
• System DB Time = SUM(Session DB Time)

We trust DB Time accuracy implicitly.

![DB Time](https://image.slidesharecdn.com/awrambiguityotw15-151027055855-lva1-app6891/85/AWR-Ambiguity-Performance-reasoning-when-the-numbers-don-t-add-up-7-320.jpg)
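The entry/exit timer scheme on this slide can be modeled in a few lines. The sketch below is a toy model only, not Oracle's code. `DbTimeModel`, `db_call`, and the injectable `clock` are hypothetical names. The timer measures elapsed wall-clock time between call entry and call exit, so anything the session does inside the call counts toward its DB Time: CPU, instrumented waits, and time spent queued for CPU. Summing over sessions gives system DB Time.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class DbTimeModel:
    """Toy model of DB Time instrumentation (hypothetical, not Oracle's code).

    Each session accumulates elapsed time between call entry and call exit;
    system DB Time is the sum over all sessions.
    """

    def __init__(self, clock=time.perf_counter):
        self._clock = clock                  # injectable for deterministic tests
        self.session_db_time = defaultdict(float)

    @contextmanager
    def db_call(self, session_id):
        start = self._clock()                # [call entry: start timer]
        try:
            yield
        finally:
            # [call exit: stop timer], recorded even if the call raises
            self.session_db_time[session_id] += self._clock() - start

    def system_db_time(self):
        """System DB Time = SUM(Session DB Time)."""
        return sum(self.session_db_time.values())


if __name__ == "__main__":
    ticks = iter([0.0, 2.0, 5.0, 6.5])       # fake clock readings, in seconds
    model = DbTimeModel(clock=lambda: next(ticks))
    with model.db_call("sid-1"):
        pass                                 # 2.0 s on the fake clock
    with model.db_call("sid-2"):
        pass                                 # 1.5 s on the fake clock
    print(dict(model.session_db_time), model.system_db_time())
```

Placing the stop timer in `finally` gives every exit path a well-defined stop point. That clean bracketing is the property behind the slide's claim that DB Time can be trusted.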


Ad

More Related Content

What's hot (20)

Oracle Database Performance Tuning Advanced Features and Best Practices for DBAs

Oracle Database Performance Tuning Advanced Features and Best Practices for DBAsZohar Elkayam Oracle Week 2017 slides.

Agenda:

Basics: How and What To Tune?

Using the Automatic Workload Repository (AWR)

Using AWR-Based Tools: ASH, ADDM

Real-Time Database Operation Monitoring (12c)

Identifying Problem SQL Statements

Using SQL Performance Analyzer

Tuning Memory (SGA and PGA)

Parallel Execution and Compression

Oracle Database 12c Performance New Features

Same plan different performance

Same plan different performanceMauro Pagano Presentation details a few of the most common reason why the same execution plan could perform differently across two databases

Oracle db performance tuning

Oracle db performance tuningSimon Huang This document discusses Oracle database performance tuning. It covers identifying common Oracle performance issues such as CPU bottlenecks, memory issues, and inefficient SQL statements. It also outlines the Oracle performance tuning method and tools like the Automatic Database Diagnostic Monitor (ADDM) and performance page in Oracle Enterprise Manager. These tools help administrators monitor performance, identify bottlenecks, implement ADDM recommendations, and tune SQL statements reactively when issues arise.

Tanel Poder - Scripts and Tools short

Tanel Poder - Scripts and Tools shortTanel Poder This is a recording of my Advanced Oracle Troubleshooting seminar preparation session - where I showed how I set up my command line environment and some of the main performance scripts I use!

Christo kutrovsky oracle, memory & linux

Christo kutrovsky oracle, memory & linuxKyle Hailey This document provides information about Pythian, a company that provides database management and consulting services. It begins by introducing the presenter, Christo Kutrovsky, and his background. It then provides details about Pythian, including that it was founded in 1997, has over 200 employees, 200 customers worldwide, and 5 offices globally. It notes Pythian's partnerships and awards. The document emphasizes Pythian's expertise in Oracle, SQL Server, and other technologies. It positions Pythian as a recognized leader in database management.

ASH and AWR on DB12c

ASH and AWR on DB12cKellyn Pot'Vin-Gorman This document provides an overview of the Automatic Workload Repository (AWR) and Active Session History (ASH) features in Oracle Database 12c. It discusses how AWR and ASH work, how to access and interpret their reports through the Oracle Enterprise Manager console and command line interface. Specific sections cover parsing AWR reports, querying ASH data directly, and using features like the SQL monitor to diagnose performance issues.

Ash architecture and advanced usage rmoug2014

Ash architecture and advanced usage rmoug2014John Beresniewicz This is the presentation on ASH that I did with Graham Wood at RMOUG 2014 and that represents the final best effort to capture essential and advanced ASH content as started in a presentation Uri Shaft and I gave at a small conference in Denmark sometime in 2012 perhaps. The presentation is also available publicly through the RMOUG website, so I felt at liberty to post it myself here. If it disappears it would likely be because I have been asked to remove it by Oracle.

Analyzing awr report

Analyzing awr reportsatish Gaddipati This document summarizes the main parts of an Oracle AWR report, including the snapshot details, load profile, top timed foreground events, time model statistics, and SQL section. The time model statistics indicate that 86.45% of database time was spent executing SQL statements. The top foreground event was waiting for database file sequential reads, taking up 62% of database time.

Exploring Oracle Database Performance Tuning Best Practices for DBAs and Deve...

Exploring Oracle Database Performance Tuning Best Practices for DBAs and Deve...Aaron Shilo The document provides an overview of Oracle database performance tuning best practices for DBAs and developers. It discusses the connection between SQL tuning and instance tuning, and how tuning both the database and SQL statements is important. It also covers the connection between the database and operating system, how features like data integrity and zero downtime updates are important. The presentation agenda includes topics like identifying bottlenecks, benchmarking, optimization techniques, the cost-based optimizer, indexes, and more.

Stop the Chaos! Get Real Oracle Performance by Query Tuning Part 1

Stop the Chaos! Get Real Oracle Performance by Query Tuning Part 1SolarWinds The document provides an overview and agenda for a presentation on optimizing Oracle database performance through query tuning. It discusses identifying performance issues, collecting wait event information, reviewing execution plans, and understanding how the Oracle optimizer works using features like adaptive plans and statistics gathering. The goal is to show attendees how to quickly find and focus on the queries most in need of tuning.

Oracle 10g Performance: chapter 02 aas

Oracle 10g Performance: chapter 02 aasKyle Hailey The document discusses average active sessions (AAS) as a single metric for measuring database performance and load, providing methods for calculating AAS using sampling of active session history (ASH) data or time statistics, and comparing the AAS value to metrics like CPU count to understand if the database is under or over utilized.

It also describes how the components of AAS like CPU usage and wait times can provide more insight, and how tools like the Oracle Enterprise Manager (OEM) can show AAS over time as well as its subcomponents to help identify performance bottlenecks.

Chasing the optimizer

Chasing the optimizerMauro Pagano Session about Oracle Cost-Based Optimizer inner workings, including a real SQL to show what presented

Understanding oracle rac internals part 2 - slides

Understanding oracle rac internals part 2 - slidesMohamed Farouk This document discusses Oracle Real Application Clusters (RAC) internals, specifically focusing on client connectivity and node membership. It provides details on how clients connect to a RAC database, including connect time load balancing, connect time and runtime connection failover. It also describes the key processes that manage node membership in Oracle Clusterware, including CSSD and how it uses network heartbeats and voting disks to monitor nodes and remove failed nodes from the cluster.

Using Statspack and AWR for Memory Monitoring and Tuning

Using Statspack and AWR for Memory Monitoring and TuningTexas Memory Systems, and IBM Company The document provides an overview of using Automatic Workload Repository (AWR) for memory analysis in an Oracle database. It discusses various memory structures like the database buffer cache, shared pool, and process memory. It outlines signs of memory issues and describes analyzing the top waits, load profile, instance efficiency, SQL areas, and other AWR report sections to identify and address performance problems related to memory configuration and usage.

Oracle Latch and Mutex Contention Troubleshooting

Oracle Latch and Mutex Contention TroubleshootingTanel Poder This is an intro to latch & mutex contention troubleshooting which I've delivered at Hotsos Symposium, UKOUG Conference etc... It's also the starting point of my Latch & Mutex contention sections in my Advanced Oracle Troubleshooting online seminar - but we go much deeper there :-)

Awr + 12c performance tuning

Awr + 12c performance tuningAiougVizagChapter This document provides an overview of Oracle Automatic Workload Repository (AWR) and Active Session History (ASH) analytics. It discusses the key components and architecture of AWR and ASH, how they collect and store database performance data, and how that data can be analyzed using tools like the Automatic Database Diagnostic Monitor (ADDM) and ASH Analytics. It also highlights new capabilities in Oracle 12c like Real-Time ADDM, AWR Compare Periods reporting, and enhanced dimensions and filters for the Top Activity page in ASH Analytics.

Oracle statistics by example

Oracle statistics by exampleMauro Pagano Session aims at introducing less familiar audience to the Oracle database statistics concept, why statistics are necessary and how the Oracle Cost-Based Optimizer uses them

AWR reports-Measuring CPU

AWR reports-Measuring CPUMohammed Yasir Hashmi The document discusses analyzing CPU usage metrics in Oracle AWR reports. It explains that AWR provides elapsed time, database time, and CPU time metrics. CPU time should be compared to total available CPU power to determine if it indicates a potential problem. The document demonstrates calculations for total CPU power and percentage of CPU used by Oracle processes based on numbers in an example AWR report. It also discusses using ASH to analyze CPU usage for specific time periods and investigating SQL statements consuming high CPU.

New Generation Oracle RAC Performance

New Generation Oracle RAC PerformanceAnil Nair New Generation Oracle RAC 19c focuses on diagnosing Oracle RAC performance issues. The document discusses tools used by Oracle's RAC performance engineering team to instrument and measure key code areas between releases. It also covers how Oracle RAC provides high availability and scalability for workloads like traditional apps, new apps, IoT workloads, and more. Diagnosing performance requires understanding factors like private network latency and configuration.

SQLd360

SQLd360Mauro Pagano SQLd360 is a free tool designed to help collecting and analyzing SQL Tuning-related info from an Oracle database.

Available for free on GitHub, just google "sqld360"

Viewers also liked (20)

Oracle Open World Thursday 230 ashmasters

Oracle Open World Thursday 230 ashmastersKyle Hailey This document discusses database performance tuning using Oracle's ASH (Active Session History) feature. It provides examples of ASH queries to identify top wait events, long running SQL statements, and sessions consuming the most CPU. It also explains how to use ASH data to diagnose specific problems like buffer busy waits and latch contention by tracking session details over time.

Awr1page - Sanity checking time instrumentation in AWR reports

Awr1page - Sanity checking time instrumentation in AWR reportsJohn Beresniewicz Discusses Oracle time-based performance instrumentation as presented in AWR reports and inconsistencies between instrumentation sources that can cause confusion as conflicting information is presented. The cognitive load of investigating and reasoning about such conundrums is very high, discouraging even senior performance experts. A program (AWR1page) is discussed that consumes an AWR report and produces a 1-page normalized time summary by instrumentation source, precisely designed for reasoning about instrumentation inconsistencies in AWR reports.

Intro to ASH

Intro to ASHKyle Hailey The document describes Active Session History (ASH), a new methodology for performance tuning introduced by Kyle Hailey. ASH simplifies performance tuning by using statistical sampling to provide a multidimensional view of sessions, SQL, objects, users, and other database components over time. This allows identification of top resource consumers using less data collection than traditional methods.

How to find and fix your Oracle application performance problem

How to find and fix your Oracle application performance problemCary Millsap How long does your code take to run? Is it changing? When it is slow, WHY is it slow? Is it your fault, or somebody else's? Can you prove it? How much faster could your code be? Do you know how to measure the performance of your code as user workloads and data volumes increase? These are fundamental questions about performance, but the vast majority of Oracle application developers can't answer them. The most popular performance tools available to them—and to the database administrators that run their code in production—are incapable of answering any of these questions. But the Oracle Database can give you exactly what you need to answer these questions and many more. You can know exactly where YOUR CODE is spending YOUR TIME. This session explains how.

History of database monitoring

History of database monitoringKyle Hailey This document outlines the history of database monitoring from 1988 to the present. It describes early monitoring tools like Utlbstat/Utlestat from 1988-1990 that used ratios and averages. Patrol was one of the first database monitors introduced in 1993. M2 from 1994 introduced light-weight monitoring using direct memory access and sampling. Wait events became a key focus area from 1995 onward. Statspack was introduced in 1998 and provided more comprehensive monitoring than previous tools. Spotlight in 1999 made database problem diagnosis very easy without manuals. Later versions incorporated improved graphics, multi-dimensional views of top consumers, and sampling for faster problem identification.

The Most Important Things You Should Know about Oracle®

The Most Important Things You Should Know about Oracle®Cary Millsap I've been around hundreds of Oracle performance projects since 1989, and my colleagues have been around thousands more. In candid after-work talks about our experiences, there has been remarkable symmetry in what we believe makes a project successful. People everywhere seem to be discovering the same secret on their own, through the Agile, Lean Startup, and Design Thinking movements. The secret? Shorter feedback loops. But shortening your feedback loops in Oracle projects is easier said than done. It all depends on how well you Test and Measure. But how do you overcome the political and technical barriers to making great testing and measuring a part of your project plan?

Profiling the logwriter and database writer

Profiling the logwriter and database writerKyle Hailey The document discusses the behavior of the Oracle log writer (LGWR) process under different conditions. In idle mode, LGWR sleeps for 3 seconds at a time on a semaphore without writing to the redo log buffer. When a transaction is committed, LGWR may write the committed redo entries to disk either before or after the foreground process waits on a "log file sync" event, depending on whether LGWR has already flushed the data. The document also compares the "post-wait" and "polling" modes used for the log file sync wait.

Oaktable World 2014 Toon Koppelaars: database constraints polite excuse

Oaktable World 2014 Toon Koppelaars: database constraints polite excuseKyle Hailey The document discusses validation execution models for SQL assertions. It proposes moving from less efficient models that evaluate all assertions for every change (EM1) to more efficient models. Later models (EM3-EM5) evaluate only assertions involving changed tables, columns or literals based on parsing the assertion and change being made. The most efficient model (EM5) evaluates assertions only when the change transition effect potentially impacts the assertion. Overall the document argues SQL assertions could improve data quality if DBMS vendors supported more optimized evaluation models.

Awr1page - Sanity checking time instrumentation in AWR reports

Awr1page - Sanity checking time instrumentation in AWR reportsJohn Beresniewicz The presentation discusses issues with Oracle timing instrumentation and introduces AWR1page, a program that produces high-level instrumentation sanity checks from AWR text reports, on a single page.

Average Active Sessions RMOUG2007

John Beresniewicz: Understanding Average Active Sessions (AAS) is critical to understanding Oracle performance at the systemic level. This is my first presentation on the topic, given at RMOUG Training Days in 2007. Later I will upload a more recent presentation on AAS from 2013.

SQL Tuning Methodology, Kscope 2013

Kyle Hailey: The document describes a visual SQL tuning (VST) presentation. It gives steps for using VST to analyze SQL queries: laying out the tables and joins in a diagram, identifying filters, and determining the optimal execution path by starting with the most selective filter and joining down before joining up. Filters are key to choosing the best path.
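The "start at the most selective filter" heuristic can be sketched as ranking tables by the fraction of rows their filters keep. This Python sketch assumes you already know (or have estimated) the row counts before and after filtering. The table names and counts are hypothetical. The sketch also ignores the join-down-before-join-up ordering that the VST method covers.

```python
from typing import Dict, List, Tuple

def filter_ratio(rows_after_filter: int, total_rows: int) -> float:
    """Fraction of a table's rows that survive its filters; lower means more selective."""
    return rows_after_filter / total_rows

def rank_by_selectivity(stats: Dict[str, Tuple[int, int]]) -> List[Tuple[str, float]]:
    """Tables ordered from most to least selective filter; the first is the driving-table candidate."""
    ratios = {table: filter_ratio(*counts) for table, counts in stats.items()}
    return sorted(ratios.items(), key=lambda item: item[1])

# Hypothetical (rows after filter, total rows); an unfiltered table has ratio 1.0.
stats = {
    "orders": (50_000, 1_000_000),
    "customers": (200, 100_000),
    "order_items": (5_000_000, 5_000_000),
}
ranking = rank_by_selectivity(stats)
print(ranking[0][0])  # customers
```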

Average Active Sessions - OaktableWorld 2013

John Beresniewicz: A more recent presentation on the all-important topic of Average Active Sessions (AAS) for Oracle performance analysis.

DB Tech Showcase 2016 - E35 - SQL Tuning: Preventive Medicine, General-Clinic Style (SQLチューニング総合診療所的予防医学)

Hiroshi Sekiguchi: The document contains execution plans for several SQL queries against database tables. The plans show operations such as hash joins and full table scans, and memory and disk usage metrics are given for the individual operations.

Mark Farnam : Minimizing the Concurrency Footprint of Transactions

Kyle Hailey: The document discusses minimizing the concurrency footprint of transactions by using packaged procedures. It recommends instrumenting all code, including PL/SQL, for performance monitoring. It gives examples of submitting trivial transactions in different ways: sending code from the client, sending a PL/SQL block, or calling a stored procedure. Calling a stored procedure is preferred because it avoids re-parsing and re-sending code and allows instrumentation to be added without extra network traffic.

Dan Norris: Exadata security

Kyle Hailey: The document discusses security considerations for installing and configuring an Oracle Exadata Database Machine. It recommends preparing for installation by collecting security requirements, subscribing to security alerts, and reviewing installation guidelines. During installation, it advises implementing available security features like the "Resecure Machine" step to tighten permissions and passwords. Post-deployment, it suggests addressing any site-specific security needs like changing default passwords and validating policies.

OakTable World Sep14 clonedb

Connor McDonald: This document discusses using Oracle Database's block change tracking and direct NFS features to enable fast cloning of databases for development and testing purposes at low cost. Block change tracking allows incremental backups to be performed quickly, while direct NFS allows database files to be copied over the network efficiently to create clones that only require storage for changed blocks. Examples are provided demonstrating how this can be used to regularly clone a production database to multiple developer environments.

Indexes: Structure, Splits and Free Space Management Internals

Christian Antognini: The document discusses the internal structure and management of Oracle database indexes. It describes how B-tree indexes are structured, including the use of branch blocks and leaf blocks. It also covers concepts like index keys, splits that occur as indexes grow, free space management, and techniques for reorganizing indexes like rebuilding and coalescing. Bitmap indexes are also discussed, noting that they use a compressed bitmap in their internal keys. Finally, some common myths about indexing are debunked, such as the idea that indexes need regular rebuilds to remain balanced.

Oaktable World 2014 Kevin Closson: SLOB – For More Than I/O!

Kyle Hailey: The document discusses using SLOB (Synthetic Load On Box) to test various Oracle database configurations and platforms. SLOB is described as a simple and predictable workload generator that allows testing the performance of databases under different conditions with minimal variability. The document outlines several potential uses of SLOB, including testing Oracle in-memory database options, multitenant architectures, and measuring the impact of database contention. It provides examples of using SLOB to analyze CPU and storage I/O performance.

OakTable World 2015 - Using XMLType content with the Oracle In-Memory Column...

Marco Gralike: This document discusses using Oracle's in-memory column store capabilities to improve performance of XML data stored and queried using XMLType. Key points include selectively applying in-memory storage to columns and indexes for XML data, issues with optimization and costs not fully accounting for performance gains, and opportunities for further optimization of XML retrieval using DOM/XOM. In-memory storage can significantly boost XML performance, but careful design is still required.

OOUG - Oracle Performance Tuning with AAS

Kyle Hailey: The document provides information about Kyle Hailey and his work in Oracle performance tuning. It includes details about his career working with Oracle from 1990 to the present, including roles in support, porting software versions, benchmarking, and performance tuning. Graphics and clear visualizations are emphasized as important tools for communicating complex technical information and identifying problems. The stated goal is to simplify database tuning information to empower database administrators.

Similar to AWR Ambiguity: Performance reasoning when the numbers don't add up (20)

Awrrpt 1 3004_3005

Kam Chan: This report summarizes the workload on the ERPSIT database with the following key details:

- The database has 2 instances and is hosted on a Linux server with 4 CPUs and 7.8GB of memory.

- Between snapshots 3004 and 3005, there was 60.1 minutes of activity with 174 sessions.

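- The largest consumers of database time were SQL execute elapsed time at 94.7% of DB Time and DB CPU at 63.4%. These time-model statistics overlap (CPU spent executing SQL counts toward both), so the percentages need not sum to 100%.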

Thomas+Niewel+ +Oracletuning

afa reg: The document discusses monitoring and tuning Oracle databases on z/OS and z/Linux systems. It gives an overview of using Statspack to diagnose performance issues caused by high CPU usage, I/O utilization, or memory usage, based on timed events, SQL statements, and tablespace I/O statistics. Possible causes and remedies are described for each area that could lead to poor response times.

AWR, ADDM, ASH, Metrics and Advisors.ppt

bugzbinny: This document provides an overview of various Oracle database tuning tools available in Oracle 10gR2, including the Automatic Workload Repository (AWR), Automatic Database Diagnostic Monitor (ADDM), Active Session History (ASH), metrics, and advisors. It discusses how these tools provide automated, consistent performance monitoring and issue identification to help tune and optimize the database with little manual effort.

Using AWR for IO Subsystem Analysis

Texas Memory Systems, an IBM Company: The document discusses using the Automatic Workload Repository (AWR) to analyze I/O subsystem performance. It provides example AWR reports, including foreground and background wait events, operating system statistics, and wait histograms. The document recommends using this data to identify I/O bottlenecks and guide tuning efforts, such as optimizing indexes to reduce full table scans.

Rmoug ashmaster

Kyle Hailey: This document discusses using Active Session History (ASH) to analyze and troubleshoot performance issues in an Oracle database. It provides an example of using ASH to identify the top CPU-consuming session over the last 5 minutes. It shows how to group and count ASH data to calculate metrics like average active sessions (AAS) and percentage of time spent on CPU. The document also discusses using ASH to identify top waiting sessions and analyze specific wait events like buffer busy waits.

Using AWR/Statspack for Wait Analysis

Texas Memory Systems, an IBM Company: The document is an AWR report that provides key statistics and configuration details about an Oracle database called AULTDB over a 60-minute period. It includes information such as the number of sessions, database startup time, cache sizes, and wait events. The report is intended to help analyze wait times and identify potential performance bottlenecks in the database.

Awr1page OTW2018

John Beresniewicz: Small updates for this version, presented at OakTableWorld 2018.

Discusses Oracle time-based performance instrumentation as presented in AWR reports and inconsistencies between instrumentation sources that can cause confusion as conflicting information is presented. The cognitive load of investigating and reasoning about such conundrums is very high, discouraging even senior performance experts. A program (AWR1page) is discussed that consumes an AWR report and produces a 1-page normalized time summary by instrumentation source, precisely designed for reasoning about instrumentation inconsistencies in AWR reports.
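The core of such a check fits in a few lines. Normalize each instrumentation source to average active sessions (seconds divided by interval seconds), then flag a negative residual. A negative residual is exactly the case where DB CPU plus foreground wait time exceeds DB Time. This is a hypothetical Python illustration, not the AWR1page program. The field names, the optional `tolerance_s` for rounding noise, and the sample numbers are all assumptions.

```python
from dataclasses import dataclass

@dataclass
class TimeSummary:
    """Hypothetical inputs copied by hand from an AWR report (all values in seconds)."""
    elapsed_s: float   # snapshot interval, wall clock
    db_time_s: float   # Time Model: DB time
    db_cpu_s: float    # Time Model: DB CPU
    fg_wait_s: float   # total foreground non-idle wait time

    def aas(self, seconds: float) -> float:
        # Normalize any time total to average active sessions over the interval.
        return seconds / self.elapsed_s

    def residual_s(self) -> float:
        # DB time left over after CPU and waits; negative means the parts exceed the whole.
        return self.db_time_s - (self.db_cpu_s + self.fg_wait_s)

def sanity_check(s: TimeSummary, tolerance_s: float = 0.0) -> str:
    lines = [
        f"DB Time   {s.aas(s.db_time_s):6.2f} AAS",
        f"DB CPU    {s.aas(s.db_cpu_s):6.2f} AAS",
        f"FG Wait   {s.aas(s.fg_wait_s):6.2f} AAS",
        f"Residual  {s.aas(s.residual_s()):6.2f} AAS",
    ]
    # Warn only when the overshoot is larger than the allowed rounding tolerance.
    if s.residual_s() < -tolerance_s:
        lines.append("WARNING: DB CPU + foreground wait time exceeds DB Time")
    return "\n".join(lines)

# Made-up one-hour interval where the parts add up to more than DB Time.
summary = TimeSummary(elapsed_s=3600, db_time_s=7200, db_cpu_s=5400, fg_wait_s=3600)
print(sanity_check(summary))
```

Expressing every source in AAS units puts all rows on the same scale regardless of interval length. That scale is what makes an inconsistency between sources easy to spot.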

MySQL Cluster 7.3 Performance Tuning - Severalnines Slides

Severalnines: The MySQL Cluster 7.x series introduced a number of features to allow for fine-grained control over the real-time behaviour of the NDB storage engine. New threads have been introduced, and users are able to control placement of these threads, as well as locking the memory such that no swapping occurs. In an ideal run-time environment, CPUs handling data node threads will not execute other threads apart from OS kernel threads or interrupt handling. Correct tuning of certain parameters can be especially important for certain types of workloads.

This presentation covers the different tuning aspects of MySQL Cluster.

- Application design guidelines

- Schema Optimization

- Index Selection and Tuning

- Query Tuning

- OS Tuning

- Data Node internals

- Optimizations for real-time behaviour

This presentation looks closely at how to get the most out of your MySQL Cluster 7.x runtime environment.

active_session_history_oracle_performance.ppt

cookie1969: This document provides an overview of Oracle's Active Session History (ASH) feature. ASH samples database sessions every second to capture session states and activity. It stores this data in an in-memory circular buffer and periodically writes samples to disk for analysis. ASH data provides insights into database time usage, top SQL, wait events, and blocking issues. It can be used for performance analysis by aggregating and analyzing ASH dimensions like SQL_ID, event, and wait class over time.
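A toy model shows why a fixed-size circular buffer plus per-dimension counting is enough to answer "what was the database doing?". This Python sketch is a simplification under stated assumptions, not Oracle's implementation. `AshRing`, its capacity, and the sample tuples are hypothetical, and `"ON CPU"` stands in as the event for sessions that are not waiting. Each sample counts as one unit of activity, so the counts per event or SQL_ID approximate where database time went.

```python
from collections import Counter, deque
from dataclasses import dataclass
from typing import Deque, Iterable, List, Tuple

@dataclass(frozen=True)
class AshSample:
    sample_time: int   # sampling tick, e.g. seconds since start
    session_id: int
    sql_id: str
    event: str         # "ON CPU" stands in for a session that is not waiting

class AshRing:
    """Toy fixed-size sample buffer: once full, each new sample evicts the oldest one."""

    def __init__(self, capacity: int) -> None:
        self._ring: Deque[AshSample] = deque(maxlen=capacity)

    def __len__(self) -> int:
        return len(self._ring)

    def sample(self, t: int, active: Iterable[Tuple[int, str, str]]) -> None:
        # One sampling pass: record only sessions that are active at tick t.
        for session_id, sql_id, event in active:
            self._ring.append(AshSample(t, session_id, sql_id, event))

    def top(self, dimension: str, n: int = 3) -> List[Tuple[str, int]]:
        # Aggregate by one dimension; each sample counts as one unit of activity.
        return Counter(getattr(s, dimension) for s in self._ring).most_common(n)

ring = AshRing(capacity=4)
ring.sample(1, [(10, "abc", "ON CPU"), (11, "def", "db file sequential read")])
ring.sample(2, [(10, "abc", "ON CPU")])
ring.sample(3, [(12, "xyz", "log file sync"), (10, "abc", "ON CPU")])
print(len(ring))             # 4: the first sample has been overwritten
print(ring.top("event", 1))  # [('ON CPU', 2)]
```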

Oracle Database : Addressing a performance issue the drilldown approach

Laurent Leturgez: The document discusses addressing performance issues using a "drilldown approach". This approach involves first identifying whether the database is overloaded, then identifying when it is overloaded, and finally identifying how database time is distributed in order to pinpoint bottlenecks. Various tools like AWR, Statspack, and code instrumentation are recommended to gather detailed performance data for analysis.

Hotsos 2011: Mining the AWR repository for Capacity Planning, Visualization, ...

Kristofferson A: The document discusses mining the Automatic Workload Repository (AWR) in Oracle databases for capacity planning, visualization, and other real-world uses. It introduces Karl Arao as a speaker and discusses topics he will cover including AWR, diagnosing performance issues using AWR data, visualization of AWR data, capacity planning, and tools for working with AWR data like scripts and linear regression. References and resources on working with AWR are also provided.

How should I monitor my idaa

Cuneyt Goksu: This document discusses how to monitor an IBM Db2 Analytics Accelerator (IDAA). It provides an overview of the resources, use cases, and tools for monitoring an IDAA. Key metrics for monitoring include accelerator resources, system resources, SQL statements, workload, performance, and capacity planning. Tools mentioned for monitoring include the appliance UI, OMPE, Data Studio, DISPLAY ACCEL command, and stored procedures.

Oracle Database Performance Tuning Concept

Chien Chung Shen: The document discusses Oracle database performance tuning. It covers reactive and proactive performance tuning, the top-down tuning methodology, common types of performance issues, and metrics for measuring performance such as response time and throughput. It also compares online transaction processing (OLTP) systems and data warehouses (DW), and describes different architectures for integrating OLTP and DW systems.

SQL Tuning, takes 3 to tango

Mauro Pagano:

- The document discusses using various Oracle diagnostic tools like AWR, ASH, and SQL Monitoring for SQL tuning. It focuses on scenarios where multiple data sources are needed to fully understand performance issues.

- Historical ASH and AWR data can provide different perspectives on SQL executions over time that help identify problems like long-running queries or concurrent executions. However, ASH samples only a subset of activity, so it may miss short queries.

- GV$SQL and AWR reports aggregate performance metrics over all executions of a SQL, so they do not show the full user experience if a query runs intermittently. ASH sampled data can help determine how database time relates to clock time in such cases.

Oracle Performance Tuning Fundamentals

Carlos Sierra: This document provides an overview of Oracle performance tuning fundamentals. It discusses key concepts like wait events, statistics, CPU utilization, and the importance of understanding the operating system, database, and business needs. It also introduces tools for monitoring performance like AWR, ASH, and dynamic views. The goal is to establish a foundational understanding of Oracle performance concepts and monitoring techniques.

Performance Scenario: Diagnosing and resolving sudden slow down on two node RAC

Kristofferson A: This document summarizes the steps taken to diagnose and resolve a sudden slow down issue affecting applications running on a two node Real Application Clusters (RAC) environment. The troubleshooting process involved systematically measuring performance at the operating system, database, and session levels. Key findings included high wait times and fragmentation issues on the network interconnect, which were resolved by replacing the network switch. Measuring performance using tools like ASH, AWR, and OS monitoring was essential to systematically diagnose the problem.

Oow2007 performance

Ricky Zhu: The document outlines common problems and solutions for optimizing performance in Oracle Real Application Clusters (RAC). It discusses RAC fundamentals like architecture and cache fusion. Common problems discussed include lost blocks due to interconnect issues, disk I/O bottlenecks, and expensive queries. Diagnostics tools like AWR and ADDM can identify cluster-wide I/O and query plan issues impacting performance. Configuring the private interconnect, I/O, and addressing bad SQL can help resolve performance problems.

IO Dubi Lebel

sqlserver.co.il: This document discusses disk I/O performance testing tools. It introduces SQLIO and IOMETER for measuring disk throughput, latency, and IOPS. Examples are provided for running SQLIO tests and interpreting the output, including metrics like throughput in MB/s, latency in ms, and I/O histograms. Other disk performance factors discussed include the number of outstanding I/Os, block size, and sequential vs random access patterns.
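Two standard relationships tie these metrics together. Throughput equals IOPS times I/O size, and Little's Law links outstanding I/Os, IOPS, and latency. The Python sketch below applies both to hypothetical numbers. These are general steady-state identities, not something taken from the SQLIO or IOMETER output.

```python
def throughput_mb_s(iops: float, io_size_kb: float) -> float:
    """Throughput = IOPS x I/O size (1 MB taken as 1024 KB)."""
    return iops * io_size_kb / 1024

def avg_latency_ms(outstanding_ios: float, iops: float) -> float:
    """Little's Law, L = lambda * W, rearranged to W = L / lambda and expressed in ms."""
    return outstanding_ios * 1000 / iops

print(throughput_mb_s(12_800, 8))   # 100.0
print(avg_latency_ms(32, 8_000))    # 4.0
```

At a fixed IOPS rate, adding outstanding I/Os only adds latency. So if IOPS stop rising in a test as queue depth grows, the device is saturated.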

MySQL 5.7 innodb_enhance_partii_20160527

Saewoong Lee: Release Date : 2016.05.27

Version : MySQL 5.7

Index :

- Part I : InnoDB Performance

- Part I : InnoDB Buffer Pool Flushing

- Part I : InnoDB internal Transaction General

- Part I : InnoDB Improved adaptive flushing

- Part II : InnoDB Online DDL

- Part II : Tablespace management

- Part II : InnoDB Bulk Load for Create Index

- Part II : InnoDB Temporary Tables

- Part II : InnoDB Full-Text CJK Support

- Part II : Support Syslog on Linux / Unix OS

- Part II : Performance_schema

- Part II : Useful tips

30334823 my sql-cluster-performance-tuning-best-practices

David Dhavan: This document provides guidance on performance tuning MySQL Cluster. It outlines several techniques including:

- Optimizing the database schema through denormalization, proper primary key selection, and optimizing data types.

- Tuning queries through rewriting slow queries, adding appropriate indexes, and utilizing simple access patterns like primary key lookups.

- Configuring MySQL server parameters and hardware settings for optimal performance.

- Leveraging techniques like batching operations and parallel scanning to minimize network roundtrips and improve throughput.

The overall goal is to minimize network traffic for common queries through schema design, query optimization, configuration tuning, and hardware scaling. Performance tuning is an ongoing process of measuring, testing and optimizing based on application

More from John Beresniewicz (7)

ASHviz - Data visualization research experiments using ASH data

John Beresniewicz: RMOUG Training Days 2020 abstract:

The Active Session History (ASH) mechanism is a rich source of fine-grained data about database activity, and is the lynchpin for many database performance management features in the Diagnostic and Tuning packs. Many interesting stories about happenings in the database are buried in ASH waiting to be revealed, and data visualization is key to sifting these out from the high dimensionality and volume of ASH data. The session will cover a number of data visualization experiments conducted using a single ASH dump with an emphasis on the iterative process of discovering useful data visualizations.

NoSQL is Anti-relational

John Beresniewicz: NoSQL databases are not relational databases. While SQL is just a declarative query language that can interface with both relational and non-relational databases, relational databases have key distinguishing features not found in NoSQL databases, such as: 1) Storing all data in tables with a predefined schema, 2) Requiring data to conform to the schema on entry, and 3) Supporting ACID transactions to maintain absolute consistency. The document argues that NoSQL databases are application-driven and allow flexible, non-normalized data structures with eventual consistency rather than the data-driven design and joins of relational databases.

AAS Deeper Meaning

John Beresniewicz: This short presentation is about the deeper meaning of the core Oracle performance metric "Average Active Sessions" as the time derivative of the DB Time function. This explains why the Enterprise Manager DB Performance Page is literally a picture of DB Time (as the integral of AAS), as well as why "ASH Math" works to estimate DB Time (it's a Riemann sum, as in first-year calculus). The relationship of AAS to Little's Law in queueing theory is also briefly mentioned.
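The "ASH Math" idea can be sketched directly. Sum the number of active sessions seen at each sample and multiply by the sampling interval to get a Riemann-sum estimate of DB Time. Then divide by elapsed time to get AAS. The Little's Law form (call rate times average call time) reaches the same quantity from a different angle. This Python sketch assumes a 1-second sampling interval and uses made-up counts and rates.

```python
from typing import Sequence

def db_time_from_ash(active_counts: Sequence[int], interval_s: float = 1.0) -> float:
    """Riemann sum: each sample's active-session count times the sampling interval."""
    return sum(active_counts) * interval_s

def average_active_sessions(db_time_s: float, elapsed_s: float) -> float:
    """AAS = DB time accumulated / wall-clock time elapsed."""
    return db_time_s / elapsed_s

def aas_by_littles_law(calls_per_s: float, avg_call_s: float) -> float:
    """Little's Law: average number in the system = arrival rate x average time in the system."""
    return calls_per_s * avg_call_s

# Hypothetical: active sessions seen at each of ten 1-second samples.
counts = [2, 3, 1, 0, 4, 2, 2, 3, 1, 2]
db_time = db_time_from_ash(counts)                               # 20.0 s of estimated DB time
print(average_active_sessions(db_time, elapsed_s=len(counts)))   # 2.0
print(aas_by_littles_law(calls_per_s=40, avg_call_s=0.05))       # 2.0
```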

JB Design CV: products / mockups / experiments

John Beresniewicz: A collection of screenshots, design mockups and conceptual prototypes for systems monitoring user interfaces and performance data visualizations spanning 20 years of my career from Savant to Oracle to the present. Wacky stuff.

Proactive performance monitoring with adaptive thresholds

John Beresniewicz: Presentation given at the UKOUG 2008 conference on the Adaptive Thresholds technology in Oracle Database 10.2+ and Enterprise Manager 11. Adaptive Thresholds allow users to do consistent and effective performance monitoring across systems and architectures by statistically characterizing metric streams to set and adapt monitoring thresholds automatically, independent of application workload.
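The basic idea is to set a threshold from the statistics of a metric's own history rather than picking a fixed number, and a percentile is the simplest way to sketch it. This Python sketch is a simplification, not Oracle's algorithm. The per-group baselines (`day`, `night`), the 95th-percentile choice, and the data are hypothetical. It uses the standard library's `statistics.quantiles`.

```python
import statistics
from typing import Dict, Sequence

def percentile_threshold(baseline: Sequence[float], pct: int) -> float:
    """pct-th percentile (1..99) of the baseline window, used as the alert threshold."""
    # quantiles(n=100) returns 99 cut points; cut point pct-1 is the pct-th percentile.
    return statistics.quantiles(baseline, n=100, method="inclusive")[pct - 1]

def thresholds_by_group(history: Dict[str, Sequence[float]], pct: int) -> Dict[str, float]:
    """One threshold per time group, so each adapts to its own typical workload."""
    return {group: percentile_threshold(values, pct) for group, values in history.items()}

# Made-up metric history: night-time values run twice as high as daytime (e.g. batch load).
history = {
    "day": list(range(1, 102)),
    "night": [2 * v for v in range(1, 102)],
}
print(thresholds_by_group(history, pct=95))  # {'day': 96.0, 'night': 192.0}
```

A fixed threshold of, say, 100 would fire constantly at night and never during the day. Per-group percentiles let each window define its own "unusual".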

Ash Outliers UKOUG2011

John Beresniewicz: An experimental and advanced use of Oracle Event Histogram and ASH data to answer the question: has ASH sampled any latency outlier events? We use Event Histogram to characterize the probability distribution of event latencies and then join with ASH to find whether high-significance (low-probability) events have been sampled. Presented at UKOUG in 2011.
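The outlier test can be sketched in Python. First turn a wait-event histogram into tail probabilities. Then flag sampled waits that land in a bucket rarer than a chosen significance level. This is an illustration under stated assumptions, not the presentation's SQL:

- The histogram counts are hypothetical.
- Bucket bounds are treated as exclusive upper limits in milliseconds (waits in bucket `b` are shorter than `b`).
- Sample wait times are assumed to be already converted to milliseconds.

```python
from typing import Dict, List, Tuple

def tail_probabilities(histogram: Dict[int, int]) -> Dict[int, float]:
    """For each bucket upper bound (ms), P(a wait lands in that bucket or a slower one)."""
    total = sum(histogram.values())
    remaining = total
    probs: Dict[int, float] = {}
    for bound in sorted(histogram):
        probs[bound] = remaining / total
        remaining -= histogram[bound]
    return probs

def bucket_for(latency_ms: float, bounds: List[int]) -> int:
    """Smallest bucket bound strictly above the latency; overflow goes to the last bucket."""
    for bound in bounds:
        if latency_ms < bound:
            return bound
    return bounds[-1]

def sampled_outliers(samples: List[Tuple[str, float]], histogram: Dict[int, int],
                     alpha: float = 0.001) -> List[Tuple[str, float, float]]:
    """Samples (id, time waited in ms) whose bucket tail probability is below alpha."""
    probs = tail_probabilities(histogram)
    bounds = sorted(histogram)
    flagged = []
    for sample_id, ms in samples:
        p = probs[bucket_for(ms, bounds)]
        if p < alpha:
            flagged.append((sample_id, ms, p))
    return flagged

# Hypothetical histogram for one wait event: bucket upper bound (ms) -> wait count.
hist = {1: 6000, 2: 2500, 4: 1000, 8: 400, 16: 90, 32: 9, 64: 1}
samples = [("s1", 0.7), ("s2", 20.0), ("s3", 45.0)]
print(sampled_outliers(samples, hist))  # [('s3', 45.0, 0.0001)]
```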

Contract-oriented PLSQL Programming

John Beresniewicz: This document discusses contract-oriented programming using design by contract principles in PL/SQL. It outlines the theory of design by contract, which treats modules like legally binding contracts with preconditions, postconditions, and invariants. It advocates standardizing assertions using a common ASSERTFAIL exception. The document provides examples of using assertions to enforce contracts by checking preconditions and postconditions. It emphasizes aggressively asserting preconditions, modularizing code into coherent packages, and crashing on assertion failures to find bugs.
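The same contract discipline translates to other languages. This Python sketch mirrors the idea of one standard assertion exception. `AssertFail`, `assert_that`, and the `withdraw` example are hypothetical names chosen for illustration, not the document's PL/SQL API. It uses an explicit function instead of Python's `assert` statement because `assert` is skipped when Python runs with `-O`.

```python
class AssertFail(Exception):
    """Single, standard exception for contract violations (analogue of ASSERTFAIL)."""

def assert_that(condition: bool, message: str) -> None:
    # Fail fast: a broken contract is a bug, so raise rather than try to recover.
    if not condition:
        raise AssertFail(message)

def withdraw(balance: int, amount: int) -> int:
    """Hypothetical example module: subtract amount from balance under a contract."""
    # Preconditions: the caller must pass a non-negative balance and 0 < amount <= balance.
    assert_that(balance >= 0, "precondition: balance >= 0")
    assert_that(0 < amount <= balance, "precondition: 0 < amount <= balance")
    new_balance = balance - amount
    # Postcondition: the result is non-negative and exactly `amount` lower than before.
    assert_that(new_balance >= 0 and balance - new_balance == amount, "postcondition violated")
    return new_balance

print(withdraw(100, 30))  # 70
```

Calling `withdraw(10, 20)` raises `AssertFail` at the precondition. The fault surfaces at the call site that broke the contract instead of propagating a negative balance.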


✅ Which AI techniques actually fit your business?

✅ Is your data even ready for AI?

If you’re not sure, you’re not alone. This is a condensed version of the slides I presented at a Linkedin webinar for Tecnovy on 28.04.2025.

AWR Ambiguity: Performance reasoning when the numbers don't add up

- 1. AWR Ambiguity: Performance reasoning when the numbers don’t add up. John Beresniewicz: OakTable founding member, Oracle performance maven, ASH expert, bodyboarder

- 2. An OakTable inquiry… from an OakTable member (I didn’t really understand the question)

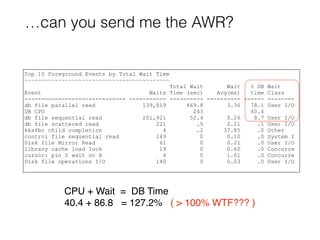

- 3. …can you send me the AWR?

  Top 10 Foreground Events by Total Wait Time

  | Event | Waits | Total Wait Time (sec) | Wait Avg (ms) | % DB time | Wait Class |
  |---|---|---|---|---|---|
  | db file parallel read | 139,819 | 469.8 | 3.36 | 78.1 | User I/O |
  | DB CPU | | 243 | | 40.4 | |
  | db file sequential read | 201,921 | 52.4 | 0.26 | 8.7 | User I/O |
  | db file scattered read | 221 | .5 | 2.21 | .1 | User I/O |
  | kksfbc child completion | 4 | .2 | 37.85 | .0 | Other |
  | control file sequential read | 249 | 0 | 0.10 | .0 | System I/O |
  | Disk file Mirror Read | 61 | 0 | 0.21 | .0 | User I/O |
  | library cache load lock | 19 | 0 | 0.60 | .0 | Concurrency |
  | cursor: pin S wait on X | 4 | 0 | 1.61 | .0 | Concurrency |
  | Disk file operations I/O | 140 | 0 | 0.03 | .0 | User I/O |

  CPU + Wait = DB Time? 40.4% + 86.8% = 127.2% (> 100%, WTF???)
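
A quick check of the slide's arithmetic, using only the two wait events it adds up (every other row rounds to 0.1% of DB time or less). The dictionary simply transcribes the "% DB time" column; nothing here queries a database.

```python
# "% DB time" values transcribed from the Top 10 Foreground Events table.
pct_db_time = {
    "DB CPU": 40.4,
    "db file parallel read": 78.1,
    "db file sequential read": 8.7,
}

cpu_pct = pct_db_time["DB CPU"]
# Everything except DB CPU is foreground wait time.
wait_pct = sum(v for k, v in pct_db_time.items() if k != "DB CPU")
total_pct = cpu_pct + wait_pct

print(f"CPU {cpu_pct:.1f}% + Wait {wait_pct:.1f}% = {total_pct:.1f}% of DB Time")
if total_pct > 100:
    # Under the model CPU and wait never overlap, so this should be impossible.
    print("Model violated: CPU + Wait exceeds DB Time")
```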

- 4. Quick Review

- 5. The Model: DB Time = DB CPU time + Wait time
  - Summed over all foreground sessions
  - Wait time is active wait time (non-idle waits)
  - CPU and wait time are non-overlapping

- 6. The Method
  - Account for DB Time accurately
  - Reduce DB Time spent doing the same work
    - focus on large chunks of similarly spent time
  - If the system is CPU-bound, reduce CPU time first
    - wait times are inaccurate and wait tuning is irrelevant

- 7. DB Time
  - Foreground session time in database calls
  - Measured by DB code using clear instrumentation points:
    - [call entry: start timer]
    - [call exit: stop timer]
  - System DB Time = SUM(Session DB Time)

  We trust DB Time accuracy implicitly.
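
A toy Python model of call-boundary instrumentation, to make the two timer points concrete. The `db_call` context manager and the `session_db_time` accumulator are hypothetical stand-ins, not Oracle internals: the timer starts on call entry, stops on call exit, and system DB Time is just the sum of the per-session totals.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Hypothetical per-session DB Time accumulator (seconds), keyed by session id.
session_db_time = defaultdict(float)

@contextmanager
def db_call(session_id):
    start = time.perf_counter()  # [call entry: start timer]
    try:
        yield
    finally:
        # [call exit: stop timer] -- charged even if the call raises
        session_db_time[session_id] += time.perf_counter() - start

def system_db_time():
    # System DB Time = SUM(Session DB Time)
    return sum(session_db_time.values())

if __name__ == "__main__":
    with db_call(1):
        time.sleep(0.05)  # whatever happens inside the call counts as DB Time
    with db_call(2):
        time.sleep(0.02)
    print(f"system DB Time ~ {system_db_time():.2f}s")
```

Because the only instrumentation points are the call boundaries, this measure does not depend on how the time inside the call is later classified as CPU or wait, which is why it is the number to trust.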

- 8. DB CPU
  - Foreground CPU actually used
    - does not include run-queue time
  - Measured by the OS, collected by Oracle
    - independent instrumentation source
  - NOTE: AIX CPU reporting is suspect for hyper-threaded cores

  We have high confidence in DB CPU accuracy.
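
The gap between elapsed time and OS-reported CPU time can be seen from any process. This minimal sketch uses Python's `time.process_time()` as a stand-in for OS CPU accounting (Oracle collects DB CPU from the OS, not through this API): a call that sleeps accrues elapsed time but almost no CPU, just as a session that is blocked or queued for a CPU accrues DB Time but no DB CPU.

```python
import time

def elapsed_and_cpu(fn):
    """Run fn and return (elapsed seconds, CPU seconds used by this process)."""
    wall0, cpu0 = time.perf_counter(), time.process_time()
    fn()
    return time.perf_counter() - wall0, time.process_time() - cpu0

def busy(n=200_000):
    # Pure computation: CPU time tracks elapsed time closely on an idle machine.
    return sum(i * i for i in range(n))

def idle():
    # Off CPU: elapsed time grows, CPU time barely moves.
    time.sleep(0.2)

if __name__ == "__main__":
    for name, fn in (("busy", busy), ("idle", idle)):
        wall, cpu = elapsed_and_cpu(fn)
        print(f"{name}: elapsed {wall:.3f}s, cpu {cpu:.3f}s")
```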

- 9. ASH and DB Time: DB Time ~ COUNT(sampled sessions) * interval
  - “ON CPU”: session in a call and not waiting
    - this is derived, not “observed”
  - ASH always conforms to the model:
    - every sample is either ON CPU or WAITING

  Sessions using CPU inside a wait will not show as ON CPU.
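
A simplified Python sketch of ASH-style sampling, assuming hypothetical `Session`, `take_sample` and `estimate_db_time` names (this is not how Oracle implements ASH, and idle-wait filtering is reduced to an `in_call` flag). It shows why "ON CPU" is derived: a session is labelled ON CPU only when it is in a call and not in a wait, so CPU burned inside a wait is reported under the wait event.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Session:
    in_call: bool              # inside a database call (counts toward DB Time)
    wait_event: Optional[str]  # name of the current non-idle wait, or None

def take_sample(sessions: List[Session]) -> List[str]:
    """One ASH-style sample: a row per active session, labelled by event or ON CPU."""
    rows = []
    for s in sessions:
        if not s.in_call:
            continue  # sessions outside a call are not sampled
        # ON CPU is derived: "in a call and not waiting". A session burning CPU
        # inside a wait is still labelled with the wait event.
        rows.append(s.wait_event if s.wait_event is not None else "ON CPU")
    return rows

def estimate_db_time(samples: List[List[str]], interval_s: float) -> float:
    """DB Time ~ COUNT(sampled sessions) * interval."""
    return sum(len(rows) for rows in samples) * interval_s

if __name__ == "__main__":
    sessions = [
        Session(in_call=True, wait_event=None),
        Session(in_call=True, wait_event="db file parallel read"),
        Session(in_call=False, wait_event=None),
    ]
    samples = [take_sample(sessions) for _ in range(3)]
    print(samples[0], estimate_db_time(samples, interval_s=1.0))
```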

- 10. Be mindful that…
  - The AWR report presumes accurate instrumentation
  - ADDM presumes accurate instrumentation
  - Instrumentation is not always accurate
    - ergo, the AWR report and ADDM may be misleading

  AWR and ADDM could (should) do some sanity checks.

- 11. Back to the Problem AWR

- 12. First questions
  - Elapsed time of the report?
  - Version of Oracle DB?
    - bug lookup, report contents, available data
  - Is the system CPU-bound?

- 13. Begin at the beginning…

  WORKLOAD REPOSITORY report for

  | DB Name | DB Id | Instance | Inst Num | Startup Time | Release | RAC |
  |---|---|---|---|---|---|---|
  | DB12C | 1329819247 | db12cn1 | 1 | 04-Apr-15 06:19 | 12.1.0.2.0 | NO |

  | Host Name | Platform | CPUs | Cores | Sockets | Memory (GB) |
  |---|---|---|---|---|---|
  | ora1.dssdhop.lab | Linux x86 64-bit | 72 | 36 | 2 | 252.17 |

  | | Snap Id | Snap Time | Sessions | Curs/Sess |
  |---|---|---|---|---|
  | Begin Snap | 410 | 04-Apr-15 06:22:16 | 61 | .8 |
  | End Snap | 411 | 04-Apr-15 06:24:18 | 56 | .7 |

  Elapsed: 2.02 (mins); DB Time: 10.03 (mins)

  - DB version? 12.1.0.2
  - Elapsed time? 2 minutes (120 secs)
  - CPU-bound? NO (36 cores >> 5 AAS)
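
The CPU-bound answer follows from Average Active Sessions (AAS = DB Time / elapsed time). A small sketch of that check using the header values; the `aas >= cores` comparison is only the rough heuristic the slide applies, and it ignores CPU used by non-database processes.

```python
# Values from the AWR report header above.
db_time_min = 10.03
elapsed_min = 2.02
cores = 36

# Average Active Sessions: DB Time accumulated per unit of wall-clock time.
aas = db_time_min / elapsed_min

# If AAS is far below the core count, the foreground sessions cannot be
# saturating the CPUs even if every active session were on CPU.
cpu_bound = aas >= cores

print(f"AAS = {aas:.1f} on {cores} cores -> CPU-bound: {'YES' if cpu_bound else 'NO'}")
```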

- 14. Next questions
  - What is DB Time over the interval?
  - What is DB CPU over the interval?
  - What is the (expected) Wait Time over the interval?
    - DB Time - DB CPU = Wait Time (expected)
  - What does ASH indicate?

- 15. Load Profile

  | Load Profile | Per Second | Per Transaction | Per Exec | Per Call |
  |---|---|---|---|---|
  | DB Time(s): | 5.0 | 150.4 | 0.01 | 3.13 |
  | DB CPU(s): | 2.0 | 60.8 | 0.00 | 1.27 |
  | Background CPU(s): | 0.0 | 0.4 | 0.00 | 0.00 |

  [Chart: DB Time (600 s) vs DB CPU (240 s) + Wait Time (360 s)]

  - DB Time = 600 secs (5 * 120)
  - DB CPU = 240 secs (2 * 120)
  - Wait Time = 360 secs (600 - 240)
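
The same totals as a short Python calculation: per-second Load Profile rates multiplied by the (rounded) 120-second interval, with the expected wait time taken from the model DB Time = DB CPU + Wait.

```python
elapsed_s = 120       # 2.02 min, rounded as on the slide
db_time_per_s = 5.0   # Load Profile: DB Time(s) per second
db_cpu_per_s = 2.0    # Load Profile: DB CPU(s) per second

db_time = db_time_per_s * elapsed_s  # total DB Time over the interval
db_cpu = db_cpu_per_s * elapsed_s    # total DB CPU over the interval
expected_wait = db_time - db_cpu     # model: DB Time = DB CPU + Wait

print(f"DB Time {db_time:.0f}s, DB CPU {db_cpu:.0f}s, expected wait {expected_wait:.0f}s")
```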

- 16. Adding measured wait times…

  [Chart: DB Time (600 s) vs CPU + Wait (240 s DB CPU + 470 s db file parallel read + 52 s db file sequential read = 762 s), leaving 162 s of excess wait]

  Top 10 Foreground Events by Total Wait Time (excerpt)

  | Event | Waits | Total Wait Time (sec) | Wait Avg (ms) | % DB time | Wait Class |
  |---|---|---|---|---|---|
  | db file parallel read | 139,819 | 469.8 | 3.36 | 78.1 | User I/O |
  | db file sequential read | 201,921 | 52.4 | 0.26 | 8.7 | User I/O |
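
Putting the measured waits next to DB CPU shows the over-accounting in seconds rather than percentages. The inputs are the Load Profile totals and the two dominant wait events; a positive `excess` means CPU + Wait claims more time than DB Time actually recorded.

```python
db_time = 600.0  # from the Load Profile (5.0 s/s * 120 s)
db_cpu = 240.0   # from the Load Profile (2.0 s/s * 120 s)
measured_wait = {
    "db file parallel read": 469.8,
    "db file sequential read": 52.4,
}

wait_total = sum(measured_wait.values())
accounted = db_cpu + wait_total
excess = accounted - db_time  # > 0 means CPU + Wait over-accounts DB Time

print(f"CPU + Wait = {accounted:.1f}s vs DB Time {db_time:.0f}s; excess {excess:.1f}s")
```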

- 17. What does ASH say?

  [Chart: DB Time (600 s) vs CPU + Wait (240 + 470 + 52 s) vs ASH (60 s CPU + 410 s db file parallel read + 80 s db file sequential read); the gap is labelled "CPU in wait"]

  | Slot Time (Duration) | Slot Count | Event | Event Count | % Event |
  |---|---|---|---|---|
  | 06:22:16 (2.0 min) | 55 | db file parallel read | 41 | 74.55 |
  | | | db file sequential read | 8 | 14.55 |
  | | | CPU + Wait for CPU | 6 | 10.91 |

  - ASH DB Time ~ 550 secs
  - ASH CPU is way off (60 secs vs 240 secs DB CPU)
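
Converting the ASH sample counts to time. The slide's ASH DB Time of about 550 seconds for 55 samples implies 10-second samples, which matches the 1-in-10 sampling of the AWR-persisted ASH (DBA_HIST_ACTIVE_SESS_HISTORY); treat that interval as an assumption if your report came from in-memory ASH, which samples every second.

```python
SAMPLE_INTERVAL_S = 10  # assumption: AWR-persisted ASH keeps 1 in 10 one-second samples

ash_counts = {
    "db file parallel read": 41,
    "db file sequential read": 8,
    "CPU + Wait for CPU": 6,
}
db_cpu = 240.0  # DB CPU from the Load Profile, for comparison

ash_seconds = {event: n * SAMPLE_INTERVAL_S for event, n in ash_counts.items()}
ash_db_time = sum(ash_seconds.values())
ash_cpu = ash_seconds["CPU + Wait for CPU"]

print(f"ASH DB Time ~ {ash_db_time}s, ASH CPU ~ {ash_cpu}s vs DB CPU {db_cpu:.0f}s")
```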

- 18. What is our conclusion?
  - “db file parallel read” is consuming significant CPU under wait
    - 162 / 470 ~ 34% of the “wait” is actually CPU
    - 162 * 1000 / 139,819 ~ 1.16 ms/wait
    - block counts >> 100 blocks ~ 1 MB ~ 300 MB/sec
  - Not technically a bug

  | Event | Waits | Total Wait Time (sec) | Wait Avg (ms) | % DB time | Wait Class |
  |---|---|---|---|---|---|
  | db file parallel read | 139,819 | 469.8 | 3.36 | 78.1 | User I/O |

  The model and reality do not always agree so well.
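
The two ratios in the conclusion, reproduced in Python. As on the slide, all of the 162 seconds of excess is attributed to "db file parallel read", since that event dominates the wait time.

```python
excess_s = 162.0              # CPU + Wait minus DB Time
parallel_read_wait_s = 469.8  # total wait time for db file parallel read
parallel_read_waits = 139_819 # number of db file parallel read waits

cpu_share_of_wait = excess_s / parallel_read_wait_s
cpu_ms_per_wait = excess_s * 1000 / parallel_read_waits

print(f"{cpu_share_of_wait:.0%} of the wait is CPU; ~{cpu_ms_per_wait:.2f} ms CPU per wait")
```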

- 19. Digging a bit further… OSSTAT

  Operating System Statistics (DB/Inst: DB12C/db12cn1)

  | Statistic | Value | End Value |
  |---|---|---|
  | BUSY_TIME | 72,614 | |
  | IDLE_TIME | 795,376 | |
  | IOWAIT_TIME | 36,343 | |
  | SYS_TIME | 45,030 | |
  | USER_TIME | 27,559 | |
  | LOAD | 7 | 9 |

  [Chart: Oracle AWR (DB CPU 240 s, DB I/O wait 360 s) vs OSSTAT (USER_TIME 275 s, IOWAIT_TIME 363 s, SYS_TIME 450 s)]

  - 1088 / 120 ~ 9.1 AAS
  - SYS_TIME? Non-Oracle process CPU doing I/O?
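
The OSSTAT `*_TIME` statistics are reported in hundredths of a second, summed over all CPUs. This sketch converts them to seconds, checks that (BUSY + IDLE) / CPUs comes back to roughly the 120-second interval, and repeats the slide's USER + SYS + IOWAIT comparison; the sum divided by 120 s works out to about 9.1 average active sessions.

```python
CENTISECONDS_PER_SECOND = 100  # V$OSSTAT *_TIME statistics are in hundredths of a second
ELAPSED_S = 120                # report interval, rounded
NUM_CPUS = 72                  # from the report header

osstat = {
    "BUSY_TIME": 72_614,
    "IDLE_TIME": 795_376,
    "IOWAIT_TIME": 36_343,
    "SYS_TIME": 45_030,
    "USER_TIME": 27_559,
}
secs = {name: cs / CENTISECONDS_PER_SECOND for name, cs in osstat.items()}

# Sanity check: BUSY + IDLE is summed over all CPUs, so dividing by the CPU
# count should give back roughly the elapsed time.
interval_check = (secs["BUSY_TIME"] + secs["IDLE_TIME"]) / NUM_CPUS

# The slide's comparison: USER + SYS + IOWAIT, expressed as average active sessions.
os_total = secs["USER_TIME"] + secs["SYS_TIME"] + secs["IOWAIT_TIME"]
os_aas = os_total / ELAPSED_S

print(f"interval ~ {interval_check:.1f}s; USER+SYS+IOWAIT = {os_total:.0f}s ~ {os_aas:.1f} AAS")
```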

- 20. So what is Mr SLOB up to?
  - Benchmarking: pounding on the parallel read wait event
  - Testing advanced I/O interfaces servicing this event?
  - SSD storage using I/O slaves, perhaps? (non-DB sys time)
  - If we factor in ALL reads and ALL CPU:
    - 1000 * (450 + 275 total CPU secs) / 15,496,576 total reads
    - ~ 0.05 milliseconds CPU per random read
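
The per-read CPU estimate as a one-line calculation. Note that the CPU figures are host-wide OSSTAT values (SYS_TIME and USER_TIME, rounded to seconds), so they include any non-Oracle CPU spent servicing the I/O; the read count is taken as given on the slide.

```python
sys_cpu_s = 450           # OSSTAT SYS_TIME, seconds (rounded)
user_cpu_s = 275          # OSSTAT USER_TIME, seconds (rounded)
total_reads = 15_496_576  # total reads, as quoted on the slide

cpu_ms_per_read = 1000 * (sys_cpu_s + user_cpu_s) / total_reads
print(f"~{cpu_ms_per_read:.2f} ms CPU per random read")
```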

- 21. Instrumentation issues and symptoms

  | Symptom | Possible issue |
  |---|---|
  | DB CPU >> ASH CPU (and significant wait time) | CPU used within a wait (this was the issue here) |
  | ASH CPU >> DB CPU | System CPU-bound (ASH CPU includes run-queue time) |
  | DB Time >> DB CPU + Wait (and not CPU-bound) | Un-instrumented wait (in a call, but not in a wait and not on CPU) |
  | DB Time >> ASH DB Time | 1. Double-counted DB Time; 2. ASH dropped samples |
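
The symptom table can be turned into a rough sanity check of the kind AWR and ADDM could run. This is a sketch, not an Oracle feature: `TimeSummary` and `diagnose` are hypothetical names, and the `ratio` used for ">>" and the `sig_wait_frac` used for "significant wait time" are arbitrary illustrative thresholds. Fed with this report's figures, it flags only CPU used within a wait.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class TimeSummary:
    db_time: float      # seconds, from the Load Profile / time model
    db_cpu: float       # seconds, OS-measured foreground CPU
    wait: float         # seconds, sum of non-idle foreground waits
    ash_db_time: float  # seconds, ASH sample count * sample interval
    ash_cpu: float      # seconds, ASH "ON CPU" samples * interval
    cpu_bound: bool

def diagnose(s: TimeSummary, ratio: float = 1.5, sig_wait_frac: float = 0.2) -> List[str]:
    """Map the symptom table to possible issues (hypothetical thresholds)."""
    findings = []
    if s.db_cpu > ratio * s.ash_cpu and s.wait > sig_wait_frac * s.db_time:
        findings.append("CPU used within a wait")
    if s.ash_cpu > ratio * s.db_cpu:
        findings.append("system CPU-bound (ASH CPU includes run-queue time)")
    if s.db_time > ratio * (s.db_cpu + s.wait) and not s.cpu_bound:
        findings.append("un-instrumented wait")
    if s.db_time > ratio * s.ash_db_time:
        findings.append("double-counted DB Time or dropped ASH samples")
    return findings

if __name__ == "__main__":
    report = TimeSummary(db_time=600, db_cpu=240, wait=522.2,
                         ash_db_time=550, ash_cpu=60, cpu_bound=False)
    print(diagnose(report))
```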

- 22. Final advice
  - Don’t believe the unbelievable
  - Trust DB Time and DB CPU the most
  - Be wary of ASH CPU and measured wait times
  - Always get an ASH report with the AWR report
  - Don’t ponder details if the big picture is not clear