Azure Stream Analytics

- 1. #sqlsatParma #sqlsat462 November 28, 2015 Azure Stream Analytics Marco Parenzan @marco_parenzan

- 4. Meet Marco Parenzan | @marco_parenzan Microsoft MVP 2015 for Azure Develop modern distributed and cloud solutions [email protected] Passion for speaking and inspiring programmers, students, people www.innovazionefvg.net I’m a developer!

- 5. Agenda: Why a developer talks about analytics; Analytics in a modern world; Introduction to Azure Stream Analytics; Stream Analytics Query Language (SAQL); Handling time in Azure Stream Analytics; Scaling Analytics; Conclusions

- 6. ANALYTICS IN A MODERN WORLD

- 7. What is Analytics? From Wikipedia: “Analytics is the discovery and communication of meaningful patterns in data. Especially valuable in areas rich with recorded information, analytics relies on the simultaneous application of statistics, computer programming and operations research to quantify performance. Analytics often favors data visualization to communicate insight.”

- 8. IoT proof of concept

- 9. Event-based systems An event is “something happened… …somewhere… …sometime!” Events arrive at different times, i.e. they have unique timestamps. Events arrive at different rates (events/sec): in any given period of time there may be 0, 1 or more events.

- 10. Azure Service Bus Azure Service Bus Relay Queue Topic Notification Hub Event Hub NAT and Firewall Traversal Service Request/Response Services Unbuffered with TCP Throttling Transactional Cloud AMQP/HTTP Broker High-Scale, High-Reliability Messaging Sessions, Scheduled Delivery, etc. Transactional Message Distribution Up to 2000 subscriptions per Topic Up to 2K/100K filter rules per subscription High-scale notification distribution Most mobile push notification services Millions of notification targets Hyper Scale. A Million Clients. Concurrent.

- 11. Azure Event Hubs Event Producers > 1M Producers > 1GB/sec Aggregate Throughput Direct Hash Throughput Units: • 1 ≤ TUs ≤ Partition Count • TU: 1 MB/s writes, 2 MB/s reads
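
As a rough illustration of the throughput-unit rule on this slide, the sketch below computes the smallest TU count that covers a given ingress and egress rate, using only the per-TU limits quoted above (1 MB/s writes, 2 MB/s reads) and the bound 1 ≤ TUs ≤ partition count. It ignores any other per-TU limits and later changes to Event Hubs tiers; the function name `required_tus` is hypothetical.

```python
import math

# Per-TU limits as stated on the slide (assumed still applicable)
INGRESS_MB_PER_TU = 1.0  # writes
EGRESS_MB_PER_TU = 2.0   # reads


def required_tus(ingress_mb_s: float, egress_mb_s: float, partition_count: int) -> int:
    """Smallest TU count covering both directions, within 1 <= TUs <= partition_count."""
    needed = max(
        math.ceil(ingress_mb_s / INGRESS_MB_PER_TU),
        math.ceil(egress_mb_s / EGRESS_MB_PER_TU),
        1,  # at least one TU
    )
    if needed > partition_count:
        raise ValueError(f"{needed} TUs needed but only {partition_count} partitions")
    return needed


# Ingress needs 4 TUs, egress needs 3, so ingress dominates
print(required_tus(3.5, 5.0, partition_count=16))
```

Because the upper bound is the partition count, the partition count chosen when the hub is created effectively caps how far the hub can scale.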

- 12. Microsoft Azure IoT Services Devices Device Connectivity Storage Analytics Presentation & Action Event Hubs SQL Database Machine Learning App Service Service Bus Table/Blob Storage Stream Analytics Power BI External Data Sources DocumentDB HDInsight Notification Hubs External Data Sources Data Factory Mobile Services BizTalk Services

- 13. ANALYTICS IN A MODERN WORLD

- 14. Traditional analytics Everything around us produces data: devices, sensors, infrastructures and applications. Traditional Business Intelligence first collects data and analyzes it afterwards, typically with 1 day of latency (the day after). But we live in a fast-paced world: social media, Internet of Things, just-in-time production. Offline data is of little use: for many organizations, capturing and storing event data for later analysis is no longer enough. Data at Rest

- 15. Analytics in a modern world We work with streaming data. We want to monitor and analyze data in near real time, typically with a latency of a few seconds up to a few minutes. So we don’t have time to stop, copy the data and analyze it; we have to work with streams of data. Data in motion

- 16. Scenarios Real-time ingestion, processing and archiving of data Real-time Analytics Connected devices (Internet of Things)

- 17. Why Stream Analytics in the Cloud? Not all data is local. Event data is already in the Cloud. Event data is globally distributed. Bring the processing to the data, not the data to the processing.

- 18. Apply cloud principles Focus on building solutions (PaaS or SaaS) without having to manage complex infrastructure and software: no hardware or other up-front costs, and no time-consuming installation or setup. Elastic scale, where resources are efficiently allocated and paid for as requested. Scale to any volume of data while still achieving high throughput, low latency, and guaranteed resiliency. Up and running in minutes.

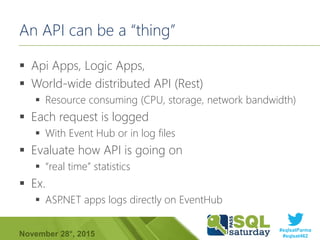

- 20. An API can be a “thing” API Apps, Logic Apps, world-wide distributed (REST) APIs. Resource consuming (CPU, storage, network bandwidth). Each request is logged, with Event Hub or in log files. Evaluate how the API is doing with “real-time” statistics. E.g. ASP.NET apps log directly to Event Hub.

- 21. INTRODUCTION TO AZURE STREAM ANALYTICS

- 22. What is Azure Stream Analytics? Azure Stream Analytics is a cost-effective event processing engine. Developers describe their desired transformations in a SQL-like syntax. It is a stream processing engine integrated with a scalable event queuing system like Azure Event Hubs.

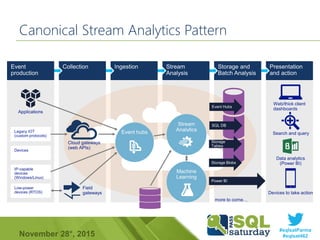

- 23. Canonical Stream Analytics Pattern

- 24. Real-time analytics Intake millions of events per second (up to 1 GB/s) at variable loads, with scale that accommodates variable loads. Low processing latency, auto adaptive (sub-second to seconds). Transform, augment, correlate, temporal operations: correlate between different streams, or with reference data; find patterns or lack of patterns in data in real time.

- 25. No challenges with scale Elasticity of the cloud for scale out Spin up any number of resources on demand Scale from small to large when required Distributed, scale-out architecture

- 26. Fully managed No hardware (PaaS offering) Bypasses deployment expertise No software provisioning and maintenance No performance tuning Spin up any number of resources on demand Expand your business globally leveraging Azure regions

- 27. Mission critical availability Guaranteed event delivery: guaranteed not to lose events or produce incorrect output; guaranteed “once and only once” delivery of events; ability to replay events. Guaranteed business continuity: guaranteed uptime (three nines of availability); auto-recovery from failures; built-in state management for fast recovery. Effective audits: privacy and security properties of solutions are evident; Azure integration for monitoring and ops alerting.

- 28. Lower costs Efficiently pay only for usage: architected for multi-tenancy, not paying for idle resources. Typical cloud expense model: low startup costs, ability to incrementally add resources, reduce costs when business needs change.

- 29. Rapid development SQL-like language High-level: focus on the stream analytics solution Concise: less code to maintain First-class support for event streams and reference data Built-in temporal semantics Built-in temporal windowing and joining Simple policy configuration to manage out-of-order events and late arrivals

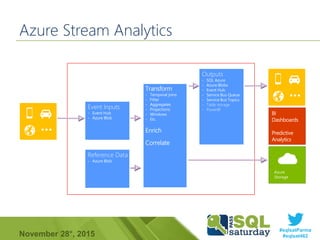

- 30. Azure Stream Analytics Data Source, Collect, Process, Consume, Deliver. Event Inputs: Event Hub, Azure Blob. Transform: temporal joins, filter, aggregates, projections, windows, etc. Enrich, Correlate. Outputs: SQL Azure, Azure Blobs, Event Hub, Service Bus Queue, Service Bus Topics, Table storage, PowerBI. Azure Storage. • Temporal Semantics • Guaranteed delivery • Guaranteed up time. Reference Data: Azure Blob.

- 31. Input sources for a Stream Analytics Job • Currently supported input Data Streams are Azure Event Hub, Azure IoT Hub and Azure Blob Storage. Multiple input Data Streams are supported. • Advanced options let you configure how the Job will read data from the input blob (which folders to read from, when a blob is ready to be read, etc.). • Reference data is usually static or changes very slowly over time. • It must be stored in Azure Blob Storage. • It is cached for performance.

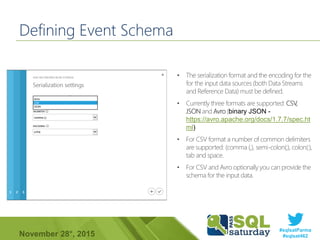

- 32. Defining Event Schema • The serialization format and the encoding for the input data sources (both Data Streams and Reference Data) must be defined. • Currently three formats are supported: CSV, JSON and Avro (a compact binary format whose schemas are defined in JSON - https://avro.apache.org/docs/1.7.7/spec.html). • For CSV format a number of common delimiters are supported: comma (,), semicolon (;), colon (:), tab and space. • For CSV and Avro you can optionally provide the schema for the input data.

- 33. Output for Stream Analytics Jobs Currently supported output data stores: Azure Blob storage: creates log files with temporal query results; ideal for archiving. Azure Table storage: more structured than blob storage, easier to set up than SQL database and durable (in contrast to event hub). SQL database: stores results in an Azure SQL Database table; ideal as source for traditional reporting and analysis. Event hub: sends an event to an event hub; ideal to generate actionable events such as alerts or notifications. Service Bus Queue: sends an event on a queue; ideal for sending events sequentially. Service Bus Topics: sends an event to subscribers; ideal for sending events to many consumers. PowerBI.com: ideal for near real time reporting! DocumentDB: ideal if you work with JSON and object graphs.

- 35. STREAM ANALYTICS QUERY LANGUAGE (SAQL)

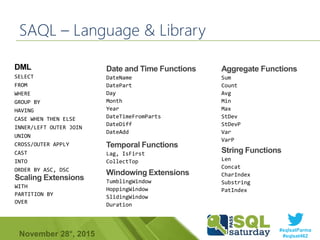

- 36. SAQL – Language & Library SELECT FROM WHERE GROUP BY HAVING CASE WHEN THEN ELSE INNER/LEFT OUTER JOIN UNION CROSS/OUTER APPLY CAST INTO ORDER BY ASC, DESC WITH PARTITION BY OVER DateName DatePart Day Month Year DateTimeFromParts DateDiff DateAdd TumblingWindow HoppingWindow SlidingWindow Duration Sum Count Avg Min Max StDev StDevP Var VarP Len Concat CharIndex Substring PatIndex Lag, IsFirst, CollectTop

- 37. Supported types: bigint: integers in the range -2^63 (-9,223,372,036,854,775,808) to 2^63-1 (9,223,372,036,854,775,807). float: floating point numbers in the range -1.79E+308 to -2.23E-308, 0, and 2.23E-308 to 1.79E+308. nvarchar(max): text values, comprised of Unicode characters (a length other than max is not supported). datetime: a date combined with a time of day with fractional seconds, based on a 24-hour clock and relative to UTC (time zone offset 0). Inputs will be cast into one of these types. We can control these types with a CREATE TABLE statement: this does not create a table, but just a data type mapping for the inputs.

- 38. INTO clause Pipelining data from input to output. Without an INTO clause we write to the destination named ‘output’. We can have multiple outputs: with the INTO clause we can choose the appropriate destination for every SELECT, e.g. send events to blob storage for big data analysis, but send special events to an event hub for alerting. SELECT UserName, TimeZone INTO Output FROM InputStream WHERE Topic = 'XBox'
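
To make the routing idea concrete, here is a Python simulation (not ASA code) of a job with two statements over one input: every event goes to a hypothetical `BlobArchive` output for later analysis, and only 'XBox' events are projected and sent to a hypothetical `AlertHub` output, mirroring the blob-plus-event-hub example above. Field names follow the slide's query; the sample events are made up.

```python
from collections import defaultdict


def run_job(events):
    """Simulate two SELECT ... INTO statements reading the same input stream.

    'BlobArchive' and 'AlertHub' are hypothetical output aliases.
    """
    outputs = defaultdict(list)
    for event in events:
        # SELECT * INTO BlobArchive FROM InputStream
        outputs["BlobArchive"].append(event)
        # SELECT UserName, TimeZone INTO AlertHub FROM InputStream WHERE Topic = 'XBox'
        if event.get("Topic") == "XBox":
            outputs["AlertHub"].append(
                {"UserName": event["UserName"], "TimeZone": event["TimeZone"]}
            )
    return dict(outputs)


events = [
    {"UserName": "ann", "TimeZone": "UTC+1", "Topic": "XBox"},
    {"UserName": "bob", "TimeZone": "UTC-5", "Topic": "Azure"},
]
result = run_job(events)
print(len(result["BlobArchive"]), result["AlertHub"])
```

Each statement sees the full input independently, which is why one event can land in more than one output.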

- 39. WHERE clause Specifies the conditions for the rows returned in the result set for a SELECT statement, query expression, or subquery There is no limit to the number of predicates that can be included in a search condition. SELECT UserName, TimeZone FROM InputStream WHERE Topic = 'XBox'

- 40. JOIN We can combine multiple event streams, or an event stream with reference data, via a join (inner join) or a left outer join. In the join clause we can specify the time window in which we want the join to take place, using a special version of DATEDIFF.

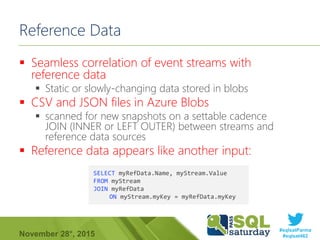

- 41. Reference Data Seamless correlation of event streams with reference data Static or slowly-changing data stored in blobs CSV and JSON files in Azure Blobs scanned for new snapshots on a settable cadence JOIN (INNER or LEFT OUTER) between streams and reference data sources Reference data appears like another input: SELECT myRefData.Name, myStream.Value FROM myStream JOIN myRefData ON myStream.myKey = myRefData.myKey
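
A stream-to-reference join behaves much like a lookup against an in-memory table. The Python sketch below models that, reusing the names from the slide's query (`myKey`, `Name`, `Value`); the sample rows are hypothetical, each key is assumed to appear at most once in the reference snapshot, and the snapshot is treated as static, so there is no time bound in the lookup.

```python
def join_reference(stream, ref_data, key="myKey", left_outer=False):
    """Join stream events with a cached reference snapshot on `key`."""
    # Reference data is small and slowly changing, so index it once in memory
    lookup = {row[key]: row for row in ref_data}
    for event in stream:
        ref = lookup.get(event[key])
        if ref is not None:
            yield {"Name": ref["Name"], "Value": event["Value"]}
        elif left_outer:
            # LEFT OUTER JOIN keeps unmatched events with a NULL (None) name
            yield {"Name": None, "Value": event["Value"]}


ref = [{"myKey": 1, "Name": "Parma"}, {"myKey": 2, "Name": "Trieste"}]
stream = [{"myKey": 1, "Value": 10}, {"myKey": 3, "Value": 7}]
print(list(join_reference(stream, ref)))
print(list(join_reference(stream, ref, left_outer=True)))
```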

- 42. Reference data tips Currently reference data cannot be refreshed automatically: you need to stop the job and specify a new snapshot of the reference data. Reference data can only come from Blob storage. In practice, use services like Azure Data Factory to move data from Azure data sources to Azure Blob Storage. Have you followed Francesco Diaz’s session?

- 43. UNION SELECT TollId, ENTime AS Time, LicensePlate FROM EntryStream TIMESTAMP BY ENTime UNION SELECT TollId, EXTime AS Time, LicensePlate FROM ExitStream TIMESTAMP BY EXTime

  EntryStream:

  | TollId | EntryTime | LicensePlate | … |
  |---|---|---|---|
  | 1 | 2014-09-10 12:01:00.000 | JNB7001 | … |
  | 1 | 2014-09-10 12:02:00.000 | YXZ1001 | … |
  | 3 | 2014-09-10 12:02:00.000 | ABC1004 | … |

  ExitStream:

  | TollId | ExitTime | LicensePlate |
  |---|---|---|
  | 1 | 2009-06-25 12:03:00.000 | JNB7001 |
  | 1 | 2009-06-25 12:03:00.000 | YXZ1001 |
  | 3 | 2009-06-25 12:04:00.000 | ABC1004 |

  Result:

  | TollId | Time | LicensePlate |
  |---|---|---|
  | 1 | 2014-09-10 12:01:00.000 | JNB7001 |
  | 1 | 2014-09-10 12:02:00.000 | YXZ1001 |
  | 3 | 2014-09-10 12:02:00.000 | ABC1004 |
  | 1 | 2009-06-25 12:03:00.000 | JNB7001 |
  | 1 | 2009-06-25 12:03:00.000 | YXZ1001 |
  | 3 | 2009-06-25 12:04:00.000 | ABC1004 |
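
The Python sketch below mimics this UNION: both inputs are projected onto the common (TollId, Time, LicensePlate) schema, renaming ENTime and EXTime to Time, and then combined. It follows the rule stated in the speaker notes for this slide (plain UNION removes duplicate rows, UNION ALL keeps them); the function name and the `all_rows` flag are hypothetical, and the sample rows come from the tables above.

```python
def union(entry_rows, exit_rows, all_rows=False):
    """Combine EntryStream and ExitStream rows into (TollId, Time, LicensePlate)."""
    # Project both inputs onto the common schema, renaming ENTime/EXTime to Time
    combined = [(r["TollId"], r["ENTime"], r["LicensePlate"]) for r in entry_rows]
    combined += [(r["TollId"], r["EXTime"], r["LicensePlate"]) for r in exit_rows]
    if all_rows:
        return combined  # UNION ALL: keep duplicates
    # Plain UNION: drop duplicate rows, keeping the first occurrence
    return list(dict.fromkeys(combined))


entries = [
    {"TollId": 1, "ENTime": "2014-09-10 12:01:00.000", "LicensePlate": "JNB7001"},
    {"TollId": 3, "ENTime": "2014-09-10 12:02:00.000", "LicensePlate": "ABC1004"},
]
exits = [{"TollId": 1, "EXTime": "2009-06-25 12:03:00.000", "LicensePlate": "JNB7001"}]
for row in union(entries, exits):
    print(row)
```

Note the difference from a JOIN: UNION stacks rows from both inputs, while a join combines columns from matching rows.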

- 44. STORING, FILTERING AND DECODING demo

- 45. HANDLING TIME IN AZURE STREAM ANALYTICS

- 46. Traditional queries Traditional querying assumes the data doesn’t change while you are querying it: we query a fixed state. If the data is changing, snapshots and transactions ‘freeze’ the data while we query it. Since we query a finite state, our query should finish in a finite amount of time. (Diagram: table → query → result table)

- 47. A different kind of query When analyzing a stream of data, we deal with a potentially infinite amount of data. As a consequence, our query will never end! To solve this problem, most queries use time windows. (Diagram: stream → temporal query → result stream)

- 48. Arrival Time vs Application Time Every event that flows through the system comes with a timestamp that can be accessed via System.Timestamp. This timestamp is either the arrival time or an application time that the user can specify in the query; a record can have multiple timestamps associated with it. The arrival time has different meanings based on the input source: for events from Azure Service Bus Event Hub, it is the timestamp given by the Event Hub; for Blob storage, it is the blob’s last modified time. If the user wants to use an application time, they can do so using the TIMESTAMP BY keyword. Data are sorted by the timestamp column.

- 49. Temporal Joins SELECT Make FROM EntryStream ES TIMESTAMP BY EntryTime JOIN ExitStream EX TIMESTAMP BY ExitTime ON ES.Make = EX.Make AND DATEDIFF(second, ES, EX) BETWEEN 0 AND 10 (Figure: Toll Entry and Toll Exit timelines from 0 to 25 seconds, each with Mazda, BMW, Honda and Volvo events.)
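
The DATEDIFF bound can be checked by hand with a small Python model: each event is reduced to (make, timestamp in seconds), and a pair matches only when the makes agree and the exit happens 0 to 10 seconds after the entry. The timestamps below are hypothetical, chosen to reproduce the outcomes described in the speaker notes: Mazda too far apart, Honda exiting before it enters.

```python
def temporal_join(entries, exits, max_seconds=10):
    """Match (make, t) pairs where 0 <= t_exit - t_entry <= max_seconds."""
    matches = []
    for make_in, t_in in entries:
        for make_out, t_out in exits:
            diff = t_out - t_in  # plays the role of DATEDIFF(second, ES, EX)
            # BETWEEN 0 AND 10 is inclusive on both ends
            if make_in == make_out and 0 <= diff <= max_seconds:
                matches.append((make_in, t_in, t_out))
    return matches


entries = [("Mazda", 1), ("BMW", 5), ("Honda", 12), ("Volvo", 14)]
exits = [("Mazda", 20), ("BMW", 9), ("Honda", 10), ("Volvo", 21)]
print(temporal_join(entries, exits))
```

The nested loop is only for clarity. The time bound is what lets a streaming engine keep just a few seconds of each input in memory instead of the whole history.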

- 50. Windowing Concepts A common requirement is to perform some set-based operation (count, aggregation etc.) over events that arrive within a specified period of time. GROUP BY returns data aggregated over a certain subset of data. How do we define a subset in a stream? Windowing functions! Each GROUP BY requires a windowing function.

- 51. Three types of windows Every window operation outputs events at the end of the window. The output of the window is a single event based on the aggregate function used, and it carries the timestamp of the window. All windows have a fixed length. Tumbling window: aggregate per time interval. Hopping window: scheduled overlapping windows. Sliding window: window constantly re-evaluated.

- 52. Tumbling Window Tumbling windows: • Repeat • Are non-overlapping. An event can belong to only one tumbling window. Query: Count the total number of vehicles entering each toll booth every interval of 20 seconds. SELECT TollId, COUNT(*) FROM EntryStream TIMESTAMP BY EntryTime GROUP BY TollId, TumblingWindow(second, 20) (Figure: a 20-second tumbling window over a timeline of events, time in seconds.)
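
A tumbling window amounts to bucketing each event by integer division of its timestamp. The sketch below counts events per (TollId, window end) for 20-second windows. It uses a half-open convention, [start, end), so an event exactly on a boundary goes to the later window; the service's own boundary convention may differ. Event data is hypothetical.

```python
from collections import Counter


def tumbling_counts(events, size):
    """Count (toll_id, t) events per (toll_id, window_end), windows of `size` seconds.

    Sketch convention: window k covers [k*size, (k+1)*size) and, as on the slide,
    its output carries the timestamp of the window end.
    """
    counts = Counter()
    for toll_id, t in events:
        window_end = (t // size + 1) * size
        counts[(toll_id, window_end)] += 1
    return dict(counts)


events = [(1, 3), (1, 15), (2, 18), (1, 25)]
print(tumbling_counts(events, 20))
```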

- 53. Hopping Window Hopping windows: • Repeat • Can overlap • Hop forward in time by a fixed period. Same as a tumbling window if hop size = window size. Events can belong to more than one hopping window. QUERY: Count the number of vehicles entering each toll booth every interval of 20 seconds; update results every 10 seconds. SELECT COUNT(*), TollId FROM EntryStream TIMESTAMP BY EntryTime GROUP BY TollId, HoppingWindow(second, 20, 10) (Figure: a 20-second hopping window with a 10-second “hop”.)
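
The same bucketing idea extends to hopping windows: window ends are aligned to multiples of the hop, and an event is counted in every window whose span covers it. The sketch uses the same half-open [end - size, end) convention as a simplifying assumption. With hop equal to size it degenerates to a tumbling window, as the slide says.

```python
from collections import Counter


def hopping_counts(events, size, hop):
    """Count (toll_id, t) events per (toll_id, window_end).

    Windows have length `size`, end on multiples of `hop`, and cover
    [end - size, end) in this sketch.
    """
    counts = Counter()
    for toll_id, t in events:
        # First window end strictly after t, aligned to the hop
        end = (t // hop + 1) * hop
        # Keep counting while the window still starts at or before t
        while end - size <= t:
            counts[(toll_id, end)] += 1
            end += hop
    return dict(counts)


# HoppingWindow(second, 20, 10): each event lands in two windows
print(hopping_counts([(1, 3), (1, 15)], size=20, hop=10))
```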

- 54. Sliding Window Sliding window: • Continuously moves forward by an ε (epsilon) • Produces an output only when an event occurs • Every window will have at least one event. Events can belong to more than one sliding window. Query: Find all the toll booths which have served more than 10 vehicles in the last 20 seconds. SELECT TollId, Count(*) FROM EntryStream ES GROUP BY TollId, SlidingWindow(second, 20) HAVING Count(*) > 10 (Figure: a 20-second sliding window.)
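
A sliding window can be sketched with one deque of timestamps per toll booth: each new event is appended, events 20 or more seconds older are evicted, and the HAVING condition is checked. This is a simplification that evaluates only when an event enters. A count can only rise above the threshold at such a moment, but the sketch does not emit the extra rows the service may produce when an event leaves the window while the count is still above the threshold. Events are assumed sorted by time; the sample data is hypothetical.

```python
from collections import defaultdict, deque


def sliding_alerts(events, size, threshold):
    """Report (toll_id, t, count) whenever the last `size` seconds hold > threshold events.

    `events` are (toll_id, t) pairs sorted by t; the window is (t - size, t].
    """
    windows = defaultdict(deque)
    alerts = []
    for toll_id, t in events:
        window = windows[toll_id]
        window.append(t)
        # Evict events that are `size` or more seconds older than the current one
        while window[0] <= t - size:
            window.popleft()
        if len(window) > threshold:  # HAVING Count(*) > threshold
            alerts.append((toll_id, t, len(window)))
    return alerts


events = [(1, 0), (1, 5), (1, 10), (1, 30), (1, 31), (1, 32)]
print(sliding_alerts(events, size=20, threshold=2))
```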

- 55. (Figure: a 20-second sliding window re-evaluated each time an event, such as «5», «1», «9» or «8», enters or exits the window.)

- 56. TEMPORAL TASKS demo

- 57. SCALING ANALYTICS

- 58. Streaming Unit A streaming unit is a measure of the computing resources available for processing a Job. A streaming unit can process up to 1 MB/s. By default every job consists of 1 streaming unit. The total number of streaming units needed depends on the rate of incoming events and the complexity of the query.

- 59. Multiple steps, multiple outputs A query can have multiple steps to enable pipeline execution. A step is a sub-query defined using WITH (“common table expression”). The only query outside of the WITH keyword is also counted as a step. Steps can be used to develop complex queries more elegantly by creating an intermediary named result. Each step’s output can be sent to multiple output targets using INTO. WITH Step1 AS ( SELECT Count(*) AS CountTweets, Topic FROM TwitterStream PARTITION BY PartitionId GROUP BY TumblingWindow(second, 3), Topic, PartitionId ), Step2 AS ( SELECT Avg(CountTweets) FROM Step1 GROUP BY TumblingWindow(minute, 3) ) SELECT * INTO Output1 FROM Step1 SELECT * INTO Output2 FROM Step2 SELECT * INTO Output3 FROM Step2
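
The two-step query can be modeled as two ordinary functions chained together, with the final dictionary playing the role of the three INTO targets. This Python sketch simplifies the slide's query: it drops PARTITION BY, uses a half-open [start, end) window convention, and stamps each Step1 row with its window end so Step2 can window over it. Function names and tweet data are hypothetical.

```python
from collections import Counter, defaultdict


def window_end(t, size):
    # Sketch convention: [k*size, (k+1)*size), stamped with the window end
    return (t // size + 1) * size


def step1(tweets, size=3):
    """COUNT(*) AS CountTweets per Topic per 3-second tumbling window."""
    counts = Counter((topic, window_end(t, size)) for topic, t in tweets)
    return [{"Topic": topic, "Time": end, "CountTweets": n}
            for (topic, end), n in counts.items()]


def step2(rows, size=180):
    """AVG(CountTweets) per 3-minute tumbling window over Step1's output."""
    groups = defaultdict(list)
    for row in rows:
        groups[window_end(row["Time"], size)].append(row["CountTweets"])
    return [{"Time": end, "AvgCount": sum(c) / len(c)} for end, c in groups.items()]


def run(tweets):
    s1 = step1(tweets)
    s2 = step2(s1)
    # One step can feed several outputs, like the three INTO clauses
    return {"Output1": s1, "Output2": s2, "Output3": s2}


out = run([("azure", 1), ("azure", 2), ("azure", 4), ("sql", 5)])
print(out["Output1"])
print(out["Output2"])
```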

- 60. Scaling Concepts – Partitions When a query is partitioned, input events are processed and aggregated in separate partition groups, and output events are produced for each partition group. To read from Event Hubs, ensure that the number of partitions matches. The query within the step must have the PARTITION BY keyword. If your input is a partitioned event hub, we can write partitioned queries and partitioned subqueries (WITH clause). A non-partitioned query with a 3-fold partitioned subquery can use up to (1 + 3) × 6 = 24 streaming units, since each non-partitioned step and each partition can use up to 6 streaming units. SELECT Count(*) AS Count, Topic FROM TwitterStream PARTITION BY PartitionId GROUP BY TumblingWindow(minute, 3), Topic, PartitionId (Diagram: Event Hub partitions feeding Query Result1, Query Result2 and Query Result3.)
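
The streaming-unit ceiling for a multi-step query is a count of units of parallelism multiplied by a per-unit cap. The sketch below assumes, as the Stream Analytics scaling documentation of the time described, that each non-partitioned step and each partition of a partitioned step can use at most 6 streaming units. Treat that constant as an assumption to check against current limits; the function name is hypothetical.

```python
# Assumption: per-step / per-partition SU cap from the service docs of the time
SU_PER_STEP_OR_PARTITION = 6


def max_streaming_units(non_partitioned_steps, partitioned_step_partitions):
    """Upper bound on SUs for a job.

    Every non-partitioned step counts once; every partitioned step counts once
    per partition. `partitioned_step_partitions` lists each partitioned step's
    partition count.
    """
    units = non_partitioned_steps + sum(partitioned_step_partitions)
    return units * SU_PER_STEP_OR_PARTITION


# Non-partitioned outer query + one 3-fold partitioned subquery: (1 + 3) * 6
print(max_streaming_units(1, [3]))
```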

- 61. Out of order inputs Event Hub guarantees monotonicity of the timestamp on each partition of the Event Hub. All events from all partitions are merged in timestamp order, so there will be no out-of-order events. When it is important for you to use the sender's timestamp, so that a timestamp from the event payload is chosen using TIMESTAMP BY, several sources of disorder can be introduced: producers of the events have clock skews; network delay from the producers sending the events to Event Hub; clock skews between Event Hub partitions. Do we skip them (drop) or do we pretend they happened just now (adjust)?

- 62. Handling out of order events On the configuration tab, you will find the following defaults. Using 0 seconds as the out-of-order tolerance window means you assert all events are in order all the time. To allow ASA to correct the disorder, you can specify a non-zero out-of-order tolerance window size. ASA will buffer events up to that window and reorder them using the user-chosen timestamp before applying the temporal transformation. Because of the buffering, the side effect is that the output is delayed by the same amount of time. As a result, you will need to tune the value to reduce the number of out-of-order events while keeping the latency low.
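
To see why a non-zero tolerance delays output, here is a minimal Python sketch of one plausible buffering scheme, not ASA's actual implementation. Events are held in a min-heap and released only once the largest timestamp seen so far is `tolerance` seconds past them. An event that arrives later than that is either dropped or adjusted to the current release point, matching the drop/adjust choice on the previous slide. The function name and data are hypothetical.

```python
import heapq


def reorder(timestamps, tolerance, policy="adjust"):
    """Reorder application timestamps (given in arrival order) within `tolerance` seconds."""
    buffer, output = [], []
    max_seen = float("-inf")
    for ts in timestamps:
        max_seen = max(max_seen, ts)
        release_point = max_seen - tolerance
        if ts < release_point:
            # Later than the tolerance window allows
            if policy == "drop":
                continue
            ts = release_point  # "adjust": move it to the earliest allowed time
        heapq.heappush(buffer, ts)
        # Release everything that no future (non-late) event can precede
        while buffer and buffer[0] <= release_point:
            output.append(heapq.heappop(buffer))
    # End of input: flush whatever is still buffered, in order
    while buffer:
        output.append(heapq.heappop(buffer))
    return output


arrivals = [1, 3, 2, 10, 4, 11]
print(reorder(arrivals, tolerance=5))
print(reorder(arrivals, tolerance=5, policy="drop"))
```

The larger `tolerance` is, the fewer events are dropped or adjusted, but every event waits in the buffer until the stream has moved `tolerance` seconds past it. That is the latency trade-off the slide describes.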

- 63. STRUCTURING AND SCALING QUERY demo

- 65. Summary Azure Stream Analytics is the PaaS solution for Analytics on streaming data It is programmable with a SQL-like language Handling time is a special and central feature Scale with cloud principles: elastic, self service, multitenant, pay per use More questions: Other solutions Pricing What to do with that data? Futures

- 66. Microsoft real-time stream processing options

- 67. Apache Storm (in HDInsight) Apache Storm is a distributed, fault-tolerant, open source real-time event processing solution. Storm was originally used by Twitter to process massive streams of data from the Twitter firehose. Today, Storm is a top-level project of the Apache Software Foundation. Typically, Storm is integrated with a scalable event queuing system like Apache Kafka or Azure Event Hubs.

- 68. Stream Analytics vs Apache Storm Storm: Data Transformation Can handle more dynamic data (if you're willing to program) Requires programming Stream Analytics Ease of Setup JSON and CSV format only Can change queries within 4 minutes Only takes inputs from Event Hub, Blob Storage Only outputs to Azure Blob, Azure Tables, Azure SQL, PowerBI

- 69. Pricing Pricing is based on volume per job: the volume of data processed and the streaming units required to process the data stream. Price: Volume of Data Processed (volume of data processed by the streaming job, in GB): € 0.0009 per GB. Streaming Unit* (blended measure of CPU, memory, throughput): € 0.0262 per hour, € 18.864 per month.
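
The monthly figure follows from the hourly rate: € 0.0262 × 720 hours (30 days × 24 hours) = € 18.864 per streaming unit. The sketch below reproduces that arithmetic and adds the per-GB charge. The 720-hour month is an assumption inferred from the slide's numbers, and these 2015 prices are likely out of date; the function name is hypothetical.

```python
HOURLY_PER_SU_EUR = 0.0262  # from the slide
PER_GB_EUR = 0.0009         # from the slide
HOURS_PER_MONTH = 720       # assumption: 30 days x 24 hours


def monthly_cost(streaming_units, gb_processed):
    """Estimated monthly cost in euros: SU-hours plus data volume."""
    su_cost = streaming_units * HOURLY_PER_SU_EUR * HOURS_PER_MONTH
    data_cost = gb_processed * PER_GB_EUR
    return su_cost + data_cost


print(round(monthly_cost(1, 0), 3))    # one SU, no data
print(round(monthly_cost(3, 500), 3))  # three SUs, 500 GB processed
```

For most jobs the streaming-unit term dominates; at these rates, the data charge only matters at very large volumes.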

- 70. Azure Machine Learning Understand the “sequence” of data in the history to predict the future. But Azure can ‘learn’ which values preceded issues. Azure Machine Learning

- 71. Inside Office 365

- 72. Futures [started] Native integration with Azure Machine Learning Provide better ways to debug. [planned] Call to a REST endpoint to invoke custom code [under review] Take input from DocumentDb

- 73. Thanks Don’t forget to fill in the evaluation form here: http://speakerscore.com/SqlSatParma2015 Marco Parenzan http://twitter.com/marco_parenzan http://www.slideshare.net/marcoparenzan http://www.github.com/marcoparenzan

Editor's Notes

- #5: Meet Stephen Hall, I have gotten to know Stephen very well in the past several months working on this project. Stephen started District Computers more than 10 years ago with the customer in mind. His company specializes in Small Business with a focus on Office365.

- #16: https://azure.microsoft.com/en-us/documentation/articles/stream-analytics-get-started/?WT.mc_id=Blog_SQL_Announce_DI

- #17: Ingest a continuous stream of data and do in-flight processing, like scrubbing PII information, adding geo-tagging, and doing IP lookups, before it is sent to a data store. Fraud Detection: monitor financial transactions in real time to detect fraudulent activity. Business Operations: analyze real-time data to respond to dynamic environments and take immediate action. Provide real-time dashboarding where customers can see trends as soon as they occur. Collect real-time metrics to gain immediate insight into a website’s usage patterns or application performance. Get real-time information from connected devices like machines, buildings, or cars so that relevant action can be taken. This can include scheduling a repair technician, pushing down software updates or performing a specific automated action. Monitor and diagnose real-time data from connected devices such as vehicles, buildings, or machinery in order to generate alerts, respond to events, or optimize operations.

- #25: Key Points: Stream Analytics provides processing of events at scale – millions per second – with variable loads, analyzing the data in real time and correlating events with reference data. Talk track: Processes millions of events per second. Scale accommodates variable loads and preserves event order on a per-device basis. Performs continuous real-time analytics for transforming, augmenting, correlating using temporal operations. This allows pattern and anomaly detection. Correlates streaming data with reference – more static – data. Think of augmenting events containing IPs with geo-location data or real-time stock market trading events with stock information.

- #28: Key Points: Stream Analytics has built-in guaranteed event delivery and business continuity, which is critical for providing reliability and resiliency. Talk track: You will not lose any events. The service provides exactly once delivery of events. You don’t have to write any code for this, and you can use it to replay events on failures or from a particular time based on the retention policy you have set up with Event Hubs. Three nines (99.9%) availability built into the service. Recovery from failures does not need to start at the beginning of a window; it can start from when the failure occurred in the window. This enables businesses to be as real-time as possible.

- #30: Stream Analytics gives developers the fastest productivity experience by abstracting the complexities of writing code for scale out over distributed systems and for custom analytics. Instead, developers need only describe the desired transformations using a declarative SQL language and the system will handle everything else. Key Points: Normally, event processing solutions are arduous to implement because of the amount of custom code that needs to be written. Developers have to write code that reflects distributed systems taking into account coding for parallelization, deployment over a distributed platform, scheduling and monitoring. Furthermore, code for the analytical functions also must be written. While other cloud services for the most part have solutions that handle programming over the distributed platform, likely their code still is procedural and thus lower level and more complex to write (as compared to SQL commands). On-premises software may not even be designed to scale to data of high volumes through distributed scale out architectures.
Talk track: Developers focus on using a SQL-like language to construct stream processing logic and not worrying about accounting for parallelization, deployment to a distributed platform or creating temporal operators. Use the SQL-like language across streams to filter, project, aggregate, compare reference data, and perform temporal operations. Development, maintenance, and debugging can be done entirely through the Azure Management Portal. For public preview, support: Input: Azure Event Hubs, Azure Blobs Output: Azure Event Hubs, Azure Blobs, and Azure SQL Database, Azure Tables

- #32: The Azure portal provides wizards to guide the user through the process of adding inputs. Every Job must have at least one data stream source. It can have multiple data streams. Currently supported data stream sources are Event Hubs and Blob Storage. Reference data is optional. Ref data is usually data that changes infrequently. An example might be a product catalog, data that maps city name to zipcode, customer profile data etc. Ref data is cached in memory for improved performance. Currently ref data must be in Blob Storage. The wizard collects all the information needed to read events from the input data. Blob Storage advanced option lets you specify additional details such as: Blob Serialization boundary: This setting determines when a blob is ready for reading. Stream Analytics supports Blob Boundary (the blob can be uploaded as a single piece or in blocks, but every block must be committed before the blob is read and can't be appended) and Block Boundary (blocks can be continuously added, and each block is individually readable and can be read as it's committed). Path Pattern: The file path used to locate your blobs within the specified container. Within the path, you may choose to specify one or more instances of the following 3 variables: {date}, {time}, {partition}. Ex 1: cluster1/logs/{date}/{time}/{partition} or Ex 2: cluster1/logs/{date} You can test whether you entered the correct information by testing for connectivity. Every data stream must have a name (Input Alias). You use this name in the query to refer to a specific data stream. [It is the name of the ‘table’ you select from; more on this later].

- #33: In addition to the connectivity information for the data sources, you must also specify the serialization format for the events coming from the source. Currently 3 serialization formats are supported: JSON, CSV and Avro. For CSV format you can specify the delimiter (comma, semicolon, colon, tab or space). Only UTF-8 encoding is supported for now.

- #34: The Azure portal provides wizards to guide the user through the process of adding an output. The process for adding outputs to a Job is similar to that of adding inputs. The wizard collects all the information required to connect to and store the results in the output. In addition to Blob Storage and Event Hubs, ASA also supports storing the results in an Azure SQL Database. Note that when you use an Azure SQL database, the schema of the result event and the Azure SQL database table must be compatible. Just as with inputs, you have to define the serialization formats for blob storage and event hubs. The three supported formats are CSV, JSON and Avro. UTF-8 is the supported encoding format.

- #42: Currently reference data cannot be refreshed automatically. You need to stop the job and specify a new snapshot of the reference data. We are working on reference data refresh functionality; stay tuned for updates.

- #44: UNION combines the results of two or more queries into a single result set that includes all the rows that belong to all queries in the union. This is different from a join, which combines columns from two tables. The basic rules for combining the result sets of two queries with UNION are: the number and order of the columns must be the same in all queries, and the data types must be compatible. The ALL keyword incorporates all rows into the result, including duplicates; if it is not specified, duplicate rows are removed.
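
The UNION rules above can be modelled over in-memory result sets. This Python sketch checks the column-count rule and shows the difference between plain UNION (duplicates removed) and UNION ALL (duplicates kept); the `union` function and `keep_duplicates` flag are hypothetical names, and type compatibility is not checked.

```python
from typing import List, Sequence, Tuple

Row = Tuple[object, ...]


def union(*results: Sequence[Row], keep_duplicates: bool = False) -> List[Row]:
    """Model of UNION / UNION ALL over result sets represented as lists of tuples."""
    # Every query must return the same number of columns (checked on the rows present).
    widths = {len(row) for result in results for row in result}
    if len(widths) > 1:
        raise ValueError(f"queries return different column counts: {sorted(widths)}")
    combined = [row for result in results for row in result]
    if keep_duplicates:  # UNION ALL
        return combined
    # Plain UNION: drop duplicate rows, keeping the first occurrence of each.
    seen = set()
    distinct = []
    for row in combined:
        if row not in seen:
            seen.add(row)
            distinct.append(row)
    return distinct


if __name__ == "__main__":
    q1 = [(1, "JNB7001"), (2, "YXZ1001")]
    q2 = [(2, "YXZ1001"), (3, "ABC1004")]
    print(union(q1, q2))                        # 3 rows: the shared row appears once
    print(union(q1, q2, keep_duplicates=True))  # 4 rows: the shared row appears twice
```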

- #50: As in standard T-SQL, JOINs in the Azure Stream Analytics query language combine records from two or more input sources. JOINs in Azure Stream Analytics are temporal in nature: each JOIN must put some limit on how far apart in time the matching rows can be. For instance, "join EntryStream events with ExitStream events when they occur on the same LicensePlate and TollId and within 5 minutes of each other" is legitimate; but "join EntryStream events with ExitStream events when they occur on the same LicensePlate and TollId" is not, because it would match each EntryStream event with an unbounded, potentially infinite collection of ExitStream events for the same LicensePlate and TollId. The time bounds for the relationship are specified inside the ON clause of the JOIN, using the DATEDIFF function. The query in this slide joins events in the EntryStream and ExitStream only if they are less than 10 seconds apart. The two "Mazda" events in the EntryStream and ExitStream will NOT be joined because they are more than 10 seconds apart. The two "Honda" events will not be joined either because, even though they are less than 10 seconds apart, the event in the ExitStream has an earlier timestamp than the event in the EntryStream, so DATEDIFF(second, ES, EX) for these two events is negative. Note: DATEDIFF in the SELECT statement uses the general syntax, where a datetime column or expression is passed as the second and third parameters. When DATEDIFF is used inside the JOIN condition, you pass the input source name or its alias instead, and the timestamp associated with each event in that source is used. You cannot use SELECT * in JOINs.
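
The matching rule described above can be sketched as a nested-loop join in Python: same TollId and LicensePlate, exit not earlier than entry, and less than 10 seconds later. This is only a model of the semantics, not ASA's engine; `seconds_between` approximates DATEDIFF(second, ES, EX) with elapsed time, the exact bound (`0 <= gap < max_seconds`) follows the "less than 10 seconds apart" wording, and the event data and names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Tuple


@dataclass
class TollEvent:
    toll_id: int
    license_plate: str
    make: str
    time: datetime  # the event's timestamp


def at(second: int) -> datetime:
    """Hypothetical helper: a timestamp `second` seconds after 10:00:00."""
    return datetime(2015, 11, 28, 10, 0, second)


def seconds_between(start: datetime, end: datetime) -> float:
    # Stand-in for DATEDIFF(second, ES, EX): negative when EX happened before ES.
    return (end - start).total_seconds()


def temporal_join(entries: List[TollEvent], exits: List[TollEvent],
                  max_seconds: float = 10) -> List[Tuple[TollEvent, TollEvent]]:
    """Join entry/exit events on TollId and LicensePlate within a bounded time gap."""
    matches = []
    for es in entries:
        for ex in exits:
            if es.toll_id != ex.toll_id or es.license_plate != ex.license_plate:
                continue
            gap = seconds_between(es.time, ex.time)
            # The exit must not precede the entry and must be less than max_seconds later.
            if 0 <= gap < max_seconds:
                matches.append((es, ex))
    return matches


if __name__ == "__main__":
    entries = [TollEvent(1, "AAA111", "Mazda", at(0)),
               TollEvent(1, "BBB222", "Honda", at(20)),
               TollEvent(1, "CCC333", "Toyota", at(30))]
    exits = [TollEvent(1, "AAA111", "Mazda", at(45)),   # 45 s later: too far apart
             TollEvent(1, "BBB222", "Honda", at(15)),   # before the entry: negative gap
             TollEvent(1, "CCC333", "Toyota", at(36))]  # 6 s later: joined
    for es, ex in temporal_join(entries, exits):
        print(es.make, seconds_between(es.time, ex.time))
```

Without the time bound the inner loop would have to consider every exit event ever seen, which is exactly why ASA requires the DATEDIFF limit.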

- #51: Windowing (extensions to T-SQL). In applications that process real-time events, a common requirement is to perform some set-based computation (aggregation) or other operations over subsets of events that fall within some period of time. Because the concept of time is fundamental to complex event-processing systems, it's important to have a simple way to work with the time component of query logic. In ASA, these subsets of events are defined through windows, which represent groupings by time. A window contains event data along a timeline and enables you to perform various operations against the events within that window; for example, you may want to sum the values of a payload field. Every window operation outputs an event at the end of the window. ASA windows are open at the window start time and closed at the window end time. For example, a 5-minute window from 12:00 AM to 12:05 AM includes all events with a timestamp greater than 12:00 AM and up to 12:05 AM inclusive. The output of the window is a single event, based on the aggregate function used, with a timestamp equal to the window end time. The timestamp of the window's output event can be projected in the SELECT statement through the System.Timestamp property, using an alias. Every window automatically aligns itself to the zeroth hour; for example, a 5-minute tumbling window aligns itself to (12:00-12:05], (12:05-12:10], … Note: all windows must be used in a GROUP BY clause. In the example, the SUM of the events in the first window = 1+5+4+6+2 = 18. Currently all window types have a fixed width (fixed interval).
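
The (start, end] convention and the window-end output timestamp can be checked with a small Python sketch. It assumes the "zeroth hour" alignment can be modelled by a midnight origin (1970-01-01 here, a hypothetical choice that works for any window size dividing a day), and it keys each SUM by the window end, mirroring System.Timestamp. The function names are illustrative, not ASA APIs.

```python
from datetime import datetime, timedelta
from typing import Dict, Iterable, Tuple

# Assumption: windows align to a midnight origin, giving (12:00, 12:05], (12:05, 12:10], ...
ORIGIN = datetime(1970, 1, 1)
ONE_US = timedelta(microseconds=1)


def window_bounds(ts: datetime, size: timedelta) -> Tuple[datetime, datetime]:
    """Return (start, end) of the aligned window satisfying start < ts <= end."""
    offset_us = (ts - ORIGIN) // ONE_US
    size_us = size // ONE_US
    n = -(-offset_us // size_us)  # ceiling division: first window end at or after ts
    end = ORIGIN + n * size
    return end - size, end


def sum_per_window(events: Iterable[Tuple[datetime, int]], size: timedelta) -> Dict[datetime, int]:
    """SUM(value) per window, keyed by the window end (the output event's timestamp)."""
    totals: Dict[datetime, int] = {}
    for ts, value in events:
        _, end = window_bounds(ts, size)
        totals[end] = totals.get(end, 0) + value
    return totals


if __name__ == "__main__":
    five_min = timedelta(minutes=5)
    day = datetime(2015, 11, 28)
    events = [
        (day.replace(hour=12), 7),                      # exactly 12:00 -> window ending 12:00
        (day.replace(hour=12, minute=1), 2),            # -> window ending 12:05
        (day.replace(hour=12, minute=5), 3),            # exactly 12:05 -> still window ending 12:05
        (day.replace(hour=12, minute=5, second=1), 4),  # -> window ending 12:10
    ]
    for end, total in sorted(sum_per_window(events, five_min).items()):
        print(end.time(), total)
```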

- #53: Tumbling windows specify a repeating, non-overlapping time interval of fixed size. Syntax: TUMBLINGWINDOW(timeunit, windowsize). timeunit: day, hour, minute, second, millisecond, microsecond, nanosecond. windowsize: a bigint that describes the size (width) of the window. Because tumbling windows do not overlap, each event belongs to exactly one tumbling window. The query simply counts the number of vehicles passing the toll station every 20 seconds, grouped by TollId.
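
The per-TollId count over 20-second tumbling windows can be modelled in a few lines of Python. To keep it short, timestamps are plain integers (seconds since midnight) and the sample events are hypothetical; the slide's actual SAQL query is not reproduced here.

```python
from collections import Counter
from typing import Iterable, Tuple

WINDOW_SECONDS = 20  # corresponds to TUMBLINGWINDOW(second, 20)


def tumbling_counts(events: Iterable[Tuple[int, int]], size: int = WINDOW_SECONDS) -> Counter:
    """Count events per (TollId, window end); events are (toll_id, seconds_since_midnight)."""
    counts: Counter = Counter()
    for toll_id, ts in events:
        # Each event falls in exactly one (end - size, end] window.
        window_end = -(-ts // size) * size
        counts[(toll_id, window_end)] += 1
    return counts


if __name__ == "__main__":
    sample = [(1, 3), (1, 19), (1, 20), (2, 21), (1, 39), (1, 40), (1, 41)]
    for (toll_id, end), n in sorted(tumbling_counts(sample).items()):
        print(f"TollId={toll_id} window_end={end}s count={n}")
```

Because every event lands in exactly one window, the counts across all windows always add up to the number of input events.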

- #54: To get a finer time granularity, we can use a generalized version of the tumbling window called a hopping window. Hopping windows "hop" forward in time by a fixed period. A hopping window is defined by two time spans: the hop size H and the window size S; every H time units, a new window of size S is created. The tumbling window is a special case of the hopping window in which the hop size equals the window size. Syntax: HOPPINGWINDOW(timeunit, windowsize, hopsize) or HOPPINGWINDOW(Duration(timeunit, windowsize), Hop(timeunit, hopsize)). Note: the hopping window can be used in either of these two ways. If the window size and the hop size have the same time unit, you can use the first form, without the Duration and Hop functions. The Duration function can also be used with other window types to specify the window size.
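
To see why an event can belong to several hopping windows, this Python sketch lists every (start, end] window of size S containing a given time t when window ends fall on multiples of H. Integer timestamps and alignment of the ends to multiples of the hop are simplifying assumptions; `hopping_windows_for` is a hypothetical name.

```python
from typing import List, Tuple


def hopping_windows_for(t: int, size: int, hop: int) -> List[Tuple[int, int]]:
    """All (start, end] windows containing time t, with window ends at multiples of hop."""
    if size <= 0 or hop <= 0:
        raise ValueError("size and hop must be positive")
    windows = []
    end = -(-t // hop) * hop  # first window end at or after t
    while end - size < t:     # the window (end - size, end] still contains t
        windows.append((end - size, end))
        end += hop
    return windows


if __name__ == "__main__":
    print(hopping_windows_for(7, size=10, hop=5))   # hopping: [(0, 10), (5, 15)]
    print(hopping_windows_for(7, size=10, hop=10))  # tumbling special case: [(0, 10)]
```

With H = S the loop yields exactly one window, which is the tumbling-window special case; with H < S each event falls into roughly S/H windows.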

- #55: A sliding window is a fixed-length window that moves forward by an epsilon (ε) and produces an output only when an event occurs. An epsilon is one hundredth of a nanosecond. Syntax: SLIDINGWINDOW(timeunit, windowsize) or SLIDINGWINDOW(Duration(timeunit, windowsize)).
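
Following the simplified description above (an output only when an event occurs), this Python sketch emits, for each distinct event time t, the number of events in the window (t - size, t]. It ignores the epsilon step and uses integer timestamps; it is a model of the idea under those assumptions, not ASA's exact output rules, and `sliding_counts` is a hypothetical name.

```python
from bisect import bisect_right
from typing import Iterable, List, Tuple


def sliding_counts(timestamps: Iterable[int], size: int) -> List[Tuple[int, int]]:
    """Emit (t, number of events in (t - size, t]) for each distinct event time t."""
    ts = sorted(timestamps)
    out = []
    for t in sorted(set(ts)):
        lo = bisect_right(ts, t - size)  # first event strictly after t - size
        hi = bisect_right(ts, t)         # one past the last event at or before t
        out.append((t, hi - lo))
    return out


if __name__ == "__main__":
    print(sliding_counts([1, 3, 12, 14], size=10))
    # [(1, 1), (3, 2), (12, 2), (14, 2)]
```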

- #59: The number of streaming units a job can use depends on the partition configuration of its inputs and on the query defined for the job. Note also that the number of streaming units must be a valid value: 1, 3, 6 and then upward in increments of 6 (12, 18, 24, …).
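
The rule for valid streaming-unit values is easy to encode. This Python sketch reflects only the rule stated in the note; the per-job upper limit, which depends on partitioning, is not modelled, and `maximum` is a hypothetical parameter.

```python
from typing import List


def is_valid_streaming_units(n: int) -> bool:
    """Valid values: 1, 3, 6, then increments of 6 (12, 18, 24, ...)."""
    return n in (1, 3) or (n > 0 and n % 6 == 0)


def valid_streaming_units(maximum: int) -> List[int]:
    """All valid streaming-unit values up to a hypothetical maximum."""
    return [n for n in range(1, maximum + 1) if is_valid_streaming_units(n)]


if __name__ == "__main__":
    print(valid_streaming_units(30))  # [1, 3, 6, 12, 18, 24, 30]
```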

- #61: Partitioning a step allows more streaming units to be allocated to a job, because there is a limit on the number of units that can be assigned to a non-partitioned step. Partitioning requires that all three conditions listed on the slide be satisfied. When a query is partitioned, the input events are processed and aggregated in separate partition groups, and output events are generated for each group. If a combined aggregate is needed, you must create a second, non-partitioned step to aggregate them. The preview release of Azure Stream Analytics doesn't support partitioning by column names. You can only partition by the PartitionId field, a built-in field available in your query that indicates which partition of the source data stream the event came from. Since Event Hubs supports partitioning, you can easily develop partitioned queries that read data from Event Hubs.
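
The two-step pattern (aggregate per partition, then combine in a non-partitioned step) can be sketched in Python. The first function stands in for a step that groups by PartitionId and TollId; the second stands in for the non-partitioned step that merges the partial results. Event tuples and function names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Tuple


def step1_partitioned(events: Iterable[Tuple[int, str]]) -> Counter:
    """Partitioned step: count per (PartitionId, TollId), each partition independently."""
    return Counter((partition_id, toll_id) for partition_id, toll_id in events)


def step2_combined(per_partition: Counter) -> Counter:
    """Non-partitioned step: merge the partition-level counts into one total per TollId."""
    totals: Counter = Counter()
    for (_, toll_id), n in per_partition.items():
        totals[toll_id] += n
    return totals


if __name__ == "__main__":
    events = [(0, "T1"), (1, "T1"), (1, "T2"), (0, "T1")]
    partial = step1_partitioned(events)
    print(partial)                  # one row per (partition, TollId) group
    print(step2_combined(partial))  # one row per TollId across all partitions
```

Step 1 can run in parallel because each partition is independent; only the much smaller partial results flow into step 2.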

- #67: Microsoft offers both on-premises and cloud-based real-time stream processing options. StreamInsight is offered as part of SQL Server and should be used for on-premises deployments. The Microsoft Azure platform offers a vast set of data services, and while it's a luxury to have such a broad array of capabilities to choose from, it can also present a challenge. Designing a solution requires that you evaluate which offerings best suit your requirements during the planning and design phases of the project. In a number of cases Azure provides similar platforms for a given task. For example, Storm for Azure HDInsight and Azure Stream Analytics are both platform-as-a-service (PaaS) offerings that provide real-time event stream processing. Both services are highly capable engines suitable for a range of solutions; however, some of their differences will influence the decision about which service is best suited to a project. Storm for Azure HDInsight is an Apache open-source stream analytics platform running on Microsoft Azure for real-time data processing. Storm is highly flexible, with contributions from the Hadoop community, and highly customizable through development languages such as Java and .NET (with deep Visual Studio IDE integration). Azure Stream Analytics is a fully managed, first-party Microsoft event processing engine that provides real-time analytics in a SQL-based query language to shorten development time. Stream Analytics makes it easy to operationalize event processing with a small number of resources and keeps the price point low thanks to its multi-tenant architecture.

- #69: http://stackoverflow.com/questions/31130025/azure-storm-vs-azure-stream-analytics http://blogs.technet.com/b/dataplatforminsider/archive/2014/10/16/the-ins-and-outs-of-apache-storm-real-time-processing-for-hadoop.aspx?WT.mc_id=Blog_SQL_Announce_DI

- #70: https://azure.microsoft.com/en-us/documentation/articles/stream-analytics-comparison-storm/

- #73: (http://feedback.azure.com/forums/270577-azure-stream-analytics)

Futures

[started]

Native integration with Azure Machine Learning

Better ways to debug

[planned]

Call to a REST endpoint to invoke custom code

[under review]

Take input from DocumentDB