Bank of China Tech Talk 2: Introduction to Streaming Data and Stream Processing with Apache Kafka


Watch the webcast here: https://videos.confluent.io/watch/yaWXJTKELvUGfPx9cnmhZ8

Speaker: Kenneth Cheung


Recommended

Elastically Scaling Kafka Using Confluent

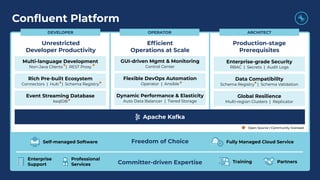

confluent

This document discusses how Confluent Platform provides elastic scaling for Apache Kafka. It offers fully managed cloud services through Confluent Cloud or self-managed software. Confluent Cloud allows users to easily scale Kafka workloads from 0 MBps to GBps without complex provisioning. It also offers pay-for-use pricing where customers only pay for the data streamed, with the ability to scale to zero. For self-managed deployments, Confluent Platform enables dynamic scaling of Kafka clusters on Kubernetes through features like tiered storage and self-balancing clusters that can rebalance partitions in seconds versus hours for other Kafka services.

What is Apache Kafka®?

confluent

Viktor Gamov, Confluent, Developer Advocate

Apache Kafka is an open source distributed streaming platform that allows you to build applications and process events as they occur. Viktor Gamov (Developer Advocate at Confluent) walks through how it works and important underlying concepts. As a real-time, scalable, and durable system, Kafka can be used for fault-tolerant storage as well as for other use cases, such as stream processing, centralized data management, metrics, log aggregation, event sourcing, and more.

This talk will explain what a streaming platform such as Apache Kafka is and some of the use cases and design patterns around its use—including several examples of where it is solving real business problems.

https://www.meetup.com/Chennai-Kafka/events/269942117/

Westpac Bank Tech Talk 2: Introduction to Streaming Data and Stream Processin...

confluent

Watch the webcast here: https://videos.confluent.io/watch/P5up2YQX9QdVMhmYfsXy7Q

Speaker: Brett Randall

Cloud native Kafka | Sascha Holtbruegge and Margaretha Erber, HiveMQ

HostedbyConfluent

Joins in Kafka Streams and ksqlDB are a killer feature for data processing, and basic join semantics are well understood. However, in a streaming world records are associated with timestamps that impact the semantics of joins: welcome to the fabulous world of _temporal_ join semantics. For joins, timestamps are as important as the actual data, and it is important to understand how they impact the join result.

In this talk we want to deep dive on the different types of joins, with a focus on their temporal aspects. Furthermore, we relate the individual join operators to the overall "time engine" of the Kafka Streams query runtime and explain its relationship to operator semantics. To allow developers to apply their knowledge of temporal join semantics, we provide best practices, tips and tricks to "bend" time, and configuration advice to get the desired join results. Last, we give an overview of recent developments, and an outlook on future ones, that improve joins even further.

ksqlDB Workshop

confluent

Watch the webcast here: https://videos.confluent.io/watch/woobbdupdrwpJCHZKEwF5E?

Speaker: Patrick Druley

Event Driven Architecture with a RESTful Microservices Architecture (Kyle Ben...

confluent

Tinder’s Quickfire Pipeline powers all things data at Tinder. It was originally built using AWS Kinesis Firehoses and has since been extended to use both Kafka and other event buses. It is the core of Tinder’s data infrastructure. This rich data flow of both client and backend data has been extended to service a variety of needs at Tinder, including Experimentation, ML, CRM, and Observability, allowing backend developers easier access to shared client side data. We perform this using many systems, including Kafka, Spark, Flink, Kubernetes, and Prometheus. Many of Tinder’s systems were natively designed in an RPC first architecture.

Topics we’ll discuss on decoupling your system at scale via event-driven architectures include:

– Powering ML, backend, observability, and analytical applications at scale, including an end to end walk through of our processes that allow non-programmers to write and deploy event-driven data flows.

– Show end to end the usage of dynamic event processing that creates other stream processes, via a dynamic control plane topology pattern and broadcasted state pattern

– How to manage the unavailability of cached data that would normally come from repeated API calls for data that’s being backfilled into Kafka, all online! (and why this is not necessarily a “good” idea)

– Integrating common OSS frameworks and libraries like Kafka Streams, Flink, Spark and friends to encourage the best design patterns for developers coming from traditional service oriented architectures, including pitfalls and lessons learned along the way.

– Why and how to avoid overloading microservices with excessive RPC calls from event-driven streaming systems

– Best practices in common data flow patterns, such as shared state via RocksDB + Kafka Streams as well as the complementary tools in the Apache Ecosystem.

– The simplicity and power of streaming SQL with microservices

Cloud-Based Event Stream Processing Architectures and Patterns with Apache Ka...

HostedbyConfluent

The Apache Kafka ecosystem is very rich with components and pieces that make for designing and implementing secure, efficient, fault-tolerant and scalable event stream processing (ESP) systems. Using real-world examples, this talk covers why Apache Kafka is an excellent choice for cloud-native and hybrid architectures, how to go about designing, implementing and maintaining ESP systems, best practices and patterns for migrating to the cloud or hybrid configurations, when to go with PaaS or IaaS, what options are available for running Kafka in cloud or hybrid environments and what you need to build and maintain successful ESP systems that are secure, performant, reliable, highly-available and scalable.

Death of the dumb pipes: Using Apache Kafka® for Integration projects

HostedbyConfluent

Guru Sattanathan, Confluent, Senior Solutions Engineer

Enterprise integration technologies (aka middleware) are the key enablers when it comes to real-time data flows or event-driven architecture. From real-time payments to e-commerce and travel booking systems, everything is powered by middleware underneath. It transformed a lot of things, but with caveats!

Are ESBs and MQs enough for today’s integration needs? Do you know their technical debts?

If you are someone looking at integrating your applications or an Integration Architect this session is for you. It's time to refresh yourself and see how organizations are building integrations today.

In this session, we will go in this order:

-Recap on Enterprise Integration technologies

-What are the key flaws & What needs improvement?

-What is Apache Kafka?

-Rethinking Integration using Apache Kafka

https://www.meetup.com/KafkaMelbourne/events/280590162/

How to Discover, Visualize, Catalog, Share and Reuse your Kafka Streams (Jona...

HostedbyConfluent

As Kafka deployments grow within your organization, so do the challenges around lifecycle management. For instance, do you really know what streams exist, who is producing and consuming them? What is the effect of upstream changes? How is this information kept up to date, so it is relevant and consistent to others looking to reuse these streams? Ever wish you had a way to view and visualize graphically the relationships between schemas, topics and applications? In this talk we will show you how to do that and get more value from your Kafka Streaming infrastructure using an event portal. It’s like an API portal but specialized for event streams and publish/subscribe patterns. Join us to see how you can automatically discover event streams from your Kafka clusters, import them to a catalog and then leverage code gen capabilities to ease development of new applications.

Kafka in Context, Cloud, & Community (Simon Elliston Ball, Cloudera) Kafka Su...

HostedbyConfluent

The document discusses Kafka in the context of cloud platforms and open source communities. It describes several Apache projects that can be used with Kafka, such as Apache NiFi for data collection, Apache Flink for stream processing, and Apache Ranger for security. It also outlines features of Cloudera's platform for managing Kafka deployments, including unified governance tools, monitoring, and services to simplify operations. Finally, it discusses how Kafka can be deployed across cloud, on-premise, and hybrid environments with auto-scaling and other management capabilities.

Building Event Streaming Microservices with Spring Boot and Apache Kafka | Ja...

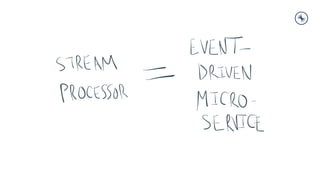

HostedbyConfluent

This document summarizes Jan Svoboda's presentation on building event streaming microservices with Spring Boot and Apache Kafka. Jan discusses his motivation for learning Kafka and microservices development. He outlines a typical journey from strangling monoliths to building event-driven architectures using Kafka. Jan explains how Spring and Kafka integrate well together. The remainder of the presentation demonstrates building a sample application using these techniques and dives into common architecture patterns like event sourcing, CQRS, scaling stateful services, and reverse proxy routing.

All Streams Ahead! ksqlDB Workshop ANZ

confluent

Watch the available webcast: https://videos.confluent.io/watch/Jbb3PUbgDFck6jLcCkVvur?

Speaker: David Peterson

Deep Dive Series #3: Schema Validation + Structured Audit Logs

confluent

Another new security-relevant feature in Confluent Platform 5.4 is Structured Audit Logs. Of course, everything in Kafka is a log, but Kafka does not log what Kafka does with Kafka, only what is written to a topic.

In the third part of the Deep Dive sessions, alongside Structured Audit Logs, we also discuss the "further development" of the familiar Schema Registry: Schema Validation operates at the topic level and ensures that every single message produced to a given topic is checked against the Schema Registry. We explain more in our Deep Dive #3.

Building a Web Application with Kafka as your Database

confluent

This document provides an overview of a presentation on building a web application using Apache Kafka as the database. The presentation discusses using Kafka for messaging in both request-driven and event-driven architectures. It then covers using Kafka and Kafka Streams as an alternative to a traditional database for the benefits of performance, scaling, and being the system of record. The presentation reviews building a quiz application called Quizzer that uses Kafka Streams to store and access data, including designing topologies for starting quizzes, submitting answers, and retrieving next questions. Key takeaways include using Kafka Streams features like transformations, joins, and state stores to access data within topologies.

Technical Deep Dive: Using Apache Kafka to Optimize Real-Time Analytics in Fi...

confluent

Watch this talk here: https://www.confluent.io/online-talks/using-apache-kafka-to-optimize-real-time-analytics-financial-services-iot-applications

When it comes to the fast-paced nature of capital markets and IoT, the ability to analyze data in real time is critical to gaining an edge. It’s not just about the quantity of data you can analyze at once, it’s about the speed, scale, and quality of the data you have at your fingertips.

Modern streaming data technologies like Apache Kafka and the broader Confluent platform can help detect opportunities and threats in real time. They can improve profitability, yield, and performance. Combining Kafka with Panopticon visual analytics provides a powerful foundation for optimizing your operations.

Use cases in capital markets include transaction cost analysis (TCA), risk monitoring, surveillance of trading and trader activity, compliance, and optimizing profitability of electronic trading operations. Use cases in IoT include monitoring manufacturing processes, logistics, and connected vehicle telemetry and geospatial data.

This online talk will include in depth practical demonstrations of how Confluent and Panopticon together support several key applications. You will learn:

-Why Apache Kafka is widely used to improve performance of complex operational systems

-How Confluent and Panopticon open new opportunities to analyze operational data in real time

-How to quickly identify and react immediately to fast-emerging trends, clusters, and anomalies

-How to scale data ingestion and data processing

-Build new analytics dashboards in minutes

Why Kafka Works the Way It Does (And Not Some Other Way) | Tim Berglund, Conf...

HostedbyConfluent

Studying the "how" of Kafka makes you better at using Kafka, but studying its "whys" makes you better at so much more. In looking at the tradeoffs behind a system like Kafka, we learn to reason more clearly about distributed systems and to make high-stakes technology adoption decisions more effectively. These are skills we all want to improve!

In this talk, we'll examine trade-offs on which our favorite distributed messaging system takes opinionated positions:

- Whether to store data contiguously or using an index

- How many storage tiers are best?

- Where should metadata live?

- And more.

It's always useful to dissect a modern distributed system with the goal of understanding it better, and it's even better to learn deeper architectural principles in the process. Come to this talk for a generous helping of both.

Testing Event Driven Architectures: How to Broker the Complexity | Frank Kilc...

HostedbyConfluent

This document discusses testing event-driven architectures. It begins by defining common event-driven architecture patterns like event notifications and event sourcing. It then discusses brokering the complexity of event-driven architectures by describing how events are communicated between producers and consumers via channels. The document outlines what information should be included in events like payloads and headers. It also discusses the difference between orchestration and choreography in event-driven systems. It provides an example of how events can be used to mediate changes within a system using order validation. Finally, it demonstrates how to test event-driven architectures using specifications and discusses accelerating API quality through testing tools that support multiple protocols and definitions.

Server Sent Events using Reactive Kafka and Spring Web flux | Gagan Solur Ven...

HostedbyConfluent

Server-Sent Events (SSE) is a server push technology where clients receive automatic server updates through a secure HTTP connection. SSE can be used in apps such as live stock updates that use one-way data communication, and it helps replace long polling by maintaining a single connection and keeping a continuous event stream going through it. We used a simple Kafka producer to publish messages onto Kafka topics and developed a reactive Kafka consumer by leveraging Spring WebFlux to read data from a Kafka topic in a non-blocking manner and send data to clients that are registered with the Kafka consumer without closing any HTTP connections. This implementation allows us to send data in a fully asynchronous and non-blocking manner and to handle a massive number of concurrent connections. We’ll cover:

•Push data to external or internal apps in near real time

•Push data onto the files and securely copy them to any cloud services

•Handle multiple third-party apps integrations

James Watters, Pivotal | Kafka Summit NYC 2019 Keynote (Spring Boot+Kafka: Th...

confluent

This document discusses how Spring Boot and Kafka can form a new enterprise platform for continuous delivery. It provides examples of companies like Netflix transitioning to using Spring Boot as their core Java framework. The document advocates building applications around event-driven microservices using Spring Boot, Kafka streams, and a streaming data platform to enable arbitrary scaling, multi-cloud capabilities, and continuous delivery across the enterprise.

5 lessons learned for successful migration to Confluent cloud | Natan Silinit...

HostedbyConfluent

Confluent Cloud makes DevOps engineers' lives a lot easier.

Yet moving 1500 microservices, 10K topics and 100K partitions to a multi-cluster Confluent cloud can be a challenge.

In this talk you will hear about 5 lessons that Wix has learned in order to successfully meet this challenge.

These lessons include:

1. Automation, Automation, Automation - all the process has to be completely automated at such scale

2. Prefer a gradual approach - E.g. migrate topics in small chunks and not all at once. Reduces risks if things go bad

3. Cleanup first - avoid migrating unused topics or topics with too many unnecessary partitions

Hybrid Kafka, Taking Real-time Analytics to the Business (Cody Irwin, Google ...

HostedbyConfluent

Apache Kafka users who want to leverage Google Cloud Platform's (GCP's) data analytics platform and open source hosting capabilities can bridge their existing Kafka infrastructure on-premise or in other clouds to GCP using Confluent's replicator tool and managed Kafka service on GCP. Using actual customer examples and a reference architecture, we'll showcase how existing Kafka users can stream data to GCP and use it in popular tools like Apache Beam on Dataflow, BigQuery, Google Cloud Storage (GCS), Spark on Dataproc, and TensorFlow for data warehousing, data processing, data storage, and advanced analytics using AI and ML.

Event Streaming CTO Roundtable for Cloud-native Kafka Architectures

Kai Wähner

Technical thought leadership presentation to discuss how leading organizations move to real-time architecture to support business growth and enhance customer experience. This is a forum to discuss use cases with your peers to understand how other digital-native companies are utilizing data in motion to drive competitive advantage.

Agenda:

- Data in Motion with Event Streaming and Apache Kafka

- Streaming ETL Pipelines

- IT Modernisation and Hybrid Multi-Cloud

- Customer Experience and Customer 360

- IoT and Big Data Processing

- Machine Learning and Analytics

Build a Bridge to Cloud with Apache Kafka® for Data Analytics Cloud Services

confluent

Perry Krol, Head of Systems Engineering, CEMEA, Confluent

https://www.meetup.com/Frankfurt-Apache-Kafka-Meetup-by-Confluent/events/269751169/

Kubernetes connectivity to Cloud Native Kafka | Evan Shortiss and Hugo Guerre...

HostedbyConfluent

If you want to build an ecosystem of streaming data to your Kafka platform, you will need a much easier way for your developers to quickly move what’s on the source to your cluster. Better yet, make the connector serverless so it does NOT waste any resources while idle, and have a trusted partner manage your Kafka infrastructure for you. In this session, we will show you how easy we have made streaming data, with a great user experience and flexible resource management through our new secret weapon in the Apache Camel project: Kamelet. We’ll also demonstrate how Red Hat OpenShift Streams for Apache Kafka simplifies provisioning Kafka deployments in a public cloud, managing the cluster and topics, and configuring secure access to the Kafka cluster for your developers.

Introduction to Apache Kafka and Confluent... and why they matter!

Paolo Castagna

This is a short introduction to Apache Kafka and Confluent (the company founded by the creator of Kafka). The slides cover Apache Kafka APIs including Kafka Connect and Kafka Streams (part of Apache Kafka). Other open source, ASL licensed, projects are mentioned: #KSQL, Schema Registry, REST Proxy, etc.

Many thanks to Codemotion and Seacom for hosting the event.

Demystifying Event-Driven Architectures with Apache Kafka | Bogdan Sucaciu, P...

HostedbyConfluent

Event-Driven Architectures (EDA) are perceived as mythical objects that instantly transform your systems into "real-time" ones! BUT, come to think of it, aren't they already "real-time"? I mean, adding an item to the cart is pretty much instant in (most) webshops.

In fact, EDA solves an entirely different set of problems and with the help of Apache Kafka, we will walk through the (re)evolution path. Microservices are easy to get started with, but once we do, we keep stumbling across the same issues: data access, consistency, and failures (sounds familiar?).

The solution? Patterns, patterns, patterns … You’ve probably heard about terms such as “Event Notification”, “Event-carried State Transfer”, or even “Event Sourcing”, but how can they be used to solve our problems? And more importantly, how can we use Apache Kafka to take advantage of these patterns?

I guess we will find out soon!

Data Transformations on Ops Metrics using Kafka Streams (Srividhya Ramachandr...

confluent

This document discusses using Kafka Streams to transform operational metrics data from Priceline applications before loading it into Splunk. It describes how the legacy monitoring system worked, and the motives for moving to Kafka and Kafka Streams. It then explains how data is collected from applications into Kafka topics, and how various transformations like formatting, key application, and aggregation are performed using Kafka Streams before loading to Splunk. It also discusses testing, monitoring, and debugging Kafka Streams applications.

Bank of China (HK) Tech Talk 1: Dive Into Apache Kafka

confluent

Watch the webcast here: https://videos.confluent.io/watch/WwmbzUR7bpakR9NLwRhEAX?

Speaker: Kenneth Cheung

Au delà des brokers, un tour de l’environnement Kafka | Florent Ramière

confluent

During the Confluent Streaming event in Paris, Florent Ramière, Technical Account Manager at Confluent, goes beyond brokers, introducing a whole new ecosystem with Kafka Streams, KSQL, Kafka Connect, REST Proxy, Schema Registry, MirrorMaker, etc.

Beyond the brokers - A tour of the Kafka ecosystem

Damien Gasparina

Beyond the brokers - A tour of the Kafka ecosystem. Presented on 28/03/2019 (Lyon JUG: https://www.meetup.com/Lyon-Java-User-Group-LyonJUG/events/259569434/)

Ad

More Related Content

What's hot (20)

How to Discover, Visualize, Catalog, Share and Reuse your Kafka Streams (Jona...

How to Discover, Visualize, Catalog, Share and Reuse your Kafka Streams (Jona...HostedbyConfluent As Kafka deployments grow within your organization, so do the challenges around lifecycle management. For instance, do you really know what streams exist, who is producing and consuming them? What is the effect of upstream changes? How is this information kept up to date, so it is relevant and consistent to others looking to reuse these streams? Ever wish you had a way to view and visualize graphically the relationships between schemas, topics and applications? In this talk we will show you how to do that and get more value from your Kafka Streaming infrastructure using an event portal. It’s like an API portal but specialized for event streams and publish/subscribe patterns. Join us to see how you can automatically discover event streams from your Kafka clusters, import them to a catalog and then leverage code gen capabilities to ease development of new applications.

Kafka in Context, Cloud, & Community (Simon Elliston Ball, Cloudera) Kafka Su...

Kafka in Context, Cloud, & Community (Simon Elliston Ball, Cloudera) Kafka Su...HostedbyConfluent The document discusses Kafka in the context of cloud platforms and open source communities. It describes several Apache projects that can be used with Kafka, such as Apache NiFi for data collection, Apache Flink for stream processing, and Apache Ranger for security. It also outlines features of Cloudera's platform for managing Kafka deployments, including unified governance tools, monitoring, and services to simplify operations. Finally, it discusses how Kafka can be deployed across cloud, on-premise, and hybrid environments with auto-scaling and other management capabilities.

Building Event Streaming Microservices with Spring Boot and Apache Kafka | Ja...

Building Event Streaming Microservices with Spring Boot and Apache Kafka | Ja...HostedbyConfluent This document summarizes Jan Svoboda's presentation on building event streaming microservices with Spring Boot and Apache Kafka. Jan discusses his motivation for learning Kafka and microservices development. He outlines a typical journey from strangling monoliths to building event-driven architectures using Kafka. Jan explains how Spring and Kafka integrate well together. The remainder of the presentation demonstrates building a sample application using these techniques and dives into common architecture patterns like event sourcing, CQRS, scaling stateful services, and reverse proxy routing.

All Streams Ahead! ksqlDB Workshop ANZ

All Streams Ahead! ksqlDB Workshop ANZconfluent Watch the available webcast: https://videos.confluent.io/watch/Jbb3PUbgDFck6jLcCkVvur?

Speaker: David Peterson

Deep Dive Series #3: Schema Validation + Structured Audit Logs

Deep Dive Series #3: Schema Validation + Structured Audit Logsconfluent Eine weitere neue sicherheitsrelevante Funktion in Confluent Platform 5.4 sind Structured Audit Logs. Jetzt ist natürlich alles in Kafka ein Log, aber Kafka protokolliert nicht, was Kafka mit Kafka macht - nur das, was in einen Topics geschrieben wird.

Im dritten Teil der Deep Dive Sessions besprechen wir neben den Structured Audit Logs außerdem die "Weiterentwicklung" der bereits bekannten Schema Registry: Die Schema Validation agiert auf dem Topic-Level und stellt sicher, dass jede einzelne Message, die zu einem bestimmten Topic erstellt wird in der Schema Registry überprüft wird. Mehr dazu erklären wir in unserem Deep Dive #3.

Building a Web Application with Kafka as your Database

Building a Web Application with Kafka as your Databaseconfluent This document provides an overview of a presentation on building a web application using Apache Kafka as the database. The presentation discusses using Kafka for messaging in both request-driven and event-driven architectures. It then covers using Kafka and Kafka Streams as an alternative to a traditional database for the benefits of performance, scaling, and being the system of record. The presentation reviews building a quiz application called Quizzer that uses Kafka Streams to store and access data, including designing topologies for starting quizzes, submitting answers, and retrieving next questions. Key takeaways include using Kafka Streams features like transformations, joins, and state stores to access data within topologies.

Technical Deep Dive: Using Apache Kafka to Optimize Real-Time Analytics in Fi...

Technical Deep Dive: Using Apache Kafka to Optimize Real-Time Analytics in Fi...confluent Watch this talk here: https://www.confluent.io/online-talks/using-apache-kafka-to-optimize-real-time-analytics-financial-services-iot-applications

When it comes to the fast-paced nature of capital markets and IoT, the ability to analyze data in real time is critical to gaining an edge. It’s not just about the quantity of data you can analyze at once, it’s about the speed, scale, and quality of the data you have at your fingertips.

Modern streaming data technologies like Apache Kafka and the broader Confluent platform can help detect opportunities and threats in real time. They can improve profitability, yield, and performance. Combining Kafka with Panopticon visual analytics provides a powerful foundation for optimizing your operations.

Use cases in capital markets include transaction cost analysis (TCA), risk monitoring, surveillance of trading and trader activity, compliance, and optimizing profitability of electronic trading operations. Use cases in IoT include monitoring manufacturing processes, logistics, and connected vehicle telemetry and geospatial data.

This online talk will include in depth practical demonstrations of how Confluent and Panopticon together support several key applications. You will learn:

-Why Apache Kafka is widely used to improve performance of complex operational systems

-How Confluent and Panopticon open new opportunities to analyze operational data in real time

-How to quickly identify and react immediately to fast-emerging trends, clusters, and anomalies

-How to scale data ingestion and data processing

-Build new analytics dashboards in minutes

Why Kafka Works the Way It Does (And Not Some Other Way) | Tim Berglund, Conf...

Why Kafka Works the Way It Does (And Not Some Other Way) | Tim Berglund, Conf...HostedbyConfluent Studying the ""how"" of Kafka makes you better at using Kafka, but studying its ""whys"" makes you better at so much more. In looking at the tradeoffs behind a system like Kafka, we learn to reason more clearly about distributed systems and to make high-stakes technology adoption decisions more effectively. These are skills we all want to improve!

In this talk, we'll examine trade-offs on which our favorite distributed messaging system takes opinionated positions:

- Whether to store data contiguously or using an index

- How many storage tiers are best?

- Where should metadata live?

- And more.

It's always useful to dissect a modern distributed system with the goal of understanding it better, and it's even better to learn to deeper architectural principles in the process. Come to this talk for a generous helping of both.

Testing Event Driven Architectures: How to Broker the Complexity | Frank Kilc...

Testing Event Driven Architectures: How to Broker the Complexity | Frank Kilc...HostedbyConfluent This document discusses testing event-driven architectures. It begins by defining common event-driven architecture patterns like event notifications and event sourcing. It then discusses brokering the complexity of event-driven architectures by describing how events are communicated between producers and consumers via channels. The document outlines what information should be included in events like payloads and headers. It also discusses the difference between orchestration and choreography in event-driven systems. It provides an example of how events can be used to mediate changes within a system using order validation. Finally, it demonstrates how to test event-driven architectures using specifications and discusses accelerating API quality through testing tools that support multiple protocols and definitions.

Server Sent Events using Reactive Kafka and Spring Web flux | Gagan Solur Ven...

Server Sent Events using Reactive Kafka and Spring Web flux | Gagan Solur Ven...HostedbyConfluent Server-Sent Events (SSE) is a server push technology in which clients receive automatic updates from the server over a secure HTTP connection. SSE suits apps that rely on one-way data communication, such as live stock updates, and can replace long polling by keeping a single connection open and sending a continuous event stream through it. We used a simple Kafka producer to publish messages to Kafka topics and built a reactive Kafka consumer with Spring WebFlux that reads from a Kafka topic in a non-blocking manner and sends the data to clients registered with the consumer without closing any HTTP connections. This implementation lets us send data in a fully asynchronous, non-blocking manner and handle a massive number of concurrent connections. We’ll cover:

•Push data to external or internal apps in near real time

•Push data onto the files and securely copy them to any cloud services

•Handle multiple third-party apps integrations
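
The abstract above describes pushing Kafka records to clients as a continuous event stream. As a rough illustration of what travels over that single connection, here is a minimal Python sketch of the `text/event-stream` framing that SSE uses. It is not the talk's Spring WebFlux implementation; the function names and the record shape (dicts with `offset` and `value` keys) are assumptions made for this example.

```python
import json
from typing import Iterable, Iterator, Optional


def format_sse(data: str, event: Optional[str] = None,
               event_id: Optional[str] = None) -> str:
    """Serialize one message into the text/event-stream wire format."""
    lines = []
    if event_id is not None:
        lines.append(f"id: {event_id}")
    if event is not None:
        lines.append(f"event: {event}")
    # A payload with embedded newlines becomes several "data:" lines.
    for part in data.split("\n"):
        lines.append(f"data: {part}")
    # A blank line terminates the event.
    return "\n".join(lines) + "\n\n"


def stream_events(records: Iterable[dict]) -> Iterator[str]:
    """Turn consumed records into SSE frames, one frame per record.

    The record shape is hypothetical: a dict with an 'offset' and a
    JSON-serializable 'value', standing in for whatever a real Kafka
    consumer would hand over.
    """
    for record in records:
        yield format_sse(
            json.dumps(record["value"]),
            event="message",
            event_id=str(record["offset"]),
        )
```

Because `stream_events` is a generator, a server can write each frame as soon as a record arrives instead of buffering the whole response, which is the property that lets one open connection replace repeated polling.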

James Watters, Pivotal | Kafka Summit NYC 2019 Keynote (Spring Boot+Kafka: Th...

James Watters, Pivotal | Kafka Summit NYC 2019 Keynote (Spring Boot+Kafka: Th...confluent This document discusses how Spring Boot and Kafka can form a new enterprise platform for continuous delivery. It provides examples of companies like Netflix transitioning to using Spring Boot as their core Java framework. The document advocates building applications around event-driven microservices using Spring Boot, Kafka streams, and a streaming data platform to enable arbitrary scaling, multi-cloud capabilities, and continuous delivery across the enterprise.

5 lessons learned for successful migration to Confluent cloud | Natan Silinit...

5 lessons learned for successful migration to Confluent cloud | Natan Silinit...HostedbyConfluent Confluent Cloud makes DevOps engineers' lives a lot easier.

Yet moving 1500 microservices, 10K topics and 100K partitions to a multi-cluster Confluent cloud can be a challenge.

In this talk you will hear about 5 lessons that Wix has learned in order to successfully meet this challenge.

These lessons include:

1. Automation, Automation, Automation - the whole process has to be completely automated at this scale

2. Prefer a gradual approach - e.g., migrate topics in small chunks rather than all at once, which reduces risk if things go wrong

3. Cleanup first - avoid migrating unused topics or topics with too many unnecessary partitions
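
Lessons 2 and 3 above (migrate in small chunks, clean up first) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Wix's tooling: the idle-days map, the 30-day threshold, and both function names are hypothetical.

```python
from typing import Dict, Iterator, List


def topics_to_migrate(idle_days: Dict[str, int],
                      max_idle_days: int = 30) -> List[str]:
    """Cleanup first: keep only topics used within max_idle_days.

    idle_days maps a topic name to the number of days since it was
    last used (a hypothetical metric for this sketch).
    """
    return sorted(t for t, idle in idle_days.items() if idle <= max_idle_days)


def batches(topics: List[str], size: int) -> Iterator[List[str]]:
    """Gradual approach: yield the topics in small chunks."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(topics), size):
        yield topics[start:start + size]
```

An automated pipeline could migrate one batch, verify it, and only then move to the next, so a failure affects at most `size` topics at a time.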

Hybrid Kafka, Taking Real-time Analytics to the Business (Cody Irwin, Google ...

Hybrid Kafka, Taking Real-time Analytics to the Business (Cody Irwin, Google ...HostedbyConfluent Apache Kafka users who want to leverage Google Cloud Platform's (GCP's) data analytics platform and open source hosting capabilities can bridge their existing Kafka infrastructure, on-premises or in other clouds, to GCP using Confluent's Replicator tool and managed Kafka service on GCP. Using actual customer examples and a reference architecture, we'll showcase how existing Kafka users can stream data to GCP and use it in popular tools like Apache Beam on Dataflow, BigQuery, Google Cloud Storage (GCS), Spark on Dataproc, and TensorFlow for data warehousing, data processing, data storage, and advanced analytics using AI and ML.

Event Streaming CTO Roundtable for Cloud-native Kafka Architectures

Event Streaming CTO Roundtable for Cloud-native Kafka ArchitecturesKai Wähner Technical thought leadership presentation to discuss how leading organizations move to real-time architecture to support business growth and enhance customer experience. This is a forum to discuss use cases with your peers to understand how other digital-native companies are utilizing data in motion to drive competitive advantage.

Agenda:

- Data in Motion with Event Streaming and Apache Kafka

- Streaming ETL Pipelines

- IT Modernisation and Hybrid Multi-Cloud

- Customer Experience and Customer 360

- IoT and Big Data Processing

- Machine Learning and Analytics

Build a Bridge to Cloud with Apache Kafka® for Data Analytics Cloud Services

Build a Bridge to Cloud with Apache Kafka® for Data Analytics Cloud Servicesconfluent Build a Bridge to Cloud with Apache Kafka® for Data Analytics Cloud Services, Perry Krol, Head of Systems Engineering, CEMEA, Confluent

https://www.meetup.com/Frankfurt-Apache-Kafka-Meetup-by-Confluent/events/269751169/

Kubernetes connectivity to Cloud Native Kafka | Evan Shortiss and Hugo Guerre...

Kubernetes connectivity to Cloud Native Kafka | Evan Shortiss and Hugo Guerre...HostedbyConfluent If you want to build an ecosystem of streaming data around your Kafka platform, your developers need a much easier way to quickly move data from the source to your cluster. Better yet, make the connector serverless so it does not waste resources while idle, and have a trusted partner manage your Kafka infrastructure for you. In this session, we will show how easy we have made streaming data, with a great user experience and flexible resource management through our new secret weapon in the Apache Camel project: Kamelet. We’ll also demonstrate how Red Hat OpenShift Streams for Apache Kafka simplifies provisioning Kafka deployments in a public cloud, managing the cluster and topics, and configuring secure access to the Kafka cluster for your developers.

Introduction to Apache Kafka and Confluent... and why they matter!

Introduction to Apache Kafka and Confluent... and why they matter!Paolo Castagna This is a short introduction to Apache Kafka and Confluent (the company founded by the creator of Kafka). The slides cover Apache Kafka APIs including Kafka Connect and Kafka Streams (part of Apache Kafka). Other open source, ASL licensed, projects are mentioned: #KSQL, Schema Registry, REST Proxy, etc.

Many thanks to Codemotion and Seacom for hosting the event.

Demystifying Event-Driven Architectures with Apache Kafka | Bogdan Sucaciu, P...

Demystifying Event-Driven Architectures with Apache Kafka | Bogdan Sucaciu, P...HostedbyConfluent Event-Driven Architectures (EDA) are perceived as mythical objects that instantly transform your systems into "real-time" ones! BUT, come to think of it, aren't they already "real-time"? I mean, adding an item to the cart is pretty much instant in (most) webshops.

In fact, EDA solves an entirely different set of problems and with the help of Apache Kafka, we will walk through the (re)evolution path. Microservices are easy to get started with, but once we do, we keep stumbling across the same issues: data access, consistency, and failures (sounds familiar?).

The solution? Patterns, patterns, patterns … You’ve probably heard about terms such as “Event Notification”, “Event-carried State Transfer”, or even “Event Sourcing”, but how can they be used to solve our problems? And more importantly, how can we use Apache Kafka to take advantage of these patterns?

I guess we will find out soon!

Data Transformations on Ops Metrics using Kafka Streams (Srividhya Ramachandr...

Data Transformations on Ops Metrics using Kafka Streams (Srividhya Ramachandr...confluent This document discusses using Kafka Streams to transform operational metrics data from Priceline applications before loading it into Splunk. It describes how the legacy monitoring system worked, and the motives for moving to Kafka and Kafka Streams. It then explains how data is collected from applications into Kafka topics, and how various transformations like formatting, key application, and aggregation are performed using Kafka Streams before loading to Splunk. It also discusses testing, monitoring, and debugging Kafka Streams applications.

Bank of China (HK) Tech Talk 1: Dive Into Apache Kafka

Bank of China (HK) Tech Talk 1: Dive Into Apache Kafkaconfluent Watch the webcast here: https://videos.confluent.io/watch/WwmbzUR7bpakR9NLwRhEAX?

Speaker: Kenneth Cheung

Similar to Bank of China Tech Talk 2: Introduction to Streaming Data and Stream Processing with Apache Kafka (20)

Au delà des brokers, un tour de l’environnement Kafka | Florent Ramière

Au delà des brokers, un tour de l’environnement Kafka | Florent Ramièreconfluent During the Confluent Streaming event in Paris, Florent Ramière, Technical Account Manager at Confluent, goes beyond brokers, introducing a whole new ecosystem with Kafka Streams, KSQL, Kafka Connect, Rest proxy, Schema Registry, MirrorMaker, etc.

Beyond the brokers - A tour of the Kafka ecosystem

Beyond the brokers - A tour of the Kafka ecosystemDamien Gasparina Beyond the brokers - A tour of the Kafka ecosystem. Presentation given on 28/03/2019 (Lyon JUG: https://www.meetup.com/Lyon-Java-User-Group-LyonJUG/events/259569434/)

Beyond the Brokers: A Tour of the Kafka Ecosystem

Beyond the Brokers: A Tour of the Kafka Ecosystemconfluent This document provides an overview of the Kafka ecosystem. It discusses how Kafka can be used to ingest, process, and analyze massive amounts of structured and unstructured data from various sources in real-time. It describes how Kafka Connect can be used to easily integrate Kafka with other data sources and sinks, how clients in various languages can communicate with Kafka, and how stream processing tools like Kafka Streams and KSQL can be used to analyze streaming data using simple SQL-like queries. It also discusses how the Kafka ecosystem addresses challenges like schema management, deployment on Kubernetes, and more.

IoT Sensor Analytics with Python, Jupyter, TensorFlow, Keras, Apache Kafka, K...

IoT Sensor Analytics with Python, Jupyter, TensorFlow, Keras, Apache Kafka, K...Kai Wähner IoT Sensor Analytics with Apache Kafka, KSQL, TensorFlow and MQTT => Kafka-Native End-to-End IoT Data Integration and Processing.

Large numbers of IoT devices lead to big data and the need for further processing and analysis. Apache Kafka is a highly scalable and distributed open source streaming platform, which can connect to MQTT and other IoT standards. Kafka ingests, stores, processes and forwards high volumes of data from thousands of IoT devices.

The rapidly expanding world of stream processing can be daunting, with new concepts such as various types of time semantics, windowed aggregates, changelogs, and programming frameworks to master. KSQL is the streaming SQL engine on top of Apache Kafka which simplifies all this and makes stream processing available to everyone without the need to write source code.

This talk shows how to leverage Kafka and KSQL in an IoT sensor analytics scenario for predictive maintenance and integration with real time monitoring systems. A live demo shows how to embed and deploy Machine Learning models - built with frameworks like TensorFlow, DeepLearning4J or H2O - into mission-critical and scalable real time applications.

Beyond the brokers - Un tour de l'écosystème Kafka

Beyond the brokers - Un tour de l'écosystème KafkaFlorent Ramiere Apache Kafka is more than just brokers; a whole open-source ecosystem revolves around it. This talk introduces the main components, such as Kafka Streams, KSQL, Kafka Connect, REST Proxy, Schema Registry, MirrorMaker, etc.

MongoDB .local Chicago 2019: MongoDB Atlas Jumpstart

MongoDB .local Chicago 2019: MongoDB Atlas JumpstartMongoDB Join this talk and test session with MongoDB Support where you'll go over the configuration and deployment of an Atlas environment. Set up a service that you can take back in a production-ready state and prepare to unleash your inner genius.

MQTT. Kafka. InfluxDB. SQL. IoT Harmony. #tutorial by Stefan Bocutiu

MQTT. Kafka. InfluxDB. SQL. IoT Harmony. #tutorial by Stefan Bocutiulandoop Build an end-to-end Kafka-based pipeline in minutes!

Enhanced Data pipelines with no code.

An Apache Kafka tutorial with Lenses by Stefan Bocutiu.

What's New in Confluent Platform 5.5

What's New in Confluent Platform 5.5confluent Watch this webcast here: https://www.confluent.io/online-talks/whats-new-in-confluent-platform-55/

Join the Confluent Product Marketing team as we provide an overview of Confluent Platform 5.5, which makes Apache Kafka and event streaming more broadly accessible to developers with enhancements to data compatibility, multi-language development, and ksqlDB.

Building an event-driven architecture with Apache Kafka allows you to transition from traditional silos and monolithic applications to modern microservices and event streaming applications. With these benefits has come an increased demand for Kafka developers from a wide range of industries. The Dice Tech Salary Report recently ranked Kafka as the highest-paid technological skill of 2019, a year removed from ranking it second.

With Confluent Platform 5.5, we are making it even simpler for developers to connect to Kafka and start building event streaming applications, regardless of their preferred programming languages or the underlying data formats used in their applications.

This session will cover the key features of this latest release, including:

- Support for Protobuf and JSON schemas in Confluent Schema Registry and throughout our entire platform

- Exactly once semantics for non-Java clients

- Admin functions in REST Proxy (preview)

- ksqlDB 0.7 and ksqlDB Flow View in Confluent Control Center

Budapest Data/ML - Building Modern Data Streaming Apps with NiFi, Flink and K...

Budapest Data/ML - Building Modern Data Streaming Apps with NiFi, Flink and K...Timothy Spann Budapest Data/ML - Building Modern Data Streaming Apps with NiFi, Flink and Kafka

Apache NiFi, Apache Flink, Apache Kafka

Timothy Spann

Principal Developer Advocate

Cloudera

Data in Motion

https://budapestdata.hu/2023/en/speakers/timothy-spann/

Timothy Spann

Principal Developer Advocate

Cloudera (US)

LinkedIn · GitHub · datainmotion.dev

June 8 · Online · English talk

Building Modern Data Streaming Apps with NiFi, Flink and Kafka

In my session, I will show you some best practices I have discovered over the last 7 years in building data streaming applications including IoT, CDC, Logs, and more.

In my modern approach, we utilize several open-source frameworks to maximize the best features of all. We often start with Apache NiFi as the orchestrator of streams flowing into Apache Kafka. From there we build streaming ETL with Apache Flink SQL. We will stream data into Apache Iceberg.

We use the best streaming tools for the current applications with FLaNK. flankstack.dev

BIO

Tim Spann is a Principal Developer Advocate in Data In Motion for Cloudera. He works with Apache NiFi, Apache Pulsar, Apache Kafka, Apache Flink, Flink SQL, Apache Pinot, Trino, Apache Iceberg, DeltaLake, Apache Spark, Big Data, IoT, Cloud, AI/DL, machine learning, and deep learning. Tim has over ten years of experience with the IoT, big data, distributed computing, messaging, streaming technologies, and Java programming.

Previously, he was a Developer Advocate at StreamNative, Principal DataFlow Field Engineer at Cloudera, a Senior Solutions Engineer at Hortonworks, a Senior Solutions Architect at AirisData, a Senior Field Engineer at Pivotal and a Team Leader at HPE. He blogs for DZone, where he is the Big Data Zone leader, and runs a popular meetup in Princeton & NYC on Big Data, Cloud, IoT, deep learning, streaming, NiFi, the blockchain, and Spark. Tim is a frequent speaker at conferences such as ApacheCon, DeveloperWeek, Pulsar Summit and many more. He holds a BS and MS in computer science.

G rpc talk with intel (3)

G rpc talk with intel (3)Intel Google and Intel speak on NFV and SFC service delivery

The slides are as presented at the meet up "Out of Box Network Developers" sponsored by Intel Networking Developer Zone

Here is the Agenda of the slides:

How DPDK, RDT and gRPC fit into SDI/SDN, NFV and OpenStack

Key Platform Requirements for SDI

SDI Platform Ingredients: DPDK, Intel® RDT

gRPC Service Framework

Intel® RDT and gRPC service framework

The Road Most Traveled: A Kafka Story | Heikki Nousiainen, Aiven

The Road Most Traveled: A Kafka Story | Heikki Nousiainen, AivenHostedbyConfluent When moving to a cloud native architecture, Moogsoft knew they needed more scale than Rabbit could provide. Moogsoft moved to Kafka, which is known for fast writes and for driving heavy event-driven workloads, on top of niceties such as replayability. Choosing the tool was easy; finding a vendor that ticked all their boxes was not. They needed to ensure scalability, upgradability, builds via existing IaC pipelines, and observability via existing tools. When Moogsoft found Aiven, they were impressed with its offering and ability to scale on demand. During this presentation we will explore how Moogsoft used Aiven for Kafka to manage and scale their data in the cloud.

Overview SQL Server 2019

Overview SQL Server 2019Juan Fabian The document outlines the roadmap for SQL Server, including enhancements to performance, security, availability, development tools, and big data capabilities. Key updates include improved intelligent query processing, confidential computing with secure enclaves, high availability options on Kubernetes, machine learning services, and tools in Azure Data Studio. The roadmap aims to make SQL Server the most secure, high performing, and intelligent data platform across on-premises, private cloud and public cloud environments.

Netflix Cloud Architecture and Open Source

Netflix Cloud Architecture and Open Sourceaspyker A presentation on the Netflix Cloud Architecture and NetflixOSS open source. For the All Things Open 2015 conference in Raleigh 2015/10/19. #ATO2015 #NetflixOSS

Music city data Hail Hydrate! from stream to lake

Music city data Hail Hydrate! from stream to lakeTimothy Spann Music city data Hail Hydrate! from stream to lake

with apache pulsar, apache flink, apache nifi, datalake, cloud, streaming data, IoT.

Cloud Native Application Development - build fast, cheap, scalable and agile ...

Cloud Native Application Development - build fast, cheap, scalable and agile ...Lucas Jellema The document discusses Oracle Cloud Native Application Development. It describes how to build fast, scalable software on Oracle Cloud Infrastructure using a cloud native approach. It provides an overview of various Oracle Cloud services that can be used for cloud native application development, including Functions, API Gateway, NoSQL Database, Streaming and Notifications. It then demonstrates a sample cloud native application that collects tweets, stores them in a NoSQL database and sends reports via email using various Oracle Cloud services.

Confluent Platform 5.5 + Apache Kafka 2.5 => New Features (JSON Schema, Proto...

Confluent Platform 5.5 + Apache Kafka 2.5 => New Features (JSON Schema, Proto...Kai Wähner Confluent Platform 5.5 introduces several new features to simplify event streaming development including adding Protobuf and JSON schema support throughout the platform, providing exactly-once semantics for non-Java clients, introducing administrative functions to the REST Proxy, expanding the functionality of ksqlDB with new aggregates and data types, and adding a ksqlDB flow view to Confluent Control Center for increased visibility of streaming applications. The release is also based on the latest Apache Kafka 2.5 version.

Zero to 1000+ Applications - Large Scale CD Adoption at Cisco with Spinnaker ...

Zero to 1000+ Applications - Large Scale CD Adoption at Cisco with Spinnaker ...DevOps.com As part of its Cloud-native transformation, Cisco needed to modernize its software delivery process. Scalability, multi-cloud deployment to its OpenShift environment and public clouds, and the ability to support Cisco’s extensive policy, compliance, and security requirements made open source Spinnaker a logical choice for a modern continuous delivery platform.

As one of the world’s top technology providers with one of the largest and most diverse software development organizations, Cisco had to overcome some unique challenges to be able to onboard 10,000+ developers, 1000+ monolithic and non-cloud native applications, and achieve the high availability and reliability needed to support mission-critical production applications.

Join us for this new webinar as Balaji Siva, VP of Products at OpsMx engages Anil Anaberumutt, IT architect at Cisco, and Red Hat Sr. Solutions Architect, Vikas Grover, in a discussion about Cisco’s CD challenges and the lessons learned, best practices implemented, and key results achieved on their CD transformation journey from zero to over 1000 applications.

Safer Commutes & Streaming Data | George Padavick, Ohio Department of Transpo...

Safer Commutes & Streaming Data | George Padavick, Ohio Department of Transpo...HostedbyConfluent The Ohio Department of Transportation has adopted Confluent as the event driven enabler of DriveOhio, a modern Intelligent Transportation System. DriveOhio digitally links sensors, cameras, speed monitoring equipment, and smart highway assets in real time, to dynamically adjust the surface road network to maximize the safety and efficiency for travelers. Over the past 24 months the team has increased the number and types of devices within the DriveOhio environment, while also working to see their vendors adopt Kafka to better participate in data sharing.

Confluent Platform 5.4 + Apache Kafka 2.4 Overview (RBAC, Tiered Storage, Mul...

Confluent Platform 5.4 + Apache Kafka 2.4 Overview (RBAC, Tiered Storage, Mul...Kai Wähner Introducing new features in Confluent Platform 5.4 and Apache Kafka 2.4...

CP 5.4 (based on AK 2.4)

Security:

Role-Based Access Control (RBAC)

Structured Audit Logs

Resilience:

Multi-Region Clusters (MRC)

Data Compatibility:

Server-side Schema Validation

Management & Monitoring:

Control Center enhancements

RBAC management

Replicator monitoring

Performance & Elasticity:

Tiered Storage (preview)

Stream Processing:

New ksqlDB features like Pull Queries and Kafka Connect Integration (preview)

DevOps Sydney- Building Better Containers with Habitat

DevOps Sydney- Building Better Containers with HabitatMatt Ray Presentation and demo of Habitat. The demos were clustered Redis and the National Parks Tomcat application.


More from confluent (20)

Webinar Think Right - Shift Left - 19-03-2025.pptx

Webinar Think Right - Shift Left - 19-03-2025.pptxconfluent Building reusable data products with Data Warehouse / Data Lake integration:

Think Right, Shift Left

Migration, backup and restore made easy using Kannika

Migration, backup and restore made easy using Kannikaconfluent In this presentation, you’ll discover how easily you can migrate data from any Kafka-compatible event hub to Confluent using Kannika’s intuitive self-service interface. We’ll guide you through the process, showing how the same approach can be applied to define specific event data sets and effortlessly spin up secure environments for demos, testing, or other purposes.

You’ll also learn how to back up event data in just a few steps by transferring compressed data to the cloud storage location of your choice. In addition, we’ll demonstrate how to restore filtered datasets of topics, ensuring quick recovery and maintaining business continuity when needed.

Five Things You Need to Know About Data Streaming in 2025

Five Things You Need to Know About Data Streaming in 2025confluent Topics that Peter covers:

Tapping into the Potential of Data Products: Data drives some of today's most important business use cases. Data products enable instant access to reliable and trustworthy data by eliminating the data mess created by point-to-point connections.

The Need to Tap into 'Quick Thinking': The C-level has to reorient itself so it doesn't become the bottleneck to adaptability in a data-driven world. Nine in 10 (90%) business leaders say they must now react in real-time. Learn what you can do to provide executive access to real-time data to enable 'Quick Thinking.'

Rise Above Data Hurdles: Discover how to enforce governance at data production. Reestablishing trustworthiness later is almost always harder, so investing in data tools that solve business problems rather than add to them is essential.

Paradigm to Shift Left: Shift Left is a new paradigm for processing and governing data at any scale, complexity, and latency. Shift Left moves the processing and governance of data closer to the source, enabling organisations to build their data once, build it right and reuse it anywhere within moments of its creation.

The Need for a Strategic View: The positive correlation between data streaming maturity and significant business returns underscores the importance of a long-term, strategic view of data streaming investments. It also highlights the value of advancing beyond initial, siloed use cases to a more integrated approach that leverages data streaming across the enterprise.

From Stream to Screen: Real-Time Data Streaming to Web Frontends with Conflue...

From Stream to Screen: Real-Time Data Streaming to Web Frontends with Conflue...confluent In this presentation, we’ll demonstrate how Confluent and Lightstreamer come together to tackle the last-mile challenge of extending your Kafka architecture to web and mobile platforms.

Learn how to effortlessly build real-time web applications within minutes, subscribing to Kafka topics directly from your web pages, with unmatched low latency and high scalability.

Explore how Confluent's leading Kafka platform and Lightstreamer's intelligent proxy work seamlessly to bridge Kafka with the internet frontier, delivering data in real-time.

Confluent per il settore FSI: Accelerare l'Innovazione con il Data Streaming...

Confluent per il settore FSI: Accelerare l'Innovazione con il Data Streaming...confluent Confluent for the FSI sector:

- What data streaming is and why your company needs it

- Who we are and how Confluent can help you:

- Making Kafka widely accessible

- Stream, Connect, Process, and Governance

- Deep dive into the technology solutions implemented within the Data Streaming Platform

- From theory to practice: real-world applications of FSI architectures

Data in Motion Tour 2024 Riyadh, Saudi Arabia

Data in Motion Tour 2024 Riyadh, Saudi Arabiaconfluent Data streaming platforms are becoming increasingly important in today’s fast-paced world. From retail giants who need to monitor inventory levels to ensure stores never run out of items, to new-age, innovative banks who are building out-of-the-box banking solutions for traditional retail banks, data streaming platforms are at the centre, powering these workflows.

Data streaming platforms connect all your applications, systems, and teams with a shared view of the most up-to-date, real-time data. From Gen AI, stream governance to stream processing - it’s these cutting edge developments that will be featured during the day.

Build a Real-Time Decision Support Application for Financial Market Traders w...

Build a Real-Time Decision Support Application for Financial Market Traders w...confluent Quix's intuitive visual programming interface and extensive library of pre-built components make it easy to build these applications without complex coding. Experience how this dynamic duo accelerates the development and deployment of your trading strategies, empowering you to make more informed decisions with real-time data!

Strumenti e Strategie di Stream Governance con Confluent Platform

Strumenti e Strategie di Stream Governance con Confluent Platformconfluent All your data continuously streamed, processed, governed and shared as a product, making it instantly valuable, usable, and trustworthy everywhere

Compose Gen-AI Apps With Real-Time Data - In Minutes, Not Weeks

Compose Gen-AI Apps With Real-Time Data - In Minutes, Not Weeksconfluent As businesses strive to stay at the forefront of innovation, the ability to quickly develop scalable Generative AI (GenAI) applications is essential. Join us for an exclusive webinar featuring MIA Platform, MongoDB, and Confluent, where you'll learn how to compose GenAI apps with real-time data integration in a fraction of the time.

Discover how these three powerful platforms work together to ensure applications remain responsive, relevant, and adaptive to user preferences and contextual changes. Our experts will guide you through leveraging MIA Platform's microservices architecture and low-code development, MongoDB's flexibility, and Confluent's stream processing capabilities. Experience live demonstrations and practical insights that will transform your approach to AI-driven app development, enabling you to accelerate your development process from weeks to mere minutes. Don't miss this opportunity to keep your business at the cutting edge.

Building Real-Time Gen AI Applications with SingleStore and Confluent

Building Real-Time Gen AI Applications with SingleStore and Confluentconfluent Discover how SingleStore and Confluent together create a powerful foundation for real-time generative AI applications. Learn how SingleStore's high-performance data platform and Confluent integrate to process and analyze streaming data in real-time. We'll explore real-world, innovative solutions and show you how SingleStore + Confluent can unlock new gen AI opportunities with your clients.

Unlocking value with event-driven architecture by Confluent

Unlocking value with event-driven architecture by Confluentconfluent Harness the power of real-time data streaming and event-driven microservices for the future of Sky with Confluent and Kafka®.

In this tech talk we will explore the potential of Confluent and Apache Kafka® to revolutionize enterprise architecture and unlock new business opportunities. We will dig into the key concepts, guiding you through building scalable, resilient, real-time applications for data streaming.

You will learn how to build event-driven microservices with Confluent, taking advantage of a modern, reactive architecture.

The talk will also present real-world use cases for Confluent and Kafka®, showing how these technologies can optimize business processes and generate tangible value.

Il Data Streaming per un’AI real-time di nuova generazione

Il Data Streaming per un’AI real-time di nuova generazioneconfluent Building reliable, secure, and governed AI applications requires an equally solid real-time data foundation, all the more so when handling large volumes of data in continuous motion.

How do you get there? Rely on a true data streaming platform that lets you scale and rapidly build real-time AI applications from trustworthy data.

Find out more! Don't miss our upcoming webinar, where we will:

• Explore the GenAI paradigm and how this new technology is reshaping the business landscape, meeting the need to provide real-time context and solutions that satisfy your company's requirements.

• Examine the uncertainties of the evolving AI landscape and the crucial importance of data streaming and data processing.

• Look in detail at the continuously evolving architecture and the key role of Kafka and Confluent in AI applications.

• Analyze the benefits of a data streaming platform such as Confluent in connecting legacy systems and GenAI, easing the development and use of predictive and generative AI.

Unleashing the Future: Building a Scalable and Up-to-Date GenAI Chatbot with ...

confluent: As businesses strive to remain at the cutting edge of innovation, the demand for scalable and up-to-date conversational AI solutions has become paramount. Generative AI (GenAI) chatbots that seamlessly integrate into our daily lives and adapt to the ever-evolving nuances of human interaction are crucial. Real-time data plays a pivotal role in ensuring the responsiveness and relevance of these chatbots, empowering them to stay abreast of the latest trends, user preferences, and contextual information.

Break data silos with real-time connectivity using Confluent Cloud Connectors

confluent: Connectors integrate Apache Kafka® with external data systems, enabling you to move away from a brittle spaghetti architecture to one that is more streamlined, secure, and future-proof. However, if your team still spends multiple dev cycles building and managing connectors using just open source Kafka Connect, it's time to consider a faster and more cost-effective alternative.

Building API data products on top of your real-time data infrastructure

confluent: This talk and live demonstration will examine how Confluent and Gravitee.io integrate to unlock value from streaming data through API products.

You will learn how data owners and API providers can document and secure data products on top of Confluent brokers, including schema validation, topic routing, and message filtering.

You will also see how data and API consumers can discover and subscribe to products in a developer portal, as well as how they can integrate with Confluent topics through protocols like REST, Websockets, Server-sent Events and Webhooks.

Whether you want to monetize your real-time data, enable new integrations with partners, or provide self-service access to topics through various protocols, this webinar is for you!

Evolving Data Governance for the Real-time Streaming and AI Era

confluent: Andrew Foo, Customer Solutions @ Confluent

Catch the Wave: SAP Event-Driven and Data Streaming for the Intelligence Ente...

confluent: In our exclusive webinar, you'll learn why event-driven architecture is the key to unlocking cost efficiency, operational effectiveness, and profitability. Gain insights on how this approach differs from API-driven methods and why it's essential for your organization's success.

Bank of China Tech Talk 2: Introduction to Streaming Data and Stream Processing with Apache Kafka

- 1. Tech Talk #2: Introduction to Streaming Data and Stream Processing with Apache Kafka. Kenneth Cheung, Sr. Solutions Engineer, Greater China, Kenneth@confluent.io