Hadoop Summit 2012 | Bayesian Counters AKA In Memory Data Mining for Large Data Sets

Processing of large data requires new approaches to data mining: low, close to linear, complexity and stream processing. While in traditional data mining the practitioner is usually presented with a static dataset, which might have just a timestamp attached to it, to infer a model for predicting future/holdout observations, in stream processing the problem is often posed as extracting as much information as possible from the current data to convert it into an actionable model within a limited time window. In this talk I present an approach based on HBase counters for mining over streams of data, which allows for massively distributed processing and data mining. I will consider overall design goals as well as HBase schema design dilemmas to speed up the knowledge extraction process. I will also demo efficient implementations of Naive Bayes, Nearest Neighbor and Bayesian Learning on top of Bayesian Counters.


- 1. Bayesian Counters aka In Memory Data Mining for Large DataSets Alex Kozlov, Ph.D., Principal Solutions Architect, Cloudera Inc. @alexvk2009 (Twitter) June 13th, 2012

- 3. My past (aka about me)

- 4. Agenda • Current trends (large data, real time, uncertainty) • What are Bayesian Counters • Naïve Bayes • NN • Clique ranking • Association Rules • Some performance results • Conclusions ©2012 Cloudera, Inc. All Rights Reserved.

- 5. A Distributed System: Centralized (SPoF, strict synchronization/locking, better resource management) vs. Distributed (availability, redundancy/fault tolerance, flexible, interactive)

- 7. State space explosion • Chess alpha-beta tree has $10^{45}$ nodes • We can solve only a $10^{18}$ state space • Go has $10^{360}$ nodes • Given Moore's law, we'll get there only by 2120. Can we help? Uncertainty rules the world! Or use distributed systems

- 8. More zeros • Most powerful computer (2019): $10^{24}$ ops/sec • Seconds in a year: $3 \times 10^{7}$ • Sun's expected life: $10^{7}$ years. We can probably be done with chess!

- 9. Time examples (figure: value vs. time; axis labels: Value, Precision after break-in) • Advertising: if you don't figure out what the user wants in 5 minutes, you've lost him • Intrusion detection: the damage may be significantly bigger after a few minutes • Missing/misconfigured pages http://cetas.net http://www.woopra.com http://www.wibidata.com/

- 10. What we’ve learned so far • There is a lot of data out there • The storage capacity of distributed systems today is overwhelming • We need to admit that some problems will never be solved • Time is a critical factor

- 11. Why (not) to Mine from HD? • L1 cache: 64 bits per CPU cycle ($10^{-9}$ sec), $10^{10}$ bytes per second, latency in ns • HD: $12 \times 100 \times 10^{6}$ bytes per second, latency in ms • Network: 10 GbE switches (depends on distance, topology) • East-West coast latency 20-40 ms (ms within a datacenter) • Move computation to the data, but ML wants all your data! • And sorted… • What if it does not fit in RAM? • Work on reasonable subsets

- 12. Push computations to the source • Collect relevant information at the source (pairwise correlations, which can be computed in parallel using HBase) • Compare: computations to data = MapReduce; data to computations = map-side join

- 13. Bayesian Counters • [A=a1;B=b1] -> 5 • [A=a1;B=b2] -> 15 • [A=a2;B=b1] -> 3 • … $\Pr(A \mid B) = \Pr(AB)/\Pr(B) = \text{Count}(AB)/\text{Count}(B)$
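A minimal in-memory sketch of the lookup on this slide, assuming a plain Python dict stands in for the HBase counter table and that the table holds only pairwise [A=…;B=…] counters, so Count(B) is obtained by summing over the values of A. The helper names `parse_key`, `count` and `conditional` are hypothetical.

```python
def parse_key(key):
    """Turn a counter key such as '[A=a1;B=b1]' into {'A': 'a1', 'B': 'b1'}."""
    return dict(item.split("=", 1) for item in key.strip("[]").split(";"))


def count(counters, **assignment):
    """Sum every counter whose key agrees with the given var=value assignment.

    Assumes the table holds only pairwise counters, so count(counters, B="b1")
    marginalizes A out instead of reading a separate [B=b1] counter."""
    total = 0
    for key, value in counters.items():
        parsed = parse_key(key)
        if all(parsed.get(var) == val for var, val in assignment.items()):
            total += value
    return total


def conditional(counters, target, given):
    """Pr(target | given) = Count(target, given) / Count(given)."""
    denominator = count(counters, **given)
    if denominator == 0:
        return 0.0
    return count(counters, **target, **given) / denominator


# The three counters shown on the slide (the "…" entries are omitted).
counters = {"[A=a1;B=b1]": 5, "[A=a1;B=b2]": 15, "[A=a2;B=b1]": 3}
print(conditional(counters, {"A": "a1"}, {"B": "b1"}))  # 5 / (5 + 3) = 0.625
```

Storing single-variable counters such as [B=b1] alongside the pairs would avoid the summation at query time, at the cost of one more increment per variable per record.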

- 14. Time: What if we want to access more recent data more often? • Key: subset of variables with their values + timestamp (variable length) • Value: count (8 bytes) • Column families are different HFiles (30 min, 2 hours, 24 hours, 5 days, etc.) • Example query: Pr(A|B, last 20 minutes) (figure: an index of Key 1 … Key 4, each with its Value)
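The time-windowed query Pr(A|B, last 20 minutes) can be sketched as follows. This is a simplification, not the slide's layout: one fixed bucket width stands in for the per-window column families, a dict stands in for HBase, and the class `TimeBucketedCounters` is hypothetical. Because whole buckets are summed, the window is only accurate to one bucket width.

```python
from collections import defaultdict


class TimeBucketedCounters:
    """Counters keyed by (counter key, bucket start timestamp in seconds).

    Hypothetical sketch: one fixed bucket width stands in for the per-window
    column families (30 min, 2 hours, 24 hours, ...) described on the slide."""

    def __init__(self, bucket_seconds=60):
        self.bucket_seconds = bucket_seconds
        self.counts = defaultdict(int)

    def increment(self, key, timestamp, amount=1):
        bucket = timestamp - timestamp % self.bucket_seconds
        self.counts[(key, bucket)] += amount

    def count(self, key, now, window_seconds):
        """Sum the buckets of `key` that overlap the window (now - window_seconds, now]."""
        start = now - window_seconds
        return sum(
            value
            for (k, bucket), value in self.counts.items()
            if k == key and bucket + self.bucket_seconds > start and bucket <= now
        )

    def conditional(self, key_ab, key_b, now, window_seconds):
        """Pr(A | B, last window_seconds) = Count(AB) / Count(B) within the window."""
        denominator = self.count(key_b, now, window_seconds)
        if denominator == 0:
            return 0.0
        return self.count(key_ab, now, window_seconds) / denominator


tc = TimeBucketedCounters(bucket_seconds=60)
for t in (10, 700, 1150):
    tc.increment("[B=b1]", t)
for t in (700, 1150):
    tc.increment("[A=a1;B=b1]", t)
# Pr(A=a1 | B=b1, last 20 minutes) at now=1200: 2 of the 3 B=b1 events also have A=a1
print(tc.conditional("[A=a1;B=b1]", "[B=b1]", now=1200, window_seconds=20 * 60))
```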

- 15. Anatomy of a counter (figure labels: Region (divide between); Counter/Table, e.g. Iris, Cars …; File: Column family, e.g. 30 mins, 2 hours; Column qualifier, e.g. [sepal_width=2;class=0]; Version, e.g. 1321038671, 1321038998; Value (data), e.g. 15)

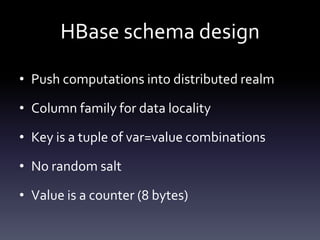

- 17. HBase schema design • Push computations into distributed realm • Column family for data locality • Key is a tuple of var=value combinations • No random salt • Value is a counter (8 bytes)
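A sketch of how one record could be expanded into the var=value tuple keys this schema describes. The sorted ordering of pairs and the `max_order` cut-off are assumptions (the slides show `[sepal_width=2;class=0]`, so the original ordering may differ), and `counter_keys` is a hypothetical helper. In a deployment each key would become one 8-byte counter increment in HBase; here the keys are only printed.

```python
from itertools import combinations


def counter_keys(record, max_order=2):
    """Yield one ASCII counter key per subset of the record's var=value pairs.

    Pairs are sorted so that each subset always maps to the same row key; this
    ordering is an assumption, not something the slides specify."""
    pairs = sorted(f"{var}={val}" for var, val in record.items())
    for order in range(1, max_order + 1):
        for subset in combinations(pairs, order):
            yield "[" + ";".join(subset) + "]"


record = {"sepal_width": 2, "class": 0}
for key in counter_keys(record):
    print(key)  # each key would receive one counter increment
```

With `max_order=2`, a record with N variables touches N + N(N-1)/2 counters, which matches the pairwise ($N^2$) cost quoted for Naïve Bayes.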

- 18. Implementations • Naïve Bayes • Nearest Neighbor • Association rules • Clique ranking

- 19. Naïve Bayes • $\Pr(C \mid F_1, F_2, \ldots, F_N) = \frac{1}{z} \Pr(C) \prod_i \Pr(F_i \mid C)$ • Requires only pairwise counters (complexity $N^2$) • *Linear if we fix the target node
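The formula above needs only [C=c] and [C=c;F=f] counters. A minimal sketch, assuming a dict of string-keyed counters with the target variable first in each pairwise key; the key layout, the function names, and the toy counts below are all hypothetical. No smoothing is applied, so an unseen (feature, class) pair zeroes that class; adding Laplace smoothing would be the usual fix.

```python
def key(*pairs):
    """Hypothetical key layout: key(("class", "0"), ("color", "red")) -> '[class=0;color=red]'."""
    return "[" + ";".join(f"{var}={val}" for var, val in pairs) + "]"


def naive_bayes_posterior(counters, target, classes, evidence):
    """Pr(C | F1..FN) = 1/z * Pr(C) * prod_i Pr(F_i | C).

    Uses only single-variable counters [C=c] and pairwise counters [C=c;F=f],
    with the target variable always first in the key (an assumed convention)."""
    total = sum(counters.get(key((target, c)), 0) for c in classes)
    scores = {}
    for c in classes:
        n_c = counters.get(key((target, c)), 0)
        score = n_c / total if total else 0.0  # Pr(C=c)
        for var, val in evidence.items():
            n_fc = counters.get(key((target, c), (var, val)), 0)
            score *= n_fc / n_c if n_c else 0.0  # Pr(F=f | C=c)
        scores[c] = score
    z = sum(scores.values())  # normalizing constant z
    return {c: s / z for c, s in scores.items()} if z else scores


# Toy counters, made up for illustration.
counters = {
    "[class=0]": 10, "[class=1]": 30,
    "[class=0;color=red]": 8, "[class=1;color=red]": 6,
    "[class=0;size=big]": 2, "[class=1;size=big]": 15,
}
posterior = naive_bayes_posterior(counters, "class", ["0", "1"], {"color": "red", "size": "big"})
print(posterior)
```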

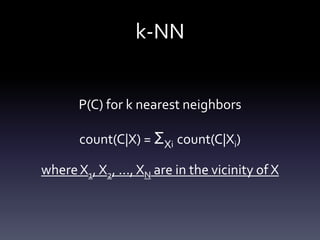

- 20. k-NN • P(C) for the k nearest neighbors: $\text{count}(C \mid X) = \sum_{X_i} \text{count}(C \mid X_i)$, where $X_1, X_2, \ldots, X_N$ are in the vicinity of $X$
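A minimal sketch of the k-NN sum, assuming a single numeric predictor whose per-value class counters count(C | X_i) are already available (a plain dict here rather than HBase), and taking "vicinity" to mean the k stored values closest to X. The function name and the counts are hypothetical.

```python
def knn_class_distribution(counts_by_value, x, k):
    """counts_by_value maps a feature value X_i to {class: count(C | X_i)}.

    Sum the class counters over the k stored values nearest to x, then
    normalize the sums into P(C)."""
    neighbors = sorted(counts_by_value, key=lambda xi: abs(xi - x))[:k]
    totals = {}
    for xi in neighbors:
        for c, n in counts_by_value[xi].items():
            totals[c] = totals.get(c, 0) + n
    z = sum(totals.values())
    return {c: n / z for c, n in totals.items()} if z else totals


counts_by_value = {4.9: {"0": 6, "1": 1}, 5.0: {"0": 4}, 6.3: {"1": 9, "2": 3}, 7.1: {"2": 8}}
print(knn_class_distribution(counts_by_value, 5.05, k=2))  # only 5.0 and 4.9 contribute
```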

- 21. Clique ranking • What is the best structure of a Bayesian Network? • $I(X;Y) = \sum_{x \in X} \sum_{y \in Y} p(x,y) \log \frac{p(x,y)}{p(x)\,p(y)}$ • Using random projections, this can be generalized to an abstract subset Z
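The mutual information above can be estimated directly from pairwise counters, with the marginals p(x) and p(y) recovered by summing the same counters. A sketch under that assumption, using natural logs (nats); the random-projection generalization to a subset Z is not shown, and the function name is hypothetical.

```python
import math


def mutual_information(joint_counts):
    """I(X;Y) = sum_x sum_y p(x,y) * log(p(x,y) / (p(x) p(y))), in nats.

    joint_counts maps (x, y) value pairs to pairwise counter values; the
    marginals p(x) and p(y) are obtained by summing those same counters."""
    total = sum(joint_counts.values())
    px, py = {}, {}
    for (x, y), n in joint_counts.items():
        px[x] = px.get(x, 0) + n
        py[y] = py.get(y, 0) + n
    mi = 0.0
    for (x, y), n in joint_counts.items():
        if n:  # zero counts contribute nothing (0 * log 0 is taken as 0)
            pxy = n / total
            mi += pxy * math.log(pxy / ((px[x] / total) * (py[y] / total)))
    return mi


independent = {(0, 0): 25, (0, 1): 25, (1, 0): 25, (1, 1): 25}
copied = {(0, 0): 50, (1, 1): 50}
print(mutual_information(independent))  # 0.0
print(mutual_information(copied))       # log(2), about 0.693
```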

- 22. Assoc • Confidence (A -> B): count(A and B)/count(A) • Lift (A -> B): N × count(A and B)/[count(A) × count(B)], where N is the total number of transactions • Usually filtered on support: count(A and B) • Frequent itemset search
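A sketch of rule scoring from itemset counters, assuming a dict that maps frozenset itemsets of size 1 and 2 to transaction counts. Lift here includes the total transaction count N so that it equals 1.0 when A and B are independent; without N it differs only by that constant factor. The items, counts, and function name are hypothetical.

```python
def rules_from_counters(counters, n_transactions, min_support):
    """Yield (lhs, rhs, support, confidence, lift) for every 2-itemset with enough support.

    counters maps frozenset itemsets of size 1 and 2 to transaction counts."""
    for itemset, count_ab in counters.items():
        if len(itemset) != 2 or count_ab < min_support:
            continue
        a, b = sorted(itemset)
        for lhs, rhs in ((a, b), (b, a)):
            count_lhs = counters[frozenset([lhs])]
            count_rhs = counters[frozenset([rhs])]
            confidence = count_ab / count_lhs
            lift = n_transactions * count_ab / (count_lhs * count_rhs)  # 1.0 under independence
            yield lhs, rhs, count_ab, confidence, lift


counters = {
    frozenset(["bread"]): 40, frozenset(["milk"]): 50, frozenset(["jam"]): 5,
    frozenset(["bread", "milk"]): 30, frozenset(["bread", "jam"]): 1,
}
for rule in rules_from_counters(counters, n_transactions=100, min_support=2):
    print(rule)
```

The {bread, jam} pair is dropped by the support filter before any confidence or lift is computed.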

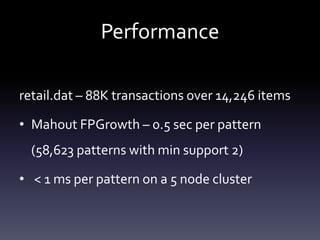

- 23. Performance retail.dat – 88K transactions over 14,246 items • Mahout FPGrowth – 0.5 sec per pattern (58,623 patterns with min support 2) • < 1 ms per pattern on a 5 node cluster

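- 24. FPGrowth performance

  | Row | Min support | Rules | Time (ms) |
  |----:|------------:|------:|----------:|
  | 1 | 1 | 69,309 | 25,659,052 |
  | 2 | 2 | 58,623 | 23,103,547 |
  | 3 | 4 | 48,270 | 20,782,325 |
  | 4 | 8 | 38,661 | 17,643,592 |
  | 5 | 16 | 28,988 | 13,994,334 |
  | 6 | 32 | 19,939 | 9,714,935 |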
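Dividing the time column by the rule count from slide 24 gives the per-pattern cost. The arithmetic shows roughly 370 ms per rule at min support 1 rising to about 487 ms at min support 32, consistent with the speaker's note that the time per pattern increases with min support.

```python
# (min support, rules, time in ms) copied from the slide 24 FPGrowth table
fpgrowth = [
    (1, 69_309, 25_659_052),
    (2, 58_623, 23_103_547),
    (4, 48_270, 20_782_325),
    (8, 38_661, 17_643_592),
    (16, 28_988, 13_994_334),
    (32, 19_939, 9_714_935),
]

ms_per_rule = [(support, time_ms / rules) for support, rules, time_ms in fpgrowth]
for support, ms in ms_per_rule:
    print(f"min support {support:>2}: {ms:5.1f} ms per rule")
```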

- 26. Time: `nb iris class=2 sepal_length=5;petal_length=1.4 300` (target variable: class=2; predictors: sepal_length=5;petal_length=1.4; time: 300 seconds from now)

- 27. Conclusions • Storing n-wise counts is a powerful data analysis paradigm • We can implement a number of powerful algorithms on top of counters • A system that will know more about the world than you would ever dare to admit

- 28. Thank you!

- 29. Questions? freenode: #cloudera / #hadoop http://www.cloudera.com Do not hesitate to email alexvk@{gmail,cloudera}.com

Editor's Notes

- #2: Not about HDFS and/or Hadoop/HBase. Not about DB design (we can store PB of data). I go to a lot of customers and “recommend” things. Sometimes they listen. If not, they come back. Most “data scientists” say that only simple/linear algorithms work on large data. How to go beyond a simple “grep” or unique count? My approach to data mining and knowledge discovery (a.k.a. data science). Common problems: not enough memory, state space explosion, exponential running times. How to solve them?

- #3: Josh Wills's definition of a data scientist, plus: I am better at physics than any data scientist (besides maybe Kevin Weil from Twitter)

- #5: I heard talks about data locality, task workload distribution, and reliability in 1998-1999. Almost everyone thought that distributed computation on commodity workstations was not a great idea. MapReduce was born in 2002-2004. Hadoop held a world record for sorting 1 TB of 100-byte records (just under a minute); the same can be done on a ~50-node cluster today, and 1 PB in close to 30 minutes. I will talk about some tendencies that I see in the data analysis area. Outline: About Cloudera and CDH; About distributed systems; Why keep the dataset in memory; Current trends; What are Bayesian Counters; Naïve Bayes; NN; Bayesian Networks; Association rules; Conclusions

- #6: Interest in Hadoop is surging… Hadoop is: ‘A scalable fault-tolerant distributed system for data storage and processing’. Hadoop history:
  - 2002-2004: Doug Cutting and Mike Cafarella started working on Nutch
  - 2003-2004: Google publishes GFS and MapReduce papers
  - 2004: Cutting adds DFS & MapReduce support to Nutch
  - 2006: Yahoo! hires Cutting, Hadoop spins out of Nutch
  - 2007: NY Times converts 4 TB of archives on 100 EC2 instances
  - 2008: Web-scale deployments at Y!, Facebook, Last.fm
  - April 2008: Yahoo does fastest sort of a TB, 3.5 mins over 910 nodes
  - May 2009: Yahoo does fastest sort of a TB, 62 secs over 1,460 nodes; Yahoo sorts a PB in 16.25 hours over 3,658 nodes
  - June 2009, Oct 2009: Hadoop Summit, Hadoop World
  - September 2009: Doug Cutting joins Cloudera
  - September 2011: sort 1 PB in 32 minutes on 8,000 nodes

  Cloudera helps other companies embrace the technology.

- #7: Centralized systems have more global barriers (as a rule). Distributed systems are less resource efficient (unless one recomputes certain things over and over). Democracy vs. dictatorship. Everything does look simple in a centralized system.

- #8: If you’ve been at Cloudera long enough, you remember the April 1st, 2010 blog post http://www.cloudera.com/blog/2010/04/pushing-the-limits-of-distributed-processing/ written by Omer. Apple Q2: 35.1 million iPhones, 11.8 million iPads. 30 million iPads x 32 GB ≈ 10^18 bytes (an exabyte). RFID collects a bunch of information; remote devices will collect more. Moreover, they are stateful devices (another way to say smart).

- #9: What do you do when the data is collected (beyond ETL)? You expand it. You can pre-create some of the combinations in a distributed way. A few algorithms run in linear time, but they are not really interesting. Random projections do work, but they are just an artifact of poor problem formulation in the first place. Admit that certain problems are not solvable (by brute force). Learn to live with uncertainty. Why not build heuristics? Turkey paradox: one wrong move can lead to a disastrous outcome (Jolly Chen). Using an IBM machine with 2,880 cores at 4.25 GHz and 16 terabytes of RAM, running for about 10,750,000 single-core/CPU-hours, they solved the King's Gambit (a classical chess opening); this claim was published on April 1, 2012, and later revealed to be an April Fools' joke. Where is the limit? We cannot solve the Schrödinger equation for the whole universe (we will not be able to store the state).

- #10: Some of the problems will never be solved. Go: $10^{360}$ nodes in a game tree. 2,598,960 possible hands in poker (but it is a more complex game, as it involves dealing with incomplete information and emotions, as does bridge). It can also be noted that since there are about 31 million seconds in a year, it would take about 2¼ years, playing 16 hours a day at one move per second, to play 47 million moves. As to $10^{48}$, since the future age of the universe is projected to be less than 1000 trillion years[10] and no computer is projected to compute anything close to a trillion teraflops (one yottaflop), any number higher than $10^{39}$ is beyond possibility of being played.
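A quick arithmetic check of the figures in this note: the poker count is the number of 5-card hands from a 52-card deck, $\binom{52}{5}$, and the move count follows from 16 hours a day at one move per second for 2¼ years (using a 365-day year, an assumption).

```python
import math

five_card_hands = math.comb(52, 5)        # distinct 5-card poker hands
moves_per_year = 16 * 60 * 60 * 365       # 16 hours a day, one move per second
moves_in_two_and_a_quarter_years = 2.25 * moves_per_year
yottaflop = 10**12 * 10**12               # a trillion teraflops, in operations per second

print(five_card_hands)                    # 2598960
print(moves_in_two_and_a_quarter_years)   # 47304000.0
```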

- #11: At least some of the problems will not be solved in time. If we had all the time (the future age of the universe is projected to be less than 1000 trillion years), we could (probably) get the exact answer. Some analytical companies: http://cetas.net/ (acquired by VMware), http://www.woopra.com (analyzes traffic to a website in real time), http://www.wibidata.com/ (our friends)

- #12: Data mining likes to have every bit of information in one place. This is neither necessary nor required for probabilistic computations. And more and more computations are about uncertainty and risk management. Should be ~15 minutes

- #13: Disk moves at 50 m/s vs. 300,000,000 m/s. It is much easier for me to grab a remote from a table than to go to LA and back with a remote. RAM is faster than disks (RAM: ns, disk: ms). There are 1,832,160 feet in 347 miles. Combining storage or processing capabilities across a distributed system of machines is non-trivial. Can we do at least 1,000 feet (300 m)? Network? There is no “virtual memory”. HBase (a sparse, column-family-oriented map DB) with enhanced consistency guarantees
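The note's distance figure checks out, and it lines up with the RAM/disk gap if, as an assumption about the analogy, a one-foot reach stands for a RAM access (ns) and the 347-mile trip stands for a disk access (ms).

```python
FEET_PER_MILE = 5280
NS_PER_MS = 10**6

trip_feet = 347 * FEET_PER_MILE     # the note's 347 miles expressed in feet
print(trip_feet)                    # 1832160
# A disk access (ms) is 10**6 RAM accesses (ns); the trip is about 1.8 million one-foot reaches.
print(trip_feet / NS_PER_MS)        # about 1.8
```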

- #14: There was: push computation to the data (MapReduce); push data to the computations (map-side join). We need to push something to where it can be done in a distributed fashion. What we did with storage needs to be done with statistical computations. If you carry one thing out of my talk, I want it to be this: push computations to the source

- #15: Pre-compute pieces at the source of data. We will show that we can do Naïve Bayes, Assoc, NN. And potentially push new reqs to the source

- #16: More recent column families are accessed more often. Versioning can be used for that, but we didn’t go this path. Column family gives you data locality (more recent data are accessed more frequently)

- #17: Value is just 8 bytes -> sweet case for HBase

- #18: No salt (or random key). Column families, keys and column names are just ASCII for now

- #19: Data mining likes to have every bit of information in one place. This is neither necessary nor required for probabilistic computations. And more and more computations are about uncertainty and risk management. Should be ~15 minutes

- #20: Should be around 20 minutes

- #21: Assumes conditional independence of predictors given the target. Can be computed entirely from pairwise counts. Remember that each key starts with a prefix var=val; there are only N such prefixes for each record!

- #22: Need full cardinality of the counters

- #23: A generic measure of mutual information between two subsets of nodes. For independent random variables this is 0. After this, BN learning is just a minimum spanning tree problem
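Once pairwise mutual information is available, the tree step can be sketched with Kruskal's algorithm. The note says "min span tree"; the standard Chow–Liu construction keeps the maximum-weight spanning tree over mutual information, which is the same as a minimum spanning tree over the negated weights. Whether the talk used exactly this construction is an assumption, and the edge weights below are made up.

```python
def max_spanning_tree(nodes, mi):
    """Kruskal's algorithm over mutual-information edge weights.

    mi maps frozenset({u, v}) to I(u; v). Edges are taken heaviest first, which is
    the same as a minimum spanning tree over the negated weights."""
    parent = {n: n for n in nodes}

    def find(n):
        # union-find root lookup with path halving
        while parent[n] != n:
            parent[n] = parent[parent[n]]
            n = parent[n]
        return n

    tree = []
    for edge, _weight in sorted(mi.items(), key=lambda item: item[1], reverse=True):
        u, v = sorted(edge)
        root_u, root_v = find(u), find(v)
        if root_u != root_v:  # skip edges that would close a cycle
            parent[root_u] = root_v
            tree.append((u, v))
    return tree


mi = {
    frozenset("AB"): 0.9, frozenset("BC"): 0.8, frozenset("CD"): 0.5,
    frozenset("BD"): 0.2, frozenset("AC"): 0.1, frozenset("AD"): 0.05,
}
print(max_spanning_tree("ABCD", mi))  # [('A', 'B'), ('B', 'C'), ('C', 'D')]
```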

- #24: Association rule mining is an itemset generation problem (which can be done by dynamically adjusting the types of counts we collect). A frequent measure of importance is support

- #26: The number of rules grows with decreasing support. Even for a min support of one, it is not disastrous

- #27: This is DataDesk (just to show that Microsoft is not my only tool). To convert exponential things to linear, just take a log of the X axis. Linear deals with additions, exponential with multiplication (or addition of the logs). The actual time per pattern increases with min support!

- #28: The amount of time per itemset increases

- #29: Transparently trade recency vs. statistical error (time can be replaced with a minimum number of trials or counts)
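One way to read "trade recency vs. statistical error" is to answer from the most recent time window that still has enough observations. A minimal sketch under that assumption; the function name, the threshold rule, and the counts are hypothetical, and the windows mirror the 30 min, 2 hour, 24 hour and 5 day buckets mentioned earlier.

```python
def pick_window(counts_by_window, min_count):
    """Return the most recent (shortest) window whose count reaches min_count.

    counts_by_window is a list of (window_seconds, count) pairs for one counter key.
    Falls back to the longest window if none has enough observations."""
    for window, count in sorted(counts_by_window):
        if count >= min_count:
            return window, count
    return max(counts_by_window)


# Hypothetical counts for one key in 30 min, 2 h, 24 h and 5 day windows.
windows = [(1800, 12), (7200, 55), (86400, 400), (432000, 2100)]
print(pick_window(windows, min_count=50))    # (7200, 55)
print(pick_window(windows, min_count=5000))  # (432000, 2100)
```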

- #30: Push computations to the source. Convert an exponential problem to a linear one. The problem is linear in the number of observations; the non-linear part has been moved out to the source. In plans: dynamic adjustment of counters (depth, time buckets) to collect. What we accomplished is to make an exponential problem linear by distributing the compute-intensive part, a.k.a. MapReduce for data mining (+ we get time dependence for free). The code will be in the public domain (still working with the contractor of this work)

- #31: Anyone to work on this?

- #33: I hope that I got you interested... If you want to contribute, let me know. Cloudera is hiring