Big Data and Hadoop Ecosystem

This deck introduces big data and Hadoop as ways to handle the increasing volume, variety, and velocity of data. Hadoop evolved as a cost-effective way to process large amounts of unstructured and semi-structured data across distributed systems built from commodity hardware. It provides scalable, parallel processing through MapReduce, while HDFS, its distributed file system, stores data across the cluster and provides redundancy and failover. Key Hadoop projects include HDFS, MapReduce, HBase, Hive, Pig, and ZooKeeper.
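As a rough illustration of how a distributed file system such as HDFS can spread a file across a cluster with redundancy, here is a minimal Python sketch. The function names (`place_blocks`, `readable_after_failure`) and the round-robin placement are assumptions made for illustration only; real HDFS uses a rack-aware placement policy coordinated by the NameNode. The 128 MB block size and replication factor of 3 mirror commonly cited Hadoop defaults.

```python
def place_blocks(file_size_mb, block_size_mb, nodes, replication=3):
    """Split a file into fixed-size blocks and assign each block to
    `replication` distinct nodes.

    Hypothetical round-robin placement, not the real HDFS policy.
    Returns a dict mapping block index -> list of node names.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds number of nodes")
    # Ceiling division: a partial final block still occupies one block.
    num_blocks = -(-file_size_mb // block_size_mb)
    placement = {}
    for block_id in range(num_blocks):
        # Consecutive nodes (wrapping around) hold the replicas of a block.
        placement[block_id] = [
            nodes[(block_id + r) % len(nodes)] for r in range(replication)
        ]
    return placement


def readable_after_failure(placement, failed_node):
    """Return True if every block still has a replica on a surviving node."""
    return all(
        any(node != failed_node for node in replicas)
        for replicas in placement.values()
    )


if __name__ == "__main__":
    cluster = ["n1", "n2", "n3", "n4"]  # hypothetical node names
    layout = place_blocks(300, 128, cluster)
    for block_id, replicas in layout.items():
        print(block_id, replicas)
    print(readable_after_failure(layout, "n2"))
```

Because each block lives on several nodes, losing any single node in this sketch leaves every block readable, which is the redundancy and failover property the description refers to.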

Recommended

Hadoop overview

Siva Pandeti: Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of commodity hardware. It addresses big data challenges by providing reliability, scalability, and fault tolerance. Processing is distributed with MapReduce, and a cluster can scale from a single server to thousands of machines, each offering local computation and storage. It is widely used for applications such as log analysis, data warehousing, and web indexing.

Introduction To Hadoop Ecosystem

InSemble: Introduction to Hadoop Ecosystem was presented to the Lansing Java User Group on 2/17/2015 by Vijay Mandava and Lan Jiang. The demo was built on top of HDP 2.2 and the AWS cloud.

Hadoop ecosystem

Stanley Wang: Hadoop is a distributed processing framework for large datasets. It uses HDFS for storage and MapReduce as its programming model. The Hadoop ecosystem has expanded to include many other tools. YARN was developed to address limitations in the original Hadoop architecture. It provides a common platform for data processing engines such as MapReduce, Spark, and Storm. By decoupling cluster resource management from application logic, YARN improves scalability and utilization and supports multiple workloads, so different applications can share the resources of one Hadoop cluster.

HADOOP TECHNOLOGY ppt

sravya raju: The most well-known technology used for big data is Hadoop. It is a large-scale batch data processing system.

Introduction to Big Data & Hadoop Architecture - Module 1

Rohit Agrawal: Learning objectives: in this module, you will learn what big data is, the limitations of existing solutions to the big data problem, how Hadoop solves that problem, the common Hadoop ecosystem components, the Hadoop architecture, HDFS and the MapReduce framework, and the anatomy of a file write and read.

Hadoop Ecosystem

Sandip Darwade: The document discusses the Hadoop ecosystem, which includes core Apache Hadoop components like HDFS, MapReduce, and YARN, as well as related projects like Pig, Hive, HBase, Mahout, Sqoop, ZooKeeper, Chukwa, and HCatalog. It provides overviews and diagrams explaining the architecture and purpose of each component, positioning them as core functionality that speeds up Hadoop processing and makes Hadoop more usable and accessible.

Migrating structured data between Hadoop and RDBMS

Bouquet

- The document discusses migrating structured data between Hadoop and relational databases using a tool called Bouquet.

- Bouquet allows users to select data from a relational database, which is then sent to Spark via Kafka and stored in HDFS/Tachyon for processing.

- The enriched data in Spark can then be re-injected back into the original database.

Hadoop Ecosystem

Lior Sidi: A comprehensive overview of Hadoop operations and tools: cluster management, coordination, injection, streaming, formats, storage, resources, processing, workflow, analysis, search, and visualization.

Hadoop And Their Ecosystem

sunera pathan: The document provides an overview of Hadoop and its ecosystem. It discusses the history and architecture of Hadoop, describing how it uses distributed storage and processing to handle large datasets across clusters of commodity hardware. The key components of Hadoop include HDFS for storage, MapReduce for processing, and additional tools like Hive, Pig, HBase, Zookeeper, Flume, Sqoop and Oozie that make up its ecosystem. Advantages are its ability to handle unlimited data storage and high speed processing, while disadvantages include lower speeds for small datasets and limitations on data storage size.

Introduction to the Hadoop Ecosystem (FrOSCon Edition)

Uwe Printz: Talk held at FrOSCon 2013 on 24.08.2013 in Sankt Augustin, Germany.

Agenda:

- What is Big Data & Hadoop?

- Core Hadoop

- The Hadoop Ecosystem

- Use Cases

- What's next? Hadoop 2.0!

The Evolution of the Hadoop Ecosystem

Cloudera, Inc.: The document provides an overview of the Apache Hadoop ecosystem. It describes Hadoop as a distributed, scalable storage and computation system based on Google's architecture. The ecosystem includes many related projects that interact, such as YARN, HDFS, Impala, Avro, Crunch, and HBase. These projects innovate independently but work together, with Hadoop serving as a flexible data platform at the core.

What are Hadoop Components? Hadoop Ecosystem and Architecture | Edureka

Edureka!

YouTube link: https://youtu.be/ll_O9JsjwT4

Big Data Hadoop Certification Training: https://www.edureka.co/big-data-hadoop-training-certification

This Edureka PPT on "Hadoop components" will provide you with detailed knowledge about the top Hadoop components and will help you understand the different categories of Hadoop components. This PPT covers the following topics:

- What is Hadoop?

- Core Components of Hadoop

- Hadoop Architecture

- Hadoop Ecosystem

- Hadoop Components in Data Storage

- General Purpose Execution Engines

- Hadoop Components in Database Management

- Hadoop Components in Data Abstraction

- Hadoop Components in Real-time Data Streaming

- Hadoop Components in Graph Processing

- Hadoop Components in Machine Learning

- Hadoop Cluster Management tools

Follow us to never miss an update in the future.

YouTube: https://www.youtube.com/user/edurekaIN

Instagram: https://www.instagram.com/edureka_learning/

Facebook: https://www.facebook.com/edurekaIN/

Twitter: https://twitter.com/edurekain

LinkedIn: https://www.linkedin.com/company/edureka

Castbox: https://castbox.fm/networks/505?country=in

Hadoop and Distributed Computing

Federico Cargnelutti: This document discusses distributed computing and Hadoop. It begins by explaining distributed computing and how it divides programs across several computers. It then introduces Hadoop, an open-source Java framework for distributed processing of large data sets across clusters of computers. Key aspects of Hadoop include its scalable distributed file system (HDFS), MapReduce programming model, and ability to reliably process petabytes of data on thousands of nodes. Common use cases and challenges of using Hadoop are also outlined.

Hadoop

ABHIJEET RAJ: The document summarizes a technical seminar on Hadoop. It discusses Hadoop's history and origin, how it was developed from Google's distributed systems, and how it provides an open-source framework for distributed storage and processing of large datasets. It also summarizes key aspects of Hadoop including HDFS, MapReduce, HBase, Pig, Hive and YARN, and how they address challenges of big data analytics. The seminar provides an overview of Hadoop's architecture and ecosystem and how it can effectively process large datasets measured in petabytes.

Hadoop Primer

Steve Staso: Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of computers. It allows for the reliable, scalable, and distributed processing of petabytes of data. Hadoop consists of Hadoop Distributed File System (HDFS) for storage and Hadoop MapReduce for processing vast amounts of data in parallel on large clusters of commodity hardware in a reliable, fault-tolerant manner. Many large companies use Hadoop for applications such as log analysis, web indexing, and data mining of large datasets.

Apache hadoop technology : Beginners

Shweta Patnaik: This presentation is about Apache Hadoop technology. It may help beginners learn some Hadoop terminology.

Hadoop Technologies

Kannappan Sirchabesan: The document discusses various Hadoop technologies including HDFS, MapReduce, Pig/Hive, HBase, Flume, Oozie, and ZooKeeper. HDFS provides reliable storage across multiple machines by replicating data on different nodes. MapReduce is a framework for processing large datasets in parallel. Pig and Hive provide high-level languages for analyzing data stored in Hadoop. Flume collects log data as it is generated. Oozie manages Hadoop jobs. ZooKeeper allows distributed coordination. HBase provides a fault-tolerant way to store large amounts of sparse data.

Hadoop hive presentation

Arvind Kumar: Hadoop is an open-source framework for distributed storage and processing of large datasets across clusters of commodity hardware. It addresses problems with traditional systems like data growth, network/server failures, and high costs by allowing data to be stored in a distributed manner and processed in parallel. Hadoop has two main components: the Hadoop Distributed File System (HDFS), which provides high-throughput access to application data across servers, and the MapReduce programming model, which processes large amounts of data in parallel by splitting work into map and reduce tasks.

Syncsort et le retour d'expérience ComScore

Modern Data Stack France: This document summarizes Syncsort's high performance data integration solutions for Hadoop contexts. Syncsort has over 40 years of experience innovating performance solutions. Their DMExpress product provides high-speed connectivity to Hadoop and accelerates ETL workflows. It uses partitioning and parallelization to load data into HDFS 6x faster than native methods. DMExpress also enhances usability with a graphical interface and accelerates MapReduce jobs by replacing sort functions. Customers report TCO reductions of 50-75% and ROI within 12 months by using DMExpress to optimize their Hadoop deployments.

Real time hadoop + mapreduce intro

Geoff Hendrey: Augmented my real-time Hadoop talk to include a programming intro to MapReduce for Google Developer Groups.

Apache Hadoop at 10

Cloudera, Inc.: This document summarizes the history and evolution of Apache Hadoop over the past 10 years. It discusses how Hadoop originated from Doug Cutting's work on Nutch in 2002. It grew to include HDFS for storage and MapReduce for processing. Yahoo was an early large-scale user. The community has expanded Hadoop to include over 25 components like Hive, HBase, Spark and more. The open source model and ability to adapt have helped Hadoop succeed and it will continue to evolve to handle new data sources and cloud deployments in the next 10 years.

Hadoop-Quick introduction

Sandeep Singh

- Data is a precious resource that can last longer than the systems themselves (Tim Berners-Lee)

- Hadoop is an open-source framework for distributed storage and processing of large datasets across clusters of commodity hardware. It provides reliability, scalability and flexibility.

- Hadoop consists of HDFS for storage and MapReduce for processing. The main nodes include NameNode, DataNodes, JobTracker and TaskTrackers. Tools like Hive, Pig, HBase extend its capabilities for SQL-like queries, data flows and NoSQL access.

Facebooks Petabyte Scale Data Warehouse using Hive and Hadoop

royans: Facebook's petabyte-scale data warehouse using Hive and Hadoop.

More info here:

http://www.royans.net/arch/hive-facebook/

Column Stores and Google BigQuery

Csaba Toth: From Hadoop through Hive, Spark, and YARN, searching for the holy grail of a low-latency, SQL-like query language for big data. I talk about OLTP and OLAP, row stores and column stores, and finally arrive at BigQuery. Demo on the web console.

Introduction to the Hadoop Ecosystem (IT-Stammtisch Darmstadt Edition)

Uwe Printz: Talk held at the IT-Stammtisch Darmstadt on 08.11.2013.

Agenda:

- What is Big Data & Hadoop?

- Core Hadoop

- The Hadoop Ecosystem

- Use Cases

- What's next? Hadoop 2.0!

Nextag talk

Joydeep Sen Sarma: Hive provides an SQL-like interface to query data stored in Hadoop's HDFS distributed file system and processed using MapReduce. It allows users without MapReduce programming experience to write queries that Hive then compiles into a series of MapReduce jobs. The document discusses Hive's components, data model, query planning and optimization techniques, and performance compared to other frameworks like Pig.

Hadoop Ecosystem Overview

Gerrit van Vuuren: This document provides an overview of big data ecosystems, including common log formats, compression techniques, data collection methods, distributed storage options like HDFS and S3, distributed processing frameworks like Hadoop MapReduce and Storm, workflow managers, real-time storage options, and other related topics. It describes technologies like Kafka, HBase, Cassandra, Pig, Hive, Oozie, and Azkaban; compares advantages and disadvantages of HDFS, S3, HBase and other storage systems; and provides references for further information.

Big data and Hadoop

Rahul Agarwal: This document provides an overview of big data and Hadoop. It discusses why Hadoop is useful for extremely large datasets that are difficult to manage in relational databases. It then summarizes what Hadoop is, including its core components like HDFS, MapReduce, HBase, Pig, Hive, Chukwa, and ZooKeeper. The document also outlines Hadoop's design principles and provides examples of how some of its components like MapReduce and Hive work.

Managing Big data using Hadoop Map Reduce in Telecom Domain

AM Publications: MapReduce is a programming model for analysing and processing massive data sets. Apache Hadoop is an efficient framework and the most popular implementation of the MapReduce model. Hadoop's success has motivated research interest and has led to various modifications and extensions to the framework. This paper explores the challenges faced in areas such as data storage, analytics, online processing, and privacy/security when handling big data. It also discusses possible solutions for the telecom domain using a Hadoop MapReduce implementation.

Harnessing Big Data in Real-Time

DataWorks Summit: This document discusses harnessing big data in real-time. It outlines how business requirements are increasingly demanding real-time insights from data. Traditional systems struggle with high latency, complexity, and costs when dealing with big data. The document proposes using SAP HANA and Hadoop together to enable instant analytics on vast amounts of data. It provides examples of using this approach for cancer genome analysis and other use cases to generate personalized and timely results.

Viewers also liked (20)

Hw09 Hadoop Based Data Mining Platform For The Telecom Industry

Cloudera, Inc.: The document summarizes a parallel data mining platform called BC-PDM, developed by China Mobile Communication Corporation to address the challenges of analyzing its large-scale telecom data. Key points:

- BC-PDM is based on Hadoop and designed to perform ETL and data mining algorithms in parallel to enable scalable analysis of datasets exceeding hundreds of terabytes.

- The platform implements various ETL operations and data mining algorithms using MapReduce. Initial experiments showed a 10-50x speedup over traditional solutions.

- Future work includes improving data security, migrating online systems to the platform, and enhancing the user interface.

Hadoop Boosts Profits in Media and Telecom Industry

DataWorks Summit

1) The document discusses 21 use cases for using Hadoop in the telecommunications industry across network infrastructure, service and security, sales and marketing, and new business functions.

2) It provides details on specific use cases such as using Hadoop for network capacity planning, customer experience analytics based on call detail records, and improving contact center and field service productivity.

3) The document also outlines the typical journey an organization takes to become data-driven and the roles needed in a center of excellence at different stages of the journey.

Dataiku big data paris - the rise of the hadoop ecosystem

Dataiku: This document discusses the rise of the Hadoop ecosystem. It outlines how the ecosystem has expanded from the original Hadoop components of HDFS for storage and MapReduce for distributed computation. New frameworks have emerged that allow for real-time queries, updates, and machine learning on big data. These include Spark, Storm, Drill, and streaming engines. The ecosystem is now a complex network of interoperable tools for storage, computation, analytics and machine learning on large datasets.

The Hadoop Ecosystem for Developers

Zohar Elkayam: This document provides an overview of the Hadoop ecosystem. It begins by introducing the big data challenges of data volume, variety, and velocity. It then introduces Hadoop as an open-source framework for distributed storage and processing of large datasets across clusters of computers. The key components of Hadoop are HDFS (Hadoop Distributed File System), which provides distributed storage and high-throughput access to application data, and MapReduce, a programming model for distributed computing on large datasets. HDFS stores data reliably by replicating it across nodes and is optimized for throughput on large files and datasets.

Hadoop And Their Ecosystem ppt

sunera pathan: The document provides an overview of Hadoop and its ecosystem. It discusses the history and architecture of Hadoop, describing how it uses distributed storage and processing to handle large datasets across clusters of commodity hardware. The key components of Hadoop include HDFS for storage, MapReduce for processing, and an ecosystem of related projects like Hive, HBase, Pig and Zookeeper that provide additional functions. Advantages are its ability to handle unlimited data storage and high speed processing, while disadvantages include lower speeds for small datasets and limitations on data storage size.

Hadoop Ecosystem at a Glance

Neev Technologies: Hadoop, as we know, is a Java-based, massively scalable distributed framework for processing large data (several petabytes) across a cluster of thousands of commodity computers.

The Hadoop ecosystem has grown over the last few years and there is a lot of jargon in terms of tools as well as frameworks.

Many organizations are investing & innovating heavily in Hadoop to make it better and easier. The mind map on the next slide should be useful to get a high level picture of the ecosystem.

Hadoop ecosystem

Ran Silberman: Hadoop became the most common system to store big data.

With Hadoop, many supporting systems emerged to complete the aspects that are missing in Hadoop itself.

Together they form a big ecosystem.

This presentation covers some of those systems.

Since one presentation cannot cover them all, I tried to focus on the most popular ones and on the most interesting ones.

Hadoop Ecosystem at Twitter - Kevin Weil - Hadoop World 2010

Cloudera, Inc.

Hadoop ecosystem

tfmailru: The document discusses the Hadoop ecosystem. It describes several components including HDFS, MapReduce, Hive, Pig, HBase, Flume, Whirr, Oozie, Mahout and CDH. It provides examples of how to use each component and discusses their features and use cases. The presentation was given by Kai Voigt of Cloudera to provide an overview of the Hadoop ecosystem.

Map reduce - simplified data processing on large clusters

Cleverence Kombe: The document describes MapReduce, a programming model and software framework for processing large datasets in a distributed computing environment. It discusses how MapReduce allows users to specify map and reduce functions to parallelize tasks across large clusters of machines. It also covers how MapReduce handles parallelization, fault tolerance, and load balancing transparently through an easy-to-use programming interface.

Hadoop ecosystem framework n hadoop in live environment

Delhi/NCR HUG: The document provides an overview of the Hadoop ecosystem and how several large companies such as Google, Yahoo, Facebook, and others use Hadoop in production. It discusses the key components of Hadoop including HDFS, MapReduce, HBase, Pig, Hive, Zookeeper and others. It also summarizes some of the large-scale usage of Hadoop at these companies for applications such as web indexing, analytics, search, recommendations, and processing massive amounts of data.

Hadoop Ecosystem

Patrick Nicolas: There is a lot more to Hadoop than MapReduce. An increasing number of engineers and researchers involved in processing and analyzing large amounts of data regard Hadoop as an ever-expanding ecosystem of open-source libraries, including NoSQL, scripting and analytics tools.

Introduction to the Hadoop Ecosystem with Hadoop 2.0 aka YARN (Java Serbia Ed...

Uwe Printz: Talk held at the Java User Group on 05.09.2013 in Novi Sad, Serbia

Agenda:

- What is Big Data & Hadoop?

- Core Hadoop

- The Hadoop Ecosystem

- Use Cases

- What's next? Hadoop 2.0!

Hadoop Ecosystem Architecture Overview

Senthil Kumar: Overview and diagrams of the Hadoop ecosystem that help in understanding the list of Hadoop subprojects in a diagrammatic way.

Introduction to Map-Reduce

Brendan Tierney: This document provides a high-level overview of MapReduce and Hadoop. It begins with an introduction to MapReduce, describing it as a distributed computing framework that decomposes work into parallelized map and reduce tasks. Key concepts like mappers, reducers, and job tracking are defined. The structure of a MapReduce job is then outlined, showing how input is divided and processed by mappers, then shuffled and sorted before being combined by reducers. Example map and reduce functions for a word counting problem are presented to demonstrate how a full MapReduce job works.

Apache Flume - DataDayTexas

Arvind Prabhakar: Arvind Prabhakar presented on Apache Flume. He explained that Flume is an open-source system for aggregating large amounts of log and streaming data from many sources and efficiently transporting it to data stores and processing systems. It is designed to handle high volumes of continuously arriving data from distributed servers or devices. Flume uses a pipeline-based architecture that allows for reliable, scalable, and customizable data ingestion.

Hadoop Ecosystem | Big Data Analytics Tools | Hadoop Tutorial | Edureka

Edureka!: This Edureka Hadoop Ecosystem Tutorial (Hadoop Ecosystem blog: https://goo.gl/EbuBGM) will help you understand the set of tools and services which together form the Hadoop Ecosystem. Below are the topics covered in this Hadoop Ecosystem Tutorial:

Hadoop Ecosystem:

1. HDFS - Hadoop Distributed File System

2. YARN - Yet Another Resource Negotiator

3. MapReduce - Data processing using programming

4. Spark - In-memory Data Processing

5. Pig, Hive - Data Processing Services using Query

6. HBase - NoSQL Database

7. Mahout, Spark MLlib - Machine Learning

8. Apache Drill - SQL on Hadoop

9. Zookeeper - Managing Cluster

10. Oozie - Job Scheduling

11. Flume, Sqoop - Data Ingesting Services

12. Solr & Lucene - Searching & Indexing

13. Ambari - Provision, Monitor and Maintain Cluster

Similar to Big Data and Hadoop Ecosystem (20)

Big Data and Hadoop - History, Technical Deep Dive, and Industry Trends

Esther Kundin: An overview of the history of Big Data, followed by a deep dive into the Hadoop ecosystem. Detailed explanation of how HDFS, MapReduce, and HBase work, followed by a discussion of how to tune HBase performance. Finally, a look at industry trends, including challenges faced and being solved by Bloomberg in using Hadoop for financial data.

4. hadoop גיא לבנברג

Taldor Group: This document discusses big data and Hadoop. It provides an overview of Hadoop, including what it is, how it works, and its core components like HDFS and MapReduce. It also discusses what Hadoop is good for, such as processing large datasets, and what it is not as good for, like low-latency queries or transactional systems. Finally, it covers some best practices for implementing Hadoop, such as infrastructure design and performance considerations.

Scaling Storage and Computation with Hadoop

yaevents: Hadoop provides distributed storage and a framework for the analysis and transformation of very large data sets using the MapReduce paradigm. Hadoop partitions data and computation across thousands of hosts and executes application computations in parallel, close to their data. A Hadoop cluster scales computation capacity, storage capacity and I/O bandwidth by simply adding commodity servers. Hadoop is an Apache Software Foundation project; it unites hundreds of developers, and hundreds of organizations worldwide report using Hadoop. This presentation gives an overview of the Hadoop family of projects with a focus on its distributed storage solutions.

Hadoop Distributed File System

Vaibhav Jain: HDFS allows storing large amounts of data across multiple machines by splitting files into blocks and replicating those blocks for reliability. It addresses challenges of big data like volume, velocity, and variety by providing a distributed storage solution that scales horizontally. Traditional systems are limited by network bandwidth, the storage capacity of individual machines, and single points of failure. HDFS introduces a scalable architecture with a master NameNode and slave DataNodes that store data blocks, addressing these issues through data distribution and fault tolerance.

Bigdata

Ayush Agrawal: Big data analytics involves collecting and analyzing large, complex datasets. There are three key aspects of big data: volume, referring to the large size of datasets; velocity, meaning the speed of data input and processing; and variety, the different data types including text, audio, video and more. Hadoop is an open-source framework that allows processing and querying vast amounts of data across clusters of computers. It uses HDFS for distributed storage and MapReduce as a processing paradigm to break work into parallelized chunks. R can be used with Hadoop for advanced analytics and visualization of large datasets stored in Hadoop.

Introduction to Hadoop and Big Data

Joe Alex: This document provides an introduction to Hadoop and big data. It discusses the new kinds of large, diverse data being generated and the need for platforms like Hadoop to process and analyze this data. It describes the core components of Hadoop, including HDFS for distributed storage and MapReduce for distributed processing. It also discusses some of the common applications of Hadoop and other projects in the Hadoop ecosystem like Hive, Pig, and HBase that build on the core Hadoop framework.

Big data Hadoop

Ayyappan Paramesh: The document provides an overview of big data and Hadoop fundamentals. It discusses what big data is, the characteristics of big data, and how it differs from traditional data processing approaches. It then describes the key components of Hadoop including HDFS for distributed storage, MapReduce for distributed processing, and YARN for resource management. HDFS architecture and features are explained in more detail. MapReduce tasks, stages, and an example word count job are also covered. The document concludes with a discussion of Hive, including its use as a data warehouse infrastructure on Hadoop and its query language HiveQL.

Big Data and Hadoop Training in Chandigarh

Big Boxx Animation Academy: Start your career as a Big Data expert in top MNCs. Join Big Data and Hadoop Training in Chandigarh at BigBoxx Academy today and get 100% placement assistance.

Big data and hadoop overvew

Kunal Khanna: The document provides an overview of big data and Hadoop. It discusses what big data is, current trends and challenges, and approaches to solving big data problems, including distributed computing, NoSQL, and Hadoop. It then introduces HDFS and the MapReduce framework in Hadoop for distributed storage and processing of large datasets.

Hadoop ppt1

chariorienit: We provide Hadoop training in Hyderabad and Bangalore, including corporate training, with faculty who have 12+ years of experience.

Real-time industry experts from MNCs

Resume Preparation by expert Professionals

Lab exercises

Interview Preparation

Experts advice

Tcloud Computing Hadoop Family and Ecosystem Service 2013.Q3

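tcloudcomputing-tw: After several years of exposure to big data and Hadoop, most people know what big data is and what problems it brings. This presentation focuses on the evolution and applications of big data and the Hadoop ecosystem, as well as the architecture of Hadoop 2.0. The Hadoop ecosystem has also changed considerably, and those changes are covered in this presentation as well.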

Bigdata workshop february 2015

clairvoyantllc: This document provides an overview of big data and Hadoop. It discusses what big data is, why it has become important recently, and common use cases. It then describes how Hadoop addresses challenges of processing large datasets by distributing data and computation across clusters. The core Hadoop components of HDFS for storage and MapReduce for processing are explained. Example MapReduce jobs like wordcount are shown. Finally, higher-level tools like Hive and Pig that provide SQL-like interfaces are introduced.

2. hadoop fundamentals

Lokesh Ramaswamy: Hadoop is an open-source framework for distributed storage and processing of large datasets across clusters of commodity hardware. Its design is modeled on Google's MapReduce programming model and the Google File System. The Hadoop architecture includes a distributed file system (HDFS) that stores data across clusters and a job scheduling and resource management framework (YARN) that allows distributed processing of large datasets in parallel. Key components include the NameNode, DataNodes, ResourceManager and NodeManagers. Hadoop provides reliability through replication of data blocks and automatic recovery from failures.

Hadoop

avnishagr: This document discusses Hadoop, an open-source software framework for distributed storage and processing of large datasets across clusters of computers. It describes how Hadoop uses HDFS for scalable, fault-tolerant storage and MapReduce for parallel processing. The core components of Hadoop, HDFS and MapReduce, allow for distributed processing of large datasets across commodity hardware, providing scalability, cost-effectiveness, and efficient distributed computing.

Big Data Architecture Workshop - Vahid Amiri

datastack: Big Data Architecture Workshop

These slides are about big data tools, technologies and layers that can be used in enterprise solutions.

TopHPC Conference

2019

Hadoop.pptx

arslanhaneef: The document provides an overview of Hadoop, including:

- A brief history of Hadoop and its origins from Google and Apache projects

- An explanation of Hadoop's architecture including HDFS, MapReduce, JobTracker, TaskTracker, and DataNodes

- Examples of how large companies like Yahoo, Facebook, and Amazon use Hadoop for applications like log processing, searches, and advertisement targeting

Hadoop.pptx

sonukumar379092: The document provides an overview of Hadoop, including:

- A brief history of Hadoop and its origins at Google and Yahoo

- An explanation of Hadoop's architecture including HDFS, MapReduce, JobTracker, TaskTracker, and DataNodes

- Examples of how large companies like Facebook and Amazon use Hadoop to process massive amounts of data

List of Engineering Colleges in Uttarakhand

Roorkee College of Engineering, Roorkee: If you are searching for the best engineering college in India, you can trust the services and facilities of RCE (Roorkee College of Engineering). They provide good education facilities, highly educated and experienced faculty, well-furnished hostels for both boys and girls, a computerized library, placement opportunities and more at an affordable fee.

Introduction to BIg Data and Hadoop

Amir Shaikh: Hadoop is an open-source framework for distributed storage and processing of large datasets across clusters of commodity hardware. It addresses limitations of traditional RDBMSs for big data by allowing scaling to large clusters of commodity servers, high fault tolerance, and distributed processing. The core components of Hadoop are HDFS for distributed storage and MapReduce for distributed processing. Hadoop has an ecosystem of additional tools like Pig, Hive, HBase and more. Major companies use Hadoop to process and gain insights from massive amounts of structured and unstructured data.

Big Data and Hadoop Ecosystem

- 1. Big Data and Hadoop. Presenter: Rajkumar Singh (http://rajkrrsingh.blogspot.com/, http://in.linkedin.com/in/rajkrrsingh)

- 2. Big Data and Hadoop: Introduction. The three Vs of big data: Volume, Variety (structured, semi-structured, unstructured) and Velocity. Example sources named on the slide: Facebook, Google Plus, Twitter, LinkedIn, stock exchanges, healthcare, telecom, mobile devices, GPS, security infrastructure.

- 3. The Problem e.g. Stock Market

- 4. The Solution (Hadoop Evolution) Traditional Approach

- 5. Data volumes grow from GB to TB to PB to ZB, so processing them with a traditional RDBMS is no longer feasible.

- 6. Challenges in Big Data
  - Storage: petabytes (PB)
  - Processing: in a timely manner
  - Variety of data: structured, semi-structured, unstructured
  - Cost

- 7. Hadoop evolved to overcome Big Data challenges
  - Cost effective: commodity hardware
  - Big cluster (1000 nodes): provides storage and processing
  - Parallel processing: MapReduce
  - Big storage: usable capacity = storage per node × number of nodes / replication factor (RF)
  - Failover mechanism: automatic failover
  - Data distribution
  - MapReduce framework
  - Moving code to data
  - Heterogeneous hardware (IBM, HP, AIX, Oracle machines of any memory and CPU configuration)
  - Scalable
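The "big storage" rule of thumb from slide 7 can be checked with a few lines of Python. This is only a sketch: the function name and the example figures (12 TB of disk per node) are assumptions for illustration, and real clusters also reserve space for non-HDFS use.

```python
def usable_capacity_tb(storage_per_node_tb: float, nodes: int,
                       replication_factor: int = 3) -> float:
    """Usable HDFS capacity: raw cluster storage divided by the replication factor."""
    if nodes < 0 or replication_factor < 1:
        raise ValueError("nodes must be >= 0 and replication_factor >= 1")
    raw_capacity_tb = storage_per_node_tb * nodes
    return raw_capacity_tb / replication_factor


if __name__ == "__main__":
    # Hypothetical cluster: 1000 nodes with 12 TB each, RF = 3.
    print(usable_capacity_tb(12, 1000, 3))
```

Because every block is stored RF times, tripling replication cuts the usable space to a third of the raw disk capacity.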

- 9. What is Hadoop: a Java framework to process enormous amounts of data. Hadoop Core consists of HDFS (storage) and a programming construct (MapReduce).

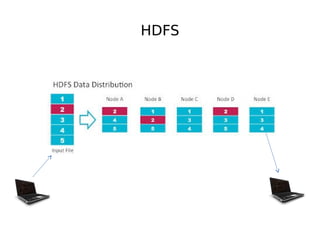
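To make the MapReduce programming construct from slide 9 concrete, here is a minimal single-process sketch of a word count in plain Python. It is not Hadoop's Java API; the function names `map_phase`, `shuffle` and `reduce_phase` are made up for illustration and only mimic the three stages a real job runs in parallel across the cluster.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(word: str, counts: List[int]) -> Tuple[str, int]:
    """Reduce: sum the counts collected for one word."""
    return word, sum(counts)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle and reduce sequentially over the input lines."""
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(word, counts) for word, counts in shuffle(pairs).items())


if __name__ == "__main__":
    print(word_count(["big data", "big cluster"]))
```

In Hadoop the map calls run on the nodes that hold the input blocks ("moving code to data"), and the framework performs the shuffle over the network before the reducers run.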

- 10. HDFS

- 12. Hadoop Ecosystem

- 13. Hadoop Sub-Projects
  - Hadoop Common: The common utilities that support the other Hadoop subprojects.
  - Hadoop Distributed File System (HDFS™): A distributed file system that provides high-throughput access to application data.
  - Hadoop MapReduce: A software framework for distributed processing of large data sets on compute clusters.
  - Other Hadoop-related projects at Apache include:
    - Avro™: A data serialization system.
    - Cassandra™: A scalable multi-master database with no single points of failure.
    - Chukwa™: A data collection system for managing large distributed systems.
    - HBase™: A scalable, distributed database that supports structured data storage for large tables.
    - Hive™: A data warehouse infrastructure that provides data summarization and ad hoc querying.
    - Mahout™: A scalable machine learning and data mining library.
    - Pig™: A high-level data-flow language and execution framework for parallel computation.
    - ZooKeeper™: A high-performance coordination service for distributed applications.

- 14. HDFS (based on GFS). Diagram: a 1 TB file stored by the DFS across four 250 GB units.

- 15. HDFS: Use Cases
  - Well suited for:
    - Very large files
    - Streaming data access: read data in large volumes, write once and read frequently
  - Not suited for (or not required):
    - Expensive hardware (HDFS is designed for commodity hardware)
    - Low-latency access
    - Lots of small files
    - Parallel writes / arbitrary reads

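- 16. HDFS Building Blocks: the default block size is 64 MB (older releases) or 128 MB. With 128 MB blocks, a 1 GB file occupies 1024 MB / 128 MB = 8 blocks. A file smaller than the block size, for example 100 MB < 128 MB, is stored as a single HDFS block that takes up only 100 MB, so no storage is wasted.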

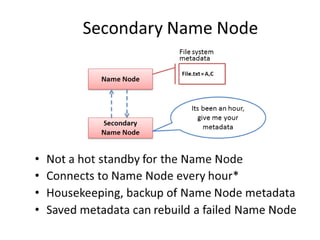
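The block arithmetic on slide 16 can be reproduced with a short Python sketch. The helper name `split_into_blocks` is hypothetical, and sizes are whole megabytes to keep the example simple.

```python
from typing import List


def split_into_blocks(file_size_mb: int, block_size_mb: int = 128) -> List[int]:
    """Return the sizes (in MB) of the HDFS blocks a file would occupy.

    All blocks are full except possibly the last one, which holds only
    the remaining data instead of being padded to the block size.
    """
    if file_size_mb < 0 or block_size_mb <= 0:
        raise ValueError("file size must be >= 0 and block size must be > 0")
    full_blocks, remainder = divmod(file_size_mb, block_size_mb)
    blocks = [block_size_mb] * full_blocks
    if remainder:
        blocks.append(remainder)
    return blocks


if __name__ == "__main__":
    print(len(split_into_blocks(1024)))  # 1 GB file with 128 MB blocks
    print(split_into_blocks(100))        # file smaller than one block
```

The second call illustrates the "optimize for storage" point: the 100 MB file yields one block of 100 MB, not 128 MB.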

- 17. HDFS Daemon Services: NameNode, Secondary NameNode and DataNode. Like GFS, HDFS uses a master/slave architecture.

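- 18. HDFS Write. Diagram: with a 128 MB block size and replication factor (RF) = 3, each block is written to three of the DataNodes D1 to D4. File 1 is stored on D1, D2, D4 and File 2 on D1, D2, D3.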
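The placement shown on slide 18 can be mimicked with a small Python sketch. It is deliberately simplified and makes its own assumptions: replicas are picked at random among the DataNodes, whereas real HDFS placement is rack-aware, and the names `place_replicas` and `is_readable` are invented for this example.

```python
import random
from typing import Iterable, List, Optional


def place_replicas(datanodes: List[str], replication_factor: int = 3,
                   rng: Optional[random.Random] = None) -> List[str]:
    """Pick `replication_factor` distinct DataNodes for one block (random, not rack-aware)."""
    if replication_factor > len(datanodes):
        raise ValueError("not enough DataNodes for the requested replication factor")
    rng = rng or random.Random()
    return sorted(rng.sample(datanodes, replication_factor))


def is_readable(replicas: Iterable[str], failed_nodes: Iterable[str]) -> bool:
    """A block stays readable as long as at least one replica is on a live node."""
    failed = set(failed_nodes)
    return any(node not in failed for node in replicas)


if __name__ == "__main__":
    # Placement taken from the slide: File 1 on D1, D2, D4; File 2 on D1, D2, D3.
    block_map = {"File 1": ["D1", "D2", "D4"], "File 2": ["D1", "D2", "D3"]}
    for name, replicas in block_map.items():
        print(name, is_readable(replicas, failed_nodes=["D1", "D2"]))
    print(place_replicas(["D1", "D2", "D3", "D4"]))
```

With RF = 3, both files remain readable even if D1 and D2 fail at the same time, which is the redundancy the slide illustrates.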

- 22. HDFS File System Commands

- 25. HDFS Federation

- 27. Copying Data from one Cluster to another Cluster: parallel copying from the UAT cluster to the Prod cluster using distcp: `hadoop distcp hdfs://uat:54311/user/rajkrrsingh/input hdfs://prod:54311/user/rajkrrsingh/input`