
Big Data/Hadoop/HANA Basics

- 1. How Big Data Technologies Provide Solutions for Big Data Problems. Presenters: John Choate, PMMS SIG Chair; David Burdett, Strategic Technology Advisor, SAP; Henrik Wagner, Global SAP Lead-Alliances, EMC Corp.

- 2. The Challenge of Big Data. (Diagram of the stakeholders involved with the data: decision-maker, customer, line-of-business (LOB) user, IT, developer and analyst.)

- 3. The 5-Part Series
  - Webinar 1: Why Big Data matters, how it can fit into your business and technology roadmap, and how it can enable your business
  - Webinar 2: How Big Data technologies provide solutions for Big Data problems
  - Webinar 3: Using Hadoop in an SAP landscape with HANA
  - Webinar 4: Leveraging Hadoop with SAP HANA smart data access
  - Webinar 5: Using SAP Data Services with Hadoop and SAP HANA
  - Webinar registration: (1) go to www.saphana.com; (2) search for "ASUG Big Data Webinar"; (3) use the registration links in the blog "Big Data, Hadoop and Hana – How they Integrate and How they Enable your Business!"
  - Resources: for information on SAP and Big Data, go to www.sapbigdata.com

- 4. Areas to Cover: Setting the Stage, Market, Technology, Use Cases, Summary

- 5. How did we get here?
  - Facebook: 1 billion users; 600 million mobile users; more than 42 million pages and 9 million apps
  - YouTube: 4 billion views per day
  - Google+: 400 million registered users
  - Skype: 250 million monthly connected users
  - More people have mobile phones than have electricity or safe drinking water
  - Timeline (circa 1980 to 2015): personal computer and client/server database; B2B/B2C and the WWW; analytics; predictive analytics; semantic analytics; social; mobile; real time; Big Data

- 6. How big is Big Data? Today we measure available data in zettabytes (1 zettabyte = 1 trillion gigabytes). In 2011, the amount of data surpassed 1.8 zettabytes, the equivalent of eight 32 GB iPads for every person alive in the world. 90% of the world's data today has been created in the last two years alone!
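The iPad comparison can be sanity-checked with a few lines of arithmetic. This is a minimal sketch that assumes decimal SI units (1 ZB = 10^21 bytes = 10^12 GB) and a 2011 world population of roughly 7.0 billion; the population figure is an assumption, not something the slide states.

```python
# Sanity check of the "eight 32 GB iPads per person" comparison.
# Assumptions (not on the slide): decimal SI units and ~7.0 billion people in 2011.
GB_PER_ZETTABYTE = 10**12  # 1 ZB = 10^21 bytes = 10^12 GB


def ipads_per_person(total_zb: float, population: float, ipad_gb: float = 32.0) -> float:
    """Return how many iPads of `ipad_gb` capacity each person would need to hold `total_zb`."""
    total_gb = total_zb * GB_PER_ZETTABYTE
    gb_per_person = total_gb / population
    return gb_per_person / ipad_gb


ratio = ipads_per_person(1.8, 7.0e9)
print(f"{ratio:.1f} iPads per person")  # roughly 8
```

About 257 GB per person divided by 32 GB per iPad gives just over eight, so the slide's comparison holds under these assumptions.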

- 7. Big Data Simplified
  - Definition (Gartner): "Big data" is high-volume, high-velocity and high-variety information assets that demand cost-effective, innovative forms of information processing for enhanced insight and decision making.
  - Three key parts:
    1. The 3 V's: volume, velocity, variety
    2. Cost-effective, innovative forms of information processing
    3. Enhanced insight for "real-time" decision making

- 8. The 7 Key Drivers Behind the Big Data Movement (source: http://hortonworks.com/blog/7-key-drivers-for-the-big-data-market/)
  - Business: the opportunity to enable innovative new business models; the potential for new insights that drive competitive advantage
  - Technical: data collected and stored continues to grow exponentially; data is increasingly everywhere and in many formats; traditional solutions are failing under new requirements
  - Financial: the cost of data systems, as a percentage of IT spend, continues to grow; the cost advantages of commodity hardware and open source software

- 9. Today's Key Challenges in Big Data
  - Data analytics:
    1. Data capture and retention: what data should be kept, and why
    2. Behavioral analytics: understanding and leveraging customer behavior
    3. Predictive analytics: using new data types (sentiment, clickstream, video, image and text) to predict future events
  - Information strategy:
    1. Which investments will deliver the most business value and ROI?
    2. Governance: new expectations for data quality and management
    3. Talent: how will you assemble the right teams and align skills?
  - Enterprise Information Management (EIM):
    1. User expectations: making "Big Data" accessible to the end user in "real time"
    2. Costs: how can you provide access to Big Data rapidly and cost-effectively to support better decision-making?
    3. Tools: have you identified the processes, tools and technologies you need to support Big Data in your enterprise?

- 10. Presentation Content: Setting the Stage, Market, Technology, Use Cases, Summary

- 11. The Rapidly Growing Market
  - "By 2015, 4.4 million IT jobs globally will be created to support big data, generating 1.9 million IT jobs in the United States." (Peter Sondergaard, Senior Vice President at Gartner and global head of Research; http://www.gartner.com/newsroom/id/2207915)
  - "The Global big data market is estimated to be $14.87 billion in 2013 and expected to grow to $46.34 billion … an estimated Compounded Annual Growth Rate (CAGR) of 25.52% from 2013 to 2018" (http://www.marketsandmarkets.com/PressReleases/big-data.asp)
  - "IDC expects the Big Data technology and services market to grow at a 31.7% compound annual growth rate through 2016" (http://www.idc.com/getdoc.jsp?containerId=238746)

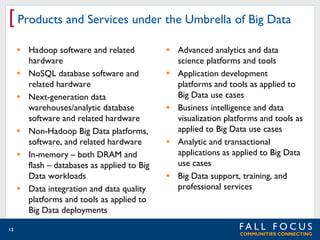
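The MarketsandMarkets figures are internally consistent, which a short CAGR calculation confirms. This sketch uses the standard compound-growth formula, CAGR = (end / start)^(1 / years) - 1, with the 2013 and 2018 values quoted above (5 years apart).

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted on the slide: $14.87B (2013) -> $46.34B (2018), i.e. 5 years of growth.
rate = cagr(14.87, 46.34, 2018 - 2013)
print(f"CAGR 2013-2018: {rate:.2%}")
```

The result is about 25.5% per year, matching the quoted 25.52%. The IDC figure cannot be checked the same way because the slide gives no base value.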

- 12. Products and Services under the Umbrella of Big Data
  - Hadoop software and related hardware
  - NoSQL database software and related hardware
  - Next-generation data warehouses/analytic database software and related hardware
  - Non-Hadoop Big Data platforms, software, and related hardware
  - In-memory (both DRAM and flash) databases as applied to Big Data workloads
  - Data integration and data quality platforms and tools as applied to Big Data deployments
  - Advanced analytics and data science platforms and tools
  - Application development platforms and tools as applied to Big Data use cases
  - Business intelligence and data visualization platforms and tools as applied to Big Data use cases
  - Analytic and transactional applications as applied to Big Data use cases
  - Big Data support, training, and professional services

- 13. Who Is Spending $$$ on Big Data?
  - Companies: median spend = $10M; 25% spend less than $2.5M; 15% spend more than $100M; 7% spend more than $500M
  - Industries spending the most: banking, high tech, telecommunications, travel. Industries spending the least: energy/resources, life sciences, retail
  - Source: 2012 Tata Consultancy Services (TCS) Global Study

- 14. How the Market Is Growing. The fastest-growing segment is applications (49% CAGR, 2012-17). Sources: Wikibon, http://wikibon.org/wiki/v/Big_Data_Vendor_Revenue_and_Market_Forecast_2012-2017 and http://wikibon.org/vault/Special:FilePath/2012BigDataSegmentGrowth20112017.png

- 15. Big Data Vendor Revenue. Big Data vendors are a mix of established players and pure-plays. Source data: http://wikibon.org/wiki/v/Big_Data_Vendor_Revenue_and_Market_Forecast_2012-2017

- 16. 10 Big Data Trends Changing the Face of Business
  1. Machine Data and the Internet of Things Take Center Stage
  2. Compound Applications That Combine Data Sets to Create Value
  3. Explosion of Innovation Built on Open Source Big Data Tools
  4. Companies Taking a Proactive Approach to Identifying Where Big Data Can Have an Impact
  5. There Are More Actual Production Big Data Projects
  6. Large Companies Are Increasingly Turning to Big Data
  7. Most Companies Spend Very Little, a Few Spend a Lot
  8. Investments Are Geared Toward Generating and Maintaining Revenue
  9. The Greatest ROI of Big Data Is Coming from the Logistics and Finance Functions
  10. The Biggest Challenges Are as Much Cultural as Technological

- 17. Presentation Content: Setting the Stage, Market, Technology, Use Cases, Summary

- 18. Aspect of Time: The Value of Data (EMC Corporation)
  - "Hot" data may be better suited for in-memory SAP HANA residency. This data is largely derived from structured SAP sources.
  - "Warm" and "cold" data may be better suited for Hadoop residency. This data is largely unstructured and may form very large data sets (multiple PB).
  - The business value reflected by use cases may come from queries and data structures in three different ways: enabled by SAP HANA, enabled by Hadoop, or enabled by HANA and Hadoop simultaneously.
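The hot/warm/cold placement rule can be expressed as a small classification function. This is a minimal sketch, not an SAP or EMC tool: the age thresholds (`HOT_MAX_AGE_DAYS`, `WARM_MAX_AGE_DAYS`) and the sample data sets are hypothetical, because the slide defines the temperatures only qualitatively.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical thresholds: the slide defines hot/warm/cold only qualitatively.
HOT_MAX_AGE_DAYS = 90
WARM_MAX_AGE_DAYS = 730


@dataclass
class DataSet:
    name: str
    last_accessed: date


def temperature(ds: DataSet, today: date) -> str:
    """Classify a data set as hot, warm or cold by days since it was last accessed."""
    age_days = (today - ds.last_accessed).days
    if age_days <= HOT_MAX_AGE_DAYS:
        return "hot"
    if age_days <= WARM_MAX_AGE_DAYS:
        return "warm"
    return "cold"


def suggested_residency(ds: DataSet, today: date) -> str:
    """Hot data -> in-memory SAP HANA; warm and cold data -> Hadoop."""
    return "SAP HANA" if temperature(ds, today) == "hot" else "Hadoop"


today = date(2013, 6, 1)
for ds in [
    DataSet("open sales orders", date(2013, 5, 30)),
    DataSet("2012 web logs", date(2012, 9, 1)),
    DataSet("archived sensor data", date(2010, 1, 1)),
]:
    print(f"{ds.name}: {temperature(ds, today)} -> {suggested_residency(ds, today)}")
```

Use cases "enabled by HANA and Hadoop simultaneously" would need a query that reads from both tiers. That federation step is not shown here.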

- 19. SAP's Technology Use Case View (figure; EMC Corporation)

- 20. Big Data High-Level Software Architecture
  - Big Data Storage holds the data in memory or on SSD/HDD.
  - Big Data Database Software manages data in the Big Data Storage. It includes SQL and NoSQL DBMSs.
  - Processing Engines are software that can process and manipulate data in the Big Data Storage.
  - Analytic Software analyzes data using the Processing Engines or the Big Data Database Software.
  - Big Data Applications provide solutions for specific business problems.
  - Development Software is used to build Big Data Applications.
  - Visualization Software presents results from the Analytic Software or Big Data Applications to end users.
  - Data Capture Software on-boards and manages data from multiple Data Sources.
  - Management Software handles the operation of the Big Data implementation/solution.
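One way to read this architecture is as a dependency graph in which each layer builds on the layers it uses. The sketch below encodes the relationships described above and uses Python's standard-library `graphlib` (Python 3.9+) to print one valid bottom-up order. Two assumptions are interpretations rather than slide content: Data Capture Software feeds Big Data Storage, and Management Software is treated as cross-cutting and left out of the graph.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Each component maps to the components it builds on, following the slide's descriptions.
# Assumptions: Data Capture Software feeds Big Data Storage; Management Software is
# cross-cutting (it operates every layer) and is therefore left out of the graph.
ARCHITECTURE = {
    "Data Capture Software": {"Data Sources"},
    "Big Data Storage": {"Data Capture Software"},
    "Big Data Database Software": {"Big Data Storage"},
    "Processing Engines": {"Big Data Storage"},
    "Analytic Software": {"Processing Engines", "Big Data Database Software"},
    "Big Data Applications": {"Development Software"},
    "Visualization Software": {"Analytic Software", "Big Data Applications"},
}

# static_order() yields each component only after everything it depends on.
build_order = list(TopologicalSorter(ARCHITECTURE).static_order())
for step, component in enumerate(build_order, start=1):
    print(step, component)
```

Ties between independent components, such as Processing Engines and Big Data Database Software, may be printed in either order. What matters is that data flows from the sources up to visualization.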

- 21. Big Data Software: Other Solutions. (Figure: the same architecture mapped to Hadoop (HDFS storage, Hive/HBase database software, Mahout, Giraph and similar processing engines), Cassandra and MongoDB, each covering only some of the layers.) Big Data software solutions only handle part of the problem.

- 22. Big Data Software Architecture and HANA
  - Mapping of the architecture layers to SAP products:
    - Development Software: HANA Studio
    - Analytic Software: SAP BI Tools
    - Big Data Database Software: SAP HANA / Sybase IQ
    - Visualization Software: SAP Lumira
    - Processing Engines: "R" engine, text analytics, etc.
    - Management Software: SAP Landscape Management
    - Big Data Storage: SAP HANA (in-memory), Sybase IQ, Hadoop HDFS (SSD/HDD)
    - Data Capture Software: SAP Data Services
  - ANALYZE: Analytics!
    - Analyze and visualize Big Data using tools that best serve your business needs.
    - Reduce delays associated with complex analysis of large data sets using in-memory analytics.
    - Find new opportunities and expose hidden risks using algorithms, R integration, and predictive analysis.
    - Enable business users to access and visualize insight using charts, graphs, maps, and more.
    - Uncover hidden value from unstructured data with text analytics.
  - ACCELERATE: "Real-Time" Visibility
    - Increase business speed with cost-performance data processing options
    - In-memory processing with SAP HANA through to massively parallel processing with the SAP Sybase IQ database
    - Distributed processing of large data sets with Hadoop
  - ACQUIRE: Meet the Expanding Data Demand
    - Acquire and store large volumes of data from a variety of data sources.
    - Flexible data management capabilities delivered via the SAP HANA platform
    - The best option based on business requirements for accessibility, complexity of analytics, processing speed, and storage costs
  - See: http://www.sapbigdata.com/platform/
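The product mapping can be checked against the generic layers from slide 20. This helps show the contrast with slide 21, where each alternative covers only part of the stack. The sketch transcribes the labels from the flattened diagram, which is an interpretation. `unmapped_layers` is a hypothetical helper written for this illustration.

```python
# Layers from the generic architecture (slide 20) and the SAP products this slide places in each.
LAYERS = [
    "Big Data Storage", "Big Data Database Software", "Processing Engines",
    "Analytic Software", "Big Data Applications", "Development Software",
    "Visualization Software", "Data Capture Software", "Management Software",
]

SAP_STACK = {
    "Big Data Storage": ["SAP HANA (in-memory)", "Sybase IQ", "Hadoop HDFS"],
    "Big Data Database Software": ["SAP HANA", "Sybase IQ"],
    "Processing Engines": ["R engine", "text analytics"],
    "Analytic Software": ["SAP BI Tools"],
    "Development Software": ["HANA Studio"],
    "Visualization Software": ["SAP Lumira"],
    "Data Capture Software": ["SAP Data Services"],
    "Management Software": ["SAP Landscape Management"],
}


def unmapped_layers(stack: dict[str, list[str]]) -> list[str]:
    """Return the architecture layers for which the stack names no product."""
    return [layer for layer in LAYERS if not stack.get(layer)]


print(unmapped_layers(SAP_STACK))
```

The only layer left without a product is Big Data Applications. Read together with slide 20, these are what a customer builds with the development software (HANA Studio) rather than buys as a component.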

- 23. Presentation Content: Setting the Stage, Market, Technology, Use Cases, Summary

- 24. Looking for Big Data Potential in Your Company
  - ACQUIRE: Meet the Expanding Data Demand
    1. Acquire and store large volumes of data from a variety of data sources.
    2. Flexible data management capabilities delivered via the SAP HANA platform
    3. The best option based on business requirements for accessibility, complexity of analytics, processing speed, and storage costs
  - ACCELERATE: "Real-Time" Visibility
    1. Increase business speed with cost-performance data processing options
    2. In-memory processing with SAP HANA through to massively parallel processing with the SAP Sybase IQ database
    3. Distributed processing of large data sets with Hadoop
  - ANALYZE: Analytics!
    1. Analyze and visualize Big Data using tools that best serve your business needs.
    2. Reduce delays associated with complex analysis of large data sets using in-memory analytics.
    3. Find new opportunities and expose hidden risks using algorithms, R integration, and predictive analysis.
    4. Enable business users to access and visualize insight using charts, graphs, maps, and more.
    5. Uncover hidden value from unstructured data with text analytics.

- 25. Overcoming Objections: Use Cases
  1. "Big Data projects are too expensive."
  2. "Big Data is technology in search of a business problem to solve!"
  3. "Big Data is an IT project; we don't need to involve the business."
  4. "Big Data is just the new buzzword, just like Cloud! Soon another trend and new buzzword will come along."
  5. "We don't have the skills to use Big Data solutions."

- 26. Big Data and Competitive Advantage. Utilize your data to gain a competitive advantage! (Chart: competitiveness of fact-finders vs. fumblers, from laggards to leaders; n=1,002.) Leading businesses can outpace the competition because they can:
  - Base decisions on the latest, granular multi-structured data
  - Make decisions on analytics rather than intuition
  - Frequently reassess forecasts and plans
  - Utilize analytics to support a spectrum of strategic, operational and tactical decision making
  - Rapidly evaluate alternative scenarios
  - Source: IDC's SAP HANA Market Assessment, August 2011

- 27. Soliciting Allies. (Chart comparing business functions by revenue and by ROI from Big Data: sales, marketing, finance, logistics, customer service, R&D/NPI, IT and HR.) Source: 2012 Tata Consultancy Services (TCS) Global Study

- 28. T-Mobile USA, Inc. (Telecom): Optimize Marketing Campaign Effectiveness
  - Product: Agile Datamart
  - Results: 56x faster analysis; a report over 5 billion+ records for 33M customers executes in 9 seconds
  - Business challenges: the proliferation of offers/micro-offers is increasingly strategic in a highly competitive market; Marketing Operations needs to collect, analyze and report on the results of campaigns/offers very quickly and with great flexibility; current and future campaigns have to be fine-tuned to improve customer adoption and profitability
  - Technical challenges: with the previous technology, exploring and analyzing the data for 33M customers in detail took a lot of time
  - Benefits: dynamic read-outs on the upsell/cross-sell performance of stores and call centers; easy, fast access to the performance of all campaigns (e.g. by geography, by store); quicker forecasts of the financial impact of marketing campaigns
  - "Based on the rapid analytics that we're performing on SAP HANA, we are now able to quickly fine tune our current and future campaigns to improve the customer adoption rate, reduce churn and increase profit." (Alison Bessho, Director, Enterprise Systems Business Solutions, T-Mobile USA)
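The T-Mobile numbers can be put in perspective with some quick arithmetic. This sketch uses only the figures on the slide, plus one labeled assumption: that the "56x faster" claim applies to this particular 9-second report. Because "5 Billion+" is a lower bound, the derived figures are lower bounds too.

```python
# Figures from the T-Mobile case study slide.
RECORDS = 5e9        # "5 Billion+" records (a lower bound)
CUSTOMERS = 33e6     # 33M customers
REPORT_SECONDS = 9   # report runtime on SAP HANA
SPEEDUP = 56         # "56x faster analysis"

# Assumption: the 56x speedup applies to this particular report.
implied_previous_seconds = REPORT_SECONDS * SPEEDUP
records_per_customer = RECORDS / CUSTOMERS
records_per_second = RECORDS / REPORT_SECONDS

print(f"Implied previous runtime: {implied_previous_seconds} s (~{implied_previous_seconds / 60:.1f} min)")
print(f"Records per customer: ~{records_per_customer:.0f}")
print(f"Throughput: ~{records_per_second / 1e6:.0f} million records/s")
```

Under that assumption, the same report would have taken roughly eight and a half minutes before. The data set averages about 150 records per customer.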

- 29. University of Kentucky (Higher Education): Student Retention
  - Business challenges: enable the University to increase student retention and thus raise the graduation rate from 60% to 70% over a 10-year period; huge costs and long turnaround times for the student classification needed to improve student satisfaction and the retention rate. A 1% increase in the retention rate brings a $1.1M increase in revenue.
  - Technical challenges: lack of speed, accuracy and visibility into data analysis; handling Big Data efficiently (the SAP ECC V6 production system is 1.5 TB, and SAP BW V7 and the Oracle data warehouse combined are 4 TB)
  - Results: 420x improvement in reporting speed (reports took 2-3 seconds, against 15-20 minutes on the competing Oracle data warehouse); 15x improvement in query load time
  - Benefits: increased student retention rate; fast collection of new information related to student interactions and various student behaviors; reduced IT infrastructure costs and increased IT FTE productivity; the University can retire several systems, including Informatica, BI WebFocus (IBI), and Oracle (DB)
  - "SAP HANA offers an effective real-time data driven system which is essential to giving immediate performance feedback and increase retention rate of students, increasing millions in revenue for the University every year." (Vince Kellen, CIO, University of Kentucky)
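The quoted 420x falls inside the range implied by the two runtime ranges, and it equals the ratio of their midpoints. The slide does not say how the figure was derived. The sketch below computes the worst-case, best-case and midpoint speedups from the slide's numbers; `speedup_range` is a hypothetical helper written for this illustration.

```python
def speedup_range(old_s: tuple[float, float], new_s: tuple[float, float]) -> tuple[float, float, float]:
    """Return (worst-case, best-case, midpoint) speedups for runtimes given as (min, max) ranges."""
    worst = old_s[0] / new_s[1]   # fastest old run vs slowest new run
    best = old_s[1] / new_s[0]    # slowest old run vs fastest new run
    midpoint = (sum(old_s) / 2) / (sum(new_s) / 2)
    return worst, best, midpoint


# Oracle DW: 15-20 minutes; SAP HANA: 2-3 seconds (figures from the slide).
worst, best, mid = speedup_range((15 * 60, 20 * 60), (2, 3))
print(worst, best, mid)  # 300.0 600.0 420.0
```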

- 30. Hardware Preventative Maintenance
  - Business challenge: a computer server manufacturer wants to implement effective preventative maintenance by identifying problems as they arise, then taking prompt action to prevent the problem from occurring at other customer sites.
  - Technical challenges:
    - Identifying problems by analyzing text data from call centers and customer questionnaires, together with the server logs generated by their hardware
    - Combining the results with CRM, sales and manufacturing data to predict which servers are likely to have problems in the future
  - Solution:
    - Use SAP Data Services to analyze call center data and questionnaires stored in Hadoop and identify potential problems
    - Use HANA to merge the results from Hadoop with server logs to identify indicators of potential problems in those logs
    - Combine with CRM, bill of materials and production/manufacturing data to identify cases where preventative maintenance would help
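The three solution steps form a simple pipeline: text signals, then confirmation from machine logs, then a join with customer data. The sketch below illustrates that flow in plain Python. It does not use SAP Data Services, Hadoop or HANA APIs. The keyword list, thresholds, model names and customer sites are all hypothetical. Only the CRM installed-base join is shown; the bill-of-materials and production data joins are omitted.

```python
from collections import Counter

# Hypothetical problem vocabulary; real text analysis (e.g. SAP Data Services) is far richer.
PROBLEM_TERMS = ("overheat", "fan failure", "disk error")


def flag_models_from_text(tickets: list[tuple[str, str]]) -> Counter:
    """Step 1: count call-center/questionnaire texts that mention a problem term, per server model."""
    counts: Counter = Counter()
    for model, text in tickets:
        lowered = text.lower()
        if any(term in lowered for term in PROBLEM_TERMS):
            counts[model] += 1
    return counts


def confirm_with_logs(text_counts: Counter, log_errors: dict[str, int],
                      min_mentions: int = 2, min_log_errors: int = 10) -> set[str]:
    """Step 2: keep models where both customer text and server logs indicate a problem."""
    return {model for model, n in text_counts.items()
            if n >= min_mentions and log_errors.get(model, 0) >= min_log_errors}


def sites_for_preventive_maintenance(suspects: set[str],
                                     installed_base: list[tuple[str, str]]) -> list[str]:
    """Step 3: join with CRM installed-base data to list customer sites running suspect models."""
    return sorted(site for site, model in installed_base if model in suspects)


tickets = [
    ("X100", "Server overheat alarm at night"),
    ("X100", "Fan failure reported twice"),
    ("Z300", "Question about an invoice"),
    ("Z300", "Disk error after firmware update"),
]
log_errors = {"X100": 42, "Z300": 3}
installed_base = [("Acme Berlin", "X100"), ("Globex Austin", "Z300"), ("Initech Paris", "X100")]

suspects = confirm_with_logs(flag_models_from_text(tickets), log_errors)
print(sites_for_preventive_maintenance(suspects, installed_base))
```

Requiring agreement between customer text and server logs is one way to cut false alarms before scheduling maintenance visits. It is a design choice made for this sketch, not something the case study specifies.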

- 31. Data Warehouse Migration
  - Business challenges: a high-tech company with a major web presence uses non-SAP software for its data warehouse to analyze the activity on its web properties and combine it with data in SAP Business Suite. It wants to both reduce the cost and improve the responsiveness of its data warehouse solution by moving to a combination of SAP HANA and Hadoop.
  - Technical challenge: how to complete the migration without disrupting existing reporting processes.
  - Solution (a four-step process):
    1. Replicate data in Hadoop. SAP Data Services is used to replicate into Hadoop all data from web logs and SAP Business Suite that the current data warehouse captures.
    2. Aggregate data in Hadoop. The aggregation process of the existing data warehouse is reimplemented in Hadoop, and the aggregate results are fed back to the existing data warehouse, significantly reducing its workload.
    3. Copy the aggregate data to HANA. The aggregate data created by Hadoop is also copied to HANA, together with the historical aggregate data already in the existing data warehouse. As a result, HANA eventually holds a complete copy of the data in the existing data warehouse.
    4. Replace reporting with SAP HANA. New reports are developed in HANA to replace the reports in the original data warehouse. Once this is complete, the original data warehouse will be decommissioned.
  - The end result is a faster, more responsive and lower-cost data warehouse built on HANA and Hadoop.
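The four migration steps can be sketched as a small in-memory simulation. It shows the order of operations and a reconciliation gate before decommissioning. Python dicts and lists stand in for Hadoop, the legacy data warehouse and HANA. The web-log schema (`day`, `page`, `views`) and the "daily views per page" aggregation are hypothetical. No SAP Data Services or HANA APIs are used.

```python
from collections import defaultdict

Row = tuple[str, str, int]  # hypothetical web-log row: (day, page, views)
Key = tuple[str, str]       # aggregate key: (day, page)


def replicate_to_hadoop(source_rows: list[Row]) -> list[Row]:
    """Step 1: copy the raw rows the legacy data warehouse captures into Hadoop (a list here)."""
    return list(source_rows)


def aggregate_in_hadoop(raw: list[Row]) -> dict[Key, int]:
    """Step 2: re-implement the legacy aggregation (daily views per page) on the Hadoop copy."""
    totals: dict[Key, int] = defaultdict(int)
    for day, page, views in raw:
        totals[(day, page)] += views
    return dict(totals)


def migrate(source_rows: list[Row], legacy_dw: dict[Key, int]) -> tuple[dict[Key, int], bool]:
    historical = dict(legacy_dw)                 # aggregates the legacy DW already holds
    aggregates = aggregate_in_hadoop(replicate_to_hadoop(source_rows))
    legacy_dw.update(aggregates)                 # Step 2: results fed back to the legacy DW
    hana = {**historical, **aggregates}          # Step 3: new + historical aggregates copied to HANA
    # Step 4 gate: only decommission once HANA holds a complete copy of the legacy DW.
    ready_to_decommission = hana == legacy_dw
    return hana, ready_to_decommission


legacy = {("2013-05-01", "/home"): 900}
rows = [("2013-05-02", "/home", 500), ("2013-05-02", "/home", 250), ("2013-05-02", "/cart", 40)]
hana, ready = migrate(rows, legacy)
print(hana[("2013-05-02", "/home")], ready)  # 750 True
```

The legacy warehouse keeps serving reports throughout, because it receives the Hadoop aggregates in step 2. This is how the approach avoids disrupting existing reporting while HANA catches up.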

- 32. Presentation Content: Setting the Stage, Market, Technology, Case Study, Summary

- 33. Summary
  1. The Big Data market is not going away!
  2. There are 3 distinct components of the Big Data market.
  3. It's not a new trend but a way for technology to enable your business.
  4. Case studies help you visualize your own company's Big Data opportunities: benchmark and assess!
  5. Don't make the journey alone: there are many resources available to make your journey successful!

- 34. Q&A: Questions?


- 36. Thank you for participating. Learn more year-round at www.asug.com