Simple, Modular and Extensible Big Data Platform Concept

A few slides outlining a simple, modular, and extensible big data platform concept that leverages the growing ecosystem.

Recommended

Parallel Linear Regression in Iterative Reduce and YARN

DataWorks Summit: Online learning techniques, such as Stochastic Gradient Descent (SGD), are powerful when applied to risk minimization and convex games on large problems. However, their sequential design prevents them from taking advantage of newer distributed frameworks such as Hadoop/MapReduce. In this session, we will take a look at how we parallelized linear regression parameter optimization on the next-gen YARN framework Iterative Reduce.

Lightening Fast Big Data Analytics using Apache Spark

Manish Gupta

Quick introduction to Hadoop and its limitations

Introduction of Spark

Spark Architecture

Programming model of Spark

Demo

Spark Use Cases

Distributed Deep Learning with Apache Spark and TensorFlow with Jim Dowling

Databricks: Methods that scale with available computation are the future of AI. Distributed deep learning is one such method that enables data scientists to massively increase their productivity by (1) running parallel experiments over many devices (GPUs/TPUs/servers) and (2) massively reducing training time by distributing the training of a single network over many devices. Apache Spark is a key enabling platform for distributed deep learning, as it enables different deep learning frameworks to be embedded in Spark workflows in a secure end-to-end pipeline. In this talk, we examine the different ways in which TensorFlow can be included in Spark workflows to build distributed deep learning applications.

We will analyse the different frameworks for integrating Spark with TensorFlow, from Horovod to TensorFlowOnSpark to Databricks’ Deep Learning Pipelines. We will also look at where you will find the bottlenecks when training models (in your frameworks, the network, GPUs, and with your data scientists) and how to get around them. We will look at how to use the Spark Estimator model to perform hyper-parameter optimization with Spark/TensorFlow and model-architecture search, where Spark executors perform experiments in parallel to automatically find good model architectures.

The talk will include a live demonstration of training and inference for a TensorFlow application embedded in a Spark pipeline written in a Jupyter notebook on the Hops platform. We will show how to debug the application using both the Spark UI and TensorBoard, and how to examine logs and monitor training. The demo will be run on the Hops platform, currently used by over 450 researchers and students in Sweden, as well as at companies such as Scania and Ericsson.

Summary machine learning and model deployment

Novita Sari: This document discusses machine learning and model deployment. It provides an overview of machine learning, including the types of problems it can be applied to and common machine learning techniques. It then discusses the typical machine learning workflow, including data profiling, exploration, feature engineering, modeling, evaluation, and deployment. It also covers the two main types of machine learning - supervised and unsupervised learning. Finally, it discusses options for deploying machine learning models, including rewriting code in a different language or using an API-first approach. It provides steps for creating a machine learning API using the Python framework Flask.

PyMADlib - A Python wrapper for MADlib : in-database, parallel, machine learn...

Srivatsan Ramanujam: These are slides from my talk at DataDay Texas in Austin on 30 Mar 2013

(http://2013.datadaytexas.com/schedule)

Favorite and Fork PyMADlib on GitHub: https://github.com/gopivotal/pymadlib

MADlib: http://madlib.net

Pivotal Data Labs - Technology and Tools in our Data Scientist's Arsenal

Srivatsan Ramanujam: These slides give an overview of the technology and the tools used by Data Scientists at Pivotal Data Labs. This includes Procedural Languages like PL/Python, PL/R, PL/Java, PL/Perl and the parallel, in-database machine learning library MADlib. The slides also highlight the power and flexibility of the Pivotal platform from embracing open source libraries in Python, R or Java to using new computing paradigms such as Spark on Pivotal HD.

Hopsworks at Google AI Huddle, Sunnyvale

Jim Dowling: Hopsworks is a platform for designing and operating end-to-end machine learning using PySpark and TensorFlow/PyTorch. Early access is now available on GCP. Hopsworks includes the industry's first Feature Store. Hopsworks is open-source.

Neo4j vs giraph

Nishant Gandhi: This document surveys and compares three large-scale graph processing platforms: Apache Giraph, Hadoop-MapReduce, and Neo4j. It analyzes their programming models and performance based on previous studies. Hadoop was found to have the worst performance for graph algorithms due to its lack of optimizations for graphs. Giraph was generally the fastest platform due to its in-memory computations and message passing model. Neo4j performed well for small graphs due to its caching but did not scale as well as distributed platforms for large graphs. The document concludes that distributed graph-specific platforms like Giraph outperform generic platforms for most graph problems.

Pivotal OSS meetup - MADlib and PivotalR

go-pivotal: With the explosion of big data, the need for fast and inexpensive analytics solutions has become a key basis of competition in many industries. Extracting the value of big data with analytics can be complex, and requires advanced skills.

At Pivotal, we are building open-source solutions (MADlib, PivotalR, PyMadlib) to simplify this process for the user, while maintaining the efficiency necessary for big data analysis.

This talk will provide information about MADlib, an open source library of SQL-based algorithms for machine learning, data mining and statistics that run at large scale within a database engine, with no need for data import/export to other tools.

It provides an overview of the library’s architecture and compares various statistical methods with those available in Apache Mahout.

We also introduce PivotalR, an R-based wrapper for MADlib that allows data scientists and programmers to access the power of MADlib along with the ease of use of R.

A Pipeline for Distributed Topic and Sentiment Analysis of Tweets on Pivotal ...

Srivatsan Ramanujam: Unstructured data is everywhere - in the form of posts, status updates, bloglets or news feeds in social media, or in the form of customer interactions in call center CRM systems. While many organizations study and monitor social media for tracking brand value and targeting specific customer segments, in our experience blending the unstructured data with the structured data to supplement data science models has been far more effective than working with it independently.

In this talk we will showcase an end-to-end topic and sentiment analysis pipeline we've built on the Pivotal Greenplum Database platform for Twitter feeds from GNIP, using open source tools like MADlib and PL/Python. We've used this pipeline to build regression models that predict commodity futures from tweets and to enhance churn models for telecom through topic and sentiment analysis of call center transcripts. All of this was possible because of the flexibility and extensibility of the platform we worked with.

Spark 101

Mohit Garg: Spark is an in-memory cluster computing framework that provides high performance for large-scale data processing. It excels over Hadoop by keeping data in memory as RDDs (Resilient Distributed Datasets) for faster processing. The document provides an overview of Spark architecture including its core-based execution model compared to Hadoop's JVM-based model. It also demonstrates Spark's programming model using RDD transformations and actions through an example of log mining, showing how jobs are lazily evaluated and distributed across the cluster.

NextGen Apache Hadoop MapReduce

Hortonworks: Arun C Murthy, Founder and Architect at Hortonworks Inc., talks about the upcoming Next Generation Apache Hadoop MapReduce framework at the Hadoop Summit, 2011.

Big learning 1.2

Mohit Garg: This document provides a summary of practical machine learning on big data platforms. It begins with an introduction and agenda, then provides a quick brief on the machine learning process. It discusses the current landscape of open source tools, including evolutionary drivers and examples. It covers case studies from Twitter and their experience. Finally, it discusses architectural forces like Moore's Law and Kryder's Law that are shaping the field. The document aims to present a unified approach for machine learning on big data platforms and discuss how industry leaders are implementing these techniques.

Distributed TensorFlow on Hadoop, Mesos, Kubernetes, Spark

Jan Wiegelmann: Run more experiments faster and in parallel. Share and reproduce research. Go from research to real products.

Hivemall: Scalable machine learning library for Apache Hive/Spark/Pig

DataWorks Summit/Hadoop Summit: This document discusses Hivemall, an open source machine learning library for Apache Hive, Spark, and Pig.

Hivemall is a scalable machine learning library built as a collection of Hive UDFs that allows users to perform machine learning tasks like classification, regression, and recommendation using SQL queries. Hivemall supports many popular machine learning algorithms and can run in parallel on large datasets using Apache Spark, Hive, Pig, and other big data frameworks. The document outlines how to run a machine learning workflow with Hivemall on Spark, including loading data, building a model, and making predictions.

Extending Hadoop for Fun & Profit

Milind Bhandarkar: The Apache Hadoop project and the Hadoop ecosystem have been designed to be extremely flexible and extensible. HDFS, YARN, and MapReduce combined have more than 1000 configuration parameters that allow users to tune the performance of Hadoop applications and, more importantly, extend Hadoop with application-specific functionality without having to modify any of the core Hadoop code.

In this talk, I will start with simple extensions, such as writing a new InputFormat to efficiently process video files. I will present some extensions that boost application performance, such as optimized compression codecs and pluggable shuffle implementations. With the refactoring of the MapReduce framework and the emergence of YARN as a generic resource manager for Hadoop, one can extend Hadoop further by implementing new computation paradigms.

I will discuss one such computation framework, which allows Message Passing applications to run in the Hadoop cluster alongside MapReduce. I will conclude by outlining some of our ongoing work that extends HDFS by removing the namespace limitations of the current Namenode implementation.

Hopsworks in the cloud Berlin Buzzwords 2019

Jim Dowling: This talk, given at Berlin Buzzwords 2019, describes the recent progress in making Hopsworks a cloud-native platform, with HA data-center support added for HopsFS.

Spark Streaming and MLlib - Hyderabad Spark Group

Phaneendra Chiruvella

- A brief introduction to Spark Core

- Introduction to Spark Streaming

- A demo of Streaming that evaluates the top hashtags being used

- Introduction to Spark MLlib

- A Demo of MLlib by building a simple movie recommendation engine

HopsML Meetup talk on Hopsworks + ROCm/AMD June 2019

Jim Dowling

- The document discusses Hopsworks now supporting both Nvidia (CUDA) and AMD (ROCm) GPUs for deep learning workloads.

- New AMD GPUs like the Vega R7 and upcoming Navi architecture GPUs will challenge Nvidia's dominance in deep learning by offering 1.25x higher performance per clock and 1.5x higher performance per watt along with GDDR6 memory and PCIe 4.0 support.

- Hopsworks provides a platform for distributed deep learning training and hyperparameter optimization using frameworks like TensorFlow and Spark that can run on ROCm-enabled AMD GPUs in addition to Nvidia GPUs.

Cloud Services for Big Data Analytics

Geoffrey Fox: We present a software model built on the Apache software stack (ABDS) that is widely used in modern cloud computing, which we enhance with HPC concepts to derive HPC-ABDS.

We discuss layers in this stack

We give examples of integrating ABDS with HPC

We discuss how to implement this in a world of multiple infrastructures and evolving software environments for users, developers and administrators

We present Cloudmesh as supporting Software-Defined Distributed System as a Service or SDDSaaS with multiple services on multiple clouds/HPC systems.

We explain the functionality of Cloudmesh as well as the 3 administrator and 3 user modes supported

Practical Distributed Machine Learning Pipelines on Hadoop

DataWorks Summit: This document summarizes machine learning pipelines in Apache Spark using MLlib. It introduces Spark DataFrames for structured data manipulation and Apache Spark MLlib for building machine learning workflows. An example text classification pipeline is presented to demonstrate loading data, feature extraction, training a logistic regression model, and evaluating performance. Parameter tuning is discussed as an important part of the machine learning process.

Apache Spark 2.4 Bridges the Gap Between Big Data and Deep Learning

DataWorks Summit: Big data and AI are joined at the hip: AI applications require massive amounts of training data to build state-of-the-art models. The problem is, big data frameworks like Apache Spark and distributed deep learning frameworks like TensorFlow don’t play well together due to the disparity between how big data jobs are executed and how deep learning jobs are executed.

Apache Spark 2.4 introduced a new scheduling primitive: barrier scheduling. Users can indicate to Spark whether it should use MapReduce mode or barrier mode at each stage of the pipeline, which makes it easy to embed distributed deep learning training as a Spark stage and simplify the training workflow. In this talk, I will demonstrate step by step how to build a real-world pipeline that combines data processing with Spark and deep learning training with TensorFlow. I will also share best practices and hands-on experience to show the power of this new feature, and invite more discussion on this topic.

The Bitter Lesson of ML Pipelines

Jim Dowling: Wasp4All Conference talk, Nov 2019.

https://wasp-sweden.org/event/wasp4all-future-computing-platforms-for-x/

Why Apache Spark is the Heir to MapReduce in the Hadoop Ecosystem

Cloudera, Inc.: Learn why Apache Spark is replacing MapReduce as the default general data processing engine for Hadoop ecosystem components.

DASK and Apache Spark

Databricks: Gurpreet Singh from Microsoft gave a talk on scaling Python for data analysis and machine learning using DASK and Apache Spark. He discussed the challenges of scaling the Python data stack and compared options like DASK, Spark, and Spark MLlib. He provided examples of using DASK and PySpark DataFrames for parallel processing and showed how DASK-ML can be used to parallelize Scikit-Learn models. Distributed deep learning with tools like Project Hydrogen was also covered.

Generating Recommendations at Amazon Scale with Apache Spark and Amazon DSSTNE

DataWorks Summit/Hadoop Summit: This document discusses using Apache Spark and Amazon DSSTNE to generate product recommendations at scale. It summarizes that Amazon uses Spark and Zeppelin notebooks to allow data scientists to develop queries in an agile manner. Deep learning jobs are run on GPUs using Amazon ECS, while CPU jobs run on Amazon EMR. DSSTNE is optimized for large sparse neural networks and allows defining networks in a human-readable JSON format to efficiently handle Amazon's large recommendation problems.

Hadoop Internals (2.3.0 or later)

Emilio Coppa: Apache Hadoop: design and implementation. Lecture in the Big data computing course (http://twiki.di.uniroma1.it/twiki/view/BDC/WebHome), Department of Computer Science, Sapienza University of Rome.

Enterprise Scale Topological Data Analysis Using Spark

Alpine Data: This document discusses scaling topological data analysis (TDA) using the Mapper algorithm to analyze large datasets. It describes how the authors built the first open-source scalable implementation of Mapper called Betti Mapper using Spark. Betti Mapper uses locality-sensitive hashing to bin data points and compute topological summaries on prototype points to achieve an 8-11x performance improvement over a naive Spark implementation. The key aspects of Betti Mapper that enable scaling to enterprise datasets are locality-sensitive hashing for sampling and using prototype points to reduce the distance matrix computation.

Foxvalley bigdata

Tom Rogers: What is Big Data and the Hadoop ecosystem? An introduction to an open source framework for using big data.

Architecting Your First Big Data Implementation

Adaryl "Bob" Wakefield, MBA: This document provides an overview of architecting a first big data implementation. It defines key concepts like Hadoop, NoSQL databases, and real-time processing. It recommends asking questions about data, technology stack, and skills before starting a project. Distributed file systems, batch tools, and streaming systems like Kafka are important technologies for big data architectures. The document emphasizes moving from batch to real-time processing as a major opportunity.

Similar to Simple, Modular and Extensible Big Data Platform Concept (20)

Foxvalley bigdata

Foxvalley bigdataTom Rogers What is Big Data and the Hadoop ecosystem? An introduction to an open source framework for using big data.

Architecting Your First Big Data Implementation

Architecting Your First Big Data ImplementationAdaryl "Bob" Wakefield, MBA This document provides an overview of architecting a first big data implementation. It defines key concepts like Hadoop, NoSQL databases, and real-time processing. It recommends asking questions about data, technology stack, and skills before starting a project. Distributed file systems, batch tools, and streaming systems like Kafka are important technologies for big data architectures. The document emphasizes moving from batch to real-time processing as a major opportunity.

1.demystifying big data & hadoop

1.demystifying big data & hadoopdatabloginfo This document provides an overview of big data and Hadoop. It defines big data as large, complex datasets that are difficult to store and process using traditional systems. Examples of big data sources are listed. Hadoop is introduced as an open source framework for distributed processing of large datasets across commodity computers. Key components of Hadoop like HDFS for data storage and MapReduce for parallel processing are described. Reasons for moving to Hadoop include its ability to handle large, unstructured datasets across clusters of servers in a scalable way. The future of data growth and the Hadoop ecosystem are also discussed briefly.

Hadoop and the Data Warehouse: When to Use Which

Hadoop and the Data Warehouse: When to Use Which DataWorks Summit In recent years, Apache™ Hadoop® has emerged from humble beginnings to disrupt the traditional disciplines of information management. As with all technology innovation, hype is rampant, and data professionals are easily overwhelmed by diverse opinions and confusing messages.

Even seasoned practitioners sometimes miss the point, claiming for example that Hadoop replaces relational databases and is becoming the new data warehouse. It is easy to see where these claims originate since both Hadoop and Teradata® systems run in parallel, scale up to enormous data volumes and have shared-nothing architectures. At a conceptual level, it is easy to think they are interchangeable, but the differences overwhelm the similarities. This session will shed light on the differences and help architects, engineering executives, and data scientists identify when to deploy Hadoop and when it is best to use MPP relational database in a data warehouse, discovery platform, or other workload-specific applications.

Two of the most trusted experts in their fields, Steve Wooledge, VP of Product Marketing from Teradata and Jim Walker of Hortonworks will examine how big data technologies are being used today by practical big data practitioners.

Big Data and Cloud Computing

Big Data and Cloud ComputingFarzad Nozarian This document discusses cloud and big data technologies. It provides an overview of Hadoop and its ecosystem, which includes components like HDFS, MapReduce, HBase, Zookeeper, Pig and Hive. It also describes how data is stored in HDFS and HBase, and how MapReduce can be used for parallel processing across large datasets. Finally, it gives examples of using MapReduce to implement algorithms for word counting, building inverted indexes and performing joins.

Fundamentals of big data analytics and Hadoop

Fundamentals of big data analytics and HadoopArchana Gopinath What is Big Data Analytics?

Tools of Big Data, types of Big Data & Basics of Hadoop, Modules and Benefits of Hadoop.

Big Data Analytics with Hadoop

Big Data Analytics with HadoopPhilippe Julio Hadoop, flexible and available architecture for large scale computation and data processing on a network of commodity hardware.

Big data and hadoop

Big data and hadoopPrashanth Yennampelli This document discusses big data and the Apache Hadoop framework. It defines big data as large, complex datasets that are difficult to process using traditional tools. Hadoop is an open-source framework for distributed storage and processing of big data across commodity hardware. It has two main components - the Hadoop Distributed File System (HDFS) for storage, and MapReduce for processing. HDFS stores data across clusters of machines with redundancy, while MapReduce splits tasks across processors and handles shuffling and sorting of data. Hadoop allows cost-effective processing of large, diverse datasets and has become a standard for big data.

M. Florence Dayana - Hadoop Foundation for Analytics.pptx

M. Florence Dayana - Hadoop Foundation for Analytics.pptxDr.Florence Dayana Hadoop Foundation for Analytics

History of Hadoop

Features of Hadoop

Key Advantages of Hadoop

Why Hadoop

Versions of Hadoop

Eco Projects

Essential of Hadoop ecosystem

RDBMS versus Hadoop

Key Aspects of Hadoop

Components of Hadoop

How to use Big Data and Data Lake concept in business using Hadoop and Spark...

How to use Big Data and Data Lake concept in business using Hadoop and Spark...Institute of Contemporary Sciences Big Data is the reality of modern business: from big companies to small ones, everybody is trying to find their own benefit. Big Data technologies are not meant to replace traditional ones, but to be complementary to them. In this presentation you will hear what is Big Data and Data Lake and what are the most popular technologies used in Big Data world. We will also speak about Hadoop and Spark, and how they integrate with traditional systems and their benefits.

Big Data Practice_Planning_steps_RK

Big Data Practice_Planning_steps_RKRajesh Jayarman This document provides an overview of big data fundamentals and considerations for setting up a big data practice. It discusses key big data concepts like the four V's of big data. It also outlines common big data questions around business context, architecture, skills, and presents sample reference architectures. The document recommends starting a big data practice by identifying use cases, gaining management commitment, and setting up a center of excellence. It provides an example use case of retail web log analysis and presents big data architecture patterns.

Big Data Open Source Technologies

Big Data Open Source Technologiesneeraj rathore This presentation provides an overview of big data open source technologies. It defines big data as large amounts of data from various sources in different formats that traditional databases cannot handle. It discusses that big data technologies are needed to analyze and extract information from extremely large and complex data sets. The top technologies are divided into data storage, analytics, mining and visualization. Several prominent open source technologies are described for each category, including Apache Hadoop, Cassandra, MongoDB, Apache Spark, Presto and ElasticSearch. The presentation provides details on what each technology is used for and its history.

Big Data Open Source Tools and Trends: Enable Real-Time Business Intelligence...

Big Data Open Source Tools and Trends: Enable Real-Time Business Intelligence...Perficient, Inc. This document discusses big data tools and trends that enable real-time business intelligence from machine logs. It provides an overview of Perficient, a leading IT consulting firm, and introduces the speakers Eric Roch and Ben Hahn. It then covers topics like what constitutes big data, how machine data is a source of big data, and how tools like Hadoop, Storm, Elasticsearch can be used to extract insights from machine data in real-time through open source solutions and functional programming approaches like MapReduce. It also demonstrates a sample data analytics workflow using these tools.

MODULE 1: Introduction to Big Data Analytics.pptx

MODULE 1: Introduction to Big Data Analytics.pptxNiramayKolalle This presentation introduces Big Data Analytics, its significance, and its impact on various industries. It explains how massive data generation, processing frameworks, and analytical techniques are transforming decision-making and business intelligence.

1. Introduction to Big Data

The world generates over 400 million terabytes of data daily.

Each person contributes approximately 0.0635 terabytes of data every day.

India has 151 data centers, playing a key role in global data management.

2. Characteristics of Big Data

The presentation discusses the 3Vs (Volume, Velocity, Variety) and extends to more Vs such as:

Veracity (trustworthiness of data),

Value (usefulness of data),

Variability (inconsistent data patterns),

Visualization (interpreting data effectively).

3. Big Data Technologies and Applications

Hadoop Ecosystem: Open-source framework enabling scalable and distributed processing.

HDFS (Hadoop Distributed File System): Stores large files efficiently across multiple servers.

MapReduce: Processes large datasets using parallel computation.

Apache Spark: In-memory processing framework, 100x faster than MapReduce.

4. Big Data Storage and Processing Tools

Data Storage: HDFS, HBase (NoSQL database).

Data Processing: MapReduce, Apache Spark.

Query Execution: Hive, Pig, Apache Drill.

Machine Learning: Mahout, MLlib.

Cluster Management: Zookeeper, Ambari.

5. Hadoop Ecosystem Components

YARN (Yet Another Resource Negotiator): Allocates resources for parallel processing.

Apache Hive: SQL-like querying for Hadoop.

Apache Pig: High-level data flow language reducing coding complexity.

Apache Kafka: Real-time message streaming platform.

Apache HBase: NoSQL database for Hadoop.

Apache Oozie: Workflow scheduler for handling large-scale data jobs.

Apache Flume & Sqoop: Data ingestion tools for structured and unstructured data.

6. Data Processing Frameworks

Batch Processing: Suitable for historical data analysis (Hadoop, MapReduce).

Real-Time Processing: Required for instant decision-making (Apache Kafka, Apache Storm).

7. Big Data Applications

Healthcare: Predictive analytics for disease outbreaks and patient monitoring.

Retail & E-Commerce: Personalized recommendations and fraud detection.

Finance: Risk assessment, algorithmic trading, fraud prevention.

Social Media & Advertising: Sentiment analysis, targeted marketing.

IoT & Smart Cities: Traffic monitoring, energy management.

8. Challenges and Limitations of Big Data

Data Privacy & Security: Handling sensitive user information securely.

Processing Speed: Traditional systems struggle with real-time analytics.

Scalability: Managing exponentially growing data volumes.

Conclusion:

This presentation covers the fundamental concepts, technologies, and real-world applications of Big Data Analytics. By leveraging Hadoop, Spark, and other big data tools, businesses can extract valuable insights, enhance efficiency, and improve decision-making processes.

Apache Hadoop Hive

Apache Hadoop HiveSome corner at the Laboratory Facebook started work on Apache Hive in 2007 in response to its data growth.

The existing ETL system began to fail within a few years as more people joined Facebook.

In August 2008, Facebook decided to move to a more scalable open-source Hadoop environment: Hive.

Facebook, Netflix and Amazon support the Apache Hive SQL dialect, now known as HiveQL.

Azure Cafe Marketplace with Hortonworks March 31 2016

Azure Cafe Marketplace with Hortonworks March 31 2016Joan Novino Azure Big Data: “Got Data? Go Modern and Monetize”.

In this session you will learn how Hortonworks Data Platform (HDP), architected, developed, and built completely in the open, provides an enterprise-ready data platform for adopting a Modern Data Architecture.

Hadoop in a Nutshell

Hadoop in a NutshellAnthony Thomas The document provides an overview of Hadoop, including:

- What Hadoop is and its core modules like HDFS, YARN, and MapReduce.

- Reasons for using Hadoop like its ability to process large datasets faster across clusters and provide predictive analytics.

- When Hadoop should and should not be used, such as for real-time analytics versus large, diverse datasets.

- Options for deploying Hadoop including as a service on cloud platforms, on infrastructure as a service providers, or on-premise with different distributions.

- Components that make up the Hadoop ecosystem like Pig, Hive, HBase, and Mahout.

Big data and hadoop overvew

Big data and hadoop overvewKunal Khanna The document provides an overview of big data and Hadoop, discussing what big data is, current trends and challenges, approaches to solving big data problems including distributed computing, NoSQL, and Hadoop, and introduces HDFS and the MapReduce framework in Hadoop for distributed storage and processing of large datasets.

Hadoop - Architectural road map for Hadoop Ecosystem

Hadoop - Architectural road map for Hadoop Ecosystemnallagangus This document provides an overview of an architectural roadmap for implementing a Hadoop ecosystem. It begins with definitions of big data and Hadoop's history. It then describes the core components of Hadoop, including HDFS, MapReduce, YARN, and ecosystem tools for abstraction, data ingestion, real-time access, workflow, and analytics. Finally, it discusses security enhancements that have been added to Hadoop as it has become more mainstream.

Cloudera Impala - San Diego Big Data Meetup August 13th 2014

Cloudera Impala - San Diego Big Data Meetup August 13th 2014cdmaxime Cloudera Impala presentation to San Diego Big Data Meetup (http://www.meetup.com/sdbigdata/events/189420582/)

Simple, Modular and Extensible Big Data Platform Concept

- 1. Beyond the Big Elephant Satish Mohan

- 2. Data
  - The big data ecosystem is evolving and changing rapidly.
  - Data grows faster than Moore’s law
    - massive, unstructured, and dirty
    - don’t always know what questions to answer
  - Driving architectural transition
    - scale up -> scale out
    - compute, network, storage
  - [Chart: Moore's Law vs. Overall Data, 2010 to 2015]

- 3. Growing Landscape
  - [Figure: big data landscape map, created by www.bigdata-startups.com. Labels recoverable from the image: Databases / Data warehousing; Dremel; Hadoop; Data Analysis & Platforms; Operational; Big Data search; Business Intelligence; Data Mining; jHepWork; Social; Corona; Graphs; Document Store; Raven DB; KeyValue; Multimodel; Object databases; Picolisp; XML Databases; Grid Solutions; Multidimensional; Multivalue database; Data aggregation]

- 4. Growing Landscape: a major driver of IT spending
  - $232 billion in spending through 2016 (Gartner)
  - $3.6 billion injected into startups focused on big data (2013)
  - Wikibon big data market distribution: Services 44%, Software 19%, Hardware 37%
  - Source: http://wikibon.org/wiki/v/Big_Data_Vendor_Revenue_and_Market_Forecast_2012-2017
  - [Figure: the landscape map from slide 3 shown again, created by www.bigdata-startups.com]

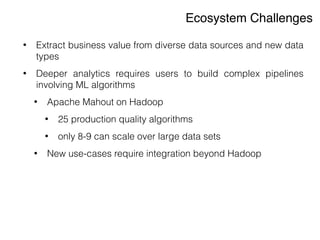

- 5. Ecosystem Challenges
  - Building a working data processing environment has become a challenging and highly complex task.
  - Exponential growth of frameworks, standard libraries and transitive dependencies
  - The constant flow of new features, bug fixes, and other changes is almost a disaster
  - Struggle to convert early experiments into a scalable environment for managing data (however big)

- 6. Ecosystem Challenges
  - Extract business value from diverse data sources and new data types
  - Deeper analytics requires users to build complex pipelines involving ML algorithms
    - Apache Mahout on Hadoop
      - 25 production quality algorithms
      - only 8-9 can scale over large data sets
  - New use-cases require integration beyond Hadoop

- 7. Apache Hadoop
  - The de-facto standard for data processing is rarely, if ever, used in isolation.
    - input comes from other frameworks
    - output gets consumed by other frameworks
  - Good for batch processing and data-parallel processing
  - Beyond Hadoop Map-Reduce
    - real-time computation and programming models
    - multiple topologies, mixed workloads, multi-tenancy
    - reduced latency between batch and end-use services
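
To make the "batch, data-parallel" model on slide 7 concrete, here is a minimal single-process sketch of the map, shuffle and reduce phases, using word count as the example job. It is plain Python rather than the Hadoop API; the function names `map_phase`, `shuffle`, `reduce_phase` and `word_count` are illustrative assumptions, not part of the deck.

```python
from collections import defaultdict
from itertools import chain


def map_phase(record):
    """Emit one (word, 1) pair per word in a single input record."""
    return [(word.lower(), 1) for word in record.split()]


def shuffle(pairs):
    """Group values by key, the step a framework performs between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Combine all values seen for one key."""
    return key, sum(values)


def word_count(records):
    # Each record is mapped independently, which is what makes the job data-parallel.
    mapped = chain.from_iterable(map_phase(r) for r in records)
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


counts = word_count(["big data", "Big elephant"])
```

In a real cluster the map calls run on different nodes and the shuffle moves data over the network, which is one reason this model suits batch work better than the low-latency use cases listed under "Beyond Hadoop Map-Reduce".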

- 8. Hadoop Ecosystem - Technology Partnerships Jan 2013 Data, Datameer Hadoop software distribution ties into Active Directory, Microsoft's System Center, and Microsoft virtualization technologies to simplify deployment and management.

- 9. Platform Goals: an integrated infrastructure that allows emerging technologies to take advantage of our existing ecosystem and keep pace with end use cases
  - Consistent, compact and flexible means of integrating, deploying and managing containerised big data applications, services and frameworks
  - Unification of data computation models: batch, interactive, and streaming
  - Efficient resource isolation and sharing models that allow multiple services and frameworks to leverage resources across shared pools on demand
  - Simple, Modular and Extensible

- 10. Key Elements [diagram labels: Resource Manager; Unified Framework; Applications / Frameworks / Services; Distributed Storage; Abstract APIs]

- 11. Platform - Core [diagram labels: Applications / Services / Frameworks; Unified Framework; Distributed Storage; SPARK; Abstract APIs; Red Hat Storage; Resource Manager; MESOS; Shark SQL; Streaming. Legend: Core, Partner, Community]

- 12. Platform - Extend through Partnerships [diagram labels: Applications / Services / Frameworks; Unified Framework; Distributed Storage; SPARK; Abstract APIs; Red Hat Storage; HDFS; Tachyon; MapR; Resource Manager; MESOS; YARN; Shark SQL; Streaming; GraphX; MLlib; BlinkDB; Hadoop; Hive; Storm; MPI; Marathon; Chronos. Legend: Core, Partner, Community]

- 13. Perfection is not the immediate goal. Abstraction is what we need

- 14. Backup Slides

- 15. Mesos - mesos.apache.org (Resource Manager)
  - An abstracted scheduler/executor layer that receives and consumes resource offers in order to perform tasks or run services, atop a distributed file system (RHS by default)
  - Fault-tolerant replicated master using ZooKeeper
  - Scalability to 10,000s of nodes
  - Isolation between tasks with Linux Containers
  - Multi-resource scheduling (memory and CPU aware)
  - Java, Python and C++ APIs
  - Primarily written in C++
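
The resource-offer idea on slide 15 can be sketched as follows: a resource manager advertises free CPU and memory per node, and a framework-side scheduler decides which offers to consume. This is a toy model in plain Python, not the Mesos API; `Offer`, `Task` and `accept_offers` are hypothetical names, and the first-fit placement policy is an assumption chosen for brevity.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    """Free resources advertised for one node (hypothetical structure)."""
    node: str
    cpus: float
    mem_mb: int


@dataclass
class Task:
    """A unit of work with CPU and memory requirements (hypothetical structure)."""
    name: str
    cpus: float
    mem_mb: int


def accept_offers(offers, pending):
    """Place each pending task on the first offer with enough CPU and memory left."""
    remaining = {o.node: [o.cpus, o.mem_mb] for o in offers}
    launched, unplaced = [], []
    for task in pending:
        for node, free in remaining.items():
            # Multi-resource check: both dimensions must fit.
            if task.cpus <= free[0] and task.mem_mb <= free[1]:
                free[0] -= task.cpus
                free[1] -= task.mem_mb
                launched.append((task.name, node))
                break
        else:
            # No offer could host the task; it waits for a later round of offers.
            unplaced.append(task.name)
    return launched, unplaced


offers = [Offer("node-a", 2.0, 4096), Offer("node-b", 4.0, 8192)]
tasks = [Task("etl", 1.5, 2048), Task("train", 3.0, 6144), Task("serve", 1.0, 4096)]
launched, unplaced = accept_offers(offers, tasks)
```

Here `serve` stays unplaced even though `node-b` still has a free CPU, because too little memory is left; that is the point of scheduling on memory and CPU together rather than on a single slot count.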

- 16. Spark - spark.incubator.apache.org (Unified Framework)
  - Unified framework for large scale data processing
  - Fast and expressive framework interoperable with Apache Hadoop
  - Key idea: RDDs, “resilient distributed datasets” that can automatically be rebuilt on failure
    - Keep large working sets in memory
    - Fault tolerance mechanism based on “lineage”
  - Unifies batch, streaming, interactive computational models
  - In-memory cluster computing framework for applications that reuse working sets of data
    - Iterative algorithms: machine learning, graph processing, optimization
    - Interactive data mining: order of magnitude faster than disk-based tools
  - Powerful APIs in Scala, Python, Java
  - Interactive shell
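
The lineage mechanism on slide 16 can be illustrated without Spark itself. The sketch below is a toy stand-in for an RDD in plain Python: each dataset records its parent and the transformation that produced it, keeps its result in memory, and recomputes from lineage if that in-memory copy is lost. The class `LineageDataset` and its method `lose_partition` are hypothetical names for illustration, not Spark APIs.

```python
class LineageDataset:
    """Toy RDD-like dataset that remembers how it was derived, not just its data."""

    def __init__(self, source=None, parent=None, fn=None):
        self._source = source      # raw rows, only set on a root dataset
        self._parent = parent      # upstream dataset, the lineage link
        self._fn = fn              # transformation applied to the parent's rows
        self._cache = None         # in-memory working set
        self.computations = 0      # how many times this dataset was (re)built

    def map(self, fn):
        return LineageDataset(parent=self, fn=lambda rows: [fn(r) for r in rows])

    def filter(self, pred):
        return LineageDataset(parent=self, fn=lambda rows: [r for r in rows if pred(r)])

    def collect(self):
        if self._cache is None:
            # Rebuild from lineage: read the source or re-apply fn to the parent.
            rows = self._source if self._parent is None else self._fn(self._parent.collect())
            self._cache = list(rows)
            self.computations += 1
        return self._cache

    def lose_partition(self):
        """Simulate losing the in-memory copy, for example on a failed node."""
        self._cache = None


base = LineageDataset(source=[1, 2, 3, 4])
squares = base.map(lambda x: x * x)
evens = squares.filter(lambda x: x % 2 == 0)

first = evens.collect()
evens.lose_partition()
second = evens.collect()   # rebuilt from squares, which is still cached
```

Only the lost dataset is recomputed; its still-cached parent is reused, which is why lineage can be a cheaper recovery path than replicating every intermediate result.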

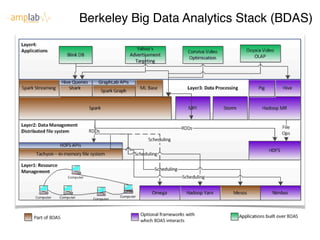

- 17. Berkeley Big Data Analytics Stack (BDAS)