Building NLP solutions using Python

Charlotte Bots and AI group meetup presentation for September 2018 on Building Natural Language Processing solutions

Recommended

Building NLP solutions for Davidson ML Group

botsplash.com: This document provides an overview of natural language processing (NLP) and discusses various NLP applications and techniques. It covers the scope of NLP including natural language understanding, generation, and speech recognition/synthesis. Example applications mentioned include chatbots, sentiment analysis, text classification, summarization, and more. Popular Python packages for NLP like NLTK, spaCy, and Gensim are also highlighted. Techniques like word embeddings, neural networks, and deep learning approaches to NLP are briefly outlined.

Feature Engineering for NLP

Bill Liu: Monthly AI Tech Talks in Toronto, 2019-08-28

https://www.meetup.com/aittg-toronto

The talk will cover the end-to-end details including contextual and linguistic feature extraction, vectorization, n-grams, topic modeling, named entity resolution which are based on concepts from mathematics, information retrieval and natural language processing. We will also be diving into more advanced feature engineering strategies such as word2vec, GloVe and fastText that leverage deep learning models.

In addition, attendees will learn how to combine NLP features with numeric and categorical features and analyze the feature importance from the resulting models.

The following libraries will be used to demonstrate the aforementioned feature engineering techniques: spaCy, Gensim, fastText and Keras in Python.

https://www.meetup.com/aittg-toronto/events/261940480/

Natural language processing: feature extraction

Gabriel Hamilton: This document discusses natural language processing (NLP) and feature extraction. It explains that NLP can be used for applications like search, translation, and question answering. The document then discusses extracting features from text like paragraphs, sentences, words, parts of speech, entities, sentiment, topics, and assertions. Specific features discussed in more detail include frequency, relationships between words, language features, supervised machine learning, classifiers, encoding words, word vectors, and parse trees. Tools mentioned for NLP include Google Cloud NLP, spaCy, OpenNLP, and Stanford CoreNLP.

TechTalk #13 Grokking: Marrying Elasticsearch with NLP to solve real-world se...

Grokking VN: This document discusses marrying natural language processing (NLP) techniques with Elasticsearch to solve real-world search problems. It outlines the key ingredients of gathering and extracting content from data sources, preprocessing text, and modeling terms, phrases and entities. It then describes how Elasticsearch can be used for basic analysis, filtering, recommendations and deduplication. Specific NLP techniques like key phrase extraction, named entity recognition and semantic hashing are proposed to improve search quality beyond bag-of-words approaches. The document concludes with a summary of considering analysis, queries, indexing versus search tradeoffs, and paying attention to the data input step.

Natural Language Search in Solr

Tommaso Teofili: This document discusses using natural language processing (NLP) techniques to enable natural language search in Apache Solr. It describes integrating Apache UIMA with Solr to allow NLP algorithms to analyze documents and queries. Custom Lucene analyzers and a QParserPlugin are used to index enriched fields and extract concepts from queries. The approach aims to improve search recall and precision by understanding language.

Webinar: OpenNLP and Solr for Superior Relevance

Lucidworks: Lucidworks Senior Software Engineer and Solr Committer Steve Rowe explains how to increase relevance using Solr with Apache OpenNLP.

The power of community: training a Transformer Language Model on a shoestring

Sujit Pal: I recently participated in a community event to train an ALBERT language model for the Bengali language. The event was organized by Neuropark, Hugging Face, and Yandex Research. The training was done collaboratively in a distributed manner using free GPU resources provided by Colab and Kaggle. Volunteers were recruited on Twitter and project coordination happened on Discord. At its peak, there were approximately 50 volunteers from all over the world simultaneously engaged in training the model. The distributed training was done on the Hivemind platform from Yandex Research, and the software to train the model in a data-parallel manner was developed by Hugging Face. In this talk I provide my perspective of the project as a somewhat curious participant. I will describe the Hivemind platform, the training regimen, and the evaluation of the language model on downstream tasks. I will also cover some challenges we encountered that were peculiar to the Bengali language (and Indic languages in general).

Natural Language Processing with Graph Databases and Neo4j

William Lyon: Originally presented at DataDay Texas in Austin, this presentation shows how a graph database such as Neo4j can be used for common natural language processing tasks, such as building a word adjacency graph, mining word associations, summarization and keyword extraction, and content recommendation.

Build Mandarin AI Conversational Agent with Rasa

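Hao-Yuan Chen: Rasa is an open-source machine learning framework that gives Python developers a convenient way to build intelligent conversational agents based on text and voice interaction. In Chinese-language scenarios, however, Rasa's built-in components often fail to deliver satisfactory quality.

This talk starts from the Rasa "restaurant search assistant" example and, using ckiptagger and custom components, shows how the assistant learns Traditional Chinese.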

An Introduction to NLP4L - Natural Language Processing Tool for Apache Lucene...

Lucidworks: The document describes the NLP4L framework, which aims to improve search experiences for Lucene-based search systems. It provides reference implementations of plugins and corpora that use NLP/ML technologies to generate models, dictionaries, and indexes. It also includes an interface for users to examine output dictionaries since NLP/ML results may not be perfect. The framework is pluggable and processes data sources through various NLP tasks to generate tagged corpora, dictionaries, and document vectors to enhance search features like suggestions, translations, entity extraction, and ranking.

The State of #NLProc

Vsevolod Dyomkin: A brief overview of the current state of Natural Language Processing: main tasks, approaches, models, data sets.

Learning to Rank Presentation (v2) at LexisNexis Search Guild

Sujit Pal: An introduction to Learning to Rank, with case studies using RankLib with and without plugins provided by Solr and Elasticsearch. RankLib is a library of learning to rank algorithms, which includes some popular LTR algorithms such as LambdaMART, RankBoost, RankNet, etc.

Nikko Ström at AI Frontiers: Deep Learning in Alexa

AI Frontiers: Alexa is the service that understands spoken language in Amazon Echo and other voice-enabled devices. Alexa relies heavily on machine learning and deep neural networks for speech recognition, text-to-speech, language understanding, skill selection, and more. In this talk Nikko presents an overview of deep learning in Alexa and gives a few illustrative examples.

Introduction to Natural Language Processing (NLP)

WingChan46: This document introduces natural language processing (NLP) and describes how it works. NLP involves using AI techniques like machine learning to understand and generate human language. It converts unstructured text into structured knowledge. Key NLP tasks include entity recognition, topic analysis, sentiment analysis, and classification. Common applications are spellcheckers, recommendation systems, voice assistants, search engines, and language translation. An example project called Switch uses NLP techniques on Twitter data to build a job search engine. It extracts entities, classifies tweets, and provides a website for users to search relevant job postings.

Hacking Lucene and Solr for Fun and Profit

lucenerevolution: This document summarizes Grant Ingersoll's presentation on hacking Lucene and Solr for fun and profit. It discusses using Lucene and Solr for tasks beyond classic text search like data analysis, spatial search, and question answering. It provides examples of using Lucene for classification, recommendations, and indexing non-spatial data like time ranges spatially. Resources for further information are also listed.

Webinar: Simpler Semantic Search with Solr

Lucidworks: Hear from Lucidworks Senior Solutions Consultant Ted Sullivan about how you can leverage Apache Solr and Lucidworks Fusion to improve semantic awareness of your search applications.

An Introduction to Natural Language Processing

Tyrone Systems: Learn how Natural Language Processing in AI can be used and how it applies to you in the real world.

You can learn about NLP concepts, pre-processing steps, vectorization methods, and generative and unsupervised methods. All the resources from this Natural Language Processing webinar are available to help you grow your knowledge and skills.

Sentiment Analysis Using Solr

Pradeep Pujari: Solr is a popular, widely used open source information retrieval (IR) engine. It can be used as a simple sentiment analysis and sentiment retrieval tool. Its multi-language analyzers, together with the UIMA (Unstructured Information Management Architecture) framework, can be extended for sentiment extraction. Each sentence passes through a series of pluggable annotators. An entity and its associated polarity are detected for each sentence. The polarity of each sentence is stored in the Solr index. Persistent model files can be created from training data and accessed at run time.

Semantic & Multilingual Strategies in Lucene/Solr: Presented by Trey Grainger...

Lucidworks: This document outlines Trey Grainger's presentation on semantic and multilingual strategies in Lucene/Solr. It discusses text analysis, language-specific analysis chains, and multilingual search strategies like having separate fields or indexes per language or putting all languages in one field. It also covers automatic language identification and semantic search, and concludes with a discussion of one field to handle all languages.

"Shrinking the Haystack" using Solr and OpenNLP

lucenerevolution: Presented by Wes Caldwell, Chief Architect, ISS, Inc.

The customers in the Intelligence Community and Department of Defense that ISS services have a big data challenge. The sheer volume of data being produced and ultimately consumed by large enterprise systems has grown exponentially in a short amount of time. Providing analysts the ability to interpret meaning, and act on time-critical information is a top priority for ISS. In this session, we will explore our journey into building a search and discovery system for our customers that combines Solr, OpenNLP, and other open source technologies to enable analysts to "Shrink the Haystack" into actionable information.

NLP from scratch

Bryan Gummibearehausen: The document presents a neural network architecture for various natural language processing (NLP) tasks such as part-of-speech tagging, chunking, named entity recognition, and semantic role labeling. It shows results comparable to state-of-the-art using word embeddings learned from a large unlabeled corpus, and improved results from joint training of the tasks. The network transforms words into feature vectors, extracts higher-level features through neural layers, and is trained via backpropagation. Benchmark results demonstrate performance on par with traditional task-specific systems without heavy feature engineering.

Vectorization - Georgia Tech - CSE6242 - March 2015

Josh Patterson: This document discusses vectorization, which is the process of converting raw data like text into numerical feature vectors that can be fed into machine learning algorithms. It covers the vector space model for text vectorization where each unique word is mapped to an index in a vector and the value is the word count. Common text vectorization strategies like bag-of-words, TF-IDF, and kernel hashing are explained. General vectorization techniques for different attribute types like nominal, ordinal, interval and ratio are also overviewed along with feature engineering methods and the Canova tool.

Building a Neural Machine Translation System From Scratch

Natasha Latysheva: Human languages are complex, diverse and riddled with exceptions – translating between different languages is therefore a highly challenging technical problem. Deep learning approaches have proved powerful in modelling the intricacies of language, and have surpassed all statistics-based methods for automated translation. This session begins with an introduction to the problem of machine translation and discusses the two dominant neural architectures for solving it – recurrent neural networks and transformers. A practical overview of the workflow involved in training, optimising and adapting a competitive neural machine translation system is provided. Attendees will gain an understanding of the internal workings and capabilities of state-of-the-art systems for automatic translation, as well as an appreciation of the key challenges and open problems in the field.

Searching with vectors

Simon Hughes: Talk by Simon Hughes at the Haystack search conference in 2019, discussing how to implement semantic search within an inverted index using vectors.

Sequence Modelling with Deep Learning

Natasha Latysheva: Much data is sequential – think speech, text, DNA, stock prices, financial transactions and customer action histories. Modern methods for modelling sequence data are often deep learning-based, composed of either recurrent neural networks (RNNs) or attention-based Transformers. A tremendous amount of research progress has recently been made in sequence modelling, particularly in the application to NLP problems. However, the inner workings of these sequence models can be difficult to dissect and intuitively understand.

This presentation/tutorial will start from the basics and gradually build upon concepts in order to impart an understanding of the inner mechanics of sequence models – why do we need specific architectures for sequences at all, when you could use standard feed-forward networks? How do RNNs actually handle sequential information, and why do LSTM units help longer-term remembering of information? How can Transformers do such a good job at modelling sequences without any recurrence or convolutions?

In the practical portion of this tutorial, attendees will learn how to build their own LSTM-based language model in Keras. A few other use cases of deep learning-based sequence modelling will be discussed – including sentiment analysis (prediction of the emotional valence of a piece of text) and machine translation (automatic translation between different languages).

The goals of this presentation are to provide an overview of popular sequence-based problems, impart an intuition for how the most commonly-used sequence models work under the hood, and show that quite similar architectures are used to solve sequence-based problems across many domains.

CoLing 2016

Matīss: The document summarizes the 26th International Conference on Computational Linguistics (COLING) held in Osaka, Japan in December 2016. Over 1100 presenters attended, with 1039 papers submitted and a 32% acceptance rate. Key areas included neural networks, machine translation, dialog systems, and natural language processing applications. Plenary speakers addressed topics such as universal dependencies in parsing and grounded semantics for hybrid machine translation. The conference featured presentations and posters on recent research advances, including character-level named entity recognition, interactive attention for neural machine translation, and improving attention modeling for machine translation.

MongoDB & Machine Learning

Tom Maiaroto: Update: Social Harvest is going open source; see http://www.socialharvest.io for more information.

My MongoSV 2011 talk about implementing machine learning and other algorithms in MongoDB, with a small real-world example at the end showing what Social Harvest is doing with MongoDB. For more updates about my research, see my blog at www.shift8creative.com

Enriching Solr with Deep Learning for a Question Answering System - Sanket Sh...

Lucidworks: The document discusses research into using deep learning to improve question answering systems. It describes using Solr to retrieve documents and then using machine learning models to rerank the results. The research compared various supervised and unsupervised models for question similarity and answer selection tasks. For question similarity, ensemble models using TF-IDF and sentence embeddings performed best. For answer selection, deep learning models outperformed traditional models when sufficient training data was available.

Taming Text

Grant Ingersoll: Presentation from the March 18th, 2013 Triangle Java User Group on Taming Text. Presentation covers search, question answering, clustering, classification, named entity recognition, etc. See http://www.manning.com/ingersoll for more.

Introduction to Text Mining

Minha Hwang: The class outline covers introduction to unstructured data analysis, word-level analysis using vector space model and TF-IDF, beyond word-level analysis using natural language processing, and a text mining demonstration in R mining Twitter data. The document provides background on text mining, defines what text mining is and its tasks. It discusses features of text data and methods for acquiring texts. It also covers word-level analysis methods like vector space model and TF-IDF, and applications. It discusses limitations of word-level analysis and how natural language processing can help. Finally, it demonstrates Twitter mining in R.

Similar to Building NLP solutions using Python (20)

Machine Learning & Apache Mahout

Machine Learning & Apache MahoutDomingo Suarez Torres Machine Learning es una rama de la inteligencia artificial, que nos permite utilizar algoritmos que pueden operar sobre datos para determinar comportamiento, patrones, preferencias, etc.

Apache Mahout es una librería de código abierto que implementa una diversidad de algoritmos de Machine Learning, que bien pueden ser usados para construir un motor de recomendaciones para dirigir compras.

How Oracle Uses CrowdFlower For Sentiment Analysis

How Oracle Uses CrowdFlower For Sentiment AnalysisCrowdFlower Learn how Oracle uses CrowdFlower to train and perfect Machine Learning algorithms to build sentiment & other models that classify text.

AI presentation and introduction - Retrieval Augmented Generation RAG 101

AI presentation and introduction - Retrieval Augmented Generation RAG 101vincent683379 Brief Introduction to Generative AI and LLM in particular.

Overview of the market, and usages of LLMs.

What's it like to train and build a model.

Retrieval Augmented Generation 101, explained for non savvies, and a perspective of what are the moving parts making it complex

Machine Learning Toolssssssssssssss.pptx

Machine Learning Toolssssssssssssss.pptxsalehaalsaleh602 afjbjsvbhoifkfvzuvijfenjsbphfejavfarhfejpnal kbarfi[naj kbvinaf jk

python_libraries_for_artificial_intelligence.pptx

python_libraries_for_artificial_intelligence.pptxsalehaalsaleh602 this file contain the library in python and support the AI

Natural language processing and search

Natural language processing and searchNathan McMinn An overview of some core concept in natural language processing, some example (experimental for now!) use cases, and a brief survey of some tools I have explored.

Final presentation

Final presentationNitish Upreti This document outlines an approach to query formulation for similarity search using term extraction algorithms. It discusses the challenges of similarity search and constructing queries from documents. The solution involves preprocessing documents, extracting candidate terms, building an index, calculating statistical features, executing term extraction algorithms, and postprocessing outputs. Evaluation on a plagiarism detection dataset found TF-IDF and RIDF performed best among algorithms tested. The code is available on GitHub and further improvements could integrate topic modeling.

Deep learning for NLP

Deep learning for NLPShishir Choudhary In this talk we cover

1. Why NLP and DL

2. Practical Challenges

3. Some Popular Deep Learning models for NLP

Today you can take any webpage in any language and translate it automatically into language you know! You can also cut paste an article or other document into NLP systems and immediately get list of companies and people it talks about, topics that are relevant and the sentiment of the document. When you talk to Google or Amazon assistant, you are using NLP systems. NLP is not perfect but given the advances in last two years and continuing, it is a growing field. Let’s see how it actually works, specifically using Deep learning

About Shishir

Shishir is a Senior Data Scientist at Thomson Reuters working on Deep Learning and NLP to solve real customer pain, even ones they have become used to.

Designing and Implementing Search Solutions

Designing and Implementing Search SolutionsFindwise Presentation at Gothenburg University, the 8th of March 2012 by Svetoslav Marinov, Tobias Berg and Björn Klockljung-Johansson.

Webinar: Fusion 3.1 - What's New

Webinar: Fusion 3.1 - What's NewLucidworks Fusion 3.1 comes with exciting new features that will make your search more personal and better targeted. Join us for a webinar to learn more about Fusion's features, what's new in this release, and what's around the corner for Fusion.

Data science and Hadoop

Data science and HadoopDonald Miner A talk I gave on what Hadoop does for the data scientist. I talk about data exploration, NLP, Classifiers, and recommendation systems, plus some other things. I tried to depict a realistic view of Hadoop here.

Drupal and Apache Stanbol

Drupal and Apache StanbolAlkuvoima This document discusses semantic annotation using custom vocabularies. It introduces Gabriel Dragomir and provides background on semantic web and linked data. It then describes Apache Stanbol, a framework for semantic annotation of documents. Stanbol allows modular processing of documents using configurable workflows and vocabularies. The document outlines Stanbol's architecture and components. It also discusses integrating Stanbol with Drupal for semantic indexing and annotation of content. A demo is proposed to index Drupal data in Stanbol and annotate entities using DBPedia and a custom semantic web vocabulary.

Data Acquisition for Sentiment Analysis

Data Acquisition for Sentiment AnalysisAli BELCAID This document provides an overview of the components and architecture for a project to acquire unstructured data from various sources for sentiment analysis. The main objectives are to streamline data acquisition, create corpora for contextual opinions and sentiments, and detect trends based on reviews and comments. The proposed architecture uses Python, Django, Scrapy, MySQL/MongoDB/Hbase for data storage, and R Project and Hadoop for text mining and massive storage. It describes how crawlers and APIs will be used to gather data from social media and other sources for preprocessing, analysis and output of results.

Dataiku hadoop summit - semi-supervised learning with hadoop for understand...

Dataiku hadoop summit - semi-supervised learning with hadoop for understand...Dataiku This document summarizes a presentation on using semi-supervised learning on Hadoop to understand user behaviors on large websites. It discusses clustering user sessions to identify different user segments, labeling the clusters, then using supervised learning to classify all sessions. Key metrics like satisfaction scores are then computed for each segment to identify opportunities to improve the user experience and business metrics. Smoothing is applied to metrics over time to avoid scaring people with daily fluctuations. The overall goal is to measure and drive user satisfaction across diverse users.

Text Mining

Text MiningBiniam Asnake Text mining is the process of extracting useful information and patterns from large collections of unstructured documents. It involves preprocessing texts, applying techniques like categorization, clustering, and summarization, and presenting or visualizing the results. While text mining has many applications in business, science, and other domains, it also faces challenges related to linguistics, analytics, and integrating domain knowledge. The document outlines the definition, techniques, applications, advantages, and limitations of text mining.

Ad

Building NLP solutions using Python

- 1. Building NLP solutions using Python By Ramu Pulipati, @botsplash

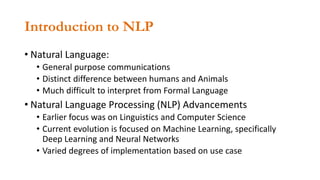

- 2. Introduction to NLP • Natural Language: • General purpose communications • A distinct difference between humans and animals • Much more difficult to interpret than formal language • Natural Language Processing (NLP) Advancements • Earlier focus was on Linguistics and Computer Science • Current evolution is focused on Machine Learning, specifically Deep Learning and Neural Networks • Varied degrees of implementation based on use case

- 3. Scope of Natural Language Processing • Read • Natural Language Understanding (NLU) • Write • Natural Language Generation (NLG) • Speak • Speech Recognition / Synthesis

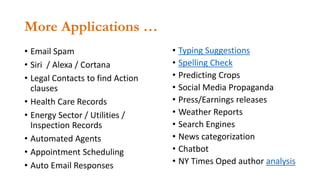

- 5. More Applications … • Email Spam • Siri / Alexa / Cortana • Legal Contracts to find Action clauses • Health Care Records • Energy Sector / Utilities / Inspection Records • Automated Agents • Appointment Scheduling • Auto Email Responses • Typing Suggestions • Spelling Check • Predicting Crops • Social Media Propaganda • Press/Earnings releases • Weather Reports • Search Engines • News categorization • Chatbot • NY Times Op-Ed author analysis

- 6. State of NLP Source: https://www.slideshare.net/healess/sk-t-academy-lecture-note

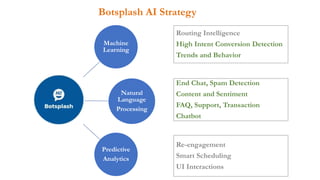

- 7. Botsplash AI Strategy Machine Learning Natural Language Processing Predictive Analytics Routing Intelligence High Intent Conversion Detection Trends and Behavior End Chat, Spam Detection Content and Sentiment FAQ, Support, Transaction Chatbot Re-engagement Smart Scheduling UI Interactions

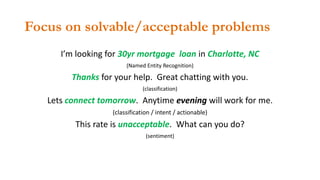

- 8. Focus on solvable/acceptable problems • "I’m looking for 30yr mortgage loan in Charlotte, NC" (Named Entity Recognition) • "Thanks for your help. Great chatting with you." (classification) • "Lets connect tomorrow. Anytime evening will work for me." (classification / intent / actionable) • "This rate is unacceptable. What can you do?" (sentiment)

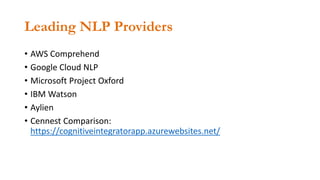

- 9. Leading NLP Providers • AWS Comprehend • Google Cloud NLP • Microsoft Project Oxford • IBM Watson • Aylien • Cennest • Comparison: https://cognitiveintegratorapp.azurewebsites.net/

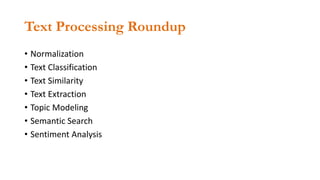

- 10. Text Processing Roundup • Normalization • Text Classification • Text Similarity • Text Extraction • Topic Modeling • Semantic Search • Sentiment Analysis

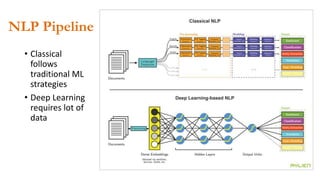

- 11. NLP Pipeline • The classical pipeline follows traditional ML strategies • Deep Learning requires a lot of data

- 12. Getting started • Python Installation. Use version 3 or later. • Data science packages installation. Use “pip install” or Anaconda • Always use “virtualenv” when setting up environments. • Start with Jupyter notebooks and convert them to production code. • Use cloud hosted Jupyter notebooks with access to GPU from FloydHub, Paperspace, Google, Amazon or Azure

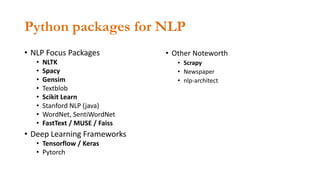

- 13. Python packages for NLP • NLP Focus Packages • NLTK • spaCy • Gensim • TextBlob • scikit-learn • Stanford NLP (Java) • WordNet, SentiWordNet • FastText / MUSE / Faiss • Deep Learning Frameworks • TensorFlow / Keras • PyTorch • Other Noteworthy • Scrapy • Newspaper • nlp-architect

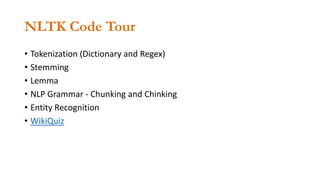

- 14. NLTK Code Tour • Tokenization (Dictionary and Regex) • Stemming • Lemmatization • NLP Grammar - Chunking and Chinking • Entity Recognition • WikiQuiz
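To make the first two items of the tour concrete, here is a minimal sketch of regex tokenization and stemming using only the Python standard library. It is not the NLTK code shown in the talk: the names `TOKEN_PATTERN`, `tokenize`, and `naive_stem` are hypothetical, and the suffix stripper is a deliberately crude stand-in for a real stemmer such as the Porter stemmer that NLTK provides.

```python
import re

# Regex tokenizer: a word (optionally with an apostrophe part, e.g. "I'm")
# or a single punctuation character.
TOKEN_PATTERN = re.compile(r"[A-Za-z0-9]+(?:'[A-Za-z]+)?|[^\sA-Za-z0-9]")


def tokenize(text):
    """Split text into word and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


# Toy suffix list for illustration only; this is NOT the Porter algorithm.
SUFFIXES = ("ing", "ed", "es", "s")


def naive_stem(word):
    """Lowercase the word and strip one common suffix, keeping >= 3 characters."""
    lowered = word.lower()
    for suffix in SUFFIXES:
        if lowered.endswith(suffix) and len(lowered) - len(suffix) >= 3:
            return lowered[: -len(suffix)]
    return lowered


tokens = tokenize("I'm looking for 30yr mortgage loans in Charlotte, NC.")
# Keep only purely alphanumeric tokens before stemming.
stems = [naive_stem(token) for token in tokens if token.isalnum()]
print(tokens)
print(stems)
```

A real stemmer handles many more cases (for example "studies" or "running"), which is one reason to reach for a library once the idea is clear.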

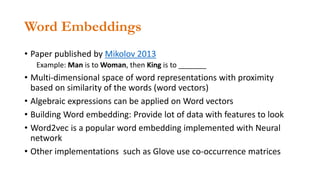

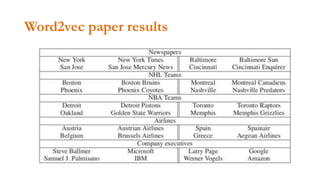

- 15. Word Embeddings • Paper published by Mikolov et al. in 2013 • Example: Man is to Woman as King is to _______ • Multi-dimensional space of word representations with proximity based on similarity of the words (word vectors) • Algebraic expressions can be applied to word vectors • Building word embeddings: Provide a lot of data with features to look for • Word2vec is a popular word embedding implemented with a neural network • Other implementations such as GloVe use co-occurrence matrices
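The "algebraic expressions" point can be illustrated with a small sketch. The vectors below are hand-made three-dimensional toys (an assumption for illustration, not learned embeddings), and `VECTORS`, `cosine`, and `analogy` are hypothetical names. Real word2vec or GloVe vectors are learned from large corpora and typically have hundreds of dimensions.

```python
import math

# Toy vectors with made-up dimensions: (royalty, maleness, femaleness).
VECTORS = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man": [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.2, 0.2],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c, vectors=VECTORS):
    """Answer 'a is to b as c is to ?' via vector(b) - vector(a) + vector(c)."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    # Exclude the three query words, as is common practice for analogy lookups.
    candidates = [word for word in vectors if word not in {a, b, c}]
    return max(candidates, key=lambda word: cosine(target, vectors[word]))


print(analogy("man", "woman", "king"))
```

With these toy values the nearest remaining word to king - man + woman is "queen"; with learned embeddings the same arithmetic is applied to much larger vocabularies.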

- 17. Spacy.io Lightning Tour • Industrial Strength, Fast • POS Tagging and Dependency Parsing • Named Entities, Word embedding and Similarity • Custom Pipelines • Visualization

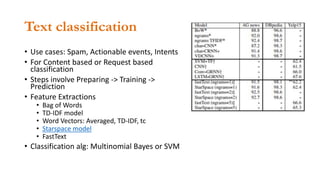

- 18. Text classification • Use cases: Spam, Actionable events, Intents • For Content based or Request based classification • Steps involve Preparing -> Training -> Prediction • Feature Extractions • Bag of Words • TF-IDF model • Word Vectors: Averaged, TF-IDF, etc. • StarSpace model • FastText • Classification algorithms: Multinomial Naive Bayes or SVM

- 19. Steps to classifying your data 1. Identify tags to be applied 2. Manually add tags for the data (possibly in the application) 3. Build a classification algorithm 4. Set up your application to auto-classify tags 5. Evaluate silently and then enable the actions
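As a rough illustration of the first two feature-extraction options, the sketch below builds bag-of-words counts and term frequency-inverse document frequency weights with the standard library. The function names are hypothetical, whitespace splitting stands in for a proper tokenizer, and the unsmoothed formula tf × log(N / df) is only one of several variants; libraries such as scikit-learn use smoothed versions, so their numbers will differ.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Count lowercase whitespace-separated tokens in one document."""
    return Counter(text.lower().split())


def tf_idf(documents):
    """Return one {term: weight} dict per document using tf * log(N / df)."""
    bags = [bag_of_words(doc) for doc in documents]
    n_docs = len(bags)
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter()
    for bag in bags:
        doc_freq.update(bag.keys())
    weighted = []
    for bag in bags:
        total_terms = sum(bag.values())
        weighted.append({
            term: (count / total_terms) * math.log(n_docs / doc_freq[term])
            for term, count in bag.items()
        })
    return weighted


docs = ["free loan offer", "loan rate question", "free free prize"]
for doc, weights in zip(docs, tf_idf(docs)):
    print(doc, "->", {term: round(w, 3) for term, w in weights.items()})
```

Terms that occur in every document receive a weight of zero under this variant, which is the intended effect: they carry no information for telling documents apart.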

- 20. Sentiment Analysis • Use case: Reviews, Chat transcripts, etc. • Supervised techniques are effective for a domain • Packages: • SentiWordNet • StanfordNLP • spaCy Sentiment Analysis (incomplete)

- 21. Summarization • Summarization is hard • Uses a variety of techniques including Text extraction, Feature Matrix, TF-IDF, Collocation, SVD and other methods • Implement LSA to under • Review of implementations: • spaCy • TextRank • PyTeaser • TextTeaser • Sumy

- 22. Code Review / Demo Apps • Jupyter Notebooks • NLTK Code Review • spaCy Code Review • NLTK Grammar Parsing • WikiQuiz • Sequence to Sequence Chatbot • DeepQA demo • Topic Modeling Code Review • Text Similarity – Phrase Matcher API

- 23. Follow up Learning • Websites: • Allen AI - NLP • Fast AI • Maluuba • Coursera • YouTube • Resources • Sanni Oluwatoyin Yetunde Google Slides • Cambridge Data Science Group presentation • nlp.fast.ai

Editor's Notes

- #3: Natural language is ambiguous, whereas formal language is precise. Example of a formal language: a programming language.

- #8: The botsplash framework encompasses and builds on strong concepts and strategy to augment business processes and achieve the best outcome for businesses and their customers. botsplash is a Software-as-a-Service platform built on a B2B2C model. We want the “B” (business) to provide the “C” (consumers of the business) with the best, easy-to-use and reliable technology to reduce costs and increase business transactions, efficiency and customer satisfaction.

- #12: ML Strategies: * Explore data and use visualizations * Create train and test data * Set up the training algorithm and features * Train the model * Test the result * Rinse and repeat until the results are satisfactory
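The "create train and test data" step can be sketched in a few lines. This is a minimal illustration with a hypothetical helper name, `train_test_split`, written against the standard library; it is not the function of the same name in scikit-learn, and the 25% test fraction and fixed seed are arbitrary assumptions.

```python
import random


def train_test_split(examples, test_fraction=0.25, seed=42):
    """Shuffle a copy of the examples and split it into (train, test) lists."""
    shuffled = list(examples)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


examples = list(range(8))
train, test = train_test_split(examples)
print(len(train), "training examples,", len(test), "test examples")
```

Holding the test examples out of training is what makes the later "test the result" step meaningful; evaluating on data the model has already seen overstates its quality.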

- #19: Multinomial Naïve Bayes models word counts with a multinomial distribution and can predict two or more classes. It is a popular Bayes algorithm that assumes each feature is independent of the others given the class. Support vector machines are supervised algorithms used for classification, regression, and anomaly and outlier detection. For classification algorithms, we focus on the following metrics: accuracy, precision, recall and F1 score.
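To show how the pieces in this note fit together, here is a minimal from-scratch sketch of a multinomial Naive Bayes text classifier with add-one (Laplace) smoothing, plus precision, recall and F1 for one class. The class and function names, the three labels, and the tiny training set (adapted from the example chat messages on slide 8) are all assumptions for illustration; a production system would more likely use a library implementation and far more data.

```python
import math
from collections import Counter, defaultdict


class MultinomialNaiveBayes:
    """Word-count Naive Bayes with additive smoothing (alpha)."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocabulary = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, doc_count in self.class_counts.items():
            # Log prior plus the sum of smoothed log likelihoods per word.
            score = math.log(doc_count / total_docs)
            total_words = sum(self.word_counts[label].values())
            denominator = total_words + self.alpha * len(self.vocabulary)
            for word in text.lower().split():
                if word in self.vocabulary:  # unseen words are ignored
                    count = self.word_counts[label][word]
                    score += math.log((count + self.alpha) / denominator)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


def precision_recall_f1(true_labels, predicted_labels, positive):
    """Compute precision, recall and F1 for one class treated as positive."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical labels and a toy training set, far too small for real use.
train_texts = [
    "thanks for your help",
    "great chatting with you",
    "lets connect tomorrow",
    "anytime evening will work for me",
    "this rate is unacceptable",
    "what can you do about this rate",
]
train_labels = ["closing", "closing", "scheduling", "scheduling", "complaint", "complaint"]

model = MultinomialNaiveBayes().fit(train_texts, train_labels)
print(model.predict("can we connect tomorrow evening"))
print(precision_recall_f1(["spam", "spam", "ham", "ham"],
                          ["spam", "ham", "spam", "ham"], positive="spam"))
```

Working in log space avoids numeric underflow when many small probabilities are multiplied, and the smoothing term keeps a single unseen word from driving a class probability to zero.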