Ceph Performance Profiling and Reporting

Presentation from Ceph Day Sunnyvale 2016 by Brent Compton and Kyle Bader from Red Hat. http://ceph.com/cephdays/

Recommended

Ceph on All Flash Storage -- Breaking Performance Barriers

Ceph Community: The document discusses a new software-defined all-flash storage system called InfiniFlash that uses Ceph software to break performance barriers. It provides disaggregated storage, compute, and software for better scaling and lower costs compared to traditional monolithic storage systems. InfiniFlash uses flash drive cards in a 3U JBOD configuration and can deliver up to 2 million IOPS, less than 1ms latency, and 15GB/s throughput while consuming only 400W of power. When used with Ceph software, it provides a highly scalable block and object storage solution for private and public cloud environments.

AF Ceph: Ceph Performance Analysis and Improvement on Flash

Ceph Community: Presentation from Ceph Day Sunnyvale 2016 by Byung-Su Park from SK Telecom. http://ceph.com/cephdays/

Ceph Day Melbourne - Ceph on All-Flash Storage - Breaking Performance Barriers

Ceph Community: The document discusses a presentation about Ceph on all-flash storage using InfiniFlash systems to break performance barriers. It describes how Ceph has been optimized for flash storage and how InfiniFlash systems provide industry-leading performance of over 1 million IOPS and 6-9GB/s of throughput using SanDisk flash technology. The presentation also covers how InfiniFlash can provide scalable performance and capacity for large-scale enterprise workloads.

Stabilizing Ceph

Ceph Community: Presentation from Ceph Day Sunnyvale 2016 by Yuming Ma and Seth Mason from Cisco. http://ceph.com/cephdays/

Using Recently Published Ceph Reference Architectures to Select Your Ceph Con...

Patrick McGarry: This document discusses using recently published Ceph reference architectures to select a Ceph configuration. It provides an inventory of existing reference architectures from Red Hat and SUSE. It previews highlights from an upcoming Intel and Red Hat Ceph reference architecture paper, including recommended configurations and hardware. It also describes an Intel all-NVMe Ceph benchmark configuration for MySQL workloads. In summary, reference architectures provide guidelines for building optimized Ceph solutions based on specific workloads and use cases.

Ceph Day Shanghai - Recovery Erasure Coding and Cache Tiering

Ceph Community: This document discusses recovery, erasure coding, and cache tiering in Ceph. It provides an overview of the RADOS components including OSDs, monitors, and CRUSH, which calculates data placement across the cluster. It describes how peering and recovery work to maintain data consistency. It also outlines how Ceph implements tiered storage with cache and backing pools, using erasure coding for durability and caching techniques to improve performance.

2016-JAN-28 -- High Performance Production Databases on Ceph

Ceph Community: Presentation from Medallia for the monthly Ceph Tech Talks series (http://ceph.com/ceph-tech-talks/).

Associated video: https://youtu.be/OqlC7S3cUKs

Journey to Stability: Petabyte Ceph Cluster in OpenStack Cloud

Patrick McGarry: Cisco Cloud Services provides an OpenStack platform to Cisco SaaS applications using a worldwide deployment of Ceph clusters storing petabytes of data. The initial Ceph cluster design experienced major stability problems as the cluster grew past 50% capacity. Strategies were implemented to improve stability including client IO throttling, backfill and recovery throttling, upgrading Ceph versions, adding NVMe journals, moving the MON LevelDB to SSDs, rebalancing the cluster, and proactively detecting slow disks. Lessons learned included the importance of DevOps practices, sharing knowledge, rigorous testing, and balancing performance, cost and time.

Ceph Day Melbourne - Walk Through a Software Defined Everything PoC

Ceph Community: This document summarizes a proof of concept for a software defined everything architecture using OpenStack and Midokura software defined networking. The objectives are to abstract compute, network and storage resources and dynamically allocate them as needed. It aims to provide scalability, availability, isolation, metering and automation of infrastructure. The proof of concept uses OpenStack for compute with Ceph for storage, and Midokura for software defined networking to provide logical switching, routing, firewalling and load balancing. It details the architecture, infrastructure, configuration and lessons learned from the implementation.

Ceph Community Talk on High-Performance Solid State Ceph

Ceph Community: The document summarizes a presentation given by representatives from various companies on optimizing Ceph for high-performance solid state drives. It discusses testing a real workload on a Ceph cluster with 50 SSD nodes that achieved over 280,000 read and write IOPS. Areas for further optimization were identified, such as reducing latency spikes and improving single-threaded performance. Various companies then described their contributions to Ceph performance, such as Intel providing hardware for testing and Samsung discussing SSD interface improvements.

Ceph: Low Fail Go Scale

Ceph Community: High performance disk drives for in-storage compute

Toshiba

Ceph Collaboration Day - OpenStack Summit Tokyo 2015

Ceph Day KL - Ceph on All-Flash Storage

Ceph Community: The document discusses Ceph storage performance on all-flash storage systems. It describes how SanDisk optimized Ceph for all-flash environments by tuning the OSD to handle the high performance of flash drives. The optimizations allowed over 200,000 IOPS per OSD using 12 CPU cores. Testing on SanDisk's InfiniFlash storage system showed it achieving over 1.5 million random read IOPS and 200,000 random write IOPS at 64KB block size. Latency was also very low, with 99% of operations under 5ms for reads. The document outlines reference configurations for the InfiniFlash system optimized for small, medium and large workloads.

Accelerating Cassandra Workloads on Ceph with All-Flash PCIE SSDS

Ceph Community: This document summarizes the performance of an all-NVMe Ceph cluster using Intel P3700 NVMe SSDs. Key results include achieving over 1.35 million 4K random read IOPS and 171K 4K random write IOPS with sub-millisecond latency. Partitioning the NVMe drives into multiple OSDs improved performance and CPU utilization compared to a single OSD per drive. The cluster also demonstrated over 5GB/s of sequential bandwidth.

Ceph Day San Jose - Object Storage for Big Data

Ceph Community: This document discusses using object storage for big data. It outlines key stakeholders in big data projects and what they want from object storage solutions. It then discusses using the Ceph object store to provide an elastic data lake that can disaggregate compute resources from storage. This allows analytics to be performed directly on the object store without expensive ETL processes. It also describes testing various analytics use cases and workloads with the Ceph object store.

Ceph Day Beijing - Ceph RDMA Update

Danielle Womboldt: Ceph is evolving its network stack to improve performance. It is moving from AsyncMessenger to using RDMA for better scalability and lower latency. RDMA support is now built into Ceph and provides native RDMA using verbs or RDMA-CM. This allows using InfiniBand or RoCE networks with Ceph. Work continues to fully leverage RDMA for features like zero-copy replication and erasure coding offload.

MySQL Head-to-Head

Patrick McGarry: This document outlines an agenda for a presentation on running MySQL on Ceph storage. It includes a comparison of MySQL on Ceph versus AWS, results from a head-to-head performance lab test between the two platforms, and considerations for hardware architectures and configurations optimized for MySQL workloads on Ceph. The lab tests showed that Ceph could match or exceed AWS on both performance metrics like IOPS/GB and price/performance metrics like storage cost per IOP.

Ceph Day London 2014 - Best Practices for Ceph-powered Implementations of Sto...

Ceph Community: This document discusses Dell's support for CEPH storage solutions and provides an agenda for a CEPH Day event at Dell. Key points include:

- Dell is a certified reseller of Red Hat-Inktank CEPH support, services, and training.

- The agenda covers why Dell supports CEPH, hardware recommendations, best practices shared with CEPH colleagues, and a concept for research data storage that is seeking input.

- Recommended CEPH architectures, components, configurations, and considerations are discussed for planning and implementing a CEPH solution. Dell server hardware options that could be used are also presented.

Ceph Day Melbourne - Scale and performance: Servicing the Fabric and the Work...

Ceph Community: The document discusses scale and performance challenges in providing storage infrastructure for research computing. It describes Monash University's implementation of the Ceph distributed storage system across multiple clusters to provide a "fabric" for researchers' storage needs in a flexible, scalable way. Key points include:

- Ceph provides software-defined storage that is scalable and can integrate with other systems like OpenStack.

- Multiple Ceph clusters have been implemented at Monash of varying sizes and purposes, including dedicated clusters for research data storage.

- The infrastructure provides different "tiers" of storage with varying performance and cost characteristics to meet different research needs.

- Ongoing work involves expanding capacity and upgrading hardware to improve performance

Ceph Day Melbourne - Troubleshooting Ceph

Ceph Community: This document provides troubleshooting guidance for issues with Ceph. It begins by suggesting identifying the problem domain as either performance, hang, crash, or unexpected behavior. For each problem, it recommends tools and techniques for further investigation such as debugging logs, profiling tools, and source code analysis. Debugging steps include establishing baselines, identifying implicated hosts or subsystems, increasing log verbosity, and tracing transactions through logs. The document emphasizes starting at the user end and working back towards Ceph to isolate issues.

Ceph Day San Jose - All-Flash Ceph on NUMA-Balanced Server

Ceph Community: The document discusses optimizing Ceph storage performance on QCT servers using NUMA-balanced hardware and tuning. It provides details on QCT hardware configurations for throughput, capacity and IOPS-optimized Ceph storage. It also describes testing done in QCT labs using a 5-node all-NVMe Ceph cluster that showed significant performance gains from software tuning and using multiple OSD partitions per SSD.

Ceph Day Beijing - Our journey to high performance large scale Ceph cluster a...

Danielle Womboldt: This document discusses optimizing performance in large scale CEPH clusters at Alibaba. It describes two use models for writing data in CEPH and improvements made to recovery performance by implementing partial and asynchronous recovery. It also details fixes made to bugs that caused data loss or inconsistency. Additionally, it proposes offloading transaction queueing from PG workers to improve performance by leveraging asynchronous transaction workers and evaluating this approach through bandwidth testing.

Intel - optimizing ceph performance by leveraging intel® optane™ and 3 d nand...

inwin stack: Kenny Chang (張任伯) (Storage Solution Architect, Intel)

As Solid State Drives (SSDs) become more affordable, more and more cloud providers are trying to provide high performance, highly reliable storage for their customers with SSDs. Ceph is becoming one of the most popular open source scale-out storage solutions in the worldwide market. More and more customers want to use SSDs in Ceph to build high performance storage solutions for their OpenStack clouds.

The disruptive Intel® Optane SSDs, based on 3D XPoint technology, fill the performance gap between DRAM and NAND-based SSDs, while Intel® 3D NAND TLC is reducing the cost gap between SSDs and traditional spindle hard drives and makes all-flash storage possible. In this session, we will:

1) Discuss an OpenStack storage Ceph reference design on the first Intel Optane (3D XPoint) and P4500 TLC NAND based all-flash Ceph cluster, which delivers multi-million IOPS with extremely low latency and increases storage density at a competitive dollar-per-gigabyte cost.

2) Share Ceph BlueStore tunings and optimizations, latency analysis, a TCO model, IOPS/TB, and IOPS/$ based on the reference architecture to demonstrate this high performance, cost effective solution.

CephFS in Jewel: Stable at Last

Ceph Community: Presentation from 2016 Austin OpenStack Summit.

The Ceph upstream community is declaring CephFS stable for the first time in the recent Jewel release, but that declaration comes with caveats: while we have filesystem repair tools and a horizontally scalable POSIX filesystem, we have default-disabled exciting features like horizontally-scalable metadata servers and snapshots. This talk will present exactly what features you can expect to see, what's blocking the inclusion of other features, and what you as a user can expect and can contribute by deploying or testing CephFS.

Ceph Day Beijing - Ceph All-Flash Array Design Based on NUMA Architecture

Danielle Womboldt: This document discusses an all-flash Ceph array design from QCT based on NUMA architecture. It provides an agenda that covers all-flash Ceph and use cases, QCT's all-flash Ceph solution for IOPS, an overview of QCT's lab environment and detailed architecture, and the importance of NUMA. It also includes sections on why all-flash storage is used, different all-flash Ceph use cases, QCT's IOPS-optimized all-flash Ceph solution, benefits of using NVMe storage, QCT's lab test environment, Ceph tuning recommendations, and benefits of using multi-partitioned NVMe SSDs for Ceph OSDs.

Ceph Tech Talk -- Ceph Benchmarking Tool

Ceph Community: Ceph Benchmarking Tool (CBT) is a Python framework for benchmarking Ceph clusters. It has client and monitor personalities for generating load and setting up the cluster. CBT includes benchmarks for RADOS operations, librbd, KRBD on EXT4, KVM with RBD volumes, and COSBench tests against RGW. Test plans are defined in YAML files and results are archived for later analysis using tools like awk, grep, and gnuplot.

Accelerating Ceph with iWARP RDMA over Ethernet - Brien Porter, Haodong Tang

Ceph Community: Cephalocon APAC 2018

March 22-23, 2018 - Beijing, China

Brien Porter, Intel Software Engineer

Haodong Tang, Intel Software Engineer

Walk Through a Software Defined Everything PoC

Ceph Community: This document summarizes a proof of concept for a software defined data center using OpenStack and Midokura MidoNet software defined networking. The POC used 4 controllers, 8 Ceph storage nodes, and 16 compute nodes with Midokura providing logical layer 2-4 networking services. Key lessons learned included planning the underlay network configuration, optimizing ZooKeeper connections, and improving OpenStack deployment processes, which can be complex. Performance testing showed Ceph throughput was higher for reads than writes and SSD journaling improved IOPS. The streamlined workflow provided by the software defined infrastructure could help reduce costs and management complexity for organizations.

Ceph Day Beijing - Optimizing Ceph Performance by Leveraging Intel Optane and...

Danielle Womboldt: Optimizing Ceph performance by leveraging Intel Optane and 3D NAND TLC SSDs. The document discusses using Intel Optane SSDs as journal/metadata drives and Intel 3D NAND SSDs as data drives in Ceph clusters. It provides examples of configurations and analysis of a 2.8 million IOPS Ceph cluster using this approach. Tuning recommendations are also provided to optimize performance.

Ceph Ecosystem Update - Ceph Day Frankfurt (Feb 2014)

Patrick McGarry: Ceph is expanding its community efforts in several areas. It has been accepted as a mentoring organization for Google Summer of Code 2014. It is working to increase academic outreach and collaboration with students. The Ceph wiki and contribution guidelines pages have been updated. Ceph Days user conferences will be held in multiple locations worldwide. Efforts continue to form a Ceph Foundation and work with various open source projects on integration. Global Ceph meetups are community-organized for local users. The next Ceph Developer Summit will be a virtual event in March 2014 to coordinate project work. Community metrics are now available on a public website.

Developing a Ceph Appliance for Secure Environments

Ceph Community: Keeper Technology develops a Ceph appliance called keeperSAFE for secure storage environments. The keeperSAFE appliance provides a preconfigured Linux distribution, automated installation using Ansible, enclosure management tools, a graphical user interface for monitoring and configuration, data collection and analytics, encryption capabilities, and extensive testing. It is designed for environments that require high availability, no single points of failure, easy management, and auditability. The keeperSAFE appliance addresses the challenges of deploying and managing Ceph at scale in restricted, mission critical environments.

Ad

More Related Content

What's hot (20)

Ceph Day Melbourne - Walk Through a Software Defined Everything PoC

Ceph Day Melbourne - Walk Through a Software Defined Everything PoCCeph Community This document summarizes a proof of concept for a software defined everything architecture using OpenStack and Midokura software defined networking. The objectives are to abstract compute, network and storage resources and dynamically allocate them as needed. It aims to provide scalability, availability, isolation, metering and automation of infrastructure. The proof of concept uses OpenStack for compute with Ceph for storage, and Midokura for software defined networking to provide logical switching, routing, firewalling and load balancing. It details the architecture, infrastructure, configuration and lessons learned from the implementation.

Ceph Community Talk on High-Performance Solid Sate Ceph

Ceph Community Talk on High-Performance Solid Sate Ceph Ceph Community The document summarizes a presentation given by representatives from various companies on optimizing Ceph for high-performance solid state drives. It discusses testing a real workload on a Ceph cluster with 50 SSD nodes that achieved over 280,000 read and write IOPS. Areas for further optimization were identified, such as reducing latency spikes and improving single-threaded performance. Various companies then described their contributions to Ceph performance, such as Intel providing hardware for testing and Samsung discussing SSD interface improvements.

Ceph: Low Fail Go Scale

Ceph: Low Fail Go Scale Ceph Community High performance disk drives for in-storage compute

Toshiba

Ceph Collaboration Day - OpenStack Summit Tokyo 2015

Ceph Day KL - Ceph on All-Flash Storage

Ceph Day KL - Ceph on All-Flash Storage Ceph Community The document discusses Ceph storage performance on all-flash storage systems. It describes how SanDisk optimized Ceph for all-flash environments by tuning the OSD to handle the high performance of flash drives. The optimizations allowed over 200,000 IOPS per OSD using 12 CPU cores. Testing on SanDisk's InfiniFlash storage system showed it achieving over 1.5 million random read IOPS and 200,000 random write IOPS at 64KB block size. Latency was also very low, with 99% of operations under 5ms for reads. The document outlines reference configurations for the InfiniFlash system optimized for small, medium and large workloads.

Accelerating Cassandra Workloads on Ceph with All-Flash PCIE SSDS

Accelerating Cassandra Workloads on Ceph with All-Flash PCIE SSDSCeph Community This document summarizes the performance of an all-NVMe Ceph cluster using Intel P3700 NVMe SSDs. Key results include achieving over 1.35 million 4K random read IOPS and 171K 4K random write IOPS with sub-millisecond latency. Partitioning the NVMe drives into multiple OSDs improved performance and CPU utilization compared to a single OSD per drive. The cluster also demonstrated over 5GB/s of sequential bandwidth.

Ceph Day San Jose - Object Storage for Big Data

Ceph Day San Jose - Object Storage for Big Data Ceph Community This document discusses using object storage for big data. It outlines key stakeholders in big data projects and what they want from object storage solutions. It then discusses using the Ceph object store to provide an elastic data lake that can disaggregate compute resources from storage. This allows analytics to be performed directly on the object store without expensive ETL processes. It also describes testing various analytics use cases and workloads with the Ceph object store.

Ceph Day Beijing - Ceph RDMA Update

Ceph Day Beijing - Ceph RDMA UpdateDanielle Womboldt Ceph is evolving its network stack to improve performance. It is moving from AsyncMessenger to using RDMA for better scalability and lower latency. RDMA support is now built into Ceph and provides native RDMA using verbs or RDMA-CM. This allows using InfiniBand or RoCE networks with Ceph. Work continues to fully leverage RDMA for features like zero-copy replication and erasure coding offload.

MySQL Head-to-Head

MySQL Head-to-HeadPatrick McGarry This document outlines an agenda for a presentation on running MySQL on Ceph storage. It includes a comparison of MySQL on Ceph versus AWS, results from a head-to-head performance lab test between the two platforms, and considerations for hardware architectures and configurations optimized for MySQL workloads on Ceph. The lab tests showed that Ceph could match or exceed AWS on both performance metrics like IOPS/GB and price/performance metrics like storage cost per IOP.

Ceph Day London 2014 - Best Practices for Ceph-powered Implementations of Sto...

Ceph Day London 2014 - Best Practices for Ceph-powered Implementations of Sto...Ceph Community This document discusses Dell's support for CEPH storage solutions and provides an agenda for a CEPH Day event at Dell. Key points include:

- Dell is a certified reseller of Red Hat-Inktank CEPH support, services, and training.

- The agenda covers why Dell supports CEPH, hardware recommendations, best practices shared with CEPH colleagues, and a concept for research data storage that is seeking input.

- Recommended CEPH architectures, components, configurations, and considerations are discussed for planning and implementing a CEPH solution. Dell server hardware options that could be used are also presented.

Ceph Day Melbourne - Scale and performance: Servicing the Fabric and the Work...

Ceph Day Melbourne - Scale and performance: Servicing the Fabric and the Work...Ceph Community The document discusses scale and performance challenges in providing storage infrastructure for research computing. It describes Monash University's implementation of the Ceph distributed storage system across multiple clusters to provide a "fabric" for researchers' storage needs in a flexible, scalable way. Key points include:

- Ceph provides software-defined storage that is scalable and can integrate with other systems like OpenStack.

- Multiple Ceph clusters have been implemented at Monash of varying sizes and purposes, including dedicated clusters for research data storage.

- The infrastructure provides different "tiers" of storage with varying performance and cost characteristics to meet different research needs.

- Ongoing work involves expanding capacity and upgrading hardware to improve performance

Ceph Day Melbourne - Troubleshooting Ceph

Ceph Day Melbourne - Troubleshooting Ceph Ceph Community This document provides troubleshooting guidance for issues with Ceph. It begins by suggesting identifying the problem domain as either performance, hang, crash, or unexpected behavior. For each problem, it recommends tools and techniques for further investigation such as debugging logs, profiling tools, and source code analysis. Debugging steps include establishing baselines, identifying implicated hosts or subsystems, increasing log verbosity, and tracing transactions through logs. The document emphasizes starting at the user end and working back towards Ceph to isolate issues.

Ceph Day San Jose - All-Flahs Ceph on NUMA-Balanced Server

Ceph Day San Jose - All-Flahs Ceph on NUMA-Balanced Server Ceph Community The document discusses optimizing Ceph storage performance on QCT servers using NUMA-balanced hardware and tuning. It provides details on QCT hardware configurations for throughput, capacity and IOPS-optimized Ceph storage. It also describes testing done in QCT labs using a 5-node all-NVMe Ceph cluster that showed significant performance gains from software tuning and using multiple OSD partitions per SSD.

Ceph Day Beijing - Our journey to high performance large scale Ceph cluster a...

Ceph Day Beijing - Our journey to high performance large scale Ceph cluster a...Danielle Womboldt This document discusses optimizing performance in large scale CEPH clusters at Alibaba. It describes two use models for writing data in CEPH and improvements made to recovery performance by implementing partial and asynchronous recovery. It also details fixes made to bugs that caused data loss or inconsistency. Additionally, it proposes offloading transaction queueing from PG workers to improve performance by leveraging asynchronous transaction workers and evaluating this approach through bandwidth testing.

Intel - optimizing ceph performance by leveraging intel® optane™ and 3 d nand...

Intel - optimizing ceph performance by leveraging intel® optane™ and 3 d nand...inwin stack Kenny Chang (張任伯) (Storage Solution Architect, Intel)

With the trend that Solid State Drive (SSD) becomes more affordable, more and more cloud providers are trying to provide high performance, highly reliable storage for their customers with SSDs. Ceph is becoming one of most open source scale-out storage solutions in worldwide market. More and more customers have strong demands that using SSD in Ceph to build high performance storage solutions for their Openstack clouds.

The disrupted Intel® Optane SSDs based on 3D Xpoint technology fills the performance gap between DRAM and NAND based SSD while the Intel® 3D NAND TLC is reducing cost gap between SSD and traditional spindle hard drive and makes it possible for all flash storage. In this session, we will

1) Discuss OpenStack storage Ceph reference design on the first Intel Optane (3D Xpoint) and P4500 TLC NAND based all-flash Ceph cluster, it delivers multi-million IOPS with extremely low latency as well as increase storage density with competitive dollar-per-gigabyte costs

2) Share Ceph bluestore tunings and optimizations, latency analysis, TCO model, IOPS/TB, IOPS/$ based on the reference architecture to demonstrate this high performance, cost effective solution.

CephFS in Jewel: Stable at Last

CephFS in Jewel: Stable at LastCeph Community Presentation from 2016 Austin OpenStack Summit.

The Ceph upstream community is declaring CephFS stable for the first time in the recent Jewel release, but that declaration comes with caveats: while we have filesystem repair tools and a horizontally scalable POSIX filesystem, we have default-disabled exciting features like horizontally-scalable metadata servers and snapshots. This talk will present exactly what features you can expect to see, what's blocking the inclusion of other features, and what you as a user can expect and can contribute by deploying or testing CephFS.

Ceph Day Beijing - Ceph All-Flash Array Design Based on NUMA Architecture

Ceph Performance Profiling and Reporting

- 1. CEPH PERFORMANCE: Profiling and Reporting. Brent Compton, Director Storage Solution Architectures; Kyle Bader, Sr Storage Architect; Veda Shankar, Sr Storage Architect

- 2. FAQ FROM THE COMMUNITY. Questions that continually surface: How well can Ceph perform? Which of my workloads can it handle? How will Ceph perform on my servers?

- 3. HOW WELL CAN CEPH PERFORM? Finding the right server and network config for the job. [Chart: perceived range of Ceph perf vs. actual (measured) range of Ceph perf]

- 4. INVITATION TO BE PART OF THE ANSWER. Ceph performance leaderboard (ceph-brag) coming to ceph.com. https://github.com/ceph/ceph-brag (email [email protected] for access)

- 5. A LEADERBOARD FOR CEPH PERF RESULTS. Posted throughput results.

- 6. LEADERBOARD ATTRIBUTION AND DETAILS. Looking for Beta submitters prior to general availability on Ceph.com.

- 7. EMERGING LEADERBOARD FOR IOPS. Still under construction.

- 8. MAPPING CONFIGS TO WORKLOAD IO CATEGORIES. Cluster sizes: OpenStack Starter (64 TB), S (256 TB+), M (1 PB+), L (2 PB+). Node types: MySQL Perf Node (IOPS optimized), Digital Media Perf Node (throughput optimized), Archive Node (cost-capacity optimized).

- 9. DIGITAL MEDIA PERF NODES. Range of MBps measured with Ceph on different server configs. Some pertinent measures: MBps; $/MBps; MBps/provisioned-TB; Watts/MBps; MTTR (self-heal from server failure). [Chart: 4M Read MBps per Drive and 4M Write MBps per Drive, HDD sample vs. SSD sample, axis 0 to 500]
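The derived measures on this slide are simple ratios of a measured throughput against cost, provisioned capacity, and power. The following is a minimal sketch of that arithmetic, not the presenters' tooling: the `ThroughputResult` type, its field names, and every number in `sample` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class ThroughputResult:
    """One benchmark run. All values used below are hypothetical."""
    mbps: float            # measured aggregate throughput, MB/s
    cost_usd: float        # hardware cost of the cluster, USD
    provisioned_tb: float  # capacity provisioned to clients, TB
    watts: float           # power drawn under load, W


def throughput_measures(r: ThroughputResult) -> dict:
    """Derive the per-unit measures named on the slide from one run."""
    if r.mbps <= 0 or r.provisioned_tb <= 0:
        raise ValueError("mbps and provisioned_tb must be positive")
    return {
        "usd_per_mbps": r.cost_usd / r.mbps,
        "mbps_per_provisioned_tb": r.mbps / r.provisioned_tb,
        "watts_per_mbps": r.watts / r.mbps,
    }


# Hypothetical cluster: 2000 MB/s, $100,000, 250 TB provisioned, 4000 W.
sample = ThroughputResult(mbps=2000.0, cost_usd=100000.0,
                          provisioned_tb=250.0, watts=4000.0)
measures = throughput_measures(sample)
for name, value in measures.items():
    print(f"{name}: {value:.2f}")
```

Computing the same ratios for read and write runs separately keeps results comparable across configs, since the deck reports the two directions as separate series.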

- 10. THROUGHPUT PER OSD DEVICE (READ). Sequential Read Throughput vs IO Block Size. [Chart: MB/sec per OSD device (axis 0 to 100) vs. IO block size (64, 512, 1024, 4096). Series: D51PH-1ULH - 12xOSD+3xSSD, 2x10G (3xRep); D51PH-1ULH - 12xOSD+0xSSD, 2x10G (EC3:2); T21P-4U/Dual - 35xOSD+2xPCIe, 1x40G (3xRep); T21P-4U/Dual - 35xOSD+2xPCIe, 1x40G (EC2:2); T21P-4U/Dual - 35xOSD+0xSSD, 10G+10G (EC2:2)]

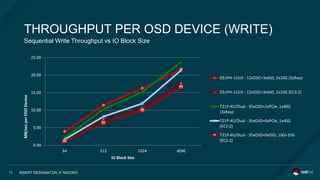

- 11. THROUGHPUT PER OSD DEVICE (WRITE). Sequential Write Throughput vs IO Block Size. [Chart: MB/sec per OSD device (axis 0 to 25) vs. IO block size (64, 512, 1024, 4096). Series: D51PH-1ULH - 12xOSD+3xSSD, 2x10G (3xRep); D51PH-1ULH - 12xOSD+3xSSD, 2x10G (EC3:2); T21P-4U/Dual - 35xOSD+2xPCIe, 1x40G (3xRep); T21P-4U/Dual - 35xOSD+0xPCIe, 1x40G (EC2:2); T21P-4U/Dual - 35xOSD+0xSSD, 10G+10G (EC2:2)]

- 12. SERVER SCALABILITY. Sequential Throughput vs Different Server Sizes. [Chart: MBytes/sec/disk (axis 0 to 100) for 12 Disks / OSDs (D51PH) and 35 Disks / OSDs (T21P). Series: Rados-4M-seq-read/Disk; Rados-4M-seq-write/Disk]

- 13. DATA PROTECTION METHODS. Sequential Throughput vs Different Protection Methods (Replication v. Erasure-coding). [Chart: MBytes/sec/disk (axis 0 to 100) for Rados-4M-Seq-Reads/disk and Rados-4M-Seq-Writes/disk. Series: D51PH-1ULH - 12xOSD+0xSSD, 2x10G (EC3:2); D51PH-1ULH - 12xOSD+3xSSD, 2x10G (EC3:2); D51PH-1ULH - 12xOSD+3xSSD, 2x10G (3xRep)]

- 14. JOURNALING. Sequential IO Latency vs Different Journal Approaches. [Chart: latency in msec (axis 0 to 4000) for Rados-4M-Seq-Reads Latency and Rados-4M-Seq-Writes Latency. Series: T21P-4U/Dual - 35xOSD+2xPCIe, 1x40G (3xRep); T21P-4U/Dual - 35xOSD+0xPCIe, 1x40G (3xRep)]

- 15. NETWORK. Sequential Throughput vs Different Network Bandwidth. [Chart: MBytes/sec/disk (axis 0 to 100) for Rados-4M-Seq-Reads/disk and Rados-4M-Seq-Writes/disk. Series: T21P-4U/Dual - 35xOSD+2xPCIe, 1x40G (3xRep); T21P-4U/Dual - 35xOSD+2xPCIe, 10G+10G (3xRep)]

- 16. MEDIA TYPE. Sequential Throughput v. Different OSD Media Types (All-flash v. Magnetic).

- 17. PRICE/PERFORMANCE. Different Configs vs $/MBps (lowest = best). [Chart: $/MBps for D51PH-1ULH - 12xOSD+3xSSD, 2x10G (3xRep) and T21P-4U/Dual - 35xOSD+2xPCIe, 1x40G (3xRep). Series: Price/Perf (w); Price/Perf (r)]

- 18. PRICE/PERFORMANCE. Different Configs vs $/MBps (lowest = best). [Chart: $/MBps for D51PH-1ULH - 12xOSD+3xSSD, 2x10G (3xRep) and T21P-4U/Dual - 35xOSD+2xPCIe, 1x40G (3xRep). Series: Price/Perf (w); Price/Perf (r)]

- 19. MYSQL PERF NODES. Range of IOPS measured with Ceph on different server configs. Some pertinent measures: MySQL Sysbench requests/sec; IOPS (4K, 16K random); $/IOP; IOPS/provisioned-GB; Watts/IOP. [Chart: 4K Read IOPS per Drive and 4K Write IOPS per Drive, HDD sample vs. SSD sample, axis 0 to 60000]

- 20. SYSBENCH REQUEST/SEC. AWS provisioned-IOPS v. Ceph all-flash configs. [Chart: requests/sec (axis 0 to 80000) for P-IOPS m4.4XL; Ceph cluster cl: 16 vcpu/64MB (1 instance, 14% capacity); Ceph cluster cl: 16 vcpu/64MB (10 instances, 87% capacity). Series: Sysbench Read Req/sec; Sysbench Write Req/sec; Sysbench 70/30 R/W Req/sec]

- 21. GETTING DETERMINISTIC IOPS. AWS use of IOPS/GB throttles. [Chart: IOPS/GB (axis 0 to 35) for P-IOPS m4.4XL; P-IOPS r3.2XL; GP-SSD r3.2XL. Series: MySQL IOPS/GB, Sysbench Reads; MySQL IOPS/GB, Sysbench Writes]

- 22. MYSQL INSTANCES AND CLUSTER CAPACITY. Ceph IOPS/GB varying with instance quantity and cluster capacity utilization. [Chart: axis 0 to 100 for P-IOPS m4.4XL; Ceph cluster cl: 16 vcpu/64MB (1 instance, 14% capacity); Ceph cluster cl: 16 vcpu/64MB (10 instances, 87% capacity). Data labels shown: 26, 87, 19]

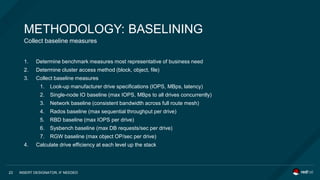

- 23. METHODOLOGY: BASELINING. Collect baseline measures.
  1. Determine benchmark measures most representative of business need
  2. Determine cluster access method (block, object, file)
  3. Collect baseline measures
     1. Look up manufacturer drive specifications (IOPS, MBps, latency)
     2. Single-node IO baseline (max IOPS, MBps to all drives concurrently)
     3. Network baseline (consistent bandwidth across full route mesh)
     4. Rados baseline (max sequential throughput per drive)
     5. RBD baseline (max IOPS per drive)
     6. Sysbench baseline (max DB requests/sec per drive)
     7. RGW baseline (max object OP/sec per drive)
  4. Calculate drive efficiency at each level up the stack
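The last baselining step, drive efficiency at each level up the stack, can be read as each layer's per-drive result divided by the manufacturer's drive specification. The sketch below assumes that reading; the slide does not define the formula. The function name, the layer labels, and all numbers are hypothetical.

```python
def drive_efficiency(per_drive_by_layer: dict, drive_spec: float) -> dict:
    """Return each layer's per-drive result as a fraction of the drive spec.

    per_drive_by_layer maps a layer label to its measured per-drive value,
    in the same unit as drive_spec (here MB/s).
    """
    if drive_spec <= 0:
        raise ValueError("drive_spec must be positive")
    return {layer: value / drive_spec
            for layer, value in per_drive_by_layer.items()}


# Hypothetical sequential-read results in MB/s per drive, against a
# hypothetical manufacturer specification of 200 MB/s.
baselines = {
    "single_node_io": 160.0,
    "rados": 80.0,
}
efficiency = drive_efficiency(baselines, drive_spec=200.0)
for layer, fraction in efficiency.items():
    print(f"{layer}: {fraction:.0%} of drive spec")
```

A sharp drop in efficiency between two adjacent layers points at where to look first, for example the network if the single-node figure is high but the Rados figure is low.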

- 24. METHODOLOGY: WATERMARKS. Towards deterministic performance.
  1. Identify IOPS/GB at 35% and 70% cluster utilization (with corresponding MySQL instances)
  2. Identify MBps/TB at 35% and 70% cluster utilization
  3. Determine target IOPS/GB or MBps at target cluster utilization
  4. (experimental) Set block device IO throttles to cap consumption by any single client
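One way to turn the two watermarks into a per-client throttle is to interpolate IOPS/GB at the target utilization and multiply by the volume size. This is a sketch under stated assumptions: linear interpolation between the 35% and 70% points is an assumption, not something the slide specifies, and the function names and all numbers are hypothetical. How the resulting cap is applied depends on the throttle mechanism the deployment uses.

```python
def interpolate_watermark(util: float, low: tuple, high: tuple) -> float:
    """Linearly interpolate IOPS/GB between two (utilization, iops_per_gb) points.

    Linear behaviour between the watermarks is an assumption made for this sketch.
    """
    (u0, v0), (u1, v1) = low, high
    if not u0 <= util <= u1:
        raise ValueError("target utilization must lie between the watermarks")
    return v0 + (v1 - v0) * (util - u0) / (u1 - u0)


def volume_iops_cap(iops_per_gb: float, volume_gb: float) -> int:
    """IOPS ceiling for one block device of the given size."""
    return round(iops_per_gb * volume_gb)


# Hypothetical measured watermarks: (cluster utilization, IOPS/GB).
low = (0.35, 60.0)
high = (0.70, 25.0)

# Hypothetical target utilization halfway between the two watermarks.
target = interpolate_watermark(0.525, low, high)
cap = volume_iops_cap(target, volume_gb=100)
print(f"target IOPS/GB: {target:.1f}, cap for a 100 GB volume: {cap} IOPS")
```

Capping each volume at its size-proportional share mirrors the IOPS/GB model the deck compares against, so no single client can consume more than the target ratio implies.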

- 25. COMMON TOOLS. Towards comparable results. 1. CBT (Ceph Benchmarking Tool)

- 26. INVITATION TO BE PART OF THE ANSWER. Ceph performance leaderboard (ceph-brag) coming to ceph.com. https://github.com/ceph/ceph-brag (email [email protected] for access)

- 28. RAID CONTROLLER WRITE-BACK (HDD OSDS). 4K Random Write IOPS v. Different Controllers and software configs.