chapter 4-Functional Dependency and Normilization.pdf

- 1. Fundamentals of Database system Chapter Four: Functional Dependency and Normalization Prepared by: Misganaw Abeje University of Gondar College Of Informatics Department of computer science [email protected]

- 2. outline ⚫ Goals of Functional Dependency and Normalization ⚫ Functional Dependency ⚫ Normalization – 1st NF – 2nd NF – 3rd NF – BCNF BY: MA

- 3. Goals 1. To make Each tuple in a relation should represent one entity or relationship instance – Only foreign keys should be used to refer to other entities – Entity and relationship attributes should be kept apart as much as possible – Design a schema that can be explained easily relation by relation. The semantics of attributes should be easy to interpret. 2. Prevent cause problems due to Mixing attributes of multiple entities may – Information is stored redundantly wasting storage – Problems with update anomalies: – Insertion anomalies – Deletion anomalies: When a project is deleted, it will result in deleting all the BY: MA

- 4. 3. It can prevent Null Values in Tuples ⚫ Relations should be designed such that their tuples will have as few NULL values as possible – Attributes that are NULL frequently could be placed in separate relations (with the primary key) – Reasons for nulls: I. attribute not applicable or invalid II. attribute value unknown (may exist) III. value known to exist, but unavailable ⚫ Generally Functional dependency and normalization is used to help to develop a good database model BY: MA

- 5. Functional dependency (FD) ⚫ Definition: A functional dependency (FD) on a relation schema R is a constraint X → Y, where X and Y are subsets of attributes of R. ⚫ Definition: an FD is a relationship between an attribute "Y" and a determinant (1 or more other attributes) "X" such that for a given value of a determinant the value of the attribute is uniquely defined. 1. X is a determinant 2. X determines Y 3. Y is functionally dependent on X 4. X → Y BY: MA

- 6. ⚫ The determination of functional dependencies is an important part of designing databases in the relational model, and In Database normalization and de-normalization. ⚫ The most important properties of functional dependencies are Armstrong's axioms, which are used in database normalization: Subset Property (Axiom of Reflexivity): If Y is a subset of X, then X → Y Augmentation (Axiom of Augmentation): ⚫ If X → Y, then XZ → YZ ⚫ Transitivity (Axiom of Transitivity): • If X → Y and Y → Z, then X → Z BY: MA

- 7. ⚫ Functional Dependencies: We say an attribute, B, has a functional dependency on another attribute, A, if for any two records, which have the same value for A, then the values for B in these two records must be the same. We illustrate this as: A → B ⚫ Example: Suppose we keep track of employee email addresses, and we only track one email address for each employee. Suppose each employee is identified by their unique employee number. We say there is a functional dependency of email address on employee number: employee number → email address BY: MA

- 8. Functional Dependencies 8 EmpNum EmpEmail EmpFname EmpLname 123 [email protected] John Doe 456 [email protected] Peter Smith 555 [email protected] Alan Lee 633 [email protected] Peter Doe 787 [email protected] Alan Lee If EmpNum is the PK then the FDs: EmpNum → EmpEmail EmpNum → EmpFname EmpNum → EmpLname must exist. BY: MA

- 9. Functional Dependencies 9 EmpNum → EmpEmail EmpNum → EmpFname EmpNum → EmpLname EmpNum EmpEmail EmpFname EmpLname EmpNum EmpEmail EmpFname EmpLname 3 different ways you might see FDs depicted BY: MA

- 10. Determinant ⚫ Functional Dependency EmpNum → EmpEmail – Attribute on the LHS is known as the determinant • EmpNum is a determinant of EmpEmail ⚫ Transitive dependency Consider attributes A, B, and C, and where A → B and B → C. ⚫ Functional dependencies are transitive, which means that we also have the functional dependency A → C ⚫ We say that C is transitively dependent on A through B. 10 BY: MA

- 11. Transitive dependency 11 EmpNum EmpEmail DeptNum DeptNname EmpNum EmpEmail DeptNum DeptNname DeptName is transitively dependent on EmpNum via DeptNum EmpNum → DeptName EmpNum → DeptNum DeptNum → DeptName BY: MA

- 12. Partial dependency 12 A partial dependency exists when an attribute B is functionally dependent on an attribute A, and A is a component of a multipart candidate key. InvNum LineNum Qty InvDate Candidate keys: {InvNum, LineNum} InvDate is partially dependent on {InvNum, LineNum} as InvNum is a determinant of InvDate and InvNum is part of a candidate key BY: MA

- 13. Normalization ⚫ A large database defined as a single relation may result in data duplication. This repetition of data may result in: – Making relations very large. – It isn't easy to maintain and update data as it would involve searching many records in relation. – Wastage and poor utilization of disk space and resources. – The likelihood of errors and inconsistencies increases. ⚫ So to handle these problems, we should analyze and decompose the relations with redundant data into smaller, simpler, and well-structured relations that are satisfy desirable properties. Normalization is a process of decomposing the relations into relations with fewer attributes. BY: MA

- 14. What is Normalization? ⚫ Normalization is the process of organizing the data in the database. ⚫ Normalization is used to minimize the redundancy from a relation or set of relations. It is also used to eliminate undesirable characteristics like Insertion, Update, and Deletion Anomalies. ⚫ Normalization divides the larger table into smaller and links them using relationships. ⚫ The normal form is used to reduce redundancy from the database table. ⚫ Why do we need Normalization? The main reason for normalizing the relations is removing these anomalies. Failure to eliminate anomalies leads to data redundancy and can cause data integrity and other problems as the database grows. Normalization consists of a series of guidelines that helps to guide you in creating a good BY: MA

- 15. ⚫ Advantages of Normalization – Normalization helps to minimize data redundancy. – Greater overall database organization. – Data consistency within the database. – Much more flexible database design. – Enforces the concept of relational integrity. ⚫ Disadvantages of Normalization – You cannot start building the database before knowing what the user needs. – The performance degrades when normalizing the relations to higher normal forms, i.e., 4NF, 5NF. – It is very time-consuming and difficult to normalize relations of a higher degree. BY: MA

- 16. Types of Normalization ⚫ We discuss different types of normal forms: first, second, third, and Boyce-Codd normal forms ⚫ 1NF, 2NF, 3NF, and BCNF, 4NF,5NF ⚫ Normalization is a process that “improves” a database design by generating relations that are of higher normal forms. ⚫ The objective of normalization: “to create relations where every dependency is on the key, the whole key, and nothing but the key”. ⚫ Definition. The normal form of a relation refers to the highest normal form condition that it meets, and hence indicates the degree to which it has been normalized. 16 BY: MA

- 17. Definitions of Keys and Attributes Participating in Keys ⚫ A superkey of a relation schema R = {A1, A2, ... , An} is a set of attributes S ⊆ R with the property that no two tuples t1 and t2 in any legal relation state r of R will have t 1[S] = t2[S]. ⚫ If a relation schema has more than one key, each is called a candidate key. One of the candidate keys is arbitrarily designated to be the primary key, and the others are called secondary keys. ⚫ An attribute of relation schema R is called a prime attribute of R if it is a member of some candidate key of R. An attribute is called nonprime if it is not a prime attribute—that is, if it is not a member of any candidate key. BY: MA

- 18. Normalization 18 There is a sequence to normal forms: 1NF is considered the weakest, 2NF is stronger than 1NF, 3NF is stronger than 2NF, and BCNF is considered the strongest Also, any relation that is in BCNF, is in 3NF; any relation in 3NF is in 2NF; and any relation in 2NF is in 1NF. BY: MA

- 19. Normalization 19 BCNF 3NF 2NF 1NF a relation in BCNF, is also in 3NF a relation in 3NF is also in 2NF a relation in 2NF is also in 1NF BY: MA

- 20. Normalization 20 • We consider a relation in BCNF to be fully normalized. • The benefit of higher normal forms is that update semantics for the affected data are simplified. • This means that applications required to maintain the database are simpler. • A design that has a lower normal form than another design has more redundancy. Uncontrolled redundancy can lead to data integrity problems. BY: MA

- 21. First Normal Form ⚫ We say a relation is in 1NF if all values stored in the relation are single-valued and atomic. ⚫ It states that an attribute of a table cannot hold multiple values. It must hold only single-valued attribute. ⚫ First normal form disallows the multi-valued attribute, composite attribute, and their combinations. 1NF places restrictions on the structure of relations Values must be simple. 21 BY: MA

- 22. First Normal Form 22 The following in not in 1NF EmpNum EmpPhone EmpDegrees 123 233-9876 333 233-1231 BA, BSc, PhD 679 233-1231 BSc, MSc EmpDegrees is a multi-valued field: employee 679 has two degrees: BSc and MSc employee 333 has three degrees: BA, BSc, PhD BY: MA

- 23. First Normal Form 23 To obtain 1NF relations we must, without loss of information, replace the above with two relations - see next slide EmpNum EmpPhone EmpDegrees 123 233-9876 333 233-1231 BA, BSc, PhD 679 233-1231 BSc, MSc BY: MA

- 24. First Normal Form 24 EmpNum EmpDegree 333 BA 333 BSc 333 PhD 679 BSc MSc 679 EmpNum EmpPhone 123 233-9876 333 233-1231 679 233-1231 An outer join between Employee and EmployeeDegree will produce the information we saw before Employee EmployeeDegree BY: MA

- 25. Exercise: which NF is satisfy? Normalize into next higher NF? BY: MA EMP_ID EMP_NAME EMP_PHONE EMP_STATE 14 John 7272826385, 9064738238 UP 20 Harry 8574783832 Bihar 12 Sam 7390372389, 8589830302 Punjab

- 26. Second Normal Form 26 Second Normal Form • Definition. A relation schema R is in 2NF if every nonprime attribute in R is fully functionally dependent on the primary key of R. A relation is in 2NF if it is in 1NF, and every non- key attribute is fully dependent on each candidate key. (That is, we don’t have any partial functional dependency.) • 2NF (and 3NF) both involve the concepts of key and non-key attributes. • A key attribute is any attribute that is part of a key; any attribute that is not a key attribute, is a non-key attribute. • A relation in 2NF will not have any partial dependencies BY: MA

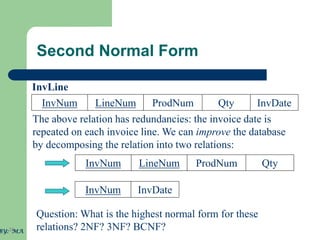

- 27. Second Normal Form ⚫ Consider this InvLine table (in 1NF): ⚫ InvNum, LineNum →ProdNum, Qty ⚫ InvNum→ InvDate ⚫ In this relation there are two candidate keys: InvNum and LineNum. ⚫ ProdNum, Qty and Invdate are the only non-key attribute, and from those non key attributes ProdNum, Qty it is fully dependent on each candidate key (InvNum, LineNum) whereas Invdate is partial functional dependency on key (InvNum). ⚫ Therefore the relation is not 2NF since there is a partial dependency of InvDate on InvNum, it is 1NF. InvNum LineNum ProdNum Qty InvDate BY: MA

- 28. Second Normal Form 28 LineNum ProdNum Qty InvNum InvDate InvLine The above relation has redundancies: the invoice date is repeated on each invoice line. We can improve the database by decomposing the relation into two relations: LineNum ProdNum Qty InvNum InvDate InvNum Question: What is the highest normal form for these relations? 2NF? 3NF? BCNF? BY: MA

- 29. Is the following relation in 2NF? • It is 2NFbecause of all non key attributes are full depend on the given candidate key, but not in 3NF, nor in BCNF

- 30. 30 2NF, but not in 3NF, nor in BCNF: since dnumber is not a candidate key and we have: dnumber → dname. EmployeeDept ename ssn bdate address dnumber dname BY: MA

- 31. Third Normal Form 31 Third Normal Form • A relation is in 3NF if the relation is in 2NF and all determinants of non-key attributes are candidate keys That is, for any functional dependency: X → Y, where Y is a non-key attribute (or a set of non-key attributes), X is a candidate key. • This definition of 3NF differs from BCNF only in the specification of non-key attributes - 3NF is weaker than BCNF. (BCNF requires all determinants to be candidate keys.) • A relation in 3NF will not have any transitive dependencies of non-key attribute on a candidate key through another non- key attribute. BY: MA

- 32. Third Normal Form 32 EmpNum EmpName DeptNum DeptName EmpName, DeptNum, and DeptName are non-key attributes. DeptNum determines DeptName, a non-key attribute, and DeptNum is not a candidate key. Consider this Employee relation Is the relation in BCNF? … no Is the relation in 3NF? … no Is the relation in 2NF? … yes Candidate keys are? … BY: MA

- 33. Third Normal Form 33 EmpNum EmpName DeptNum DeptName We correct the situation by decomposing the original relation into two 3NF relations. Note the decomposition is lossless. EmpNum EmpName DeptNum DeptName DeptNum Verify these two relations are in 3NF. BY: MA

- 34. Boyce-Codd Normal Form(BCNF) 34 • BCNF is defined very simply: For a table to satisfy the Boyce- Codd Normal Form, it should satisfy the following two conditions: – It should be in the Third Normal Form. – And, for any dependency A → B, A should be a super key or candidate key. • The second point sounds a bit tricky, right? In simple words, it means, that for a dependency A → B, if B is a prime attribute, A cannot be a non-prime attribute. • An attribute that is not part of any candidate key is known as non-prime attribute. An attribute that is a part of one of the candidate keys is known as prime attribute. BY: MA

- 35. 35 student_id subject professor 101 Java P.Java 101 C++ P.Cpp 102 Java P.Java2 103 C# P.Chash 104 Java P.Java Below we have a college enrolment table with columns student_id, subject and professor. As you can see, we have also added some sample data to the table. In the table abov • One student can enroll for multiple subjects. For example, student with student_id 101, has opted for subjects - Java & C++ • For each subject, a professor is assigned to the student. • And, there can be multiple professors teaching one subject like we have for Java. BY: MA

- 36. 36 • Well, in the table above student_id, subject together form the primary key, because using student_id and subject, we can find all the columns of the table. • One more important point to note here is, one professor teaches only one subject, but one subject may have two different professors. • Hence, there is a dependency between subject and professor here, where subject depends on the professor name. • This table satisfies the 1st Normal form because all the values are atomic, column names are unique and all the values stored in a particular column are of same domain. • This table also satisfies the 2nd Normal Form as their is no Partial Dependency. • And, there is no Transitive Dependency, hence the table also satisfies ,….BCNF BY: MA

- 37. 37 Why this table is not in BCNF? • In the table above, student_id, subject form primary key, which means subject column is a prime attribute. • But, there is one more dependency, professor → subject. • And while subject is a prime attribute, professor is a non-prime attribute, which is not allowed by BCNF. • How to satisfy BCNF? • To make this relation(table) satisfy BCNF, we will decompose this table into two tables, student table and professor table. • Below we have the structure for both the tables. ,….BCNF BY: MA

- 38. 38 student_id p_id 101 1 101 2 and so on... p_id professor subject 1 P.Java Java 2 P.Cpp C++ Student Table Professor Table ,….BCNF BY: MA

- 39. 39 course_no instr_no student_no instr_no student_no course_no instr_no {student_no, instr_no} → course_no {student_no, course_no} → instr_no instr_no → course_no ,….BCNF BY: MA

- 40. Main Difference Between BCNF and 3NF ⚫ Most relations in 3NF are also in BCNF, the only time this may not be true is when there is more than one candidate key for a relation and at least one of composite in 3NF. BY: MA

- 41. Summary ⚫ We say a relation is in 1NF if all values stored in the relation are single-valued and atomic. ⚫ A relation is in 2NF if it is in 1NF, and every non-key attribute is fully dependent on each candidate key. (That is, we don’t have any partial functional dependency.) ⚫ A relation is in 3NF if the relation is in 2NF and all determinants of non-key attributes are candidate keys BY: MA

- 42. Exercise 1. Consider the relation R, which has attributes that hold schedules of courses and sections at a university; R = {Course_no, Sec_no, Offering_dept, Credit_hours, Course_level, Instructor_ssn, Semester, Year, Days_hours, Room_no, No_of_students}. Suppose that the following functional dependencies hold on R: {Course_no} → {Offering_dept, Credit_hours, Course_level} {Course_no, Sec_no, Semester, Year} → {Days_hours, Room_no, No_of_students, Instructor_ssn} {Room_no, Days_hours, Semester, Year} → {Instructor_ssn, Course_no, Sec_no} ⚫ Try to determine which sets of attributes form keys of R. How would you normalize this relation? 42 BY: MA

- 43. 2. Suppose that we have the following requirements for a university database that is used to keep track of students’ transcripts: a. The university keeps track of each student’s name (Sname), student number (Snum), Social Security number (Ssn), current address (Sc_addr) and phone (Sc_phone), permanent address (Sp_addr) and phone (Sp_phone),birth date (Bdate), sex (Sex), class (Class) (‘freshman’, ‘sophomore’, ... ,‘graduate’), major department (Major_code), minor department(Minor_code) (if any), and degree program (Prog) (‘b.a.’, ‘b.s.’, ... , ‘ph.d.’).Both Ssn and student number have unique values for each student. b. Each department is described by a name (Dname), department code (Dcode), office number (Doffice), office phone (Dphone), and college (Dcollege). Both name and code have unique values for each department. c. Each course has a course name (Cname), description (Cdesc), course number (Cnum), number of semester hours (Credit), level (Level), and offering department (Cdept). The course number is unique for each course. 43 BY: MA

- 44. d. Each section has an instructor (Iname), semester (Semester), year (Year), course (Sec_course), and section number (Sec_num). The section numberdistinguishes different sections of the same course that are taught during the same semester/year; its values are 1, 2, 3, ..., up to the total number of sections taught during each semester. e. A grade record refers to a student (Ssn), a particular section, and a grade (Grade). Design a relational database schema for this database application. First show all the functional dependencies that should hold among the attributes. Then design relation schemas for the database that are each in 3NF or BCNF. Specify the key attributes of each relation. Note any unspecified requirements, and make appropriate assumptions to render the specification complete BY: MA

- 45. ⚫ Thank you BY: MA

![Definitions of Keys and Attributes

Participating in Keys

⚫ A superkey of a relation schema R = {A1, A2, ... , An} is a set of

attributes S ⊆ R with the property that no two tuples t1 and t2 in any

legal relation state r of R will have t 1[S] = t2[S].

⚫ If a relation schema has more than one key, each is called a

candidate key. One of the candidate keys is arbitrarily designated

to be the primary key, and the others are called secondary keys.

⚫ An attribute of relation schema R is called a prime attribute of R if

it is a member of some candidate key of R. An attribute is called

nonprime if it is not a prime attribute—that is, if it is not a member

of any candidate key.

BY: MA](https://image.slidesharecdn.com/chapter4-functionaldependencyandnormilization-240323091040-efb846dc/85/chapter-4-Functional-Dependency-and-Normilization-pdf-17-320.jpg)